Stuff The Internet Says On Scalability For December 2nd, 2016

Hey, it's HighScalability time:

A phrase you've probably heard a lot this week: "AWS announces..."

If you like this sort of Stuff then please support me on Patreon.

- 18 minutes: latency to Mars; 100TB: biggest DynamoDB table; 55M: Kaiser visits that were virtual; $2 billion: yearly Uber losses; 91%: Apple's share of smartphone profits; 825: AI patents held by IBM; $8: hourly cost of spot welding in the auto industry; 70%: share of Walmart website traffic that was mobile; $3 billion: online Black Friday sales; 80%: IT jobs replaceable by automation; $7,500: cost of the one-terabit-per-second DDoS attack on Dyn.

- Quotable Quotes:

- @BotmetricHQ: #AWS is deploying tens of thousands of servers every day, enough to power #Amazon in 2005 when it was a $8.5B Enterprise. #reInvent

- bcantrill: From my perspective, if this rumor is true, it's a relief. Solaris died the moment that they made the source proprietary -- a decision so incredibly stupid that it still makes my head hurt six years later.

- Dropbox: it can take up to 180 milliseconds for data traveling by undersea cables at nearly the speed of light to cross the Pacific Ocean. Data traveling across the Atlantic can take up to 90 milliseconds.

- @James_R_Holmes: The AWS development cycle: 1) Have fun writing code for a few months 2) Delete and use new AWS service that replaces it

- @swardley: * asked "Can Amazon be beaten?" Me : of course * : how? Me : ask your CEO * : they are asking Me : have you thought about working at Amazon?

- @etherealmind: Whatever network vendors did to James Hamilton at AWS, he is NEVER going to forgive them.

- Stratechery: the flexibility and modularity of AWS is the chief reason why it crushed Google’s initial cloud offering, Google App Engine, which launched back in 2008. Using App Engine entailed accepting a lot of decisions that Google made on your behalf; AWS let you build exactly what you needed.

- @jbeda: AWS Lambda@Edge thing is huge. It is the evolution of the CDN. We'll see this until there are 100s of DCs available to users.

- erikpukinskis: Everyone in this subthread is missing the point of open source industrial equipment. The point is not to get a cheap tractor, or even a good one. The point is not to have a tractor you can service. The point is to have a shared platform.

- John Furrier: Mark my words, if Amazon does not start thinking about the open-source equation, they could see a revolt that no one’s ever seen before in the tech industry. If you’re using open source to build a company to take territory from others, there will be a revolt.

- @toddtauber: As we've become more sophisticated at quantifying things, we've become less willing to take risks. via @asymco

- Resilience Thinking: Being efficient, in a narrow sense, leads to elimination of redundancies -- keeping only those things that are directly and immediately beneficial. We will show later that this kind of efficiency leads to drastic losses in resilience.

- Connor Gibson: By placing advertisements around the outside of your game (in the header, footer and sidebars) as well as the possibility [of] video overlays it is entirely possible to earn up to six figures through this platform.

- Google Analytics: And maybe, if nothing else, I guess it suggests that despite the soup du jour — huge seed/A rounds, massive valuations, binary outcomes— you can sometimes do alright by just taking less money and more time.

- badger_bodger: I'm starting to get Frontend Fatigue Fatigue.

- Steve Yegge: But now, thanks to Moore's Law, even your wearable Android or iOS watch has gigs of storage and a phat CPU, so all the decisions they made turned out in retrospect to be overly conservative. And as a result, the Android APIs and frameworks are far, far, FAR from what you would expect if you've come from literally any other UI framework on the planet. They feel alien.

- David Rosenthal: Again we see that expensive operations with cheap requests create a vulnerability that requires mitigation. In this case rate limiting the ICMP type 3 code 3 packets that get checked is perhaps the best that can be done.

- @IAmOnDemand: Private on public cloud means the you can burst public/private workloads into the public and shut down yr premise or... #reinvent

- @allingeek: It isn’t “serverless" if you own the server/device. It is just a functional programing framework. #reinvent

- brilliantcode: If you told me to use Azure two years ago I would've laughed you out of the room. But here I am in 2016, using Azure, using ASP.net + IIS on Visual Studio. that's some powerful shit and currently AWS has cost leadership and perceived switching cost as their edge.

- seregine: Having worked at both places for ~4 years each, I would say Amazon is much more of a product company, and a platform is really a collection of compelling products. Amazon really puts customers first... Google really puts ideas (or technology) first.

- api: Amazon seems to be trying to build a 100% proprietary global mainframe that runs everywhere.

- Athas: No, it [Erlang] does not use SIMD to any great extent. Erlang uses message passing, not data parallelism. Erlang is for concurrency, not parallelism, so it would benefit little from these kinds of massively parallel hardware.

- @chuhnk: @adrianco @cloud_opinion funnily those of us who've built platforms at various startups now think a cloud provider is the best place to be.

- @jbeda: So the guy now in charge of building OSS communities at @awscloud says you should just join Amazon? Communities are built on diversity.

- @JoeEmison: There's also an aspect of some of these AWS services where they only exist because of problems with other AWS services.

- logmeout: Until bandwidth pricing is fixed rather than nickel and dimeing us to death; a lot of us will choose fixed pricing alternatives to AWS, GCP and Rackspace.

- arcticfox: 100%. I can't stand it [AWS]. It's unlimited liability for anyone that uses their service with no way to limit it. If you were able to set hard caps, you could have set yours at like $5 or even $0 (free tier) and never run into that.

- @edw519: I hate batch processing so much that I won't even use the dishwasher. I just wash, dry, and put away real time.

- @CodeBeard: it could be argued that games is the last real software industry. Libraries have reduced most business-useful code to glue.

- Gall's Law: A complex system that works is invariably found to have evolved from a simple system that worked. A complex system designed from scratch never works and cannot be patched up to make it work. You have to start over with a working simple system.

- @mathewlodge: AWS now also designing its own ASICs for networking #Reinvent

- @giano: From instances to services, AWS better than anybody else understood that use case specific wins over general purpose every day. #reinvent

- @ben11kehoe: AWS hitting breadth of capability hard. Good counterpoint to recent "Google is 50% cheaper" news #reinvent

- Michael E. Smith: But there are also positive effects of energized crowding. Urban economists and economic geographers have known for a long time that when businesses and industries concentrate themselves in cities, it leads to economies of scale and thus major gains in productivity. These effects are called agglomeration effects.

- Andrew Huang: The inevitable slowdown of Moore’s Law may spell trouble for today’s technology giants, but it also creates an opportunity for the fledgling open-hardware movement to grow into something that potentially could be very big.

- Stratechery: This is Google’s bet when it comes to the enterprise cloud: open-sourcing Kubernetes was Google’s attempt to effectively build a browser on top of cloud infrastructure and thus decrease switching costs; the company’s equivalent of Google Search will be machine learning.
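
David Rosenthal's suggestion in the quotes above, rate limiting the ICMP type 3 code 3 packets that get checked, is commonly implemented with a token bucket. Here is a minimal Python sketch, assuming one bucket per source and an injectable clock so the behavior can be tested deterministically. `TokenBucket` and its parameters are illustrative, not the mitigation any particular network stack ships.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow up to `rate` events per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity          # start full so an initial burst is allowed
        self.last = clock()

    def allow(self) -> bool:
        """Return True if one event may proceed now, consuming one token."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False                    # over budget: skip the expensive check


# Usage with a fake clock: 10 checks/s sustained, bursts of up to 5.
fake_now = [0.0]
bucket = TokenBucket(rate=10.0, capacity=5.0, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(8)]   # first 5 allowed, next 3 dropped
fake_now[0] = 0.5                            # half a second later the bucket has refilled
later = [bucket.allow() for _ in range(6)]
print(burst.count(True), later.count(True))
```

The point is that the cheap request can no longer force unbounded expensive work: at most `rate` checks per second get through, whatever the attacker sends.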

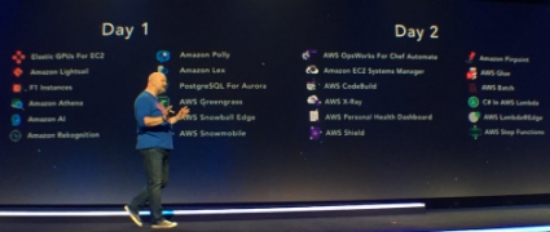

- Just what has Amazon been up to?

- Apparently AWS was feeling some pressure from low-cost VPS services, so they started their own: Lightsail. Here's a good price comparison. Lightsail, DigitalOcean, Vultr, and Linode are all at the same price and capability points.

- Amazon's solution to the data gravity problem. AWS Snowmobile. Moving a datacenter's worth of data in a semi. There's even an optional armed escort service. Maybe this is what the Internet Archive should use when they move.
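
Why a truck? A back-of-envelope sketch, assuming the 100 PB capacity AWS quoted for a single Snowmobile and a hypothetical 10 Gbps link running flat out with zero protocol overhead (a real transfer would be slower):

```python
def transfer_days(data_bytes: float, link_bits_per_sec: float) -> float:
    """Days needed to push data_bytes over a link at full line rate, no overhead."""
    seconds = data_bytes * 8 / link_bits_per_sec
    return seconds / 86_400


PETABYTE = 10**15                              # decimal petabyte
days = transfer_days(100 * PETABYTE, 10 * 10**9)
print(f"{days:.0f} days (~{days / 365:.1f} years)")
```

That works out to roughly two and a half years over the wire, which is the data gravity problem in a single number.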

- Time to dust off my Verilog skills: AWS now has some mondo FPGAs (EC2 F1 instances). fpgaminer: These FPGAs are absolutely _massive_ (in terms of available resources). AWS isn't messing around. duskwuff: The VU9P FPGAs Amazon is using cost between $30,000 and $55,000 each, depending on the speed grade. Yes, this means a fully equipped F1 instance costs nearly half a million dollars. Don't count on the instances being cheap to run. jeffbarr: You can do all of the design and simulation on any EC2 instance with enough memory and cores.

- Network-attached GPUs are a thing: Amazon EC2 Elastic GPUs.

- Amazon is keeping up with Google and Microsoft with an image detection service. Google Cloud Vision and Microsoft's service both cost $1.50 per 1,000 images; Amazon Rekognition is $1.00 per 1,000 images.

- AWS Greengrass. Local compute, messaging & data caching for connected devices. AWS will get pressure from more flexible platforms that can work on-prem. Looks like AWS is competing on that front now too.

- AWS Step Functions. Build Distributed Applications Using Visual Workflows. talawahdotnet: Nice. So this is basically a replacement for SWF (Simple Workflow Service) that allows you to build the decision steps using a GUI/JSON and allows you to execute tasks using either Lambda or the traditional activity pollers. Building a multi-step workflow that handles branching, retries and failure just got a lot easier.
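
Step Functions workflows are defined in the Amazon States Language, a JSON document. Below is a minimal sketch of the branching, retry, and failure handling talawahdotnet describes, built as a Python dict for convenience. The state names, the `$.status` field, and the Lambda ARNs are hypothetical placeholders.

```python
import json

# Hypothetical Lambda ARNs; substitute your own functions.
PROCESS_ARN = "arn:aws:lambda:us-east-1:123456789012:function:process-order"
NOTIFY_ARN = "arn:aws:lambda:us-east-1:123456789012:function:notify"

definition = {
    "Comment": "Process an order, branch on the result, retry transient failures",
    "StartAt": "ProcessOrder",
    "States": {
        "ProcessOrder": {
            "Type": "Task",
            "Resource": PROCESS_ARN,
            # Retry a failed task up to 3 times, waiting 2s, 4s, then 8s.
            "Retry": [{
                "ErrorEquals": ["States.TaskFailed"],
                "IntervalSeconds": 2,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }],
            # Anything still failing after the retries goes to the failure state.
            "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "OrderFailed"}],
            "Next": "CheckStatus",
        },
        "CheckStatus": {
            "Type": "Choice",
            "Choices": [
                {"Variable": "$.status", "StringEquals": "approved", "Next": "Notify"},
            ],
            "Default": "OrderFailed",
        },
        "Notify": {"Type": "Task", "Resource": NOTIFY_ARN, "End": True},
        "OrderFailed": {"Type": "Fail", "Error": "OrderError",
                        "Cause": "Order was not approved or processing failed"},
    },
}

print(json.dumps(definition, indent=2))
```

The retry and catch policy lives in the workflow definition rather than in each Lambda function, which is much of what "a lot easier" means here.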

- AWS Shield. Managed DDoS protection. @BrandonRich: Wow! If your autoscaling spikes due to DDoS, AWS Shield will absorb the extra cost!

- AWS CodeBuild. Build and test code with continuous scaling. Timmy_C: Using CodePipelines and CodeCommit you can create a workflow where a git commit to a CodeCommit repo can get picked up by pipelines and sent to the build service (i.e. CodeBuild or Jenkins). Then CodeBuild will push the resulting artifact to S3. CodeDeploy (and Elastic Beanstalk, CloudFormation and OpsWorks) can be configured to deploy the built artifacts to your application fleet. It's the last piece in AWS's solution for continuous deployment.

- AWS Batch. Fully Managed Batch Processing at Any Scale. hackcrafter: Lambda is for shared-compute. You don't need a dedicated server to run a "function" that takes < 60 seconds and can be called in a stateless manner as an API endpoint. This is dedicated host compute-heavy batch processing. It's a pain to do this at scale!...Imagine you have a set of input S3 files, each needs multiple-hours of compute to produce output S3 files. Doesn't seem that hard, until EC2 instances fail, programs crash, etc. etc.

- Amazon Polly. Text to Speech. qsun: I realized this tool might not be cheap, since it may take the voice actor/actress 2 hours per day to produce my content ... Now, with Polly, things changed - it produces reasonable voice, and 2-hour content would only cost ~$0.3.

- I've thought about this too, but I'm not sure how well it would work. Lambda components composed together could perform horribly and would have high latency variability. Logging will be a pain. And you basically have a distributed monolith that will be hard to upgrade. Integrating as a library seems more workable. @swardley: Now let us suppose that AWS launch some form of Lambda market place i.e. you can create a lambda function, add it to the API gateway and sell it through the market place. PS I think you'll find they've just done that - Amazon API gateway integrates with API marketplace and Lambda integrates with API gateway.

- Millions of $$$ saved at Pinterest. Auto scaling Pinterest. They tried autoscaling in 2013 and it didn't work, so they had to keep a fixed number of instances to serve peak traffic. This resulted in lots of wasted resources. They tried again with good results. There's an excellent description of the ever-important health check and triggering process. The result: Auto scaling saves a significant number of instance hours every day, which leads to several million dollars in savings every year.
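
The post describes Pinterest's own health-check and triggering logic; the sketch below is not that. It is a minimal version of the proportional target-utilization rule that, for example, the Kubernetes Horizontal Pod Autoscaler documents (desired = ceil(current * currentMetric / targetMetric)), with hypothetical fleet sizes and CPU figures. Clamping to a min and max keeps a bad metric from scaling the fleet to zero or without bound.

```python
import math


def desired_instances(current: int, avg_cpu: float, target_cpu: float,
                      min_size: int, max_size: int) -> int:
    """Size the fleet so average CPU would land near target_cpu.

    Assumes load spreads evenly, so total work (current * avg_cpu) stays
    roughly constant as instances are added or removed.
    """
    needed = math.ceil(current * avg_cpu / target_cpu)
    return max(min_size, min(max_size, needed))


# Peak: 100 hosts at 80% CPU with a 50% target -> scale out.
print(desired_instances(100, 0.80, 0.50, min_size=20, max_size=300))
# Night: 100 hosts at 5% CPU -> scale in, but never below min_size.
print(desired_instances(100, 0.05, 0.50, min_size=20, max_size=300))
```

The gap between those two numbers, held for every off-peak hour, is where fixed peak-sized fleets waste money. Production autoscalers also add cooldowns or tolerance bands so the fleet does not flap on small fluctuations.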

- What can an FPGA do that a GPU can't do? cheez: FPGA = Field Programmable Gate Array. Basically, you can create a custom "CPU" for your particular workflow. Imagine the GPU didn't exist and you couldn't multiply vectors of floats in parallel on your CPU. You could use an FPGA to write something to multiply a vector of floats in parallel without developing a GPU. It would probably not be as fast as a GPU or the equivalent CPU, but it would be faster than doing it serially.

- So how do you actually use this machine learning stuff? Evernote has a great example of using the Cloud Natural Language API to extract data by parsing images of airline tickets. Not simple, but it seems doable.

- Is Google Cloud really 50% cheaper than AWS? ranman throws some shade and backs it up. TL;DR: I urge all readers to take this post with a grain/boulder of salt: The author is anonymous, prone to hyperbole and error, and makes multiple unverified claims.

- Videos from DOES16 (DevOps Enterprise Summit) San Francisco are now available.

- Dropbox is continuing the buildout of their own infrastructure. 90% of user data is stored on their custom-built architecture and they are building their own world-wide points-of-presence network. Infrastructure Update: Pushing the edges of our global performance.

- How your startup can leverage production-grade infrastructure for less than $200/month: host your back-end API on Heroku; for the database use Compose, which has node failover, daily backups, SSL encryption, and a basic monitoring panel; Netlify for front-end hosting; S3 for file hosting; use Let’s Encrypt to generate certificates; Slack to aggregate all the error reports in a single communication hub; Papertrail for log management; Sentry to report errors and exceptions; Uptimerobot as a health check; Librato for metrics.

- This is cool. An Interactive Tutorial on Numerical Optimization. It runs in the browser so you can graphically see and play with how algorithms work. Curiously, Gradient Descent looks a lot like how my dog searches for a stick.
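
The core loop the tutorial visualizes is short. A minimal gradient descent sketch on a hypothetical, deliberately stretched quadratic, f(x, y) = (x - 3)^2 + 10(y + 1)^2, whose minimum is at (3, -1); the learning rate and step count are arbitrary choices.

```python
from typing import Callable, List, Sequence


def gradient_descent(grad: Callable[[Sequence[float]], List[float]],
                     start: Sequence[float], learning_rate: float,
                     steps: int) -> List[float]:
    """Repeatedly step from `start` against the gradient."""
    x = list(start)
    for _ in range(steps):
        g = grad(x)
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x


def grad_f(p: Sequence[float]) -> List[float]:
    """Gradient of f(x, y) = (x - 3)^2 + 10 * (y + 1)^2."""
    x, y = p
    return [2 * (x - 3), 20 * (y + 1)]


result = gradient_descent(grad_f, [0.0, 0.0], learning_rate=0.04, steps=200)
print(result)
```

The stretched y direction caps the usable step size: along y each step multiplies the remaining error by (1 - 20 * learning_rate), so any learning rate above 0.1 makes y oscillate and diverge, while the shallow x direction converges slowly. That is the kind of behavior the interactive tutorial lets you watch.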

- How We’re Implementing Service-Oriented Architecture at Buffer After 6 years of Technical Buildup. Buffer went from a common web/api/queue/workers monolith architecture to a service-oriented architecture (SOA). The main driver for going SOA was the fragility of all the shared code between teams. They went with Kubernetes as their platform. Then they began slicing up the monolith. The result: new services could deploy in under 60 seconds with a single Slack slash command; the project was a huge success and the team felt incredibly empowered by the system; today we’ve now released five production-grade services.

- It looks like cord cutters have been a little too successful. Governments are now considering treating services like Netflix as utilities that can be taxed to make up for lost revenue. California Today: Fretting Over the ‘Netflix Tax’: At 9.4 percent, the so-called Netflix tax would treat streaming services as a traditional utility, the city said. If you use multiple services — for example, Hulu, Amazon Video and HBO — it would be added to each bill.

- Don’t Build Private Clouds: Here's why: You don’t need to own data centers unless you’re special; Private cloud makes you procrastinate doing the right things; Private cloud cost models are misleading; The state of infrastructure influences your organizational culture. A modern enterprise running on programmable cloud contributes to autonomous teams, rapid learning, and faster iterations of ideas.

- Interesting idea: the decentralized Web needs a decentralized content registry. A Decentralized Content Registry for the Decentralized Web: Coala IP + IPDB + IPFS for creating a shared global commons to connect creators with audience. Licensing: Coala IP is a blockchain-friendly, community driven protocol for attribution and licensing of IP; e: IPDB is a global decentralized database to store attribution & licensing info; File system: IPFS & FileCoin together are a global decentralized file system to store the content itself.

- Can we just do away with secret questions? Maybe we can take a vote at the next meeting. San Francisco Rail System Hacker Hacked: Finally, as I hope this story shows, truthfully answering secret questions is a surefire way to get your online account hacked. Personally, I try to avoid using vital services that allow someone to reset my password if they can guess the answers to my secret questions.

- Probabilistic Data Structure Showdown: Cuckoo Filters vs. Bloom Filters: a Cuckoo filter, which can be implemented simply, provides better practical performance, under certain circumstances, out of the box and without tuning than counting Bloom filters. Ultimately, Cuckoo filters can serve as alternatives in scenarios where a counting Bloom filter would normally be used.
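
Part of the appeal is that a cuckoo filter supports deletion, which a plain Bloom filter cannot; hence the comparison with counting Bloom filters. Here is a minimal Python sketch of partial-key cuckoo hashing, assuming 4-slot buckets, 16-bit fingerprints, SHA-256 as the hash, and a 500-kick eviction limit. These parameters are illustrative, not taken from the linked benchmark.

```python
import hashlib
import random
from typing import List, Optional, Tuple


def _hash64(data: bytes) -> int:
    """Deterministic 64-bit hash (truncated SHA-256), stable across runs."""
    return int.from_bytes(hashlib.sha256(data).digest()[:8], "big")


class CuckooFilter:
    def __init__(self, num_buckets: int = 1024, bucket_size: int = 4,
                 fp_bits: int = 16, max_kicks: int = 500, seed: int = 0) -> None:
        # A power-of-two bucket count keeps the XOR-based alternate index in range.
        if num_buckets & (num_buckets - 1):
            raise ValueError("num_buckets must be a power of two")
        self.buckets: List[List[int]] = [[] for _ in range(num_buckets)]
        self.mask = num_buckets - 1
        self.bucket_size = bucket_size
        self.fp_mask = (1 << fp_bits) - 1          # fp_bits assumed <= 32
        self.max_kicks = max_kicks
        self.rng = random.Random(seed)
        self.victim: Optional[Tuple[int, int]] = None  # (fingerprint, bucket) with no home

    def _alt(self, index: int, fp: int) -> int:
        # The other bucket depends only on the current bucket and the fingerprint,
        # so it can be computed during eviction. Applying _alt twice is a no-op.
        return (index ^ _hash64(fp.to_bytes(4, "big"))) & self.mask

    def _locate(self, item: str) -> Tuple[int, int, int]:
        h = _hash64(item.encode())
        fp = ((h >> 32) & self.fp_mask) or 1       # 0 is reserved; use 1 instead
        i1 = h & self.mask
        return fp, i1, self._alt(i1, fp)

    def insert(self, item: str) -> bool:
        if self.victim is not None:
            return False                            # filter is full
        fp, i1, i2 = self._locate(item)
        for i in (i1, i2):
            if len(self.buckets[i]) < self.bucket_size:
                self.buckets[i].append(fp)
                return True
        # Both buckets full: evict a random resident and move it to its other bucket.
        i = self.rng.choice((i1, i2))
        for _ in range(self.max_kicks):
            slot = self.rng.randrange(self.bucket_size)
            fp, self.buckets[i][slot] = self.buckets[i][slot], fp
            i = self._alt(i, fp)
            if len(self.buckets[i]) < self.bucket_size:
                self.buckets[i].append(fp)
                return True
        self.victim = (fp, i)                       # keep it so there are no false negatives
        return True

    def contains(self, item: str) -> bool:
        fp, i1, i2 = self._locate(item)
        return (fp in self.buckets[i1] or fp in self.buckets[i2]
                or self.victim in ((fp, i1), (fp, i2)))

    def delete(self, item: str) -> bool:
        """Remove one copy of item's fingerprint. Only delete items you inserted."""
        fp, i1, i2 = self._locate(item)
        for i in (i1, i2):
            if fp in self.buckets[i]:
                self.buckets[i].remove(fp)
                return True
        if self.victim in ((fp, i1), (fp, i2)):
            self.victim = None
            return True
        return False


filt = CuckooFilter()
keys = [f"user-{n}" for n in range(1000)]
inserted = [filt.insert(k) for k in keys]
removed = [filt.delete(k) for k in keys[:500]]     # deletion: a plain Bloom filter can't
print(all(inserted), all(removed), all(filt.contains(k) for k in keys[500:]))
```

A lookup probes exactly two buckets, and a false positive needs another item's fingerprint to sit in one of them, so the error rate is tuned by fingerprint and bucket size rather than by a count of hash functions.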

- Couldn't get into MIT? Most of its courses are available for free through MIT OpenCourseWare. Is there any data comparing the outcomes of people who attend the school with those who go the online route?

- All we need is another divide. Artificial intelligence is the new digital divide: In short, very soon, companies will be divided between those who are able to take advantage of artificial intelligence and machine learning for their day-to-day operations, and those that continue to operate as they always have, making them much less productive and much more unpredictable. We are talking here about the emergence of a new digital divide, a virtually Darwinian event in terms of competitiveness.

- Handling Overload. A good discussion of common overload patterns and workarounds available today. First, Little's Law: "what really matters to the capacity of your system is going to be how long a task takes to go through it, with how many can be handled at once (or concurrently) in the system. Anything above that will mean that you will sooner or later find yourself in a situation of overload. Increasing capacity or speeding up processing times will be the only way to help things if the average load does not subside." Backpressure: Mechanism by which you can resist to a given input, usually by blocking. Load-shedding: Mechanism by which you drop tasks on the floor instead of handling them. The naive and fast system: all the tasks are done asynchronously: the only feedback you get is that the request entered the system, and then you trust things to be fine for the rest of processing. Lots more details on how to get these done. It targets Erlang, but the ideas are general enough to be useful for all.
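
A minimal Python sketch of two of those ideas, assuming a single-process service: Little's Law (L = λW, items in the system = arrival rate × time each spends inside) to estimate needed concurrency, and a bounded queue that sheds new work when full, plus a blocking variant that applies backpressure instead. The article's own material targets Erlang; `SheddingQueue` and the numbers here are hypothetical.

```python
import queue


def required_concurrency(arrival_rate: float, avg_latency_s: float) -> float:
    """Little's Law: L = lambda * W."""
    return arrival_rate * avg_latency_s


class SheddingQueue:
    """Bounded work queue: never grows past max_size."""

    def __init__(self, max_size: int) -> None:
        self._q = queue.Queue(maxsize=max_size)
        self.dropped = 0

    def submit(self, task: object) -> bool:
        """Load shedding: reject immediately when full instead of blocking."""
        try:
            self._q.put_nowait(task)
            return True
        except queue.Full:
            self.dropped += 1
            return False

    def submit_with_backpressure(self, task: object, timeout_s: float) -> None:
        """Backpressure: block the producer; raises queue.Full after timeout_s."""
        self._q.put(task, timeout=timeout_s)


# 200 requests/s at 50 ms each means about 10 requests in flight on average.
print(required_concurrency(200, 0.05))

q = SheddingQueue(max_size=3)
results = [q.submit(i) for i in range(5)]
print(results, q.dropped)
```

If the system can only work on fewer requests at once than Little's Law says the load needs, work piles up; the bounded queue turns that into explicit drops (or a blocked producer) instead of unbounded memory growth and latency.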

- Riot built their own scheduling system, Admiral, for scheduling containers across clusters of Docker hosts. RUNNING ONLINE SERVICES AT RIOT: PART II: The advantage of not using a more general system: Keeping Admiral small, and focused on our infrastructure and needs allowed it to be manageable by a single team. Its basic operation is quite stable now, so one major thing that we are hoping to gain by moving to DC/OS is the ability to leverage the surrounding ecosystem for solutions like CNI on the bottom, Marathon packages on the top, and general knowledge and community all around.

- Walmart shares An Approach to Designing Distributed, Fault-Tolerant, Horizontally Scalable Event Scheduler.

- MyRocks: use less IO on writes to have more IO for reads: when you spend less on IO to write back changes then you can spend more on IO to handle user queries. That benefit is more apparent on slower storage (disk array) than on faster storage (MLC NAND flash) because slower storage is more likely to be the bottleneck.

- On DNA and Transistors: Here is the important bit: the total market value for transistors has grown for decades precisely because the total number of transistors shipped has climbed even faster than the cost per transistor has fallen. In contrast, biological manufacturing requires only one copy of the correct DNA sequence to produce billions in value.

- Brett Slatkin has written a really nice post on Building robust software with rigorous design documents. The idea is: "Writing a design document is how software engineers use simple language to capture their investigations into a problem." So you need a structure and the one he proposes looks like it could work for a lot of people.

- A new perovskite could lead the next generation of data storage: We have essentially discovered the first magnetic photoconductor. This new crystal structure combines the advantages of both ferromagnets, whose magnetic moments are aligned in a well-defined order, and photoconductors, where light illumination generates high density free conduction electrons.

- tozny/e3db: a tool for programmers who want to build an end-to-end encrypted database with sharing into their projects

- baidu/tera: a high performance distributed NoSQL database, which is inspired by google's BigTable and designed for real-time applications. Tera can easily scale to petabytes of data across thousands of commodity servers. Besides, Tera is widely used in many Baidu products with varied demands, which range from throughput-oriented applications to latency-sensitive service, including web indexing, WebPage DB, LinkBase DB, etc.

- Nordstrom/serverless-artillery: Combine serverless with artillery and you get serverless-artillery for instant, cheap, and easy performance testing at scale.

- Firmament: Fast, centralized cluster scheduling at scale: In the final implementation, instead of implementing some fancy predictive algorithm to decide when to use cost scaling and when to use relaxation, the Firmament implementation makes the pragmatic choice of running both of them (which is cheap) and uses the solution from whichever one finishes first! A technique called price refine (originally developed for use within cost scaling) helps when the next run of the incremental cost scaling algorithm must execute based on the state from a previous relaxation algorithm run. It speeds up incremental cost scaling by up to 4x in 90% of cases in this situation.
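
Firmament's pragmatic trick of running both solvers and keeping whichever finishes first is easy to express generically. A minimal Python sketch, assuming thread-based concurrency (Python 3.9+ for `cancel_futures`); the two solver functions are hypothetical stand-ins with artificial delays, not Firmament's min-cost-flow code. CPython threads only overlap here because the stand-ins sleep; CPU-bound solvers would need processes.

```python
import concurrent.futures as cf
import time
from typing import Callable, Sequence, Tuple, TypeVar

T = TypeVar("T")


def first_to_finish(solvers: Sequence[Tuple[str, Callable[[], T]]]) -> Tuple[str, T]:
    """Start every solver concurrently; return (name, result) of the first to finish."""
    pool = cf.ThreadPoolExecutor(max_workers=len(solvers))
    futures = {pool.submit(fn): name for name, fn in solvers}
    done, _ = cf.wait(futures, return_when=cf.FIRST_COMPLETED)
    winner = next(iter(done))
    # Don't block on the slower solver. Running threads can't be interrupted,
    # so the loser finishes in the background and its result is ignored.
    pool.shutdown(wait=False, cancel_futures=True)
    return futures[winner], winner.result()


# Hypothetical stand-ins for the two algorithms.
def cost_scaling() -> str:
    time.sleep(0.2)            # pretend this run happens to be the slow one
    return "placement from cost scaling"


def relaxation() -> str:
    time.sleep(0.01)
    return "placement from relaxation"


name, placement = first_to_finish([("cost scaling", cost_scaling),
                                   ("relaxation", relaxation)])
print(name, "->", placement)
```

Racing avoids having to predict which algorithm will win on a given cluster state; the price is running both, which the Firmament summary notes is cheap.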

- The Emergence of Organizing Structure in Conceptual Representation: Here we introduce a new computational model of how organizing structure can be discovered, utilizing a broad hypothesis space with a preference for sparse connectivity. Given that the inductive bias is more general, the model's initial knowledge shows little qualitative resemblance to some of the discoveries it supports. As a consequence, the model can also learn complex structures for domains that lack intuitive description, as well as predict human property induction judgments without explicit structural forms.

- The Verification of a Distributed System: Distributed Systems are difficult to build and test for two main reasons: partial failure & asynchrony. These two realities of distributed systems must be addressed to create a correct system, and often times the resulting systems have a high degree of complexity. Because of this complexity, testing and verifying these systems is critically important. In this talk we will discuss strategies for proving a system is correct, like formal methods, and less strenuous methods of testing which can help increase our confidence that our systems are doing the right thing.

- Early detection of configuration errors to reduce failure damage: test all of your configuration settings as part of system initialization and fail-fast if there’s a problem. 75% of all high severity configuration-related errors are caused by latent configuration errors.
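
Latent configuration errors are settings that are only read when some rarely exercised code path runs, so the mistake surfaces long after deployment. A minimal Python sketch of the fail-fast alternative: parse and check every setting at startup, report all problems at once, and refuse to start. `ServerConfig`, its fields, and the limits are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Mapping, Optional


class ConfigError(ValueError):
    """Raised at startup when any setting is missing or invalid."""


@dataclass(frozen=True)
class ServerConfig:
    port: int
    max_connections: int
    request_timeout_s: float


def load_config(raw: Mapping[str, str]) -> ServerConfig:
    """Validate every setting up front; collect all problems, then fail fast."""
    errors: List[str] = []

    def parse(key: str, convert: Callable[[str], Any],
              check: Callable[[Any], bool], rule: str) -> Optional[Any]:
        if key not in raw:
            errors.append(f"{key}: missing")
            return None
        try:
            value = convert(raw[key])
        except ValueError:
            errors.append(f"{key}: cannot parse {raw[key]!r}")
            return None
        if not check(value):
            errors.append(f"{key}: {rule} (got {value!r})")
        return value

    port = parse("port", int, lambda v: 1 <= v <= 65535, "must be 1-65535")
    conns = parse("max_connections", int, lambda v: v > 0, "must be positive")
    timeout = parse("request_timeout_s", float, lambda v: 0 < v <= 300, "must be in (0, 300]")
    if errors:
        raise ConfigError("; ".join(errors))
    return ServerConfig(port, conns, timeout)


cfg = load_config({"port": "8080", "max_connections": "512", "request_timeout_s": "2.5"})
print(cfg)
try:
    load_config({"port": "80800", "max_connections": "0"})
    err_msg = ""
except ConfigError as e:
    err_msg = str(e)
    print("refusing to start:", err_msg)
```

Collecting every error before raising means one failed startup shows all the bad settings, rather than one per redeploy.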

- Antikernel: A Decentralized Secure Hardware-Software Operating System Architecture: a novel operating system architecture consisting of both hardware and software components and designed to be fundamentally more secure than the state of the art. To make formal verification easier, and improve parallelism, the Antikernel system is highly modular and consists of many independent hardware state machines (one or more of which may be a general-purpose CPU running application or systems software) connected by a packet-switched network-on-chip (NoC). We create and verify an FPGA-based prototype of the system.

- Slicer: Auto-Sharding for Datacenter Applications: Slicer is Google’s general purpose sharding service. It monitors signals such as load hotspots and server health to dynamically shard work over a set of servers. Its goals are to maintain high availability and reduce load imbalance while minimizing churn from moved work.

- Greg Linden is back with another tasty batch of Quick links.