Running Large Graph Algorithms - Evaluation of Current State-of-the-Art and Lessons Learned

On the surface nothing appears more different than soft data and hard raw materials like iron. Then isn’t it ironic, in the Alanis Morissette sense, that in this Age of Information, great wealth still lies hidden deep beneath piles of stuff? It's so strange how directly digging for dollars in data parallels the great wealth producing models of the Industrial Revolution.

The piles of stuff is the Internet. It takes lots of prospecting to find the right stuff. Mighty web crawling machines tirelessly collect stuff, bringing it into their huge maws, then depositing load after load into rack after rack of distributed file system machines. Then armies of still other machines take this stuff and strip out the valuable raw materials, which in the Information Age, are endless bytes of raw data. Link clicks, likes, page views, content, head lines, searches, inbound links, outbound links, search clicks, hashtags, friends, purchases: anything and everything you do on the Internet is a valuable raw material.

By itself data is no more useful than a truck load of iron ore. Data must be brought to a factory. It must be purified, processed, and formed. That’s the job for a new field of science called Data Science. Yes, while you weren't looking a whole new branch of science was created. It makes sense in a way. Since data is a new kind of material we need a new profession paralleling that of the Material Scientist, someone who seeks to deeply understand data, the Data Scientist. We aren't so much in the age of data, as the age of data inference.

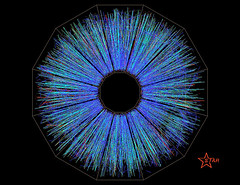

In this Google Tech Talk video, Running Large Graph Algorithms - Evaluation of Current State-of-the-Art and Lessons Learned, Andy Yoo, a Computer Scientist at Lawrence Livermore Labs, says the idea behind Data Science is to: fuse different forms of data from different sources into a graph, run graph mining algorithms, and extract out useful information. Data Science tries to understand unstructured data. Scientific data is usually structured, but web data is highly unstructured, consisting of text, web pages, sensor data, images, and so on.

Finding meaning in unstructured data requires using different techniques, like graph mining, which has been profitably put to use in:

- Google’s PageRank - finding the relative importance of web pages for searching.

- Social Network Analysis - finding how groups are divided; find who leads a group; find who has influence within a group; find who knows who and who does what in a terrorist network; disect messy social network interactions and figure out who is the most popular and who hangs out with who. For example, some key people from a large Enron email graph were identified by running the Page Rank algorithm.

- Bioinformatics - find which proteins function similarly.

- Pattern Matching - given a pattern find all the instances of a subgraph of this pattern. Useful for fraud detection and cyber security.

From the above list we’ve established mining large graphs is a money maker. And like mining for materials in the ground, running large graph algorithms turns out to be very hard work. Mr. Yoo says the difficulties arise because:

- Graphs are Very Large

- Graphs with 10^9+ nodes and edges are increasingly common

- Intermediate result increases exponentially in many cases

- Data is too large to be stored in memory.

- Graphs are Complex and Hard to Parallelize

- Common graph mining algorithms have high-order computational complexity

- High-order algorithms (O(N^2+)) - Page rank, community finding, path traversal

- NP-Complete algorithms - Maximal cliques, subgraph pattern matching

At the urging of Livermore Labs, Mr. Yoo and his team conducted a number of experiments to evaluate different technologies for solving large graph problems. The bulk of the video is taken up explaining each experiment. Only a brief summary of each experiment is given here. The general pattern is to pick a promising technology, figure out how to make their example graph search program run on the platform, and then run the search. The promising technologies are: Distributed-memory Parallel Architectures (IBM BLueGene/L), Shared-memory Multi-threading Architecture (Sun UltraSPARC T2 Niagra), Relational Database (Netezza), Custom Software (their own MSSG program), Cloud Computing (Hadoop Map/Reduce), Dataflow System (Data Analytic Supercomputer (DAS)). Each platform presents different problems that need to be solved. It's those problems that make it difficult to scale to solve large graphs problems.

Each experiment ran a “strong scaling” and “weak scaling” form of the search test:

- Strong Scaling - fix the global problem size while more processors are added.

- Weak Scaling - increase the number of processors as the global problem size is increased.

Different technologies respond to these scenarios in different ways. For example, in the strong scaling test as you add more processors you may see linear performance. Search times decrease and communication overhead increases because processors have to exchange more data. In the weak scaling test as you add more work performance may be sublinear as disk writes and communication overhead inreases. Or you may see lock contention increase and performance just take a dive.

The result for each experiment:

- Distributed-memory Parallel Architecture : IBM BLueGene/L

- Massively parallel,130,000 low power processors. 32TB total memory. MPI message-passing between nodes. Fast interconnects.

- The solution created an all-to-all communication pattern. The message size increases and becomes the scalability bottleneck. Steps were taken to minimize communication, optimize memory management, reduce communication time, and reduce message volume. Removing redundant vertices had a large effect.

- Lessons learned:

- Programming using message passing is difficult and scaling message passing systems is even harder.

- Communication is the bottleneck. Optimizations to get around these bottlenecks took a lot of work.

- Existing tools bomb on large algorithms so not that useful a platform.

- Scale-free graphs may not scale due to the “hubs.”

- Shared-memory Multi-threading Architecture : Sun UltraSPARC T2 Niagra

- Server-on-a-chip design, power efficient, throughput oriented design, 128GB RAM, PCI-Express, 8 cores, 8 threads per core, chip multithreading. Goal is to have threads running all the time, thread context switching is in hardware.

- Lessons learned:

- Shared state between threads killed performance because of lock contention.

- Easier to program than the message passing model.

- Memory, number hardware threads, and lock contention were bottlenecks.

- To fully exploit this style of hardware asynchronous lock-free algorithms need to be developed.

- Relational Database : Netezza

- Relational database widely deployed because they are available and easy to use.

- Netezza moves the computation to where the data is stored. A FGPA is used as an accelerator.

- The advantage of a relational database is you can store a lot of data. They tried a 300 billion edge graph with 11 billion nodes for a total of 13.3TB storage on a 673 node NPS configuration. 80% of the queries returned in 5 minutes using a bidirectional search technique which halved the diameter of the graph.

- Lessons learned:

- Graph algorithms require a lot of joins. Join performance was not that good so the performance was not that good.

- Easy to program and relatively inexpensive.

- Could not impact optimization because the SQL compiler is hidden and changes between compilers.

- Initialization of data may take hours for large graphs.

- Custom Software : MSSG

- This is a stream graph clustering engine that they built themselves specifically to solve the graph search problem. It can run on any cluster. Data is stored on disk.

- Lessons learned:

- Well designed custom software can easily scale to billion edge graphs, perform well, and be relatively inexpensive compared to other options. The cost is in long software development times and a lack of generality across other graph algorithm domains.

- Communication and disk writes are bottlenecks.

- Need a clearly defined programming model.

- Cloud Computing : Hadoop Map/Reduce

- Lessons learned:

- Cost effective.

- Performed better that Netezza NPS, but was slow.

- Model limited to embarrassingly parallel applications, not ideal for complex graph algorithms.

- Poor handling of intermediate results was a performance bottleneck. It’s all stored to disk and read back again.

- Map/reduce will have to evolve into a dataflow model to increase data parallelism.

- Minimize global states, group related data together.

- Need to stream data between nodes instead of storing intermediate data to disk and to be able to process data in memory.

- Lessons learned:

- Dataflow System : Data Analytic Supercomputer (DAS)

- Map/reduce on steroids. More flexible and more complex than Map/Reduce.

- Data is operated on in parallel and data is independent.

- Tasks are triggered by the availability of data.

- No flow of control.

- They had access to Data Analytic Supercomputer (DAS).

- DAS is a parallel dataflow engine that runs on a standard cluster. It is very expensive.

- The smarts is in the software, it is highly optimized. Streaming data is pipelined for maximum in-memory processing. Disk access is made sequential for optimized throughput. Optimized sort and join operations. Users can structure their data management nodes anyway they want and combine them together for better performance.

- Uses an active-disk system to “bring computation to where data is, instead of moving data from data store”

- As soon as nodes arrive into the DAS they start executing. It all runs in parallel.

- Search performance was 5 times better than the next best system (MSSG) because of data parallelism and a highly optimized library.

- Ran some real-world queries on PubMed data and found a 250-300X speedup compared to relational databases. The key in these queries was the intermediate data really grew and DAS was able to handle it.

- Lessons learned:

- Orders of magnitude performance over state-of-art SQL machines.

- Relatively easy to program.

- Enables asynchronous data parallelism. Streaming data for handling large intermediate results, pipelines data flow, flexible user optimization.

- Software is very expensive, but hardware is off the shelf.

- Memory for in-core processing is performance bottleneck.

- To handle graph algorithms there needs to be some sort of flow control for the data. Tell it to stop here or go there. This was very limiting, almost a show stopper.

Overall Lessons Learned

- No real winner for large graphs.

- For people needing real-time response the BlueGene type systems are required.

- Hadoop won on cost.

- MSSG and dataflow won on performance.

- Thinks none of the systems will scale to very large graphs.

- The future is the dataflow model because it supports the asynchronous data parallel model.

- Graph algorithms require a lot of global states which become the bottleneck. So we need to learn how to write new kinds of algorithms.

- Interested in Pregel because it is easy to program and works on a message passing infrastructure.

As graphs are used to solve more and more problems, a graph view of data may become a standard view on data, sitting right along side more traditional views like objects, search, and transactional. Graphs will become ever larger and graphs will need to be mined, so it seems some flexible declarative means of operating on graphs over available topographies will be needed to make these systems perform well and be scalable. We haven't got there yet.

Even more interesting to me is what this implies for data openess. When we talk about open data in the future, will we talk about basic import/export dumps or simple APIs, or is what we really need to talk about is how open are your highly organized graphs and how can I run my data mining code on them?

Related Articles

- Microsfot’s Dryad - high-performance, general-purpose distributed computing engine that handles some of the most difficult aspects of cluster-based distributed computing such as automatic scheduling of processes on the cluster machines, monitoring, fault-tolerance, and support for efficient data-transfer between processes. Dryad provides excellent performance and scalability, and can handle very large-scale data-parallel computations. Microsoft routinely uses Dryad to analyze petabytes of data on cluster of thousands of computers.

- HCDF: A Hybrid Community Discovery Framework by Tina Eliassi-Rad. We introduce a novel Bayesian framework for hybrid community discovery in graphs.

- Evaluating use of data flow systems for large graph analysis by Andy Yoo and Ian Kaplan. (slides)

- Pregel - large scale graph processing at Google.

- Pregel: Google’s other data-processing infrastructure by Royans.

- Rise of the Data Scientist by Nathan Yau.

- Hive - provides tools to enable easy data ETL, a mechanism to put structures on the data, and the capability to querying and analysis of large data sets stored in Hadoop files.

- Neo4j - a Graph Database that Kicks Buttox.

- DARPA-SN-10-36: Graph Understanding and Analysis for Rapid Detection - Deployed on the Ground (GUARD DOG) Industry Day. One of the key challenges facing U.S. soldiers operating in counterinsurgency environments is developing and maintaining an understanding of the local and regional political, social, economic, and infrastructure networks.

- Gremln - Gremlin is a Turing-complete, graph-based programming language developed in Java 1.6+ (JSR 223) for key/value-pair multi-relational graphs called property graphs. Gremlin makes extensive use of XPath 1.0 to support complex graph traversals. This language has application in the areas of graph query, analysis, and manipulation.

- CLTV45: The Evolution of the Graph Data Structure from Research to Production - In this recording from “NoSQL Live Boston” we learn how Graph Data Structures evolved from research into production.

- Paper: Graph Twiddling in a MapReduce World by Hyunsik Choi

- Cascading - a feature rich API for defining and executing complex, scale-free, and fault tolerant data processing workflows on a Hadoop cluster.

- The Ongoing Data Revolution by Ed Sim. The question is who will create the next great back-end technologies and new web services that drive a whole new conversation and new way of thinking about what we do with the data that is around everywhere.