Intercloud: How Will We Scale Across Multiple Clouds?

A Brief History of the Internet explains that the Internet was based on the idea that there would be multiple independent networks of rather arbitrary design. The Internet as we now know it embodies a key underlying technical idea, namely that of open architecture networking. In this approach, the choice of any individual network technology was not dictated by a particular network architecture but rather could be selected freely by a provider and made to interwork with the other networks through a meta-level "Internetworking Architecture".

With the cloud we are in the same situation today, just a layer or two higher up the stack. We have independent clouds that we would like to connect and have work together seamlessly, preferably with the ease with which we currently connect nodes to a network and networks to the Internet. This technology seems to be called the Intercloud: an interconnected global "cloud of clouds," as opposed to the Internet, which is a "network of networks."

Vinton Cerf, the person most often called the father of the Internet, says in Cloud Computing and the Internet that we are ripe for an Intercloud in the same way we were once ripe for the Internet:

Cloud computing is at the same stage [pre-Internet]. Each cloud is a system unto itself. There is no way to express the idea of exchanging information between distinct computing clouds because there is no way to express the idea of “another cloud.” Nor is there any way to describe the information that is to be exchanged. Moreover, if the information contained in one computing cloud is protected from access by any but authorized users, there is no way to express how that protection is provided and how information about it should be propagated to another cloud when the data is transferred.

...

There are many unanswered questions that can be posed about this new problem. How should one reference another cloud system? What functions can one ask another cloud system to perform? How can one move data from one cloud to another? Can one request that two or more cloud systems carry out a series of transactions? If a laptop is interacting with multiple clouds, does the laptop become a sort of “cloudlet”? Could the laptop become an unintended channel of information exchange between two clouds? If we implement an inter-cloud system of computing, what abuses may arise? How will information be protected within a cloud and when transferred between clouds. How will we refer to the identity of authorized users of cloud systems? What strong authentication methods will be adequate to implement data access controls?

The question is: what will the Intercloud look like? Many have weighed in with their visions of the future.

Father of the Web Tim Berners-Lee, says Mr. Cerf, thinks that data linking may prove to be a part of the vocabulary needed to interconnect computing clouds. In Mr. Cerf's words: "The semantics of data and of the actions one can take on the data, and the vocabulary in which these actions are expressed appear to me to constitute the beginning of an inter-cloud computing language."

This seems an oddly sterile vision to me. It's like a language without verbs, and if there's one thing the Internet is good at, it's action.

Here's Cisco's vision of the Intercloud:

Apparently there's a document explaining their vision, Blueprint for the Intercloud: Protocols and Formats for Cloud Computing Interoperability, but like a disturbingly large percentage of potentially useful computer science papers, it's hidden behind a paywall. A kind reader came through with a direct link.

A somewhat more chaotic vision can be found in Building Super Scalable Systems: Blade Runner Meets Autonomic Computing in the Ambient Cloud.

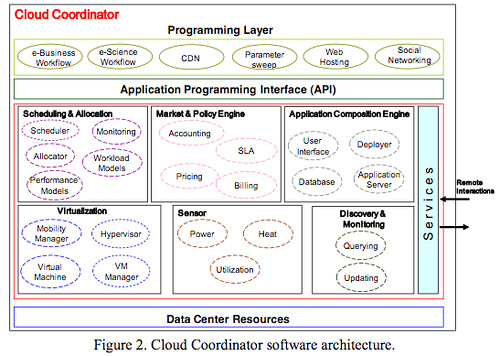

Another Intercloud possibility is given in the paper InterCloud: Scaling of Applications across multiple Cloud Computing Environments, which makes a very thoughtful attempt at defining what an Intercloud would look like. The paper's abstract gives a good overview of their approach:

Cloud computing providers have setup several data centers at different geographical locations over the Internet in order to optimally serve needs of their customers around the world. However, existing systems do not support mechanisms and policies for dynamically coordinating load distribution among different Cloud-based data centers in order to determine optimal location for hosting application services to achieve reasonable QoS levels. Further, the Cloud computing providers are unable to predict geographic distribution of users consuming their services, hence the load coordination must happen automatically, and distribution of services must change in response to changes in the load. To counter this problem, we advocate creation of federated Cloud computing environment (InterCloud) that facilitates just-in-time, opportunistic, and scalable provisioning of application services, consistently achieving QoS targets under variable workload, resource and network conditions. The overall goal is to create a computing environment that supports dynamic expansion or contraction of capabilities (VMs, services, storage, and database) for handling sudden variations in service demands.

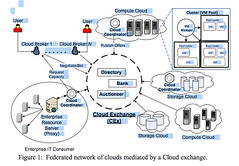

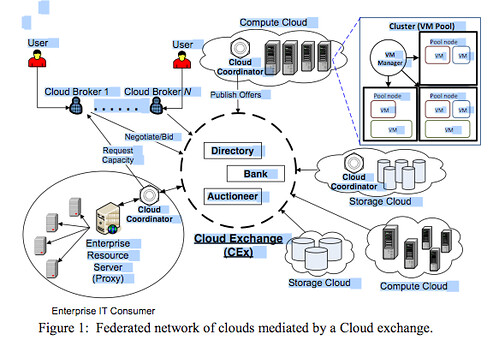

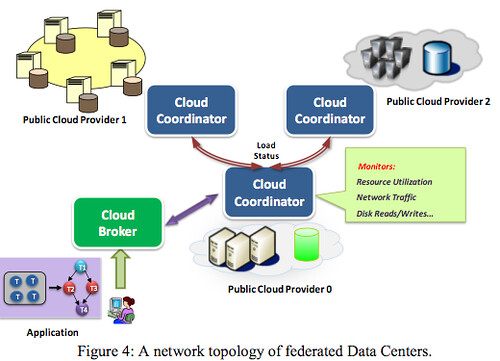

The parts of their system include:

- Cloud Coordinator - exports Cloud services and their management driven by market-based trading and negotiation protocols for optimal QoS delivery at minimal cost and energy.

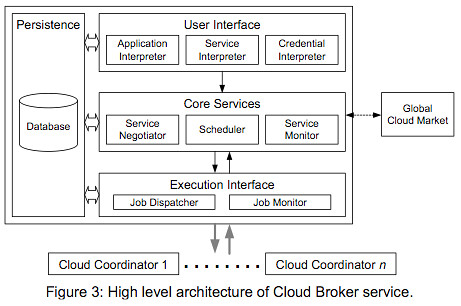

- Cloud Broker - responsible for mediating between service consumers and Cloud coordinators.

- Cloud Exchange - a market maker enabling capability sharing across multiple Cloud domains through its match making services.
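To make the division of labor among these three parts a bit more concrete, here is a minimal Python sketch. It is not the paper's design or API. Every class, field, and method name below (`Offer`, `Request`, `publish`, `match`, `place`) is an assumption made up for illustration, and the matching rule (cheapest offer that satisfies region, capacity, and price) is a deliberately simple stand-in for the market-based negotiation the paper describes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    """Capacity a Cloud Coordinator advertises (hypothetical fields)."""
    cloud: str
    region: str
    free_vms: int
    price_per_vm_hour: float


@dataclass(frozen=True)
class Request:
    """What a Cloud Broker asks for on behalf of a consumer (hypothetical fields)."""
    vms: int
    region: str
    max_price_per_vm_hour: float


class CloudExchange:
    """Toy market maker: holds offers and matches requests against them."""

    def __init__(self):
        self._offers = []

    def publish(self, offer: Offer) -> None:
        # Called by a coordinator to export its cloud's capacity.
        self._offers.append(offer)

    def match(self, request: Request) -> Optional[Offer]:
        # Keep only offers that satisfy region, capacity, and price.
        candidates = [
            o for o in self._offers
            if o.region == request.region
            and o.free_vms >= request.vms
            and o.price_per_vm_hour <= request.max_price_per_vm_hour
        ]
        # Assumed policy: the cheapest acceptable offer wins.
        return min(candidates, key=lambda o: o.price_per_vm_hour, default=None)


class CloudBroker:
    """Mediates between a service consumer and the exchange."""

    def __init__(self, exchange: CloudExchange):
        self._exchange = exchange

    def place(self, request: Request) -> Optional[str]:
        # Returns the name of the chosen cloud, or None if nothing fits.
        offer = self._exchange.match(request)
        return offer.cloud if offer else None


# Usage: two coordinators publish capacity, a broker places a request.
exchange = CloudExchange()
exchange.publish(Offer("cloud-a", "eu", free_vms=10, price_per_vm_hour=0.12))
exchange.publish(Offer("cloud-b", "eu", free_vms=50, price_per_vm_hour=0.09))
broker = CloudBroker(exchange)
chosen = broker.place(Request(vms=8, region="eu", max_price_per_vm_hour=0.10))
print(chosen)
```

Even in this toy form the exchange has to hold state about every participating cloud, which is the kind of specialization the rest of this post questions.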

They've created a great series of pictures showing what their system looks like:

Some complained in the Cloud Computing Group that billing and security issues were not addressed, but it's one of the most complete presentations that I've seen. That completeness may be part of the problem.

The original isolation of the Internet from mass adoption allowed a long period of experimentation and development to evolve and mature the Internet concepts and technology. The Intercloud will make its first steps into a completely formed world. Very scary for a newborn. Will time and space be given the Intercloud so it can develop on its own terms?

Constructed on high-level stateful services like brokers and coordinators, the Intercloud infrastructure is very specialized. The Internet, on the other hand, was designed to be a general platform on which any application can be built. The Intercloud seems more like an application layer feature being pushed down into the network layer. Is standardization at this high a level the best idea? Yet routing protocols like BGP and services like DNS are quite complex too.

When faced with a confusing and complicated subject like the Intercloud, it helps to identify a few guiding principles. A Brief History of the Internet recounts how Bob Kahn, responsible for much of the overall ARPANET architectural design, designed the TCP/IP communications protocol using these four ground rules:

- Each distinct network would have to stand on its own and no internal changes could be required to any such network to connect it to the Internet.

- Communications would be on a best effort basis.

- Black boxes would be used to connect the networks; these would later be called gateways and routers. There would be no information retained by the gateways about the individual flows of packets passing through them.

- There would be no global control at the operations level.

That these resiliency rules and the RFC process have worked is undeniable. The Internet has proven robust, scalable, and infinitely malleable, if a bit maddening. Should the success of the process that created the Internet make us wary of fully formed solutions (from any source) that haven't had the time to evolve in response to real world scenarios?

So what are we to make of all this? There are so many potential directions. I like how Chris Hoff (no relation) sees it playing out in his post Inter-Cloud Rock, Paper, Scissors: Service Brokers, Semantic Web or APIs?:

In the short term we’ll need the innovators to push with their own API’s, then the service brokers will abstract them on behalf of consumers in the mid-stream and ultimately we will arrive at a common, open and standardized way of solving the problem in the long term with a semantic capability that allows fluidity and agility in a consumer being able to take advantage of the model that works best for their particular needs.

Related Articles

- Dr. Buyya and Cloud Computing Blog

- The Cloud Computing and Distributed Systems (CLOUDS) Laboratory, University of Melbourne

- Intercloud on Wikipedia

- A Hitchhiker's Guide to the Inter-Cloud by Krishna Sankar

- Life on the Inter-cloud by David Smith

- Cloud Computing and the Internet by Vinton Cerf, Chief Internet Evangelist

- A Brief History of the Internet

- Rulers of the Cloud: A Multi-Tenant Semantic Cloud is Forming & EMC Knows that Data Matters by Mike Kirkwood

- Cloud Balancing, Reverse Cloud Bursting, and Staying PCI-Compliant

- The Inter-Cloud: Will MAE Become a MAC? by Peter Silva

- The Inter-Cloud and internet analogies by James Urquhart