Strategy: Use Linux Taskset to Pin Processes or Let the OS Schedule It?

This question comes from Ulysses in a thread on the Mechanical Sympathy newsgroup, and it is especially interesting now that multiple processors are the norm:

Ulysses:

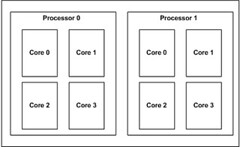

- On an 8xCPU Linux instance, is it at all advantageous to use the Linux taskset command to pin an 8xJVM process set (co-ordinated as a www.infinispan.org distributed cache/data grid) to a specific CPU affinity set (i.e. pin JVM0 process to CPU 0, JVM1 process to CPU 1, ..., JVM7 process to CPU 7) vs. just letting the Linux OS use its default mechanism for provisioning the 8xJVM process set to the available CPUs?

- In an effort to seek an optimal point (in the full event space), what are the conceptual trade-offs in considering "searching" each permutation of provisioning an 8xJVM process set to an 8xCPU set via taskset?
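To get a feel for the size of that search, here is a minimal sketch that counts the one-to-one placements of 8 JVMs onto 8 CPUs. The function names and the 60-second benchmark length are assumptions made for illustration; they do not come from the thread.

```python
import math
from itertools import islice, permutations


def count_placements(n_procs, n_cpus):
    """Count one-to-one placements of n_procs processes onto n_cpus CPUs."""
    if n_procs > n_cpus:
        return 0
    return math.factorial(n_cpus) // math.factorial(n_cpus - n_procs)


def placements(n_procs, n_cpus):
    """Lazily yield placements as tuples: index = JVM number, value = CPU."""
    return permutations(range(n_cpus), n_procs)


if __name__ == "__main__":
    total = count_placements(8, 8)
    print("placements to try:", total)  # 40320

    seconds_per_run = 60  # assumed length of one benchmark run
    print("days of benchmarking:", total * seconds_per_run / 86400)  # 28.0

    # Peek at the first few candidates without materialising all of them.
    for placement in islice(placements(8, 8), 3):
        print(placement)
```

Even with a short benchmark, an exhaustive search takes weeks. Many placements are also likely to be equivalent when cores are symmetric, so in practice only a handful of topologically distinct layouts would need measuring.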

Given that taskset is the key to the question, it helps to have a definition:

Used to set or retrieve the CPU affinity of a running process given its PID or to launch a new COMMAND with a given CPU affinity. CPU affinity is a scheduler property that "bonds" a process to a given set of CPUs on the system. The Linux scheduler will honor the given CPU affinity and the process will not run on any other CPUs.
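As a rough illustration of the affinity property described above, the sketch below uses Python's `os.sched_getaffinity` and `os.sched_setaffinity`, which wrap the Linux affinity system calls and are available on Linux only. The helper name `run_pinned` is hypothetical. From a shell, the comparable operations would be along the lines of `taskset -c 0 <command>` to launch with an affinity, or `taskset -cp 0 <pid>` to change a running process.

```python
import os


def run_pinned(cpu, fn):
    """Run fn() with the calling thread restricted to one CPU, then restore."""
    original = os.sched_getaffinity(0)  # pid 0 means "the caller"
    if cpu not in original:
        raise ValueError(f"CPU {cpu} is not in the allowed set {sorted(original)}")
    os.sched_setaffinity(0, {cpu})
    try:
        return fn()
    finally:
        # Put the original affinity back so later code is unaffected.
        os.sched_setaffinity(0, original)


if __name__ == "__main__":
    allowed = sorted(os.sched_getaffinity(0))
    print("allowed before pinning:", allowed)
    seen = run_pinned(allowed[0], lambda: sorted(os.sched_getaffinity(0)))
    print("allowed while pinned:", seen)
    print("allowed after restore:", sorted(os.sched_getaffinity(0)))
```

The sketch picks a CPU from the set the process is already allowed to use, since asking for a CPU outside that set (for example inside a restricted container) would fail.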

On the thread there's a suggestion to use Java-Thread-Affinity instead of taskset.

Opinions on the subject differ. The most common is to just let the OS do the scheduling for you: the OS knows best. That is what Paul de Verdière found in general, though he makes an exception for low-latency tasks:

In my somewhat empirical experience with CPU pinning, I observed that pinning an entire JVM (single thread, cpu-intensive application) to a single core gave not as good performance as letting the OS choose CPUs with its default scheduler. This is probably due to misc housekeeping threads competing with the applicative threads. CPU-pinning on a per-thread basis(*) makes sense when low-latency/high responsiveness is involved, in which case CPU isolation should also be used to avoid pollution by other processes. For heavy parallel computations, I tend to think this is not really necessary.
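Per-thread pinning, as opposed to pinning a whole process, can be sketched as follows. This is an illustration only: it uses Python threads on Linux as a stand-in for JVM threads, the function names are hypothetical, and it relies on the Linux behaviour that an affinity call with pid 0 applies to the calling thread rather than to every thread in the process.

```python
import os
import threading


def pinned_worker(cpu, results):
    """Bind only this worker thread to one CPU and record what it sees."""
    # pid 0 targets the calling thread, so other threads keep their affinity.
    os.sched_setaffinity(0, {cpu})
    results[cpu] = os.sched_getaffinity(0)


def run_one_thread_per_cpu(cpus):
    """Start one worker per CPU and return each worker's observed affinity."""
    results = {}
    threads = [
        threading.Thread(target=pinned_worker, args=(cpu, results))
        for cpu in cpus
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


if __name__ == "__main__":
    allowed = sorted(os.sched_getaffinity(0))
    observed = run_one_thread_per_cpu(allowed[:4])
    for cpu, affinity in sorted(observed.items()):
        print(f"worker for CPU {cpu} is bound to {sorted(affinity)}")
    # The main thread was never pinned, so it keeps the original set.
    print("main thread still allowed on:", sorted(os.sched_getaffinity(0)))
```

Housekeeping threads that are left unpinned remain free to run elsewhere, which is the difference from pinning the entire process to one core.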

Performance guru Martin Thompson has written about how to Exploit Processor Affinity For High And Predictable Performance.

Russell Sullivan, in Russ’ 10 Ingredient Recipe For Making 1 Million TPS On $5K Hardware, talked about a related concept: using IRQ affinity in the NIC to keep ALL soft interrupts (generated by TCP packets) from bottlenecking on a single core.
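On Linux, IRQ affinity is typically set by writing a hexadecimal CPU bitmask to `/proc/irq/<n>/smp_affinity`. The sketch below only computes such masks and prints a plan; it writes nothing. The IRQ numbers are hypothetical placeholders (real ones would come from `/proc/interrupts`), and the helper names are made up for illustration.

```python
def cpus_to_mask(cpus):
    """Encode an iterable of CPU numbers as a hex bitmask string."""
    mask = 0
    for cpu in cpus:
        if cpu < 0:
            raise ValueError("CPU numbers must be non-negative")
        mask |= 1 << cpu  # bit N set means CPU N may service the IRQ
    return format(mask, "x")


def mask_to_cpus(hex_mask):
    """Decode a hex bitmask (commas between 32-bit groups allowed)."""
    value = int(hex_mask.replace(",", ""), 16)
    return [bit for bit in range(value.bit_length()) if (value >> bit) & 1]


def spread_irqs(irqs, cpus):
    """Assign IRQs to CPUs round-robin; returns (path, mask) pairs."""
    plan = []
    for index, irq in enumerate(irqs):
        cpu = cpus[index % len(cpus)]
        plan.append((f"/proc/irq/{irq}/smp_affinity", cpus_to_mask([cpu])))
    return plan


if __name__ == "__main__":
    hypothetical_nic_irqs = [40, 41, 42, 43]  # placeholders, not real IRQs
    for path, mask in spread_irqs(hypothetical_nic_irqs, [0, 1, 2, 3]):
        print(f"would write {mask} to {path}")
```

Applying such a plan requires root, and a running irqbalance daemon may rewrite the masks afterwards, so treat this as a starting point rather than a recipe.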

In The Secret To 10 Million Concurrent Connections - The Kernel Is The Problem, Not The Solution, Robert Graham suggests telling the OS to use the first two cores, then setting which cores your threads run on, so that you own those CPUs and Linux doesn’t.

Mike (I'm assuming Michael Barker, but I don't know for sure) gave a really great answer with specific tool suggestions from their experience on LMAX:

Taskset is a fairly blunt tool; thread affinity will give you finer grained control and will probably be more useful if you are trying to exploit memory locality. As Peter himself also points out (http://vanillajava.blogspot.co.nz/2013/07/micro-jitter-busy-waiting-and-binding.html), if your goal is to eliminate latency jitter, thread affinity is best combined with isolcpus. While using thread affinity will prevent your thread from being scheduled elsewhere, it doesn't preclude the OS from scheduling something else on the bound CPU, potentially introducing jitter.
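Before binding latency-sensitive threads, it can help to check which CPUs the kernel has actually isolated. The sketch below assumes a reasonably recent Linux kernel that exposes the isolated set in `/sys/devices/system/cpu/isolated`, using the usual CPU-list notation such as `2-3,5`. The function names are hypothetical, and an empty result simply means no CPUs are isolated or the file is unavailable.

```python
def parse_cpu_list(text):
    """Parse kernel CPU-list notation such as '2-3,5' into a set of ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue  # an empty file means no CPUs are listed
        if "-" in part:
            low, high = part.split("-")
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return cpus


def isolated_cpus(path="/sys/devices/system/cpu/isolated"):
    """Return the isolated CPUs, or an empty set if the file is unreadable."""
    try:
        with open(path) as handle:
            return parse_cpu_list(handle.read())
    except OSError:
        return set()


if __name__ == "__main__":
    isolated = isolated_cpus()
    if isolated:
        print("isolated CPUs available for pinned threads:", sorted(isolated))
    else:
        print("no isolated CPUs found; pinned threads may still see jitter")
```

Threads bound to CPUs outside the isolated set can still be preempted by whatever else the scheduler places there, which is the jitter source the quote describes.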