Stuff The Internet Says On Scalability For November 14th, 2014

Hey, it's HighScalability time:

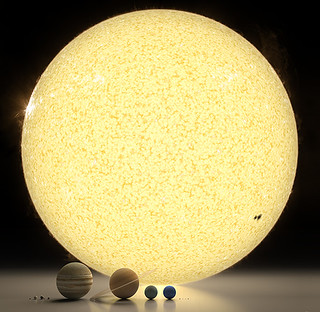

Spectacular rendering of the solar system to scale. (Roberto Ziche)

- 700: number of low-orbit satellites in a planned cheap-internet satellite network; 130 terabytes: AdRoll ad data processed daily; 15 billion: daily Weather Channel forecasts; 1 million: AWS customers

- Quotable Quotes:

- @benkepes: Each AWS data center has typically 50k to 80k physical servers. Up to 102Tbps provisioned networking capacity #reinvent

- @scottvdp: AWS just got rid of infrastructure behind any application tier. Lambda for async distributed events, container engine for everything else.

- @wif: AWS is handling 7 trillion DynamoDB requests per month in a single region. 4x over last year. same jitter. #reinvent

- Philae: If my path was off by even half a degree the humans would have had to abort the mission.

- Al Aho: Well, you can get a stack of stacks, basically. And the nested stack automaton has sort of an efficient way of implementing the stack of stacks, and you can think of it as sort of almost like a cactus. That's why some people are calling it cactus automata, at the time.

- Gilt: Someone spent $30K on an Acura & LA travel package on their iPhone.

- @cloudpundit: Gist of Jassy's #reinvent remarks: Are you an enterprise vendor? Do you have a high-margin product/service? AWS is probably coming for you.

- @mappingbabel: Things coming out from the AWS #reinvent analyst summit - Amazon has minimum 3 million servers & lights up own globe-spanning fibre.

- @cloudpundit: James Hamilton says mobile hardware design patterns are future of servers. Single-chip offerings, semiconductor-level innovation. #reinvent

- @rightscale: RT @owenrog: AWS builds its own electricity substations simply because the power companies can't build fast enough to meet demand #reInvent

- @timanderson: New C4 instances #reinvent up to 36 cores up to 16TB SSD

- @holly_cummins: L1 cache is a beer in hand, L3 is fridge, main memory is walking to the store, disk access is flying to another country for beer.

- @ericlaw: Sample HTTP compression ratios observed on @Facebook: -1300%, -34.5%, -14.7%, -25.4%. ProTip: Don't compress two byte responses. #webperf

- @JefClaes: It's not the concept that defines the invariants but the invariants that define the concept.
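@ericlaw's tip is easy to check, because compression formats carry fixed overhead and a tiny body can only grow. Below is a minimal sketch using Python's standard-library `gzip` module. The `compression_savings` helper and both sample payloads are illustrative assumptions, not Facebook's actual traffic.

```python
import gzip


def compression_savings(body: bytes) -> float:
    """Percent of bytes saved by gzip; a negative value means the output grew."""
    compressed = gzip.compress(body)
    return 100.0 * (len(body) - len(compressed)) / len(body)


# A two-byte response: the gzip header and trailer alone take 18 bytes.
tiny = b"ok"
# A larger, repetitive JSON-like response compresses well.
large = b'{"status": "ok", "items": []}' * 100

for name, body in [("tiny", tiny), ("large", large)]:
    print(name, len(body), "->", len(gzip.compress(body)),
          f"({compression_savings(body):.1f}% saved)")
```

A sensible server only compresses responses above some size threshold. Where that threshold sits is a tuning choice.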

- It's hard to imagine that just a few short years ago AWS did not exist. Now it has 1 million customers, runs 70 million hours of software per month, and its re:Invent conference drew a robust 13,500 attendees. Re:Invent shows that if Amazon is going to be disrupted, a lack of innovation will not be the cause. The key talking point is that AWS is not just IaaS anymore; AWS is a platform. The underlying subtext is lock-in. Minecraft-like, Amazon is building out its platform brick by brick. Joining Google's GCE, AWS announced a Docker-based container service. Intel designed a special new cloud processor for AWS, which will be available in a new C4 instance type. There's Aurora, a bigger, badder MySQL. To the joy of many, EBS is getting bigger and faster. The world is getting more reactive: S3 now emits events. With less fanfare came an impressive suite of code deployment and management tools. There's also a key management service, a configuration manager, and a service catalog. Most provocative is Lambda, or PaaS++, which as the name suggests is the ability to call a function in response to events. Big deal? It could be, though it is quite limited currently, supporting only Node.js and a few event types. You can't, for example, terminate a REST request. What it could grow into is promising: a complete abstraction layer over the cloud that removes any sense of machines and locations. They aren't the first, but that hardly matters.
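The core idea of Lambda, a function invoked in response to an event, fits in a few lines. This is a hedged sketch in Python, while at launch Lambda supported only Node.js. It assumes the `handler(event, context)` entry-point convention and the `Records[].s3.bucket.name` / `Records[].s3.object.key` fields of S3 event notifications. The `fake_event` payload is hypothetical and built by hand, so the handler runs locally without AWS.

```python
def handler(event, context):
    """Collect (bucket, key) for each object-created record in an S3-style event."""
    created = []
    for record in event.get("Records", []):
        # S3 notifications name the event type, e.g. "ObjectCreated:Put".
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Real S3 events URL-encode object keys; decoding is omitted here.
        key = record["s3"]["object"]["key"]
        created.append((bucket, key))
    return {"processed": len(created), "objects": created}


# Hypothetical event, built by hand to mimic the shape of an S3 notification.
fake_event = {
    "Records": [
        {"eventName": "ObjectCreated:Put",
         "s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "uploads/photo.jpg"}}},
        {"eventName": "ObjectRemoved:Delete",
         "s3": {"bucket": {"name": "example-bucket"},
                "object": {"key": "uploads/old.jpg"}}},
    ]
}

print(handler(fake_event, context=None))
```

The function never mentions a server, a port, or a region. That absence is what makes the model feel like an abstraction layer over machines.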

- It's not a history of the Civil War. Or WWI. Or the Dust Bowl. But An Oral History of Unix. Yes, that much time has passed. Interviewed are many names you'll recognize and some you've probably never heard of. A fascinating window into the deep, deep past.

- No surprise at all. Plants talk to each other using an internet of fungus: We suggest that tomato plants can 'eavesdrop' on defense responses and increase their disease resistance against potential pathogen...the phantom orchid, get the carbon they need from nearby trees, via the mycelia of fungi that both are connected to...Other orchids only steal when it suits them. These "mixotrophs" can carry out photosynthesis, but they also "steal" carbon from other plants...The fungal internet exemplifies one of the great lessons of ecology: seemingly separate organisms are often connected, and may depend on each other.

- How do you persist a 200 thousand messages/second data stream while guaranteeing data availability and redundancy? Tadas Vilkeliskis shows you how with Apache Kafka. It excels at high write rates, compression saves a lot of network traffic, and a custom C++ http-to-kafka service helps with performance.
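Kafka producers can compress messages in batches, and batching is where much of the network saving comes from: many small, similar messages share structure that single-message compression cannot exploit. The sketch below makes that concrete, with `zlib` (DEFLATE, the algorithm inside gzip) standing in for the producer's codec. The message format is a hypothetical assumption, since the post's payloads aren't shown here.

```python
import json
import zlib

# Hypothetical event messages; the real payloads in the post are not shown.
messages = [
    json.dumps({"event": "impression", "ad_id": i % 50, "user_id": 1000 + i}).encode()
    for i in range(1000)
]

raw_bytes = sum(len(m) for m in messages)
# Compressing each message alone: little redundancy inside one small message.
per_message = sum(len(zlib.compress(m)) for m in messages)
# Compressing the whole batch at once, as a batching producer would.
batched = len(zlib.compress(b"\n".join(messages)))

print(f"raw={raw_bytes} per-message={per_message} batched={batched}")
```

Bigger batches usually compress better but add latency while the producer waits to fill them. That is the usual knob to tune.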

- Jeremy Rifkin: We’ve got a new potential platform to get us to where we need to go. I don’t know if it’s in time, but if there’s an alternative plan I have no idea what it could be. What I do know is that staying with a vertically integrated system – based on large corporations with fossil fuels, nuclear power and centralised telecommunications, alongside growing unemployment, a narrowing of GDP and technologies that are moribund – is not the answer.

- How will this new world work? Apropos of Rifkin, we have Taylor Swift with the only album selling over one million copies this year. Swift takes home 50 percent of retail on digital downloads, which is 50¢ or 60¢, compared to a fraction of a penny on Spotify. The crux: We’re not against anybody, but we’re not responsible for new business models.

- Is this scary or comforting? Chip Overclock talks about some interesting trends in Unintended Consequences of the Information Economy: Although the Pentagon historically exported many technologies to the commercial sector, it is now a net importer...The combined R & D budgets of five of the largest U.S. defense contractors (about $4 billion, according to the research firm Capital Alpha Partners) amount to less than half of what companies such as Microsoft or Toyota spend on R & D in a single year...High tech companies are reticent to reveal what may be valuable intellectual property to the U. S. government... in the 21st century information economy, the key component to growth isn’t enormous capital investment...The days of the DoD calling the shots in high-tech are over.

- Netflix's Brendan Gregg with a very sophisticated process for Performance Tuning EC2 Instances.

- Joe Armstrong asks something I've also asked myself: Why do we need modules at all? My thinking was: for the same reason our brain divides the world up into things. Categorization leads to a quite workable fast path for reasoning. Interesting discussion, though be warned: language lawyers ahead.

- Mrb shares Two Things Types Have Taught Me: A type signature is not enough; Type systems are excellent for globally enforcing invariants.

- Efficient usage of local drives in the cloud for big data processing: To summarize: I believe that to be able to efficiently process big data in the cloud we need to use local drives as a cache of the dataset stored in the cloud storage. I also believe that other ways will not be efficient, as long as cloud storage is much slower and cheaper than local drives.
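The idea of using local drives "as a cache of the dataset stored in the cloud storage" amounts to a read-through cache. Below is a minimal sketch assuming LRU eviction and a byte budget for the local drive. `LocalDriveCache` and `fetch_remote` are hypothetical names, and an in-memory dict stands in for both the drive and the slow cloud store.

```python
from collections import OrderedDict


class LocalDriveCache:
    """Read-through LRU cache: a stand-in for local disk in front of cloud storage."""

    def __init__(self, fetch_remote, capacity_bytes):
        self.fetch_remote = fetch_remote    # hypothetical callable: key -> bytes (slow, cheap)
        self.capacity = capacity_bytes      # local drive budget
        self.used = 0
        self.entries = OrderedDict()        # key -> bytes, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, key):
        if key in self.entries:
            self.hits += 1
            self.entries.move_to_end(key)   # mark as most recently used
            return self.entries[key]
        self.misses += 1
        data = self.fetch_remote(key)       # go to cloud storage only on a miss
        self.entries[key] = data
        self.used += len(data)
        # Evict least recently used blocks (never the one just added) until we fit.
        while self.used > self.capacity and len(self.entries) > 1:
            _, old = self.entries.popitem(last=False)
            self.used -= len(old)
        return data


# Usage: five 100-byte "parts" in remote storage, room for three locally.
remote = {f"part-{i}": bytes(100) for i in range(5)}
cache = LocalDriveCache(remote.__getitem__, capacity_bytes=300)
for key in ["part-0", "part-1", "part-0", "part-2", "part-3", "part-0"]:
    cache.read(key)
print(cache.hits, cache.misses)
```

Repeated scans over a hot subset of the dataset then hit the fast local drive, and only cold blocks pay the cloud storage latency.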

- Daniel Abadi with a great break down on Optimizing Disk IO and Memory for Big Data Vector Analysis: However, several other bottlenecks may exist. To understand where they may come from, let’s examine the path of a single record from the transactions table through the database query processing engine. Let’s assume for now that the transactions table is larger than the size of memory, and the record we will track starts off on stable storage (e.g. on magnetic disk or SSD).

- How would you process queries over tens of terabytes of data in a few hundred milliseconds? Facebook, impressively, shows how in Audience Insights query engine: In-memory integer store for social analytics: AI is powered by a query engine with a hybrid integer store that organizes data in memory and on flash disks so that a query can process terabytes of data in real time on a distributed set of machines...All attributes are indexed as inverted indices...To eliminate intersection operations, we have replaced the user IDs with bitsets, where one bit per person indicates whether that person is indexed for a specific value of an attribute...Furthermore, bitmap indices are cumulative to support fast range filters...The size of the bitset allocated for each key is fixed...bitset-based filtering techniques inspired by bloom filters to reduce the number of hash lookups during group-by...The AI engine can utilize GPU hardware when it exists to offload computationally complex operations like affinity computation...We have implemented tail latency reduction techniques.
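The bitset trick is easier to see in miniature. Below is a hedged sketch, not Facebook's implementation: Python ints serve as bitsets (one bit per person), an equality index maps each attribute value to a bitset, and a cumulative index for age makes any range filter cost at most two lookups and one AND. The users, attributes, and `MAX_AGE` bound are hypothetical. AI uses fixed-size bitsets, while Python ints grow as needed.

```python
from collections import defaultdict

# Hypothetical users: (bit position, age, country). Bit i represents user i.
users = [(0, 23, "US"), (1, 35, "FR"), (2, 29, "US"), (3, 41, "US"), (4, 35, "BR")]

# Equality index: attribute value -> bitset of users having that value.
country_bits = defaultdict(int)
for uid, _, country in users:
    country_bits[country] |= 1 << uid

# Cumulative index: age_le[a] has a bit set for every user with age <= a.
MAX_AGE = 120
age_exact = [0] * (MAX_AGE + 1)
for uid, age, _ in users:
    age_exact[age] |= 1 << uid
age_le = []
running = 0
for bits in age_exact:
    running |= bits
    age_le.append(running)


def age_between(lo, hi):
    """Bitset of users with lo <= age <= hi, from two cumulative lookups."""
    below = age_le[lo - 1] if lo > 0 else 0
    return age_le[hi] & ~below


def count(bits):
    return bin(bits).count("1")


# "US users aged 25-40": one AND of two bitsets, no user-ID list intersection.
matches = country_bits["US"] & age_between(25, 40)
print(count(matches), [uid for uid, _, _ in users if (matches >> uid) & 1])
```

Counting matches is a popcount rather than a hash lookup per user, which is why bitsets suit aggregate-heavy analytics.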

- If you want to figure out how to reduce latency in a game so you can "play this game from bed like a lazy sack of crap" then here's a meditation for you: Dragon Quest X for 3DS and the lag monster.

- Why CoreOS is a game-changer in the data center and cloud: in the cloud era, we’re starting to treat servers like cows: If one falls over, that's part of the business. Cloud architecture distributes applications and replicates data across many commodity servers, so you can pull the plug on one and everything still keeps running.

- Now here's an interesting application of the Sapir–Whorf hypothesis to computing. There's No Such Thing as a General-purpose Processor: Because processors optimized for these cases have been the cheapest processing elements that consumers can buy, many algorithms have been coerced into exhibiting some of these properties. With the appearance of cheap programmable GPUs, this has started to change, and naturally data-parallel algorithms are increasingly run on GPUs. Now that GPUs are cheaper per FLOPS (floating-point operations per second) than CPUs, the trend is increasingly toward coercing algorithms that are not naturally data parallel to run on GPUs. The problem of dark silicon (the portion of a chip that must be left unpowered) means that it is going to be increasingly viable to have lots of different cores on the same die, as long as most of them are not constantly powered. Efficient designs in such a world will require admitting that there is no one-size-fits-all processor design and that there is a large spectrum, with different trade-offs at different points.

- Jef Claes with a fun analysis of how to reduce concurrency using unconventional means in an online casino. Splitting hot aggregates: By exploring alternatives, we discovered that we can work the model to reduce activity and concurrency on the account aggregate, lowering the chances for transactional failures.

- Gossip protocols: a clarification: The main use for gossip protocols is to disseminate information in a robust, randomized way, by having each peer forward information it receives from other peers to a random selection of other peers. As the function of LOCKSS boxes is to act as custodians of copyright information, this would be a very bad thing for them to do.
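The dissemination pattern described here, where each peer forwards what it receives to a random selection of other peers, is push gossip. Below is a minimal simulation to show why it is robust and fast. The peer count, `fanout`, and seed are hypothetical parameters. Per the quote, this is exactly what LOCKSS boxes avoid doing.

```python
import random


def gossip_rounds(num_peers, fanout, seed=0, max_rounds=100):
    """Push gossip: each round, every informed peer forwards the information
    to `fanout` distinct peers chosen at random. Returns (rounds, peers reached)."""
    rng = random.Random(seed)
    informed = {0}  # peer 0 starts with the information
    rounds = 0
    while len(informed) < num_peers and rounds < max_rounds:
        newly = set()
        for peer in informed:
            # Sample from the other num_peers - 1 peers, skipping ourselves.
            for t in rng.sample(range(num_peers - 1), fanout):
                newly.add(t + 1 if t >= peer else t)
        informed |= newly
        rounds += 1
    return rounds, len(informed)


rounds, reached = gossip_rounds(num_peers=1000, fanout=3)
print(f"reached {reached} peers in {rounds} rounds")
```

The informed set roughly multiplies each round, so coverage grows with the logarithm of the network size. Because targets are random, no single failed peer can block the spread.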

- Security is always a problem with rental schemes. Zennet: Users will be able to rent computation power from peers on the Zennet open market platform. This means that people can make money from their idle computation power, and those that need this power can access it at a better price and with less hassle than centralised cloud computing providers.