Stuff The Internet Says On Scalability For April 17th, 2015

Hey, it's HighScalability time:

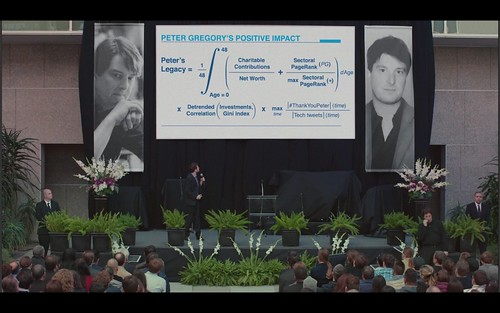

A fine tribute on Silicon Valley & hilarious formula evaluating Peter Gregory's positive impact on humanity.

-

118/196: nations becoming democracies since mid-19th century; $70K: nice minimum wage; 70 million: monthly StackExchange visitors; 1 billion: drone-planted trees; 1,000 Years: longest-exposure camera shot ever

-

Quotable Quotes:

-

@DrQz: #Performance modeling is really about spreading the guilt around.

-

@natpryce: “What do we want?” “More levels of indirection!” “When do we want it?” “Ask my IDateTimeFactoryImplBeanSingletonProxy!”

-

@BenedictEvans: In the late 90s we were euphoric about what was possible, but half what we had sucked. Now everything's amazing, but we worry about bubbles

-

Twitter: Some of our biggest MySQL clusters are over a thousand servers.

-

Calvin Zito on Twitter: "DreamWorks Animation: One movie, 250 TB to make. 10 movies in production at one time, 500 million files per movie. Wow."

-

@SaraJChipps: It's 2015: open source your shit. No one wants to steal your stupid CRUD app. We just want to learn what works and what doesn't.

-

Calvin French-Owen: And as always: peace, love, ops, analytics.

-

@Wikipedia: Cut page load by 100ms and you save Wikipedia readers 617 years of wait annually. Apply as Web Performance Engineer

-

@IBMWatson: A person can generate more than 1 million gigabytes of health-related data.

-

@allspaw: "We’ve learned that automation does not eliminate errors." (yes!)

-

@Obdurodon: Immutable data structures solve everything, in any environment where things like memory allocators and cache misses cost nothing.

-

KaiserPro: Pixar is still battling with lots of legacy cruft. They went through a phase of hiring the best and brightest directly from MIT and the like.

-

@abt_programming: "Duplication is far cheaper than the wrong abstraction" - @sandimetz

-

@kellabyte: When I see places running 1,200 containers for fairly small systems I want to scream "WHY?!"

-

chetanahuja: One of the engineers tried running our server stack on a raspberry for a laugh.. I was gobsmacked to hear that the whole thing just worked (it's a custom networking protocol stack running in userspace) if just a bit slower than usual.

-

Chances are if something can be done with your data, it will be done. @RMac18: Snapchat is using geofilters specific to Uber's headquarter to poach engineers.

Why (most) High Level Languages are Slow. Exactly this by masterbuzzsaw: If manual memory management is cancer, what is manual file management, manual database connectivity, manual texture management, etc.? C# may have “saved” the world from the “horrors” of memory management, but it introduced null reference landmines and took away our beautiful deterministic C++ destructors.

Why NFS instead of S3/EBS? nuclearqtip with a great answer: Stateful; Mountable AND shareable; Actual directories; On-the-wire operations (I don't have to download the entire file to start reading it, and I don't have to do anything special on the client side to support this); Shared Unix permission model; Tolerant of network failures; Locking!; Better caching; Big files without the hassle.

Google Cloud Dataflow is an answer to the fast/slow path Lambda Architecture: Merge your batch and stream processing pipelines thanks to a unified and convenient programming model. The model and the underlying managed service let you easily express data processing pipelines, make powerful decisions, obtain insights and eliminate the switching cost between batch and continuous stream processing.

Software is still munching on the world. How doomed is NetApp? Bulk file storage hardware is commoditized. The upstart vendors are virtually all software-only, offering tin-wrapped software through resellers rather than direct sales, which saves a lot of margin dollars.

Curt Monash with an excellent look at the particulars for MariaDB and MaxScale: MariaDB, the product, is a MySQL fork...MaxScale is a proxy, which starts out by intercepting and parsing MariaDB queries.

Yahoo is going to offer their Cloud Object Store as a hosted service. Yahoo Cloud Object Store - Object Storage at Exabyte Scale. 250 billion objects currently call YCOS their home. The store is built on Ceph, a unified, distributed storage system, deployed in pods.

Yahoo’s Pistachio: co-locate the data and compute for fastest cloud compute: a distributed key value store system...~2 million reads QPS, 0.8GB/s read throughput and ~0.5 million writes QPS, 0.3GB/s write throughput. Average latency is under 1ms...hundreds of servers in 8 data centers all over the globe...We found 10x-100x improvements because we can embed compute to the data storage to achieve best data locality.
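The quoted throughput and QPS figures imply an average payload size per operation. Here is a quick back-of-the-envelope check, assuming 1 GB = 10^9 bytes and that throughput and QPS were measured on the same workload (the source states neither):

```python
def avg_bytes_per_op(throughput_gb_per_s: float, ops_per_s: float) -> float:
    """Average payload per operation, assuming 1 GB = 10**9 bytes."""
    return throughput_gb_per_s * 1e9 / ops_per_s


# Figures quoted above: ~2M reads/s at 0.8 GB/s, ~0.5M writes/s at 0.3 GB/s.
read_bytes = avg_bytes_per_op(0.8, 2_000_000)
write_bytes = avg_bytes_per_op(0.3, 500_000)

print(f"~{read_bytes:.0f} bytes per read, ~{write_bytes:.0f} bytes per write")
```

Under those assumptions the values work out to a few hundred bytes each, which fits a key-value store serving small records.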

Clever solution. Distributing Your Game via CDN - Not Just for Megacorps Anymore.

I'm not sure, is this generational garbage collection? Cellular Garbage Disposal Illuminated: Cells dispose of worn-out proteins to maintain normal function. One type of protein degradation relies upon such proteins being tagged with peptides called ubiquitins so that the cell can recognize them as trash. Harvard researcher Marc Kirschner and his colleagues have used single-molecule fluorescence methods to show how an enzyme adds ubiquitins to proteins, and how those proteins are recognized and recycled in the cell’s proteasome.

The good thing: it’s pretty easy these days to set up peer-to-peer video. Twilio shows how in Real-time video infrastructure and SDKs. Skip a centralized service and use your own paid-for network resources. The downside, according to the comments on HN: the number of people you can conference in at the same time is still small, in the 15-25 range.

DynamoDB Auto-Scaling. The pros: no sharding logic; no DevOps team babysitting the data layer, which is a massive efficiency gain. The con: it's not cloudy. You can't auto-scale capacity as needed. You only get a limited number of capacity decreases per day. You could over-allocate to handle spikes. You could get an alarm and go set the capacity by hand. What Cake did is build their own rules engine for making allocation/deallocation decisions, with an XML file as the input. Interesting approach.
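The rules-engine idea can be sketched as a pure function that maps observed load to a new provisioned capacity. This is not Cake's implementation: the thresholds, the `TableState` fields, and `decide_capacity` are all hypothetical, and the rules are hard-coded here rather than read from an XML file. It makes no AWS calls.

```python
import math
from dataclasses import dataclass


@dataclass
class TableState:
    provisioned: int     # currently provisioned capacity units
    consumed: float      # recently observed consumed capacity units
    decreases_left: int  # decreases still allowed today (the limit is set by the service)


def decide_capacity(state: TableState,
                    scale_up_at: float = 0.8,
                    scale_down_at: float = 0.3,
                    target: float = 0.5,
                    floor: int = 1) -> int:
    """Return the capacity to provision next (hypothetical thresholds)."""
    utilization = state.consumed / state.provisioned
    wanted = math.ceil(state.consumed / target)  # capacity that puts load at `target`
    if utilization >= scale_up_at:
        # Increases are always taken; guarantee at least one extra unit.
        return max(state.provisioned + 1, wanted)
    if utilization <= scale_down_at and state.decreases_left > 0:
        # Decreases are scarce, so only spend one when load is clearly low.
        return max(floor, wanted)
    return state.provisioned


busy = decide_capacity(TableState(provisioned=100, consumed=90, decreases_left=2))
idle = decide_capacity(TableState(provisioned=100, consumed=10, decreases_left=1))
stuck = decide_capacity(TableState(provisioned=100, consumed=10, decreases_left=0))
```

Treating decreases as a budget is the design point: a table that has used up its daily decreases stays over-provisioned until the budget resets.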

Does this make too much sense? A hybrid model for a High-Performance Computing infrastructure for bioinformatics: In other words, our infrastructure is a mix of self-owned, and shared resources that we either apply for, or rent. We own several high-memory (1.5 TB RAM) servers with 64 CPUs and 64 TB local disk each. We also own 256 ‘grid’ CPUs for highly parallel calculations; these are in boxes of 16 CPUs with 64 GB RAM each. We rent project disk space, currently 60 TB, for which we pay a reasonable price.

To improve performance Swiftype built: bulk create/update API endpoints to reduce the number of HTTP requests required to index a batch of documents and moved most processing out of the web request; front-end routing technology to limit the impact customers have on each other; an asynchronous indexing API.
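The effect of a bulk endpoint on request count is easy to see with a small batching helper. This is a minimal sketch that sends no HTTP requests and does not use Swiftype's API; the `batches` helper and the batch size of 100 are assumptions for illustration.

```python
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batches(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield consecutive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # final, possibly short, batch
        yield batch


docs = [{"id": i} for i in range(250)]

# One request per document versus one request per batch of 100 (assumed size).
requests_unbatched = len(docs)
requests_batched = sum(1 for _ in batches(docs, 100))
```

With 250 documents this drops from 250 requests to 3, before counting any gains from moving processing out of the web request.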

Greg Ferro with an excellent review of AWS Summit London – Come For The IaaS, Stay For the PaaS. He almost sounds like a cloud convert. How times have changed! "This is an “advanced cloud topic” that I feel many existing IT people don’t comprehend properly. Sure, AWS IaaS is costly, limited and requires a significant effort to adopt & adapt. But once you are in the room, AWS has all of these software platforms ready to go. Want some remote desktops, billing gateway, CDN, Global DNS services ? Sure, all ready to go. More storage ? Sure, what type of storage do you want – file or object, fast or slow, archive or near line. AWS has all of that “in stock” and ready to go. No need to perform a vendor evaluation, no meetings to perform a gap analysis, no capital budgets to get allocated and compete with marketing or corporate strategist for CEO time. Just turn it on, start using."

3D Flash - not as cheap as chips: Chris Mellor has an interesting piece at The Register pointing out that while 3D NAND flash may be dense, it's going to be expensive. The reason is the enormous number of processing steps per wafer - between 96 and 144 deposition layers for the three leading 3D NAND flash technologies.

This is good advice. ColinWright: Certainly I'd be incorporating an exit strategy, and my game plan would be to leverage off FB to start with, to grow as fast as possible, develop my own community and eco-system, and then be able to shed FB and replace it with something else.

Columnar databases for the win. Using Apache Parquet at AppNexus: Parquet really excels when the query is on sparse data or low cardinality in column selection. The CPU usage can be reduced by more than 98% in total and the total memory consumption is cut by at least half.

You might be interested to know that Azure also has a machine learning service.

A nicely detailed story of How Airbnb uses machine learning to detect host preferences. One more example of how software is enlivening the world. Conclusions: in a two sided marketplace personalization can be effective on the buyer as well as the seller side; you have to roll up your sleeves and build a machine learning model tailored for your own application.

Jakob Jenkov with a great post rounding up Concurrency Models. He covers: Parallel Workers, Assembly Line, Functional Parallelism. Which is best? That would be telling.
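To make two of those models concrete, here is a rough sketch of Parallel Workers (each worker runs the whole job) next to Assembly Line (each stage does one step and hands off through a queue). The `parse` and `square` steps are placeholder work chosen for illustration, not taken from the post, and Functional Parallelism is not shown.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor


def parse(raw: str) -> int:
    return int(raw)


def square(n: int) -> int:
    return n * n


def parallel_workers(jobs, workers=4):
    """Each worker takes a job and runs every step on it."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda job: square(parse(job)), jobs))


def assembly_line(jobs):
    """Each stage runs one step and passes its output to the next stage."""
    stop = object()  # sentinel marking the end of the stream
    to_parse, to_square = queue.Queue(), queue.Queue()
    results = []

    def parse_stage():
        while (item := to_parse.get()) is not stop:
            to_square.put(parse(item))
        to_square.put(stop)

    def square_stage():
        while (item := to_square.get()) is not stop:
            results.append(square(item))

    stages = [threading.Thread(target=parse_stage),
              threading.Thread(target=square_stage)]
    for stage in stages:
        stage.start()
    for job in jobs:
        to_parse.put(job)
    to_parse.put(stop)
    for stage in stages:
        stage.join()
    return results


workers_result = parallel_workers(["1", "2", "3"])
line_result = assembly_line(["1", "2", "3"])
```

Both produce the same answers. The difference is where state lives: in the first model every worker needs access to everything a job touches, while in the second each stage owns only its own step.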

Build an efficient and scalable streaming search engine. Real-time full-text search with Luwak and Samza. Great detail here on open source tools to search on streams. Samza is a stream processing framework based on Kafka. Luwak is a Java library for stored queries. Excellent graphics on the concept of streams and how the search works.
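Stored queries invert the usual search setup: the queries are registered up front and each incoming document is matched against them. The sketch below shows only that inversion. It does not use Luwak's or Samza's APIs; the `StoredQueries` class and its all-terms-must-appear matching rule are assumptions made for illustration.

```python
class StoredQueries:
    """Hypothetical registry: a query matches when all its terms appear in the document."""

    def __init__(self):
        self._queries = {}

    def register(self, query_id: str, terms) -> None:
        self._queries[query_id] = frozenset(term.lower() for term in terms)

    def match(self, document: str):
        """Return the ids of every stored query the document satisfies, sorted."""
        words = set(document.lower().split())
        return sorted(qid for qid, terms in self._queries.items()
                      if terms <= words)


registry = StoredQueries()
registry.register("outage", ["database", "down"])
registry.register("deploy", ["deploy"])

# Each message arriving on the stream is checked against all stored queries.
stream = ["Primary database is down again", "deploy finished", "all quiet"]
hits = [registry.match(message) for message in stream]
```

This naive version checks every query for every document, so its cost grows with the number of stored queries.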

There's a Difference between Scaling and Not Being Stupid: there’s a huge difference between being ignorant of limitations and not being scalable...you might have a different controller that doesn’t allow you to do something that stupid, but cannot scale beyond 50 physical switches due to control-plane limitations...As I wrote before, loosely coupled architectures that stay away from centralizing data- or control plane scale much better than those that believe in centralized intelligence.

Square releases Keywhiz: a secret management and distribution service that is now available for everyone. Keywhiz helps us with infrastructure secrets, including TLS certificates and keys, GPG keyrings, symmetric keys, database credentials, API tokens, and SSH keys for external services — and even some non-secrets like TLS trust stores.

LightQ: a high performance, brokered messaging queue which supports transient (1M+ msg/sec) and durable (300K+ msg/sec) queues. Durable queues are similar to Kafka where data are written to the file and consumers consume from the file.
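The durable-queue idea described here, where producers append to a file and consumers read from it at their own pace, can be sketched as an append-only log with consumer-held offsets. This is not LightQ's or Kafka's on-disk format; the `FileLog` class and its newline-delimited framing are assumptions made for illustration.

```python
import os
import tempfile


class FileLog:
    """Hypothetical append-only log: one message per line, consumers track byte offsets."""

    def __init__(self, path: str):
        self.path = path
        open(path, "ab").close()  # create the file if it does not exist

    def append(self, message: bytes) -> None:
        if b"\n" in message:
            raise ValueError("messages must not contain newlines in this framing")
        with open(self.path, "ab") as f:
            f.write(message + b"\n")
            f.flush()
            os.fsync(f.fileno())  # durability: force the write to disk

    def read_from(self, offset: int):
        """Return (messages, next_offset) for everything complete after `offset`."""
        with open(self.path, "rb") as f:
            f.seek(offset)
            data = f.read()
        end = data.rfind(b"\n") + 1  # ignore a partially written trailing message
        messages = data[:end].split(b"\n")[:-1] if end else []
        return messages, offset + end


path = os.path.join(tempfile.mkdtemp(), "queue.log")
log = FileLog(path)
log.append(b"first")
log.append(b"second")

batch, offset = log.read_from(0)       # consumer starts at the beginning
log.append(b"third")
later, offset = log.read_from(offset)  # and resumes where it left off
```

Because the consumer owns its offset, reading does not remove anything from the file, and several consumers can replay the same log independently.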

Mojim: A Reliable and Highly-Available Non-Volatile Memory System: For NVMM to serve as a reliable primary storage mechanism (and not just a caching layer) we need to address reliability and availability requirements. The two main storage approaches for this, replication and erasure coding, both make the fundamental assumption that storage is slow. In addition, NVMM is directly addressed like memory, not via I/O system calls as a storage device.

Here's a nice New Batch of Machine Learning Resources and Articles.