How MySQL is able to scale to 200 Million QPS - MySQL Cluster

This is a guest post by Andrew Morgan, MySQL Principal Product Manager at Oracle.

The purpose of this post is to introduce MySQL Cluster - which is the in-memory, real-time, scalable, highly available version of MySQL. Before addressing the incredible claim in the title of 200 Million Queries Per Second it makes sense to go through an introduction of MySQL Cluster and its architecture in order to understand how it can be achieved.

Introduction to MySQL Cluster

MySQL Cluster is a scalable, real-time in-memory, ACID-compliant transactional database, combining 99.999% availability with the low TCO of open source. Designed around a distributed, multi-master architecture with no single point of failure, MySQL Cluster scales horizontally on commodity hardware with auto-sharding to serve read and write intensive workloads, accessed via SQL and NoSQL interfaces.

Originally designed as an embedded telecoms database for in-network applications demanding carrier-grade availability and real-time performance, MySQL Cluster has been rapidly enhanced with new feature sets that extend use cases into web, mobile and enterprise applications deployed on-premise or in the cloud, including: - High volume OLTP - Real time analytics - E-commerce, inventory management, shopping carts, payment processing, fulfillment tracking, etc. - Online Gaming - Financial trading with fraud detection - Mobile and micro-payments - Session management & caching - Feed streaming, analysis and recommendations - Content management and delivery - Communications and presence services - Subscriber/user profile management and entitlements

MySQL Cluster Architecture

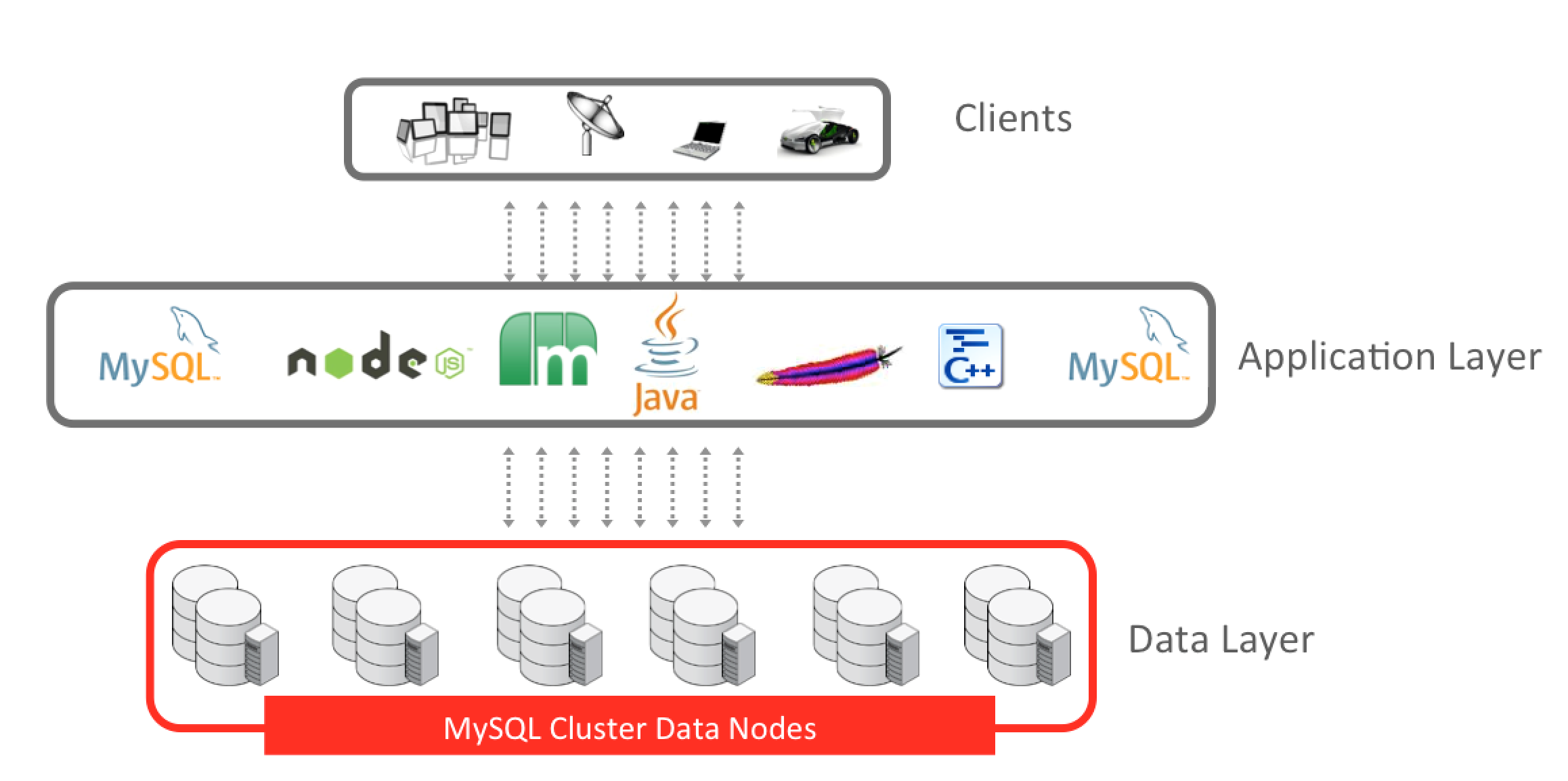

While transparent to the application, under the covers, there are three types of node which collectively provide service to the application. The figure shows a simplified architecture diagram of a MySQL Cluster consisting of twelve Data Nodes split across six node groups.

Data Nodes are the main nodes of a MySQL Cluster. They provide the following functionality: - Storage and management of both in-memory and disk-based data - Automatic and user defined partitioning (sharding) of tables - Synchronous replication of data between data nodes - Transactions and data retrieval - Automatic fail over - Automatic resynchronization after failure for self-healing

Tables are automatically sharded across the data nodes and each data node is a master accepting write operations, making it very simple to scale write-intensive workloads across commodity nodes, with complete application transparency.

By storing and distributing data in a shared-nothing architecture, i.e. without the use of a shared-disk, and synchronously replicating data to at least one replica, if a Data Node happens to fail, there will always be another Data Node storing the same information. This allows for requests and transactions to continue to be satisfied without interruption. Any transactions which are aborted during the short (sub-second) failover window following a Data node failure are rolled back and can be re-run.

It is possible to choose how to store data; either all in memory or with some on disk (non-indexed data only). In-memory storage can be especially useful for data that is frequently changing (the active working set). Data stored in-memory is routinely check pointed to disk locally and coordinated across all Data Nodes so that the MySQL Cluster can be recovered in case of a complete system failure – such as a power outage. Disk-based data can be used to store data with less strict performance requirements, where the data set is larger than the available RAM. As with most other database servers, a page-cache is used to cache frequently used disk-based data in the Data Nodes’ memory in order to increase the performance.

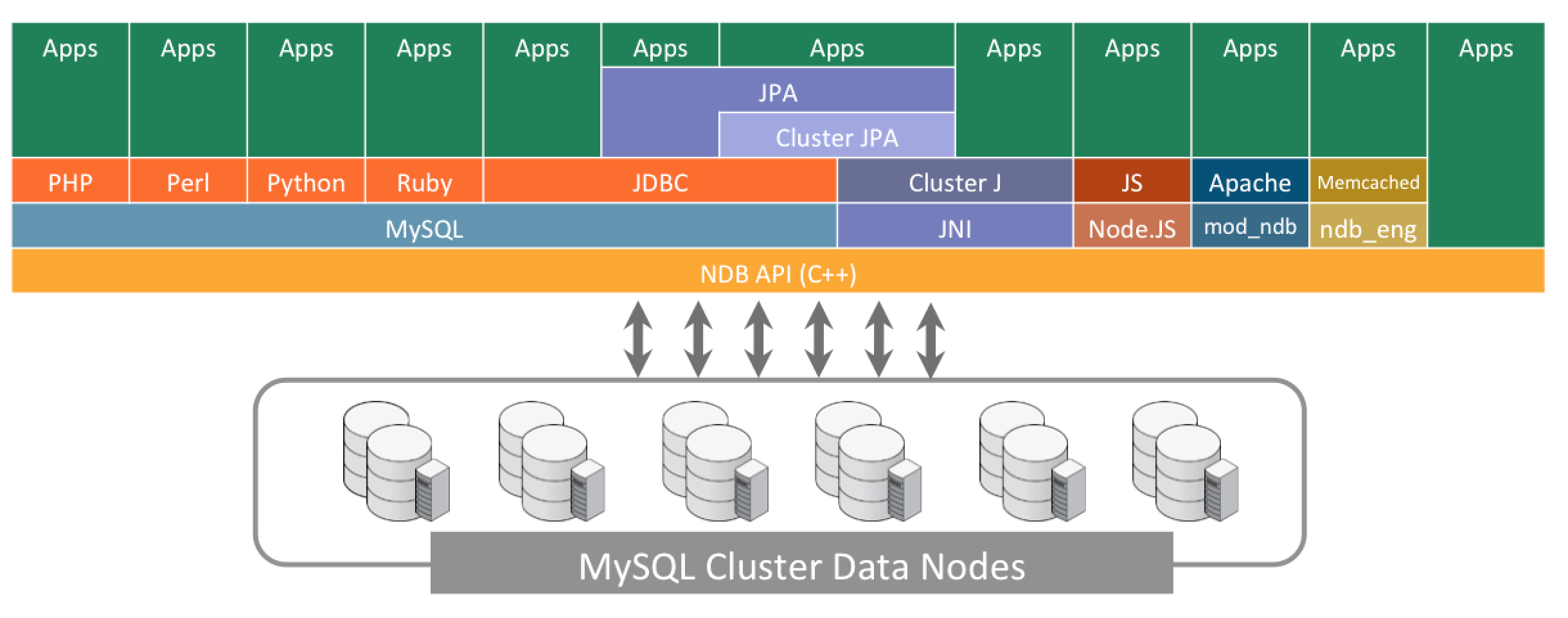

Application Nodes provide connectivity from the application logic to the data nodes. Applications can access the database using SQL through one or many MySQL Servers performing the function of SQL interfaces into the data stored within a MySQL Cluster. When going through a MySQL Server, any of the standard MySQL connectors can be used , offering a wide range of access technologies. Alternatively, a high performance (C++ based) interface called NDB API can be used for extra control, better real-time behavior and greater throughput. The NDB API provides a layer through which additional NoSQL interfaces can directly access the cluster, bypassing the SQL layer, allowing for lower latency and improved developer flexibility. Existing interfaces include Java, JPA, Memcached, JavaScript with Node.js and HTTP/REST (via an Apache Module). All Application Nodes can access data from all Data Nodes and so they can fail without causing a loss of service as applications can simply use the remaining nodes.

Management Nodes are responsible for publishing the cluster configuration to all nodes in the cluster and for node management. The Management Nodes are used at startup, when a node wants to join the cluster, and when there is a system reconfiguration. Management Nodes can be stopped and restarted without affecting the ongoing execution of the Data and Application Nodes. By default, the Management Node also provides arbitration services, in the event there is a network failure which leads to a split-brain or a cluster exhibiting network-partitioning.

Achieving Scalability Through Transparent Sharding

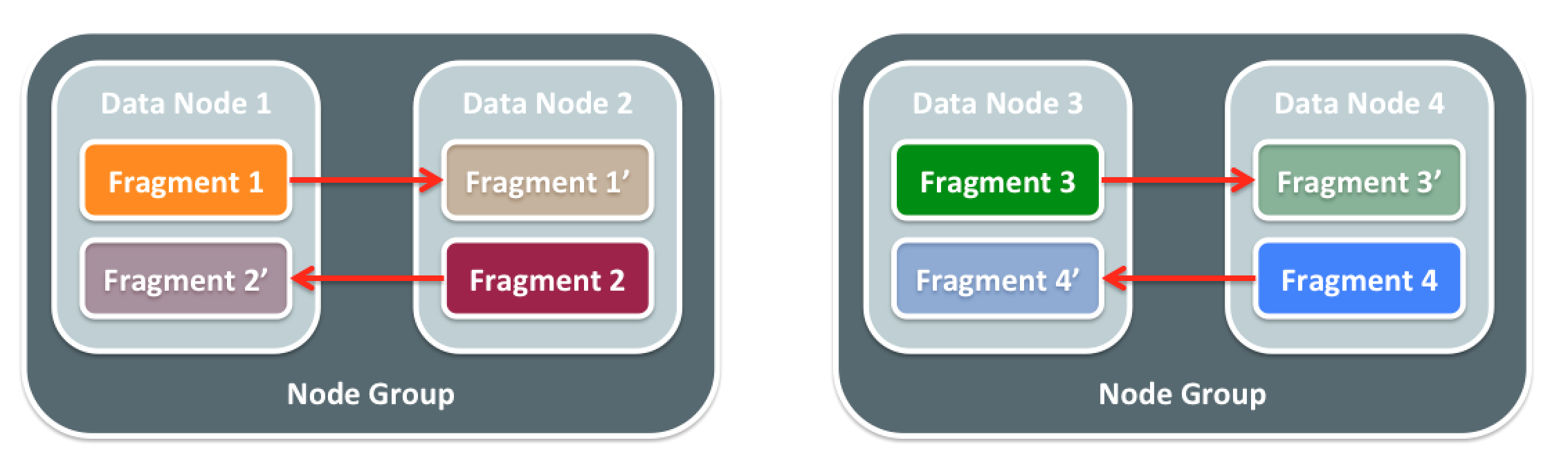

The rows from any given table are transparently split into multiple partitions/fragments. For each fragment there will be a single data node that holds all of its data and handles all reads and writes on that data. Each data node also has a buddy and together they form a node group; the buddy holds a secondary copy of the fragment as well as a primary fragment of its own. There is synchronous 2-phase commit protocol used to ensure that when a transaction has been committed the changes are guaranteed to be stored within both data nodes.

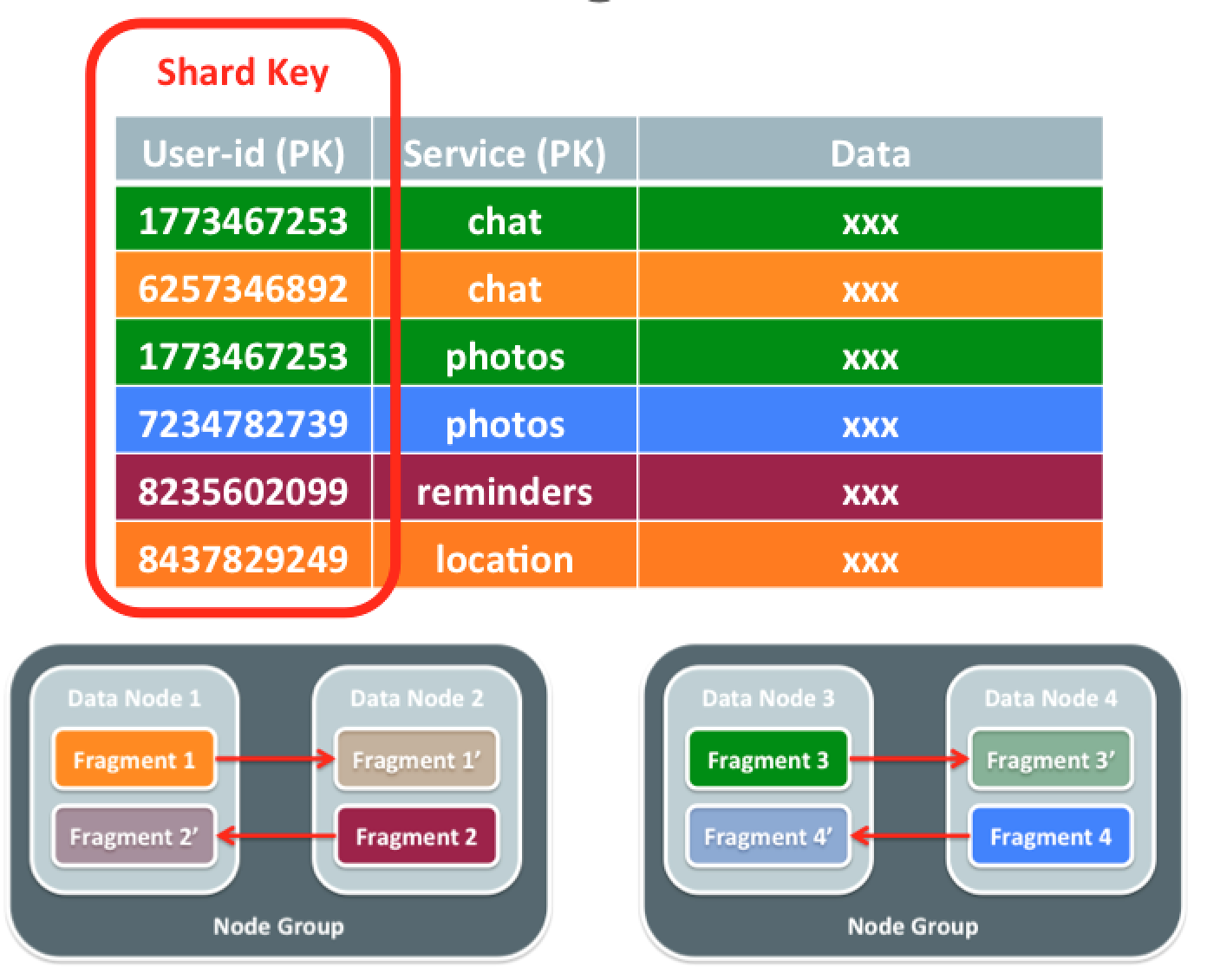

By default, a table's Primary Key is used as the shard key and MySQL Cluster will perform an MD5 hash on that shard key to select which fragment/partition it should be stored in. If a transaction or query needs to access data from multiple data nodes then one of the data nodes takes on the role of the transaction coordinator and delegates work to the other required data nodes; the results are then combined before they're presented to the application. Note that it is also possible to have transactions or queries that join data from multiple shards and multiple tables - this is a big advantage over typical NoSQL data stores that implement sharding.

The best (linear) scaling is achieved when high running queries/transactions can be satisfied by a single data node (as it reduces the network delays from the inter-data node messaging). To achieve this, the application can be made distribution aware - all this really means is that the person defining the schema can override what column(s) is used for the sharding key. As an example, the figure shows a table with a composite Primary Key made up of a user-id and a service name; by choosing to just use the user-id as the sharding key, all rows for a given user in this table will always be in the same fragment. Even more powerful, is the fact that if the same user-id column is used in your other tables and you designate it as the sharding key for those too then all of the given user's data from all tables will be in the same fragment and queries/transactions on that user can be handled within a single data node.

Use NoSQL APIs for the Fastest Possible Access to Your Data

MySQL Cluster provides many ways to access the stored data; the most common method is SQL but as can be seen in the figure, there are also many native APIs that allow the application to read and write the data directly from the database without the inefficiency and development complexity of converting to SQL and passing through a MySQL Server. These APIs exist for C++, Java, JPA, JavaScript/Node.js, http and the Memcached protocol.

200 Million Queries Per Second Benchmark

There are two kinds of workloads that MySQL Cluster is designed to handle: - OLTP (On-Line Transaction Processing): Memory-optimized tables provide sub-millisecond low latency and extreme levels of concurrency for OLTP workloads while still providing durability; they can also be used alongside disk-based tables. - Ad-hoc Searches: MySQL Cluster has increased the amount of parallelism that can be used when performing a table scan – providing a significant speed-up when performing searches on un-indexed columns.

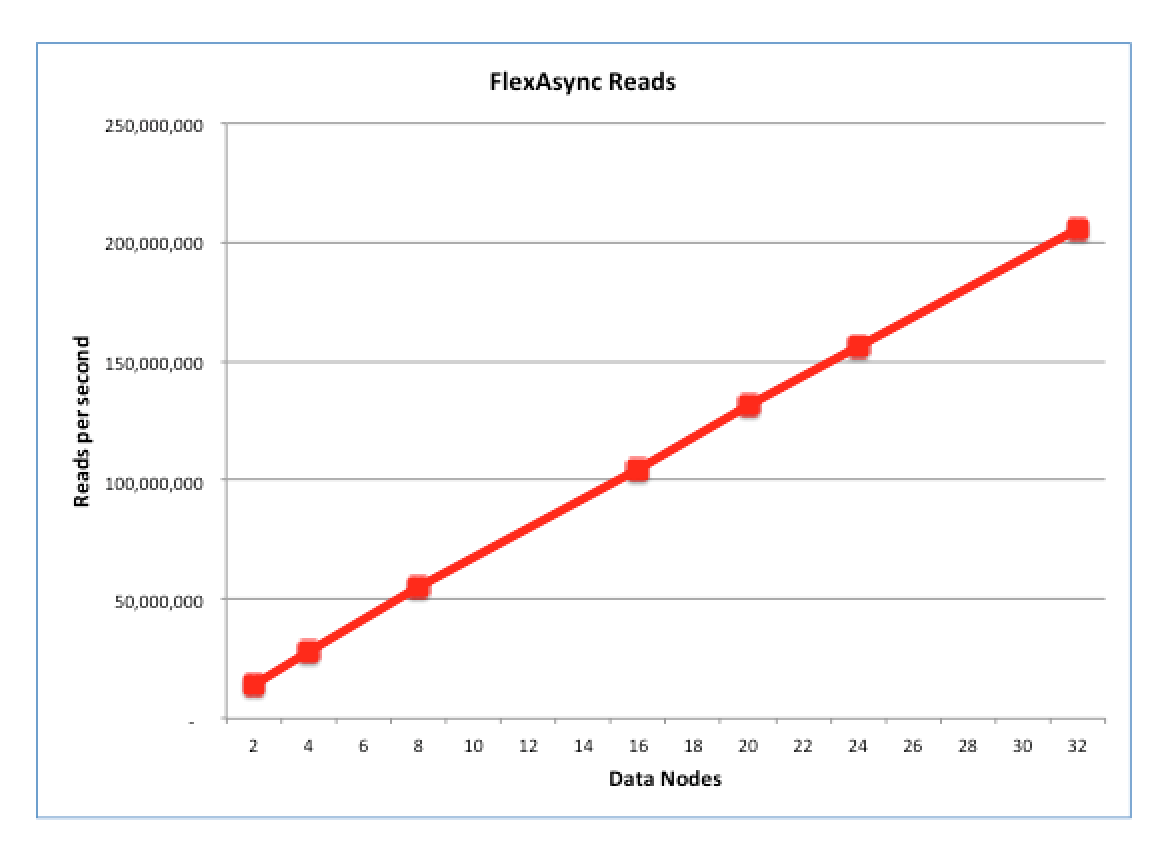

Having said that, MySQL Cluster is going to perform at its best with OLTP workloads; in particular when large numbers of queries/transactions are sent in in parallel. To this end, the flexAsynch benchmark has been used to measure how NoSQL performance scales as more data nodes are added to the cluster.

The benchmark was performed with each data node running on a dedicated 56 thread Intel E5-2697 v3 (Haswell) machine. The figure shows how the throughput scaled as the number of data nodes was increased in steps from 2 up to 32 (note that MySQL Cluster currently supports a maximum of 48 data nodes). As you can see, the the scaling is virtually linear and at 32 data nodes, the throughput hits 200 Million NoSQL Queries Per Second.

Note that the latest results and a more complete description of the tests can be found at the MySQL Cluster Benchmark page.

These 200 Million QPS benchmark was run as part of MySQL Cluster 7.4 (currently the latest GA version) - you can find out more of went into that release in this MySQL Cluster 7.4 blog post or this webinar replay.