Stuff The Internet Says On Scalability For November 18th, 2016

Hey, it's HighScalability time:

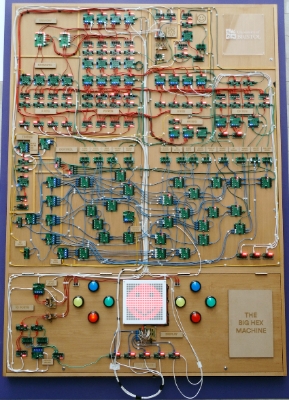

Now you don't have to shrink yourself to see inside a computer. Here's a fully functional 16-bit computer that's over 26 square feet huge! Bighex machine. If you like this sort of Stuff then please support me on Patreon.

- 50%: drop in latency and CPU load after adopting PHP7 at Tumblr; 4,425: satellites for Skynet; 13%: brain connectome shared by identical twins; 20: weird & wonderful datasets for machine learning; 200 Gb/sec: InfiniBand data rate; 15 TB: data generated nightly by Large Synoptic Survey Telescope; 17.24%: top comments that were also first comments on reddit; $120 million: estimated cost of developing Kubernetes; 3-4k: proteins involved in the intracellular communication network;

- Quotable Quotes:

- Westworld: Survival is just another loop.

- Leo Laporte: All bits should be treated equally.

- Paul Horner: Honestly, people are definitely dumber. They just keep passing stuff around. Nobody fact-checks anything anymore

- @WSJ: "A conscious effort by a nation-state to attempt to achieve a specific effect" NSA chief on WikiLeaks

- encoderer: For the saas business I run, Cronitor, aws costs have consistently stayed around 10% total MRR. I think there are a lot of small and medium sized businesses who realize a similar level of economic utility.

- @joshtpm: 1: Be honest: Facebook and Twitter maxed out election frenzy revenues and cracked down once the cash was harvested. Also once political ...

- boulos: As a counter argument: very few teams at Google run on dedicated machines. Those that do are enormous, both in the scale of their infrastructure and in their team sizes. I'm not saying always go with a cloud provider, I'm reiterating that you'd better be certain you need to.

- Renegade Facebook Employees: Sadly, News Feed optimizes for engagement. As we've learned in this election, bullshit is highly engaging. A bias towards truth isn't an impossible goal.

- Russ White: The bottom line is this—don’t be afraid to use DNS for what it’s designed for in your network...We need to learn to treat DNS like it’s a part of the IP stack, rather than something that “only the server folks care about,” or “a convenience for users we don’t really take seriously for operations.”

- Wizart_App: It's always about speed – never about beauty.

- Michael Zeltser: MapReduce is just too low level and too dumb. Mixing complex business logic with MapReduce low level optimization techniques is asking too much.

- Michael Zeltser: One thing that always bugged me in MapReduce is its inability to reason about my data as a dataset. Instead you are forced to think in single key-value pair, small chunk, block, split, or file. Coming from SQL, it felt like going backwards 20 years. Spark has solved this perfectly.

- Guillaume Sachot: I can confirm that I've seen high availability appliances fail more often than non-clustered ones. And it's not limited to firewalls that crash together due to a bug in session sharing, I have noticed it for almost anything that does HA: DRBD instances, Pacemaker, shared filesystems...

- Albert-Laszlo Barabasi: The bottom line is: Brother, never give up. When you give up, that’s when your creativity ends

- SpaceX: According to a transcript received by Space News, he argued that the supercooled liquid oxygen that SpaceX uses as propellant actually became so cold that it turned into a solid. And that’s not supposed to happen.

- Murat: Safety is a system-level property, unit testing of components is not enough.

- @alexjc: 1/ As deep learning evolves as a discipline, it's becoming more about architecting highly complex systems that leverage data & optimization.

- btgeekboy: Indeed. If there's one thing I've learned in >10 years of building large, multi-tenant systems, it's that you need the ability to partition as you grow. Partitioning eases growth, reduces blast radius, and limits complexity.

- @postwait: Monitoring vendors that say they support histograms and only support percentiles are lying to their customers. Full stop. #NowYouKnow

- @crucially: Fastly hit 5mm request per seconds tonight with a cache hit ratio of 96% -- proud of the team.

- Rick Webb: Just because Silicon Valley has desperately wanted to believe for twenty years that communities can self-police does not make it true.

- Cybiote: Humans can additionally predict other agents and other things about the world based on intuitive physics. This is why they can get on without the huge array of sensors and cars cannot. Humans make up for the lack of sensors by being able to use the poor quality data more effectively. To put this in perspective, 8.75 megabits / second is estimated to pass through the human retina but only on the order of a 100 bits is estimated to reach conscious attention.

- David Rand: What I found was consistent with the theory and the initial results: in situations where there're no future consequences, so it's in your clear self-interest to be selfish, intuition leads to more cooperation than deliberation.

- @crucially: Fastly hit 5mm request per seconds tonight with a cache hit ratio of 96% -- proud of the team

- SpaceX: With deployment of the first 800 satellites, SpaceX will be able to provide widespread U.S. and international coverage for broadband services. Once fully optimized through the Final Deployment, the system will be able to provide high bandwidth (up to 1 Gbps per user), low latency broadband services for consumers and businesses in the U.S. and globally.

-

Steve Gibson: Anyone can make a mistake [regarding Pixel ownage], and Google is playing security catch up. But what they CAN and SHOULD be proud of is that they had the newly discovered problem patched within 24 hours!

-

dragonnyxx: Calling a 10,000 line program a "large project" is like calling dating someone for a week a "long-term relationship".

-

Brockman: I have three friends: confusion, contradiction, and awkwardness. That’s how I try to meander through life. Make it strange.

-

Martin Sústrik: In this particular case, almost everybody will agree that adding the abstraction was not worth it. But why? It was a tradeoff between code duplication and increased level of abstraction. But why would one decide that the well known cost of code duplication is lower than somewhat fuzzy "cost of abstraction"?

- Biomedical engineering might be an area a lot of tech people interested in real-time monitoring and control at scale could be of help. Hr2: Wireless Spinal Tech, Climate Policy, Moon Impact. Researchers want to use wireless technology to record 100k+ neurons simultaneously, 24x7, for long periods of time. The goal is to use this data to control high dimensional systems, like when when reaching and grasping the shoulder, elbow, hand, wrist, and fingers must all work together in real-time. Sound familiar?

- Making the Switch from Node.js to Golang. Digg switched a S3 heavy service from Node to Go and: Our average response time from the service was almost cut in half, our timeouts (in the scenario that S3 was slow to respond) were happening on time, and our traffic spikes had minimal effects on the service...With our Golang upgrade, we are easily able to handle 200 requests per minute and 1.5 million S3 item fetches per day. And those 4 load-balanced instances we were running Octo on initially? We’re now doing it with 2.

- Not a lie. The best explanation to resilience. Resilience is how you maintain the self-organizing capacity of a system. Great explanation. The way you maintain the resilience of a system is by letting it probe its boundaries. The only way to make forest resilient to fire is to burn it. Efficiency is riding as close as possible to the boundary by using feedback to keep the system self-organizing.

- Facebook does a lot of work making their mobile apps work over poor networks. One change they are making is Client-side ranking to more efficiently show people stories in feed. Previously, all story ranking occurred on the server and entries paged up to the device and displayed in order. The problem with this approach is that an article's rank could change while media is being loaded. Now a pool of stories is kept on the client and as new stories are added they are reranked and shown to users in rank order. This approach adapts well to slow networks because slow-loading content is temporarily down-ranked while it loads.

- How We [GitLab] Knew It Was Time to Leave the Cloud. It's the usual forces driving GitLab to the Bare Metal: cost, reliability, performance. boulos of Google makes an impassioned plea to reconsider: I know you're frustrated, but rolling your own infrastructure just means you have to build systems even better than the providers. On the plus side, when it's your fault, it's your fault (or the hardware vendor, or the colo facility). You've been through a lot already, but I'd suggest you'd be better off returning to AWS or coming to us (Google) [Note: Our PD offering historically allowed up to 10 TiB per disk and is now a full 64 TiB, I'm sorry if the docs were confusing]. Again, I'm not saying this to have you come to us or another cloud provider, but because I honestly believe this would be a huge time sink for GitLab. Instead of focusing on your great product, you'd have to play "Let's order more storage" (honestly managing Ceph has

- We have another entrant into the pick your compute unit of choice market. Announcing general availability of Azure Functions. Languages supported: C#, Node.js, Python, F#, PHP, batch, bash, Java, any executable. Seems to have a flexible event model. Pricing as with all these services is hard to grok, but a function with observed memory consumption of 1,536MB, executes 2,000,000 times during the month and has an execution duration of 1 second would cost $41.80 per month.

- Reactive is the new batch. From Big Data to Fast Data in Four Weeks or How Reactive Programming is Changing the World – Part 1. Five years ago PayPal had built an industry standard data processing platform: "We collected data in real-time, buffered it in memory and small files, and finally uploaded consolidated files onto Hadoop cluster. At this point a variety of MapReduce jobs would crunch the data and publish the “analyst friendly” version to Teradata repository, from which our end users could query the data using SQL." Too slow, too hard, to painful. Now they've gone reactive. Kafka instead of Hadoop. Spark instead of MapReduce. Scala instead of Java. Now life is good.

- How the SoC is Displacing the CPU: Over time, in line with classic disruption theory, the SoC transistor platform caught up to the incumbent CPU transistor platform to the point where now the Apple A9X SoC offers 64 bit desktop-class computing enabling a handheld tablet to go toe-to-toe with a state-of-the-art laptop CPU from Intel

- Iron.io is releasing an open source version of AWS Lambda that you can run anywhere. iron-io/functions [article]. Travis Reader says: We built a base level layer using containers that people can use directly or add higher level abstractions like Lambda, for example, it supports Lambda functions: https://github.com/iron-io/functions/blob/master/docs/lambda/README.md . You can even import functions directly from AWS, it will just suck them down and you can run them on IronFunctions.

- This is like halting cancer because all the different cancer cells are fighting each other over scarce body resources. You still have cancer, parts may fail, but it won't be systemic. Competing hackers dampen the power of Mirai botnets: Competing hackers have all been trying to take advantage of Mirai to launch new DDoS attacks...The problem is the malware may have run out of new devices to infect, forcing the hackers to vie for control over a limited resource pool...The problem is the malware may have run out of new devices to infect, forcing the hackers to vie for control over a limited resource pool...Hackers also face another challenge to fully exploit Mirai -- the malicious coding has been designed to kick out competing malware.

- How we ditched HTTP and transitioned to MQTT!: MQTT is much more battery efficient (than HTTP) in maintaining long running connections and transmitting data periodically; With MQTT we now have a real 2-way connection where server can push commands to the clients whenever required.

- Just software developers? This sounds like programmer as ubermensch. 10 characteristics of an excellent software developer: Passionate, Open-minded, Data Driven, Being knowledgeable about customers and business, Being knowledgeable about engineering processes and good practices, Not making it personal, Honest, Personable, Creating shared success, Creative.

- It's not just PayPal, Walmart wants to share their love of Kafka too. Tech Transformation: Real-time Messaging at Walmart: They started Kafka in a shared bare-metal deployment and moved to a cloud-oriented, decentralized, and self-serving deployment. They really emphasize the business value of the self-serving model: each team deploys and owns its infrastructures and services to meet business goals and expectations, rather than the shared clusters and services with weak promise...By adopting the self-serving Kafka model, the application teams now have full control of the operation, capacity, and configuration tune-up, leading to the guaranteed performance on dedicated resources.

- Interesting failure mode for caching is when it's not properly parameterized. Why Is This Query Sometimes Fast and Sometimes Slow?: SQL Server builds one execution plan, and caches it as long as possible, reusing it for executions no matter what parameters you pass in.

- Change your terminology change your thinking? Remote is Dead. Long Live Distributed: I am not a remote worker, I am a part of a distributed team. The term “remote” focuses on where the team member is. It doesn’t address what needs to be done or how to do it. The where is totally irrelevant to the work. The term “distributed,” however, naturally lends itself to thinking about what needs to be done and how we go about doing it together.

- Business model innovation is as important as product innovation. Steve Ballmer Says Smartphones Strained His Relationship With Bill Gates: I wish I'd thought about the model of subsidizing phones through the operators," he said. "You know, people like to point to this quote where I said iPhones will never sell, because the price at $600 or $700 was too high. And there was business model innovation by Apple to get it essentially built into the monthly cell phone bill.

- Why Distributed File-systems Are Fun?: To sum up, for a distributed file-system to be truly scalable, it must allow for multiple servers to handle different files and/or directories. Once multiple servers handle different entities, guaranteeing specific relationships between these entities (such as having a sequence of timestamps, or no loops in the directory structure) becomes a distributed task.

- Secret Agent is the soundtrack your life has been missing.

- Facebook wants you to know they can do fast, small, mobile phone based AI too. Delivering real-time AI in the palm of your hand. What they did isn't as important as the direction the future is going.

- Great description of the data processing needs of several large projects. The big data ecosystem for science. It's clear Big Data and Big Science are one. Descriptions are included for Large Hadron Collider; Cosmology and astronomical image surveys; Genomics and DNA sequencers; Climate science. Do all these really need different systems?

- Building Complex AI Services That Scale. Nice description of their architecture and development infrastructure. Seems about standard for this age.

- Nature uses chemicals to communicate in our bodies, we may be able to build communicating systems using the same ideas. Stanford researchers send text messages using chemicals.

- Elections are just the start. A lightbulb worm could take over every smart light in a city in minutes: smart lightbulbs that allows them to wirelessly take over the bulbs from up to 400m, write a new operating system to them, and then cause the infected bulbs to spread the attack to all the vulnerable bulbs in reach, until an entire city is infected. The researchers demonstrate attacking bulbs by drone or ground station...There are many ways that a hijacked Hue system can be used to cause mischief. Zigbee uses the same radio spectrum as wifi, so a large mesh of compromised Zigbees could simply generate enough radio noise to jam all the wifi in a city. Attackers could also brick all the Hue devices citywide. They could use a kind of blinking morse code to transmit data stolen from users' networks. They could even induce seizures in people with photosensitive epilepsy.

- Building A Modern, Scalable Backend: Modernizing Monolithic Applications. Good story of taking an existing RoR/EC2/CloudFormation/Chef based system and moving it to a more capable backend. They made a smart decision of scoping the problem by only solving what needed to be solved. They chose a microservices approach using several different implementation languages, including Elixir, a dynamic functional language built on the Erlang VM. JsonAPI was used as the interface. Writing example requests and responses helped find problems early in the process. Kubernetes was used as the orchestration platform.

- The Infancy of Julia: An Inside Look at How Traders and Economists Are Using the Julia Programming Language: Talk to almost anyone who is currently using Julia or is experimenting with the language and they'll likely tell you that speed was the main reason for looking at this nascent offering. According to Julia Computing, when testing seven basic algorithms, Julia is 20 times faster than Python, 100 times faster than R and 93 times faster than Matlab.

- "Scale Out" Applies to Interfaces, Too: there are two ways that an interface can increase in complexity. Yep, you guessed it: scale up or scale out. A "scale up" interface is one that gets monolithically bigger - you can't use any part of it without having to deal with significant complexity...By contrast, a "scale out" interface is one that gets bigger in a modular way. Maybe it just has a lot of functions, but using any one of those is simple and straightforward. In some cases, those functions might be grouped according to the objects they operate upon or the functionality they provide, but if you don't use a particular subset then you don't have to set up for it. Defaults are applied intelligently, so that simple calls yield obvious results but more sophisticated usage is also possible. Secondary objects are automatically created using defaults, so the user has to go through fewer steps. Hooks and callbacks are provided to customize behavior further, but remain entirely optional

- Awesome read. The secret world of microwave networks.

- CppCon 2016 videos are available. Here's Bjarne Stroustrup on "The Evolution of C++ Past, Present and Future". I'd watch it, but I don't have the heart for it. See also AnthonyCalandra/modern-cpp-features.

- Quantum Hanky-Panky: What problems are we going to solve? A quantum computer with, say, 500 quantum bits, the kind we're going to see soon, would not be able to factor large numbers, break codes, and strike fear into the heartless NSA, but it would be able to do some of these problems, like quantum machine learning—finding patterns in large amounts of data...By contrast, with a small quantum computer, even one with a few hundred quantum bits, you'd be able to find complicated patterns and topological systems like holes and gaps and voids that you could never find classically. We've progressed to a new stage. The first twenty years of quantum computing were very interesting ideas from theory, establishing connections with other branches of physics, coming up with different algorithms that you would love to perform if you only had a quantum computer that was big enough to perform them.

- AWS is Not Magic says AWS IO Performance: What’s Bottlenecking Me Now?: When consulting AWS documentation about your instance, one should be cautious about taking the listed IO limits at face value...WS achieves the listed instance benchmarks only by assuming a read-only workload in “perfect weather"...AWS CloudWatch metrics make it very easy to validate if your high throughput I/O application is having disk-related performance bottlenecks. Combining this with standard disk benchmarking tools at the OS level will allow clear picture of your IO subsystem to emerge...At the end of the day, most AWS applications outside of big data/log processing will boil down to a choice between either io1 or gp2 volumes. HDD volumes have their place, but they must be carefully considered due to their extreme IOPS limitations even though their admirably high bandwidth limits might be appealing...So just remember: EBS snapshots are back-ended by S3, and blocks for snapshot-initialized volumes are fetched lazily...Your best bet is to test the network connection directly via an I/O load test utility (such as SQLIO.exe or diskspd.exe in the Windows world).

- Nice reddit discussion thread on IT Hare's article Choosing RDMBS for OLTP DB. Lots of good old Postgres vs MySQL talk, buying big iron, licensing costs, why Uber was wrong about Postgres, and more.

- optimizing spot instance allocation: There is surprisingly little information on how to optimize costs using the AWS spot instance market. There are several services that provide management on top of the spot market if you have an architecture that supports an interruptible workload but very little in the way of how to go about doing it yourself other than surface level advice on setting up autoscaling groups. To remedy the situation here’s an outline of how I’ve solved part of the problem for CI (continuous integration) type of workload

- Someday caching won't be the solution to nearly every problem, but today is not that day. Ready-to-use Virtual-machine Pool Store via warm-cache: The warm-cache module intends to solve these issues by creating a cache pool of VM instances well ahead of actual provisioning needs. Many pre-baked VMs are created and loaded in a cache pool. These ready-to-use VMs cater to the cluster-provisioning needs of the Pronto platform.

- Deepomatic/dmake: a tool to manage micro-service based applications. It allows to easily build, run, test and deploy an entire application or one of its micro-services.

- Tesco/mewbase: an engine that functions as a high performance streaming event store but also allows you to run persistent functions in the engine that listen to raw events and maintain multiple views of the event data.

- rook/rook: a distributed storage system designed for cloud native applications. It exposes file, block, and object storage on top of shared resource pools. Rook has minimal dependencies and can be deployed in dedicated storage clusters or converged clusters.

- The Time-Less Datacenter: We describe, and demonstrate, a novel foundation for datacenter communication: a new "event based" protocol that can dispense with the need for conventional heartbeats and timeouts at the network layer --paving a new path for efficient recovery for distributed algorithms as they scale. We then show how this can be composed into arbitrary graph-based distributed communications for application infrastructures. The Earth Computing Network Fabric (ECNF) takes a different approach. An ECNF segment within a datacenter has no switches within its own fabric. Instead, each cell combines the compute functions of a server with the routing functions of a switch. Each cell has multiple ports (7±2), and each port of a cell is directly connected to a port of another cell via a link. Because the link is a dedicated channel between exactly two cells, we can use the Earth Computing Link Protocol (ECLP) instead of standard protocols, such as Ethernet or TCP/IP.

- Microservices.com Practitioner Summit in SF on Jan 31. The summit is a forum of pragmatic advice from microservices practitioners who have adopted microservices at scale at leading tech companies like Netflix, Google, and Yelp. Featured speakers this year are Susan Fowler, Site Reliability Engineer at Uber, and Nic Benders, Chief Architect at New Relic.

- State Machines All The Way Down: In this paper, I present an architecture for dependently typed applications based on a hierarchy of state transition systems, implemented as a library called states. Using states, I show: how to implement a state transition system as a dependent type, with type level guarantees on its operations; how to account for operations which could fail; how to combine state transition systems into a larger system; and, how to implement larger systems as a hierarchy of state transition systems. As an example, I implement a simple high level network application protocol.