The Tech that Turns Each of Us Into a Walled Garden


How we treat each other is based on empathy. Empathy is based on shared experience. What happens when we have nothing in common?

Systems are now being constructed so we’ll never see certain kinds of information. Each of us lives in our own algorithmically created Skinner box/silo/walled garden, fed only the information AIs think will be simultaneously most rewarding to us and to their creators (Facebook, Google, etc.).

We are always being manipulated, granted, but how we are being manipulated has taken a sharp, technology-driven turn, and we should be aware of it. This is different. Scary different. And the technology behind it all is absolutely fascinating.

Divided We Are Exploitable

How the manipulation process works is explained by Cathy O'Neil, author of Weapons of Math Destruction, in a recent episode of Triangulation. Cathy's insight occurred while working at her job as a data scientist at Expedia.com, where she engaged in that most modern of occupations, creating data models to predict user clicks and purchases.

One day a VC walked in and gave a talk. The VC said he was looking “forward to the day when all I see are advertisements for trips to Aruba and jet skis and I never have to see another ad for University of Phoenix, because those aren’t for people like me.”

On the surface this is a harmless-sounding comment. Cathy drives the analysis deeper. What the VC wants, she says, is to create an internet that silos and segregates people by class, by race, etc., so they can be more easily targeted as prey by predatory industries like for-profit colleges and loan companies.

Now, I never see University of Phoenix ads (or Aruba or jet ski ads), but Cathy looked it up and it turns out they were the number one ad buyer on Google for the quarter she investigated. See what I mean about incentives?

Ads aren’t just targeted by our zip code anymore. Ads are targeted specifically to us as individual people. Ads follow us around like ghosts of purchases past. This is not news and it’s just good business. The better the targeting, the higher the profit.

The big change is: there’s now no difference between ads and content. Content, the information you see on your feed, is targeted at you just like ads. And that content can be anything and serve any purpose. There’s no implied social contract for content to be true. Content is now weaponized for a purpose. In other words: content is now propaganda.

The problem is the incentives are all aligned to keep us pressing buttons for our next dose of content/heroin. That’s how money is made: slapping ads on inventory we find engaging. The more inventory the better. The crazier the inventory the better, because that’s what we find engaging. What the inventory is doesn’t matter. Except it matters deeply to US as a democracy.

What’s implied behind those ads is even scarier. Each of us has a profile that has been built up over time from thousands of different sources. These profiles never go away, they only get better and better, and they are used to manipulate us.

Democrats Fought the Last War

The recent US presidential election makes a perfect case in point. What follows is not political commentary. It's not about who won or who lost. It's about how new technologies were used to change the game. And that should concern everyone, as there’s no doubt this is how politics will be carried out in the future. The Republicans just figured it out first. In politics, first movers have the advantage over fast followers.

The Democrats may have thought they had a technological lead because of the last presidential election (see Inside the Secret World of the Data Crunchers Who Helped Obama Win). It turns out the Democrats were fighting the last war. Technology changed and they did not.

What’s Old: targeting, organizing and motivating voters.

What’s New: Moneyball meets Social Media with a twist of message tailoring, sentiment manipulation and machine learning.

The whole amazing story is told here: Exclusive Interview: How Jared Kushner Won Trump The White House. It’s genius. Trump’s team, with the help of Cambridge Analytica, really cracked the code on how to use technology in this election.

The Dark Side of Facebook - How the Tech Works

Traditional microtargeting is almost quaint. Facebook has something called dark posts. A dark post is a newsfeed message seen only by the users being targeted. Adomas Baltagalvis explains: these posts are assigned to your Facebook page like any other post, but they are not visible on the page timeline, unless you actually publish them. And so the only way people will see it, is if you use Facebook ads to promote them (see the incentive problem again?).

They aren’t hard to create. Here’s a good explanation of the process. And even if you aren’t on Facebook they still probably have a shadow profile on you.

Using dark posts it’s possible to serve “different ads to different potential voters, aiming to push the exact right buttons for the exact right people at the exact right times.” If your profile says you have a darker view of the world, for example, you will be shown scarier ads. If you have a more optimistic view of the world they’ll show you more hopeful ads. And that's just the beginning.

How would advertisers know such a thing? Now this is clever. You know those personality quizzes you see? Those aren’t just fun and games; quizzes are a stealth way of building a deep profile on you. They are used to compute what is known as your Ocean score, which is how you rate on the big five psychological traits: Openness, Conscientiousness, Extraversion, Agreeableness and Neuroticism.
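To make the quiz-to-profile idea concrete, here is a minimal sketch of how answers could be rolled up into five trait scores. Everything in it is an assumption for illustration: the question IDs, the trait mapping, the 1 to 5 agreement scale and the `ocean_score` function are all hypothetical, and real psychometric instruments and commercial scoring systems are certainly more involved.

```python
from collections import defaultdict

# Hypothetical quiz: each question ID maps to one Big Five trait.
# reverse=True means agreeing with the statement indicates LESS of the trait.
QUESTIONS = {
    "q1": ("openness", False),
    "q2": ("conscientiousness", False),
    "q3": ("extraversion", True),
    "q4": ("agreeableness", False),
    "q5": ("neuroticism", False),
    "q6": ("neuroticism", True),
}

# Assumed answer scale: 1 (strongly disagree) to 5 (strongly agree).
SCALE_MIN, SCALE_MAX = 1, 5


def ocean_score(answers):
    """Average the answers per trait, normalized to the range 0..1."""
    per_trait = defaultdict(list)
    for question_id, value in answers.items():
        trait, reverse = QUESTIONS[question_id]
        if reverse:
            # Flip reverse-keyed answers so a higher value always means more of the trait.
            value = SCALE_MIN + SCALE_MAX - value
        per_trait[trait].append((value - SCALE_MIN) / (SCALE_MAX - SCALE_MIN))
    return {trait: sum(vals) / len(vals) for trait, vals in per_trait.items()}


if __name__ == "__main__":
    quiz_answers = {"q1": 5, "q2": 3, "q3": 5, "q4": 1, "q5": 4, "q6": 2}
    print(ocean_score(quiz_answers))
```

The point of the sketch is how little it takes: a handful of innocuous-looking answers is enough to place someone on each of the five axes.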

Your profile data is then fed into automated systems that build and deploy targeted messaging campaigns that exploit very recent increases in the power of smart algorithms and cheap compute resources. This is the first time in history this sort of thing has been possible at scale. That's the promise of the cloud.

We now have the means computationally, on a low-cost basis, to address each human being individually. And we now have the means from a software infrastructure perspective to do so intelligently and in real time.

We can text, we can email, we can post, we can analyze big data, we can run learning algorithms, we can purchase data sets, we can run ads, we can get metrics on successes and failures, we can do everything now in software. Software has eaten politics. All those services with scriptable APIs running on top of a sea of elastic compute power make it possible...make it inevitable.

For a fuller explanation see The Secret Agenda of a Facebook Quiz:

Imagine the full capability of this kind of “psychographic” advertising. In future Republican campaigns, a pro-gun voter whose Ocean score ranks him high on neuroticism could see storm clouds and a threat: The Democrat wants to take his guns away. A separate pro-gun voter deemed agreeable and introverted might see an ad emphasizing tradition and community values, a father and son hunting together.
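The trait-to-message matching described in that quote can be sketched in a few lines. This is a toy illustration only: the thresholds, the variant names and the `pick_ad` function are hypothetical, and the profile is assumed to be a dictionary of trait scores between 0 and 1. It is not how any actual campaign system is known to work.

```python
# Hypothetical cutoffs for treating a trait score (0..1) as "high" or "low".
HIGH = 0.7
LOW = 0.3


def pick_ad(profile):
    """Choose an ad variant name from a dict of trait scores in 0..1."""
    if profile.get("neuroticism", 0.0) >= HIGH:
        # High neuroticism: the storm-clouds-and-threat framing.
        return "threat"
    agreeable = profile.get("agreeableness", 0.0) >= HIGH
    introverted = profile.get("extraversion", 1.0) <= LOW
    if agreeable and introverted:
        # Agreeable and introverted: the tradition-and-community framing.
        return "tradition"
    # No strong signal either way: fall back to an untargeted message.
    return "generic"


if __name__ == "__main__":
    voters = {
        "voter_a": {"neuroticism": 0.9, "agreeableness": 0.4, "extraversion": 0.5},
        "voter_b": {"neuroticism": 0.2, "agreeableness": 0.8, "extraversion": 0.1},
        "voter_c": {"neuroticism": 0.5, "agreeableness": 0.5, "extraversion": 0.5},
    }
    for name, profile in voters.items():
        print(name, pick_ad(profile))
```

Two people with the same position on an issue get two different messages, and neither one ever sees what the other was shown.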

In this election, dark posts were used to try to suppress the African-American vote. According to Bloomberg, the Trump campaign sent ads reminding certain selected black voters of Hillary Clinton’s infamous “super predator” line. It targeted Miami’s Little Haiti neighborhood with messages about the Clinton Foundation’s troubles in Haiti after the 2010 earthquake. Federal Election Commission rules are unclear when it comes to Facebook posts, but even if they do apply and the facts are skewed and the dog whistles loud, the already weakening power of social opprobrium is gone when no one else sees the ad you see — and no one else sees “I’m Donald Trump, and I approved this message.”

It’s brilliant. It really is. But that doesn’t mean it’s good.

Is the New Politics an Existential Threat to Democracy?

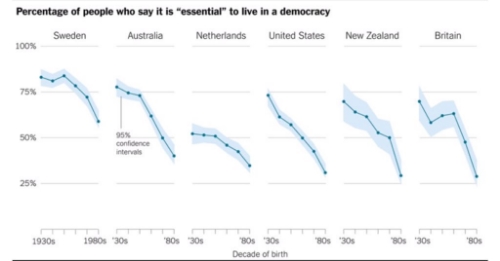

This may be a bit of a shock, but it appears the support for democracy is on the decline:

So yes, it sounds hyperbolic, but I think the new politics is a threat because it can only make people feel worse, drive us further apart, and make us more cynical. Not exactly the context needed to create a more perfect union.

Where are the unifying influences anymore? Does religion bring us together anymore? We don’t all watch TV at the same time anymore. We don’t all do anything together anymore. Economic trends conspire to further separate the haves and have-nots. What brings us together?

The incentives all run towards creating division.

Business makes money off the churn. Politicians win by dividing. Media wins by turning politics into a tribal sporting event. And humans crave their dopamine hits of the fantastic.

The future portends only more of the same. This is assured by the continued aggregation of masses of people onto just a few services and by the high profitability and zero-marginal-cost economics of digital data collection and targeting.

A classic vicious circle. Technology is never value neutral.

It’s the ultimate in identity politics, a direct appeal to the self with few countervailing forces.

It’s now trivially easy to create resentment at scale using automation. It has never been easier to create divisions of us vs them. This is the acid that dissolves a democracy.

I like the words of Kathy Cramer, who has been researching in this area for a while:

In a democracy, we’re making choices that govern each other. So yes, we all have an obligation to understand each other.

New technology has made that understanding, which is hard to begin with, so much harder.

Are Critical Thinking Skills the Answer?

No. There’s a faction out there that thinks teaching skepticism and critical thinking skills is the way to save us from an ever more divided fate. That’s advocated in this article: Most Students Don’t Know When News Is Fake, Stanford Study Finds.

The problem is a neat trick of human psychology: if we see a message repeatedly, we come to think it’s true:

Repetition makes a fact seem more true, regardless of whether it is or not. Understanding this effect can help you avoid falling for propaganda, says psychologist Tom Stafford.

All the critical thinking in the world might not be enough to counter a constant stream of disinformation. And what's great at delivering a constant stream of disinformation? Social networks. With their targeting platforms they are perfect for delivering repeated messages to individuals. In the past we called those ads. Now we just call it news.

There's another cool brain hack described in an episode of Freakonomics Radio. It's how the sequencing of decision-making affects the decisions. You'd think the sequence of events shouldn’t matter, but it does. For baseball umpires, if the previous pitch was called a strike, the umpire is less likely to call the next pitch a strike. Judges are much more likely to grant parole early in the day, shortly after breakfast, and again shortly after the lunch break. For a judge granting asylum, if the previous two cases were approved, then the next case is less likely to be approved.

Imagine what happens in your feed if you see a dozen articles asserting X and then just one or two articles saying the opposite? Will critical thinking weigh those article sequences evenly? Unlikely.

Rather than democratizing the world, technology is poised to only deepen the divide. Who saw that coming?