To Compress or Not to Compress, that was Uber's Question

Uber faced a challenge. They store a lot of trip data. A trip is represented as a 20K blob of JSON. It doesn't sound like much, but at Uber's growth rate saving several KB per trip across hundreds of millions of trips per year would save a lot of space. Even Uber cares about being efficient with disk space, as long as performance doesn't suffer.

This highlights a key difference between linear and hypergrowth. Growing linearly means the storage needs would remain manageable. At hypergrowth Uber calculated when storing raw JSON, 32 TB of storage would last than than 3 years for 1 million trips, less than 1 year for 3 million trips, and less 4 months for 10 million trips.

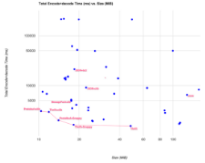

Uber went about solving their problem in a very measured and methodical fashion: they tested the hell out of it. The goal of all their benchmarking was to find a solution that both yielded a small size and a short time to encode and decode.

The whole experience is described in loving detail in the article: How Uber Engineering Evaluated JSON Encoding and Compression Algorithms to Put the Squeeze on Trip Data. They came up with a matrix of 10 encoding protocols (Thrift, Protocol Buffers, Avro, MessagePack, etc) and 3 compression libaries (Snappy, zlib, Bzip2). The target environment was Python. Uber went to an IDL approach to define and verify their JSON protocol, so they ended up only considering IDL solutions.

The conclusion: MessagePack with zlib. Encoding time: 4231 ms. Decoding: 715 ms. There was a 78% reduction in size relative to the JSON zlib combination.

The result: 1 TB disk will now last almost a year (347 days), compared to a month (30 days) without compression. Uber now has enough space to last over 30 years compared to just under 1 year before. That's a huge win for a relatively simple change. Hopefully there's a common library handling all the messaging so this change could be completely transparent to all the developers. Uber also noted that smaller packet sizes mean less data transiting through the system which means less processing time which means less hardware is needed. Another big win.

Something to consider: don't use JSON for messaging. The compression/decompression times are still dog slow. If you are going to use an IDL, which every grown up project eventually moves to for reliability and security reasons, consider not using JSON for messaging. Go for a binary protocol from the start. The performance savings can be dramatic. A lot of the performance drain comes from serialization/deserialization churning through memory and that can be reduced greatly by not using text based protocols like JSON. JSON is convenient, but it's also hugely wasteful at scale.