Stuff The Internet Says On Scalability For September 2nd, 2016

Hey, it's HighScalability time:

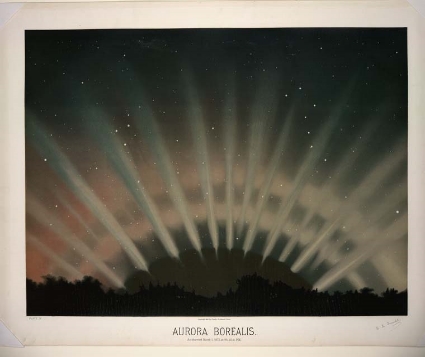

Spectacular iconic drawing of Aurora Borealis as observed in 1872. (Drawings vs. NASA Images)

- 4,000 GB: projected bandwidth used per autonomous vehicle per day; 100K: photos of US national parks; 14 terabytes: code on Github in 1 billion files held in 400K repositories; 25: age of Linux; $5 billion: cost of labor for building Linux; $3800: total maintenance + repairs after 100K miles and 5 years of Tesla ownership; 2%: reduction in Arizona's economy by deporting all illegal immigrants; 15.49TB: available research data; 6%: book readers who are "digital only";

- Quotable Quotes

- @jennyschuessler: "Destroy the printing press, I beg you, or these evil men will triumph": Venice, 1473

- @Carnage4Life: Biggest surprise in this "Uber for laundry" app shutting down is that there are still 3 funded startups in the space

- @tlipcon: "backpressure" is right up there with "naming things" on the top 10 list of hardest parts of programming

- cmcluck: Please consider K8s [kubernetes] a legitimate attempt to find a better way to build both internal Google systems and the next wave of cloud products in the open with the community. We are aware that we don't know everything and learned a lot by working with people like Clayton Coleman from Red Hat (and hundreds of other engineers) by building something in the open. I think k8s is far better than any system we could have built by ourselves. And in the end we only wrote a little over 50% of the system. Google has contributed, but I just don't see it as a Google system at this point.

- looncraz: AMD is not seeking the low end, they are trying to redefine AMD as the top-tier CPU company they once were. They are aiming for the top and the bulk of the market.

- lobster_johnson: Swarm is simple to the point of naivety.

- @BenedictEvans: That is, vehicle crashes, >90% caused by human error & 30-40% by alcohol, cost $240bn & kill 30k each year just in the USA. Software please

- @joshsimmons: "Documentation is like serializing your mental state." - @ericholscher, just one of many choice moments in here.

- @ArseneEdgar: "better receive old data fast rather than new data slow"

- @aphyr: hey if you're looking for a real cool trip through distributed database research, https://github.com/cockroachdb/cockroach/blob/develop/docs/design.md … is worth several reads

- @pwnallthethings: It's a fact 0day policy-wonks consistently get wrong. 0day are merely lego bricks. Exploits are 0day chains. Mitigations make chains longer.

- andrewguenther: Speaking of [Docker] 1.12, my heart sank when I saw the announcement. Native swarm adds a huge level of complexity to an already unstable piece of software. Dockercon this year was just a spectacle to shove these new tools down everyone's throats and really made it feel like they saw the container parts of Docker as "complete."

- @johnrobb: Foxconn just replaced 60,000 workers with robots at its Kushan facility in China. 600 companies follow suit.

- @epaley: Well publicized - Uber has raised ~$15B. Yet the press is shocked @Uber is investing billions. Huh? What was the money for? Uber kittens?

- Ivan Pepelnjak: One of the obsessions of our industry is to try to find a one-size-fits-everything solutions. It's like trying to design something that could be a mountain bike today and an M1 Abrams tomorrow. Reality doesn't work that way

- @gdead: This one word NY article takes nearly 6MB and over 150 requests to load

- @antirez: one of the few things engineers can do is to pick their stack, freedom is cool!

- Databricks: Spark could reliably shuffle and sort 90 TB+ intermediate data and run 250,000 tasks in a single job. The Spark-based pipeline produced significant performance improvements (4.5-6x CPU, 3-4x resource reservation, and ~5x latency) compared with the old Hive-based pipeline, and it has been running in production for several months.

- Intel: Intel estimates that each one of us will use 1.5 gigabytes of data per day by 2020. A smart hospital will use 3,000 gigabytes a day. And a smart factory could use a million gigabytes of data per day.

- @l4stewar: if all you have is a log, everything looks like a replicated state machine

- Frederic Filloux: One page of text requires between 6 and 55 pages of HTML. More than ever, code inflation rules the web.

- American Institute of Physics: China and a team of researchers sought to demonstrate numerically and experimentally that chaotic systems can be used to create a reliable and efficient wireless communication system.

- lugg: Don't try to put storage into containers at this point. You'll just be in for a bad time. Either run a separate vps for MySQL or use a hosted solution.

- NYU: the highest-performing group of real participants were those who saw a virtual peer that consistently outperformed them.

- @bcjbcjbcj: programmers: Non-STEM fields are bullshit. It's just words instead of objective science. programmers: The hardest problem is naming things.

- @postwait: If you're ingesting high volumes of data and not storing it on #ZFS, it can only be assumed you are actively trying to f*ck your customers.

- @amontalenti: Hmm, two popular free services I use, @WagonHQ and @Readability, will be shutting down soon. This is why charging real money matters, folks!

- @_krisjack: I love living in a world where a large number of very smart people work for big tech companies and share their work in blogs #DataScience

- @ColinPeters: Watched a Korean TV character last night duck into a "phone booth"--a box for using your mobile phone--in a library.

- @kris_marwood: Sharing state via databases makes software hard to change. Write business events to a queue or use private tables - @ted_dunning #HS16Melb

- Lord of the Rings Online: So what is sort of unfortunate about some of the lag that some of our players have seen is that there is not a single source for it. It is a many-headed beast that basically means we have to keep hacking heads off as we can find them.

- Mark Callaghan: RocksDB did better than InnoDB for a write-heavy workload and a range-scan heavy workload. The former is expected, the latter is a welcome surprise.

- This should concern every iPhone user. Total ownage.

- Steve Gibson, Security Now 575, with a great explanation of Apple's previously unknown professional grade zero-day iPhone exploits, Pegasus & Trident, that use a chain of flaws to remotely jailbreak an iPhone. It's completely stealthy, surviving both reboots and upgrades. The exploits have been around for years and were only identified by accident. It's a beautiful hack.

- Your phone is totally open and it happens just like in the movies: A user infected with this spyware is under complete surveillance by the attacker because, in addition to the apps listed above, it also spies on: Phone calls, Call logs, SMS messages the victim sends or receives, Audio and video communications that (in the words of a founder of NSO Group) turns the phone into a 'walkie-talkie'

- Bugs happen in complicated software. Absolutely. But these exploits were for sale...for years. The companies that sell these exploits do not have to disclose them. Apple should be going to the open market and buying these exploits so they can learn about them and fix them. Apple should be outbidding everyone in their bug bounty system so they can find hacks and fix them.

- Paying for exploits is not an ethical issue, it's smart business in a realpolitik world. If you can figure out the Double Irish With a Dutch Sandwich you can figure out how to go to the open market and find out all the ways you are being hacked. Apple needs to think about security strategically, not only as a tactical technical issue.

- See also This Leaked Catalog Offers ‘Weaponized Information’ That Can Flood the Web.

- Is all that hacking on Mr Robot realish? Looks like it. Here's a great breakdown in detail of different hacks: ‘Mr. Robot’ Rewind: Analyzing Fsociety’s hacking rampage in Episode 8. Though I have a hard time believing a guy who will help bring down the economy would be stupid enough to go to a random website. If you've wondered how the infamous Rubber Ducky might work, here's a thorough explanation: 15 Second Password Hack, Mr Robot Style.

- Facebook is making Terragraph, a 60GHz multi-node wireless system focused on bringing high-speed internet connectivity to dense urban areas. You're thinking the signal will be disrupted by rain, fog, and butterfly farts. But that's a point-to-point system. These types of systems, including 5G, will operate in a mesh. This is not your grandpa's mesh. The technology has improved, the scheduling algorithms have improved. The increased availability of processing power means paths can be recalculated and rescheduled in real-time. More in PQ Show 91: Intel And 5G Networks.

- Private clouds are the new embedded systems. FPGAs + fast CPUs/GPUs are the new prototyping systems.

- Demystifying the Secure Enclave Processor. If you've ever wondered how Apple's secure enclave works, the most holy of holies that prevents the main processor from gaining direct access to sensitive data, then these slides from a Black Hat presentation are the best tour you can take outside of Apple or shadowy government agencies. As one commenter says, this talk was not given by the "B" team. While many potential attacks are listed, no actual attacks seem to work...that we know of. Fascinating stuff. Here's a more accessible series of articles on the same topic: Security Principles in iOS Architecture.

- Facebook has a new compression technology. Smaller and faster data compression with Zstandard. You can find it at facebook/zstd. It's ~3-5x faster than zlib, 10-15 percent smaller, 2x faster at decompression, and scales to much higher compression ratios, while sustaining lightning-fast decompression speeds. The magic comes by taking advantage of several recent innovations and targeting modern hardware. It makes better use of large amounts of memory, separates data into multiple parallel streams to take advantage of multiple ALUs (arithmetic logic units) and increasingly advanced out-of-order execution designs, and uses a branchless coding style that prevents pipeline flushes. And there's a new very high tech sounding compression algorithm called Finite State Entropy: A next-generation probability compressor, which is based on a new theory called ANS (Asymmetric Numeral Systems) that precomputes many coding steps into tables, resulting in an entropy codec as precise as arithmetic coding, using only additions, table lookups, and shifts. See also Google is using AI to compress photos, just like on HBO’s Silicon Valley.

- My new hero is a 65-year-old woman with a 20-gauge shotgun. Woman shoots drone: “It hovered for a second and I blasted it to smithereens.”

- Who said AI isn't practical? How a Japanese cucumber farmer is using deep learning and TensorFlow. The task: sorting cucumbers, which is apparently quite a skill. The system went into production in July. The training: three months and 7,000 pictures. The process: a Raspberry Pi 3 takes images of the cucumbers with a camera; a small-scale neural network on TensorFlow detects whether or not the image is of a cucumber; it then forwards the image to a larger TensorFlow neural network running on a Linux server to perform a more detailed classification. The win: Farmers want to focus and spend their time on growing delicious vegetables. See also Infrastructure for Deep Learning.
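The two-stage process in the cucumber item can be sketched as a simple cascade: a cheap gate runs on every frame, and the expensive classifier only sees frames that pass. This is a minimal illustration, not the farmer's code. The detector, the classifier, the frame fields (`green_ratio`, `length_cm`) and the grade names are all made-up stand-ins for the real TensorFlow models.

```python
def make_cascade(cheap_detector, detailed_classifier):
    """Build a two-stage classifier and a counter of expensive calls."""
    stats = {"detailed_calls": 0}

    def classify(frame):
        # Stage 1: the small model (on the Raspberry Pi in the article) rejects non-cucumbers.
        if not cheap_detector(frame):
            return None
        # Stage 2: only accepted frames reach the larger model (the Linux server in the article).
        stats["detailed_calls"] += 1
        return detailed_classifier(frame)

    return classify, stats


# Hypothetical stand-ins for the two neural networks.
def toy_detector(frame):
    return frame.get("green_ratio", 0.0) > 0.5


def toy_classifier(frame):
    return "long" if frame["length_cm"] >= 20 else "short"


classify, stats = make_cascade(toy_detector, toy_classifier)
frames = [
    {"green_ratio": 0.9, "length_cm": 24},
    {"green_ratio": 0.1},                   # not a cucumber, never reaches stage 2
    {"green_ratio": 0.8, "length_cm": 12},
]
results = [classify(f) for f in frames]
```

The point of the split is that the second model's cost is paid only for frames the first model lets through.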

- The future might be delayed a while. Why We Still Don’t Have Better Batteries: the grail of compact, low-cost energy storage remains elusive...many researchers believe energy storage will have to take an entirely new chemistry and new physical form...In terms of moving from an idea to a product it’s hard for batteries, because when you improve one aspect, you compromise other aspects...It’s hard to invest $500 million in manufacturing when your company has $5 million in funding a year...Meanwhile, the Big Three battery producers, Samsung, LG, and Panasonic, are less interested in new chemistries and radical departures in battery technology than they are in gradual improvements to their existing products.

- Lessons Learned While Building Microservices: Larger services are more difficult to change; A microservices architecture promotes keeping your technology stack more up-to-date and fresh by allowing your components to evolve organically and independently. Large tasks (like a Node version migration) are naturally split into smaller, more manageable subtasks; Microservices promote trying out new technologies. They enable experimentation with patterns and tools.

- Public real-time data feeds + map API = wonderful data-based maps that continuously update. Mapping U.S. wildfire data from public feeds. We have clearly leveled down from the Age of Aquarius to the Age of Fire. The world she is burning.

- This touches on most of the major issues. Serverless architectures: game-changer or a recycled fad? Yes. Recycled in a way because, as one commenter said, it's like CGI. Which is fine with me. As someone who has written metric tons of Perl and Java CGI programs I always liked CGI. It's clean and simple. Once you remove the whole server maintenance and load balancing work it becomes even cleaner and simpler. So thinking of Lambda as CGI done right is not damning with faint praise, it's a compliment.

- Microservices are independent, distributed transactions won't save you. Distributed Transactions: The Icebergs of Microservices: every business event needs to result in a single synchronous commit. It’s perfectly okay to have other data sources updated, but that needs to happen asynchronously, using patterns that guarantee delivery of the other effects to the other data sources...The way to achieve this is with the combination of a commander, retries and idempotence.
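One way to read "a commander, retries and idempotence" is sketched below. It assumes the business event is already committed and only the asynchronous delivery to a second data source remains. The `Ledger` class, the `commander` function, and the lost-acknowledgement failure are hypothetical, chosen to show why retries are only safe when the receiver deduplicates by event ID.

```python
class Ledger:
    """Hypothetical downstream data source with an idempotent apply()."""

    def __init__(self, lose_first_ack=True):
        self.balance = 0
        self.seen = set()              # event IDs already applied
        self.lose_next_ack = lose_first_ack

    def apply(self, event_id, amount):
        # Idempotence: a redelivered event changes nothing.
        if event_id not in self.seen:
            self.seen.add(event_id)
            self.balance += amount
        # Simulate the write succeeding but the acknowledgement getting lost.
        if self.lose_next_ack:
            self.lose_next_ack = False
            raise ConnectionError("ack lost")


def commander(ledger, event_id, amount, max_attempts=5):
    """Retry delivery until it is acknowledged; return the attempts used."""
    for attempt in range(1, max_attempts + 1):
        try:
            ledger.apply(event_id, amount)
            return attempt
        except ConnectionError:
            continue                   # the sender cannot tell if the write landed, so it retries
    raise RuntimeError("delivery failed after retries")


ledger = Ledger()
attempts = commander(ledger, "evt-1", 100)
```

Without the `seen` check, the second attempt would credit the amount twice. With it, at-least-once delivery produces an exactly-once effect.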

- More Spark love. Percona Live Europe featured talk with Alexander Krasheninnikov — Processing 11 billion events a day with Spark in Badoo: Our initial implementation was built on top of Scribe + WebHDFS + Hive. But we’ve realized that processing speed and any lag of data delivery is unacceptable (we need near-realtime data processing). One of our BI team mentioned Spark as being significantly faster than Hive in some cases, (especially ones similar to ours). When investigated Spark’s API, we found the Streaming submodule — ideal for our needs. Additionally, this framework allowed us to use some third-party libraries, and write code. We’ve actually created an aggregation framework that follows “divide and conquer” principle. Without Spark, we definitely went way re-inventing lot of things from it.

- The new train robberies. Crimes of opportunity created by new technology. Notes on that StJude/MuddyWatters/MedSec thing: hackers drop 0day on medical device company hoping to profit by shorting their stock

- A Large-Scale Comparison of x264, x265, and libvpx - a Sneak Peek: x265 and libvpx demonstrate superior compression performance compared to x264, with bitrate savings reaching up to 50% especially at the higher resolutions. x265 outperforms libvpx for almost all resolutions and quality metrics, but the performance gap narrows (or even reverses) at 1080p.

- Apache Spark @Scale: A 60 TB+ production use case from Facebook. The numbers are impressive, but the most interesting part of the article is the extensive laundry list of changes and improvements that were made to Spark because of real-life testing at this scale. Things like Make PipedRDD robust to fetch failure; Configurable max number of fetch failures; Less disruptive cluster restart; Fix Spark executor OOM; TimSort issue due to integer overflow for large buffer; Cache index files for shuffle fetch speed-up.

- Another good example of using Elasticsearch and Kibana to set up aggregated cluster level logging, log querying, and log analytics. Kubernetes Logging With Elasticsearch and Kibana.

- Simit: a new programming language that makes it easy to compute on sparse systems using linear algebra. Simit programs are typically shorter than Matlab programs yet are competitive with hand-optimized code and also run on GPUs.

- bluestreak01/questdb: a time series database built for non-compromising performance, data accessibility and operational simplicity. It bridges the gap between traditional relational and time series databases by providing fast SQL access to both types of data

- soundcloud/lhm: the basic idea is to perform the migration online while the system is live, without locking the table. In contrast to OAK and the facebook tool, we only use a copy table and triggers. The Large Hadron is a test driven Ruby solution which can easily be dropped into an ActiveRecord migration.
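The "copy table and triggers" idea can be sketched in a few lines. This is not lhm's code: it uses Python's built-in SQLite as a stand-in for MySQL, and the table names, the chunk boundaries, and the final rename swap are assumptions made for illustration.

```python
import sqlite3


def online_migration_demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (id, name) VALUES (?, ?)",
        [(i, f"user{i}") for i in range(1, 6)],
    )

    # 1. Create the copy table with the new schema (an added email column).
    conn.execute("CREATE TABLE users_new (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")

    # 2. Triggers mirror every live write on the old table into the copy.
    conn.execute("""CREATE TRIGGER users_ins AFTER INSERT ON users BEGIN
        INSERT OR REPLACE INTO users_new (id, name) VALUES (NEW.id, NEW.name); END""")
    conn.execute("""CREATE TRIGGER users_upd AFTER UPDATE ON users BEGIN
        INSERT OR REPLACE INTO users_new (id, name) VALUES (NEW.id, NEW.name); END""")
    conn.execute("""CREATE TRIGGER users_del AFTER DELETE ON users BEGIN
        DELETE FROM users_new WHERE id = OLD.id; END""")

    # 3. Copy existing rows in chunks; OR IGNORE keeps rows the triggers already wrote.
    def copy_chunk(lo, hi):
        conn.execute(
            "INSERT OR IGNORE INTO users_new (id, name) "
            "SELECT id, name FROM users WHERE id BETWEEN ? AND ?",
            (lo, hi),
        )

    copy_chunk(1, 3)
    # Live traffic arriving between chunks.
    conn.execute("UPDATE users SET name = 'renamed' WHERE id = 2")
    conn.execute("INSERT INTO users (id, name) VALUES (6, 'user6')")
    conn.execute("DELETE FROM users WHERE id = 5")
    copy_chunk(4, 6)

    # 4. Drop the triggers and swap the tables by renaming.
    for trigger in ("users_ins", "users_upd", "users_del"):
        conn.execute(f"DROP TRIGGER {trigger}")
    conn.execute("ALTER TABLE users RENAME TO users_old")
    conn.execute("ALTER TABLE users_new RENAME TO users")
    conn.commit()
    return conn.execute("SELECT id, name, email FROM users ORDER BY id").fetchall()


rows = online_migration_demo()
```

The update, insert, and delete issued mid-copy all show up in the swapped-in table, which is the property that lets the migration run while the system is live.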

- Execution Models For Energy-Efficient Computing Systems - EXCESS: results of tests run on large data-streaming aggregations have been impressive, as a coder can offer a solution 54 times more energy efficient on the Excess framework than on a standard high-end PC processor deployment. The holistic strategy initially presents the hardware benefits and then shows the optimal process for dividing computations within the processor for additional performance enhancement.

- Introduction to Queueing Theory and Stochastic Teletraffic Models: The aim of this textbook is to provide students with basic knowledge of stochastic models that may apply to telecommunications research areas, such as traffic modelling, resource provisioning and traffic management.

- Flexible Paxos: Quorum intersection revisited: we demonstrate that Paxos, which lies at the foundation of many production systems, is conservative. Specifically, we observe that each of the phases of Paxos may use non-intersecting quorums. Majority quorums are not necessary as intersection is required only across phases. Using this weakening of the requirements made in the original formulation, we propose Flexible Paxos, which generalizes over the Paxos algorithm to provide flexible quorums. We show that Flexible Paxos is safe, efficient and easy to utilize in existing distributed systems
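For simple counting quorums, the claim that "intersection is required only across phases" reduces to a one-line check: with N acceptors, a phase-1 quorum size q1 and a phase-2 quorum size q2 are safe together when q1 + q2 > N. The sketch below states that rule and checks it against a brute-force enumeration. The function names are illustrative and not taken from the paper.

```python
from itertools import combinations


def quorums_intersect(n, q1, q2):
    """Every size-q1 set overlaps every size-q2 set of n nodes iff q1 + q2 > n."""
    return q1 + q2 > n


def brute_force_intersect(n, q1, q2):
    """Enumerate all phase-1 and phase-2 quorums and test every pair for overlap."""
    nodes = range(n)
    return all(
        set(a) & set(b)
        for a in combinations(nodes, q1)
        for b in combinations(nodes, q2)
    )


# Classic majorities on 6 nodes: q1 = q2 = 4.
majority_ok = quorums_intersect(6, 4, 4)
# Flexible choice: phase 2 (replication) needs only 3 of 6, paid for by q1 = 4.
flexible_ok = quorums_intersect(6, 4, 3)
# Too small: a size-3 phase-1 quorum can miss a size-3 phase-2 quorum entirely.
unsafe = quorums_intersect(6, 3, 3)
```

In the `flexible_ok` configuration two phase-2 quorums such as {0, 1, 2} and {3, 4, 5} do not intersect each other, which is exactly the relaxation the abstract describes.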

- Automating Failure Testing Research at Internet Scale: In this paper, we describe how we adapted and implemented a research prototype called lineage-driven fault injection (LDFI) to automate failure testing at Netflix. Along the way, we describe the challenges that arose adapting the LDFI model to the complex and dynamic realities of the Netflix architecture

- Runway - A New Tool for Distributed System Design: Runway is a new design tool for distributed systems. A Runway model consists of a specification and a view. The specification describes the model’s state and how that state may change over time. Specifications are written in code using a new domain-specific language. This language aims to be familiar to programmers and have simple semantics, while expressing concurrency in a way suited for formal and informal reasoning.

- Full Resolution Image Compression with Recurrent Neural Networks: This paper presents a set of full-resolution lossy image compression methods based on neural networks. Each of the architectures we describe can provide variable compression rates during deployment without requiring retraining of the network: each network need only be trained once. All of our architectures consist of a recurrent neural network (RNN)-based encoder and decoder, a binarizer, and a neural network for entropy coding. We compare RNN types (LSTM, associative LSTM) and introduce a new hybrid of GRU and ResNet.