Stuff The Internet Says On Scalability For December 15th, 2017

Hey, wake up, it's HighScalability time:

Merry Christmas and Happy New Year everyone! I'll be off until the new year. Here's hoping all your gifts were selected using machine learning.

If you like this sort of Stuff then please support me on Patreon. And I'd appreciate your recommending my new book—Explain the Cloud Like I'm 10—to anyone who needs to understand the cloud (who doesn't?). I think they'll like it. Now with twice the brightness and new chapters on Netflix and Cloud Computing.

- 157 terabytes: per second raw data output of the Square Kilometre Array; $11 million: made by a 6 year old on YouTube; 14TB: helium hard drive; 1: year education raises IQ 1-5 points; 10: seconds mining time to pay for wifi; 110 TFLOPS: Nvidia Launches $3,000 Titan V; 400: lines of JavaScript injected by Comcast; 20 million: requests per second processed by Netflix to personalize artwork; 270: configuration parameters in postgresql.conf; hundreds: eyes in scallop from a unique mirroring system; $72 billion: record DRAM revenue; 20: rockets landed by SpaceX;

- Quotable Quotes:

- Bill Walton: Mirai was originally developed to help them corner the Minecraft market, but then they realized what a powerful tool they built. Then it just became a challenge for them to make it as large as possible.

- Stephen Andriole: The entire world of big software design, development, deployment and support is dead. Customers know it, big software vendors know it and next generation software architects know it. The implications are far-reaching and likely permanent. Business requirements, governance, cloud delivery and architecture are the assassins of old "big" software and the liberators of new "small" software. In 20 years very few of us will recognize the software architectures of the 20th century or how software in the cloud enables ever-changing business requirements.

- Melanie Johnston-Hollitt: There is not yet compute available that can process the data we want to collect and use to understand the universe.

- Brandon Liverence: Credit and debit card transaction data shows, at these businesses, the average customer in the top 20 percent spent 8x as much as the average customer from the bottom 80 percent.

- @evonbuelow: After looking at the source code for a series of k8s components & operators, I'm struck by how go (#golang) is used more as a declarative construct than a set of procedural steps encoding sophisticated logic.

- apandhi: I had a run-in with CoinHive this weekend so I did a bit of research. Most modern computers can do about 30 hashes a second. Coinhive currently pays out 0.00009030 XMR ($0.02 USD) per 1M hashes. For a 10 second pause, they'd mine 300 hashes (about $0.000006 USD). To make $1 USD, they'd need to have ~166,666.66 people connect to their in store WIFI.

- @matt_healy: Went from zero clue about #aws codepipeline and friends yesterday, to setting up an automatic Lambda and API gateway deployment with every git push in production today. Awesome!

- lgierth: Pubsub is probably one of the lesser known features of IPFS right now, given that it's still marked as experimental. We're researching more efficient tree-forming and message routing algorithms, but generally the interface is pretty stable by now. Pubsub is supported in both go-ipfs and js-ipfs. A shining example of pubsub in use is PeerPad, a collaborative text editor exchanging CRDTs over IPFS/Pubsub

- Manish Rai Jain: Given these advancements, Amazon Neptune’s design is pre-2000. Single server vertically scaled, asynchronously replicated, lack of transactions — all this screams outdated.

- Brandon Liverence: The top 20 percent of Uber customers rode with Uber 73 times on average in 2016, and all those trips cost them an average of $1,160 per person. The bottom 80 percent of riders only averaged seven trips and spent $108 with Uber.

- @russelldb: OH: "Riak is so robust that it survives its own company's demise"

- @DanielGershburg: A waiter at a sushi restaurant in New Brunswick, NJ stopped taking my order, mid-order, because he ran to sell his bitcoin after it hit 18,000 and he was alerted by another waiter, who also owned bitcoin. I don't have a joke.

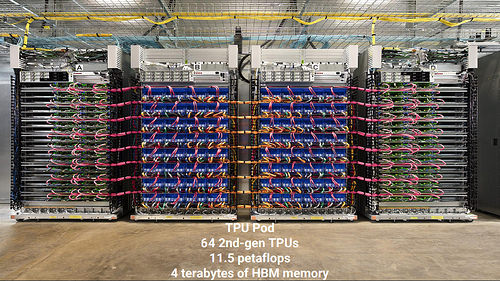

- Jeff Dean~ Single-core performance plateauing after decades of exponential growth just when deep learning is creating insatiable computation demands. More computational power needed. Deep learning is transforming how we design computers.

- Spoonofdarkness: A better comparison would be DC/OS community edition vs Kubernetes. Mesos aims to do one job well and I feel that it accomplished that (abstracting a cluster into a single execution environment across multiple hosts). DC/OS and Kubernetes both better fulfill an almost PaaS infrastructure.

- @copyconstruct: A few years ago, people used to pick Redis or SQS or NSQ or Beanstalk or RabbitMQ for queueing. These days Kafka is soon becoming the one message broker to rule them all. Wonder when the service mesh authors would build first class support for Kafka into the sidecar proxies ...

- Mark LaPedus: Still, the question is clear—How will 2018 play out? It’s difficult to predict the future, but shortages in the packaging supply chain are expected to linger, at least for the first part of 2018. For now, vendors are taking a wait and see approach. “I see a cool down period before the next wave”

- boulos: Right. Encryption in transit within a building at line rate (and more importantly low latency) is hard. To my knowledge, none of the providers do this currently, otherwise they'd be talking about it. This is us saying it's time to talk about it :). Disclosure: I work on Google Cloud.

- @davidgerard: PoW is a kludge, a terrible one. It was literally never an actually good idea. And Bitcoin had substantially recentralised after five years anyway. Now PoW's just a disgusting waste. And as other coins have shown, nobody actually cares about 51% as long as number go up.

- @swardley: Dear Google focusing on skirmishes around ML and Kubernetes ... you seem to be missing the war. Lambda is going to tear you apart ->

- @Iron_Spike: Reminder that the concept of "I made it all by myself with no help from anybody" is a complete f*cking myth unless you were born from the primordial void like a chaos god and lived alone naked in a cave in the wilderness from that point onward.

- Juanita Bawagan: The network shows that certain mammalian neurons have the shape and electrical properties that are well-suited for deep learning.

- Susan Fahrbach: My jaw just dropped. If you didn’t tell me I was looking at a mantis shrimp, I could have been looking at a honeybee brain. I had that feeling of, ‘Wow, that’s what I study—only it’s in a shrimp.’

- Onnar: A Google engineer once told me that we shouldn't expect any revolutions in V8 speed, only small incremental improvements. The reason is that JS is dynamic and objects are basically hash tables. You can only do so much.

- @TevinJeffrey: "The in-kernel proxy is capable of having two pods talk to each other directly from socket to socket without ever creating a single TCP packet" Mindblown!

- Yann LeCun: In the history of science and technology, the engineering artifacts have almost always preceded the theoretical understanding: the lens and the telescope preceded optics theory, the steam engine preceded thermodynamics, the airplane preceded flight aerodynamics, radio and data communication preceded information theory, the computer preceded computer science.

- @darylginn: He's making a database / He's sorting it twice / SELECT * FROM contacts WHERE behaviour = 'nice' / SQL Clause is coming to town

- rdoherty: My last job we were 100% hosted on Heroku and coming from managing hundreds of EC2 instances and databases via autoscaling, CF, Puppet and home-grown scripts, it was a dream to use Heroku. We simply didn't need any operations staff to manage infrastructure, maybe a few hours a week for 1 developer out of 11 to adjust or verify a few things.

- Benedict Evans: Amazon, then, is a machine to make a machine - it is a machine to make more Amazon. The opposite extreme might be Apple, which rather than radical decentralization looks more like an ASIC, with everything carefully structured and everyone in their box, which allows Apple to create certain kinds of new product with huge efficiency but makes it pretty hard to add new product lines indefinitely.

- Jakob: When you think it through, you realize that non-volatile memory does indeed have the potential to change if not everything, at least a rather significant portion of common assumptions used in software development. The split between volatile memory and non-volatile persistent storage that has been part of computers for the last half a century or more is not just a performance annoyance. It is also a rather useful functionality benefit.

- Maish Saidel-Keesing: I see three major trends in 2018. 1. AWS will continue to innovate at an amazing pace. There will be no slowing down, as much as industry professionals cannot believe they can keep up the pace, they will! 2. Docker as a product will no longer be relevant in the enterprise. Kubernetes is the wonder child of the year, and has eaten their lunch. 3. Openstack will continue to lose contributions from the globe, except for one demographic that will continue to grow: China. Interest will continue to decrease, I believe, and will wither out in the next 18 months

- Kevin Chang: The access bandwidth of DRAM-based computer memory has improved by a factor of 20x over the past two decades. Capacity increased 128x during the same period. But latency improved only 1.3x

- tgrech: "I don't need more than 1TB so no one else does". Meanwhile, we have a vast array of new games clocking in at 100GB+ with their 4K textures and/or DLC, making the XboneX's 1TB drive seem tiny after just a few AAA installs. We're a long way off being able to stream in game textures and the like over the cloud without catastrophic performance hits or a massive HDD cache/buffer.

- KirinDave: This is a really wrongheaded way to look at this. BTrees are themselves an adaptive function that can be used at the top or bottom of an index. We've just got other ones here being proposed that are much faster and smaller, when they work. And there is a methodology here to identify and train them, when they do. What's most amazing about this approach is that you've got a recursive model where the model is trained in layers that propose a submodel, or "an expert which has a better knowledge about certain keys."

- grawprog: When I was in school for Wildlife Management, we learned pretty much the same thing about hunting. More money gets made by outfitting companies, tour guides and local communities by having a lottery style big game hunt than an open season. It attracts a few people who are willing to spend upwards of $20000 just to shoot one animal as opposed to many hunters coming in and not spending as much overall. It also tends to be better for the survival of the population as a whole, though it does tend to lead to an overall weakening of the population, as only the biggest and strongest animals, that should be breeding, get removed.

- litirit: Yes, exchanges support real-time trading, but not every company has all their models running in modern paradigms. It's not uncommon to find companies that run multiple VMs for the sole purpose of... running Excel calculations. Yes, this is exactly what it sounds like. A server that has several multi-meg Excel files in memory with external APIs that plug values into cells and read outputs. As I said, within the grand scheme of things, for sports who still run on legacy models, this is a major bottleneck. Remember, many of these companies were started in the 90s, and that's when these models were created. The people who created them are usually swallowed up by the financial industry and now come with a hefty price tag. So the question for the business is "Do we spend millions recreating these proven models and run the risk of them failing? Or do we simply just add another VM?"

- Doug Stephens: Where today the retail market is largely divided by luxury, mid-tier, and discount, the coming decade will see the market more clearly bifurcate into two distinct retail approaches. The first will encompass an ever-swelling number of vertically-integrated brands that focus on serving individual consumers at scale and in a manner that best befits the brand. The second will be a new class of “experiential merchants” that use their physical stores and online assets to perfect the consumer experience across a category or categories of products.

- Mark Re: Heat Assisted Magnetic Recording (HAMR) is the next step [in hard drive technology] to enable us to increase the density of grains — or bit density. Current projections are that HAMR can achieve 5 Tbpsi (Terabits per square inch) on conventional HAMR media, and in the future will be able to achieve 10 Tbpsi or higher with bit patterned media (in which discrete dots are predefined on the media in regular, efficient, very dense patterns). These technologies will enable hard drives with capacities higher than 100 TB before 2030.
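apandhi's Coinhive numbers in the quotes above are easy to sanity check. Here's a minimal sketch that redoes the arithmetic using only the figures from that quote; the function and constant names are made up for illustration.

```python
# Figures quoted by apandhi; the names below are illustrative, not a real API.
HASHES_PER_SECOND = 30          # rough hash rate of one visitor's machine
PAUSE_SECONDS = 10              # mining time demanded before wifi access
USD_PER_MILLION_HASHES = 0.02   # quoted Coinhive payout (0.00009030 XMR)


def usd_per_visitor(rate=HASHES_PER_SECOND, seconds=PAUSE_SECONDS,
                    payout=USD_PER_MILLION_HASHES):
    """Revenue from one visitor mining for `seconds` at `rate` hashes/s."""
    hashes = rate * seconds
    return hashes * payout / 1_000_000


def visitors_needed(target_usd=1.0):
    """How many such visitors it takes to earn `target_usd`."""
    return target_usd / usd_per_visitor()


if __name__ == "__main__":
    print(f"per visitor: ${usd_per_visitor():.6f}")
    print(f"visitors per $1: {visitors_needed():,.0f}")
```

The result lines up with the quote: 300 hashes per visitor, a fraction of a cent each, and on the order of 166,667 logins to make a dollar.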

- Are you a programmer who doesn't think machine learning has anything to do with you? Jeff Dean says not so fast bucko. Learning opportunities are everywhere. You just have to look. Where do We Use Learning?

- Learning Should Be Used Throughout our Computing Systems. Traditional low-level systems code (operating systems, compilers, storage systems) does not make extensive use of machine learning today. This should change!

- Using reinforcement learning so the computer can figure out how to parallelize code and models on its own. In experiments, the machine beats human-designed parallelization.

- Learned Index Structures not Conventional Index Structures: 2.06X Speedup vs. Btree at 0.23X size. Huge space improvement in hash tables. ~2X space improvement over Bloom Filter at same false positive rate.

- Machine Learning for Improving Datacenter Efficiency: by applying DeepMind’s machine learning to our own Google data centres, we’ve managed to reduce the amount of energy we use for cooling by up to 40 percent.

- Computer Systems are Filled With Heuristics. Use learning Anywhere Heuristics are used To Make a Decision! Compilers: instruction scheduling, register allocation, loop nest parallelization strategies. Networking: TCP window size decisions, backoff for retransmits, data compression. Operating systems: process scheduling, buffer cache insertion/replacement, file system prefetching. Job scheduling systems: which tasks/VMs to co-locate on same machine, which tasks to pre-empt. ASIC design: physical circuit layout, test case selection.

- Anywhere We’ve Punted to a User-Tunable Performance Option! Many programs have huge numbers of tunable command-line flags, usually not changed from their defaults.

- Meta-learn everything: learning placement decisions, learning fast kernel implementations, learning optimization update rules, learning input preprocessing pipeline steps, learning activation functions, learning model architectures for specific device types, or that are fast, for inference on mobile device X, learning which pre-trained components to reuse.
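To make the learned index idea a little more concrete, here's a toy sketch. It is not the recursive model index from the paper Dean describes; it assumes a single least-squares line is good enough to guess where a numeric key sits in a sorted array, then uses the worst observed prediction error to bound a small binary search. The class and method names are hypothetical.

```python
import bisect


class LinearLearnedIndex:
    """Toy learned index: a linear model predicts a key's position in a
    sorted array, and a bounded binary search corrects the prediction."""

    def __init__(self, keys):
        self.keys = sorted(keys)
        n = len(self.keys)
        if n == 0:
            raise ValueError("need at least one key")
        # Least-squares fit of position ~ slope * key + intercept.
        mean_k = sum(self.keys) / n
        mean_p = (n - 1) / 2
        var = sum((k - mean_k) ** 2 for k in self.keys)
        cov = sum((k - mean_k) * (i - mean_p)
                  for i, k in enumerate(self.keys))
        self.slope = cov / var if var else 0.0
        self.intercept = mean_p - self.slope * mean_k
        # The worst prediction error over stored keys bounds every search.
        self.max_err = max(abs(i - self._predict(k))
                           for i, k in enumerate(self.keys))

    def _predict(self, key):
        """Predicted position, clamped to the valid index range."""
        pos = round(self.slope * key + self.intercept)
        return min(max(pos, 0), len(self.keys) - 1)

    def lookup(self, key):
        """Return the index of `key` in the sorted array, or None."""
        guess = self._predict(key)
        lo = max(guess - self.max_err, 0)
        hi = min(guess + self.max_err + 1, len(self.keys))
        i = bisect.bisect_left(self.keys, key, lo, hi)
        if i < len(self.keys) and self.keys[i] == key:
            return i
        return None


if __name__ == "__main__":
    index = LinearLearnedIndex([3, 8, 15, 16, 23, 42, 108, 1000])
    print(index.lookup(42), index.lookup(5), index.max_err)
```

The model here is two floats instead of a tree of nodes, which is the space argument in miniature. The catch is also visible: the more skewed the key distribution, the larger `max_err` gets and the less the model saves over a plain binary search, which is why the real approach stacks models in layers.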

- AWS Re:Invent and KubeCon: The Race to Invisible Infrastructure: AWS re:Invent was about consumption, while KubeCon was about our community of creators...I couldn’t help thinking the difference between both shows was that of consumption vs. creation. AWS re:Invent was about “this is what we built and how you use it to run faster” (i.e. consumption) vs. the theme at KubeCon which was “this is how we are building it and here’s where you can download the sources” (as a community of creators). Judging by the number of people at both shows, it seems like advanced technology consumers outnumber us creators by a factor of 10:1.

- Fun thread. What's your most controversial technical opinion? ssrobbi: I think many programmers make up architectural problems to solve to cope with their work getting dull. oldretard: By luring open source programmers into their gamified "social coder" playpen, GitHub did more damage to the future of software than anyone else within the last three decades. GhostBond: Agile is giant pile of 2015/2016 political-level bullshit. It's a skeezy marketing tactic - you take the drawbacks of your product, but you push them as advantages. Agile completely screws your ability as a dev to make any strategic decisions. rverghes: There is something wrong with functional programming. I don't know what it is. To me, functional programming seems to make a lot of sense. But pretty much no one produces useful public software written in a functional language. pts_: Hiring bros is burning money and that's my not even technical controversial opinion. steven_h: A lot of shit people put in application code should be in RDBMS stored procedures instead. yourparadigm: There is no such thing as a "business layer" in application programming. It's a false abstraction that is never fully realized.

- NIPS (Neural Information Processing Systems) 2017 — notes and thoughts. Key trends: Deep learning everywhere – pervasive across the other topics listed below. Lots of vision/image processing applications. Mostly CNNs and variations thereof. Two new developments: Capsule Networks and WaveNet...Meta-Learning and One-Shot learning are often mentioned in Robotics and RL context...Graphic models are back! Also, NIPS 2017 Notes.

- Good to see. Azure Functions Get More Scalable and Elastic: Scaling responsiveness improved significantly. The max delay reduced from 40 to 6 minutes. There are some improvements still to be desired: sub-minute latency is not yet reachable for similar scenarios. Here's what still can be done better: Scale faster initially; Do not scale down that fast after backlog goes to 0; Do not allow the backlog to grow without message spikes; Make scaling algorithms more open.

- More reinvent. My first AWS re:Invent. AWS re:invent 2017 recap.

- 40 Years of Microprocessor Trend Data: There are two interesting things to note: First, single-thread performance has kept increasing slightly, reflecting that this is still an important quantity. These increases were achieved with clever power management and dynamic clock frequency adjustments ("turbo"). Second, the number of cores is now increasing with a power law. Is this a surprise? Probably not: Transistor counts are increasing in accordance to Moore's Law, so one way of using them is in additional cores...How long can we keep Moore's Law going? My (careful) prediction is that we will see an increase in the number of cores in proportion to the number of transistors. Current top-of-the-line Haswell Xeon CPUs already offer up to 18 cores, so we are well in a regime where Amdahl's Law requires us to use parallel algorithms anyway. Upcoming Knights Landing Xeon Phis will be equipped with 72 cores, which is more than the 61 cores in the current Knights Corner Xeon Phis.
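The Amdahl's Law point is worth a quick calculation. This sketch uses the standard formula, speedup = 1 / ((1 - p) + p / n), with the 18 and 72 core counts from the excerpt; the 95% parallel fraction is an assumption picked purely for illustration.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Upper bound on speedup when `parallel_fraction` of the work
    scales perfectly across `cores` and the rest stays serial."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # 0.95 is an assumed parallel fraction, not a figure from the article.
    for cores in (18, 72):
        print(f"{cores} cores: {amdahl_speedup(0.95, cores):.2f}x")
```

With 95% of the work parallel, 18 cores buy roughly 9.7x and 72 cores roughly 15.8x, and no number of cores gets past 20x. More cores only pay off if the serial part of the code shrinks too.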

- Apparently we search for very boring things. These are the top 10 most popular Google searches of 2017.

- True, DynamoDB is not a RDBMS. The Short-Life of DynamoDB at Transposit: I found that DynamoDB promised scale by under-promising canonical database features. Amazon doesn’t clearly explain this tradeoff in their marketing materials...I took another look at our product and decided we would never have peak traffic like Amazon and never want to handle inconsistencies this way. In fact, our product had grown in complexity and what we actually needed were relational database features. Two months later, we had abandoned DynamoDB for a relational database...DynamoDB provides no means of performing a transactional change to persisted data...DynamoDB provides no means of ensuring the data it stores matches an expected structure...DynamoDB was never designed to handle multi-table queries...we adopted MySQL.

- WAR, really? Napoleon was the Best General Ever, and the Math Proves it: Among all generals, Napoleon had the highest WAR (16.679) by a large margin. In fact, the next highest performer, Julius Caesar (7.445 WAR), had less than half the WAR accumulated by Napoleon across his battles. Napoleon benefited from the large number of battles in which he led forces. Among his 43 listed battles, he won 38 and lost only 5. Napoleon overcame difficult odds in 17 of his victories, and commanded at a disadvantage in all 5 of his losses. No other general came close to Napoleon in total battles.

- Becoming huge is a random walk and then you're set. Got it. Youtube Views Predictor: Ultimately, it looks like past performance dictates future success. The best predictor of how well your channel will do is the number of views your previous videos have had. The suggestive nature of your thumbnail and the “clickbait-iness” of a video's title has marginal influence on the number of views a viewer can get.

- Can't get enough microservices? Here's davecremins/Microservices-Resources, links to excellent videos, articles, blogs, etc. on microservices architecture.

- Great deep dive on how AlphaZero taught itself chess. Have to say, my first thoughts were on what are the implications for warfare? The future is here – AlphaZero learns chess: If Karpov had been a chess engine, he might have been called AlphaZero. There is a relentless positional boa constrictor approach that is simply unheard of. Modern chess engines are focused on activity, and have special safeguards to avoid blocked positions as they have no understanding of them and often find themselves in a dead end before they realize it. AlphaZero has no such prejudices or issues, and seems to thrive on snuffing out the opponent’s play. It is singularly impressive, and what is astonishing is how it is able to also find tactics that the engines seem blind to.

- Videos from the Conference on Robot Learning are now available. As are the papers. It's all above my spherical processor cavity.

- We saw an 80% reduction in processing time with the new stack and a near 0% failure rate. PayPal uses Akka to implement Payouts, a PayPal product through which users can submit up to 15,000 payments in a single payment request. Learnings from Using a Reactive Platform – Akka/Squbs: use Akka to build the payment orchestration engine as a data flow pipeline...Real world entities also have a dynamic nature to them – the way in which they come to life over the lifetime of the application and how they interact with each other. Java unfortunately does not have a sufficiently nuanced abstraction for representing such changes in states of entities over time or their degree of concurrency. Instead, we have a CPU’s (hardware) model of the world – threads and processors, that has no equivalent in the real world. This results in an impedance mismatch that the programmer is left to reconcile...Data flow programming using streams and the actor model provide great conceptual models for reasoning about the dynamic aspects of a real world system. These models represent a paradigm shift once adopted. We can use them to represent concurrency using abstractions that are intuitive...adopting reactive streams and the actor model has enabled us to service our customers better by providing a more reliable and performant API.

- They can't stop the signal, Mal. They can never stop the signal. Norway switches off FM radio, but this station is defying government order.

- We estimate it to have saved 2–3 weeks of engineering time per Java service over the previous development process. A common theme, moving from one architecture to another while having to keep both going. The other common theme, JSON sucks for RPC. Building Services at Airbnb, Part 1: moving from a monolithic Rails service towards a SOA while building out new products and features is not without its challenges...At Airbnb, backend services are mostly written in Java using the Dropwizard web service framework...It lacks clearly defined and strongly typed service interface and data schema...A RESTful service framework does not provide service RPCs; developers have to spend a non-trivial amount of time writing both Ruby and Java clients for their services...JSON request/response data payloads are large and inefficient...Our approach is to keep the Dropwizard service framework, add customized Thrift service IDL (interface definition language) to the framework, switch the transport protocol from JSON-over-Http to Thrift-over-Http, and build tools to generate RPC clients in different languages...The new service IDL-driven Java service development process has been widely embraced by product teams since its release. It has dramatically increased development velocity.

- It's amazing what 3 programmers can do these days. It's also amazing how many different systems it takes to run a service. Dubsmash: Scaling To 200 Million Users With 3 Engineers: Not only has Dubsmash seen immense user growth (well over 350 million installations) but also dozens of features to service them...Dubsmash mobile applications are still written natively in Java on Android and Swift on iOS...The backend systems consist of 10 services (and rising …), written primarily in Python with a couple in Go and Node.js. The Python services are written using Django and Django REST Framework since we collected quite a lot of domain-knowledge over the years and Django has proven itself as a reliable tool for us to quickly and reliably build out new services and features. We recently started to add Flask to our Python stack to support smaller services running on AWS Lambda using the (awesome) Zappa framework...We started using Go as the language of choice for high-performance services a little over a year ago and both our authentication services as well as our search-service are running on Go today. In order to build out web applications we use React, Redux, Apollo, and GraphQL...Since we deployed our very first lines of Python code more than 2 years ago we are happy users of Heroku: it lets us focus on building features rather than maintaining infrastructure, has super-easy scaling capabilities...we moved this part of our processing over to Algolia a couple of months ago; this has proven to be a fantastic choice, letting us build search-related features with more confidence and speed...Memcached is used in front of most of the API endpoints to cache responses in order to speed up response times and reduce server-costs on our side...We since have moved to a multi-way handshake-like upload process that uses signed URLs vendored to the clients upon request so they can upload the files directly to S3. 
These files are then distributed, cached, and served back to other clients through Cloudfront...we rely on AWS Lambda [for notifications]...We recently started using Stream for building activity feeds in various forms and shapes...In order to accurately measure & track user behaviour [by] sending JSON blobs of events to AWS Kinesis from where we use Lambda & SQS to batch and process incoming events and then ingest them into Google BigQuery...On the backend side we started using Docker almost 2 years ago...We use Buildkite to run our tests on top of elastic EC2 workers (which get spun-up using Cloudformation as needed) and Quay.io to store our Docker images for local development and other later uses.

- Server-side I/O Performance: Node vs. PHP vs. Java vs. Go. Begins by giving a good intro into system calls, Blocking vs. Non-blocking Calls, and scheduling. Then it goes into how each language handles the various issues. The benchmark, which of course everyone found fault with, revealed: at high connection volume the per-connection overhead involved with spawning new processes and the additional memory associated with it in PHP+Apache seems to become a dominant factor and tanks PHP’s performance. Clearly, Go is the winner here, followed by Java, Node and finally PHP.

- Most people do not need supercomputers. Some estimates and common sense are often enough to get code running much faster. No, a supercomputer won’t make your code run faster: Powerful computers tend to be really good at parallelism...dumping your code on a supercomputer can even make things slower!...Sadly, I cannot think of many examples where the software automatically tries to run using all available silicon on your CPU...it is quite possible that a little bit of engineering can make the code run 10, 100 or 1000 times faster. So messing with a supercomputer could be entirely optional...I recommend making back-of-the-envelope computations. A processor can do billions of operations a second. How many operations are you doing, roughly? If you are doing a billion simple operations (like a billion multiplications) and it takes minutes, days or weeks, something is wrong and you can do much better. If you genuinely require millions of billions of operations, then you might need a supercomputer.
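The back-of-the-envelope check is simple enough to write down. A minimal sketch, assuming a single core sustains about a billion simple operations per second (the article's "billions of operations a second", rounded down); the function names and the 100x slack factor are made up for illustration.

```python
def estimated_seconds(operations, ops_per_second=1e9):
    """Rough lower bound on runtime for `operations` simple operations."""
    return operations / ops_per_second


def looks_too_slow(operations, measured_seconds,
                   ops_per_second=1e9, slack=100.0):
    """True when the measured runtime exceeds the estimate by more than
    `slack` times, a hint that engineering beats bigger hardware."""
    estimate = estimated_seconds(operations, ops_per_second)
    return measured_seconds > slack * estimate


if __name__ == "__main__":
    billion = 1e9
    print(estimated_seconds(billion))            # about one second
    print(looks_too_slow(billion, 5 * 60))       # five minutes: suspicious
    print(estimated_seconds(1e15) / 86_400)      # days for 10^15 operations
```

A billion multiplications should take around a second on one core, so minutes means something is wrong. A million billion operations works out to more than eleven days on that same core, and that's the point where more hardware starts to make sense.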

- 2017 Year in review: Better global networks: Part of what makes our data centers some of the most advanced facilities in the world is the idea of disaggregation — decoupling hardware from software and breaking down the technologies into their core components. This allows us to recombine the technologies in new ways or upgrade components as new versions become available, ultimately enabling more flexible, scalable, and efficient systems...While serving 100G traffic will help increase the capacity of our data centers, we still faced growing bandwidth demands from both internet-facing traffic and cross-data center traffic as people share richer content like video and 360 photos. We decided to split our internal and external traffic into two separate networks and optimize them individually, so that traffic between data centers wouldn't interfere with the reliability of our worldwide networks...We flew the second test flight of our Aquila solar-powered aircraft, building on the lessons we learned from the first flight in 2016 to further demonstrate the viability of high-altitude connectivity. We set new records in wireless data transfer, achieving data rates of 36 Gb/s with millimeter-wave technology and 80 Gb/s with optical cross-link technology between two terrestrial points 13 km apart. We demonstrated the first ground-to-air transmission at 16 Gb/s over 7 km.

- Internet protocols are changing: HTTP/2 (based on Google’s SPDY) was the first notable change — standardized in 2015, it multiplexes multiple requests onto one TCP connection, thereby avoiding the need to queue requests on the client without blocking each other...TLS 1.3 is just going through the final processes of standardization and is already supported by some implementations...QUIC is an attempt to address that by effectively rebuilding TCP semantics (along with some of HTTP/2’s stream model) on top of UDP...The newest change on the horizon is DOH — DNS over HTTP.

- Why are protocols so hard to change? Protocol designers are increasingly encountering problems where networks make assumptions about traffic. For example, TLS 1.3 has had a number of last-minute issues with middleboxes that assume it’s an older version of the protocol. gQUIC blacklists several networks that throttle UDP traffic, because they think that it’s harmful or low-priority traffic. When a protocol can’t evolve because deployments ‘freeze’ its extensibility points, we say it has ossified. TCP itself is a severe example of ossification; so many middleboxes do so many things to TCP — whether it’s blocking packets with TCP options that aren’t recognized, or ‘optimizing’ congestion control. It’s necessary to prevent ossification, to ensure that protocols can evolve to meet the needs of the Internet in the future.

- You might be interested in a free (requires bleeding some personal data) book on Chaos Engineering by Casey Rosenthal, Engineering Manager for the Chaos, Traffic, and Intuition Teams at Netflix.

- Flash is dying. You have a 10 year old product with over a million lines of ActionScript and $1 million in revenue per month. Your quest is to figure out what the heck to do. Post Mortem: The Death of Flash and Rewriting 1.4 Million Lines of Code: Haxe was undoubtedly the right choice of platform for our company. It actually performed better than we had expected at the outset and its ability to natively support our games across multiple devices is critical to the ongoing success of our business...The cross-compiling approach feels extremely strong. Not only does it give us access to native performance and features, it allows us to easily target additional devices...Haxe’s open source nature also means the language (or platform) does less directly for the developer than a system like Unity.

- Nicely done. Convolutional Neural Networks (CNNs) explained.

- Did you know there are 35 PaaS providers? 35 Leading PaaS Providers Offering Built-In Infrastructure and Scalability. Pricing and features are all over the map. Some are WYSIWYG, which is distinctive.

- Here's a good explanation of Aeron, the ultimate example of best engineering practices for high-performance messaging. Aeron — low latency transport protocol: Aeron uses unidirectional connections. If you need to send requests and receive responses, you should use two connections...to test Aeron with the lowest latency, you may start from LowLatencyMediaDriver...Garbage-free in a steady state, Applies Smart Batching in the message path, Lock-free algorithms in the message path, Non-blocking IO in the message path, No exceptional cases in the message path, Apply the Single Writer Principle, Prefers unshared state, Avoids unnecessary data copies.
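
Of the practices in that list, smart batching is probably the easiest to show in a few lines. The sketch below is plain Python and has nothing to do with Aeron's actual API; `drain_batch` is a hypothetical helper. The idea, as generally described, is that the sender takes whatever has already queued up at the moment it is free to send, up to a cap, instead of waiting on a timer for a batch to fill.

```python
from collections import deque


def drain_batch(queue, max_batch):
    """Take everything currently queued, up to max_batch, without waiting for more."""
    batch = []
    while queue and len(batch) < max_batch:
        batch.append(queue.popleft())
    return batch


pending = deque(["m1", "m2", "m3", "m4", "m5"])

# Under a burst, batches fill up to the cap.
first = drain_batch(pending, max_batch=3)

# Under light load, the batch is simply whatever is there, so latency stays low.
second = drain_batch(pending, max_batch=3)
```

The batch size adapts to load on its own: a quiet system sends single messages immediately, while a busy one amortizes the per-send cost over larger batches.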

- Fascinating collection of old yet still relevant Technology books from Project Gutenberg. A History of the Growth of the Steam-Engine., 2d rev. ed. A Study of American Beers and Ales.

- Why run your own FTP servers anymore? IMDb Data – Now available in Amazon S3. And as happens when there's a change, functionality is reduced in the process. Marcel Korpel: So, in short, it seems that there is less data (that is easier to parse, I assume) and you actually have to pay and jump through hoops setting up (an) account(s)* to an AWS and S3 to be able to access the bulk data using an API I have to learn using. If I am correct, you have to pay for the bandwidth, so are there even diff files provided to lessen that burden, like on the FTP sites? All in all, I am sad to say this doesn't sound as an improvement to me. TheObviousOne: This seems to negate the bidirectional, symbiotic nature of IMDb. It seems to purposefully ignore that IMDb wouldn't be the "same" if it wouldn't be for both the contributors and for ... IMDb itself. One without the other(s) and the IMDb data (what "IMDb" is at its core) of today would be many times of "poorer" quality.

- If you're interested in the history behind power meters you'll like Amp Hour #371 – An Interview With Joe Bamberg. Surprisingly rich.

- 3 Hard Lessons from Scaling Continuous Deployment to a Monolith with 70+ Engineers: 1) Continuous Deployment is much harder with lots of engineers and code 2) Use trains to manage many changes 3) Kill release teams — democratize deployment workflows instead

- How I built a replacement for Heroku and cut my platform costs by 4X: It didn’t take too long until I realized I was paying $100+ to Heroku. It just didn’t make sense. Some of my reader bots that require 24hr availability consume only 128MB of RAM...Since I had no luck in finding a good replacement for Heroku, I decided to build one...CaptainDuckDuck is written in NodeJS. But it’s not the NodeJS that your end users deal with. The main engines that Captain uses under the hood are nginx and docker.

- Yep, only end-to-end validation and flow control count IRL. Day 13 - Half-Dead TCP Connections and Why Heartbeats Matter.
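
For a feel of what application-level heartbeats buy you, here is a minimal sketch in Python. It is not taken from the linked post: the `HeartbeatMonitor` class, the 5 second interval, and the three-missed-beats threshold are all assumptions chosen for illustration. The point is that the application decides a peer is gone when it stops hearing from it, rather than trusting the TCP socket to report the failure.

```python
import time


class HeartbeatMonitor:
    """Tracks when a peer was last heard from and flags it dead after a timeout."""

    def __init__(self, interval=5.0, max_missed=3, clock=time.monotonic):
        self.interval = interval      # expected seconds between heartbeats
        self.max_missed = max_missed  # missed beats tolerated before giving up
        self.clock = clock            # injectable so the logic can be tested
        self.last_seen = clock()

    def record_heartbeat(self):
        """Call whenever a heartbeat (or any traffic) arrives from the peer."""
        self.last_seen = self.clock()

    def missed(self):
        """Number of whole heartbeat intervals that have passed in silence."""
        return int((self.clock() - self.last_seen) // self.interval)

    def is_alive(self):
        return self.missed() < self.max_missed


# Usage with a fake clock so the example runs instantly.
now = [0.0]
monitor = HeartbeatMonitor(interval=5.0, max_missed=3, clock=lambda: now[0])
now[0] = 16.0  # 16 seconds of silence: three intervals missed
if not monitor.is_alive():
    print("peer presumed dead; close the socket and reconnect")
```

A half-dead connection looks perfectly healthy to a socket that is only waiting to read, which is why the timeout has to live in the application.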

- Metaparticle: a standard library for cloud native applications on Kubernetes. The goals of the Metaparticle project are to democratize the development of distributed systems. Metaparticle achieves this by providing simple, but powerful building blocks, built on top of containers and Kubernetes.

- githubsaturn/captainduckduck: Easiest app/database deployment platform and webserver package for your NodeJS, Python, Java applications. No Docker, nginx knowledge required!

- Fine-Grained Object Recognition and Zero-Shot Learning in Remote Sensing Imagery: Fine-grained object recognition that aims to identify the type of an object among a large number of subcategories is an emerging application with the increasing resolution that exposes new details in image data...The experiments show that the proposed model achieves 14.3% recognition accuracy for the classes with no training examples, which is significantly better than a random guess accuracy of 6.3% for 16 test classes, and three other ZSL algorithms.
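
The random-guess baseline in that abstract is easy to check: with 16 equally likely test classes, a uniform guess is right 1/16 of the time, or 6.25%, which the abstract rounds to 6.3%. A quick sketch of the arithmetic, assuming uniform guessing (the variable names are mine, the two numbers come from the abstract):

```python
# Chance accuracy for a uniform random guess over equally likely classes.
num_test_classes = 16      # from the abstract
reported_accuracy = 0.143  # from the abstract

chance = 1 / num_test_classes
lift = reported_accuracy / chance  # how many times better than guessing

print(f"chance = {chance:.4f}, lift over chance = {lift:.2f}x")
```

So the reported zero-shot result is a bit more than twice as good as guessing, which is meaningful for classes the model never saw, but still far from reliable.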

- SAP HANA adoption of non-volatile memory: In this paper, we present the early adoption of Non-Volatile Memory within the SAP HANA Database, from the architectural and technical angles. We discuss our architectural choices, dive deeper into a few challenges of the NVRAM integration and their solutions, and share our experimental results. As we present our solutions for the NVRAM integration, we also give, as a basis, a detailed description of the relevant HANA internals.

- Adversarial Examples that Fool Detectors: In this paper, we demonstrate a construction that successfully fools two standard detectors, Faster RCNN and YOLO. The existence of such examples is surprising, as attacking a classifier is very different from attacking a detector, and that the structure of detectors - which must search for their own bounding box, and which cannot estimate that box very accurately - makes it quite likely that adversarial patterns are strongly disrupted.