Stuff The Internet Says On Scalability For February 3rd, 2017

Hey, it's HighScalability time:

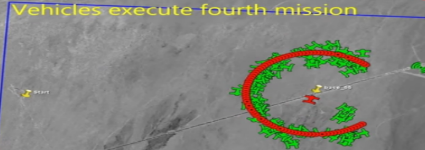

We live in interesting times. F/A-18 Super Hornets Launch drone swarm.

If you like this sort of Stuff then please support me on Patreon.

- 100 billion: words needed to train large networks; 73,653: hard drives at Backblaze; 300 GB/hour: raw 4K footage; 1993: server running without rebooting; 64%: of money bet is on the Patriots; 950,000: insect species; 374,000: people employed in solar energy; 10: Iridium NEXT satellites launched by SpaceX; $1 billion: Pokémon Go revenue; 1.2 billion: daily active Facebook users; $7.17 billion: Apple services revenue; 45%: invest in private cloud this year.

- Quotable Quotes:

- @kevinmarks: #msvsummit @varungyan: Google's scale is about 10^10 RPCs per second in our microservices

- language: "Order and chaos are not properties of things, but relations of an observer to something observed - the ability for an observer to distinguish or specify pattern."

- general_ai: Doing anything large on a machine without CUDA is a fool's errand these days. Get a GTX1080 or if you're not budget constrained, get a Pascal-based Titan. I work in this field, and I would not be able to do my job without GPUs -- as simple as that. You get 5-10x speedup right off the bat, sometimes more. A very good return on $600, if you ask me.

- Al-Khwarizmi: Maybe I'm just not good at it and I'm a bit bitter, but my feeling is that this DL [deep learning] revolution is turning research in my area from a battle of brain power and ingenuity to a battle of GPU power and economic means

- Space Rogue: pcaps or it didn't happen

- LtAramaki: Everyone thinks they understand SOLID, and when they discuss it with other people who say they understand SOLID, they think the other party doesn't understand SOLID. Take it as you will. I call this the REST phenomenon.

- evaryont: I don’t see this as them [Google] trying to “seize” a corner of the web, but rather Google taking its paranoia to the next level. If they can’t ever trust anyone in the system [Certificate Authority], why not create your own copy of the system that no one else can use? Being able to have perfect security from top to bottom, similar to their recently announced custom chips they put in every one of their servers.

- David Press: The benefits of SDN are less about latency and uptime and more about flexibility and programmability.

- Benedict Evans: Web 2.0 was followed not by anything one could call 3.0 but rather a basic platform shift...one can see the rise of machine learning as a fundamental new enabling technology...one can see quite a lot of hardware building blocks for augmented reality glasses...so the things that are emerging at the end of the mobile S-Curve might also be the beginning of the next curve.

- @kevinmarks: 20% of people have 0 microservices in production - the rest are already running microservices

- @joeerl: You've got to be joking - should be 1M clients/server at least

- SikhGamer: We considered using RabbitMQ at work but ultimately opted for SNS and SQS instead. Main reason being that we cared about delivering value and functionality over the cost of managing yet another resource. And the problems of reliability become Amazon's problem. Not ours.

- DataStax: A firewall is the simplest, most effective means to secure a database. Sounds complicated, but it’s so easy a government agent could do it.

- @danielbryantuk: "If you think good architecture is expensive, try bad architecture" @KevlinHenney #OOP2017

- Peter Dizikes: The new method [wisdom from crowds] is simple. For a given question, people are asked two things: What they think the right answer is, and what they think popular opinion will be. The variation between the two aggregate responses indicates the correct answer.
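The quote compresses the method a lot. As the researchers describe it, the rule is to pick the "surprisingly popular" answer: the one whose actual share of votes most exceeds the share respondents predicted for it. Here's a minimal Python sketch, assuming each respondent supplies one answer plus a predicted distribution over all answers; the function name, input format, and survey numbers are illustrative, not taken from the study.

```python
from collections import Counter


def surprisingly_popular(answers, predictions):
    """Return the answer whose actual vote share most exceeds its predicted share.

    answers: each respondent's own answer, e.g. ["yes", "no", ...]
    predictions: one dict per respondent, mapping each answer to the fraction
        of respondents they expect to give that answer.
    """
    n = len(answers)
    actual = {a: c / n for a, c in Counter(answers).items()}
    options = set(actual) | {a for p in predictions for a in p}
    predicted = {
        a: sum(p.get(a, 0.0) for p in predictions) / len(predictions)
        for a in options
    }
    # The "surprise" is how much more popular an answer is than people expected.
    return max(options, key=lambda a: actual.get(a, 0.0) - predicted[a])


# "Is Philadelphia the capital of Pennsylvania?" (it isn't; Harrisburg is).
# Illustrative, made-up numbers: most say yes, but everyone expects "yes" to be
# even more popular than it turns out to be.
answers = ["yes"] * 6 + ["no"] * 4
predictions = [{"yes": 0.9, "no": 0.1}] * 6 + [{"yes": 0.8, "no": 0.2}] * 4
print(surprisingly_popular(answers, predictions))  # no
```

Plain majority voting would return "yes" here; the extra "what will others say?" question is what exposes the informed minority.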

- Philip Ball: Looked at this way, life can be considered as a computation that aims to optimize the storage and use of meaningful information. So living organisms can be regarded as entities that attune to their environment by using information to harvest energy and evade equilibrium.

- Ed Sutton: The study shows the effectiveness of personality targeting by showing that marketers can attract up to 63% more clicks and up to 1400% more conversions in real-life advertising campaigns on Facebook when matching products and marketing messages to consumers’ personality characteristics.

- Pete Trbovitch: Today’s mobile app ecosystem most closely resembles the PC shareware era. Apps that are offered free to download can carry an ad-supported income model, paid extended content, or simply bonus features to make the game easier to beat. The bar to entry is as low as it’s ever been

- @BenedictEvans: Global mainframe capacity went up 4-5x from 2000-2010. ‘Dead’ technology can have a very long half-life

- @searls: I keep seeing teams spend months building custom infrastructure that could be done in 20 minutes with Heroku, Github, Travis. Please stop.

- @mdudas: Starbucks says popularity of its mobile app has created long lines at pickup counters & led to drop in transactions.

- @cdixon: Software eats networking: Nicira (NSX) will generate $1B revenue for VMWare this year

- raubitsj: With respect to vibration: we [Google] found vibration caused by adjacent drives in some of our earlier drive chassis could cause off-track writes. This will cause future reads to the data to return uncorrectable read errors. Based on Backblaze's methodology they will likely call out these drives as failed based on SMART or RAID/ReedSolomon sync errors.

- Well, this is different. GitLab live streamed its handling of the GitLab.com Database Incident - 2017/01/31. It wasn't what you would call riveting, but that's an A+++ for transparency. They even took audience questions during the process. What went wrong? The snippets feature was DDoSed, which generated a large increase in writes to the database, so the replicas could not keep up with replication. The WAL (write-ahead log) segments a lagging replica needed had already been removed from the production primary, so replication could not simply resume. They started copying again from a known good state, and then things went sideways. They were lucky to have a 6-hour-old backup, and that's what they were restoring to. Sh*te happens; how the team handled it and their knowledge of the system should give users confidence going forward.
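The replication failure mode in that story is easy to model: a primary keeps only a bounded window of WAL segments, so a replica that falls far enough behind can no longer stream the segments it needs and has to be rebuilt from a base backup. In PostgreSQL of that era the window was governed by settings such as `wal_keep_segments` (or by replication slots). The toy Python model below is a sketch of that logic only, under those assumptions; `Primary` and `replica_action` are hypothetical names and nothing here talks to a real database.

```python
from dataclasses import dataclass


@dataclass
class Primary:
    """Toy primary that retains only the newest `keep_segments` WAL segments."""
    keep_segments: int
    latest: int = 0  # number of the newest WAL segment written (segments start at 1)

    def write(self, segments: int) -> None:
        self.latest += segments

    def oldest_retained(self) -> int:
        return max(1, self.latest - self.keep_segments + 1)


def replica_action(primary: Primary, replayed_up_to: int) -> str:
    """Decide what a replica that has replayed segments 1..replayed_up_to must do."""
    needed = replayed_up_to + 1
    if needed >= primary.oldest_retained():
        return "stream"  # the next segment is still available (or not written yet)
    return "resync from base backup"  # the segments it needs were already recycled


primary = Primary(keep_segments=64)
primary.write(10)
print(replica_action(primary, 10))   # stream
primary.write(500)                   # a sudden burst of writes, e.g. from abuse
print(replica_action(primary, 10))   # resync from base backup
```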

- OK, this turned out to be false, but nobody doubted it could be true, or that this is where things are going. Hotel ransomed by hackers as guests locked out of rooms.

- Interesting use of Lambda by Airbnb. StreamAlert: Real-time Data Analysis and Alerting. There's an evolution from compiling software using libraries that must be in the source tree, to running software that requires downloading lots of packages from a repository, to now using services that require many other services to be available in the environment for a complex pipeline to run. StreamAlert doesn't just use Lambda; it also uses Kinesis, SNS, S3, CloudWatch, KMS, and IAM. Each step is both a deeper level of lock-in and an enabler of richer functionality. What does StreamAlert do? It's a real-time data analysis framework with point-in-time alerting. StreamAlert is unique in that it’s serverless, scalable to TBs/hour, infrastructure deployment is automated and it’s secure by default.
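To make the Lambda piece concrete: a Lambda function subscribed to a Kinesis stream receives batches of records whose payloads arrive base64-encoded under `Records[i]["kinesis"]["data"]`. The sketch below decodes each record as JSON and runs it through a list of rule functions. It is not StreamAlert's actual code or rule API; the two rules (written against CloudTrail-style fields) are hypothetical examples, and instead of publishing alerts to SNS it simply returns them.

```python
import base64
import json


# Hypothetical rules: each takes a decoded log record and returns True to alert.
def root_console_login(record):
    return (record.get("eventName") == "ConsoleLogin"
            and record.get("userIdentity", {}).get("type") == "Root")


def cloudtrail_logging_stopped(record):
    return record.get("eventName") == "StopLogging"


RULES = [root_console_login, cloudtrail_logging_stopped]


def handler(event, context):
    """Lambda entry point for a Kinesis-triggered function."""
    alerts = []
    for rec in event.get("Records", []):
        payload = json.loads(base64.b64decode(rec["kinesis"]["data"]))
        for rule in RULES:
            if rule(payload):
                alerts.append({"rule": rule.__name__, "record": payload})
    # A real pipeline would publish these (e.g. to SNS); the sketch just returns them.
    return alerts


def kinesis_event(*records):
    """Build a minimal Kinesis-style test event from plain dicts."""
    return {"Records": [
        {"kinesis": {"data": base64.b64encode(json.dumps(r).encode()).decode()}}
        for r in records
    ]}


event = kinesis_event(
    {"eventName": "ConsoleLogin", "userIdentity": {"type": "Root"}},
    {"eventName": "DescribeInstances", "userIdentity": {"type": "IAMUser"}},
)
print([a["rule"] for a in handler(event, None)])  # ['root_console_login']
```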

- Cloud Bottlenecks: How Pokémon Go (and other game dev teams) caught them all: as developers have found with just about every networked platform in the past, having all that capacity doesn't matter if the connectivity isn't there...When it came to that first big spike in adoption, Keslin said, “Google didn’t seem to notice—the game probably doubled the amount of data it was handling"...Some of the bottlenecks were in Niantic’s code, but “we also had problems with a couple of open source libraries that we never anticipated—those were hardest to find...ran into some problems with the cloud infrastructure; the container engine had some subsystems that had never been tested at that scale. It also had a couple of issues with the networking stack...The issues that came up were more about how mobile carriers around the world have different ways of marketing product...We were lucky, too. We had designed the system to scale, we just hadn’t tested it. Fortunately, we’d designed the architecture to scale robustly.

- When a bloated text format is the problem, compression is usually the answer. How eBay’s Shopping Cart used compression techniques to solve network I/O bottlenecks. When JSON BLOBs caused cache misses, which in turn caused shopping cart recomputes, the solution was compression using LZ4_HIGH. Results: oplog write rates went from 150GB/hour to 11GB/hour, roughly a 93% drop (about 13.6x smaller); average document size went from 32KB to 5KB, roughly an 84% drop (about 6.4x smaller); and they even saw some improvements in eventual service response times. See also Beating JSON performance with Protobuf: performed up to 6 times faster than JSON. See also Evolving MySQL Compression - Part 2.
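Why does this work so well? Serialized JSON repeats the same keys and similar values over and over, which is exactly what dictionary-based compressors exploit. eBay used LZ4; Python's standard library doesn't ship LZ4, so this sketch swaps in `zlib` to show the round trip and measure the ratio on a made-up cart document. The cart shape is hypothetical, and the ratio you get will depend entirely on your real data.

```python
import json
import zlib


def pack(doc: dict) -> bytes:
    """Serialize compactly, then compress (zlib here as a stand-in for LZ4)."""
    raw = json.dumps(doc, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw, 6)


def unpack(blob: bytes) -> dict:
    return json.loads(zlib.decompress(blob).decode("utf-8"))


# Hypothetical cart: many line items with the same keys and similar values.
cart = {"cartId": "c-123", "items": [
    {"sku": f"SKU-{i:05d}", "title": "Wireless mouse", "qty": 1,
     "price": "19.99", "currency": "USD", "seller": "example-seller"}
    for i in range(200)
]}

raw_size = len(json.dumps(cart, separators=(",", ":")).encode("utf-8"))
blob = pack(cart)
assert unpack(blob) == cart  # lossless round trip
print(f"{raw_size} bytes -> {len(blob)} bytes ({raw_size / len(blob):.1f}x smaller)")
```

LZ4 trades some compression ratio for much faster compression and decompression than zlib, which is usually the right trade on a hot request path.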

- Google's magnum opus, Site Reliability Engineering, is now available for free. Thanks Google.

- Great trip through the low level details of source-level debugging of native code in gdb. Debugging with the natives, part 2.

- Two VC models have emerged. The Cloudcast #287 - Venture Capital and the Cloud Native Landscape. 1: Make enterprise products on top of open source as applications: deliver end-to-end solutions, make it very easy to embrace the technology, innovate up the stack, get closer to end users. 2: Operate open source as a service, which makes the most sense for products that are complicated and difficult to manage. Plus: there's a somewhat unconvincing argument for why your service won't get Sherlocked, with Amazon implementing its own version of the service you built on Amazon: build services that delight developers and deliver highly differentiated experiences.

- Videos from Disaggregate: Networking are now available. This was an invite only conference on the topic of decoupling hardware and software so hardware and software can develop independently of each other at their own rate. The goal is to build more efficient, flexible, and scalable networking solutions. Some of the talks: Networking at Facebook, Managing a Hybrid Network, Wedge100 and Backpack: From the Leaf to the Spine, Why Forwarding Planes will be Programmable: New Paradigms and Use Cases in Networking, Open Networking Landscape Overview, Disaggregating The Network OS With Ubuntu, OpenBMC: An Open Software Framework for BMC, Experiences Deploying Disaggregated Solutions, The Disaggregated Network Stack.

- ShmooCon 2017 (open discussions of critical infosec issues) videos are now available. You might like: Space Rogue - 35 Years of Cyberwar: The Squirrels are Winning.

- For an amazing low-level tour of IPv6, Chip Overclock is your Virgil. Buried Treasure. It's rich in practical detail and history. Result: My testbed has convinced me that, with just a little work, it is possible to deploy IPv6 into a server-side product (for example, in a data center), and communicate with that server using either new IPv6 clients or legacy IPv4 clients in the field.

- Will externalizing program logic become a thing? AWS has Step Functions. Then there's Netflix’s Open Source Orchestrator, Conductor, May Prove the Limits of Ordinary Scalability: Pub/sub model worked for the simplest of the flows, but quickly highlighted some of the issues associated with the approach. In their list, they said process flows tend to be “embedded” within applications, and that the assumptions around their workflow, such as SLAs, tend to be tightly coupled and non-adaptable. Finally, there wasn’t an easy way to monitor their progress...So Netflix's approach with Conductor takes the next step...The state of the workflow is determined centrally, by Conductor’s Decider, by comparing each worker’s progress against what it calls the “blueprint.”...Netflix Conductor is the latest evidence that, for computing processes to be more effective and viable at huge scales, they have to behave less and less like anything a rational businessperson would expect
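The central-decider idea is simple to sketch. Workers never decide what runs next; a decider compares what has finished against the workflow's blueprint and hands back whatever is now unblocked. The blueprint format (task name mapped to the tasks it depends on) and the `decide()` function are hypothetical illustrations, not Conductor's workflow definition format or API.

```python
def decide(blueprint, completed, in_progress):
    """Return the tasks that are ready to schedule, in blueprint order.

    blueprint: dict mapping task name -> list of task names it depends on.
    completed / in_progress: sets of task names reported by workers.
    """
    ready = []
    for task, deps in blueprint.items():
        if task in completed or task in in_progress:
            continue
        if all(dep in completed for dep in deps):
            ready.append(task)
    return ready


def is_done(blueprint, completed):
    return all(task in completed for task in blueprint)


# Hypothetical media-encoding workflow.
blueprint = {
    "ingest": [],
    "transcode": ["ingest"],
    "thumbnails": ["ingest"],
    "publish": ["transcode", "thumbnails"],
}
print(decide(blueprint, set(), set()))                             # ['ingest']
print(decide(blueprint, {"ingest"}, set()))                        # ['transcode', 'thumbnails']
print(decide(blueprint, {"ingest", "transcode"}, {"thumbnails"}))  # []
```

Keeping the state in one place is also what makes progress easy to monitor, one of the pain points the article lists for the pub/sub approach.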

- Is it possible to hit A million requests per second with Python?: Probably not until recently...The Python community is doing a lot of work around performance lately. CPython 3.6 boosted overall interpreter performance with a new dictionary implementation. CPython 3.7 is going to be even faster, thanks to the introduction of a faster call convention and dictionary lookup caches.

- If you've ever wanted to build a parser, here's a very good overview. Parsing absolutely anything in JavaScript using Earley algorithm.
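The linked article works in JavaScript; to keep the examples here in one language, this is a compact Earley recognizer in Python. It only answers "can this token list be derived from the grammar?" (no parse tree), and to stay short it assumes the grammar has no empty productions. Left recursion, which trips up naive recursive-descent parsers, is handled naturally. The grammar encoding is an assumption of this sketch.

```python
def earley_recognize(grammar, start, tokens):
    """Return True if `tokens` can be derived from `start`.

    grammar: dict mapping each nonterminal to a list of productions (tuples of
    symbols). Any symbol that is not a key of `grammar` is a terminal matched
    literally against the tokens. Assumes no empty (epsilon) productions.
    """
    # An item is (lhs, rhs, dot, origin): "lhs -> rhs with `dot` symbols matched,
    # started at token position `origin`".
    chart = [set() for _ in range(len(tokens) + 1)]
    for rhs in grammar[start]:
        chart[0].add((start, rhs, 0, 0))

    for i in range(len(tokens) + 1):
        agenda = list(chart[i])
        while agenda:
            lhs, rhs, dot, origin = agenda.pop()
            if dot < len(rhs):
                sym = rhs[dot]
                if sym in grammar:
                    # Predict: expect any production of this nonterminal here.
                    new_items = [(sym, prod, 0, i) for prod in grammar[sym]]
                else:
                    # Scan: if the next token matches the terminal, move past it.
                    if i < len(tokens) and tokens[i] == sym:
                        chart[i + 1].add((lhs, rhs, dot + 1, origin))
                    new_items = []
            else:
                # Complete: advance every item at `origin` that was waiting on lhs.
                new_items = [
                    (plhs, prhs, pdot + 1, porigin)
                    for plhs, prhs, pdot, porigin in chart[origin]
                    if pdot < len(prhs) and prhs[pdot] == lhs
                ]
            for item in new_items:
                if item not in chart[i]:
                    chart[i].add(item)
                    agenda.append(item)

    return any(lhs == start and dot == len(rhs) and origin == 0
               for lhs, rhs, dot, origin in chart[-1])


# Left-recursive arithmetic grammar; "n" stands for any number token.
GRAMMAR = {
    "E": [("E", "+", "T"), ("T",)],
    "T": [("T", "*", "F"), ("F",)],
    "F": [("(", "E", ")"), ("n",)],
}
print(earley_recognize(GRAMMAR, "E", ["n", "+", "n", "*", "n"]))  # True
print(earley_recognize(GRAMMAR, "E", ["n", "+"]))                 # False
```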

- Please observe a moment of silence. The Linux Kernel Archives is Shutting down FTP services. Parts of the old Internet are falling away, like the famed Ringling Brothers Circus closing because everyone has gone digital.

- Jürgen Schmidhuber: Given such raw computational power, I expect huge (by today’s standards) recurrent neural networks on dedicated hardware to simultaneously perceive and analyse an immense number of multimodal data streams (speech, texts, video, many other modalities) from many sources, learning to correlate all those inputs and use the extracted information to achieve a myriad of commercial and non-commercial goals. Those RNNs will continually and quickly learn new skills on top of those they already know. This should have innumerable applications, although I am not even sure whether the word “application” still makes sense here. This will change society in innumerable ways. What will be the cumulative effect of all those mutually interacting changes on our civilisation, which will depend on machine learning in so many ways? In 2012, I tried to illustrate how hard it is to answer such questions: A single human predicting the future of humankind is like a single neuron predicting what its brain will do.

- Hacking security protocols is not for the timid. Here's Facebook on Building Zero protocol for fast, secure mobile connections. Problem: in emerging markets like India, people would spend 600ms (75th percentile) trying to establish a TLS connection. Solution: build a zero round-trip TLS 1.2 protocol. Method: lots of complicated stuff. Result: connection establishment time reduced by 41 percent at the 75th percentile, and an overall 2 percent reduction in request time.

- Do you really need a distributed cache? Estimating the cache efficiency using big data. By doing a smart analysis, Allegro found: there is no need to apply a distributed cache, as it makes the overall system architecture more complex and more difficult to maintain. Based on data, we realised that a simple local cache can significantly reduce the number of Cassandra queries.
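The core of that kind of analysis can be approximated by replaying a log of request keys through a simulated cache and measuring the hit ratio at different sizes. This is a generic LRU simulation in Python, not Allegro's method (they analyzed their own production data at scale); the request list is made up, and the sketch assumes one cache sees the whole replayed stream, so a per-instance local cache will do worse if traffic is spread across many instances.

```python
from collections import OrderedDict


def lru_hit_ratio(requests, capacity):
    """Replay request keys through an LRU cache of `capacity` entries."""
    cache = OrderedDict()
    hits = 0
    for key in requests:
        if key in cache:
            hits += 1
            cache.move_to_end(key)         # mark as most recently used
        else:
            cache[key] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used key
    return hits / len(requests) if requests else 0.0


requests = ["a", "b", "a", "c", "a", "b"]  # made-up access log
for size in (1, 2, 3):
    print(size, lru_hit_ratio(requests, size))
```

If the hit ratio is already high at a size that fits comfortably in one process's memory, that's evidence a distributed cache would add complexity without much benefit.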

- Neural Network Learns The Physics of Fluids and Smoke. Watch a neural network predict how the behaviour of a smoke puff will change in time. We already know how to do this, but the neural network learned how to do it and the run time of the algorithm is a few milliseconds. With traditional techniques it normally takes several minutes.

- LtAramaki: In a nutshell, the author explores what happens to our system and API complexity when we indiscriminately shove CQRS end-to-end in our architecture, and it's not pretty. Things like not seeing other people's writes in your reads, or even not seeing your own writes in your own reads. The author proposes we live with the complexity, because end-to-end CQRS is now the only valid way of doing everything, and gives us some band-aids we can slap on APIs to make things work sort of. The bandaids basically are about making clients participate in our implementation details by passing things like "domain state version" numbers with their requests, as if a client calling our API really cares which silver bullet architecture we swear by this week. But the solution I'd propose differs from the author, and it's: don't shove CQRS everywhere, and relax your consistency only as much as you need in order to implement your domain within known parameters of volume and efficiency. Or in simpler words, we shouldn't architect a project that will be used by three people once a month, using architecture intended to service millions of requests a second.
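For readers who haven't seen the band-aid being criticized, here is roughly what it looks like: the write side hands the client a version number, and the client passes it back on reads so the read model can wait (or refuse) until it has caught up. A minimal threaded Python sketch; `Projection` and `CommandSide` are hypothetical names, and propagation is triggered by hand rather than by a real event bus. It also illustrates the commenter's objection: the client now has to carry an implementation detail around.

```python
import threading


class Projection:
    """Toy read model that the write side updates asynchronously."""

    def __init__(self):
        self._version = 0
        self._data = {}
        self._cond = threading.Condition()

    def apply(self, version, key, value):
        with self._cond:
            self._data[key] = value
            self._version = version
            self._cond.notify_all()  # wake readers waiting for this version

    def read(self, key, min_version=0, timeout=1.0):
        """Read once the projection has caught up to min_version, else time out."""
        with self._cond:
            caught_up = self._cond.wait_for(lambda: self._version >= min_version, timeout)
            if not caught_up:
                raise TimeoutError(
                    f"read model at v{self._version}, client needs v{min_version}")
            return self._data.get(key)


class CommandSide:
    """Toy write side: accepts commands now, propagates them to the projection later."""

    def __init__(self, projection):
        self.version = 0
        self.pending = []
        self.projection = projection

    def set(self, key, value):
        self.version += 1
        self.pending.append((self.version, key, value))
        return self.version  # the "domain state version" the client must keep

    def propagate(self):
        for version, key, value in self.pending:
            self.projection.apply(version, key, value)
        self.pending.clear()


projection = Projection()
commands = CommandSide(projection)
v = commands.set("name", "Ada")
print(projection.read("name"))  # None: a plain read doesn't see your own write yet
commands.propagate()
print(projection.read("name", min_version=v))  # Ada
```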

- Garbage collection strikes again. Post-mortem: Outages on 1/19/17 and 1/23/17: Analysis of the heap dump identified an unbounded, non-evicting, strongly-referencing cache (clojure.core/memoize) in a third-party library (Amazonica) which stored AWS SDK client objects given the credentials and configurations which were used to originally construct the objects. Skyliner is a multi-tenant application which uses temporary AWS credentials of short duration to manage customer resources across all 14 public regions. As a result, the cache quickly accumulated hundreds of thousands of client objects, eventually exhausting the JVM’s heap and sending the garbage collector into a death spiral.
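The same trap is easy to fall into in other languages. As a swapped-in Python analogy (not the Clojure code from the post): `functools.lru_cache(maxsize=None)` is unbounded just like `clojure.core/memoize`, and when the cache key includes short-lived credentials almost every call adds a new entry. `FakeSdkClient` below is a hypothetical stand-in for a real SDK client.

```python
from functools import lru_cache


class FakeSdkClient:
    """Hypothetical stand-in for an expensive SDK client object."""
    def __init__(self, region, access_key):
        self.region = region
        self.access_key = access_key


@lru_cache(maxsize=None)  # unbounded: never evicts, like clojure.core/memoize
def unbounded_client(region, access_key):
    return FakeSdkClient(region, access_key)


@lru_cache(maxsize=128)  # bounded: least recently used entries are evicted
def bounded_client(region, access_key):
    return FakeSdkClient(region, access_key)


# Short-lived credentials mean nearly every call arrives with a new cache key.
for i in range(10_000):
    unbounded_client("us-east-1", f"TEMP-KEY-{i}")
    bounded_client("us-east-1", f"TEMP-KEY-{i}")

print(unbounded_client.cache_info().currsize)  # 10000
print(bounded_client.cache_info().currsize)    # 128
```

Bounding the cache stops the leak, but with keys that never repeat the hit rate is still zero, so keying on something stable (or not caching those objects at all) may be the better fix.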

- Summary of My Meeting with Google AMP Team after “Google may be stealing your mobile traffic” post: I don’t think that Google set out to steal our traffic. In Malte’s own words, from the start Google has been very careful to construct a project in such a way that publishers maintain full control of their content. Stealing content is the exact opposite of what they are trying to achieve. At the same time, it is clear to me that AMP is not as decentralized as the original web.

- Things I Wish I Knew When I Started Building Reactive Systems: You’ll be using a different concurrency model: you’re going to use sub-thread level concurrency; you’ll be using asynchronous I/O (or if you keep using synchronous I/O, it’ll need special treatment); there’s no Two-Phase-Commit; there’s no Application Server.

- mattkrause: Classification/supervised learning is essentially about learning labels. We have some examples that have already been labeled: cats vs. dogs, suspicious transactions vs legitimate ones, As vs Bs vs...Zs. From those examples, we want to learn some way to assign new, unlabeled instances, to one of those classes. Reinforcement learning, in contrast, is fundamentally about learning how to behave. Agents learn by interacting with their environment: some combinations of states and actions eventually lead to a reward (which the agent "likes") and others do not. The reward might even be disconnected from the most recent state or action and instead depend on decisions made earlier. The goal is to learn a "policy" that describes what should be done in each state and balances learning more about the environment ("exploration", which may pay off by letting us collect more rewards later) and using what we know about the environment to maximize our current reward intake (exploitation).
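The exploration/exploitation trade-off is easiest to see in a multi-armed bandit, the single-state special case of reinforcement learning. An epsilon-greedy agent exploits its current best estimate most of the time and explores a random action with probability epsilon. A minimal Python sketch; the deterministic reward function in the example is made up to keep the behavior easy to check.

```python
import random


def epsilon_greedy(pull, n_arms, steps, epsilon=0.1, seed=0):
    """Run an epsilon-greedy agent; return (estimated value, pull count) per arm."""
    rng = random.Random(seed)
    counts = [0] * n_arms
    values = [0.0] * n_arms  # running mean of the rewards seen from each arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                        # explore
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit
        reward = pull(arm)
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]    # incremental mean
    return values, counts


# Made-up, deterministic rewards: arm 2 is the best action.
values, counts = epsilon_greedy(lambda arm: [0.2, 0.5, 0.8][arm], n_arms=3, steps=2000)
print(counts)  # most pulls should go to arm 2 once it has been explored
```

With real, noisy rewards the estimates only converge gradually, which is why some exploration has to continue instead of locking onto the first arm that looks good.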

- The Trillion Internet Observations Showing How Global Sleep Patterns Are Changing: In general, major cities tend to have longer sleeping times compared to surrounding satellite cities...Whilst North America has remained largely static over the study window, European sleep duration has declined, and East Asian sleep duration has grown.

- How Lil Todo Syncs Tasks Across Multiple Devices Just Using Dropbox: instead of writing to a database of tasks, have each device write the changes it would like to make to the database. In other words, each file for each device is a transaction log of all edits made from that device...This sync system has been reliable thus far and there are no worries about race conditions or locking issues since each device is solely responsible for its own transaction log. The system can also scale up indefinitely (at the cost of perf) since each device gets a UUID and it’s simply a matter for all devices to “notice” the new transaction log in the shared app folder on Dropbox. There is no central registry of devices, just transaction logs representing each device. No locking of resources is ever needed
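The scheme is simple enough to sketch: every device appends edits only to its own log file in the shared folder, and any device can rebuild the task list by replaying all logs in a deterministic order. The file layout, edit format, and ordering by (timestamp, device id) below are assumptions, not Lil Todo's actual implementation; ordering by wall-clock timestamps in particular is vulnerable to clock skew between devices.

```python
import json
import tempfile
import uuid
from pathlib import Path


def append_edit(folder: Path, device_id: str, edit: dict) -> None:
    """A device only ever appends to its own log, so no locking is needed."""
    with open(folder / f"{device_id}.log", "a", encoding="utf-8") as f:
        f.write(json.dumps(edit) + "\n")


def rebuild_tasks(folder: Path) -> dict:
    """Replay every device's log in (timestamp, device id) order."""
    edits = []
    for log in folder.glob("*.log"):  # new devices are "noticed" just by their file
        for line in log.read_text(encoding="utf-8").splitlines():
            edit = json.loads(line)
            edits.append((edit["ts"], log.stem, edit))
    tasks = {}
    for _, _, edit in sorted(edits, key=lambda e: (e[0], e[1])):
        if edit["op"] == "upsert":
            tasks[edit["task_id"]] = edit["title"]
        elif edit["op"] == "delete":
            tasks.pop(edit["task_id"], None)
    return tasks


with tempfile.TemporaryDirectory() as shared:
    folder = Path(shared)
    phone, laptop = str(uuid.uuid4()), str(uuid.uuid4())
    append_edit(folder, phone, {"ts": 1, "op": "upsert", "task_id": "t1", "title": "Buy milk"})
    append_edit(folder, laptop, {"ts": 2, "op": "upsert", "task_id": "t2", "title": "Call Bob"})
    append_edit(folder, phone, {"ts": 3, "op": "delete", "task_id": "t2"})
    tasks = rebuild_tasks(folder)

print(tasks)  # {'t1': 'Buy milk'}
```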

- Oh poor Internet, what will become of you? [Internet] Addressing 2016: We are witnessing an industry that is no longer using technical innovation, openness and diversification as its primary means of propulsion. The widespread use of NATs in IPv4 limits the technical substrate of the Internet to a very restricted model of simple client/server interactions using TCP and UDP...What is happening is that today's internet carriage service is provided by a smaller number of very large players, each of whom appear to be assuming a very strong position within their respective markets...The evolving makeup of the Internet industry has quite profound implications in terms of network neutrality, the separation of functions of carriage and service provision, investment profiles and expectations of risk and returns on infrastructure investments, and on the openness of the Internet itself. The focus now is turning to the regulatory agenda. Given the economies of volume in this industry, it was always going to be challenging to sustain an efficient, fully open and competitive industry, but the degree of challenge in this agenda is multiplied many-fold when the underlying platform has run out of the basic currency of IP addresses...The Internet is now the established norm. The days when the Internet was touted as a poster child of disruption in a deregulated space are long since over, and these days we appear to be increasingly looking further afield for a regulatory and governance framework that can continue to challenge the increasing complacency of the newly-established incumbents.

- frankmcsherry/explanation: This project demonstrates how one can use differential dataflow to explain the outputs of differential dataflow computations, as described in the paper Explaining outputs in modern data analytics. There is also an earlier blog post discussing the material, and trying to explain how it works.

- saurabhmathur96/clickbait-detector: Detects clickbait headlines using deep learning.

- apache/incubator-gossip: Gossip protocol is a method for a group of nodes to discover and check the liveness of a cluster.
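Why gossip scales: in push-style gossip each informed node tells a random peer every round, so the informed set roughly doubles early on and news reaches the whole cluster in a number of rounds that grows roughly logarithmically with cluster size. The same machinery is typically used for liveness, with nodes gossiping heartbeat counters and suspecting a peer whose counter stops advancing. The Python simulation below covers only the dissemination part and is not Apache Gossip's implementation; the parameters are illustrative.

```python
import random


def gossip_rounds(n_nodes, fanout=1, seed=0, max_rounds=1000):
    """Simulate push gossip from node 0; return (rounds taken, nodes informed)."""
    rng = random.Random(seed)
    informed = {0}
    rounds = 0
    while len(informed) < n_nodes and rounds < max_rounds:
        rounds += 1
        newly_informed = set()
        for node in informed:
            for _ in range(fanout):
                peer = rng.randrange(n_nodes)  # pick a random peer to push to
                if peer != node:
                    newly_informed.add(peer)
        informed |= newly_informed
    return rounds, len(informed)


for n in (10, 100, 1000, 10000):
    print(n, gossip_rounds(n))
```

Each 10x increase in cluster size should add only a handful of rounds, which is the property that lets gossip-based membership work on large clusters without a central coordinator.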

- mTCP: A Highly Scalable User-level TCP Stack for Multicore Systems: This work presents mTCP, a high-performance user-level TCP stack for multicore systems. mTCP addresses the inefficiencies from the ground up—from packet I/O and TCP connection management to the application interface. In addition to adopting well-known techniques, our design (1) translates multiple expensive system calls into a single shared memory reference, (2) allows efficient flow-level event aggregation, and (3) performs batched packet I/O for high I/O efficiency. Our evaluations on an 8-core machine showed that mTCP improves the performance of small message transactions by a factor of 25 compared to the latest Linux TCP stack and a factor of 3 compared to the best-performing research system known so far. It also improves the performance of various popular applications by 33% to 320% compared to those on the Linux stack.

- Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer: In this work, we address these challenges and finally realize the promise of conditional computation, achieving greater than 1000x improvements in model capacity with only minor losses in computational efficiency on modern GPU clusters. We introduce a Sparsely-Gated Mixture-of-Experts layer (MoE), consisting of up to thousands of feed-forward sub-networks. A trainable gating network determines a sparse combination of these experts to use for each example. We apply the MoE to the tasks of language modeling and machine translation, where model capacity is critical for absorbing the vast quantities of knowledge available in the training corpora. We present model architectures in which a MoE with up to 137 billion parameters is applied convolutionally between stacked LSTM layers. On large language modeling and machine translation benchmarks, these models achieve significantly better results than state-of-the-art at lower computational cost.
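The gating idea fits in a few lines. In the paper's formulation the gate is G(x) = Softmax(KeepTopK(H(x), k)) and the layer's output is the gate-weighted sum of expert outputs, so only the k selected experts are ever evaluated. This pure-Python sketch uses plain linear gate logits and omits the paper's tunable noise and load-balancing losses; the tiny experts and weights in the example are made up.

```python
import math


def top_k_gating(logits, k):
    """Softmax over only the k largest gate logits; all other experts get weight 0."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(logits[i] - m) for i in top}
    total = sum(exps.values())
    return [exps[i] / total if i in exps else 0.0 for i in range(len(logits))]


def moe_forward(x, experts, gate_weights, k=2):
    """Sparsely gated mixture of experts (assumes experts preserve x's dimension)."""
    # Gate logits H(x) = x . W_g, one weight vector per expert (noise omitted).
    logits = [sum(xi * w for xi, w in zip(x, col)) for col in gate_weights]
    gates = top_k_gating(logits, k)
    out = [0.0] * len(x)
    for g, expert in zip(gates, experts):
        if g == 0.0:
            continue  # sparse: unselected experts are never evaluated
        y = expert(x)
        out = [o + g * yi for o, yi in zip(out, y)]
    return out, gates


experts = [lambda v: [2 * a for a in v], lambda v: [a + 1 for a in v], lambda v: [-a for a in v]]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
out, gates = moe_forward([1.0, 1.0], experts, gate_weights, k=2)
print(gates)  # [0.5, 0.5, 0.0]
print(out)    # [2.0, 2.0]
```

The sparsity is the whole point: adding experts grows model capacity, while the compute per example stays tied to k.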