Stuff The Internet Says On Scalability For March 10th, 2017

Hey, it's HighScalability time:

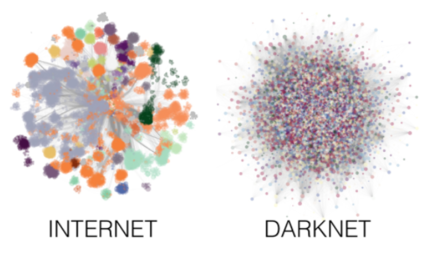

Darknet is 4x more resilient than the Internet. An apt metaphor? (URV)

If you like this sort of Stuff then please support me on Patreon.

- > 5 9s: Spanner availability; 200MB: random access from DNA storage; 215 Pbytes/gram: DNA storage; 287,024: Google commits to open source; 42: hours of audio gold; 33: minutes to get back into programming after interruption; 12K: Chinese startups started per day; 35 million: tons of good shipped under Golden Gate Bridge; 209: mph all-electric Corvette; 500: Disney projects in the cloud; 40%: rise in CO2;

- Quoteable Quotes:

- Marc Rogers: Anything man can make man can break

- @manupaisable: 10% of machines @spotify rebooted every hour because of defunct #docker - war stories by @i_maravic @qconlondon

- @robertcottrell: “the energy cost of each bitcoin transaction is enough to power 3.17 US households for a day”

- Eric Schmidt: We put $30 billion into this platform. I know this because I approved it. Why replicate that?

- dim: It uses p30 technology. Just basic things, gliders and lightweight spaceships. Basically, the design goes top-down: At the very top, there's the clock. It is a 11520 period clock. Note that you need about 10.000 generations to ensure the display is updated appropriately, but the design should still be stable with a clock of smaller period (about 5.000 or so - the clock needs to be multiple of 60).

- Luke de Oliveira: Most people in AI forget that the hardest part of building a new AI solution or product is not the AI or algorithms — it’s the data collection and labeling. Standard datasets can be used as validation or a good starting point for building a more tailored solution.

- @violetblue: Did a lot of people not know that the CIA is a spy agency?

- @viktorklang: Async is not about *performance*—it is about *scalability*. Let your friends know

- stillsut: The difference is in the old days, you adapted to computer. Now, computer must adapt to you.

- Eric Brewer: Spanner uses two-phase commit to achieve serializability, but it uses TrueTime for external consistency, consistent reads without locking, and consistent snapshots.

- Emily Waltz: Nomura’s molecular robot differs in that it is composed entirely of biological and chemical components, moves like a cell, and is controlled by DNA.

- Chris Anderson: Most of the devices in our life, from our cars to our homes, are “entropic,” which is to say they get worse over time. Every day they become more outmoded. But phones and drones are “negentropic” devices. Because they are connected, they get better, because the value comes from the software, not hardware

- William Dutton: Most people using the internet are actually more social than those who are not using the internet

- @swardley: ... by 2016, you should have dabbled / learn / tested serverless. "Go IaaS" or "build our biz as a cloud" in 2017 is #facepalm

- Bradford Cross: The incompetent segment: the incompetent segment isn’t going to get machine learning to work by using APIs. They are going to buy applications that solve much higher level problems. Machine learning will just be part of how they solve the problems.

- @denormalize: What do we want? Machine readable metadata! When do we want it? ERROR Line 1: Unexpected token `

- @Ocramius: "And we should get rid of users: users are not pure, since they modify the state of our system" #confoo

- Morning Paper: The most important overarching lesson from our study is this: a single file-system fault can induce catastrophic outcomes in most modern distributed storage systems.

- Linus Torvalds: And if the DRM "maintenance" is about sending me random half-arsed crap in one big pull, I'm just not willing to deal with it. This is like the crazy ARM tree used to be.

- Shaun McCormick: Technical Debt is a Positive and Necessary Step in software engineering

- @tdierks: Hello, my name is Tim. I'm a lead at Google with over 30 years coding experience and I need to look up how to get length of a python string.

- @codinghorror: I colocated a $600 Ali Express mini pc for $15/month and it is 2x faster than "the cloud"

- @antirez: "Group chat is like being in an all-day meeting with random participants and no agenda".

- @sriramhere: Wise man once wrote "As flexible as it is, compute in AWS is optimized for the old capex world." @sallamar

- @wattersjames: AI will come to your company carefully disguised as a lot of ETL and data-pipeline work...

- ceejayoz: Lambda's billed in 100 millisecond increments. EC2 servers are billed in one hour increments. If you need short tasks that run in bursty workloads, Lambda's (potentially) a no-brainer.

- @codinghorror: we have not found bare metal colocation to be difficult, with one exception: persistent file storage. That part, strangely, is quite hard.

- @jbeda: Lesson from 10 years at Google: this is true until it isn't. Sometimes you *can* build a better mouse trap.

- jfoutz: I agree. It's genius in a Lex Luthor kind of way. If I understood the full scope of the application, I like to think i'd decline to work on that. It's easy to imagine engineers working on small parts of the system, and never really connecting the dots that the whole point is to evade law enforcement.

- dsr_: It's harder (but not impossible) to have complete service lossage like this [Slack] in a federated protocol. That's why you didn't hear about the great email collapse of 2006.

- throw_away_777: I agree that neural nets are state-of-the-art and do quite well on certain types of problems (NLP and vision, which are important problems). But a lot of data is structured (sales, churn, recommendations, etc), and it is so much easier to train an xgboost model than a neural net model.

- @GossiTheDog: #Vault7 CIA - Wiki that Wikileaks released is/was on hosted on DEVLAN, the CIA's "dirty" development network - a major architecture error.

- Alison Gopnik: new studies suggest that both the young and the old may be especially adapted to receive and transmit wisdom. We may have a wider focus and a greater openness to experience when we are young or old than we do in the hurly-burly of feeding, fighting and reproduction that preoccupies our middle years.

- @pierre: Wow, audacious to say the least. Intentionally flagging authorities to mislead them. It's like the VW emissions code

- Joan Gamell: Starting with the obvious: the CIA uses JIRA, Confluence and git. Yes, the very same tools you use every day and love/hate.

- Chris Baraniuk: The networks of genes in each animal is a bit like the network of neurons in our brains, which suggests they might be "learning" as they go

- futurePrimitive: Managers seem to think that programming is typing. No. Programming is *thinking*. The stuff that *looks* like work to a manager (energetic typing) only happens after the hard work is done silently in your head.

- @danielbryantuk: "There is no such thing as a 'stateless' architecture. It's just someone else's problem" @jboner #qconlondon

- Platypus: There's no panacea for vendor lock-in. Not even open source, but open source alone gets you further than any number of standards that don't cover what really matters or vendor-provided tools that might go away at any moment. It's the first and best tool for dealing with lock-in, even if it's not perfect.

- @tpuddle: @cliff_click talk at #qconlondon about fraud detection in financial trades. Searching 1 billion trades a day "is not that big". !

- @charleshumble: "Something I see in about 95% of the trading data sets is there are a small number of bad guys hammering it." Cliff Click #qconlondon

- You may not be able to hear doves cry, but you can listen to machines talk. Elevators to be precise. Watch them chat away as they selflessly shuttle to and fro. Yes, it is as exciting as you might imagine. Though probably not very different than the interior dialogue of your average tool.

- It used to be that winners wrote history. Now victors destroy data. Terabytes of Government Data Copied

- Battling legacy code seems to be the number one issue on Stack Overflow, as determined by top books mentioned on Stack Overflow. Not surprising. What was surprising is what's not on the list: algorithm books. Books on the craft of programming took top honors. Gratifying, but at odds with current interviewing dogma. The top 10 books: Working Effectively with Legacy Code; Design Patterns; Clean Code; Java concurrency in practice; Domain-driven Design; JavaScript; Patterns of Enterprise Application Architecture; Code Complete; Refactoring; Head First Design Patterns.

- Need to run Wordpress? kodiashi has some good advice: Your goal should be to reduce server I/O as much as possible. What typically kills Wordpress sites is way too many database requests and too many plugins being loaded on every page request: Use a CDN or caching system to offload requests for common, static files; Cache as many of your articles as possible using something like W3 Total Cache; Cache common database results using Memcache or Redis; Greatly reduce the number of plugins you use; Use a load balancer and/or auto-scaling to handle traffic spikes; Get servers with as much RAM as you can afford and SSD drives.

- Getting your BaaS on. Firebase now hooks up with Google Cloud Functions, so now you can run server-side code from events generated in your Firebase app. It's now a viable Lambda competitor, though more limited at the moment. There's a limit of 400 concurrent invocations. Only node.js is supported for now. skybrian asks a good question: How does this compare to App Engine? Not an easy question to answer.

- Interesting update on Azure Stack: Azure will be pay-as-you-go for services in the same way as they are in Azure, with the same invoices and subscriptions; Services will be typically metered on the same units as Azure, but prices will be lower, since customers operate their own hardware and facilities; There's also going to be core-based pricing for scenarios where customers are unable to have their metering information sent to Azure. Also, Building a Scalable Online Game with Azure - Part 3.

- Yep, if you want to manage your own stuff it's hard to beat colo. How much is Discourse affected by a faster CPU?: I recently moved my own Discourse from Digital Ocean to a colocated mini-PC I purchased from Ali Express. This is a good apples to apples of increasing CPU performance with the exact same Discourse instance. The monthly colo fee is a little cheaper than DO, you do have the upfront cost of the hardware, but you get a much more powerful machine.

- AMD’s Naples put them back in the server game: On a critical HPC workload, albeit a memory bound one, AMD trounces Intel’s best and should trounce Intel’s upcoming best. On a socket to socket performance basis, AMD looks to beat Broadwell-EP and roughly tie Skylake-EP’s performance but this will probably swing wildly with differing workloads. On the energy use front things are a little murkier but AMD looks to be very close to Intel with Naples, time will tell. The last metric is price, something AMD can both substantially undercut Intel with and make healthy margins in the process. Things are looking very good for AMD at the moment in servers

- Sounds like a good life skills class. Calling Bullshit in the Age of Big Data. Especially Week 2: Spotting bullshit.

- 802.eleventy what? A deep dive into why Wi-Fi kind of sucks. Kronopath with a good gloss: This is actually a really good article. To summarize one of its key points: your mental intuition for how signal congestion works under wifi is wrong. A stronger signal won’t “drown out” noise from other networks since wifi enforces collision avoidance (i.e. “waiting your turn to speak”) even between different networks. The better idea is to have a mesh of smaller, lower-powered networks and to keep as many devices wired as you can.

- What server would you suggest to handle 5k to 7k concurrent users? Wordpress is a dog for performance, so the best thing to do is to not use it; is the content mostly-static? With a proper CDN setup, and if the content is mostly static (guessing yes if it's mostly just article-based), a very very lightweight dedicated server would likely be able to cope...If your content is indeed mostly static, a basic i3 CPU (2 core, 4 thread) would likely be sufficient. You'd want enough memory for the database and all the connections; so 8+ GB?; I would look into a caching reverse proxy like varnish or squid. With this setup, it doesn't matter if it's a wordpress site because 99% of the traffic never touches wordpress; 100k visitors isn't massive, so any VPS (e.g. Linode or Digital Ocean) should be able to handle it fine. Set up a LEMP stack (Linux, Nginx, MySQL, PHP) and look at WP caching plugins. You could also try putting CloudFlare in front of it; I'd recommend amazon ECS specifically the T series EC2 instance; 100k visitors a day is entirely different from 5k concurrent users;

- #everylinematters It’s Just 1 Line of Code Change: When the Mariner embarked on that fateful day, instead of making history in space exploration as the first rocket to fly by venus, it exploded and came crashing down after less than 5 minutes into the flight and went down in history as the most expensive programming typo. Yes you read that right. All it was, was a TYPO. This typo cost the U.S. government about $80 million ($630 million in 2016 dollars). Somewhere within the computer instructions code a hyphen was omitted. So instead of soaring into space the Mariner ended up crashing into the ground.

- Maybe the problem is we aren't drinking enough? Did coffee give us the Enlightenment?

- Icache misses matter too. ScyllaDB implemented a SEDA-like mechanism to run tasks tied to the same function sequentially. The first task warms up icache and the branch predictors. Later runs benefit from this warmup. Microbenchmarks see almost 200% improvement while full-system tests see around 50% improvement, mostly due to improved IPC. Avi Kivity in a mechanical sympathy thread.

- People who use Go and fill out a survey on Go turn out to really like Go. Go 2016 Survey Results: Users are overwhelmingly happy with Go: they agree that they would recommend Go to others by a ratio of 19:1, that they’d prefer to use Go for their next project (14:1), and that Go is working well for their teams (18:1). Fewer users agree that Go is critical to their company’s success (2.5:1).

- The World's Largest Shipping Firm Now Tracks Cargo on Blockchain. Interesting application, but the benefits of using blockchain weren't clear over alternatives.

- Steps along the way from product to platform: Build great APIs. Support early integrators. Listen to feedback. Consider how 3rd parties can make your product better. Design for integrations. Play nice. Formalize relationships. Make it easy. Listen, listen, listen. And maybe. Maybe. Dozens or even hundreds of other companies, over several years of working with you, will decide that yes, what you’ve built is a platform. And you say thank you, and serve them well. Because without them, you are not a platform. They decide.

- Fueling the Gold Rush: The Greatest Public Datasets for AI. Great source of training data for computer vision; natural language; speech; recommendation; networks and graphs; geospatial.

- Really awesome tour through using a GPU. GPU Performance for Game Artists: To appreciate the impact that your art has on the game’s performance, you need to know how a mesh makes its way from your modelling package onto the screen in the game. That means having an understanding of the GPU – the chip that powers your graphics card and makes real-time 3D rendering possible in the first place. A die shot of NVIDIA’s GTX 1070 GPUArmed with that knowledge, we’ll look at some common art-related performance issues, why they’re a problem, and what you can do about it. Things are quickly going to get pretty technical, but if anything is unclear I’ll be more than happy to answer questions in the comments section.

- What was it like to work at AWS S3 during the S3 outage on February 28, 2017?: I saw an answer to this question back when it was first posted. Not sure if it's the same one, but the answer I saw was from an employee. The jist was that it was really amazing to watch an entire, massive organization come together to solve a problem and that the resiliency of S3 to this sort of failure was really incredible to see in action.

- Cloud first, Serverless second: The big conversation for the next 6 months on Serverless is about tooling, because at present, we have a lot of abstractions that allow for deployments and a lot of abstractions that could cause problems in the future...Please don’t build another function deployment tool. We don’t need it!...The tooling we need is around infrastructure, deployment, service management, testing

- Injecting Faults in Distributed Storage: The take-away is that file system checkers need to be transactional, a conclusion which seems obvious once it has been stated. This is especially the case since the probability of a power outage is highest immediately after power restoration. Until the checkers are transactional, their vulnerability to crashes and power failures, and the inability of the distributed storage systems above them to correctly respond poses a significant risk

- Micro-optimizations matter: preventing 20 million system calls: It’s not just Ruby and Puppet, though. You can find similar bug reports for other projects with users reporting 100% CPU usage and hundreds of thousands of calls to sigprocmask melting their CPUs. What’s really going on here is that the calls to sigprocmask are coming from inside of a pair of glibc functions (that are not system calls): getcontext and setcontext. These two functions are used to save and restore the CPU state and are commonly used by programs and libraries implementing exception handling or userland threads.

- CoDel and Active Queue Management: CoDel is essentially an improved head drop mechanism that provides the correct signals to TCP to slow down its send rate, or rather to reduce the window size (and hence the number of packets in flight on the connection)...CoDel is a modified head drop queuing mechanism, somewhat similar in intent (but not in function) to wRED (weighted Random Early Detection). The packet that is dropped, in this case, would be packet 7 (to the far right of the queue), if it has been in the queue for too long (has too long of a dwell time).

- lambci/lambci: a package you can upload to AWS Lambda that gets triggered when you push new code or open pull requests on GitHub and runs your tests (in the Lambda environment itself) – in the same vein as Jenkins, Travis or CircleCI. It integrates with Slack, and updates your Pull Request and other commit statuses on GitHub to let you know if you can merge safely.

- Lots of good security advice. forter/security-101-for-saas-startups.

-

Scaling up DNA data storage and random access retrieval: Synthetic DNA offers an attractive alternative due to its potential information density of ~ 1018B/mm3, 107 times denser than magnetic tape, and potential durability of thousands of years...This paper demonstrates an end-to-end approach toward the viability of DNA data storage with large-scale random access. We encoded and stored 35 distinct files, totaling 200MB of data, in more than 13 million DNA oligonucleotides (about 2 billion nucleotides in total) and fully recovered the data with no bit errors, representing an advance of almost an order of magnitude compared to prior work...We developed a random access methodology based on selective amplification, for which we designed and validated a large library of primers, and successfully retrieved arbitrarily chosen items from a subset of our pool containing 10.3 million DNA sequences. Moreover, we developed a novel coding scheme that dramatically reduces the physical redundancy (sequencing read coverage) required for error-free decoding to a median of 5x, while maintaining levels of logical redundancy comparable to the best prior codes.

-

Modeling Structure and Resilience of the Dark Network: The Darknet exhibits some interesting features that are not shared by the structure of the Internet. Triadic closure in the Darknet is more likely than in the Internet, with communication paths much shorter in the former. Like the Internet, the Darknet is characterized by a non-homogenous connectivity distribution and the presence of higher-order degree-degree correlations. However, the topology of the Darknet is more interestingbecause of the peculiar heavy-tailed scaling of the degree distribution, with scaling exponent close to -1 and cutoff, at variance with the Internet, appearing more like a power-law with scaling exponent close to -2 and no evident cutoff. The rich-club analysis has revealed the lack of a core ofhighly central nodes interconnected each other, at variance with the Internet where this effect is remarkable. We argue that such topological differences are responsible for the different resilience exhibited by the two communication systems in response to random disruption, target attacks and induced cascade failures.

-

Evolving Ext4 for Shingled Disks: We introduce ext4-lazy, a small change to the Linux ext4 file system that significantly improves the throughput in both modes. We present benchmarks on four different drive-managed SMR disks from two vendors, showing that ext4-lazy achieves 1.7-5.4x improvement over ext4 on a metadata-light file server benchmark. On metadata-heavy benchmarks it achieves 2-13x improvement over ext4 on drive-managed SMR disks as well as on conventional disks.

-

Challenges to Adopting Stronger Consistency at Scale: There is an exciting boom in research on scalable systems that provide stronger semantics for geo-replicated stores. We have described the challenges we see to adopting these techniques at Facebook, or in any environment that scales using sharding and separation into stateful services. Future advances that tackle any of these challenges seem likely to each have an independent benefit. Our hope is that these barriers can be overcome.

- Spanner, TrueTime and the CAP Theorem: Spanner is Google's highly available global-scale distributed database. It provides strong consistency for all transactions. This combination of availability and consistency over the wide area is generally considered impossible due to the CAP Theorem. We show how Spanner achieves this combination and why it is consistent with CAP. We also explore the role that TrueTime, Google's globally synchronized clock, plays in consistency for reads and especially for snapshots that enable consistent and repeatable analytics.