Stuff The Internet Says On Scalability For January 12th, 2018

Hey, it's HighScalability time:

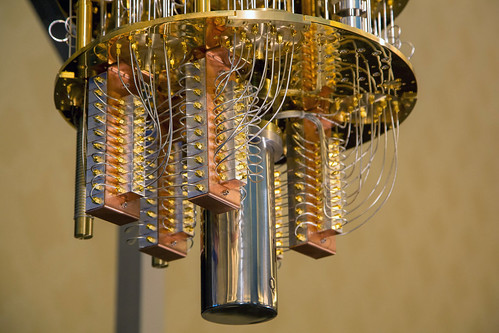

Whiskey still? Chandelier? Sky city? Nope, it's IBM's 50-qubit quantum computer. (engadget)

If you like this sort of Stuff then please support me on Patreon. And I'd appreciate your recommending my new book—Explain the Cloud Like I'm 10—to anyone who needs to understand the cloud (who doesn't?). I think they'll like it. Now with twice the brightness and new chapters on Netflix and Cloud Computing.

- 28.5 billion: PornHub visitors; 3 billion: computer chips have Spectre security hole; 75.8%: people incorrectly think private browsing is actually private; 380,000: streams needed to make minimum wage on Spotify; 30Gbps: throughput for servers in Azure using new network interface cards packing field-programmable gate arrays; 8 quadrillion calculations per second: new NOAA supercomputer; $2 billion: market cap for parody cryptocurrency dogecoin; $1 trillion: IoT spending by 2035; 100,000: IoT sensors monitor canal in China; 1,204: definitions for emo; 23 million: digits in largest prime number; 2.8%: decline in PC shipments;

- Quotable Quotes:

- @Lee_Holmes: We owe a debt of gratitude to the unsung heroes of Spectre and Meltdown: the thousands of engineers that spent their holidays working on OS patches, browser patches, cloud roll-outs, and more. Thank you.

- Geoff Huston: While a small number of providers have made significant progress in public IPv6 deployments for their respective customer base, the overall majority of the Internet is still exclusively using IPv4. This is despite the fact that among that small set of networks that have deployed IPv6 are some of the largest ISPs in the Internet!

- Robert Sapolsky: But our frequent human tragedy is that the more we consume, the hungrier we get. More and faster and stronger. What was an unexpected pleasure yesterday is what we feel entitled to today, and what won’t be enough tomorrow.

- Simon Wardley: As for losers — I’m sorry to say that one set of losers will be those who hold on to DevOps practices.

- StackOverflow: Angular and React are by far the most popular across the board, no matter the technology used. It makes sense that they are the clear frontrunners, supported by two of the biggest and most influential companies in tech. Just looking at those two frameworks, Angular is more visited amongst C#, Java, and (to a degree) PHP developers, whereas React is more popular with Rails, Node.js, and Python developers.

- Matthew Green: due to flaws in both Signal and WhatsApp (which I single out because I use them), it’s theoretically possible for strangers to add themselves to an encrypted group chat. However, the caveat is that these attacks are extremely difficult to pull off in practice, so nobody needs to panic. But both issues are very avoidable, and tend to undermine the logic of having an end-to-end encryption protocol in the first place.

- Taylor Lorenz: The data shows that despite its perception as a nascent social platform, Snapchat is much more of a chat app. And key features like Snap Maps have yet to gain widespread adoption among the app’s user base.

- FittedCloud: In summary, converting C4/M4 instances may not be a trivial task. But those that are able to go through the analysis and convert could save significantly and improve application performance. At the pace with which AWS moves it is likely that next generation instances will continue to be available in the coming years so the sooner you upgrade the better prepared you are for the future generation instances.

- Sridhar Rajagopalan: When we moved the Sense analytics chain to GCP, the data coverage metric went from below 80% to roughly 99.8% for one of our toughest customer use cases. Put another way, our data litter decreased from over 20%, or one in five, to approximately one in five hundred. That’s a decrease of a factor of approximately 100, or two orders of magnitude!

- Simon Wardley: We have to be very, very careful on this point. Because people think “reduced waste” means “reduced IT spend”. And it certainly does not. We’ll see more efficiency and rapid development of higher order systems. But in terms of reducing IT spend, people said the same thing about EC2 in 2007, 2008. And they quickly learned about something called Jevons’ Paradox. What happens is that as we make something more efficient, we wind up consuming vastly more of that thing. So when people say “Oh, we’re going to spend so much less money with serverless!” nah — forget it. We’re just going to do more stuff.

- Stephen Cass: 5G is likely to become the glue that binds many of our critical technologies together, which will put mobile carriers at the center of modern global civilization in a big way.

- @SGgrc: The General Law of Cross-Task Information Leakage: “In any setting where short-term performance optimizations have global effect, a sufficiently clever task can infer the recent history of other tasks by observing its own performance.”

- Quincy Larson: It turns out a LOT of developers got their first tech job in their 30s, 40s, and 50s.

- @mjpt777: The approach of not caring about software performance because processors keep getting faster seems to be a bit broken right now.

- Bill Joy: We sought “grand challenge” breakthroughs because they can lead to a cascade of positive effects and transformations far beyond their initial applications. The grand challenge approach works — dramatic improvements reducing energy, materials and food impact are possible. If we widely deploy such breakthrough innovations, we will take big steps toward a sustainable future.

- @cocoaphony: I have made some snarky comments about "serverless" (since it…you know…runs on servers). And I have flailed around quite a bit trying to understand that world. But as it comes into focus, wow, oh my goodness, it really is quite amazing what I'm able to do with so little code.

- @TheLastPickle: Running cassandra-stress on a mixed load showed 20%+ latency increase, over every percentile, when upgrading to the patched ubuntu kernel 4.13.0-25 #Cassandra #meltdown

- @PWAStats: Tinder cut load times from 11.91 seconds to 4.69 seconds with their new PWA. The PWA is 90% smaller than Tinder’s native Android app. User engagement is up across the board on the PWA.

- @Nick_Craver: Here's what happened last night to one of our busier redis servers before and after patching for meltdown. Using the same 200k/12 minute axes on both: down from ~145k ops/sec to ~95k ops/sec, or about 35% slower.

- paulcalvano: In a year since Facebook’s blog post about Cache-Control Immutable, we can see that its usage has spread beyond Facebook and is being used by a handful of large 3rd parties and ~4150 additional domains. Across all of the pages in the HTTP Archive, 2% of requests and 30% of sites appear to include at least 1 immutable response. Additionally, most of the sites that are using it have the directive set on assets that have a long freshness lifetime.

- Bloomberg: "It makes you shudder," said Paul Kocher, who helped find Spectre and started studying trade-offs between security and performance after leaving chip company Rambus Inc. last year. "The processor people were looking at performance and not looking at security."

- @Quora: Quora is facing a slowdown due to the patch applied by AWS for Intel's Meltdown and Spectre issues. We are currently working on fixing it and appreciate your patience during this.

- Theo Schlossnagle: the ground has shifted under the monitoring industry. This seismic change has caused existing tools to change and new tools to emerge in the monitoring space, but that alone will not deliver us into the low-risk world of DevOps—not without new and updated thinking. Why? Change.

- Epic Games: We wanted to provide a bit more context for the most recent login issues and service instability. All of our cloud services are affected by updates required to mitigate the Meltdown vulnerability. We heavily rely on cloud services to run our back-end and we may experience further service issues due to ongoing updates.

- scrollaway: Yep, seeing a much more severe hit on all our elasticache instances than anywhere else. CPU graph from two underused ones

- necubi: We also saw a huge hit on our memcached elasticache nodes. Eyeballing from our CPU graph, seems ~30%.

- Marc Brooker: the conclusion of all of this is that random load balancing (and random shuffle-sharding, and random consistent hashing) distributed load rather poorly when 𝑀 is small. Load-sensitive load-balancing, either stateful or stateless with an algorithm like best-of-two is still very much interesting and relevant. The world would be simpler and more convenient if that wasn't the case, but it is.

- @joeerl: Quite - right. One process = one CPU. Shared nothing. Pure message passing. The fact this is inefficient on conventional hardware is because conventional hardware has been optimised for something completely different.

- @kelseyhightower: Serverless is going to DRY up software development. Look beyond FaaS and you'll find better platforms that help you avoid rebuilding the same subsystems over and over again.

- ampletter: We don’t want to stop Google’s development of AMP, and these changes do not require that. We also applaud search engines that give ranking preference to fast-loading pages. AMP can remain one of a range of technologies that give publishers high quality options for delivering Web pages quickly and making users happy. However, publishers should not be compelled by Google’s search dominance to put their content under a Google umbrella. The Web is not Google, and should not be just Google.

- @mnewswanger: Breaking down requests for @StackOverflow web servers: Pre-Meltdown Patch -> Post Meltdown Patch (Increase %) Response time: 18ms -> 22ms (22%) Avg SQL Call: .73ms -> .83ms (13%) Avg Elastic Call: 12.56ms -> 19.71ms (57%) #Meltdown

- @swardley: One of the biggest arguments for "bad" hybrid cloud (as in public + private) is the sunk cost fallacy of an existing data centre. In practice, this is hiding a truth - loss of political capital. Execs just don't want to admit they've spent billions on the obviously wrong thing.

- kl0nos: I see Rust Evangelic Strike Force in Action again. Bashing Go and everything else whenever you can and praising Rust without seeing its obvious flaws. Every programming language has flaws, period. Go also has its flaws, same goes for Rust, C++, Java etc.

- Memory Guy: Why is ECC necessary on NAND flash, yet it’s not used for other memory technologies? The simple answer is that NAND’s purpose is to be the absolute cheapest memory on the market, and one way to achieve the lowest-possible cost is to relax the standards for data integrity — to allow bit errors every so often.

- @aionescu: The mitigations poor OS vendors have to do for "Variant 2: branch target injection (CVE-2017-5715)" aka #Spectre make me cry/die a little inside.

- @parismarx: “Prior to artificial regulatory supply caps, the unregulated taxi industry was unprofitable and subject to growing concerns over negative externalities. #Uber is now facing the same relentless drag on its P&L.”

- @bitfield: This isn't a patch, this is like cutting your leg off to prevent someone stealing your toes.

- Sift Science: The window of time between booking and traveling is shrinking for all travelers. One-third of millennials make travel plans at the last minute, and 72% of mobile hotel bookings are made within one day of a stay.

- @brendangregg: 2018 will be the year of Transparent Huge Pages. KPTI patches for #spectre/#meltdown do a TLB flush on syscalls. Can be better to reload by 2M instead of 4k.

- @lukego: One core per process is actually becoming popular in some domains e.g. network data planes. We set up CPU+NIC pairs that seldom talk to the kernel and we don’t seem to be particularly impacted (afaik.) Stay in ring3 and be merry...?

- @jxxf: How to avoid 80% of all software problems: * store all times as UTC ISO8601 * only do datetime math with libraries * more processes > more threads * avoid building a distributed system * ... or if you can't, avoid requiring coordination * shun whiteboard coding interviews

- Ann Steffora Mutschler: The idea of using chiplets, with or without a package, has been circulating for at least a half-dozen years, and they can trace their origin back to IBM’s packaging scheme in the 1960s. It is now picking up steam, both commercially and for military purposes, as the need for low-cost semi-customized solutions spreads across a number of new market opportunities.

- @chris__martin: The "overly-verbose programming language" problem: When you translate your idea into code, a bunch of extra detail has to be added. The "insufficiently-typed programming language" problem: when you translate your idea into code, a bunch of valuable detail has to be removed.

- James Horrox: In a 2012 study, psychologists David L. Strayer and Ruth and Paul Atchley sent a group of participants on a four-day wilderness hike, completely cut off from technology, and subsequently asked them to carry out tasks requiring creative thinking and complex problem solving. The study found that participants’ performance on these tasks improved by 50%, leading the authors to conclude that “[t]here is a real, measurable cognitive advantage to be realized if we spend time truly immersed in a natural setting”.

- Dr. Welser: Beyond the current trend of using GPUs as accelerators, future advances in computing logic architecture for AI will be driven by a shift toward reduced-precision analog devices, which will be followed by mainstream applications of quantum computing. Neural network algorithms that GPUs are commonly used for are inherently designed to tolerate reduced precision. Reducing the size of the data path would allow more computing elements to be packed together inside a GPU, a dynamic that in the past was taken for granted as an outcome of Moore's Law technology scaling. Whether it is integration of analog computing elements or solving complex chemistry problems for quantum computing, materials engineering will play a critical enabling role.

- Geoff Huston: The density of inter-AS interconnection continues to increase. The growth of the Internet is not "outward growth from the edge" as the network is not getting any larger in terms of average AS path change. Instead, the growth is happening by increasing the density of the network by attaching new networks into the existing transit structure and peering at established exchange points. This makes for a network whose diameter, measured in AS hops, is essentially static, yet whose density, measured in terms of prefix count, AS interconnectivity and AS Path diversity, continues to increase. This denser mesh of interconnectivity could be potentially problematical in terms of convergence times if the BGP routing system used a dense mesh of peer connectivity, but the topology of the network continues along a clustered hub and spoke model, where a small number of transit ASs directly service a large number of stub edge networks. This implies that the performance of BGP in terms of time and updates required to reach convergence continues to be relatively static.

- Steve Gibson: Speculative execution is a technique used by high speed processors in order to increase performance by guessing likely future execution paths and prematurely executing the instructions in them. For example when the program’s control flow depends on an uncached value located in the physical memory, it may take several hundred clock cycles before the value becomes known. Rather than wasting these cycles by idling, the processor guesses the direction of control flow, saves a checkpoint of its register state, and proceeds to speculatively execute the program on the guessed path. When the value eventually arrives from memory the processor checks the correctness of its initial guess. If the guess was wrong, the processor discards the (incorrect) speculative execution by reverting the register state back to the stored checkpoint, resulting in performance comparable to idling. In case the guess was correct, however, the speculative execution results are committed, yielding a significant performance gain as useful work was accomplished during the delay.
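
Marc Brooker's point earlier in this list, that purely random placement spreads load poorly while best-of-two does better, can be tried out with a small simulation. This is only a sketch under simple assumptions (requests never finish, every request costs the same, and the function names are made up for illustration); it does not reproduce his analysis.

```python
import random

def max_load_random(num_servers, num_requests, rng):
    """Place each request on a uniformly random server; return the busiest server's load."""
    load = [0] * num_servers
    for _ in range(num_requests):
        load[rng.randrange(num_servers)] += 1
    return max(load)

def max_load_best_of_two(num_servers, num_requests, rng):
    """Sample two servers per request and place it on the less loaded of the two."""
    load = [0] * num_servers
    for _ in range(num_requests):
        a = rng.randrange(num_servers)
        b = rng.randrange(num_servers)
        target = a if load[a] <= load[b] else b
        load[target] += 1
    return max(load)

if __name__ == "__main__":
    rng = random.Random(42)
    servers, requests = 10, 1000
    print("ideal load per server:", requests // servers)
    print("random, busiest server:", max_load_random(servers, requests, rng))
    print("best-of-two, busiest server:", max_load_best_of_two(servers, requests, rng))
```

Best-of-two needs load information for only two servers per decision, which is why it is attractive when a full view of every server's load is too expensive to maintain.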

- Google solved Spectre in a very googly way. Protecting our Google Cloud customers from new vulnerabilities without impacting performance: With the performance characteristics uncertain, we started looking for a “moonshot”—a way to mitigate Variant 2 without hardware support. Finally, inspiration struck in the form of “Retpoline”—a novel software binary modification technique that prevents branch-target-injection, created by Paul Turner, a software engineer who is part of our Technical Infrastructure group. With Retpoline, we didn't need to disable speculative execution or other hardware features. Instead, this solution modifies programs to ensure that execution cannot be influenced by an attacker. By December, all Google Cloud Platform (GCP) services had protections in place for all known variants of the vulnerability. During the entire update process, nobody noticed: we received no customer support tickets related to the updates. This confirmed our internal assessment that in real-world use, the performance-optimized updates Google deployed do not have a material effect on workloads. With Retpoline, we could protect our infrastructure at compile-time, with no source-code modifications. Furthermore, testing this feature, particularly when combined with optimizations such as software branch prediction hints, demonstrated that this protection came with almost no performance loss.

- Azure has some work to do. Azure Functions vs AWS Lambda – Scaling Face Off: The results are, frankly, pretty damning when it comes to Azure Functions’ ability to scale dynamically and so let’s get into the data and then look at why...Add 2 Users per Second...We can see from this test that AWS matches the growth in user load almost exactly, it has no issue dealing with the growing demand and page requests time hover around the 100ms mark. Contrast this with Azure which always lags a little behind the demand, is spikier, and has a much higher response time hovering around the 700ms mark...Constant Load of 400 Concurrent Users...[AWS] quickly absorbs the load and hits a steady response time of around 80ms again in under a minute...Azure, on the other hand, is more complex. Average response time doesn’t fall under a second until the test has been running for 7 minutes and it’s only around then that the system is able to get near the throughput AWS put out in a minute...Same scenario as the last test but this time 1000 users...Again we can see a similar pattern with Azure slow to scale up to meet the demand while with AWS it is business as usual in under a minute...I don’t think there’s much point dancing around the issue: the above numbers are disappointing. Azure is slow to scale its HTTP triggered functions and once we get beyond the 100 concurrent users point the response times are never great and the experience is generally uneven

- PornHub—2017 by the numbers: 28.5 billion visitors...81 million people per day...24.7 billion searches...this translates to about 50,000 searches per minute and 800 searches per second. This is also incidentally the same number of hamburgers that McDonalds sells every second...users and content partners uploaded over 4 million videos...In total, 595,482 hours of video were uploaded, which is 68 YEARS of porn if watched continuously...3,732 Petabytes of data was streamed for 7,101 GB per minute and 118 GB per second...every 5 minutes Pornhub transmits more data than the entire contents of the New York Public Library’s 50 million books...people are more interested than ever before in ‘Porn for Women’, making this the top trending search throughout the year, increasing by over 1400%.

- Gil does his usual masterful job explaining Meltdown. This is only part of his long and detailed post. You'll want to read the whole thing. Gil Tene: I'm sure people here have heard enough about the Meltdown vulnerability and the rush of Linux fixes that have to do with addressing it. So I won't get into how the vulnerability works here. My one word reaction to the simple code snippets showing "remote sensing" of protected data values was "Wow". However, in examining both the various fixes rolled out in actual Linux distros over the past few days and doing some very informal surveying of environments I have access to, I discovered that the PCID processor feature, which used to be a virtual no-op, is now a performance AND security critical item. In the spirit of [mechanically] sympathizing with the many systems that now use PCID for a new purpose, as well as with the gap between the haves/have-nots in the PCID world, let me explain why: The PCID (Processor-Context ID) feature on x86-64 works much like the more generic ASID (Address Space IDs) available on many hardware platforms for decades. Simplistically, it allows TLB-cached page table contents to be tagged with a context identifier, and limits the lookups in the TLB to only match within the currently allowed context. TLB cached entries with a different PCID will be ignored. Without this feature, a context switch that would involve switching to a different page table (e.g. a process-to-process context switch) would require a flush of the entire TLB. With the feature, it only requires a change to the context id designated as "currently allowed". The benefit of this comes up when a back-and-forth set of context switches (e.g. from process 1 to process 2 and back to process 1) occurs "quickly enough" that TLB entries of the newly-switched-into context still reside in the TLB cache.
With modern x86 CPUs holding >1K entries in their L2 TLB caches (sometimes referred to as STLB), and each entry mapping 2MB or 4KB virtual regions to physical pages, the possibility of such reuse becomes interesting on heavily loaded systems that do a lot of process-to-process context switching. It's important to note that in virtually all modern operating systems, thread-to-thread context switches do not require TLB flushing, and remain within the same PCID because they do not require switching the page table. In addition, UNTIL NOW, most modern operating systems implemented user-to-kernel and kernel-to-user context switching without switching page tables, so no TLB flushing or switching of ASID/PCID was required in system calls or interrupts. The PCID feature has been a "cool, interesting, but not critical" feature to know about in most Linux/x86 environments for these main reasons: 1. Linux kernels did not make use of PCID until 4.14. So even tho it's been around and available in hardware, it didn't make any difference. 2. It's been around and supported in hardware "forever", since 2010 (apparently added with Westmere), so it's not new or exciting. 3. The benefits of PCID-based retention of TLB entries in the TLB cache, once supported by the OS, would only show up when process-to-process context switching is rapid enough to matter. While heavily loaded systems with lots of active processes (not threads) that rapidly switch would benefit, systems with a reasonable number of [potentially heavily] multi-threaded processes wouldn't really be affected or see a benefit. This all changed with Meltdown.
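
The tagging idea Gil describes can be made concrete with a toy model. The sketch below is purely illustrative: `TinyTLB` and `run` are hypothetical names, the cache has unlimited capacity and never evicts, and nothing here reflects how real hardware or the Linux kernel is implemented. It only counts how many lookups miss when two processes alternate, with and without context tags.

```python
class TinyTLB:
    """Toy TLB that caches (context tag, virtual page) -> physical page."""

    def __init__(self, use_pcid):
        self.use_pcid = use_pcid
        self.entries = {}
        self.current = 0   # the context tag currently allowed to match
        self.misses = 0

    def switch_to(self, pcid):
        """Process-to-process context switch, i.e. a new page table."""
        if self.use_pcid:
            self.current = pcid    # keep old entries, just change the allowed tag
        else:
            self.entries.clear()   # no tags available: flush the whole TLB
            self.current = 0

    def translate(self, virtual_page, page_table):
        key = (self.current, virtual_page)
        if key not in self.entries:
            self.misses += 1       # stands in for a page-table walk
            self.entries[key] = page_table[virtual_page]
        return self.entries[key]

def run(use_pcid, rounds=3):
    """Alternate between two processes that each touch four pages; return the miss count."""
    tables = {
        1: {vp: 100 + vp for vp in range(4)},
        2: {vp: 200 + vp for vp in range(4)},
    }
    tlb = TinyTLB(use_pcid)
    for _ in range(rounds):
        for pcid, table in tables.items():
            tlb.switch_to(pcid)
            for vp in table:
                tlb.translate(vp, table)
    return tlb.misses

if __name__ == "__main__":
    print("misses with PCID:", run(use_pcid=True))
    print("misses without PCID:", run(use_pcid=False))
```

In this model the tagged TLB only misses the first time each process touches a page, while the untagged one misses again after every switch. A real TLB has limited capacity, so the benefit depends on entries surviving until the process is switched back in, as the quote notes.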

- Here's a thorough EC2 network benchmark in the form of an EC2 Network Performance Cheat Sheet. Fastest was m5.24xlarge at 24.597 Gbit/s. Slowest was t1.micro at 0.094 Gbit/s.

- Finding out the MySQL performance regression due to kernel mitigation for Meltdown CPU vulnerability: In this case we can observe around a 4-7% regression in throughput if pcid is enabled. If pcid is disabled, the regression increases to 9-10%: bad, but not as bad as the “up to 20%” warned of by some. If you are in my situation, an upgrade to stretch would be worth it to get the pcid support.

- RedHat on Speculative Execution Exploit Performance Impacts - Describing the performance impacts to security patches for CVE-2017-5754 CVE-2017-5753 and CVE-2017-5715. They see a 1-20% performance reduction.

- How long can a BBC reporter stay hidden from CCTV cameras in China? 7 minutes. @TheJohnSudworth has been given rare access to put the world's largest surveillance system to the test. Chilling.

- RSM (real systems monitoring) is coming, and we will, just as with RUM, be recording and analyzing systems-level interactions—every one of them. Monitoring in a DevOps World: Long dead are the systems that age like fine wine. Today's systems are born in an agile world and remain fluid to accommodate changes in both the supplier and the consumer landscape...In this new world, you not only have fluid development processes that can introduce change on a continual basis, you also have adopted a microservices-systems architecture pattern...Many monitoring companies have been struggling to keep up with the nature of ephemeral architecture. Nodes come and go, and architectures dynamically resize from one minute to the next in an attempt to meet growing and shrinking demand. As nodes spin up and subsequently disappear, monitoring solutions must accommodate...The first thing to remember is that all the tools in the world will not help you detect bad behavior if you are looking at the wrong things. Be wary of tools that come with prescribed monitoring for complex assembled systems...When it comes to monitoring the "right thing," always look at your business from the top down...Second, embrace mathematics. In modern times, functionality is table stakes; it isn't enough that the system is working, it must be working well...Circonus has experienced an increase in data volume of almost seven orders of magnitude. Some people still monitor systems by taking a measurement from them every minute or so, but more and more people are actually observing what their systems are doing. This results in millions or tens of millions of measurements per second on standard servers...A third important characteristic of successful monitoring systems is data retention...At the pace we move, it is undeniable that your organization will develop intelligent questions regarding a failure that were missed immediately after past failures. 
Those new questions are crucial to the development of your organization, but they become absolutely precious if you can travel back in time and ask those questions about past incidents...The final piece of advice for a successful monitoring system is to be specific about what success looks like...The art of the SLI (service-level indicator), SLO (service-level objective), and SLA (service-level agreement) reigns here.

- SSITH, really? Dear DARPA, should your new program developing a framework for designing security against cyberintruders right into chips’ microarchitecture really be called System Security Integrated Through Hardware and Firmware? May the force be with you. Baking Hack Resistance Directly into Hardware.

- Geoff Huston's flawless one paragraph summary of BGP: BGP is an instance of a Bellman-Ford distance vector routing algorithm. This algorithm allows a collection of connected devices (BGP speakers) to each learn the relative topology of the connecting network. The basic approach of this algorithm is very simple: each BGP speaker tells all its other neighbours about what it has learned if the new learned information alters the local view of the network. This is a lot like a social rumour network, where every individual who hears a new rumour immediately informs all their friends. BGP works in a very similar fashion: each time a neighbour informs a BGP speaker about reachability to an IP address prefix, the BGP speaker compares this new reachability information against its stored knowledge that was gained from previous announcements from other neighbours. If this new information provides a better path to the prefix then the local speaker moves this prefix and associated next hop forwarding decision to the local forwarding table and informs all its immediate neighbours of a new path to a prefix, implicitly citing itself as the next hop. In addition, there is a withdrawal mechanism, where a BGP speaker determines that it no longer has a viable path to a given prefix, in which case it announces a "withdrawal" to all its neighbours. When a BGP speaker receives a withdrawal, it stores the withdrawal against this neighbour. If the withdrawn neighbour happened to be the currently preferred next hop for this prefix, then the BGP speaker will examine its per-neighbour data sets to determine which stored announcement represents the best path from those that are still extant. If it can find such an alternative path, it will copy this into its local forwarding table and announce this new preferred path to all its BGP neighbours. If there is no such alternative path, it will announce a withdrawal to its neighbors, indicating that it no longer can reach this prefix.
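
The announce, compare, and withdraw cycle in that summary can be sketched in a few lines. This is a toy model, not a BGP implementation: `Speaker` is a hypothetical class, "better path" is assumed to mean the shortest AS path with the lowest neighbour AS number as a tie-break, updates are delivered synchronously, and real BGP policy, timers, and attributes are ignored. Rejecting a path that already contains the speaker's own AS number is the standard loop-prevention rule.

```python
class Speaker:
    """Toy BGP-like speaker that tracks the best path per prefix."""

    def __init__(self, asn):
        self.asn = asn
        self.neighbours = []
        self.originated = set()
        self.rib_in = {}   # prefix -> {neighbour_asn: as_path} as heard from each neighbour
        self.best = {}     # prefix -> (neighbour_asn, as_path) currently preferred

    def connect(self, other):
        self.neighbours.append(other)
        other.neighbours.append(self)

    def originate(self, prefix):
        self.originated.add(prefix)
        self._tell_neighbours(prefix)

    def receive(self, prefix, from_asn, as_path):
        """Handle an announcement; as_path is None for a withdrawal."""
        stored = self.rib_in.setdefault(prefix, {})
        if as_path is None or self.asn in as_path:
            stored.pop(from_asn, None)      # withdrawal, or a path that loops through us
        else:
            stored[from_asn] = as_path      # remember what this neighbour offers
        self._reselect(prefix)

    def _reselect(self, prefix):
        if prefix in self.originated:
            return                          # our own prefix needs no learned path
        candidates = self.rib_in.get(prefix, {})
        new_best = min(candidates.items(),
                       key=lambda item: (len(item[1]), item[0]),
                       default=None)
        if new_best == self.best.get(prefix):
            return                          # local view unchanged, stay quiet
        if new_best is None:
            self.best.pop(prefix, None)
        else:
            self.best[prefix] = new_best
        self._tell_neighbours(prefix)

    def _tell_neighbours(self, prefix):
        if prefix in self.originated:
            path = [self.asn]
        elif prefix in self.best:
            path = [self.asn] + self.best[prefix][1]   # cite ourselves as the next hop
        else:
            path = None                                # nothing left: withdraw
        for neighbour in self.neighbours:
            neighbour.receive(prefix, self.asn, path)
```

For example, with three fully connected speakers where AS 1 originates `192.0.2.0/24`, AS 3 prefers the direct path through AS 1. If it then receives a withdrawal from AS 1, it falls back to the stored announcement from AS 2, which is the per-neighbour lookup the paragraph describes.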

- People being badly burned by superheating water in a microwave is a thing. Here's a demonstration on Mythbusters. Maybe some microwave oven makers can use some of the smart learnin' to recognize when a cup of liquid has been put in and too long a timer period entered?

- Stochastic Computing in a Single Device: Researchers at the University of Minnesota say they’ve made a big leap in a strange but growing field of computing. Called stochastic computing, the method uses random bits to calculate via simpler circuits, at lower power, and with greater tolerance for errors...Their device, similar to an MRAM memory cell, can perform the stochastic computing versions of both addition and multiplication on four logical inputs...Instead of suppressing this random aspect of the magnetic tunnel junction’s nature, Wang and Lv put it to use. They designed a cell that produces random strings of bits that carry and compute information...They demonstrated that the tunnel junction’s randomness could be tuned by four independent values: the amplitude and width of a pulse of current fed into the junction, a bias current running through the junction, and a biasing magnetic field. Stochastic data can easily be converted to any of these with some simple circuitry. When Wang and Lv did that, they found that the device summed whatever values were input as pulse amplitude, bias current, and the bias magnetic field. The result of that triple summation was then multiplied by the value represented by the pulse width to produce an answer in the stochastic computing form—a string of random bits with a particular probability of ones occurring.
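
For readers new to the idea, the usual textbook form of stochastic computing can be simulated in software. The sketch below is not a model of the Minnesota device: it only shows the standard gate-level operations, where a value between 0 and 1 is carried as the probability of a 1 in a random bit string, an AND gate multiplies two independent streams, and a multiplexer with a fair random select produces a scaled sum. The function names are made up for illustration.

```python
import random

def encode(p, n, rng):
    """Encode probability p as n random bits, each 1 with probability p."""
    return [1 if rng.random() < p else 0 for _ in range(n)]

def decode(bits):
    """Recover the value as the fraction of ones in the stream."""
    return sum(bits) / len(bits)

def multiply(xs, ys):
    """Bitwise AND of two independent streams: P(1) = px * py."""
    return [x & y for x, y in zip(xs, ys)]

def scaled_add(xs, ys, rng):
    """Multiplexer with a fair random select bit: P(1) = (px + py) / 2."""
    return [x if rng.random() < 0.5 else y for x, y in zip(xs, ys)]

if __name__ == "__main__":
    rng = random.Random(0)
    n = 100_000
    a = encode(0.5, n, rng)
    b = encode(0.4, n, rng)
    print("0.5 * 0.4 is roughly", decode(multiply(a, b)))
    print("(0.5 + 0.4) / 2 is roughly", decode(scaled_add(a, b, rng)))
```

The results are approximate by construction, and accuracy improves with longer streams. That trade of precision for very simple circuitry is the appeal the article describes.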

- How might Tesla's car security work? Fascinating look at how a hardware-based Root of Trust works and why it’s necessary. Protecting Automotive Systems With A Root Of Trust.

- Summary of 22 product-engineering patterns that college didn’t teach me: Know your short/mid/long-term solutions; Doing the right thing should be easy; Add monitoring & alerting early on; Limiting the stack / Leveraging the existing stack; Use the right tools for the job; Time-boxing; Separation of responsibilities; Pain of failures ought to be felt by those who can fix it (and those who caused it); Cleanup should be easy; Solutions via Engineering or via People; Write Testable Code; Develop for debug-ability (or self-teaching failures); Understand when some extra work can become a slippery slope; No worse than before; All or nothing; Use time correlation to notice changes; Going from alert to failure should be fast; Bring in all the right people; Bad alerts are evil alerts.

- What does the code to exploit Meltdown look like? Here you go. Programmer's Guide to Meltdown.

- The limitations of matchmaking without server logic: [The solution] we came up with was to add a league system to Awesomenauts and limit matchmaking based on that. The idea is that we divide the leaderboards into 9 leagues, based on player skill. These leagues weren't just intended for matchmaking, but also for the player experience: it's cooler to be in league 2 than to be in place 4735, even though that might actually be the same thing...While leagues were an improvement, this approach didn't fix the big problem that there's no logic running on the servers. Each client decides for themselves based on incomplete information. This is very limiting to how clever your matchmaking can be. There are lots of situations in which this algorithm will fail to produce optimal match-ups...So we started developing our own matchmaking system (called Galactron) with our own servers.
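
The league idea can be sketched in a few lines. The article does not say how the nine leagues are sized or how far apart two matched players may be, so the equal-size split, the `max_gap` rule, and both function names below are assumptions rather than the Awesomenauts implementation.

```python
def league_for_rank(rank, total_players, num_leagues=9):
    """Map a 1-based leaderboard rank to a league from 1 (best) to num_leagues.

    Assumes leagues of equal size, which the article does not confirm.
    """
    if not 1 <= rank <= total_players:
        raise ValueError("rank must be between 1 and total_players")
    return (rank - 1) * num_leagues // total_players + 1


def can_match(league_a, league_b, max_gap=1):
    """Hypothetical client-side rule: only match players in nearby leagues."""
    return abs(league_a - league_b) <= max_gap


total = 9000
print(league_for_rank(1, total))     # top of the leaderboard
print(league_for_rank(4500, total))  # middle of the leaderboard
print(can_match(league_for_rank(1, total), league_for_rank(9000, total)))
```

A rule like this can run on each client independently, which is also its weakness: no client sees the whole pool of waiting players, so it cannot pick the best overall pairing.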

- Overall, we’ve been impressed with Envoy and will continue to explore its features as we build out and expand Bugsnag. Using Envoy to Load Balance gRPC Traffic: Behind the scenes, Bugsnag has a pipeline of microservices responsible for processing the errors we receive from our customers that are later displayed on the dashboard. This pipeline currently handles hundreds of millions of events per day...Bugsnag’s microservices are deployed as Docker containers in the cloud using Google’s excellent Kubernetes orchestration tool. Kubernetes has built-in load balancing via its kube-proxy which works perfectly with HTTP/1.1 traffic, but things get interesting when you throw HTTP/2 into the mix...gRPC uses the performance-boosted HTTP/2 protocol. One of the many ways HTTP/2 achieves lower latency than its predecessor is by leveraging a single long-lived TCP connection and multiplexing requests/responses across it. This causes a problem for layer 4 (L4) load balancers as they operate at too low a level to be able to make routing decisions based on the type of traffic received...One of the options we explored was using gRPC's client load balancer which is baked into the gRPC client libraries. That way each client microservice could perform its own load balancing. However, the resulting clients were ultimately brittle and required a heavy amount of custom code to provide any form of resilience, metrification, or logging...We needed a layer 7 (L7) load balancer because they operate at the application layer and can inspect traffic in order to make routing decisions...our ultimate decision came down to the footprint of the proxy. For this, there was one clear winner. Envoy is tiny. Written in C++11, it has none of the enterprise weight that comes with Java-based Linkerd.
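
A toy simulation shows why a single long-lived HTTP/2 connection defeats connection-level balancing. This is not how kube-proxy or Envoy are implemented: the backend names, the round-robin policy, and the request counts are all assumptions chosen to make the effect visible.

```python
from collections import Counter
from itertools import cycle

BACKENDS = ["pod-a", "pod-b", "pod-c"]  # hypothetical backend names


def l4_balance(requests_per_client):
    """Connection-level balancing: a backend is chosen once per connection,
    so every request a client multiplexes over that connection lands there."""
    counts = Counter({b: 0 for b in BACKENDS})
    picker = cycle(BACKENDS)
    for n in requests_per_client:
        backend = next(picker)  # decided when the connection opens
        counts[backend] += n
    return counts


def l7_balance(requests_per_client):
    """Request-level balancing: the proxy sees individual requests inside
    the connection and picks a backend for each one."""
    counts = Counter({b: 0 for b in BACKENDS})
    picker = cycle(BACKENDS)
    for n in requests_per_client:
        for _ in range(n):
            counts[next(picker)] += 1
    return counts


# Three gRPC clients, each with one connection; one client is much busier.
load = [900, 50, 50]
l4 = l4_balance(load)
l7 = l7_balance(load)
print("L4:", dict(l4))
print("L7:", dict(l7))
```

With connection-level balancing the busy client's backend carries 900 of the 1,000 requests, while request-level balancing spreads them almost evenly.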

- Lots of good advice. Elasticsearch Performance Tuning Practice at eBay: Split your data into multiple indices if your query has a filter field and its value is enumerable; Use routing if your query has a filter field and its value is not enumerable; Organize data by date if your query has a date range filter; Set mapping explicitly; Avoid imbalanced sharding if documents are indexed with user-defined ID or routing; Make shards distributed evenly across nodes. For indexing performance: Use bulk requests; Use multiple threads/workers to send requests; Increase the refresh interval; Reduce replica number; Use auto generated IDs if possible. For search performance: Use filter context instead of query context if possible; Increase refresh interval; Increase replica number; Try different shard numbers; Node query cache; Retrieve only necessary fields; Avoid searching stop words; Sort by _doc if you don’t care about the order in which documents are returned; Avoid using a script query to calculate hits in flight. Store the calculated fields when indexing; Avoid wildcard queries.
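
A few of these tips map directly onto request bodies. The sketch below builds them as plain Python data without calling any client library. The index name, field names, and both helper functions are made up for illustration, and older Elasticsearch versions may also expect a document type in the bulk action line.

```python
import json


def bulk_body(index, docs):
    """Build a newline-delimited Bulk API body.

    Leaving "_id" out of each action line lets Elasticsearch auto-generate
    IDs, which covers the "use bulk requests" and "auto generated IDs" tips.
    """
    lines = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index}}))
        lines.append(json.dumps(doc))
    return "\n".join(lines) + "\n"  # the body must end with a newline


def filtered_search(field, value, fields):
    """Build a search body that uses filter context, returns only the
    requested fields, and sorts by _doc."""
    return {
        "_source": fields,
        "query": {"bool": {"filter": [{"term": {field: value}}]}},
        "sort": ["_doc"],
    }


# Hypothetical index and documents, purely for illustration.
body = bulk_body("events-2018.01.12", [
    {"site": "US", "status": "ok"},
    {"site": "DE", "status": "error"},
])
query = filtered_search("site", "US", ["status"])

print(body)
print(json.dumps(query, indent=2))
```

Filter clauses skip relevance scoring and their results can be cached, which is why they are suggested over query context when scores are not needed.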

- Which is the fastest web framework? router_cr (crystal); raze (crystal); nickel (rust); japronto (python); iron (rust); fasthttprouter (go); kemal (crystal). Rails is last at 25. My first question was what the heck is crystal? Crystal: fast as C, slick as Ruby. Crystal’s syntax is heavily inspired by Ruby’s, so it feels natural to read and easy to write, and has the added benefit of a lower learning curve for experienced Ruby devs. Crystal is statically type checked, so any type errors will be caught early by the compiler rather than fail on runtime. Moreover, and to keep the language clean, Crystal has built-in type inference, so most type annotations are unneeded.

- Robohub Podcast with a fascinating episode on building an Open Source Prosthetic Leg: the goal is to provide an inexpensive and capable platform for researchers to use so that they can work on prostheses without developing their own hardware, which is both time-consuming and expensive.

- Services by Lifecycle: Avoid the Entity Service; Focus on Behavior Instead of Data; Tell, Don’t Ask; Divide Services by Lifecycle in a Business Process.

- nikoladimitroff/Game-Engine-Architecture: A repo containing the learning materials for the course 'Game Engine Architecture with UE4' taught at University of Sofia.

- ray-project/ray (article): a flexible, high-performance distributed execution framework. Ray comes with libraries that accelerate deep learning and reinforcement learning development.

- Retpoline: a software construct for preventing branch-target-injection: “Retpoline” sequences are a software construct which allow indirect branches to be isolated from speculative execution. This may be applied to protect sensitive binaries (such as operating system or hypervisor implementations) from branch target injection attacks against their indirect branches.

- How to Lift-and-Shift a Line of Business Application onto Google Cloud Platform: Phase one – Migrate the application to the cloud using Google’s Infrastructure-as-a-Service (IaaS) offering, Google Compute Engine; Phase two – Leverage the cloud for high availability (HA); Phase three – Leverage the cloud for disaster recovery (DR).

- 5G Mobile: Disrupting the Automotive Sector: One does not need to postulate that 5G will be as important as railroads in the 19th century or indeed ICTs in the 1990s to appreciate that it will have a very sizable economic impact—even a fraction of the impact of these past GPTs would still be enough to make 5G a significant enabler of growth in the coming two decades. The automotive sector provides a good showcase of the role and effects of 5G, particularly the manner in which it can serve as a platform around which other innovation can occur.