Stuff The Internet Says On Scalability For January 26th, 2018

Hey, it's HighScalability time:

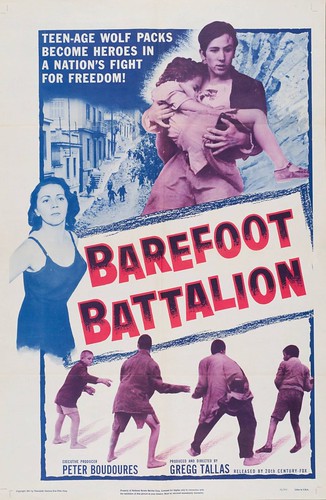

10,000 marvelous classic movie posters documenting a period in US history even Black Mirror could not imagine.

If you like this sort of Stuff then please support me on Patreon. And I'd appreciate your recommending my new book—Explain the Cloud Like I'm 10—to anyone who needs to understand the cloud (who doesn't?). I think they'll like it. Now with twice the brightness and new chapters on Netflix and Cloud Computing.

- $172 billion: amount hackers stole in 2017; $100B: Netflix's value; 400G: ethernet; 2005: year a NASA satellite went silent before its resurrection; 800: kilograms of oil equivalent needed per capita per year;

- Quotable Quotes:

  - Edgar D. Mitchell: You develop an instant global consciousness, a people orientation, an intense dissatisfaction with the state of the world, and a compulsion to do something about it. From out there on the moon, international politics look so petty. You want to grab a politician by the scruff of the neck and drag him a quarter of a million miles out and say, “Look at that, you son of a bitch.”

  - Laura Gilmore: It wasn’t by accident that I ended up working on robots in a cheese factory.

  - @BenedictEvans: "It is somewhat ridiculous to suppose that the invention of a motor car can render horses less necessary to man" - Saddlery and Harness magazine, 1895

  - A. Maurits van der Veen: In the end, it was the very strength of the social network connecting bulb traders, new and old alike, that made the bubble possible. All this implies that the tulip mania deserves to retain its position as a classic financial bubble. However, it also suggests that the lessons to be drawn from the bubble are less about a spectacular rise in prices followed by a collapse than about the social structures within which the market is embedded.

  - Pablo Meier: My not-useful takeaway from these reckons is that what you need is modularity, not microservices. If you replaced “each team publishes a library with a stable API to something like Artifactory” instead of “each team runs and monitors n services,” you get many of the same team structure benefits of microservices with a lot less of the technical hassle.

  - David Vye: The ability to spin a skater through 360 degrees in high definition requires that the system be able to deliver 400 megabytes per second.

  - @garybernhardt: My 133 MHz computer in the late 90s could run chat without a performance impact on the rest of the system. Greetings from 1998, which is the future where computers are fast!

  - @typesfast: I’m in Seattle and there is currently a line to shop at the grocery store whose entire premise is that you won’t have to wait in line.

  - @bascule: It's 2032: the Internet now runs at dialup speeds because the rest of the bandwidth is used for blockchains. We've gone back to candles because electricity that was used for lighting is now used for mining. 2D gaming is back in because GPUs are too expensive.

  - Mike Orcutt: The researchers found that the top four Bitcoin-mining operations had more than 53 percent of the system’s average mining capacity, measured on a weekly basis. Mining for Ethereum was even more consolidated: three miners accounted for 61 percent of the system’s average weekly capacity.

  - Lily Chen: Among women who join the tech industry, 56 percent leave by mid-career, which is double the attrition rate for men.

  - @GossiTheDog: Just had a threat briefing from a leading cyber security company on Skyfall and Solace, two new CPU vulnerabilities with a website and logos. Didn’t have heart to tell them it’s a hoax.

  - @johnrobb: “The new generation doesn’t have time to watch television anymore, even I don’t buy newspapers. The News Feed, I can see quickly.”

  - bryan4tw: Nah, you're doing it wrong. We spin up 3x t2.large instances, and an elb. We have a script that installs apache and mysql on each server, then copies the files, and builds the mysql database from a backup on the dev's machine. edit Make sure to manually assign the right elastic IPs to the new instances too, because the script deploys to specific IP addresses! /edit Every change requires an entire rebuild of the environment, sometimes multiple changes per hour. It doesn't matter that the site is completely static once deployed, we need mysql on the instance so it loads faster and gets a higher page rank. There are 7 or 8 sites hosted like this. It costs us like $2000/month. It's enterprise grade; you can tell by the price.

  - Maciej Włodarczak: Tether printed MORE USD than US government in 2018

  - Stripe: we’ve seen the desire from our customers to accept Bitcoin decrease. And of the businesses that are accepting Bitcoin on Stripe, we’ve seen their revenues from Bitcoin decline substantially. Empirically, there are fewer and fewer use cases for which accepting or paying with Bitcoin makes sense.

  - Ben Thompson: This is clearly the goal with Amazon Go: to build out such a complex system for a single store would be foolhardy; Amazon expects the technology to be used broadly, unlocking additional revenue opportunities without any corresponding rise in fixed costs (of developing the software, that is; each new store will still require traditional fixed costs like shelving and refrigeration). That, though, is why this idea is so uniquely Amazonian.

  - Linus: Somebody is pushing complete garbage for unclear reasons.

  - Matt Hurd: Back in our real world: UAV-based comms, hollow core fibres, and HF-based HFT low-latency signaling may be happening whether you like it or not. Learn from Getco and don’t buy into the “New New Thing” that is just another Spread Networks. Be aware and beware.

  - Elizabeth Landau: In other words, as long as you’re comparing numbers with the same “label” or unit of magnitude (“million” to “million”), you’re quite likely to do fine in estimating relative magnitude. But things fall apart for many in comparing big numbers with different labels: “million” to “billion.”

  - @VibrantBiffle: 2010: Bitcoin will free us from the banking system. 2016: Central Banks should add bitcoins as a reserve currency. 2017: Tethers, futures and ETFs are good for bitcoin. 2018: It was for the banks all along.

  - @RealSexyCyborg: If food delivery cost $0.40USD with no "tip" lots of Western countries might be at a similar place. Cheap migrant labor is the backbone of our e-commerce boom. The way they treat those boys is unforgivable.

  - tda: I had the same experience. I manually partitioned a pg10 db and it was quite a lot of work to make sure I had all the partitions I need. Then I switched to timescale and it was just a breeze. I could partition a dataset both in time and in foreign_key with a single command, and performance (especially huge inserts) jumped up. Or to be more accurate, the performance degradation of my table which made each subsequent insert a bit slower than the previous was completely gone. All in all, very happy with timescaledb.

  - _pdp_: Lambda and dynamo are currently 600% cheaper than running our own instances. For us, we are making substantial savings. In fact, the biggest cost is not Lambda itself but dynamo because we had to create quite a few indexes. Frankly, these things can be avoided with better planning. That being said, it is still way cheaper in our case.

  - Kode Vicious: My personal favorite form of this stupidity is when programmers build systems in user space, using shared memory, and then reproduce every possible contortion of the locking problem seen in kernel programming. Coordination is coordination, whether you do it in the kernel, in user space, or with pigeons passing messages, though the first two places have fewer droppings to clean up.

  - Nick Statt: Netflix plans to spend $8 billion to make its library 50 percent original by 2018

  - shitloadofbooks: only the extremely good developers seem to comprehend that they are almost always writing what will be considered the "technical debt" of 5 years from now when paradigms shift again.

  - @copyconstruct: when I was in grad school, the distributed systems course entailed reading and distilling white papers and prototyping systems. These days, "Distributed Systems" increasingly means "building on top of Kubernetes" or running containers. Not a value judgement, just an observation

  - Kyle Orland: Today, those and many other relatively recent predictions of doom for the console market look downright silly. The industry analysts at NPD announced last night that the US video game market grew 11 percent in 2017 to $3.3 billion. The reason? "Video game hardware [meaning consoles] was the primary driver of overall growth," as hardware was up 27 percent for the year, to $1.27 billion.

  - occz: I was really interested in ECS, it seemed like a good way to get some containers going without having to worry and later when load increases just get some stupidly simple auto scaling - so I decided to use ECS for one of our not particularly serious internal projects. It worked fine until I wanted to have more than one container and have these containers communicate - ECS has no support for Docker networks, which makes it essentially useless to me. I guess I have to start using Kubernetes like all the other cool kids.

  - keithwinstein: The basic theme is that (a) purely functional implementations of things like video codecs can allow finer-granularity parallelism than previously realized [e.g. smaller than the interval between key frames], and (b) lambda can be used to invoke thousands of threads very quickly, running an arbitrary statically linked Linux binary, to do big jobs interactively...For lambda, it's fun to imagine that every compute-intensive job you might run (video filters or search, machine learning, data visualization, ray tracing) could show the user a button that says, "Do it locally [1 hour]" and a button next to it that says, "Do it in 10,000 cores on lambda, one second each, and you'll pay 25 cents and it will take one second."

  - Ted Nelson: We thought computing would be artisanal. We did not imagine great monopolies.

  - cs702: Say the cost in time/money/resources of coordinating between any two individuals is on average x dollars. Let's call this figure pair coordination cost. The pair coordination cost between 3 individuals, ignoring overhead, is 3x dollars, as there are three possible pairs that need to coordinate, i.e., there are three connections in a network with three nodes. The pair coordination cost between 4 individuals, ignoring overhead, is 6x dollars. At 5 people, the pair coordination cost is 10x dollars. At n people, the pair coordination cost is (n(n-1)/2)x dollars, which is asymptotically proportional to n²x as n grows. When coordination costs are high, as with software development, it's not surprising that small teams outperform large teams. Large software development teams generally cannot accomplish much -- unless they break into smaller teams with smaller, well-separated responsibilities. This applies to other high-coordination-cost endeavors, such as building a successful company from scratch.

  - Animats: This is another way to do IoT wrong - collecting data but just displaying it. The idea is to use CO₂ levels to control your HVAC system. The HVAC system should be able to draw fresh air from outside or recirculate air from inside, depending on CO₂ level. This is a standard option on modern HVAC systems, and there are standard sensors for it. Better systems sense temperature, CO₂, CO, humidity, and smoke. This is a huge win for rooms where the people load varies widely, such as hotel function rooms and classrooms. Such control systems save money, because, when nobody is using the room, they detect that CO₂ is low and cut down the ventilation rate. When the room fills up, the CO₂ level goes up, the fans speed up and the outside air intakes open until the CO₂ level comes down. There are smart control units which manage heat, fans, vents, and air conditioning compressors. The hardware pays for itself in power consumption.

  - DannyBee: you should realize Google is pretty conservative internally in the main repository because of the amount of code/tooling/etc. While we let people experiment in the main repository, they are controlled experiments. Even if we decided, tomorrow, to move the main repositories away from our current set of programming languages, that would be a fairly long term path, not a short one. Outside of the main repository, or on google open source projects, or etc, people can generally do what they want, they just don't get the benefits of the above if they aren't using a language we already support very well.

  - _pdp_: We decided to base our new platform on serverless - previously docker. Couldn't be any happier. Everything runs as expected and I don't need to worry about a ton of things. People talk about vendor lock-in and all that. If you don't write things from scratch there is a vendor lock-in everywhere. Open source is also vendor lock-in. For example, we used to use xulrunner from Mozilla for some time and look where it is right now - nowhere. I was also concerned initially with being locked into a particular platform but then again, what I realised is that if your project is extremely successful you can always spend big to migrate when you have the funds to do it. As a startup, that is not the case. You start with nothing. So platforms like AWS are absolutely amazing because not too long ago you still had to buy your own datacenters.

  - Nacer Chahat: After a couple of years of dedicated effort, the antenna team at JPL finally solved the problem, and in two different ways. In one project, called Radar in a CubeSat (or RainCube), we designed a deployable antenna that will fan out like an umbrella once the satellite reaches orbit. In another project, called Mars Cube One (MarCO) and due for launch in May, we created a flat antenna that unfolds from the surface of the CubeSat. Our success has led NASA to start considering these tiny platforms for missions that were once thought possible only with a large, conventional satellite.

  - Alison Preston: “We actually build concepts, and we link things together that have common threads between them.” The cost of this flexibility, however, could be the formation of false or faulty memories: Silva’s mice became scared of a harmless cage because their memory of it was formed so close in time to a fearful memory of a different cage. Extrapolating single experiences into abstract concepts and new ideas risks losing some detail of the individual memories. And as people retrieve individual memories, these might become linked or muddled. “Memory is not a stable phenomenon.”

  - Obi_Juan_Kenobi: Generally speaking, I caution against computer analogies for biology. Biology is messy! It rarely works how you want it to. Even relatively common and basic techniques in molecular biology require a great deal of troubleshooting, and even in the best labs with loads of experience, sometimes things simply refuse to work how you'd like them to. You don't hear too much about synthetic biology anymore (it's still going, just less hype) because the premise was incredibly naive; you were never going to get bits and pieces of DNA to behave in a predictable manner. Just about everyone with wet lab experience suspected this.

  - beelseboob: No it's not - you're most likely thinking of current satellite internet systems which involve a few sats in geosynchronous orbit. That means the signal needs to travel 36,000km there and back, adding 250ms of latency just for getting up there and down again. What Elon Musk plans to do is build a constellation of lots of sats in LEO. LEO is only about 120km up, meaning it only takes 0.8ms to get up to the sat, and back down again. Importantly though, once at that altitude, signals travel between sats at the speed of light in a vacuum, rather than about 2/3 of it. That means you only need a transmission distance of around 800km, and your in-space signal will be lower latency than your on-the-ground one.

  - A. Maurits van der Veen: A number of factors served as catalysts for speculation. First, Dutch commerce in general was flourishing during this period, creating new wealth not only at the elite levels but also among an increasingly prominent middle class. Second, the large profits generated by the East India trade, as well as speculation in the shares of the Dutch East India Company (VOC), generated not only high incomes, but also a level of comfort with risky futures contracts. Third, no guild controlled the tulip trade, so barriers to entry were comparatively low (Schama 1988). Fourth, the free coinage policy of the Bank of Amsterdam may have attracted additional capital to Holland. One final contributing factor deserves separate mention: the plague which ravaged Holland in 1635-1636. Amsterdam lost about 20% of its inhabitants over two years. Eliminating the need to have one’s own bulb garden or even to be a connoisseur of tulips, combined with the lure of quick and easy profits, drew new entrants into the tulip market in growing numbers as early as the fall of 1635.
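
cs702's pair-coordination arithmetic in the quotes above is easy to check. A minimal sketch, assuming a constant cost `x` per pair and ignoring every other overhead, as the quote does; the function name is illustrative:

```python
def pair_coordination_cost(n: int, x: float = 1.0) -> float:
    """Total cost when every pair among n people coordinates at cost x per pair.

    A team of n has n * (n - 1) / 2 distinct pairs (the edges of a complete graph).
    """
    if n < 0:
        raise ValueError("team size must be non-negative")
    pairs = n * (n - 1) // 2
    return pairs * x


for size in (3, 4, 5, 10, 20):
    # Doubling the team from 10 to 20 roughly quadruples the pair count.
    print(size, pair_coordination_cost(size))
```

The quadratic term is the whole argument: splitting 20 people into four teams of 5 with one liaison link per team pair leaves far fewer links than 190.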


- Handling tens of thousands of requests / sec on t2.nano with Kestrel: So we get 25,000 requests a second and some pretty awesome latencies under very high load on a 1 CPU / 512 MB machine. For that matter, here are the top results from midway through on the t2.nano machine...To be fair, a t2.nano machine is meant for burst traffic and is not likely to be able to sustain such load over time, but even when throttled by an order of magnitude, it will still be faster than the Go implementation.

- Steve Yegge is leaving Google because he thinks Google has lost its way. Why I left Google to join Grab. Google has become risk-averse, political, arrogant, and competitor-focused instead of customer-focused. You know, like most every other big company. And given the hedonic treadmill nature of humans, 13 years is simply a long time to stay at one company. Even free brownies get old after a while. Everyone will have an opinion, but as far as innovation goes, I think there are two Googles: Consumer Google and Developer Google. Consumer Google has always been...well...suspect. Developer Google is still kicking it though. It's probably not a coincidence that Developer Google is not competitor-focused; it's driven by innovation.

- Short and sweet. Adrian Cockcroft on the Evolution of Business Logic from Monoliths, to Microservices, to Functions. Microservices are so 5 years ago.

- Have we already reached peak internet latency? Network latencies and speed of light: In this short post I’m going to attempt to convince you that current network (Internet) latencies are here to stay, because they are already within a fairly small factor of what is possible under known physics, and getting much closer to that limit – say, another 2x gain – requires heroics of civil and network engineering as well as massive capital expenditures that are very unlikely to be used for general internet links in the foreseeable future. Also, High-Speed Trading: Lines, Radios, and Cables – Oh My.
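
The speed-of-light argument is back-of-the-envelope arithmetic. A rough sketch, assuming straight-line paths and ignoring routing detours, queuing, and processing time; the 36,000 km geostationary altitude, the 120 km LEO altitude, and the "about 2/3 of c" fiber speed are the figures from beelseboob's comment in the quotes above, not independent measurements:

```python
# Back-of-the-envelope propagation delay. Assumptions: straight-line paths,
# no routing detours, queuing, or processing time.
C_VACUUM_KM_PER_S = 299_792.458  # speed of light in vacuum
FIBER_FRACTION = 2 / 3           # approximate speed of light in fiber, relative to c


def propagation_ms(distance_km: float, fraction_of_c: float = 1.0) -> float:
    """Time in milliseconds for a signal to cover distance_km."""
    return distance_km / (C_VACUUM_KM_PER_S * fraction_of_c) * 1000


geo_hop = propagation_ms(2 * 36_000)  # ground -> geostationary satellite -> ground
leo_hop = propagation_ms(2 * 120)     # the LEO altitude used in the quote
fiber_1000 = propagation_ms(1_000, FIBER_FRACTION)
vacuum_1000 = propagation_ms(1_000)

print(f"GEO up and down: {geo_hop:.1f} ms")
print(f"LEO up and down: {leo_hop:.2f} ms")
print(f"1,000 km: {fiber_1000:.2f} ms in fiber vs {vacuum_1000:.2f} ms in vacuum")
```

The fiber-versus-vacuum ratio is a constant 1.5x, which is why a vacuum path can beat a shorter fiber path once the extra up-and-down hop is amortized over enough distance.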

- My God, It's Full of Tools! Stackshare has compiled their list of the Top 50 Developer Tools of 2017. Take a look around; you'll certainly see new tools you didn't know about. New Tool of the Year: Lottie. I'd never heard of it, but I don't often add high quality animation to Android, iOS, or React Native apps. Application & Data Tool of the Year: React. You'd have to be dead to not have heard about React. Utility Tool of the Year: Postman. DevOps Tool of the Year: GitHub. Business Tool of the Year: Slack.

- Thinking through the push notification provider selection process. Do you want a generalist or specialist? Push Notifications: Firebase Vs. OneSignal: OneSignal’s immediate advantage? It’s a push notification service. Just what we needed: nothing more, nothing less...OneSignal does it better. With its filters and queries (session_count, amount_spent, tags, language, location, email), you can offload a fair amount of computing from your backend. When push (notifications) comes to shove, we at Mobile Jazz seek the best tool for the job over a one-size-fits-all solution. OneSignal has a superior user interface dedicated to managing and adjusting notifications via the dashboard. Its SDK is oriented around push notifications, with more coherent labelling conventions than Firebase.

- The high-bandwidth future is getting a preview at the Olympics. It looks awesome. 5G Makes Its Public Debut At The Winter Games. What can you do with trillions of dollars of investment in 5G? Immersion, that seems to be the theme; Track the progress of competitors with skiers wearing a GPS receiver to pass their location live to the KT servers; drones equipped with video cameras; Sync View – Transmits super-high-quality video in real time using an ultra-small camera, position sensor, and mobile communication module, enabling viewers to watch the games from the perspective of the players; Interactive Time Slice – All 100 cameras installed at different angles will shoot what’s happening, letting viewers interactively choose the screen and angle they want to watch; 360° VR Live – Events will be captured and streamed by 360-degree cameras and using head-mounted display (HMD) equipment that offers virtual reality (VR) live views of virtually every place in the arena; Omni Point View – Presents the event in virtual 3D space, enabling spectators to enjoy 3D virtual view from the perspectives of the player of their choice or at specific points they want on mobile devices in real time.

- Good tutorial. Running Dedicated Game Servers in Kubernetes Engine. Also, How we built a serverless digital archive with machine learning APIs, Cloud Pub/Sub and Cloud Functions.

- How do you run canary deploys in practice? cortesoft: I run the DevOps team for a large CDN, and am the lead developer of our canary system. We deploy a wide variety of software to a number of different systems, and can have anywhere from 20-50 simultaneous canaries deploying code to 30,000+ servers. With that many servers and canaries going, it can be easy to run into conflicts when you want to deploy a new version of a piece of software while another canary is still deploying the previous version. Canaries often need to go slowly enough to get full day-night cycles in a number of global regions (traffic patterns are not the same everywhere). The way we get around this is through the heavy use of feature flags, and the idea of having all feature flags enabled by default is the opposite of our strategy. My old boss used a 'runway' analogy for canaries; you have to wait for the previous release to finish 'taking off' (be deployed) before you can release the next version. So we need to deploy quickly so you don't 'hog the runway.' So it seems we have two disparate goals; we want to let canaries release slowly to see full traffic cycles, but need to release quickly to get off the runway. We solve this problem by having the default behavior for any new feature be disabled when releasing the code. This allows us to deploy quickly; once you verify in your canary that your new code is not being executed (i.e. your feature flag is working), you can deploy quite quickly. Your code should be a no-op with the feature flag disabled. Once your code is released, THEN you can start the canary for enabling your feature flag. Feature flags (and in fact, all configuration choices) are first class citizens in our canary system; you can canary anything you want, and you get all the support of a slow controlled release, with metrics and A-B comparisons.

  Since the slower canary is simply enabling a feature, other people can keep releasing new versions of code without interfering with your feature flag canary. Now, you can have confounding issues with multiple people making canaries on the same systems, but our tooling allows you to disambiguate which canary is causing issues we find.
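
The "ship dark, then canary the flag" approach can be sketched in a few lines. This is a hypothetical illustration, not cortesoft's tooling: `FeatureFlags`, the flag name, and the `edge-N` server IDs are made up, and hashing each server into one of 100 stable buckets is just one common way to ramp a flag deterministically.

```python
import hashlib


class FeatureFlags:
    """Hypothetical flag store: every flag is off unless explicitly rolled out."""

    def __init__(self) -> None:
        self._rollout_percent: dict[str, int] = {}

    def set_rollout(self, flag: str, percent: int) -> None:
        if not 0 <= percent <= 100:
            raise ValueError("percent must be between 0 and 100")
        self._rollout_percent[flag] = percent

    def is_enabled(self, flag: str, server_id: str) -> bool:
        # Unknown flags default to 0%, so newly deployed code stays a no-op.
        percent = self._rollout_percent.get(flag, 0)
        # Hash flag + server so each server lands in a stable bucket 0..99.
        digest = hashlib.sha256(f"{flag}:{server_id}".encode()).digest()
        bucket = int.from_bytes(digest[:8], "big") % 100
        return bucket < percent


flags = FeatureFlags()
servers = [f"edge-{i}" for i in range(1000)]


def enabled_count() -> int:
    return sum(flags.is_enabled("new_cache_policy", s) for s in servers)


print(enabled_count())  # code is deployed everywhere, feature still dark
flags.set_rollout("new_cache_policy", 5)
print(enabled_count())  # roughly 5% of servers
flags.set_rollout("new_cache_policy", 100)
print(enabled_count())
```

Because a server's bucket never changes, raising the percentage only adds servers, so the flag canary widens monotonically while unrelated code releases keep using the runway.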

- Using Go on Lambda can save you a lot of money. AWS Lambda Go vs. Node.js performance benchmark: updated. Test 1: There is almost zero difference between Node.js and Go in this compute intensive test. Test 2: This is a much clearer result than the former test, it is only in the 99th percentile that both tests are somewhat equal but still far apart. All values below this threshold are all in favour of Go. This is where users that have high volume AWS functions could really save money when switching to Go, as their bill could effectively be cut by up to ~40%.
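
One plausible way a faster runtime turns into a smaller bill is Lambda's billing granularity at the time: duration was billed in 100 ms increments at $0.00001667 per GB-second, so shaving milliseconds only pays off when it drops an invocation into a lower increment. A rough sketch under those assumptions; the durations, memory size, and invocation count are hypothetical, not the benchmark's measurements:

```python
import math

# Assumed 2018-era Lambda pricing: duration rounded up to 100 ms increments.
PRICE_PER_GB_SECOND = 0.00001667
BILLING_INCREMENT_MS = 100


def billed_ms(duration_ms: float) -> int:
    """Round a duration up to the next billing increment."""
    return math.ceil(duration_ms / BILLING_INCREMENT_MS) * BILLING_INCREMENT_MS


def compute_cost(duration_ms: float, memory_mb: int, invocations: int) -> float:
    """Compute (GB-second) charge only; per-request fees are billed separately."""
    gb_seconds = billed_ms(duration_ms) / 1000 * memory_mb / 1024 * invocations
    return gb_seconds * PRICE_PER_GB_SECOND


# Hypothetical durations: 230 ms bills as 300 ms, 170 ms bills as 200 ms.
node = compute_cost(duration_ms=230, memory_mb=128, invocations=10_000_000)
go = compute_cost(duration_ms=170, memory_mb=128, invocations=10_000_000)
print(f"Node: ${node:.2f}  Go: ${go:.2f}  saving: {1 - go / node:.0%}")
```

The same 60 ms improvement would save nothing if both durations fell inside the same increment, which is one reason measured savings vary so much between workloads.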

- Good story of how a system evolves. Go is cheaper than Node. Heroku is cheaper than Lambda. To 30 Billion and Beyond: ipify is a freely available, highly scalable ip address lookup service...The first iteration of ipify was quite simple. I wrote an extremely tiny (< 50 LOC) API service in Node...I decided that if I wanted to run ipify in a scalable, highly available and cheap way then Heroku was the simplest and best option...I added another dyno (bringing my monthly cost up to ~$75/mo), but decided to investigate further. I'm a frugal guy -- the idea of losing > $50/mo seemed unpleasant...At this point, the production ipify service was able to serve roughly 20 RPS across two dynos. ipify was in total serving ~52 million requests/mo. Not impressive...I decided to rewrite the service in Go...The results were fantastic. I was able to get about ~2k RPS of throughput from a single dyno! This brought my hosting back down to $25/mo and my total throughput to ~5.2 billion requests/mo...Before I knew it ipify was servicing around 15 billion requests/mo and I was now back to spending $50/mo on hosting costs...Today, ipify routinely serves between 2k and 20k RPS...Average response time ranges between 1 and 20ms depending on traffic patterns...today the service runs for between $150/mo and $200/mo depending on burst traffic and other factors...If you compare that to the expected cost of running the same service on something like Lambda, ipify would rack up a total bill of: $1,243.58/mo (compute) + $6,000/mo (requests) = ~$7,243.58. Also, Handling tens of thousands of requests / sec on t2.nano with Kestrel.
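
The throughput figures in the ipify story check out with simple arithmetic. A sketch assuming a constant request rate, a 30-day month, and Lambda's $0.20-per-million request price; the $6,000/mo request line is consistent with roughly 30 billion requests a month. The compute line depends on memory and duration settings not given here, so it is left out:

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumes a 30-day month


def monthly_requests(rps: float) -> float:
    """Requests served in a month at a constant rate."""
    return rps * SECONDS_PER_MONTH


def lambda_request_cost(requests: float, price_per_million: float = 0.20) -> float:
    """Per-request charge only; compute (GB-seconds) is billed separately."""
    return requests / 1_000_000 * price_per_million


print(f"{monthly_requests(20):,.0f}")        # two Node dynos at ~20 RPS
print(f"{monthly_requests(2_000):,.0f}")     # one Go dyno at ~2k RPS
print(f"${lambda_request_cost(30e9):,.2f}")  # about 30 billion requests/mo
```

Real traffic is bursty (the post cites 2k to 20k RPS), so a constant-rate month is only a sanity check, not a capacity plan.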

- Changelog with a fascinating podcast on Building a Secure Operating System with Rust. Maybe Rust can help make microkernels a thing. Also, Redox, Redox book, redox-os/redox

- Building Lambda-Powered WebSockets with Fanout: You can also build custom Lambda-powered WebSockets by integrating a service like Fanout — a cross between a message broker and a reverse proxy that enables realtime data push for apps and APIs. With these services together, we can build a Lambda-powered API that supports plain WebSockets. This approach uses GRIP, the Generic Realtime Intermediary Protocol — making it possible for a web service to delegate realtime push behavior to a proxy component. This FaaS GRIP library makes it easy to delegate long-lived connection management to Fanout, so that backend functions only need to be invoked when there is connection activity. The other benefit is that backend functions do not have to run for the duration of each connection.

- Not every small piece of code has to be asynchronous. The performance characteristics of async methods in C#. Async methods performance 101: If the async method completes synchronously the performance overhead is fairly small; If the async method completes synchronously the following memory overhead will occur: for async `Task` methods there is no overhead, for async `Task<T>` methods the overhead is 88 bytes per operation (on x64 platform); `ValueTask<T>` can remove the overhead mentioned above for async methods that complete synchronously; A `ValueTask<T>`-based async method is a bit faster than a `Task<T>`-based method if the method completes synchronously and a bit slower otherwise; A performance overhead of async methods that await non-completed task is way more substantial (~300 bytes per operation on x64 platform).

- Using his third eye Will Hamill peers deep into Amazon's soul to figure out why AWS does the things it does. Making sense of AWS re:Invent: Amazon released quite a lot of new services at AWS re:Invent. 61 new services or significant updates to existing services. But why these services in particular?...Part of Amazon’s strategy is and has long been to remove ‘undifferentiated heavy lifting’ - and that doesn’t just mean to go around mopping up all the low level configuration hassle people are having on top of their existing services and turning it into a managed service...What it means is that if your job is to build things on top of computer platforms and low-level abstractions (i.e. most ISVs, development companies, outsourcing companies, consulting companies) then what you consider a low-level abstraction needs to change...If you’re implementing low-level configuration type work, or anything else now offered by an AWS service, you’re essentially now competing with Amazon...Unless you work for a company whose actual core business is in providing these kinds of platforms, then the bulk of your work should be done at higher levels. Look at PaaS over IaaS, evaluate Lambda seriously, prefer managed services by default. I’d go so far as to say that in most cases, Infrastructure as a Service isn’t for you, it’s for Amazon and Azure and Google’s own service teams to build stuff on top of. What you want is to then build on their offerings instead of duplicating them. If you don’t do it, your competitors will!

- We come not to praise REST, but to bury it. A GraphQL Primer: Why We Need A New Kind Of API (Part 1): REST services tend to be at least somewhat “chatty” since it takes multiple round trips between client and server to get enough data to render an application; The next problem with REST-style services is that they send way more information than is needed. In our blog example, all we need is each post’s title and its author’s name, which is only about 17% of what was returned. That is a 6x loss for a very simple payload. In a real-world API, that kind of overhead can be enormous; The final thing that REST APIs lack is a mechanism for introspection. Without any contract with information about the return types or structure of an endpoint, there is no way to reliably generate documentation, create tooling or interact with the data.
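
The over-fetching point can be illustrated by projecting a payload down to the fields a client asked for, in the nested shape of a GraphQL selection set. This sketch only mimics that shape in plain Python on a hypothetical blog payload; a real GraphQL server resolves the selection before serializing, so the unneeded bytes never cross the network at all:

```python
import json
from typing import Any


def select(data: Any, selection: dict) -> Any:
    """Keep only the requested fields, recursing into nested objects and lists."""
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    result = {}
    for field, sub in selection.items():
        value = data[field]
        result[field] = value if sub is None else select(value, sub)
    return result


# Hypothetical REST-style payload: everything about each post and its author.
posts = [
    {
        "id": 1,
        "title": "Why GraphQL",
        "body": "Lorem ipsum " * 50,
        "tags": ["api", "rest", "graphql"],
        "author": {"id": 7, "name": "Ada", "email": "ada@example.com", "bio": "x" * 200},
    },
]

# Roughly what the query { posts { title author { name } } } asks for.
wanted = {"title": None, "author": {"name": None}}
trimmed = select(posts, wanted)

full_bytes = len(json.dumps(posts))
trimmed_bytes = len(json.dumps(trimmed))
print(trimmed)
print(f"{trimmed_bytes / full_bytes:.0%} of the full payload")
```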

- Why yes, I did wonder. You may be wondering why we "reinvented the wheel" and took effort to implement another job queue system, on top of PostgreSQL. Why Another Job Queue? And Why PostgreSQL? Also, Get Rid of That Bottleneck Using Modern Queue Techniques.

- Experiences with running PostgreSQL on Kubernetes. twakefield: I read it differently (albeit, I have much more context). I read it as a cautionary tale that Kubernetes makes it easier to get in trouble if you don't know what you are doing - so you better have a deep knowledge of your stateful workloads and technology. Perhaps obvious to some, but still a good reminder when dealing with a well-hyped technology like Kubernetes.

- Georgios Michelogiannakis with an interesting talk on how we can keep Moore's Law going. An architect's point of view on emerging technologies. There are emerging transistors, emerging memories, 3D integration, and specialized architectures (Quantum Control Processor, Superconducting Logic, Carbon Nanotubes).

- Netflix on Scaling Time Series Data Storage — Part I: By leveraging parallelism, compression, and an improved data model, the team was able to meet all of the goals: Smaller Storage Footprint via compression; Consistent Read/Write Performance via chunking and parallel reads/writes. Latency bound to one read and one write for common cases and latency bound to two reads and two writes for rare cases; The team achieved ~6X reduction in data size, ~13X reduction in system time spent on Cassandra maintenance, ~5X reduction in average read latency and ~1.5X reduction in average write latency. More importantly, it gave the team a scalable architecture and headroom to accommodate rapid growth of Netflix viewing data.
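
The compression-plus-chunking idea can be sketched in miniature. This is not Netflix's code: a Python dict stands in for Cassandra rows, the record fields and the tiny 256-byte chunk size are hypothetical, and the caller simply passes the chunk count back in. The point is the shape: compress a whole history into one blob, split it into fixed-size chunks written as separate rows, and read the chunks back in parallel before reassembling.

```python
import json
import zlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 256  # bytes per chunk; kept tiny so the demo yields several chunks

# Hypothetical key-value store standing in for Cassandra rows.
store: dict[tuple[str, int], bytes] = {}


def write_history(customer: str, records: list[dict]) -> int:
    """Compress a customer's whole history, split it into chunks, store one row each."""
    blob = zlib.compress(json.dumps(records).encode())
    chunks = [blob[i:i + CHUNK_SIZE] for i in range(0, len(blob), CHUNK_SIZE)]
    for idx, chunk in enumerate(chunks):
        store[(customer, idx)] = chunk
    return len(chunks)  # a real system would record this in metadata


def read_history(customer: str, num_chunks: int) -> list[dict]:
    """Fetch the chunks in parallel, then reassemble and decompress them."""
    with ThreadPoolExecutor() as pool:
        chunks = list(pool.map(lambda i: store[(customer, i)], range(num_chunks)))
    return json.loads(zlib.decompress(b"".join(chunks)))


history = [{"title_id": 80_000 + i % 20, "position_s": i * 60, "device": "tv"}
           for i in range(500)]
n = write_history("customer-1", history)
raw = len(json.dumps(history).encode())
compressed = sum(len(store[("customer-1", i)]) for i in range(n))
print(f"{n} chunks; compressed size is {compressed / raw:.0%} of raw")
```

`pool.map` returns results in input order, so the chunks concatenate correctly even though the fetches run concurrently.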

- All good things must come to an end. Building a Distributed Log from Scratch, Part 5: Sketching a New System: This concludes our series on building a distributed log that is fast, highly available, and scalable. In part one, we introduced the log abstraction and talked about the storage mechanics behind it. In part two, we covered high availability and data replication. In part three, we discussed scaling message delivery. In part four, we looked at some trade-offs and lessons learned. Lastly, in part five, we outlined the design for a new log-based system that draws from the previous entries in the series.
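
The log abstraction from part one fits in a few lines: records get monotonically increasing offsets, and storage is split into segments so old data can be dropped one segment at a time. This Python sketch keeps segments in memory and finds the right one with `bisect` on each segment's base offset. `SegmentedLog` and its 4-record segments are hypothetical; real systems such as Kafka keep segments as files on disk alongside offset indexes.

```python
import bisect


class SegmentedLog:
    """Append-only log: each record gets the next offset; storage is split into segments."""

    def __init__(self, max_segment_records: int = 4):
        self.max_segment_records = max_segment_records
        self.base_offsets = [0]  # offset of the first record in each segment
        self.segments = [[]]
        self.next_offset = 0

    def append(self, record: bytes) -> int:
        if len(self.segments[-1]) >= self.max_segment_records:
            # Roll over to a new segment; older segments are never modified again.
            self.base_offsets.append(self.next_offset)
            self.segments.append([])
        self.segments[-1].append(record)
        self.next_offset += 1
        return self.next_offset - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        """Return up to max_records records starting at offset (consumers track their own offset)."""
        records = []
        while offset < self.next_offset and len(records) < max_records:
            seg = bisect.bisect_right(self.base_offsets, offset) - 1  # segment holding offset
            records.append(self.segments[seg][offset - self.base_offsets[seg]])
            offset += 1
        return records


log = SegmentedLog(max_segment_records=4)
for i in range(10):
    log.append(f"event-{i}".encode())
print(len(log.segments))           # 3
print(log.read(3, max_records=4))  # [b'event-3', b'event-4', b'event-5', b'event-6']
```

Because the log never mutates old records, readers need no coordination with each other; each one just remembers the next offset it wants.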

- Fun story of Reverse Engineering A Mysterious UDP Stream in My Hotel.

- It's partitions all the way down. PostgreSQL 10: Partitions of... partitions!: Native Partitioning was a highly anticipated PostgreSQL feature. I truly believe this to be a game changer when it comes to hard decisions on database solutions. Do you really need a new, complicated NoSQL solution to handle your growing data? Partitioning in PostgreSQL 10 might just be what you need (and save you a lot of headaches!).
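
To make "partitions of partitions" concrete, the comment block below shows PostgreSQL 10 DDL for a table range-partitioned by date whose yearly partitions are themselves list-partitioned by region, and the Python function mimics how a row is routed to a leaf. The table, column and partition names are hypothetical, and `route` only illustrates the routing rule; PostgreSQL does this internally on `INSERT`.

```python
from datetime import date

# Hypothetical two-level layout, matching PostgreSQL 10 DDL such as:
#   CREATE TABLE measurements (logdate date, region text, value int)
#       PARTITION BY RANGE (logdate);
#   CREATE TABLE measurements_2017 PARTITION OF measurements
#       FOR VALUES FROM ('2017-01-01') TO ('2018-01-01') PARTITION BY LIST (region);
#   CREATE TABLE measurements_2017_eu PARTITION OF measurements_2017
#       FOR VALUES IN ('eu');
LAYOUT = {
    (date(2017, 1, 1), date(2018, 1, 1)): {"eu": "measurements_2017_eu", "us": "measurements_2017_us"},
    (date(2018, 1, 1), date(2019, 1, 1)): {"eu": "measurements_2018_eu", "us": "measurements_2018_us"},
}


def route(logdate: date, region: str) -> str:
    """Pick the leaf partition for a row: range on date first, then list on region."""
    for (lower, upper), by_region in LAYOUT.items():
        if lower <= logdate < upper:  # FROM is inclusive, TO is exclusive
            if region in by_region:
                return by_region[region]
            break
    # PostgreSQL 10 has no DEFAULT partition, so such an INSERT fails.
    raise ValueError(f"no partition found for ({logdate}, {region!r})")


print(route(date(2017, 6, 1), "eu"))  # measurements_2017_eu
print(route(date(2018, 1, 1), "us"))  # measurements_2018_us
```

Queries that filter on both `logdate` and `region` can then skip every leaf except one, which is where much of the benefit over a single huge table comes from.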

- binhnguyennus/awesome-scalability: An up-to-date and curated reading list for designing high scalability 🍒, high availability 🔥, high stability 🗻 back-end systems - Merge requests are greatly welcome 👬 Back-end & SRE engineers, let the world know your silent yet awesome work

- google/honggfuzz (article): The Honggfuzz NetDriver, introduced recently into the Honggfuzz code base, implements a fairly robust fuzzing setup for TCP servers.
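
Honggfuzz itself is feedback-driven (coverage-guided); the toy below is only a blind mutation fuzzer, shown to illustrate the basic loop: mutate a seed input, feed it to the target, and keep the inputs that crash it. The length-prefixed `parse_message` target and its planted bug are hypothetical, and nothing here touches sockets the way NetDriver does.

```python
import random


def parse_message(data: bytes) -> bytes:
    """Hypothetical target: a 1-byte length prefix followed by the payload."""
    if not data:
        raise ValueError("empty message")
    length = data[0]
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated message")  # expected, handled error
    if payload[:1] == b"\xff":
        raise IndexError("planted bug: unhandled 0xff opcode")  # the "crash"
    return payload


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Overwrite one random byte with a random value."""
    buf = bytearray(data)
    buf[rng.randrange(len(buf))] = rng.randrange(256)
    return bytes(buf)


def fuzz(seed: bytes, iterations: int, rng_seed: int = 0) -> list:
    rng = random.Random(rng_seed)
    crashes = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            parse_message(candidate)
        except ValueError:
            pass  # the parser rejected the input cleanly
        except Exception:
            crashes.append(candidate)  # anything else counts as a crash
    return crashes


crashes = fuzz(b"\x03abc", iterations=20_000)
print(f"{len(crashes)} crashing inputs, e.g. {crashes[:1]}")
```

A coverage-guided fuzzer would additionally keep any mutant that reaches new code as a fresh seed, which is what lets tools like Honggfuzz get past checks a blind mutator rarely satisfies.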

- Encoding, Fast and Slow: Low-Latency Video Processing Using Thousands of Tiny Threads: We describe ExCamera, a system that can edit, transform, and encode a video, including 4K and VR material, with low latency. The system makes two major contributions. First, we designed a framework to run general-purpose parallel computations on a commercial “cloud function” service. The system starts up thousands of threads in seconds and manages inter-thread communication. Second, we implemented a video encoder intended for fine-grained parallelism, using a functional-programming style that allows computation to be split into thousands of tiny tasks without harming compression efficiency. Our design reflects a key insight: the work of video encoding can be divided into fast and slow parts, with the “slow” work done in parallel, and only “fast” work done serially.
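
ExCamera's real encoder works on VP8 video and runs on AWS Lambda; the toy below only mirrors the shape of its key insight, using delta encoding of a list of integers. Each chunk is encoded independently and in parallel, starting from a self-contained "keyframe" (the slow part), and a cheap serial pass then fixes up each chunk boundary (the fast part) so the result matches a single serial encode. `encode_chunk`, `rebase` and `parallel_encode` are hypothetical names, and Python threads stand in for cloud functions (because of the GIL they would not speed up CPU-bound work; `ProcessPoolExecutor` would).

```python
from concurrent.futures import ThreadPoolExecutor


def encode_chunk(frames):
    """'Slow' step, run per chunk in parallel: first frame stored whole
    (like a keyframe), every later frame as a delta from its predecessor."""
    return [frames[0]] + [cur - prev for prev, cur in zip(frames, frames[1:])]


def rebase(encoded, chunks):
    """'Fast' serial step: turn each chunk's leading whole frame into a delta
    from the last frame of the chunk before it, yielding one continuous stream."""
    stream = list(encoded[0])
    for prev_chunk, enc in zip(chunks, encoded[1:]):
        stream.append(enc[0] - prev_chunk[-1])
        stream.extend(enc[1:])
    return stream


def parallel_encode(frames, chunk_size):
    if not frames:
        return []
    chunks = [frames[i:i + chunk_size] for i in range(0, len(frames), chunk_size)]
    with ThreadPoolExecutor() as pool:  # stand-in for thousands of cloud functions
        encoded = list(pool.map(encode_chunk, chunks))
    return rebase(encoded, chunks)


frames = [(i * i) % 97 for i in range(50)]
print(parallel_encode(frames, chunk_size=8) == encode_chunk(frames))  # True
```

The serial pass touches one value per chunk, so its cost grows with the number of chunks rather than the number of frames.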

- BRIEF FOR 51 COMPUTER SCIENTISTS AS AMICI CURIAE IN SUPPORT OF THE RESPONDENT: More than a billion people around the world safeguard their private emails and other data in hundreds of high-security datacenters operated by trusted American “cloud” service providers such as Amazon, Google, IBM, and Microsoft. This new paradigm combines the highest security for one’s most personal data with nearly instantaneous access from anywhere in the world. Like the telegraph, telephone, and Internet before it, the “cloud” is built on old and new technologies. Any legal decisions involving this new paradigm should be based on a clear understanding of the underlying science and engineering. For example, it should not conflate where data is accessible with where it is located. Nor should it overlook the myriad physical actions required at the datacenter to deliver on two of the “cloud’s” primary promises: security and speed of access. We hope our brief assists this Court in reaching such an understanding. We explain the following points based on the underlying computer science of this “cloud” technology and service: 1. The data is at a specific physical location; 2. Retrieving data from Ireland requires complex physical actions in Ireland; 3. The data is protected in a digital “safe deposit box”; 4. The closer the data, the faster the access.
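
The brief's last point has simple physics behind it: light in optical fiber travels at roughly two-thirds of its vacuum speed, about 200,000 km/s, so distance alone puts a floor under round-trip time. A back-of-the-envelope Python helper, assuming a straight fiber path and ignoring routing, queuing and processing delays:

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approx. 2/3 of c; rounded for illustration


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber for a one-way distance in km."""
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_S * 1000


for km in (50, 1_000, 5_000):
    print(f"{km:>5} km -> at least {min_rtt_ms(km):.1f} ms round trip")
```

So data in a datacenter 5,000 km away can never be fetched in less than about 50 ms per round trip, however fast the servers are, and real paths are longer than straight lines.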

- Blockchains from a Distributed Computing Perspective: This article is a tutorial on the basic notions and mechanisms underlying blockchains, colored by the perspective that much of the blockchain world is a disguised, sometimes distorted, mirror-image of the distributed computing world.
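
The data-structure half of a blockchain is just a hash-linked list: each block stores the hash of its predecessor, so changing any earlier block breaks every link after it. The Python sketch below shows only that part; the block layout and function names are hypothetical, and it leaves out consensus entirely, which is precisely the distributed computing problem the tutorial is about.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block."""
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()


def append_block(chain: list, data: str) -> None:
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64  # genesis has no parent
    chain.append({"index": len(chain), "prev_hash": prev_hash, "data": data})


def verify(chain: list) -> bool:
    """Every block must commit to the exact contents of the block before it."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1]) for i in range(1, len(chain))
    )


chain = []
for tx in ("alice pays bob 5", "bob pays carol 2", "carol pays dave 1"):
    append_block(chain, tx)
print(verify(chain))  # True
chain[1]["data"] = "bob pays carol 200"
print(verify(chain))  # False: block 2 no longer matches block 1
```

Tamper evidence is all the hash chain gives you; deciding which of several valid chains everyone should agree on is the consensus problem.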

- The Dutch Tulip Mania: The Social Foundations of a Financial Bubble: Presenting the most complete dataset of tulip prices compiled until now, this paper demonstrates that attempts to explain away the bubble in this manner cannot be justified with the available data: the tulip mania really did produce a bubble. Available price data are too scarce and unreliable to permit a conclusive explanation of the bubble, but evidence from contemporary accounts suggests that a model based on social networks and information cascades best explains the emergence and collapse of this bubble: a dense, localized social network made the bubble possible, but also served to limit its impact on the broader economy.
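
For readers who have not met information cascades, here is the textbook sequential model in the spirit of Bikhchandani, Hirshleifer and Welch (1992), simplified to counting signals; it is not the paper's own social-network model. Each trader gets a private good (+1) or bad (-1) signal and sees earlier traders' choices. Once the choices that still reveal information lean two or more in one direction, that public evidence outweighs any single private signal, so everyone afterwards follows the crowd and their own information is lost. Breaking ties toward the trader's own signal is an assumption of this sketch.

```python
def simulate_cascade(signals):
    """Return each agent's action (+1 buy, -1 pass) given private signals in arrival order."""
    actions = []
    revealed = 0  # net sum of private signals that earlier actions revealed
    for signal in signals:
        if revealed >= 2:
            action = +1  # up-cascade: the crowd outweighs one private signal
        elif revealed <= -2:
            action = -1  # down-cascade
        else:
            action = signal      # evidence is close, so act on the private signal
            revealed += signal   # ...which makes that signal public
        actions.append(action)
    return actions


print(simulate_cascade([+1, +1, -1, -1, -1, -1]))  # [1, 1, 1, 1, 1, 1]
print(simulate_cascade([+1, -1, +1, -1, -1, -1]))  # [1, -1, 1, -1, -1, -1]
```

In the first run, two early good signals lock in buying even though four of six traders privately saw bad news, which is why cascades can be both fast to form and fragile.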