Stuff The Internet Says On Scalability For November 16th, 2018

Wake up! It's HighScalability time:

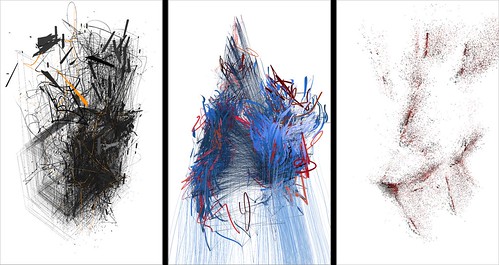

Beautiful. Unwelcome Gaze is a triptych visualizing the publicly reachable web server infrastructure of Google, Facebook, Amazon and the routing graph(s) leading to them.

Do you like this sort of Stuff? Please support me on Patreon. I'd really appreciate it. Know anyone looking for a simple book explaining the cloud? Then please recommend my well reviewed (30 reviews on Amazon and 72 on Goodreads!) book: Explain the Cloud Like I'm 10. They'll love it and you'll be their hero forever.

- $1 billion: Alibaba sales in 85 seconds; 1 million: Human brain processor supercomputer; 1 billion: Uber quarterly loss; 640,000: AMD cores in Hawk supercomputer; 40x: drone lifts its own weight; 26: pictures in history of computing; 10^11: WeChat requests per day;

- Quotable Quotes:

- Larry Ellison: It’s kind of embarrassing when Amazon uses Oracle but they want you to use Aurora and Redshift. They’ve had 10 years to get off Oracle, and they’re still on Oracle.

- @Werner: Amazon's Oracle data warehouse was one of the largest (if not THE largest) in the world. RIP. We have moved on to newer, faster, more reliable, more agile, more versatile technology at more lower cost and higher scale. #AWS Redshift FTW!

- Sheera Frenkel: “We’re not going to traffic in your personal life,” Tim Cook, Apple’s chief executive, said in an MSNBC interview. “Privacy to us is a human right. It’s a civil liberty.” (Mr. Cook’s criticisms infuriated Mr. Zuckerberg, who later ordered his management team to use only Android phones — arguing that the operating system had far more users than Apple’s.)

- @copyconstruct: This is a fantastic companion to the first Aurora paper from Amazon.

The main takeaway is that distributed consensus kills performance, that local state can actually be a good thing, and that you should use an immutable log as the source of truth (basically what the Kafka folks have been preaching).

- @yishengdd: However from my own experience, AWS Amplify is 10x better than Google Firebase

- Daniel Lemire: Another way to look at the problem is to measure the “speedup” due to the memory-level parallelism: we divide the time it takes to traverse the array using 1 lane by the time it takes to do so using X lanes. We see that the Intel Skylake processor is limited to about a 10x or 11x speedup whereas the Apple processors go much higher.

- Shaun Raviv: Friston’s free energy principle says that all life, at every scale of organization—from single cells to the human brain, with its billions of neurons—is driven by the same universal imperative, which can be reduced to a mathematical function. To be alive, he says, is to act in ways that reduce the gulf between your expectations and your sensory inputs. Or, in Fristonian terms, it is to minimize free energy.

- Max J. Krause: with the exception of aluminium, cryptomining consumed more energy than mineral mining to produce an equivalent market value. While the market prices of the coins are quite volatile, the network hashrates for three of the four cryptocurrencies have trended consistently upward, suggesting that energy requirements will continue to increase. During this period, we estimate mining for all 4 cryptocurrencies was responsible for 3–15 million tonnes of CO2 emissions.

- Doubleslash: Back in 2014 OpenStack may have been a thing but nowadays, 4 years later, people learned a lesson. There is no value in being a full-stack operator of infrastructure for systems. The idea of running a complex, distributed architecture like a private cloud at the same or higher stability and comparable or even lower cost than public clouds eventually proved futile. That's why in 2018, consensus is, infrastructure for systems has been replaced by infrastructure for applications (k8s). The infrastructure is typically consumed on public clouds.

- Rob Pike: The key point here is our programmers are Googlers, they’re not researchers. They’re typically, fairly young, fresh out of school, probably learned Java, maybe learned C or C++, probably learned Python. They’re not capable of understanding a brilliant language but we want to use them to build good software. So, the language that we give them has to be easy for them to understand and easy to adopt.

- ChuckMcM: Looking forward to these chips but always have concerns for AMD's ability to execute consistently. Opteron, the original 'Sledgehammer' series was way ahead of Intel because Intel just couldn't bring themselves to put 64 bit features into their Pentium line, and AMD squirreled away that advantage by not following up, and having other issues with later spins of the Opterons. That said, this really does look like a pretty awesome chip for data centers. I would love a dual socket motherboard that had 512G of RAM, 1TB of Optane memory as additional "RAM", then 16TB of NVME SSD storage, and 32 SAS/SATA channels for an effective 360TB of rotating disk (dual parity RAID 6, 30 active drives, 2 parity drives). And then make a cluster of 48 of those monsters. Ah the places we would go and the things we would do with such a system.

- Julia Evans: Another huge part of understanding the software I run in production is understanding which parts are OK to break (aka “if this breaks, it won’t result in a production incident”) and which aren’t. This lets me focus: I can put big boxes around some components and decide “ok, if this breaks it doesn’t matter, so I won’t pay super close attention to it”.

- Joel Hruska: There are positive reasons for why AMD adopted chiplets — they improve yield and cost compared to enormous monolithic dies and they’re inherently cheaper to manufacture since they waste less wafer space (smaller chips result in less wasted wafer as a percentage of the total). But there’s also a negative reason that speaks to the difficulty of current scaling: We’ve reached the point where it simply makes little sense to continue scaling down wire sizes. Every time we perform a node shrink, the number of designs that benefit from the shift are smaller. AMD’s argument with Rome is that it can get better performance scaling and reduce performance variability by moving all of its I/O to this single block, and that may well be true — but it’s also an acknowledgment that the old trend of assuming that process node shrinks were just unilaterally good for the industry is well and truly at an end.

- @kellabyte: I am so tired of learning new programming languages every time I change projects when 99% of all the code we write never matters what it’s written in. You could benefit from my expertise in a language more than I benefit from learning yet another language.

- Asha McLean: The top five countries selling to China by GMV were Japan, the United States, Korea, Australia, and Germany. Rounding out the top 10 categories of imported products bought by Chinese consumers were health supplements, milk powder, facial masks, and diapers. Swisse was the top-selling global brand.

- @colmmacc: It's ten years since we launched Amazon CloudFront! Building it was super fun, my first day at Amazon was spent getting stuck into the CloudFront routing software and making it actually work. A few weeks later, but before we launched, we hit my favorite bug ever ... of course it involves YAML, and may be the root of my hatred for that format! So CloudFront is a DNS based CDN, the "magic" happens at the DNS layer, where our DNS servers figure out what the best location to serve your traffic is ... we built this gigantic live map of the internet ... for every network out there, we figure out what the correct ordering of CloudFront locations should be. E.g. if I'm Seattle, then it's something like SEA, PDX, SFO ... you get the idea ... The map is based on latency measurements and geo-data. For the geo-data, we had to import a huge geodb (we have a few of these) into the CloudFront routing layer. That import happened in YAML format.

- ThousandEyes: Architectural differences between providers: Traffic enters the AWS network closest to the target region, but for GCP and Azure, traffic enters closest to the users. Performance varies by region: Geographical performance variations exist across the three cloud providers, most noticeably in the LATAM and Asia regions. Intra-cloud network performance is strong: Multi-cloud performance between the three providers is consistent and reliable.

- @MrAlanCooper: When I started programming, it was a solo skill, performed by individuals, with little or no sharing and virtually no collaboration. A culture was built around those facts. So, back in the day, to be a good programmer one had to be able to do all the logical gymnastics that programmers do, but one had to do it solo, and ENJOY doing it solo. The culture of individualism grew strong. I don’t want to defend that culture, and I’m not a little bit embarrassed by the fact that I thrived in it. I wrote a chapter about the macho culture of programming in my book, The Inmates are Running the Asylum, published 20 years ago.

- Mikhail Dyakonov: All these problems, as well as a few others I’ve not mentioned here, raise serious doubts about the future of quantum computing. There is a tremendous gap between the rudimentary but very hard experiments that have been carried out with a few qubits and the extremely developed quantum-computing theory, which relies on manipulating thousands to millions of qubits to calculate anything useful. That gap is not likely to be closed anytime soon.

- Mark LaPedus: It appears that machine learning is moving into the photomask shop and fab. We’ve seen companies begin to use the technology for circuit simulation, inspection and design-for-manufacturing. I’ve heard machine learning is being used to make predictions on problematic areas or hotspots. What is the purpose here or what does this accomplish?

- @RGB_Lights: I hope this latest fiasco of traffic rerouting through China is the wakeup call for all of us to get serious about addressing the massive and unacceptable vulnerability inherent in today’s BGP routing architecture.

- @Carnage4Life: Seems the EU has basically decided that after government mandated tech competitors to Silicon Valley didn't work out they'll just regulate them into oblivion. First Facebook with GDPR now YouTube with article 13. Unintended consequences? I doubt it.

- @mipsytipsy: Excellent point: I don't want ANYONE logging in to production. Ssh is an anti-pattern, even for ops. App developers, use your *instrumentation* as eyes and ears.

- Brenon Daly: The net reduction in publicly traded companies has erased tens of billions of dollars of market value from what had once been viewed as the place for software vendors to be, from both a marketing and financial point of view. For generations, software entrepreneurs founded and funded their businesses with a singular goal: IPO. Ringing the opening bell on the Nasdaq or NYSE was seen as a rite of passage for a company that aspired to grow out of its status as a ‘startup.’

- elvinyung: What if performant cross-shard transactions is a red herring, and the thing that we should be looking more into is reliable automatic data colocation to avoid performing cross-shard transactions as much as possible? There's a decent amount of academic research around this, with projects like SWORD [1] and Schism [2] that study shard load balancing as a problem of hypergraph partitioning. It seems like this might be worth incorporating into commercial distributed database projects.

- Jordan Ritter: Then there was a day, I think it was June, where I got a message from Shawn that said something to the effect of, “Holy shit! John incorporated [Napster]—and he dicked me out of the ownership!” And the blood drained out of my face. Shawn didn’t have a dad growing up; John was his uncle. He was absolutely the car salesman type. He could bully you into doing anything. And John, without telling Shawn, incorporated the company and gave himself 70 percent of it. And if there was one mistake, one fucking mistake that sank us, it was that one. Because when it came time to raise money, no one wanted to deal with John and his big-swinging-dick attitude, so we ended up settling for second best, or third best, or fourth best, fifth best, time and time again. That really set us up for failure from the very beginning.

- Bob Hinden: We started to realize in the early 90s we were starting to run out of v4 addresses.

- @seldo: P.S. if you are thinking "sure, Cloudflare Workers sound nice but is anybody really using them at scale?" the answer is "yes, the npm Registry has been mostly workers since May".

- @el33th4xor: The miners have a funny game they play among themselves, where they constantly add more hashpower to steal income from each other. This does not translate into a betterment for users in any form: it adds no additional throughput to the system.

- Dina Bass~ Microsoft collected $9.5 billion in Azure cloud revenue in 2018, vs. $1.6 billion for the comparable Google business, according to investment bank KeyBanc Capital Markets Inc. Next year, KeyBanc forecasts, it’ll be $15.1 billion for Microsoft, $3.2 billion for Google. Of course, in a market expected to top $40 billion next year, third place in the U.S. isn’t so bad. Still, “Google is way back,” says Brent Bracelin, an analyst at KeyBanc who co-authored the report. “They don’t have enterprise sales distribution,” he says. “That’s their big Achilles’ heel. Microsoft has a massive footprint there.”

- alex_hitchins: That and the fact you can speak to engineers at Microsoft with relative ease. Recently had an Azure support ticket open. The whole process was beyond my expectations to the point I'd have difficulty putting anything big anywhere else. Could be personal preference, but the way they manage introducing new services and grandfathering old ones is also very good.

- @karpathy: last fun thing to think about is that we're [Tesla] doing 1.28M images over 90 epochs with 68K batches, so the entire optimization is ~1700 updates to converge. How lucky for us that our Universe allows us to trade that much serial compute for parallel compute in training neural nets

- Brian Bailey~ Why Chips Die. Death by design. Death by manufacturing. Death by handling. Death by association. Death by operation.

- iAPX: The problem is not HTTP over TCP, it is out-sourced Ads and trackers. 67 requests, 1.0MB transferred, 1.48s with AdBlock Plus and my own Tracker Blocking Chrome Extension. 263 requests, 2.8MB transferred, 26.92s without any of that. This is the home page of Ars Technica as I write it (full reload). Do you think that HTTP/3 will generate 4X fewer requests, will be 3X faster to transfer and will be 18X faster to complete loading?!?

- realusername: I've created an account to try Azure last weekend, it took me 2 hours to sign-up due to issues in the registration on their end, and then once it finally worked, I copied an example from their documentation. 5 minutes after, I received an email that I was banned for "security reasons", so yeah if they can't even handle a sign-up properly, I wonder how they want to reach the top spot of the cloud market, I'll for sure try to avoid Azure in the future for any company I go.

- qubex: No: Reversible Computing embraces the (quantum mechanical) notion that information cannot ever be lost (cfr the “Black Hole Information Paradox”), and that therefore the output of a computation can only be a reshuffled but complete permutation of the input. Nix (for example) allows you to delete stuff and/or initialise a variable by zeroing it out and thus obliterating information. A reversible computing paradigm would not allow this. (In practical terms this information is dissipated as heat when memory deletes information.)

- Samantha Zyontz: I find that external tool specialists contribute more to early adopter teams that provide innovations in difficult application areas, and not the low hanging fruit. In the easier applications, teams in the application area are more inclined to learn how to use CRISPR themselves for new innovations. Interestingly, this effect does not diminish right away. External tool specialists are still used for subsequent innovations in more difficult applications.

- shareometry: I am currently evaluating GCP for two separate projects. I want to see if I understand this correctly: 1) For three whole days, it was questionable whether or not a user would be able to launch a node pool (according to the official blog statement). It was also questionable whether a user would be able to launch a simple compute instance (according to statements here on HN). 2) This issue was global in scope, affecting all of Google's regions. Therefore, in consideration of item 1 above, it was questionable/unpredictable whether or not a user could launch a node pool or even a simple node anywhere in GCP at all. 3) The sum total of information about this incident can be found as a few one or two sentence blurbs on Google's blog. No explanation nor outline of scope for affected regions and services has been provided. 4) Some users here are reporting that other GCP services not mentioned by Google's blog are experiencing problems. 5) Some users here are reporting that they have received no response from GCP support, even over a time span of 40+ hours since the support request was submitted. 6) Google says they'll provide some information when the next business day rolls around, roughly 4 days after the start of the problem.
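Two of the figures quoted above can be checked with a little arithmetic. A minimal sketch, assuming that "68K batches" in the @karpathy quote means a mini-batch of about 68,000 images (the reading that yields roughly 1,700 updates), and taking iAPX's page-load measurements as given:

```python
def optimizer_updates(num_examples: int, epochs: int, batch_size: int) -> float:
    """Optimizer steps = total examples processed / examples per step."""
    return num_examples * epochs / batch_size


def ratios(before: dict, after: dict) -> dict:
    """How many times larger each metric in `before` is than in `after`."""
    return {metric: before[metric] / after[metric] for metric in before}


# @karpathy: 1.28M images, 90 epochs, ~68K images per mini-batch (assumed reading)
steps = optimizer_updates(1_280_000, 90, 68_000)
print(f"optimizer updates: {steps:.0f}")

# iAPX: Ars Technica home page without vs. with ad and tracker blocking
without_blocking = {"requests": 263, "MB": 2.8, "seconds": 26.92}
with_blocking = {"requests": 67, "MB": 1.0, "seconds": 1.48}
for metric, ratio in ratios(without_blocking, with_blocking).items():
    print(f"{metric}: {ratio:.1f}x")
```

This prints 1694 optimizer updates and ratios of 3.9x, 2.8x, and 18.2x, which is where the "~1700", "4X", "3X", and "18X" in the quotes come from.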

- Oracle sure pissed off Amazon. Is that a good idea? This quote is just the first salvo. James Hamilton on Choose Technology Suppliers Carefully: However, the second thing I’ve learned from watching Oracle/customer relationships over the years is there really are limits to what customers will put up with and when hit, action is taken. Eventually, a customer will focus on the “Oracle Problem” and do the work to leave the unhealthy relationship. When they do, the sense of relief in the room is absolutely palpable. I’m super happy to have been part of many of these migrations in my near-decade on each of IBM DB2 and Microsoft SQL Server. It’s fun when helping to make your product work for a customer not only helps your company but also helps the customer save money, get a better product, and no longer have to manage an unhealthy supplier relationship.

- Is there a name for that thing where people worry too much about names? Serverless & Functions — Not One And The Same. Also, Serverless for startups — it’s the fastest way to build your technology idea.

- Congestion control is always a fun and overlooked meta component of any distributed system. Overload control for scaling WeChat microservices

- WeChat’s microservice system accommodates more than 3000 services running on over 20,000 machines in the WeChat business system, and these numbers keep increasing as WeChat is becoming immensely popular. As WeChat is ever actively evolving, its microservice system has been undergoing fast iteration of service updates. For instance, from March to May in 2018, WeChat’s microservice system experienced almost a thousand changes per day on average.

- On a typical day, peak request rate is about 3x the daily average. At certain times of year (e.g. around the Chinese Lunar New Year) peak workload can rise up to 10x the daily average.

- How do they handle load spikes without degrading service? DAGOR, WeChat's overload control system. DAGOR uses the average waiting time of requests in the pending queue (i.e., queuing time) as its overload signal. Queuing time has the advantage of negating the impact of delays lower down in the call graph (compared to e.g. request processing time), because request processing time can increase even when the local server itself is not overloaded. DAGOR uses window-based monitoring, where a window is one second or 2000 requests, whichever comes first.

- This is cool: most systems don't understand that different requests should be treated differently~ To deal with this in a service agnostic way, every request is assigned a business priority when it first enters the system. This priority flows with all downstream requests. Business priority for a user request is determined by the type of action requested. When overload control is set to business priority level n, all requests at levels n+1 and beyond (lower priorities) will be dropped. WeChat adds a second layer of admission control based on user-id. User priority is dynamically generated by the entry service through a hash function that takes the user ID as an argument. Each entry service changes its hash function every hour. As a consequence, requests from the same user are likely to be assigned to the same user priority within one hour, but different user priorities across hours. This provides fairness while also giving an individual user a consistent experience across a relatively long period of time. It also helps with the subsequent overload problem since requests from a user assigned high priority are more likely to be honoured all the way through the call graph.

- As a SEDA fan this was interesting: Compared to CoDel and SEDA, DAGOR exhibits a 50% higher request success rate with subsequent overloading when making one subsequent call. The benefits are greater the higher the number of subsequent requests.

- Lessons: Overload control in a large-scale microservice architecture must be decentralized and autonomous in each service; Overload control should take into account a variety of feedback mechanisms (e.g. DAGOR’s collaborative admission control) rather than relying solely on open-loop heuristics; Overload control design should be informed by profiling the processing behaviour of your actual workloads.
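The DAGOR mechanics described above (queuing time averaged over a window of one second or 2000 requests, then admission by business priority and hashed user priority) can be sketched as follows. This is a simplified illustration, not WeChat's implementation: the 20 ms threshold, the 128 user-priority buckets, salting a SHA-256 hash with the current hour, and moving the admission level one step per window are all hypothetical choices for the sketch. In this sketch lower numbers mean higher priority.

```python
import hashlib
from dataclasses import dataclass, field

WINDOW_SECONDS = 1.0          # a window is one second...
WINDOW_REQUESTS = 2000        # ...or 2000 requests, whichever comes first
QUEUE_TIME_THRESHOLD = 0.020  # hypothetical overload threshold (20 ms)
USER_LEVELS = 128             # hypothetical number of user-priority buckets


def user_priority(user_id: str, now: float) -> int:
    """Hash the user ID salted with the current hour: stable within an hour,
    reshuffled across hours (a stand-in for 'changes its hash function hourly')."""
    hour = int(now // 3600)
    digest = hashlib.sha256(f"{hour}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % USER_LEVELS


@dataclass
class AdmissionController:
    max_business: int                       # lowest business priority (largest number)
    level: tuple = field(init=False)        # current (business, user) admission level
    _window_start: float = field(init=False, default=0.0)
    _count: int = field(init=False, default=0)
    _total_wait: float = field(init=False, default=0.0)

    def __post_init__(self):
        # Start fully open: every (business, user) pair is admitted.
        self.level = (self.max_business, USER_LEVELS - 1)

    def admit(self, business: int, user: int) -> bool:
        # Lexicographic comparison: all higher business priorities pass, and at
        # the boundary business level only high enough user priorities pass.
        return (business, user) <= self.level

    def record_queuing_time(self, wait: float, now: float) -> None:
        if self._count == 0:
            self._window_start = now
        self._count += 1
        self._total_wait += wait
        if now - self._window_start >= WINDOW_SECONDS or self._count >= WINDOW_REQUESTS:
            self._close_window()

    def _close_window(self) -> None:
        overloaded = self._total_wait / self._count > QUEUE_TIME_THRESHOLD
        b, u = self.level
        if overloaded:
            # Shed load: tighten admission by one step.
            if u > 0:
                u -= 1
            elif b > 0:
                b, u = b - 1, USER_LEVELS - 1
        else:
            # Healthy: relax admission by one step.
            if u < USER_LEVELS - 1:
                u += 1
            elif b < self.max_business:
                b, u = b + 1, 0
        self.level = (b, u)
        self._count, self._total_wait = 0, 0.0
```

Because the hash salt changes every hour, a user whose requests are shed in one hour will likely land in a different bucket the next, while within the hour all of that user's requests get the same treatment, which is the fairness and consistency trade-off described above.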

- Facebook's Data @Scale – Boston recap. Some titles you might like: Lessons and Observations Scaling a Timeseries Database; Leveraging Sampling to Reduce Data Warehouse Resource Consumption; Deleting Data @ Scale.

- 180,000 brands and 200,000 offline smart stores participated in the shopping event from Alibaba's ecosystem in China and around the world. Of that number, 237 brands exceeded sales of 100 million yuan. Alibaba is a giant purpose-built selling machine. How did they do it? AI, automation, digital. We get an overview in The technology enabling Alibaba to sell $30.8 billion in Double 11 goods: Alibaba used an intelligent operating platform, DC Brain, to optimise the performance of the 200-plus global internet datacentres (IDCs) hosting its online stores in areas including energy consumption, temperature, energy efficiency, and reliability. Through machine learning, DC Brain can predict the electric consumption and Power Usage Effectiveness of each IDC in real time, allocating to each to reduce energy consumption. For this year's festival, Alibaba also made available its "hyper-scale green datacentre" located in Zhangbei, northern China. Developed by Tmall Global, Alibaba Cloud, and Cainiao, the global tracking system uses Internet of Things (IoT) and blockchain technology to verify products purchased by consumers through dual authentication and two-way encryption, while tracking the real-time location of items through location-based services and GPS. With 700 automated guided vehicles (AGVs), Cainiao's new smart warehouse, which is the largest robotic smart warehouse in China, used IoT to automatically direct AGVs to drive, load, and unload. Sky Eye uses cameras in logistics stations across the country, and by using a combination of computer vision technology and Cainiao's algorithm is able to identify idle resources and abnormalities in the logistics process. The online chatbot, recently launched on Lazada, provides instant translation from English to Thai, Vietnamese, Indonesian, and vice-versa. The chatbot also provided support for AliExpress, Alibaba's global online retail platform that links buyers and sellers conversing in English, Chinese, Russian, Spanish, French, Arabic, and Turkish. Through machine learning, AI was used to predict a product's future sales volume in the weeks leading up to 11.11. Prices were also automatically adjusted in real-time, using reinforcement learning. New Retail saw technology immersed within bricks and mortar stores, such as a "magic mirror" makeup kiosk that allowed customers, through augmented reality, to visualise what certain makeup items looked like on them before purchasing.

- How big should your connection pool be? About Pool Sizing. Reducing the connection pool size alone, in the absence of any other change, decreased the response times of the application from ~100ms to ~2ms -- over 50x improvement. Why? Once the number of threads exceeds the number of CPU cores, you're going slower by adding more threads, not faster. This: You want a small pool, saturated with threads waiting for connections. If you have 10,000 front-end users, having a connection pool of 10,000 would be sheer insanity. 1000 still horrible. Even 100 connections, overkill. You want a small pool of a few dozen connections at most, and you want the rest of the application threads blocked on the pool awaiting connections. If the pool is properly tuned it is set right at the limit of the number of queries the database is capable of processing simultaneously -- which is rarely much more than (CPU cores * 2).
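The "small pool, many waiting threads" advice can be sketched with a fixed-size pool built on Python's `queue.Queue`: callers beyond the pool size block until a connection is returned instead of opening new ones. A minimal illustration only; `BoundedPool` and `run_demo` are hypothetical names, `object()` stands in for a real database connection, and `cores * 2` is used purely as the starting point the quote gives.

```python
import os
import queue
import threading
import time
from contextlib import contextmanager


def suggested_pool_size(cpu_cores=None) -> int:
    """Starting point from the quote: rarely much more than CPU cores * 2."""
    cores = cpu_cores or os.cpu_count() or 1
    return cores * 2


class BoundedPool:
    """Fixed-size pool: extra callers wait for a free connection."""

    def __init__(self, size: int, connect):
        self._idle = queue.Queue()
        for _ in range(size):
            self._idle.put(connect())

    @contextmanager
    def connection(self, timeout=None):
        conn = self._idle.get(timeout=timeout)  # blocks while all connections are busy
        try:
            yield conn
        finally:
            self._idle.put(conn)                # hand it to the next waiting thread


def run_demo(num_threads: int = 50, pool_size: int = 4) -> int:
    """Run many threads against a small pool and return the peak number of
    connections in use at once, which can never exceed pool_size."""
    pool = BoundedPool(pool_size, connect=object)
    lock = threading.Lock()
    state = {"in_use": 0, "peak": 0}

    def worker():
        with pool.connection():
            with lock:
                state["in_use"] += 1
                state["peak"] = max(state["peak"], state["in_use"])
            time.sleep(0.005)  # stand-in for a query
            with lock:
                state["in_use"] -= 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return state["peak"]


if __name__ == "__main__":
    size = suggested_pool_size()
    print(f"pool size {size}, peak connections in use: {run_demo(50, size)}")
```

Fifty threads share a handful of connections; the rest simply queue, which is the behaviour the article argues for.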

- Cloud economics is all about making money by sharing fixed cost resources with the most users possible. Cloudflare goes next level. Cloud Computing without Containers.

- The great chain of cloud being: VM, container, serverless => isolates?

- Isolates are lightweight contexts that group variables with the code allowed to mutate them. Most importantly, a single process can run hundreds or thousands of Isolates, seamlessly switching between them. They make it possible to run untrusted code from many different customers within a single operating system process.

- kentonv: Hi, I'm the tech lead of Workers. Note that the core point here is multi-tenancy. With Isolates, we can host 10,000+ tenants on one machine -- something that's totally unrealistic with containers or VMs. Why does this matter? Two things: 1) It means we can run your code in many more places, because the cost of each additional location is so low. So, your code can run close to the end user rather than in a central location. 2) If your Worker makes requests to a third-party API that is also implemented as a Cloudflare Worker, this request doesn't even leave the machine. The other worker runs locally. The idea that you can have a stack of cloud services that depend on each other without incurring any latency or bandwidth costs will, I think, be game-changing.

- Security is a big issue. boulos: Can you say more about the assumed security model here? We [Google] built gVisor [1] (and historically used nacl or ptrace) precisely because things like Isolates aren't (historically?!) trustworthy enough for side-by-side. Plus the whole V8-only ;). chaitanya: I can’t believe Cloudflare would run untrusted customer code side by side with that of other customers. V8 isolates sound great but did the Chromium team have this threat model in mind when designing them? qznc: Seems they have convinced themselves that extensive testing is good enough.

- Good list. Things Nobody Told Me About Being a Software Engineer. Especially: writing code is only a small part of what goes into shipping production software.

- The beauty of digital is how infinitely malleable, recombineable, and retargetable it is. That's driving the evolution of the proactive cloud. Another example: iHeartRadio is now creating a weekly mixtape based on my personal history. When I talked about Google Photos simply increasing engagement I was perhaps too cynical. Datamining our lives doesn't always have to be banal and superficial. I admit I've teared up a few times. Is that just an AI manipulating me for commercial purposes? Yes, but does it matter? How Google Photos Became a Perfect Jukebox for Our Memories: “We noticed that you would never relive or reminisce about any of these moments,” said Anil Sabharwal, the Google vice president who led the team that built Photos, and still runs it. “You would go on this beautiful vacation, you’d take hundreds of beautiful photos, years would pass, and you would never look at any of them.”

- Cross shard transactions at 10 million requests per second. When do you need cross-shard transactions? tahara: For us (Dropbox), the threshold ended up being multiple product teams having to implement ad-hoc two-phase commit over their datatypes and burning engineer hours not to implement it but to prove that they had gotten it right and to handle the clean up after any unsuccessful writes. You're right that most systems probably don't need 2PC, which is why Edgestore didn't include it until now. As mentioned in the post, we finally felt that we had reached the right balance of tradeoffs to justify the API-level primitive. We have a routing tier of a few hundred machines. Any of those can serve as transaction coordinator. This is one of the places where having a transaction record is useful -- in general, our locking and non-transactional read scheme will wait nicely for a 2PC transaction to complete, but in the face of failures, any actor in the system can abort the 2PC transaction by marking the transaction record. There's also a really nice optimization for non-transactional reads that allows them to read even in the event of a staged but not yet committed 2PC transaction (you can prove to yourself that a linearized read can occur on either side, if the 2PC transaction is still pending when the read begins).
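A toy, single-process sketch of the transaction-record idea in the quote: one record holds the outcome, shards stage writes against it, any actor can abort a pending transaction by marking the record, and non-transactional reads consult the record instead of waiting. Everything here (`TxRecord`, `Shard`, `two_phase_commit`) is hypothetical and in-memory; Edgestore's real design involves durable storage, a routing tier of coordinators, and locking that this sketch does not model, and a state change that would be an atomic compare-and-swap in practice is a plain check-then-set here.

```python
from dataclasses import dataclass, field
from enum import Enum


class TxState(Enum):
    PENDING = "pending"
    COMMITTED = "committed"
    ABORTED = "aborted"


@dataclass
class TxRecord:
    """Single source of truth for a transaction's outcome."""
    state: TxState = TxState.PENDING

    def _decide(self, outcome: TxState) -> bool:
        # Only a PENDING record can be decided; in a real system this would be
        # an atomic compare-and-swap on durable storage.
        if self.state is TxState.PENDING:
            self.state = outcome
        return self.state is outcome

    def try_commit(self) -> bool:
        return self._decide(TxState.COMMITTED)

    def try_abort(self) -> bool:
        """Any actor (coordinator, reader, cleanup job) may call this."""
        return self._decide(TxState.ABORTED)


@dataclass
class Shard:
    data: dict = field(default_factory=dict)
    staged: dict = field(default_factory=dict)  # key -> (new value, TxRecord)

    def prepare(self, key, value, record: TxRecord) -> bool:
        if key in self.staged:                  # key is held by another transaction
            return False
        self.staged[key] = (value, record)
        return True

    def read(self, key):
        """Non-transactional read that never waits on a pending transaction."""
        if key in self.staged:
            value, record = self.staged[key]
            if record.state is TxState.COMMITTED:
                return value                    # decided but not yet applied
        return self.data.get(key)               # pending or aborted: last committed value

    def resolve(self, key) -> None:
        """Apply or discard a staged write once its record is decided."""
        value, record = self.staged[key]
        if record.state is TxState.COMMITTED:
            self.data[key] = value
        if record.state is not TxState.PENDING:
            del self.staged[key]


def two_phase_commit(writes) -> bool:
    """writes: list of (shard, key, value). Returns True if the transaction committed."""
    record = TxRecord()
    prepared = []
    for shard, key, value in writes:
        if not shard.prepare(key, value, record):
            record.try_abort()
            break
        prepared.append((shard, key))
    else:
        record.try_commit()
    for shard, key in prepared:
        shard.resolve(key)
    return record.state is TxState.COMMITTED
```

The read path shows why a pending transaction need not block readers: until the record flips to committed, returning the last committed value is a valid linearization point, in line with the quote's reasoning.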

- Some think C++ is a zombie that doesn't know it's dead, but no, C++ is still alive and seg faulting. Trip report: Fall ISO C++ standards meeting. Dozens of changes. Two major features adopted for C++20: Ranges, and Concepts convenience notation. A major theme seems to be that C++ is serious about first-class compile-time programming. We’re on track to making most “normal” C++ code available to run at compile time, although C++20 won’t get quite all the way there.

- AWS and GCP Networking Differences: In GCP, networking is a global resource so it is not scoped to a region like in AWS. I'm not sure if this is better or worse but it's different enough that I think I'm going to emulate the AWS model by creating a network per region and then a single subnet per zone in the region. The subnets are much simpler in GCP because outbound networking just works if a VM has a public IP address whereas in AWS there is a lot more configuration involved. Subnets belong to networks but there is no relationship between regions and networks other than that subnets must belong to a zone which belongs to a region. Overall I think it's simpler but the scoping is different from AWS.

- Progressive Service Architecture At Auth0: To help us manage feature flags, our SRE team created a feature flags router service. The router lets us centralize all the feature flags logic in a single place allowing different services and products to retrieve the same information at any time to turn on and off feature flags across different functions. The final design of this client was the result of a progressive service architecture that provides us with fallbacks, self-healing, high availability, and high scalability. Circuit breaking is a design pattern implemented in software architecture and popularized by Michael Nygard in his book, Release It!. This pattern is used to detect system failures and prevent the system from attempting to execute operations that will fail during events such as maintenance or outages. This is the basic configuration we have for our feature flags service. At every 4 failures, we open the circuit, go to the secondary store, and we have a cooldown of 15 seconds before we try to use the primary store again. Our self-healing service architecture is simple and it allows us to implement disaster management solutions easily as we have seen: 🛑 Cache times out. ✅ Connect to primary store. 🛑 Cache is down. ✅ Connect to primary store. 🛑 Primary store times out. ✅ After a certain number of attempts, open the circuit to secondary store. 🛑 Primary store is down. ✅ After a certain number of attempts, open the circuit to secondary store. 🛑 Secondary store is down. 🙏 Pray… light a candle… everything is down!
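The failover behaviour described above can be sketched as a small circuit breaker: after 4 consecutive primary-store failures the circuit opens and reads go to the secondary store, and after a 15-second cooldown the primary is tried again. This is a hypothetical illustration, not Auth0's router code; `FailoverCircuitBreaker` is an invented name, the caching layer is omitted, and the injectable `clock` exists only so the cooldown can be tested.

```python
import time


class FailoverCircuitBreaker:
    """Reads from `primary`; after `max_failures` consecutive failures the
    circuit opens and reads go to `secondary` until `cooldown` seconds pass."""

    def __init__(self, primary, secondary, max_failures=4, cooldown=15.0,
                 clock=time.monotonic):
        self.primary = primary          # callable: key -> value (may raise)
        self.secondary = secondary      # fallback callable: key -> value
        self.max_failures = max_failures
        self.cooldown = cooldown
        self.clock = clock              # injectable for testing
        self.failures = 0
        self.opened_at = None           # time the circuit opened, or None if closed

    def is_open(self) -> bool:
        if self.opened_at is None:
            return False
        if self.clock() - self.opened_at >= self.cooldown:
            # Cooldown elapsed: close the circuit and give the primary another try.
            self.opened_at = None
            self.failures = 0
            return False
        return True

    def get(self, key):
        if not self.is_open():
            try:
                value = self.primary(key)
                self.failures = 0       # a success resets the failure count
                return value
            except Exception:
                self.failures += 1
                if self.failures >= self.max_failures:
                    self.opened_at = self.clock()
        # Circuit open, or this primary call failed: serve from the secondary store.
        return self.secondary(key)
```

A production breaker would usually add a half-open state that reopens on the first failed probe; the sketch keeps only the count-and-cooldown behaviour the post describes.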

- Postmortem on the read-only outage of Basecamp on November 9th, 2018. The bad: a hard limit in the events table meant no writes could occur, an easily avoidable error. The good: they had a read-only mode to fall back on when the failure occurred.
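The post above only says the events table hit "a hard limit". Assuming, for illustration, that the limit is a signed 32-bit integer ID column (a common way such tables run out of room), a headroom check like the one below is the kind of monitoring that makes this class of error avoidable. The function names, the 90-day alert window, and the example growth rates are hypothetical.

```python
INT32_MAX = 2**31 - 1  # 2,147,483,647: largest value a signed 32-bit INT column can hold


def id_headroom(current_max_id: int, ids_per_day: float, limit: int = INT32_MAX):
    """Return (fraction of the ID space used, days until the limit is reached)."""
    used = current_max_id / limit
    remaining = limit - current_max_id
    days_left = remaining / ids_per_day if ids_per_day > 0 else float("inf")
    return used, days_left


def should_alert(current_max_id: int, ids_per_day: float, min_days: float = 90,
                 limit: int = INT32_MAX) -> bool:
    """Hypothetical rule: alert while there is still time to migrate the column
    (for example to a 64-bit BIGINT), i.e. when fewer than `min_days` remain."""
    _, days_left = id_headroom(current_max_id, ids_per_day, limit)
    return days_left < min_days


if __name__ == "__main__":
    used, days = id_headroom(2_000_000_000, 5_000_000)  # hypothetical numbers
    print(f"{used:.1%} of the ID space used, ~{days:.0f} days left")
```

With those made-up numbers it prints "93.1% of the ID space used, ~29 days left", well inside the alert window.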

- Fun detective story. Serving Millions of Users in Real-Time with Node.js & Microservices [Case Study]. A detective must gather evidence. In software that means adding instrumentation so you can see what's going on. They found the authorization middleware of the application could take up 60% of the request time under extreme load. Why? The pbkdf2 hashing in the authentication middleware is so CPU heavy that over time it takes up all the free time of the processing unit. Heroku’s dynos on shared machines couldn’t keep up with the continuous hashing tasks in every request, and the router could not pass on incoming requests until the previous ones had been processed by one of the dynos. The requests stay in the router’s queue until they have been processed or they get rejected after 30 seconds waiting for the dyno. Heroku returns HTTP 503 H13 - Connection closed without a response - which is the original symptom of the issue we were hired to fix.
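To see why per-request PBKDF2 work can saturate a CPU, time it directly. A minimal sketch using Python's `hashlib.pbkdf2_hmac`; the iteration counts and the password value are hypothetical, since the case study does not state its parameters. PBKDF2 cost grows linearly with the iteration count, so one core can complete at most about `1 / t` derivations per second, where `t` is the per-call time.

```python
import hashlib
import os
import time


def derive_key(password: bytes, salt: bytes, iterations: int) -> bytes:
    """PBKDF2-HMAC-SHA256; its CPU cost is proportional to `iterations`."""
    return hashlib.pbkdf2_hmac("sha256", password, salt, iterations)


def seconds_per_call(iterations: int, repeats: int = 5) -> float:
    """Average wall-clock time of one key derivation."""
    salt = os.urandom(16)
    start = time.perf_counter()
    for _ in range(repeats):
        derive_key(b"example-password", salt, iterations)
    return (time.perf_counter() - start) / repeats


if __name__ == "__main__":
    for iterations in (1_000, 10_000, 100_000):
        t = seconds_per_call(iterations)
        # If every request pays this cost, one core tops out near 1 / t requests/s.
        print(f"{iterations:>7} iterations: {t * 1000:.2f} ms/call, "
              f"at most ~{1 / t:,.0f} requests/s per core")
```

On shared dynos the same arithmetic applies with less than a full core available, which is consistent with the queueing the case study describes.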

- centrifugal/centrifugo (article): a real-time messaging server. It's language-agnostic and can be used in conjunction with an application backend written in any programming language.

- Agile Development for Serverless Platforms: Agile Development for Serverless Platforms helps you start thinking about how to apply Agile practices in fully serverless architectures. This book brings together excerpts from four Manning books selected by Danilo Poccia, the author of AWS Lambda in Action.

- A Microprocessor implemented in 65nm CMOS with Configurable and Bit-scalable Accelerator for Programmable In-memory Computing: This paper presents a programmable in-memory-computing processor, demonstrated in a 65nm CMOS technology. For data-centric workloads, such as deep neural networks, data movement often dominates when implemented with today’s computing architectures. This has motivated spatial architectures, where the arrangement of data-storage and compute hardware is distributed and explicitly aligned to the computation dataflow, most notably for matrix-vector multiplication. In-memory computing is a spatial architecture where processing elements correspond to dense bit cells, providing local storage and compute, typically employing analog operation. Though this raises the potential for high energy efficiency and throughput, analog operation has significantly limited robustness, scale, and programmability.
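The "bit-scalable" idea can be illustrated in software: feed the input vector one bit-plane at a time, compute a binary-input dot product per row for each plane (the step an in-memory array would perform on its bit cells), and shift and accumulate the partial sums digitally. This is a conceptual sketch assuming unsigned integer inputs and bit-serial input processing, not the paper's circuit; input precision becomes a loop bound (`bits`), which is what makes precision configurable in this scheme.

```python
def bit_serial_matvec(matrix, vector, bits):
    """Compute matrix @ vector for unsigned `bits`-bit integer inputs by
    processing one input bit-plane per step and shift-accumulating."""
    if any(x < 0 or x >= 1 << bits for x in vector):
        raise ValueError(f"inputs must be unsigned {bits}-bit integers")
    acc = [0] * len(matrix)
    for b in range(bits):
        plane = [(x >> b) & 1 for x in vector]  # b-th bit of every input
        for r, row in enumerate(matrix):
            # Binary-input dot product: sum the weights whose input bit is 1.
            partial = sum(w for w, bit in zip(row, plane) if bit)
            acc[r] += partial * (1 << b)        # weight this plane by 2**b
    return acc


def reference_matvec(matrix, vector):
    """Direct multiply-accumulate, for comparison."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


if __name__ == "__main__":
    W = [[1, 2, 3], [4, 5, 6]]
    x = [7, 0, 5]
    print(bit_serial_matvec(W, x, bits=3))
```

Running it prints `[22, 58]`, the same result as `reference_matvec(W, x)`; raising `bits` trades more passes for higher input precision.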