Stuff The Internet Says On Scalability For February 23rd, 2018

Hey, it's HighScalability time:

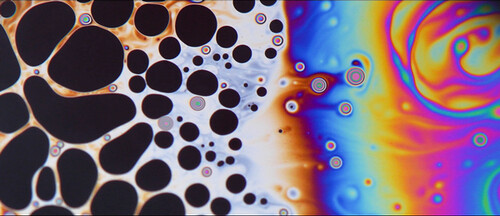

What does a bubble look like before it bursts? The image shows a brief period of stability before succumbing to molecular forces that pinch the film together and cause the bubble to burst. (Mr Li Shen - Imperial College London)

If you like this sort of Stuff then please support me on Patreon. And I'd appreciate it if you would recommend my new book—Explain the Cloud Like I'm 10—to anyone who needs to understand the cloud (who doesn't?). I think they'll learn a lot, even if they're already familiar with the basics.

- 20 million: daily DuckDuckGo searches, 55% growth; 38.3 billion: WeChat messages sent per day; 500 million: database of pwned passwords to check against; 38%: China's consumption of world IC production; 92%: Fortune 500 traffic is from bots; $2: Blue Pill: A 72MHz 32-Bit Computer; 5,000: Martian days of operation for the Opportunity Rover; 30.72 terabytes: Samsung SSD; 185%: Golden State Warriors drive up ticket prices; $1.3 billion: loss that happens when Kylie Jenner tweets about your new UI;

- Quotable Quotes:

- @slava_pestov: A senior programmer is merely a junior programmer who has given up

- @gabrtv: This is why FaaS/PaaS on top of container orchestration is so important. By running FaaS on Kubernetes, you hedge against the abstractions you've bet on today, while setting yourself up to benefit from the abstractions to come.

- Karin Strauss~ Their storage system now offers random access across 400 megabytes of data encoded in DNA with no bit errors

- @mipsytipsy: That reminds me ... about a year ago we posted our first open job rec. Here are some things I have observed since then. 1) There is a huge, underserved pool of skilled senior engineers who are actively repelled by bro culture. Of all genders. 2) there is an ocean of brilliant, overqualified, creative, entrepreneurial business folks who are sick of getting shit on by engineers, particularly technical founders.

- @KentLangley: “All the compute power of the top 500 supercomputer clusters today would represent less than 0.01% of all the Bitcoin mining operations worldwide.”

- @allspaw: Problems in business-critical software are detected, identified, and handled via the coordinated *cognitive* work of people coping with uncertainty and ambiguity.

No framework, tool, or process changes that. This is why I believe we need to take human performance seriously.

- Vinod Khosla: There are, perhaps, a few hundred sensors in the typical car and none in the body. A single ad shown to you on Facebook has way more computing power applied to it than a $10,000 medical decision you have to make.

- tryitnow: I've worked in finance for several SaaS companies and you're 100% right. Infrastructure is never the biggest cost, it's sales and marketing and most of that spend is a mystery in terms of attribution, so blended CAC is not terribly useful.

- Ariel Bleicher: 5G Champion’s prototype radios, for instance, operate in a 1-Gigahertz-wide band around 28 Gigahertz—10 times the maximum spectrum available to today’s 4G networks.

- @cl4es: I found a hack which reduces the one-off bootstrap overhead of using lambdas by ~75% (15ms or so)

- underwater: I work for a publisher that has zero ads. We have fast pages with minimal JS. We rolled out AMP purely for the SEO win and saw a huge uptick in traffic. If Google really cared about performance they’d reward publishers doing the right thing on their regular pages (which would benefit the web as a whole), not just those using AMP.

- Artur: Waze doesn’t get you to your destination any faster. So, is it better to spend 30 minutes following Waze through suburban neighborhoods and alleys than 27 minutes following Google Maps into a highway traffic jam?

- pradn: I'm in a team right now which I believe has a 10x programmer. He picked a simple threading model to prevent tons of wasted time on deadlocks and other threading issues. It's also easier to reason about and get new people on-boarded. When he reviews code, he finds bugs that prevent days of debugging down the road and suggests simpler architectures that make the code easier to understand and change. He has had this effect on ~20 people over ~5 years. I would not be surprised if he saved us ~1 year of dev time collectively.

- Vint Cerf: It's the mind-stretching practice of trying to think what the implications of technology will be that makes me enjoy science fiction. It teaches me that when you're inventing something you should try to think about what the consequences might be.

- DyslexicAtheist: Ethics scholars: "The artists are the ones who recognize the fundamental truth that human nature hasn't changed much since Shakespeare's time, no matter what fancy new tools you give us." Tech: "we can solve all problems with blockchains and an ICO / token sale!"

- Jamey Sharp: Google Wave tackled real problems, but failed because it relied on a revolution that never happened, which is the outcome you should expect for any technology project that expects everyone to switch to it right away. Would somebody please try again but with less hubris this time?

- @PaulMMCooper: I love that when Plato complains about the spread of the written word in 370 BC, he sounds like my granddad complaining about the internet.

- @mweagle: Remember when every app used to write their own C Unicode string libraries? And collections? And memory allocators? No? If not, congrats - those were *slow* times. Now project that inevitability into building cloud services.

- prepend: So it’s ok for google to scrape and save images and display them without compensating photographers (which I’m cool with), but not users? This is bizarre, anti user functionality. By default if the image is available on the web, it is meant to be seen. This is going to make high school PowerPoint users spend more time, and result in zero extra revenue and zero drop in copyright infringement.

- Robert W. Lucky: While transistors are continuing to shrink, it’s at a slower pace. The technology road map calls for 5-nanometer fabrication by about 2020, but since we can’t run those transistors faster—mostly because of heat dissipation problems—we will need to find effective ways of using more transistors in lieu of increasing clock speed. And because of increasing fabrication costs, these designs will have to be produced at high volume.

- Will Gallego: The systems we work through are constantly changing, the determining factors for their perceived stability in flux, and an adaptation to stimuli internal and external that allows it to achieve this experienced resilience. By focusing on a single instance as the sole cause, we remove attention from the surrounding interactions that contribute, leaving critical information hidden that desperately needs surfacing.

- MrDOS: I think all of the iOS text decoding crashes I've seen are under half-a-dozen code points in length. I know there are many, many possible 5- or 6-code point combinations of Unicode characters, but surely this is the sort of area where a genetic fuzzer like afl would excel at finding problems. It really surprises me that we keep seeing issues like this on iOS, especially when they don't seem to crop up elsewhere – you certainly don't hear about people's browsers and desktop applications crashing willy-nilly because of bugs in HarfBuzz or Pango, and you don't even see issues like this on macOS, which shares a lot of under-the-hood similarities with iOS. What makes iOS so different?

- He Xiao: Given that the computation burden on the web browser mainly stems from data chunk search and comparison, we reverse the traditional delta sync approach by lifting all chunk search and comparison operations from the client side to the server side. Inevitably, this brings considerable computation overhead to the servers. Hence, we further leverage locality-aware chunk matching and lightweight checksum algorithms to reduce the overhead. The resulting solution, WebR2sync+, outpaces WebRsync by an order of magnitude, and is able to simultaneously support 6800-8500 web clients' delta sync using a standard VM server instance based on a Dropbox-like system architecture.

- andr: One thing I think every Silicon Valley startup and investor should be afraid of is the incredible rate and depth of adoption of new technologies in China. I came across two examples recently. First, in China e-books have taken over paper books, not just in volume, but in publishers' minds [1]. Second, payment systems like AliPay are becoming the dominant in just a few years, completely circumventing credit cards [2]. Now, neither of these are technological breakthroughs in themselves. The Kindle has been around for over 10 years, and Apple Pay and its precursors are not news, either. However, neither of those technologies has had the impact on their respective markets that the creators hoped for. Paper books are still frequently cheaper than their Kindle versions, and there have hardly been any noteworthy straight-to-Kindle publications. Likewise, Apple Pay, which is only a replacement for your physical card, but still a transaction mostly owned by Visa/MasterCard, and not Apple, is still far from a dominant purchase mechanism, even in the Bay Area. All in all, the pace of adoption of disruptive technologies in the US is pretty poor. So, as an entrepreneur, I'd be much more worried not about inventing the cool new tech before China, but actually getting people hooked on it.

- Andy Bechtolsheim: We saw that 10 Gb/sec had a very long tail for almost ten years, and then many vendors jumped into 40 Gb/sec, as did Arista. But for 100 Gb/sec, we believe first of all that it has an extremely long tail, unlike 40 Gb/sec. So we think the relevant bandwidth point is really going to be 100 Gb/sec for a very long time, with the option to do 10 Gb/sec, 25 Gb/sec, or 50 Gb/sec on the server or storage I/O connections; 400 Gb/sec is going to be very important in certain use cases, and you can expect that Arista is working very hard on it. And we will be as always first or early to market. The mainstream 400 Gb/sec market is going to take multiple years. I believe initial trials will be in 2019, but the mainstream market will be even later. And just because 400 Gb/sec comes, by the way, does not mean 100 Gb/sec goes away. They are really going to be in tandem. The more we do a 400 Gb/sec, the more we will also do 100 Gb/sec. So they are really together – it is not either or.

- Symmetry: That was a bit misleading in some ways. First, in pipelining you'll typically measure how long a pipeline step is in FO4s, which is to say the delay required for one transistor to drive 4 other transistors of the same width. Intel will typically design its pipeline stages to have 16 FO4s of delay. IBM is more aggressive and will try to work it down to 10. But of those 10, 2 are there for the latches you added to create the stage and 2 are there to account for the fact that a clock edge doesn't arrive everywhere at exactly the same time. So if you take one of those 16 FO4 Intel stages and cut it in half you won't have two 8 FO4 stages but two 10 FO4 stages. And since those latch transistors take up space and energy you've got some severe diminishing returns problems. One thing that's changed as transistors have gotten smaller is that leakage has gotten to be more of a problem. You used to just worry about active switching power but now you have to balance using higher voltage and lower thresholds to switch your transistors quickly with the leakage power that that will generate. And finally velocity saturation is more of a problem on shorter channels making current go up more linearly with the gate voltage than quadratically.
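The arithmetic in Symmetry's comment is easy to lose in the prose, so here is a minimal sketch of it. It assumes the 4 FO4 of fixed overhead (2 for latches, 2 for clock skew) applies to every stage, which is how the comment gets from 16 to 10; the function names are made up for illustration.

```python
def split_stage(stage_fo4, overhead_fo4=4, pieces=2):
    """Per-stage delay, in FO4, after cutting one stage into `pieces` stages.

    Only the logic portion of the stage is divided. Every new stage pays
    the full fixed overhead again (latches plus clock-skew margin).
    """
    logic_fo4 = stage_fo4 - overhead_fo4
    return logic_fo4 / pieces + overhead_fo4


def clock_gain(stage_fo4, overhead_fo4=4, pieces=2):
    """How much faster the clock could tick after the split."""
    return stage_fo4 / split_stage(stage_fo4, overhead_fo4, pieces)


if __name__ == "__main__":
    # A 16 FO4 stage cut in half: 12 FO4 of logic becomes 6 + 4 per stage.
    print(split_stage(16))   # 10.0, not 8
    print(clock_gain(16))    # 1.6, not 2
```

Doubling the stage count buys 1.6x the clock rate rather than 2x, and splitting again buys even less, which is the diminishing-returns point the comment is making.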

- The future will be measured. Using sensors, sports become more like games in that individual actions can be scored and summed for a final score. Historically, sports have only had access to gross measurements like the final elapsed time or the final score. How do sports change when every competition becomes a quest?

- Snowboarders in the Olympics attach transponders around their legs so the judges can know how high each contestant rises in the air. Bigger air, bigger scores.

- It's not just snowboarders. The Olympics’ Never-Ending Struggle to Keep Track of Time: Many of the temporal innovations this year have less to do with timing, per se, than with its permutations: the real-time acceleration rate and brake speed of speed skaters and alpine skiers; the takeoff and landing speeds of snowboarders; g-forces in the bobsleigh. It’s a continuous stream of entertaining data, but it can also be fed back into the training process, helping athletes to understand where they gained or lost time.

- And it's not just data being collected; sensors catalyze the invention of new sports and new methods of scoring. The Olympics’ Never-Ending Struggle to Keep Track of Time: Perhaps the biggest temporal novelty in Pyeongchang is a new event, mass-start speed skating. In mass start, all the competitors—as many as twenty-four—are on the ice at once, racing to complete sixteen laps around the four-hundred-metre-long oval. The fourth, eighth, and twelfth laps are sprints, with the first, second, and third skaters to finish gaining, respectively, five points, three points, or one point. There’s a final sprint, too, offering even more points, which factor into the ranking of the top three competitors.

- Wouldn't it be cool if the Olympics and other competitions would open source this data so people could build the awesome things you just know they would invent?
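To make the "sports as summed scores" idea concrete, here is a rough sketch of tallying the intermediate sprints in mass start as the article describes them: laps 4, 8, and 12, worth 5, 3, and 1 points to the first three across the line. The final sprint is left out on purpose because the excerpt doesn't give its point values. The input format and names are hypothetical.

```python
from collections import defaultdict

SPRINT_LAPS = (4, 8, 12)    # intermediate sprint laps, per the article
SPRINT_POINTS = (5, 3, 1)   # points for first, second, third across the line


def intermediate_points(crossing_order_by_lap):
    """Sum sprint points per skater.

    `crossing_order_by_lap` maps a lap number to the list of skaters in the
    order they crossed the line on that lap (a hypothetical sensor feed).
    """
    totals = defaultdict(int)
    for lap in SPRINT_LAPS:
        order = crossing_order_by_lap.get(lap, [])
        # zip stops after three entries, so only the top three score.
        for skater, points in zip(order, SPRINT_POINTS):
            totals[skater] += points
    return dict(totals)


if __name__ == "__main__":
    feed = {
        4: ["A", "B", "C", "D"],
        8: ["B", "A", "D", "C"],
        12: ["A", "C", "B", "D"],
    }
    print(intermediate_points(feed))
```

With the sample feed, skater A ends up with 13 points, B with 9, C with 4, and D with 1.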

- This takes zero-downtime to a whole new level. In 1950, the 1700-ton Mexican Telephone and Telegraph Company building was relocated while running! The communications lines were still wired up and the operators still worked inside of the structure while the move occurred. The building was slowly shifted about 40 feet to its new location in just five days. Phone service was not interrupted. Managed Retreat. This is why you want packet switched networks. Apparently this is a thing. Raising of Chicago. Retro Indy: Rotating the Indiana Bell building.

- Have to say when I saw there was a tool that would allow you to check if your password had been compromised by actually entering your password into a text box, I almost had a heart attack. But it can be done securely using something called k-Anonymity. I've Just Launched "Pwned Passwords" V2 With Half a Billion Passwords for Download. Have I entered my password? Too chicken. But you can download the password database and check locally. Also, Validating Leaked Passwords with k-Anonymity.
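Here is a minimal sketch of the k-Anonymity idea, with no network calls. As I understand the scheme, the client SHA-1 hashes the password, sends only the first 5 hex characters, and gets back every known hash suffix in that bucket as `SUFFIX:COUNT` lines, so the comparison happens locally. The function names and the fake response below are assumptions for illustration, not the service's client library.

```python
import hashlib


def split_hash(password, prefix_len=5):
    """Return (prefix, suffix) of the uppercase SHA-1 hex digest.

    Only the prefix would ever leave the machine.
    """
    digest = hashlib.sha1(password.encode("utf-8")).hexdigest().upper()
    return digest[:prefix_len], digest[prefix_len:]


def count_in_range(suffix, range_body):
    """Look for our suffix in a bucket of 'SUFFIX:COUNT' lines.

    Returns the breach count, or 0 if the suffix is not in the bucket.
    """
    for line in range_body.splitlines():
        candidate, _, count = line.strip().partition(":")
        if candidate == suffix:
            return int(count)
    return 0


if __name__ == "__main__":
    prefix, suffix = split_hash("password")
    # Stand-in for what a range lookup on `prefix` might return.
    fake_bucket = "0" * 35 + ":2\n" + suffix + ":12345\n" + "F" * 35 + ":1"
    print(prefix, count_in_range(suffix, fake_bucket))
```

The server only ever sees a 5-character prefix shared by many unrelated hashes, which is why this is less scary than typing a password into a text box sounds.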

- Bijlmer (City of the Future, Part 1). For modernists early in the 20th century the perfect city was separated out by function, with distinct zones for housing, working, recreation, and traffic. Such an architecture they thought would be less noisy, less polluted, less chaotic, more approaching some Platonic ideal of Cityness they had in their minds. This ideal, as most are, was not based in research or fact, it was a yearning for the perfectibility of man. After the devastation of WWII there was an opportunity, like the rebuilding of Rome or London in earlier times, to rebuild cities according to this ideal. Oh, and politicians bought in because these cities were cheaper to build. Concrete cookie-cutter buildings cost less and go up fast. The result? As you might expect: failure. Cities are innovation platforms. Cities: Engines of Innovation. A Physicist Solves the City. Cities Are Innovative Because They Contain More Ideas to Steal. When we separate software out by function do we also create sterile unproductive ideals of what software should be?

- David Rosenthal glosses a lot of interesting papers in Notes from FAST18.

- Why Decentralization Matters. Nerds always dream of decentralization, I certainly do, but every real world force aligns on the side of centralization. We still have NAT with IPv6! Ironically, the reason given why decentralization will win is exactly why it won't: "Decentralized networks can win the third era of the internet for the same reason they won the first era: by winning the hearts and minds of entrepreneurs and developers." Decentralization is harder for developers, it's harder to build great software on top of, it's harder to monetize, it's harder to monitor and control, it's harder to onboard users, everything becomes harder. The advantages are technical, ideological, and mostly irrelevant to users. Wikipedia is crowd-sourced, it's in no way decentralized, it's locked down with process like Fort Knox. Decentralization is great for dumb pipes, that is the original internet, but not much else. Cryptonetworkwashing can't change architectures that have long proven successful in the market.

- Cold starts aren't just a theoretical problem. They can piss off mobile users with slow response times. You can't count on natural user traffic to keep the cache warm. Cold starting AWS Lambda functions. Trimming code didn't help. Neither did the universal salve of adding more memory. What worked was making an OPTIONS request in the background to warm up the function. Lesson: take the worst case into consideration. Unfortunately, a majority of users encountered the worst case due to my incorrect assumption about traffic patterns and cold starts.
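A rough sketch of the warm-up trick, assuming the function sits behind an HTTP endpoint that answers OPTIONS. The article did this from the client app; this version uses Python's standard library just to show the shape, and the helper names and timeout are made up.

```python
import urllib.request


def build_warmup_request(url):
    """Build a cheap OPTIONS request whose only job is to wake the function."""
    return urllib.request.Request(url, method="OPTIONS")


def warm_up(url, timeout=2.0):
    """Fire the warm-up request and return the HTTP status, or None on failure.

    Failures are swallowed on purpose: warming is best effort and should
    never break the real request that follows.
    """
    try:
        with urllib.request.urlopen(build_warmup_request(url), timeout=timeout) as resp:
            return resp.status
    except OSError:  # URLError and HTTPError are both OSError subclasses
        return None
```

In practice you would call `warm_up` from a background thread when the app starts, well before the user taps anything that needs the function.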

- Maybe you don't need Rust and WASM to speed up your JS. Typically when you read something like this: "performance benefits of replacing plain JavaScript in the core of source-map library with a Rust version compiled to WebAssembly" the instinct is to run far and fast. Vyacheslav Egorov did the opposite. After an incredibly deep and intricate analysis and endless tweaking he came up with a factor of 4 improvement in the JavaScript code.

- Most optimizations fall into three different groups: algorithmic improvements; workarounds for implementation independent, but potentially language dependent issues; workarounds for V8 specific issues.

- Conclusion: I am not gonna declare here that «we have successfully reached parity with the Rust+WASM version». However at this level of performance differences it might make sense to reevaluate if it is even worth the complexity to use Rust in source-map.

- Learnings: Profiler Is Your Friend; Algorithms Are Important; VMs Are Work in Progress. Bug Developers!; VMs Still Need a Bit of Help; Clever Optimizations Must be Diagnosable; Language and Optimizations Must Be Friends.

- Some mighty fine bit twiddling. Reading bits in far too many ways (part 2). Also, Iterating over set bits quickly.
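For a taste of the genre, here is a minimal sketch of the classic way to walk only the set bits of a word: isolate the lowest set bit with `x & -x`, then clear it with `x &= x - 1`. This is the textbook trick, not necessarily the exact variant benchmarked in the linked posts, and the function name is made up.

```python
def set_bit_positions(x):
    """Yield the index of each set bit in x, lowest first.

    The loop runs once per set bit rather than once per bit position.
    """
    if x < 0:
        raise ValueError("expects a non-negative integer")
    while x:
        lowest = x & -x                 # isolate the lowest set bit
        yield lowest.bit_length() - 1   # convert that bit to its index
        x &= x - 1                      # clear the lowest set bit


if __name__ == "__main__":
    print(list(set_bit_positions(0b101001)))  # [0, 3, 5]
```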

- What should we be worried about concerning vulnerabilities in implantable devices? This and many other scary topics are addressed in A few notes on Medsec and St. Jude Medical.

- Like most computers, implantable devices can communicate with other computers. To avoid the need for external data ports – which would mean a break in the patient’s skin – these devices communicate via either a long-range radio frequency (“RF”) or a near-field inductive coupling (“EM”) communication channel, or both. Healthcare providers use a specialized hospital device called a Programmer to update therapeutic settings on the device (e.g., program the device, turn therapy off).

- The real nightmare scenario is a mass attack in which a single resourceful attacker targets thousands of individuals simultaneously — perhaps by compromising a manufacturer’s back-end infrastructure — and threatens to harm them all at the same time.

- This is something I hadn't thought of: The real challenge in securing an implantable device is that too much security could hurt you. As tempting as it might be to lard these devices up with security features like passwords and digital certificates, doctors need to be able to access them. Sometimes in a hurry.

- Many manufacturers have adopted an approach that cuts through this knot. The basic idea is to require physical proximity before someone can issue commands to your device. Specifically, before anyone can issue a shock command (even via a long-range RF channel) they must — at least briefly — make close physical contact with the patient.

- It’s important that vendors take these issues seriously, and spend the time to get cryptographic authentication mechanisms right — because once deployed, these devices are very hard to repair, and the cost of a mistake is extremely high.

- When automation goes bad it depends on its remote robotic appendages to intervene. Google's robo-CTRL-ALT-DEL failed, hung networks and Compute Engine for 90 minutes: During the outage, the first component didn't send any data. That lack of information meant VMs in other zones couldn't figure out how to contact their colleagues. Autoscaler also needed information flow from the first resource, so it too ground to a halt...Automatic failover was unable to force-stop the process, and required manual failover to restore normal operation.

- How does Chinese tech stack up against American tech?: At the present pace China’s tech industry will be at parity with America’s in 10-15 years. This will boost the country’s productivity and create tech jobs. But the real prize is making far more profits overseas and setting global standards. Here the state’s active role may make some countries nervous about relying on Chinese tech firms. One scenario is that national-security worries mean China’s and America’s tech markets end up being largely closed to each other, leaving everywhere else as a fiercely contested space. This is how the telecoms-equipment industry works, with Huawei imperious around the world but stymied in America. For Silicon Valley, it is time to get paranoid. Viewed from China, many of its big firms have become comfy monopolists. In the old days all American tech executives had to do to see the world’s cutting edge was to walk out the door. Now they must fly to China, too. Let’s hope the airports still work.

- Kind of odd: in Upwork's Q4 2017 Skills Index, DynamoDB skills ranked No. 2 in employer demand, behind only the upstart bitcoin technology. Number 3 is React Native. Freelancing Site Sees Surge in Demand for Amazon DynamoDB Skills.

- Will China Succeed In Memory?: IP issues are just one of the challenges. China’s memory makers also face stiff competition in a tough market. “I look at this at maybe almost like what China did in the foundry industry. They have 10% market share. Maybe China will get 10% of the memory market,” IC Insights’ McClean said. “I don’t think it would be zero. But I don’t see big chunks of market share coming out of Samsung, Micron and Hynix anytime soon.”

- Though you'll have 10,000 years to take a tour, beginnings are always the most fun. Clock of the Long Now - Installation Begins. Looks so cool!

- Nice tutorial on using Amazon's new AppSync. Running a scalable & reliable GraphQL endpoint with Serverless. We have a new entrant into the how to remake Twitter competition. Looks fairly straightforward.

- Good AAAI/AIES’18 Trip Report (Association for the Advancement of Artificial Intelligence): Activity detection is obviously a hot topic; Face identification is also a widely discussed topic; To improve Deep Neural Networks, many researchers seek to transfer the learned knowledge to new environments; One theme in this research is reducing the computation overhead; many system-related papers are presented at this conference; IBM presents a series of papers and demos; Adversarial learning is one key topic in different vision-related research areas.

- Good advice on Running a 400+ Node Elasticsearch Cluster. Meltwater each day indexes about 3 million editorial articles and almost 100 million social posts, current disk usage is about 200 TB for primaries, and about 600 TB with replicas, handling around 3k requests per minute. The main cluster is run on AWS, using i3.2xlarge instances for our data nodes. Three dedicated master nodes in different availability zones with discovery.zen.minimum_master_nodes set to 2 to avoid the split-brain problem. Why manage our own ES cluster? We considered hosted solutions but we decided to opt for our own setup, for these reasons: AWS Elasticsearch Service allows us too little control, and Elastic Cloud would cost us 2-3 times more than running directly on EC2.
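Why 2 for three masters? The setting is a quorum: a strict majority of master-eligible nodes, so two halves of a partitioned cluster can never both elect a master. A minimal sketch of the arithmetic, with made-up function names:

```python
def minimum_master_nodes(master_eligible):
    """Smallest strict majority of the master-eligible nodes."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


def tolerated_master_failures(master_eligible):
    """How many masters can be lost while a quorum is still reachable."""
    return master_eligible - minimum_master_nodes(master_eligible)


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(n, minimum_master_nodes(n), tolerated_master_failures(n))
```

For Meltwater's three masters the quorum is 2 and one master can be lost. Note that two masters tolerate zero failures, which is why dedicated masters tend to come in odd numbers.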

- Maybe AIs need to be raised in a village? Brainpower boost for birds in large groups.

- If you are looking to create an architecture from scratch then The best architecture with Docker and Kubernetes — myth or reality? is a good resource, but simple it's not. Sparkling illustrations clarify each topic. Covers topics like: Setting up a development environment; Automated testing; Systems delivery; Continuity in integration and delivery; Rollback systems; Ensuring information security and audit; Identity service; and so on. Be warned, in the end whatever you create will go the way of all flesh: Like it or not, sooner or later your entire architecture will be doomed to failure. It always happens: technologies become obsolete fast (1–5 years), methods and approaches — a bit slower (5–10 years), design principles and fundamentals — occasionally (10–20 years), yet inevitably.

- Illuminating exploration of Voxel lighting: The first improvement we propose in this model is modifying the sky light to support multiple directions...When propagating light field, we need to often perform component-wise operations on the channels of each light field, which we can pack into a single machine word. Here’s a picture of how this looks assuming 4-bits per channel for the simpler case of a 32-bit value.
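The excerpt talks about packing light channels into one machine word at 4 bits per channel in a 32-bit value, which works out to eight channels. Here is a rough sketch of that layout with two component-wise operations. It unpacks and repacks for clarity rather than using the branch-free bit tricks a real engine would want, and every name here is hypothetical.

```python
BITS_PER_CHANNEL = 4
CHANNELS = 8                              # 8 channels x 4 bits = 32 bits
CHANNEL_MASK = (1 << BITS_PER_CHANNEL) - 1


def pack(channels):
    """Pack eight 4-bit channel values into one 32-bit integer."""
    if len(channels) != CHANNELS:
        raise ValueError("expected exactly 8 channels")
    word = 0
    for index, value in enumerate(channels):
        if not 0 <= value <= CHANNEL_MASK:
            raise ValueError("channel value must fit in 4 bits")
        word |= value << (index * BITS_PER_CHANNEL)
    return word


def unpack(word):
    """Split a packed 32-bit word back into its eight channel values."""
    return [(word >> (i * BITS_PER_CHANNEL)) & CHANNEL_MASK for i in range(CHANNELS)]


def channel_max(a, b):
    """Component-wise max, e.g. merging light arriving from two neighbours."""
    return pack([max(x, y) for x, y in zip(unpack(a), unpack(b))])


def channel_decay(word):
    """Component-wise decrement clamped at zero, e.g. one propagation step."""
    return pack([max(v - 1, 0) for v in unpack(word)])
```

The payoff of the packed form is that a whole voxel's light state moves around as one integer, and the per-channel loops above are what the word-level tricks in the article replace.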

- The major lesson from Google Wave? You need a realistic onboarding strategy. Switching to a new system is incremental. Nobody will do it all at once. It's the same bootstrapping problem every social network has. How not to replace email: Wave was intended to completely replace existing systems like email and chat, so it had no provisions for interoperating with those systems. To succeed, Wave required a revolution, a critical mass of people switching to the new way and dragging the rest of the world with them—and those haven’t worked out very often. Making the revolution even more unlikely, initially Google offered Wave accounts by invitation only, so the people you wanted to talk with probably couldn’t even get an account.

- How We Almost Burned Down the House: In reality, our monitoring and alerting system was insufficient. We didn’t receive notice when a server load exceeded the healthy state before the user experience became bad; Rolling out in equal pieces, such as 7, 7, 7, 7, 7, 7, would have detected the issue earlier, with a smaller group added, hence, it would have been easier to handle or roll back and less users would have been affected; We ultimately used eight Azure-managed apps at full scale, all up to 100% of load, and we could not scale more. We accepted limited scalability, as the limits seemed so harmless.

- jhuangtw-dev/xg2xg: A handy lookup table of similar technology and services to help ex-googlers survive the real world :)

- Into puzzles? You might like Breaking Codes and Finding Patterns - Susan Holmes.

- Funnest part of deep learning is the ability to abstract patterns from one domain and then apply them to another. It's what one might call creativity. A Closed-form Solution to Photorealistic Image Stylization: Photorealistic image style transfer algorithms aim at stylizing a content photo using the style of a reference photo with the constraint that the stylized photo should remain photorealistic. While several methods exist for this task, they tend to generate spatially inconsistent stylizations with noticeable artifacts. In addition, these methods are computationally expensive, requiring several minutes to stylize a VGA photo. In this paper, we present a novel algorithm to address the limitations. The proposed algorithm consists of a stylization step and a smoothing step. While the stylization step transfers the style of the reference photo to the content photo, the smoothing step encourages spatially consistent stylizations. Unlike existing algorithms that require iterative optimization, both steps in our algorithm have closed-form solutions. Experimental results show that the stylized photos generated by our algorithm are twice more preferred by human subjects in average. Moreover, our method runs 60 times faster than the state-of-the-art approach.

- The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation: Artificial intelligence and machine learning capabilities are growing at an unprecedented rate. These technologies have many widely beneficial applications, ranging from machine translation to medical image analysis. Countless more such applications are being developed and can be expected over the long term. Less attention has historically been paid to the ways in which artificial intelligence can be used maliciously. This report surveys the landscape of potential security threats from malicious uses of artificial intelligence technologies, and proposes ways to better forecast, prevent, and mitigate these threats. We analyze, but do not conclusively resolve, the question of what the long-term equilibrium between attackers and defenders will be. We focus instead on what sorts of attacks we are likely to see soon if adequate defenses are not developed.

- Towards practical high-speed high dimensional quantum key distribution using partial mutual unbiased basis of photon's orbital angular momentum: Here we propose a sort of practical high-speed high dimensional QKD using partial mutual unbiased basis (PMUB) of photon's orbital angular momentum (OAM). Different from the previous OAM encoding, the high dimensional Hilbert space we used is expanded by the OAM states with same mode order, which can be extended to considerably high dimensions and implemented under current state of the art. Because all the OAM states are in the same mode order, the coherence will be well kept after long-distance propagation, and the detection can be achieved by using passive linear optical elements with very high speed. We show that our protocol has high key generation rate and analyze the anti-noise ability under atmospheric turbulence. Furthermore, the security of our protocol based on PMUB is rigorously proved. Our protocol paves a brand new way for the application of photon's OAM in high dimensional QKD field, which can be a breakthrough for high efficiency quantum communications.

- Here's the AAAI-18 Main Track Accepted Paper List.

- Do you need a Blockchain? No.

- Fail-Slow at Scale: Evidence of Hardware Performance Faults in Large Production Systems: Fail-slow hardware is an under-studied failure mode. We present a study of 101 reports of fail-slow hardware incidents, collected from large-scale cluster deployments in 12 institutions. We show that all hardware types such as disk, SSD, CPU, memory and network components can exhibit performance faults. We made several important observations such as faults convert from one form to another, the cascading root causes and impacts can be long, and fail-slow faults can have varying symptoms. From this study, we make suggestions to vendors, operators, and systems designers.

- Paper review. IPFS: Content addressed, versioned, P2P file system: The question is will it stick? I think it won't stick, but this work will still be very useful because we will transfer the best bits of IPFS to our datacenter computing as we did with other peer-to-peer systems technology. The reason I think it won't stick has nothing to do with the IPFS development/technology, but has everything to do with the advantages of centralized coordination and the problems surrounding decentralization. I rant more about this later in this post... I just don't believe dialing the crank to 11 on decentralization is the right strategy for wide adoption. I don't see the killer application that makes it worthwhile to move away from the convenience of the more centralized model to open the Pandora's box with a fully-decentralized model.
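If "content addressed" is new to you, here is a minimal sketch of the core idea: a block's key is a hash of its bytes, so any peer can verify what it fetched without trusting who served it. This uses a plain SHA-256 hex digest in an in-memory dict. Real IPFS wraps hashes in multihash identifiers and links blocks into a Merkle DAG, none of which is modeled here, and the class name is made up.

```python
import hashlib


class ContentStore:
    """Toy content-addressed store: the key is derived from the data itself."""

    def __init__(self):
        self._blocks = {}

    def put(self, data: bytes) -> str:
        """Store a block and return its address (SHA-256 hex digest)."""
        address = hashlib.sha256(data).hexdigest()
        self._blocks[address] = data  # identical content dedupes for free
        return address

    def get(self, address: str) -> bytes:
        """Fetch a block and verify it still matches its address."""
        data = self._blocks[address]
        if hashlib.sha256(data).hexdigest() != address:
            raise ValueError("block does not match its address")
        return data


if __name__ == "__main__":
    store = ContentStore()
    address = store.put(b"hello, decentralized world")
    print(address, store.get(address))
```

Notice the address says nothing about where the data lives. That is the part that makes peer-to-peer distribution possible, and also the part that makes the coordination problems in the review hard.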