Stuff The Internet Says On Scalability For March 16th, 2018

Hey, it's HighScalability time:

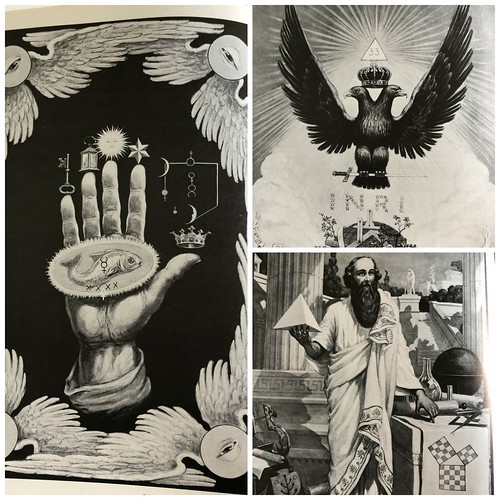

Hermetic symbolism was an early kind of programming. Symbols explode into layers of other symbols, like a programming language, only the instruction set is the mind.

If you like this sort of Stuff then please support me on Patreon. And I'd appreciate if you would recommend my new book—Explain the Cloud Like I'm 10—to anyone who needs to understand the cloud (who doesn't?). I think they'll learn a lot, even if they're already familiar with the basics.

- ~30: AWS services used by iRobot; 450,000: Shopify S3 operations per second; $240: yearly value of your data; ~day: time to load a terabyte from Postgres into BigQuery; 5 million: viewers for top Amazon Prime shows; 130,000: Airbusians move from Microsoft Office to Google Suite; trillion: rows per second processed by MemSQL; 38 million: Apple Music paid members; 4 million: Microsoft git commits for a Windows release;

- Quotable Quotes:

- Stephen Hawking: Although I cannot move and I have to speak through a computer, in my mind I am free.

- Roger Penrose: Despite [Stephen Hawking's] terrible physical circumstance, he almost always remained positive about life. He enjoyed his work, the company of other scientists, the arts, the fruits of his fame, his travels. He took great pleasure in children, sometimes entertaining them by swivelling around in his motorised wheelchair. Social issues concerned him. He promoted scientific understanding. He could be generous and was very often witty. On occasion he could display something of the arrogance that is not uncommon among physicists working at the cutting edge, and he had an autocratic streak. Yet he could also show a true humility that is the mark of greatness.

- Raymond Wong: The most revealing part of the report exposes how Apple didn't even have plans to integrate Siri into HomePod until after the Amazon Echo launched

- @ajaynairthinks: When I tell people I am the founding PM on Lambda, the question I often get is how the idea for #AWS Lambda/#Serverless came about in the first place. The truth is, its way too hard to point to one person/event as the defining moment.

- @ImerM1: Just did some budget analysis. Turns out we've managed to reduce our AWS costs for RNA-seq by 90%, by using Lambda, Batch and Step Functions

- Daniel Lemire: My numbers are clear: in my tests, it is three times faster to sum up the values in a LinkedHashSet.

- @Tr0llyTr0llFace: The Bitcoin network is processing 200,000 transactions per day at a cost of $3B per year. Visa is processing 150,000,000 transactions per day at a cost of $8B per year. Visa also does insurance, credit, customer support...With Bitcoin your funds are lost if you forget your PIN.

- Linus Torvalds: It looks like the IT security world has hit a new low.

- Steve Jobs~ When you're the janitor, reasons matter. Somewhere between the janitor and the CEO, reasons stop mattering. That Rubicon is crossed when you become a VP.

- @ByRosenberg: The San Jose Mercury News, Oakland Tribune, Contra Costa Times and their sister papers had 1,000 editorial employees in 2000. Now they're down to 100. Devastating to see Bay Area news coverage decimated in one of the world's most important places to cover

- @jimwebber: Amazed at the medical/genomics paper I've just reviewed where the whole thing was built in Neo4j and processed via Cypher. Our user community is extraordinary.

- @maria_fibonacci: Mansplainings aside, I build things with k8s as my day job, and I build things with Elixir because it's my fav lang. Even if they're different types of software, the reasons people end up using them are basically the same, yet the additional layer of complexity matters.

- @gwenshap: Things I learned at #StrataData last week. I only attended one session, but talked to 100+ attendees. So, it is the "hallway" view. 1. Machine learning is sexier than ever. Lots of talk. I got the impression that organizations built around ML can do it, but existing business still didn't make the leap. My bet is that in 2-3 years we'll start seeing the "early majority".

- Jim Handy: In a nutshell Mark [Thirsk] is telling us that there may be some stress on DRAM and NAND flash wafer supplies, but the companies that will feel the greatest impact will be tier 2 chip makers who purchase the lowest cost wafers.

- michaelt: So I'm a mid-level manager at a company with a few hundred developers. The more developers you hire, the higher the chance you'll start collecting people with obscure (but usually reasonably easy to satisfy) tool preferences. You know, the guy who changes his IDE to emacs key bindings, the guy who does all his e-mail using mutt when everyone else uses gmail, the girl who uses a Dvorak keyboard layout, the guy who insists he works best on a 1024x768 screen, and so on. Having tried a variety of industry tools and thought about how you work best is usually a good sign[1]. Their favourite tools aren't my favourite tools, but they work for me so if they're not happy, I'm not happy.

- Paul Kunert: Airbus will organise information around “teams, topics and programmes” and “let people go to the information that they need for their jobs… almost the opposite from an environment that is based on email where you receive whatever it is that others decide you can receive.”

- Geoff Huston: However, I also suspect that the intelligence agencies are already focussing elsewhere. If the network is no longer the rich vein of data that it used to be, then the data collected by content servers is a more than ample replacement. If the large content factories have collected such a rich profile of my activities, then it seems entirely logical that they will be placed under considerable pressure to selectively share that profile with others. So, I’m not optimistic that I have any greater level of personal privacy than I had before. Probably less. Meet the new boss. Same as the old boss.

- @PaulDJohnston: Counter prediction: very few software engineers will need to know about k8s et al because #serverless. It's code that matters and orchestration is becoming a commodity. (Sounding like @swardley). Also, zip is a more robust deployment artifact

- Joel Hruska: In all cases, the pirated version of the [Final Fantasy XV] was faster, by 5 percent to a whopping 33 percent, depending on the scene...The implications of these findings are straightforward: The piracy protections baked into the game are hitting overall performance, causing a significant set of issues. Companies regularly deny it happens, but tests like this punch holes in such claims.

- Mathieu Ripert: we [Instacart] found out that with quantile regression we were able to plan deliveries closer to their due time without increasing late percentage. This effect allowed us to explore more trip combinations in our fulfillment engine and therefore increase efficiency (one of our most important metrics) by 4%.

- @vijaypande: We argue that the future of predicting the interactions between a drug and its prospective target demands more than simply applying deep learning algorithms from other domains, like vision and natural language, to molecules.

- John Allspaw: The increasing significance of our systems, the increasing potential for economic, political, and human damage when they don’t work properly, the proliferation of dependencies and associated uncertainty — all make me very worried. And, if you look at your own system and its problems, I think you will agree that we need to do more than just acknowledge this — we need to embrace it.

- Kevlin Henney: Move Slow and Mend Things

- Sarah Zhang: To his surprise, the DNA revealed that humans and Neanderthals did interbreed in their time together in Europe. Possibly even more than once. Today, surprisingly, the people carrying the most Neanderthal DNA are not in Europe but in East Asia

- @CrispinCowan0: "A 'distributed system' is one in which i can't get my work done because a machine I've never heard of is down" -- Leslie Lamport

- @SwiftOnSecurity: "Hey my self-driving car stops when it sees a dog" "We don't support dogs." "What?" "We couldn't figure out how to predict where dogs are going. But don't worry, we handle it safely now." "By stopping?" "AND waiting for the dog to leave. This is all explained in the patch notes."

- @swardley: I cannot emphasise enough that AWS Lambda represents the industrialisation of code execution platforms to a utility (from a product) ... this is not a minor thing, this is a massive thing. Containers etc - all good stuff but destined to be invisible subsystems at best.

- @mweagle: “Any architecture that enables fewer developers to accomplish more and spend less time on operational maintenance is worth thinking hard about.” (via @Pocket) #longreads

- maxander: Everything in biological systems depends on everything else; all variables are global, all methods are public, and all definitions are recursive. It's all spaghetti code- there is nothing like the separation of functionality that we impose on our designed artefacts for the sake of our limited minds. A signal needed to be sent from one place to another, and the brain has the machinery for endocrine functions already, so why not have it handle it? So while this is a quite valid and interesting result, it's not nearly as exciting as the popular press is going to make it out to be.

- Stephen Hawking: If machines produce everything we need, the outcome will depend on how things are distributed. Everyone can enjoy a life of luxurious leisure if the machine-produced wealth is shared, or most people can end up miserably poor if the machine-owners successfully lobby against wealth redistribution. So far, the trend seems to be toward the second option, with technology driving ever-increasing inequality.

- @iancassel~ "If you want to make a difference in this world you will endure more pain than those who don't. Increase your threshold for pain and criticism. " -- Elon Musk SXSW 2018

- @swardley: X: You're quite harsh against IBM executives. Me: This enormous, mile high Iceberg of cloud was visible way before Amazon appeared. IBM executives were steering the ship that rammed into it despite the 600 foot neon warning signs. It certainly wasn't the engineer's fault.

- @davkean: I'm going to say this again, looking at latest data for solution load time, solutions take 3 times longer to load when the OS is on a spinning disk versus an SSD. About 1/4 of you still have spinning disks; get an SSD today.

- @stevesi: Remote versus central/HQ work comes up often. Very emotional debate. Pits many forces “against” each other: SV v. other tech centers, modern v. old school work styles, work-life balance, etc. Many thoughts follow (HT to friend @ManuKumar for thoughtful thread on topic) 1/many

- @copyconstruct: "We [Facebook] use two metrics, the web servers’ 99th percentile response time and HTTP fatal error rate, as proxies for the user experience, and determined in most cases this was adequate to avoid bad outcomes."

- Sabine Hossenfelder: The LHC hasn’t seen anything new besides the Higgs. This means the laws of nature aren’t “natural” in the way that particle physicists would have wanted them to be. The consequence is not only that there are no new particles at the LHC. The consequence is also that we have no reason to think there will be new particles at the next higher energies – not until you go up a full 15 orders of magnitude, far beyond what even futuristic technologies may reach.

- Michael Nygard: Just as oil production led to new uses of oil that reshaped everything from consumer products to food production to hygiene, I fully expect data-fueled ML models to reshape this century. Moreover, we will see demand for ever-greater data production from our homes, workplaces, and devices. This will cause tension and conflict about data use just as happened with land-use, water-use, and mineral rights. That will lead to new legal regimes and doctrines. In extreme cases, it may lead to revolutions similar to the Revolutions of 1848 in Europe.

- Adam Hargreaves: But add dark DNA to the picture, and that’s not necessarily the case. If genes contained within these mutation hotspots have a greater chance of mutating than those elsewhere, they will display more variation on which natural selection can act, so the traits they confer will evolve faster. In other words, dark DNA could influence the direction of evolution, giving a driving role to mutation. Indeed, my colleagues and I have suggested that mutation rates in dark DNA may be so rapid that natural selection cannot act fast enough to remove deleterious variants in the usual way. Such genes might even become adaptive later on, if a species faces a new environmental challenge.

- Ah, software development is so glamorous. Now we know why Siri was so dumb for so long: Instead of continuously updating Siri so that it would get smarter faster, Richard Williamson, one of the former iOS chief Scott Forstall's deputies, reportedly only wanted to update the assistant annually to coincide with new iOS releases...Williamson denies the accusations that he slowed Siri development down and instead cast blame on Siri's creators. "It was slow, when it worked at all," Williamson said. "The software was riddled with serious bugs. Those problems lie entirely with the original Siri team, certainly not me."..."Members of the Topsy team expressed a reluctance to work with a Siri team they viewed as slow and bogged down by the initial infrastructure that had been patched up but never completely replaced since it launched."

- Stack Overflow released its Developer Survey Results 2018.

- 48.3% of developers are developing software for Linux, first time Linux has been in the lead. Windows Desktop or Server at 35.4%. Android at 29%. And a surprise: AWS at 24.1%.

- This is higher than I would expect: 24.8% of developers learned to code within the past five years. Does that mean more of these developers are on SO? Or is the base of programmers expanding fast?

- So sad: For 69.8% of developers JavaScript is their most commonly used programming language.

- No surprise given the constant Rust blitz we've been seeing: Rust is the most loved programming language.

- Is Elixir really worth it? sasajuric: So in general, my sentiment is that BEAM offers a proper foundation for building arbitrary complex system with the least amount of technical complexity. Couple that with developer friendliness and approachability of Elixir, and you get a great tool which allows you to start simple, move forward at a steady pace, and have the technology which can take you a very long way and help you deal with complex problems at a very large scale, without forcing you to step outside and use a bunch of 3rd party products, but still allowing you to interact with such tools and even write parts of your system in other languages.

- Azure Functions – Significant Improvements in HTTP Trigger Scaling: In my view the Azure Functions team have done some impressive work in a fairly short space of time to transform the performance of Azure Functions triggered by HTTP requests...In the real world representative tests there is still a significant response time gap for HTTP triggered compute between Azure Functions and AWS Lambda however it is not clear from these tests alone if this is related to Functions or other Azure components. Time allowing I will investigate this further.

- Just because there's an API doesn't mean you don't have to understand what's going on underneath the covers. Future Proofing Our Cloud Storage Usage. Given their high rate of S3 operations, Shopify was hitting S3 errors because of the hot partition problem one often gets with sharding. The solution is to add randomness to the key name to avoid rate-limits on hot partitions by distributing writes over many partitions. Result: median latencies dropped by 60%; 95th percentile dropped by about 25%.
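The key-naming idea above can be sketched in a few lines. This is a minimal illustration, not Shopify's actual code: the function name `partitioned_key`, the prefix length, and the sample key names are all assumptions. It also swaps pure randomness for a hash-derived prefix, so the same logical name always maps to the same stored key and reads can recompute it.

```python
import hashlib


def partitioned_key(key: str, prefix_len: int = 4) -> str:
    """Prepend a short hash-derived prefix to an object key.

    Keys that sort next to each other (for example sequential file names)
    end up with unrelated prefixes, so writes are spread over many key
    ranges instead of piling onto one hot partition.
    """
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return f"{digest[:prefix_len]}/{key}"


if __name__ == "__main__":
    # Hypothetical, lexically adjacent keys that would otherwise share a partition.
    for name in ("shop-1/img/0001.png", "shop-1/img/0002.png", "shop-1/img/0003.png"):
        print(partitioned_key(name))
```

The trade-off is that prefixed keys no longer list in their natural order, so anything that relied on listing by prefix needs its own index.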

- Scaling eventually requires partitioning. How do you partition efficiently? Google came up with Affinity Clustering, a hierarchical clustering method based on Boruvka's MST algorithm. Balanced Partitioning and Hierarchical Clustering at Scale (video, paper): We apply balanced graph partitioning to multiple applications including Google Maps driving directions, the serving backend for web search, and finding treatment groups for experimental design. For example, in Google Maps the World map graph is stored in several shards. The navigational queries spanning multiple shards are substantially more expensive than those handled within a shard. Using the methods described in our paper, we can reduce 21% of cross-shard queries by increasing the shard imbalance factor from 0% to 10%. As discussed in our paper, live experiments on real traffic show that the number of multi-shard queries from our cut-optimization techniques is 40% less compared to a baseline Hilbert embedding technique. This, in turn, results in less CPU usage in response to queries. In a future blog post, we will talk about application of this work in the web search serving backend, where balanced partitioning helped us design a cache-aware load balancing system that dramatically reduced our cache miss rate.
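To make the Boruvka connection concrete, here is a small single-machine sketch of the textbook algorithm used as a clustering procedure: in each round every cluster picks its cheapest outgoing edge and merges along it, and the cluster labels after each round form one level of a hierarchy. It is not Google's distributed implementation and it does no balancing; the helper names and the toy graph are assumptions for illustration.

```python
def find(parent, x):
    """Union-find lookup with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def boruvka_round(edges, parent):
    """Run one Boruvka round and return how many merges happened."""
    cheapest = {}
    for edge in edges:
        _, u, v = edge
        ru, rv = find(parent, u), find(parent, v)
        if ru == rv:
            continue  # edge is internal to a cluster
        for root in (ru, rv):
            # Tuple comparison (weight, u, v) gives a deterministic tie-break.
            if root not in cheapest or edge < cheapest[root]:
                cheapest[root] = edge
    merged = 0
    for _, u, v in cheapest.values():
        ru, rv = find(parent, u), find(parent, v)
        if ru != rv:
            parent[ru] = rv
            merged += 1
    return merged


def cluster_levels(num_nodes, edges):
    """Return one list of cluster labels per round, finest level first."""
    parent = list(range(num_nodes))
    levels = []
    while boruvka_round(edges, parent) > 0:
        levels.append([find(parent, i) for i in range(num_nodes)])
    return levels


if __name__ == "__main__":
    # Toy graph as (weight, u, v): two tight triangles' worth of nodes joined by one costly edge.
    toy_edges = [(1, 0, 1), (2, 1, 2), (10, 2, 3), (1, 3, 4), (2, 4, 5)]
    for depth, labels in enumerate(cluster_levels(6, toy_edges), start=1):
        print(depth, labels)
```

On the toy graph the first round yields two clusters and the second round joins them, which is the kind of level structure a hierarchical method can cut at whatever granularity it needs.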

- I’m trying to learn more about how small startups are using AWS. The winner is Terraform.

- jonathan-kosgei: We’re provisioning resources via Terraform, it’s incredibly convenient especially considering the number of resources we deploy to multiple regions

- Relax: Provision with terraform, deploy with ansible. Ansible would do very little, just pull down our packaged software via apt-get which was python virtualenvs...We supposedly adhered to the “devops” mantra of “devops is a shared responsibility,” but in practice no one wanted to deal with it so it usually fell on one or two people to keep things sane.

- tschellenbach: Cloudformation, Cloud-Init, Puppet, Boto and Fabric. Works like a charm, but none of these tools are perfect.

- enobayram: we are a small startup with ~10 engineers. We’re using AWS, both for customer services (we deploy a few instances per customer, so they are completely isolated) and for the services that support our own workflows (CI etc.). For customer instances, we currently use simple scripts utilizing the AWS CLI tools. For our supporting infrastructure we use NixOps. NixOps is a blast when it works, though it requires some nix-fu to keep it working.

- twotwotwo: It’s a Python/Django app, https://actionk.it, which some lefty groups online use to collect donations, run their in-person event campaigns and mailing lists and petition sites, etc. We build AMIs using Ansible/Packer; they pull our latest code from git on startup and pip install deps from an internal pip repo. We have internal servers for tests, collecting errors, monitoring, etc. We have no staff focused on ops/tools.

- Philip Ball: But doesn’t quantum physics involve a rather uniquely odd sort of behavior? Not really. The Schrödinger equation does not so much describe what quantum particles are actually “doing,” rather it supplies a way of predicting what might be observed for systems governed by particular wavelike probability laws.

- Matteo Valleriani: An entire new discipline – the digital humanities – emerged in order to allow scholars to manage this wealth of information. Historical sources, their electronic copies and bibliographic metadata are increasingly immersed in a frame of annotations, ideas and relations electronically produced by historians while studying our material and intellectual heritage.

- danielcompton: I’m running on Google Cloud Platform, but there’s enough similarities to AWS that hopefully this is helpful. I use Packer to bake a golden VM image that includes monitoring, logging, e.t.c. based on the most recent Ubuntu 16.04 update. I rebuild the golden image roughly monthly unless there is a security issue to patch. Then when I release new versions of the app I build an app specific image based on the latest golden image. It copies in an Uberjar from Google Cloud Storage (built by Google Cloud Builder). All of the app images live in the same image family

- bja: Part of my current gig (https://yours.co) uses the Serverless framework. To answer your questions: Deploying NodeJS lambda functions along with a variety of CloudFormation resources (Serverless supports raw CloudFormation syntax for spinning up just about anything on AWS - this can even be customized to non-AWS resources as well). Deployment is sls deploy. Provisioning is CloudFormation (described previously)...We don’t have anyone responsible for it - it’s permeated through the team and documented in a Nuclino wiki

- jweede: We’re a small startup that runs on AWS. We deploy a python app, jenkins, gitlab, sentry, and probably other self-hosted services that I’m forgetting. We’re currently using Terraform, Salt, and bridge some gaps with Python...Our team is ~30 devs, and we have 2-3 people who spend part of their time maintaining the above.

- Redis gets a Better Hyperloglog cardinality estimation algorithm: The current implementation uses the LogLog-Beta approach to estimate the cardinality from the HyperLogLog registers. Unfortunately, the method relies on magic constants that have been empirically determined. The formula presented in "New cardinality estimation algorithms for HyperLogLog sketches", has a better theoretical foundation and has already been independently verified in article. The new implementation finds the histogram of registers first, which is finally fed into the new cardinality estimation formula.
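For readers who have not looked inside a HyperLogLog, the sketch below shows the "histogram of registers first, then a formula" shape described above. Note the swap: the formula here is the classic HyperLogLog estimator with a linear-counting correction for small counts, not LogLog-Beta and not the new formula Redis adopted. The register count, hash choice, and function names are assumptions, and this is not Redis code.

```python
import hashlib
import math
from collections import Counter

P = 10            # assumed precision: 2**10 registers
M = 1 << P
REST_BITS = 64 - P


def _hash64(item: str) -> int:
    """64-bit hash taken from the first 8 bytes of SHA-256."""
    return int.from_bytes(hashlib.sha256(item.encode("utf-8")).digest()[:8], "big")


def add(registers, item):
    """Update the register selected by the top P bits of the hash."""
    h = _hash64(item)
    index = h >> REST_BITS
    rest = h & ((1 << REST_BITS) - 1)
    # Rank = 1-based position of the leftmost 1-bit in the remaining bits.
    rank = REST_BITS - rest.bit_length() + 1
    registers[index] = max(registers[index], rank)


def register_histogram(registers):
    """Count how many registers hold each value."""
    return Counter(registers)


def estimate(registers):
    """Classic HyperLogLog estimate computed from the register histogram."""
    hist = register_histogram(registers)
    m = len(registers)
    alpha = 0.7213 / (1 + 1.079 / m)
    harmonic = sum(count * 2.0 ** -value for value, count in hist.items())
    raw = alpha * m * m / harmonic
    zeros = hist.get(0, 0)
    if raw <= 2.5 * m and zeros:
        return m * math.log(m / zeros)  # linear counting for small cardinalities
    return raw


if __name__ == "__main__":
    regs = [0] * M
    for i in range(10000):
        add(regs, f"user-{i}")
    print(round(estimate(regs)))
```

Working from the histogram rather than the raw registers is what makes it easy to plug in a different estimation formula later.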

- Excellent discussion with Nordstrom on The challenges of developing event-driven serverless systems at massive scale.

- Updating a 50 terabyte PostgreSQL database:

- We built our database stack with PostgreSQL, as it provides consistency, isolation, and reliability we need. In addition, it is open source, and has an active ecosystem including multiple support and consultancy companies. Finally, as we work with sensitive financial data, having a transactional database was key as it ensures we don’t lose track of records.

- Our PostgreSQL database clusters consist of one master and at least three slave servers, spread over multiple data centers. The database servers are dual socket machines with 768 GB of RAM. Each server connects to its own shared storage device with fiber channel or ISCSI, and has a raw capacity of 150+ terabytes of SSDs and average compression ratio of 1:6.

- we built our software architecture in such a way that we can stop traffic to our PostgreSQL databases, queue the transactions, and run a PostgreSQL update without affecting payments acceptance.

- At 10 terabytes it took a few days before we got the new server up and running, and this process was becoming progressively more time-consuming.

- New process: Stop traffic to the database cluster...and 8 more steps.

- The new process takes around a day including preparation work and it can continue to scale indefinitely.
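The "stop traffic, queue the transactions, update, resume" idea can be illustrated with a toy gate in front of the database. This is a single-threaded sketch under stated assumptions: the class name `QueueingGate` and its methods are hypothetical, the `apply` callback stands in for a real database write, and a production system would need durable queueing and concurrency control that this omits.

```python
from collections import deque


class QueueingGate:
    """Buffers writes while the database is paused, then replays them in order."""

    def __init__(self, apply):
        self._apply = apply          # callable that performs the real write
        self._paused = False
        self._pending = deque()

    def pause(self):
        """Stop sending writes to the database (start of the maintenance window)."""
        self._paused = True

    def write(self, record):
        """Apply immediately when live, otherwise queue for later."""
        if self._paused:
            self._pending.append(record)
        else:
            self._apply(record)

    def resume(self):
        """Replay queued writes in arrival order, then go live again."""
        while self._pending:
            self._apply(self._pending.popleft())
        self._paused = False


if __name__ == "__main__":
    applied = []
    gate = QueueingGate(applied.append)
    gate.write("payment-1")
    gate.pause()                     # the database update would run here
    gate.write("payment-2")
    gate.write("payment-3")
    gate.resume()
    print(applied)
```

The point of the design is that callers never see the maintenance window; they only see slightly delayed persistence.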

- Is MEAN [MongoDB, Express.js, Angular, Node.js] stack still the best way to go in 2018? I'm running a MySQL MEAN (MyEAN?) with Angular 5. I find it really good to develop with, but I do think React would have been a good option too...I would say ReactJS is winning...As for the rest MongoDB has always been a NoSQL product express is just a web server and nodejs the backend can and should be anything... So MEAN is a special stack for extremely special conditions...Yes I would and do use React with a different backend... IMO backend does not matter a f*ck, if properly loosely coupled it can be .NET Java Python Ruby anything you want...MEAN was never the best way to go.

- Have to say, short selling is the first thing I thought about when I heard the AMD security alert came with a one day notice. Assassination Attempt on AMD by Viceroy Research & CTS Labs, AMD "Should Be $0". theoldboy: CTS Labs, a previously unknown "security research" company founded in 2017, release a paper exposing alleged flaws in AMD's Secure Processor (all of which require root access in the first place). Instead of the normal 90 days before public disclosure they give AMD 24 hours. Very shortly afterwards Viceroy Research publish a laughable 25-page PDF "analyzing" these disclosures. This had very obviously been prepared in advance and its sole aim seems to be to cause damage to AMD. It concludes that “We believe AMD is worth $0.00, and will have no choice but to file for Chapter 11 Bankruptcy in order to effectively deal with the repercussions of recent discoveries.” It is speculated that the motive behind CTS Labs and Viceroy Research proceeding like this was to drive down AMD's share price so that they and their associates could make money from short-selling those shares. Also, “AMD Flaws” Technical Summary.

- 802.11ac is not the last word in WiFi. IEEE 802.11ax High-Efficiency Wireless (HEW) standard promises to deliver four times greater data throughput per user. Why You Should Care About IEEE 802.11ax: maximum theoretical bandwidth of 14 Gbps; operates in the 5-GHz band; architected to provide steady, resilient performance even in Wi-Fi dense areas; expect the spec to be released in 2019.

- Scaling Infrastructure Management with Grail. Uber built a graph database on top of all their disparate, distributed resources to provide a unified view. They call it Grail: "Grail does not collect information for any specific domain, instead functioning as a platform where infrastructure concepts (hosts, databases, deployments, ownership, etc.) from different data sources can be aggregated, interlinked, and queried in a highly-available and responsive fashion. Grail effectively hides all the implementation details of our infrastructure." Yes, of course they wrote their own query language, YQL. As an example, the following query lists all hosts with more than 40GB of free memory and more than 100GB of free disk space that use an SSD disk: TRAVERSE host:* ( FIELD HostInfo WHERE HostInfo.disk.media = "SSD" WHERE HostInfo.disk.free > (100*1024^3) WHERE HostInfo.memory.free > (40*1024^3))
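To show what the example query's three WHERE clauses filter on, here is the same predicate written against plain Python dictionaries. The nested record layout mirrors the field paths in the query (`disk.media`, `disk.free`, `memory.free`), but the sample hosts and the function name are made up for illustration and say nothing about how Grail stores or traverses its graph.

```python
GIB = 1024 ** 3  # the query writes this as 1024^3


def matches_query(host_info: dict) -> bool:
    """True when a host has an SSD, >100 GiB free disk, and >40 GiB free memory."""
    return (
        host_info["disk"]["media"] == "SSD"
        and host_info["disk"]["free"] > 100 * GIB
        and host_info["memory"]["free"] > 40 * GIB
    )


# Hypothetical host records keyed by host name.
HOSTS = {
    "host-a": {"disk": {"media": "SSD", "free": 500 * GIB}, "memory": {"free": 64 * GIB}},
    "host-b": {"disk": {"media": "HDD", "free": 900 * GIB}, "memory": {"free": 128 * GIB}},
    "host-c": {"disk": {"media": "SSD", "free": 50 * GIB}, "memory": {"free": 96 * GIB}},
}

if __name__ == "__main__":
    print([name for name, info in HOSTS.items() if matches_query(info)])
```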

- Good idea. Canary Analysis Service: Automated canarying has repeatedly proven to improve development velocity and production safety. CAS helps prevent outages with major monetary impact caused by binary changes, configuration changes, and data pushes. It is unreasonable to expect engineers working on product development or reliability to have statistical knowledge; removing this hurdle—even at the expense of potentially lower analysis accuracy—led to widespread CAS adoption. CAS has proven useful even for basic cases that don't need configuration, and has significantly improved Google's rollout reliability. Impact analysis shows that CAS has likely prevented hundreds of postmortem-worthy outages, and the rate of postmortems among groups that do not use CAS is noticeably higher.

- Memory efficiency of parallel IO operations in Python: Parallelism is a very efficient way of speeding up an application that has a lot of IO operations. In my case, there was a ~40% speed increase compared to sequential processing. Once a code runs in parallel, the difference in speed performance between the parallel methods is very low. An IO operation heavily depends on the performance of the other systems (i.e. network latency, disk speed, etc). Therefore, the execution time difference between the parallel methods is negligible....At Kiwi.com we use asyncio in high performing APIs where we want to achieve speed with a low memory footprint on our infrastructure.
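A small, self-contained way to see the effect described above is to simulate IO with `asyncio.sleep` and compare awaiting calls one by one against running them together with `asyncio.gather`. The function names, the delay, and the task count are assumptions for the demo; real gains depend on the remote systems involved, as the quote notes.

```python
import asyncio
import time


async def fake_io(i: int, delay: float = 0.05) -> int:
    """Stand-in for a network or disk call: wait, then return a value."""
    await asyncio.sleep(delay)
    return i * 2


async def run_sequential(n: int) -> list:
    """Await each call before starting the next one."""
    return [await fake_io(i) for i in range(n)]


async def run_concurrent(n: int) -> list:
    """Start all calls at once on a single thread and wait for all of them."""
    return list(await asyncio.gather(*(fake_io(i) for i in range(n))))


def timed(coro_fn, n: int):
    """Run a coroutine function and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = asyncio.run(coro_fn(n))
    return result, time.perf_counter() - start


if __name__ == "__main__":
    for fn in (run_sequential, run_concurrent):
        result, elapsed = timed(fn, 5)
        print(fn.__name__, result, f"{elapsed:.2f}s")
```

Because all waits overlap on one event loop, the concurrent version needs no extra threads or processes, which is where the low memory footprint comes from.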

- MoviePass as a data play. MoviePass: The new face of unbridled data greed. A lot of people trying to own the home these days, makes sense to own the night out too. They really need to pair it with babysitting though.

- Fastly CTO on Edge Computing, Hiring Engineers, and Moonshots: On a daily basis, 10 percent of the requests on the internet go through Fastly...Its stack is separated into the data plane, through which their customer’s data flows, and the control plane, which runs the data plane. The key, he said, is the use of Varnish, a high-speed reverse-proxy server, and the Varnish Control Language (VCL) that allows the company’s engineers to write code at the edge...For the data plane, the company goes old school, using their own servers, spread out across the globe. This allows the company to control its own servers and switches...The control plane, on the other hand, is in the cloud, mostly on the Google Cloud Platform, but some is also run internally...Because of the breadth of its stack, the company codes in a wide variety of languages. Along with VCL, it also uses Ruby, C, Perl, Python, and Rust.

- Excellent description. Deploy the Voting App to AWS ECS with Fargate. But why not just use serverless?

- Cache amplification: How much of the database must be in cache so that a point-query does at most one read from storage? I call this cache-amplification or cache amplification. clustered b-tree - everything above the leaf level must be in cache; non-clustered b-tree - the entire index must be in cache; LSM - Block indexes for all levels should be in cache;
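A back-of-the-envelope calculation makes the clustered b-tree case tangible: if every page above the leaf level must be cached, how many pages is that? The sketch below counts non-leaf pages level by level. The leaf count and fanout are assumed numbers chosen for illustration, and the model ignores partially filled pages.

```python
def non_leaf_pages(leaf_pages: int, fanout: int) -> int:
    """Count the pages above the leaf level of a b-tree with the given fanout."""
    total = 0
    level = leaf_pages
    while level > 1:
        level = -(-level // fanout)  # ceiling division: pages in the parent level
        total += level
    return total


if __name__ == "__main__":
    leaves, fanout = 1_000_000, 100   # assumed sizes
    inner = non_leaf_pages(leaves, fanout)
    print(inner, f"{inner / (inner + leaves):.2%} of all pages")
```

With a fanout of 100 the levels above the leaves come to roughly 1% of the tree, which is why the clustered case is cheap to keep in cache compared with a non-clustered index that must be cached whole.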

- Here's a multi-part series comparing Azure vs GCP. Lots of detail on storage, VMs, scaling, and more.

- Looks good. Amazon ECS Workshop for AWS Fargate: In this workshop, we will configure GitHub, CodePipeline, CodeBuild, VPC, ALB, ECS, Autoscaling, and log aggregation using a framework called Mu to launch microservices on AWS Elastic Container Service.

- Data processed by applications is growing. You need to pick the right storage. Here's the info you need to pick the right tool for the right job. What Every Programmer has to know about Database Storage by Alex Petrov. Companion blog posts: On Disk IO, Part 1: Flavors of IO, On Disk IO, Part 2: More Flavours of IO, LSM Trees, Access Patterns in LSM Trees, B-Trees and RUM Conjecture.

- Crash Course Computer Science is a 41 video series that takes you from the basics of computing all the way up to the singularity. Each video is in the 10 minute range. Looks good.

- Cliff Click with a short explanation of JVM JIT’ting Basics.

- binhnguyennus/awesome-scalability: An updated and curated list of selected readings to illustrate High Scalability, High Availability, and High Stability Back-end Design Patterns. Concepts are explained in the articles of notable engineers (Werner Vogels, James Hamilton, Jeff Atwood, Martin Fowler, Robert C. Martin, Tom White, Martin Kleppmann) and high quality reference sources (highscalability.com, infoq.com, official engineering blogs, etc). Case studies are taken from battle-tested systems that are serving millions to billions of users (Netflix, Alibaba, Flipkart, LINE, Spotify, etc).

- tensorflow/models: DeepLab is a state-of-art deep learning model for semantic image segmentation, where the goal is to assign semantic labels (e.g., person, dog, cat and so on) to every pixel in the input image.

- ajor/bpftrace: BPFtrace is a DTrace-style dynamic tracing tool for Linux, based on the extended BPF capabilities available in recent Linux kernels. BPFtrace uses LLVM as a backend to compile scripts to BPF-bytecode and makes use of BCC for interacting with the Linux BPF system.

- Microsoft/checkedc: Checked C is an extension to C that adds static and dynamic checking to detect or prevent common programming errors such as buffer overruns, out-of-bounds memory accesses, and incorrect type casts. This repo contains the specification for the extension, test code, and samples. For the latest version of the specification and the draft of the next version

- Nordstrom/serverless-artillery: Combine serverless with artillery and you get serverless-artillery for instant, cheap, and easy performance testing at scale.

- facebookincubator/profilo (article): an Android library for collecting performance traces from production builds of an app.

- criteo/biggraphite (article): We decided that the best way to integrate with the Graphite ecosystem was to use the Graphite and Carbon plugin system.

- ashwin153/beaker: a distributed, transactional key-value store that is consistent and available. Beaker is N / 2 fault tolerant but assumes that failures are fail-stop, messages are received in order, and network partitions never occur. Beaker is strongly consistent; conflicting transactions are linearized but non-conflicting transactions are not. Beaker features monotonic reads and read-your-write consistency.

- Snarky: A high-level language for verifiable computation: A verifiable computation is a computation that produces an output along with a proof certifying something about that output. Until very recently, verifiable computation has been mostly theoretical, but recent developments in zk-SNARK constructions have helped make it practical. Verifiable computation makes it possible for you to be confident about exactly what other people are doing with your data.

- Paper review. Service-oriented sharding with Aspen: The proposed protocol, Aspen, securely shards the blockchain (say a popular blockchain like Bitcoin) to provide high scalability to the service users. Sharding is a well-established technique to improve scalability by distributing contents of a database across nodes in a network. But sharding blockchains is non-trivial. The main challenge is to preserve the trustless nature while hiding transactions in other services of a blockchain from users participating in one service.

- Canopy: An End-to-End Performance Tracing And Analysis System: This paper presents Canopy, Facebook’s end-to-end performance tracing infrastructure. Canopy records causally related performance data across the end-to-end execution path of requests, including from browsers, mobile applications, and backend services. Canopy processes traces in near real-time, derives user-specified features, and outputs to performance datasets that aggregate across billions of requests. Using Canopy, Facebook engineers can query and analyze performance data in real-time. Canopy addresses three challenges we have encountered in scaling performance analysis: supporting the range of execution and performance models used by different components of the Facebook stack; supporting interactive ad-hoc analysis of performance data; and enabling deep customization by users, from sampling traces to extracting and visualizing features. Canopy currently records and processes over 1 billion traces per day. We discuss how Canopy has evolved to apply to a wide range of scenarios, and present case studies of its use in solving various performance challenges.

- Implementing Goal-Directed Foraging Decisions of a Simpler Nervous System in Simulation: The virtual predator realistically reproduces the decisions of the real one in varying circumstances and satisfies optimal foraging criteria. The basic relations are open to experimental embellishment toward enhanced neural and behavioral complexity in simulation, as was the ancestral bilaterian nervous system in evolution.

- Encoding, Fast and Slow: Low-Latency Video Processing Using Thousands of Tiny Threads: we implemented a video encoder intended for fine-grained parallelism, using a functional-programming style that allows computation to be split into thousands of tiny tasks without harming compression efficiency. Our design reflects a key insight: the work of video encoding can be divided into fast and slow parts, with the “slow” work done in parallel, and only “fast” work done serially.

- Anna: A KVS For Any Scale (article): a partitioned, multi-mastered system that achieves high performance and elasticity via wait-free execution and coordination-free consistency. Our design rests on a simple architecture of coordination-free actors that perform state update via merge of lattice-based composite data structures. We demonstrate that a wide variety of consistency models can be elegantly implemented in this architecture with unprecedented consistency, smooth fine-grained elasticity, and performance that far exceeds the state of the art.
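
The "merge of lattice-based composite data structures" idea from the Anna blurb is easier to see in a toy. Here is a minimal Python sketch, not Anna's actual code: the `LWW` class and `merge_maps` function are hypothetical names, and the last-writer-wins register is just one example lattice chosen for illustration. The point is that when merge is commutative, associative, and idempotent, replicas can exchange state in any order, any number of times, and still converge without coordinating.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class LWW:
    """Hypothetical last-writer-wins register: the larger timestamp wins."""
    timestamp: int
    value: str

    def merge(self, other: "LWW") -> "LWW":
        # Compare (timestamp, value) so ties break the same way on every replica.
        return max(self, other, key=lambda r: (r.timestamp, r.value))


def merge_maps(a: Dict[str, LWW], b: Dict[str, LWW]) -> Dict[str, LWW]:
    """Composite lattice: a map whose merge is the per-key merge of its values."""
    merged = dict(a)
    for key, reg in b.items():
        merged[key] = merged[key].merge(reg) if key in merged else reg
    return merged


# Two replicas accept writes independently, with no coordination.
replica_a = {"x": LWW(1, "apple"), "y": LWW(5, "pear")}
replica_b = {"x": LWW(3, "plum"), "z": LWW(2, "fig")}

# Gossip in either direction yields the same state.
ab = merge_maps(replica_a, replica_b)
ba = merge_maps(replica_b, replica_a)
print(ab == ba)
print(ab["x"].value)
```

Swapping `LWW` for a different lattice (a grow-only set with union, a counter with per-replica max) changes the consistency behavior without touching `merge_maps`, which is roughly the composition trick the abstract is pointing at.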