Stuff The Internet Says On Scalability For March 30th, 2018

Hey, it's HighScalability time:

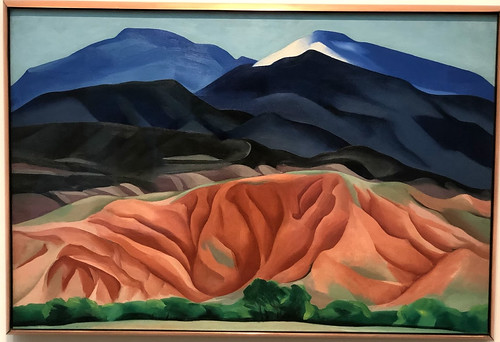

“Objective painting is not good painting unless it is good in the abstract sense. A hill or tree cannot make a good painting just because it is a hill or tree. It is lines and colors put together so that they may say something.” – Georgia O’Keeffe

If you like this sort of Stuff then please support me on Patreon. And I'd appreciate if you would recommend my new book—Explain the Cloud Like I'm 10—to anyone who needs to understand the cloud (who doesn't?). I think they'll learn a lot, even if they're already familiar with the basics.

- 6,000: new viruses spotted by AI; 300,000: Uber requests per second; 10TB & 600 years: new next-gen optical disk; 32,000: sites running Coinhive’s JavaScript miner code; $1 billion: Uber loss per quarter; 3.5%: global NAND flash output lost to power outage; 100TB: new SSD; 48TB: RAM on one server; 200 million: Telegram monthly active users; 2,000: days Curiosity Rover has spent on Mars; 225: emerging trends; 4,425: SpaceX satellites approved.

- Quotable Quotes:

- @msuriar: Uber's worst outage ever: - Master log replication to S3 failed. - Logs back up on primary. - Alerts fire but are ignored. - Disk full on primary. - Engineer deletes unarchived WAL files. - Config error prevents failover/promotion. #SREcon

- @thecomp1ler: Most powerful Xeon is the 28 core Platinum 2180 at $10k RSP and >200W TDP. Due to the nature of Intel turbo boost it almost always operates at TDP under load. Our ARM is the Centriq 2452 at less than $1400 RSP, 46 cores and 120W TDP, that it never hits. Beats Xeon 9/10 workloads.

- Arjun Narayan: This is also finally the year when people start to wake up and realize they care about serializability, because Jeff Dean said it's important. Michael Stonebraker, meanwhile, has been shouting for about 20 years and is very frustrated that people apparently only care when The Very Important Googlers say it's important.

- @xleem: Simple Recipe: 1) Identify system boundaries 2) Define capabilities exposed 3) Plain English definitions of availability 4) Define technical SLO 5) Measure baseline 6) Set targets 7) Iterate #SREcon
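Step 4 of that recipe, turning a plain-English availability definition into a technical SLO, usually comes paired with an error budget. A minimal sketch, assuming a simple time-based SLO over a 30-day window (the window length and the function name are illustrative assumptions, not from the talk):

```python
def error_budget_minutes(slo: float, window_days: int = 30) -> float:
    """Minutes of allowed unavailability for a time-based SLO.

    slo is a fraction, e.g. 0.999 for "three nines"; window_days is an
    assumed rolling window, not something the recipe prescribes.
    """
    total_minutes = window_days * 24 * 60
    return total_minutes * (1.0 - slo)


for target in (0.99, 0.999, 0.9999):
    print(f"{target:.2%} -> {error_budget_minutes(target):.1f} min per 30 days")
```

Measuring the baseline (step 5) tells you how much of that budget you already burn, which is what makes the targets in step 6 realistic.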

- Jordan Ellenberg: For seven years, a group of students from MIT exploited a loophole in the Massachusetts State Lottery’s Cash WinFall game to win drawing after drawing, eventually pocketing more than $3 million. How did they do it? How did they get away with it? And what does this all have to do with mathematical entities like finite geometries, variance of probability distributions, and error-correcting codes?

- Jeff Dean: ML hardware is at its infancy. Even faster systems and wider deployment will lead to many more breakthroughs across a wide range of domains. Learning in the core of all of our computer systems will make them better/more adaptive. There are many opportunities for this.

- Teller: Here's a compositional secret. It's so obvious and simple, you'll say to yourself, "This man is bullshitting me." I am not. This is one of the most fundamental things in all theatrical movie composition and yet magicians know nothing of it. Ready? Surprise me.

- @kcoleman: OH (from an awesome Lyft driver): “Today has been great. I’ve been blessed by the algorithm.” Immediately had an eerie feeling that this could become an increasingly common way to describe a day.

- Jim Whitehurst [Red Hat chief executive]: We added hundreds of customers in the last year, while Pivotal only added 44 new customers. Their average deal size is $1.5 million, quite large. So they are more the top-down, big company kind of focus. We have over 650 customers [for OpenShift], we added hundreds this past year, and we are growing faster than Pivotal. We thought we were performing favorably compared to them, but this is the first time we had the data to really compare.

- @danctheduck: My GDC takeaway: Everyone who is making games-as-a-service is getting most of their actual traction by building co-op MMOs. But very few of them realize this is what they are doing. So they keep sabotaging their communities with bizarro design philosophies. Short version (1/2) - People want to do fun activities with friends. The higher order bit. Super sticky. - Core gameplay collapses to this. Ex: Team vs Team plus matchmaking is just another way of making 'fair' Team vs Environment (aka coop) - We focus a lot on PvP, competition or esports. But many of those are *aspirational* for players. Not actually desirable. - Or we fixate on single player genre tropes. Which may be a familiar reason to *start* playing, but aren't always key to why people *continue* playing.

- @iamtrask: Lots of folks are optimistic about #blockchain. I recently came across a difficult question...If a zero-knowledge proof can prove to users that a centralized service performed honest computation, why decentralize it? We live in free markets... I see a correction coming...

- Katherine Bourzac: Shanbhag thinks it’s time to switch to a design that’s better suited for today’s data-intensive tasks. In February, at the International Solid-State Circuits Conference (ISSCC), in San Francisco, he and others made their case for a new architecture that brings computing and memory closer together. The idea is not to replace the processor altogether but to add new functions to the memory that will make devices smarter without requiring more power. Industry must adopt such designs, these engineers believe, in order to bring artificial intelligence out of the cloud and into consumer electronics.

- @dylanbeattie: When npm was first released in 2010, the release cycle for a typical Node.js package was 4 months, and npm restore took 15-30 seconds on an average project. By early 2018, the average release cycle for a JS package was 11 days, and the average npm restore step took 3-4 minutes. 1/11

- @davemark: THREAD: J.C.R. Licklider was one of the true pioneers of computer science. Back in about 1953, Licklider built something called a Watermelon Box. If it heard the word watermelon, it would light up an LED. That’s all it did. But it was the start of a huge wave. //@reneritchie

- smudgymcscmudge: I have to admit that the switch from “free software” to “open source” worked on me. Early in my career I was intrigued by the idea, but couldn’t get past how “free” software was a sustainable model. I started to get it at around the same time the terminology changed.

- @msuriar: CPU attack: spin up something that burns 100% CPU. (openssl or something). What do you expect to happen? What actually happens? #SREcon

- Forrest Brazeal: The way I describe it is: functions as a service are cloud glue. So if I’m building a model airplane, well, the glue is a necessary part of that process, but it’s not the important part. Nobody looks at your model airplane and says: “Wow, that’s amazing glue you have there.” It’s all about how you craft something that works with all these parts together, and FaaS enables that.

- Forrest Brazeal: We don’t yet have the Rails of serverless — something that doesn’t necessarily expose that it’s actually a Lambda function under the hood. Maybe it allows you to write a bunch of functions in one file that all talk to each other, and then use something like webpack that splits those functions and deploys them in a way that makes sense for your application.

- @eastdakota [Cloudflare CEO]: Why we’re switching to ARM-based servers in one image. Both servers running the same workload at the same performance [lower power].

- @southpolesteve: By building Lambda as they have, AWS effectively stamped a big "DEPRECATED" on containers. People are freaking out accordingly. They should be.

- James Faucette: 5G challenges include the backhaul, siting, and spectrum. With 5G, you need hundreds of times more base stations. 5G operates at a much higher frequency [than previous wireless standards], and when you get to millimeter waves they will barely cover a room. Signal unpredictability and how far they can go are big problems.

- François Chollet: I’d like to raise awareness about what really worries me when it comes to AI: the highly effective, highly scalable manipulation of human behavior that AI enables, and its malicious use by corporations and governments.

- Werner Vogels: We maximize this benefit when we deploy new versions of our software, or operational changes such as a configuration edit, as we often do so zone-by-zone, one zone in a Region at a time. Although we automate, and don't manage instances by hand, our developers and operators know not to build tools or procedures that could impact multiple Availability Zones. I'd wager that every new AWS engineer knows within their first week, if not their first day, that we never want to touch more than one zone at a time. Availability Zones run deep in our AWS development and operations culture, at every level. AWS customers can think of zones in terms of redundancy, "Use two or more Availability Zones for reliability." At AWS, we think of zones in terms of isolation, "Stay within the Availability Zone, as much as possible."
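The "one zone at a time" rule is easy to express as a deployment loop. This is a minimal sketch, not AWS's tooling: `deploy` and `healthy` are hypothetical hooks standing in for real deployment and health-check systems, and the zone names are illustrative.

```python
from typing import Callable, List


def rollout_zone_by_zone(
    zones: List[str],
    deploy: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> List[str]:
    """Deploy to one zone at a time; stop at the first unhealthy zone.

    Returns the zones that were touched. deploy and healthy are
    hypothetical hooks, not a real AWS API.
    """
    touched: List[str] = []
    for zone in zones:
        deploy(zone)            # change exactly one zone
        touched.append(zone)
        if not healthy(zone):   # failure stays contained to this zone
            break
    return touched


deployed = rollout_zone_by_zone(
    ["us-east-1a", "us-east-1b", "us-east-1c"],
    deploy=lambda z: None,
    healthy=lambda z: z != "us-east-1b",
)
print(deployed)  # ['us-east-1a', 'us-east-1b']
```

A real pipeline would also roll the failed zone back and wait for a bake period between zones; the point here is only that no single step ever touches more than one zone.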

- @davideagleman: Clip from The Brain: The brain is a vast interconnected network of areas that communicate with each other. #BrainPBS

- joseph-hurtado: The most interesting result for me was to see the increasing influence and growth of the ClojureScript ecosystem, and how it has attracted many JavaScript developers. Few know that nowadays ClojureScript can work well with React, does not need Java, and has had solid support for source maps and live reload for a while.

- Murat: I think what attracts developers to fully-decentralized platforms currently is not that their minds and hearts are won, but it is the novelty, curiosity, and excitement factors. There is an opportunity to make a winning in this domain if you are one of the first movers. But due to the volatility of the domain, lack of a killer application as of yet, and uncertainty about what could happen when regulations hit, or the bubble burst, it is still a risky move.

- @danfenz: 2016: "Java is doomed! It evolves so slowly! Every good idea takes ages to be available!" 2018: "Java is doomed! It evolves too quickly! Nobody will be able to keep pace!"

- @jc_stubbs: SCOOP: Kaspersky is moving its servers which process data from U.S. and European users to Switzerland in response to spying allegations. Company docs say doing so will put data outside the jurisdiction of Russian security services

- @KentLangley: Never forget Gall's Law, "A complex system that works is invariably found to have evolved from a simple system that worked. A complex system designed from scratch never works and cannot be patched up to make it work. You have to start over with a working simple system."

- @RealSexyCyborg: You want to do Shenzhen right? Skip the gov funded Maker/Innovation dog & pony show- it's all fake. Bring an idea- even a napkin if you don't have a prototype. Spend a week going to meetings, see what it would take to have made. Even if you don't go to production, you will get SZ

- ninkendo: I don't think cross-platform UI toolkits should exist, period. Your cross platform code should live underneath the UI layer, and you should write a custom UI for each platform you're bringing your logic to. Mac apps should look and feel like mac apps, windows apps should look and feel like windows apps, etc. Trying to abstract away all these platform differences either nets you a lowest common denominator that doesn't feel at home on any platform, or is so complicated to develop for that you end up doing more work in the end

- Peter Bailis: It’s reasonably well known that these weak isolation models represent “ACID in practice,” but I don’t think we have any real understanding of how so many applications are seemingly (!?) okay running under them.

- Viren Jain: Two questions have guided my scientific research: 1. How does network structure influence computational function in neural systems? 2. How can complex systems use experience to improve performance?

- Silicosis: I mean, it was pre-Google. It was before people were going around and indexing all of the news sites in real time. Space Rogue would get up at, what, three in the morning? He'd hit the news site for every major media organization and look for security related stuff.

- Jason Brush: Indeed, Amazon could almost be described as a sort of digital Brutalism: it is straightforward and efficient, with a near-utopian aspiration to meet people’s needs in the least fussy way possible. Amazon’s success brings into relief a principle that is sometimes hard to swallow in the design community–successful design is not necessarily beautiful.

- Sumit Maingi: The more traffic your application receives, the less sense Serverless makes. While all of us want to prepare for a billion-requests-a-month level of traffic, we need to make sure we estimate sensibly. Decided that you want to go for Serverless? Not all applications are suitable for it, and cost is not the only reason to choose it; make sure you consider all the points.
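To "estimate sensibly", it helps to write the bill down. A rough sketch of a FaaS monthly cost: a per-request charge plus a compute charge in GB-seconds. The default prices are assumptions in the neighborhood of AWS Lambda's published 2018 list prices; check current pricing, and note the sketch ignores free tiers, billing-duration rounding, and data transfer.

```python
def lambda_monthly_cost(
    requests: int,
    avg_duration_s: float,
    memory_gb: float,
    price_per_million_requests: float = 0.20,   # assumed list price
    price_per_gb_second: float = 0.0000166667,  # assumed list price
) -> float:
    """Rough monthly FaaS bill: request charge plus compute (GB-seconds).

    Ignores free tiers, billing-granularity rounding, and data transfer.
    """
    request_cost = requests / 1_000_000 * price_per_million_requests
    gb_seconds = requests * avg_duration_s * memory_gb
    return request_cost + gb_seconds * price_per_gb_second


# A billion requests a month, 100 ms each, 128 MB of memory.
cost = lambda_monthly_cost(1_000_000_000, avg_duration_s=0.1, memory_gb=0.128)
print(f"${cost:,.2f} per month")
```

Cost grows linearly with traffic, while a provisioned server's cost is roughly flat until it saturates, which is why the crossover point Maingi warns about exists. Where it lands depends heavily on duration and memory size.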

- Neotelos: I switched away from VMware ESXi to Docker, I loved the results. Reduced disk consumption by gigs, RAM consumption by Xgb per VM to less than 100mb per container, etc. And from a dev perspective there were no library or dependency surprises at deploy time - even with massive application and environment changes.

- Amy Nordrum: As [PLATO] began to catch on, students quickly spun its best features—which included emoticons, chat rooms, and email—into an early social network of sorts.

- danbruc: Meh. They used 448 cores to count the frequency of bit patterns of some small length in a probably more or less continuous block of memory. They had 57,756,221,440 total rows, that are 128,920,138 rows per core. If the data set contained 256 or less different stock symbols, then the task boils down to finding the byte histogram of a 123 MiB block of memory. My several years old laptop does this with the most straightforward C# implementation in 170 ms. That is less than a factor of 4 away from their 45.1 ms and given that AVX-512 can probably process 64 bytes at a time, we should have quite a bit of room to spare for all the other steps involved in processing the query.
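The back-of-the-envelope numbers above, and the "byte histogram" the query reduces to, can be checked in a few lines. A sketch assuming, as the comment does, that each stock symbol has been dictionary-encoded into a single byte; a pure-Python loop is far slower than the C# or SIMD versions discussed, so this shows the shape of the computation, not its speed.

```python
from typing import List


def rows_per_core(total_rows: int, cores: int) -> int:
    # Ceiling division: every row must land on some core.
    return -(-total_rows // cores)


def byte_histogram(block: bytes) -> List[int]:
    # One counter per possible byte value; assumes each symbol is
    # encoded as one byte (so at most 256 distinct symbols).
    counts = [0] * 256
    for b in block:
        counts[b] += 1
    return counts


per_core = rows_per_core(57_756_221_440, 448)
print(per_core, round(per_core / 2**20))  # 128920138 123
```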

- pathslog: My team is now working on migrating to Kubernetes and, let me tell you, that's not a simple task when your company already has a classic, but well established build pipeline, development process, server provisioning, along with legacy storage solutions and monitoring tools like Zabbix. Things are not fully automated, but they are mostly in place. They work relatively well and we cannot just hand over a disruptive technology to all these teams just because "we think it's cool and new".

- joushou: I have actually not seen any positive information about Aurora. There's a bunch of questions related to poor performance posted where Amazon replies, mostly trying to downplay performance concerns and emphasize replication. Not that the fancy replication is not a great feature, but Amazon did make large performance claims that don't seem to hold.

- pmcgrathm: As someone who is a product manager in the commercialized AI SaaS space, the most important pieces of feedback I would give a new PM here: 1) Don't let your -brilliant- colleagues try to force their -brilliantly complex- solution of a problem - clearly define market problems, and don't let the team try to go the route of trying to force fit a solution to a market problem. Market problems come first. 2) Frame the market problems appropriately for your ML/AI teams, and practice trying to frame the problem from a variety of angles. Framing from different angles promotes the 'Ah-ha' moment in terms of the right way to solve the problem from the ML side. 3) Don't commit serious time to a model before having a naive solution to benchmark against. Always have a naive solution to compare against the AI solution. 'Naive' here may be a simple linear regression, RMSE, or multi-armed bandit/Thompson sampling.
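Point 3 in practice: before trusting a model, score the dumbest possible predictor the same way. A minimal sketch using "always predict the training mean" as the naive solution and RMSE as the yardstick; the data and the "model" predictions are made-up placeholders.

```python
import math
from typing import List, Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    # Root-mean-square error between two equal-length, non-empty sequences.
    assert len(y_true) == len(y_pred) and len(y_true) > 0
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mean_baseline(train_y: Sequence[float], n: int) -> List[float]:
    # The most naive model: always predict the training mean.
    mean = sum(train_y) / len(train_y)
    return [mean] * n


train_y = [10.0, 12.0, 14.0]
test_y = [11.0, 13.0]
baseline_err = rmse(test_y, mean_baseline(train_y, len(test_y)))
model_err = rmse(test_y, [11.5, 12.5])  # predictions from some hypothetical model
print(baseline_err, model_err)  # 1.0 0.5
```

If the expensive model cannot clearly beat `baseline_err`, that is the signal to stop and reframe the problem before investing more time.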

- Neil Lawrence: In many respects I object to the use of the term Artificial Intelligence. It is poorly defined and means different things to different people. But there is one way in which the term is very accurate. The term artificial is appropriate in the same way we can describe a plastic plant as an artificial plant. It is often difficult to pick out from afar whether a plant is artificial or not. A plastic plant can fulfil many of the functions of a natural plant, and plastic plants are more convenient. But they can never replace natural plants. In the same way, our natural intelligence is an evolved thing of beauty, a consequence of our limitations. Limitations which don’t apply to artificial intelligences and can only be emulated through artificial means. Our natural intelligence, just like our natural landscapes, should be treasured and can never be fully replaced.

- After serverless and k8s/containers/microservices, we haven't had much new on the architecture front. AWS AppSync might be such a thing. How to design a serverless backend that scales with your app's success: The more intelligent apps are taking advantage of GraphQL to minimize data transfer between the client and server. For GraphQL, use a managed service like AWS AppSync to provide the GraphQL service and isolate the data. AWS AppSync also includes specific capabilities around collaborative real-time data synchronization across any data type — this provides a lot of flexibility within your app.
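Why does GraphQL cut data transfer? The client names exactly the fields it wants and the server returns only those. A toy sketch of that projection step (not AppSync and not a GraphQL library; the record and the `select` helper are hypothetical):

```python
from typing import Any, Dict

# Hypothetical record as stored on the server.
USER = {
    "id": "u1",
    "name": "Ada",
    "email": "ada@example.com",
    "avatar_url": "https://example.com/a.png",
    "friends": [{"id": "u2", "name": "Alan", "email": "alan@example.com"}],
}


def select(data: Any, selection: Dict[str, Any]) -> Any:
    """Return only the fields named in a nested selection.

    selection maps field name -> None (leaf) or a nested selection.
    Lists are projected element by element.
    """
    if isinstance(data, list):
        return [select(item, selection) for item in data]
    out = {}
    for field, sub in selection.items():
        value = data[field]
        out[field] = value if sub is None else select(value, sub)
    return out


# Roughly analogous to the query { name friends { name } }
print(select(USER, {"name": None, "friends": {"name": None}}))
# {'name': 'Ada', 'friends': [{'name': 'Alan'}]}
```

A REST endpoint returning the whole `USER` object would ship the email and avatar URLs too; a mobile client that only renders names never pays for them here.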

- What does it mean for Google to be an AI company? Jeff Dean explains in Systems and Machine Learning: Restore & improve urban infrastructure; Advance health informatics; Completely new, novel scientific discoveries; Neural Machine Translation; Engineer better medicines, and maybe: Make solar energy affordable; Develop carbon sequestration methods; Manage the nitrogen cycle; Predicting Properties of Molecules - State of the art results predicting output of expensive quantum chemistry calculations, but ~300,000 times faster; Reverse engineer the brain; Songbird Brain Wiring Diagram; Engineer the Tools of Scientific Discovery; Machine Learning for Finding Planets; Keeping Cows Happy; Fighting Illegal Deforestation; AutoML: Automated machine learning (“learning to learn”); Deep learning is transforming how we design computers; Machine Learning for Systems; Machine Learning for Higher Performance Machine Learning Models; Learned Index Structures not Conventional Index Structures.
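The last item, learned index structures, replaces a B-tree's "which page holds this key?" logic with a model that predicts a key's position in a sorted array. A toy sketch with a single least-squares line; the research version uses a hierarchy of models, and the class name here is hypothetical. The trick that keeps it correct is recording the model's worst-case error and searching only within that band.

```python
import bisect
from typing import List, Optional


class LinearLearnedIndex:
    """Toy learned index: a linear model predicts a key's position in a
    sorted array; the worst-case training error bounds a local search."""

    def __init__(self, keys: List[int]):
        self.keys = sorted(keys)
        n = len(self.keys)
        mean_k = sum(self.keys) / n
        mean_p = (n - 1) / 2
        var = sum((k - mean_k) ** 2 for k in self.keys)
        cov = sum((k - mean_k) * (p - mean_p) for p, k in enumerate(self.keys))
        self.slope = cov / var if var else 0.0
        self.intercept = mean_p - self.slope * mean_k
        # Largest distance between predicted and true position.
        self.max_err = max(
            (abs(self._predict(k) - p) for p, k in enumerate(self.keys)), default=0
        )

    def _predict(self, key: int) -> int:
        return round(self.slope * key + self.intercept)

    def lookup(self, key: int) -> Optional[int]:
        guess = self._predict(key)
        lo = max(0, guess - self.max_err)
        hi = min(len(self.keys), guess + self.max_err + 1)
        i = bisect.bisect_left(self.keys, key, lo, hi)
        if i < len(self.keys) and self.keys[i] == key:
            return i
        return None


keys = [i * i for i in range(1000)]  # deliberately non-linear key distribution
idx = LinearLearnedIndex(keys)
print(idx.max_err, idx.lookup(250_000), idx.lookup(250_001))
```

The better the model fits the key distribution, the smaller `max_err` and the shorter the final search; a single line fits squares poorly, which is exactly why the paper stacks models.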

- Life of a stack is 5 years? Medium is changing to React.js and GraphQL so services will be simpler, more modular, and more performant. They're also moving away from protobufs to gRPC and converting server-side code into services. Sangria is the framework for their GraphQL server. Apollo Client is the GraphQL client-side framework. 2 Fast 2 Furious: migrating Medium’s codebase without slowing down.

- Different approaches to AI. Google creates its TPU. Microsoft uses FPGAs. Microsoft’s Brainwave makes Bing’s AI over 10 times faster: Unlike FPGAs, Google’s TPUs can’t be reconfigured once they’re built. (Google deals with this by making its chips’ architecture as general as possible to deal with a wide variety of potential situations.) The FPGAs Microsoft has deployed have dedicated digital signal processors on board that are optimized for performing some of the particular types of math needed for AI. This way the company is able to get some of the same benefits that come from building an application-specific integrated circuit (ASIC) such as a TPU. Brainwave can distribute a model across several FPGAs while tasking a smaller number of CPUs to support them. If a machine learning model requires the use of multiple FPGAs, Microsoft’s system bundles them up into what the company calls a “hardware microservice,” which then gets handed off to the Brainwave compiler for distributing a workload across the available silicon. Also, Infinitely scalable machine learning with Amazon SageMaker.

- When OO started it was understandably like representational art, which consists of images that are clearly recognizable for what they purport to be. After a time one comes to realize OO is more a way to organize software so that it can match the ideas you have in your mind. That's programming as an abstract art.

- Understanding the SMACK stack for big data. tkannelid: tldr: for large distributed stuff, they're recommending: Spark (newer and shinier Hadoop); Mesos (a system to schedule tasks across multiple machines); Akka (actor framework in Scala); Cassandra (a NoSQL database with the dubious distinction of looking very much like a SQL database until you want to do something that would be trivial in SQL); Kafka (a distributed stream processing system, something of a generalized map/reduce).

- Data/Serverless/FaaS Evaluation/. Here you'll find a comparison of AWS Lambda, Azure Functions, Google Cloud Functions, IBM Cloud Functions. The form is a little different in that features are listed in yaml files. Also, AZURE VS. AWS DATA SERVICES COMPARISON.

- Interesting implication of GDPR. You have to ask yourself a basic question: can your database and other products and services delete data? Towards 3B time-series data points per day: With upcoming GDPR regulations, it also seems TimescaleDB is better able to handle compliance as compared to InfluxDB. With InfluxDB, it is not possible to delete data, only to drop it based on a retention policy. TimescaleDB in comparison, offers a more efficient drop_chunks() function, but also allows for the deletion of individual records. Also, EU GDPR - Another reason for SaaS to reconsider on-premise.
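The distinction in that quote is between dropping whole time partitions (cheap, but coarse) and deleting one person's records wherever they live (what an erasure request needs). A toy sketch of a time-chunked store showing both operations; it is loosely inspired by the chunk idea and is not TimescaleDB's API, and every name in it is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[int, str, float]  # (timestamp_s, user_id, value)


class ChunkedStore:
    """Toy time-partitioned store; names are hypothetical, not TimescaleDB's."""

    def __init__(self, chunk_seconds: int = 86_400):
        self.chunk_seconds = chunk_seconds
        self.chunks: Dict[int, List[Row]] = defaultdict(list)

    def insert(self, row: Row) -> None:
        self.chunks[row[0] // self.chunk_seconds].append(row)

    def drop_older_than(self, ts: int) -> int:
        # Retention-style deletion: discard chunks lying entirely before ts.
        cutoff = ts // self.chunk_seconds
        old = [c for c in self.chunks if c < cutoff]
        for c in old:
            del self.chunks[c]
        return len(old)

    def delete_user(self, user_id: str) -> int:
        # GDPR-style erasure: remove one user's rows from every chunk.
        removed = 0
        for c, rows in self.chunks.items():
            kept = [r for r in rows if r[1] != user_id]
            removed += len(rows) - len(kept)
            self.chunks[c] = kept
        return removed


store = ChunkedStore()
for row in [(0, "alice", 1.0), (10, "bob", 2.0), (90_000, "alice", 3.0)]:
    store.insert(row)
erased = store.delete_user("alice")
dropped = store.drop_older_than(86_400)
print(erased, dropped)  # 2 1
```

A store that only supports the first operation can satisfy an erasure request only by waiting for retention to age the data out, which is the compliance gap the quote points at.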

- Nice summary of Highlights from Lonestar ElixirConf 2018.

- 10x better resource utilization, even with 30% more requests. Towards 3B time-series data points per day: Why DNSFilter replaced InfluxDB with TimescaleDB.

- Switching was not easy. Not because of TimescaleDB — but because we’d chosen to integrate so tightly with InfluxDB. Our query-processor code was directly sending data to our InfluxDB instance. We explored the option of sending data to both InfluxDB and TimescaleDB directly, but in the end realized an architecture utilizing Kafka would be far superior.

- From the get-go, we noticed marked improvements: Ease of change. TimescaleDB supports all standard PostgreSQL ALTER TABLE and CREATE INDEX commands. Performance. We saw more efficient analytics querying and very low CPU on incoming data. No missing data.

- After setting filesystem-level compression up, we get about a 4x compression ratio on our production server, running a 2TB NVMe drive.
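The Kafka point above is about decoupling: the query processor writes each event once to a log, and each database gets its own consumer reading at its own pace, which also makes running InfluxDB and TimescaleDB side by side during a migration trivial. An in-memory sketch of that shape; it is not the Kafka client API, the group names are hypothetical, and the post does not describe DNSFilter's actual consumers.

```python
from typing import Dict, List


class Topic:
    """In-memory stand-in for a Kafka topic: an append-only log that each
    consumer group reads at its own offset. Not the Kafka client API."""

    def __init__(self) -> None:
        self.log: List[dict] = []
        self.offsets: Dict[str, int] = {}

    def produce(self, record: dict) -> None:
        self.log.append(record)

    def poll(self, group: str, max_records: int = 100) -> List[dict]:
        start = self.offsets.get(group, 0)
        batch = self.log[start:start + max_records]
        # Commits immediately; a real sink would commit after its write succeeds.
        self.offsets[group] = start + len(batch)
        return batch


# The query processor writes once; each database sink consumes independently.
topic = Topic()
for q in ({"domain": "example.com", "blocked": False},
          {"domain": "bad.example", "blocked": True}):
    topic.produce(q)

influx_rows = topic.poll("influxdb-writer")
timescale_rows = topic.poll("timescaledb-writer")
print(len(influx_rows), len(timescale_rows))  # 2 2
```

Retiring the old database then means stopping one consumer group; the producer never changes.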

- Manifold is moving to Kubernetes. Migrating to Kubernetes with zero downtime: the why and how. They started with Terraform deploying containers on AWS EC2 and exposed these through ELBs. Deployment was slow and low container density was expensive. They ran into a lot of problems because us-west-1 didn't support all the functionality they needed. Also seems like running your own HA cluster is a PITA. They stuck with AWS even though it was more expensive then Azure or GCP. They used a staged approach to move from the old cluster to the new cluster without a service disruption. Result: decreased our deployment time from ~15min to ~1.5min and managed to cut operational costs by doing so.

- Shedding traffic by priority is a key scaling strategy. Uber found 28 percent of outages could have been mitigated or avoided through graceful degradation. Here's how Uber build a load balancer to do just that. Introducing QALM, Uber’s QoS Load Management Framework.

- We implemented an overload detector inspired by the CoDel algorithm. A lightweight request buffer (implemented with goroutines and channels) is added for each enabled endpoint to monitor the latency between when requests are received from the caller and when processing begins in the handler. Each queue monitors the minimum latency within a sliding time window, triggering an overload condition if latency goes over a configured threshold.

- Each queue has its own overload detector, so when degradation happens in one endpoint, QALM will only shed its load from that endpoint, without impacting others.

- QALM defines four levels of criticality based on Uber’s business requirements: Core infrastructure requests: never dropped; Core trip flow requests: never dropped; User facing feature requests: may be dropped when the system is overloaded; Internal traffic such as testing requests: higher possibility of being dropped when the system is overloaded.

- The new code was integrated into the stack at the RPC layer rather than having applications recode to include the QALM.

- Here's how Netflix does something similar: Performance Under Load.
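QALM's detector, as described, watches the minimum queueing latency over a sliding window per endpoint and flags overload when it crosses a threshold, then sheds by criticality. A Python sketch of that logic only (Uber's is Go, at the RPC layer). The window and threshold values, the numeric tiers, and the rule for choosing between the two droppable tiers are all assumptions for illustration.

```python
from collections import deque
from typing import Deque, Tuple

# Hypothetical numeric tiers mirroring QALM's four criticality levels
# (lower number = more critical).
CORE_INFRA, CORE_TRIP, USER_FACING, INTERNAL = 0, 1, 2, 3


class OverloadDetector:
    """CoDel-inspired check: minimum queueing latency over a sliding window.

    If even the fastest request in the window waited longer than the
    threshold, the queue is persistently backed up rather than briefly
    bursting, so the endpoint is treated as overloaded.
    """

    def __init__(self, window_s: float = 1.0, threshold_s: float = 0.005):
        self.window_s = window_s
        self.threshold_s = threshold_s
        self.samples: Deque[Tuple[float, float]] = deque()  # (arrival_time, queue_latency)

    def record(self, now: float, queue_latency_s: float) -> None:
        self.samples.append((now, queue_latency_s))
        # Evict samples that have fallen out of the sliding window.
        while self.samples and self.samples[0][0] < now - self.window_s:
            self.samples.popleft()

    def min_latency(self) -> float:
        return min(lat for _, lat in self.samples) if self.samples else 0.0

    def overloaded(self) -> bool:
        return self.min_latency() > self.threshold_s


def admit(detector: OverloadDetector, criticality: int) -> bool:
    if criticality <= CORE_TRIP:
        return True  # core infrastructure and core trip flow: never dropped
    if not detector.overloaded():
        return True
    if criticality == INTERNAL:
        return False  # assumed policy: shed internal/test traffic first
    # Assumed policy: shed user-facing traffic only when the standing delay
    # is well past the threshold.
    return detector.min_latency() <= 2 * detector.threshold_s


d = OverloadDetector(window_s=1.0, threshold_s=0.005)
for i in range(10):
    d.record(now=i * 0.1, queue_latency_s=0.008)
print([admit(d, c) for c in (CORE_INFRA, CORE_TRIP, USER_FACING, INTERNAL)])
```

Using the minimum rather than the average is the CoDel insight: a burst raises the average briefly, but only a standing queue raises the floor. One detector per endpoint gives the per-endpoint isolation described above.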

- Faster WiFi is not better WiFi. What does that mean? For a delicious deep dive you'll want to consume Show 381: Inside The Pros & Cons Of 802.11ax and Wi-Fi Throughput and 802.11ax’s Achilles Heel. WiFi is a half-duplex RF environment. The bulk of the data frames (70-80%) are small. The result is excessive overhead at the MAC signaling layer. At any one time only one device can transmit. WiFi has not been highly efficient. With WiFi we've built big giant highways and faster cars, but we have a traffic jam. The problem with MIMO is it doesn't work in high density situations, that is, when there are a lot of people using WiFi. What we need is orthogonal frequency-division multiple access (OFDMA), which is in 802.11ax. It uses smaller channels that can be transmitted on upstream and downstream with multiple clients at the same time. There's a lot more. Very interesting conversation.
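A back-of-the-envelope model of the "traffic jam": on a half-duplex channel every transmission pays a fixed overhead (contention, preamble, acknowledgement), so when most frames are small, overhead dominates airtime. OFDMA lets several clients' frames share one transmission and one overhead. An idealized sketch; the 20 µs payload and 100 µs overhead figures are made-up assumptions, and the model ignores padding and resource-unit granularity.

```python
def airtime_efficiency(frames: int, payload_us: float, overhead_us: float,
                       frames_per_txop: int = 1) -> float:
    """Fraction of airtime spent carrying payload on a half-duplex channel.

    Each transmission opportunity pays overhead_us once. frames_per_txop is
    how many clients' frames share one transmission (1 = single-user, >1 =
    idealized OFDMA). Total payload airtime is unchanged: narrower
    sub-channels take longer per frame but run in parallel.
    """
    txops = -(-frames // frames_per_txop)  # ceiling division
    payload_time = frames * payload_us
    return payload_time / (payload_time + txops * overhead_us)


legacy = airtime_efficiency(100, payload_us=20, overhead_us=100)
ofdma = airtime_efficiency(100, payload_us=20, overhead_us=100, frames_per_txop=8)
print(f"single-user: {legacy:.0%}, OFDMA x8: {ofdma:.0%}")  # single-user: 17%, OFDMA x8: 61%
```

Note that raising the PHY rate shrinks `payload_us` and makes efficiency *worse* in this model, which is the "faster cars, same traffic jam" point.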

- Into Chaos engineering? There's a Slack team for that.

- All the steps you need for 10x Performance Increases: Optimizing a Static Site. It is astonishing how slow the internet is when you step outside your high-speed bubble. Profiling and fixing problems makes a big difference. Result: This improved my page load times from more than 8 seconds to ~350ms on first page load, and an insane ~200ms on subsequent ones.

- 5G will change everything, eventually. The Bumpy Road To 5G.

- 5G comes in two flavors. One utilizes the sub-6 GHz band, which offers modest improvements over 4G LTE. The other utilizes spectrum above 24 GHz, ultimately heading to millimeter-wave technology. As a general rule, as the frequency goes up, so does the speed and the ability to carry more data more quickly. On the other hand, as the frequency increases, the distance that signals can travel goes down. The result is that many more repeaters and base stations will be required. That’s good news for the semiconductor industry, but it also means that rollouts are likely to take longer than previous wireless technologies because the amount of infrastructure required to make it all work will increase significantly.

- “With 5G, you have analog, digital and RF coming together”

- 5G handsets could run out of range quickly, leaving them searching for signals. That will deplete batteries faster than in strong-reception areas.

- “People are wondering whether they will need bigger control systems,” said Sundari Mitra, CEO of NetSpeed Systems. “This requires a fundamental change in the architecture. There is more dynamic compute required, and that means a new level of sophistication in these designs. You can’t take the traditional fabric and mold it into 5G because it will require heterogeneous computing. It’s not just a single processor that needs access to memory.”

- “If you look at all the 5G systems that are out there, they’re prototypes,” said Mike Fitton, senior director of strategic planning and business development at Achronix. “That’s why they’re all on programmable logic.”
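The "higher frequency, shorter reach" rule of thumb can be quantified with the Friis free-space path loss formula. A sketch comparing a sub-6 GHz example (3.5 GHz) with a millimeter-wave example (28 GHz) at the same distance; the two bands are chosen for illustration. Free space is optimistic: it ignores antenna gain and beamforming (which help mmWave) and walls, foliage, and rain (which hurt it far more).

```python
import math

C = 299_792_458.0  # speed of light, m/s


def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    # Friis free-space path loss between isotropic antennas:
    # FSPL = 20*log10(d) + 20*log10(f) + 20*log10(4*pi/c)
    return (20 * math.log10(distance_m)
            + 20 * math.log10(freq_hz)
            + 20 * math.log10(4 * math.pi / C))


for f_ghz in (3.5, 28.0):
    loss = free_space_path_loss_db(100, f_ghz * 1e9)
    print(f"{f_ghz:>5} GHz @ 100 m: {loss:.1f} dB")
```

An 8x jump in frequency costs 20·log10(8), about 18 dB, at any fixed distance. Since free-space loss grows 6 dB per doubling of distance, holding the link budget constant means roughly an 8x shorter range, which is where "hundreds of times more base stations" comes from once you count coverage area.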

- It's tough to look in the mirror and see you are no longer the new cool kid. Ruby is gazing deeply into that mirror now. So Ruby is going to the gym, losing a little weight, and getting a nip and tuck here and there. Ruby is alive and well and thinking about the next 25 years: Matz’s approach to addressing the key issues also has an eye to two key trends he sees for the future: scalability and what he called the smarter companion...To improve performance further Ruby is introducing JIT (Just-In-Time) technology...“I think it’s okay to include a new abstraction,” he ventured, “and discourage the use of threads” in the future. “Guild is Ruby’s experiment to provide a better approach. Guild is totally isolated,” Matz told the audience in Bath. “Basically we do not have a shared state between Guilds. That means that we don’t have to worry about state-sharing, so we don’t have to care about the locks or mutual exclusives. Between Guilds we communicate with a channel or queue.”
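The Guild model, isolated units that share no state and talk only through a channel or queue, can be imitated with a thread that owns its state and a pair of queues. This is a Python analogy, not Ruby's Guild API: Python threads still share memory, so here the isolation is by convention, whereas Guilds are meant to enforce it in the runtime.

```python
import queue
import threading


def counter_worker(inbox: "queue.Queue", outbox: "queue.Queue") -> None:
    # The count lives only inside this worker; nothing else touches it,
    # so no lock is needed. Messages are the only way in or out.
    count = 0
    while True:
        msg = inbox.get()
        if msg == "stop":
            outbox.put(count)
            return
        count += msg


inbox: "queue.Queue" = queue.Queue()
outbox: "queue.Queue" = queue.Queue()
worker = threading.Thread(target=counter_worker, args=(inbox, outbox))
worker.start()
for n in (1, 2, 3):
    inbox.put(n)
inbox.put("stop")
worker.join()
total = outbox.get()
print(total)  # 6
```

Because only one worker ever mutates `count`, the "locks or mutual exclusives" Matz mentions simply never come up; the queue is the only synchronization point.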

- Isaac Arthur's awesome speculative science YouTube channel. Be prepared to spend a lot of time there.

- Facebook Performance @Scale 2018 recap. Talk titles include: Execution Graphs: Distributed trace processing for performance regression detection; Understanding performance in the wild; The Magic Modem: A global network emulator; iOS VM and loader considerations.

- Yep. Why AWS Lambda and .zip is a recipe for serverless success. Good discussion on HN. The whole idea is for someone to manage infrastructure for you. How can you do that when a container can contain arbitrary software to upgrade?

- A History of Transaction Histories: In the beginning (1990?) database connections were nasty, brutish, and short. Under the law of the jungle, anarchy reigned and programmers had to deal with numerous anomalies. To deal with this, the ANSI SQL-92 standard was the first attempt to bring a uniform definition to isolation levels...Now the next question comes up: what do we do with all these databases that exist in the wild (namely Oracle and Postgres, MySQL is still a toy around now) that claim serializability but are actually just snapshot isolation...Alan Fekete et al have a great idea in 2005, which they call “Making Snapshot Isolation Serializable”. It essentially takes a vanilla snapshot isolation database, and layers on some checks in the SQL statements, to ensure that you have serializability... Does serializability even matter? What are we doing with our lives?...The retort is basically: you’re not always fine, and you’re going to find out that you’re not fine when it's too late, so don’t do that. This is not an easy argument to make. It finally starts to catch once people have been burned with NoSQL databases for about a decade.
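The gap between snapshot isolation and serializability is easiest to see with write skew, usually illustrated with doctors going off call. A simulation sketch with a dict standing in for the database; it models the anomaly, not any particular engine. Each transaction checks that someone else stays on call, then removes itself. Under snapshot isolation both read the same snapshot and write disjoint rows, so neither is aborted.

```python
from typing import Dict


def take_off_call(snapshot: Dict[str, bool], me: str) -> Dict[str, bool]:
    """Transaction body: go off call only if someone else stays on call.

    Reads from the snapshot it was given and returns the writes it wants.
    """
    others_on_call = sum(on for doc, on in snapshot.items() if doc != me)
    return {me: False} if others_on_call >= 1 else {}


db = {"alice": True, "bob": True}

# Snapshot isolation: both transactions read the same snapshot, and their
# write sets ({"alice"} vs {"bob"}) don't overlap, so neither aborts.
snap = dict(db)
writes_a = take_off_call(snap, "alice")
writes_b = take_off_call(snap, "bob")
si_db = {**db, **writes_a, **writes_b}

# Serial execution: bob's transaction sees alice's committed write.
serial_db = dict(db)
serial_db.update(take_off_call(serial_db, "alice"))
serial_db.update(take_off_call(serial_db, "bob"))

print(sum(si_db.values()), sum(serial_db.values()))  # 0 1
```

Every serial order leaves one doctor on call; the snapshot-isolated run leaves none. That is the "you're not fine, and you find out too late" case: no error is raised, the invariant just silently breaks.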

- Heptio has what looks like a good free book on Cloud Native Infrastructure. Manageable at 137 pages. I wish the Box case study had been more technical.

- How do you handle migration from a monolith? A good explanation at Using API Gateways to Facilitate Your Transition from Monolith to Microservices: Deploying an edge gateway within Kubernetes can provide more flexibility when implementing migration patterns like the “monolith-in-a-box”, and can make the transition towards a fully microservice-based application much more rapid.
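At the heart of a gateway-led migration is a per-request routing decision: paths already carved out of the monolith go to new services, and everything else still reaches the monolith. A sketch of just that decision; the service names, ports, and routing table are hypothetical, and real edge gateways express this declaratively rather than in application code.

```python
from typing import Dict

# Hypothetical routing table: path prefixes already carved out of the
# monolith go to new services; everything else still hits the monolith.
ROUTES: Dict[str, str] = {
    "/api/users": "http://users-svc:8080",
    "/api/payments": "http://payments-svc:8080",
}
MONOLITH = "http://monolith:8080"


def upstream_for(path: str) -> str:
    # Longest-prefix match on whole path segments, so "/api/users/42"
    # matches "/api/users" but "/api/usersX" does not.
    matches = [p for p in ROUTES if path == p or path.startswith(p + "/")]
    return ROUTES[max(matches, key=len)] if matches else MONOLITH


print(upstream_for("/api/users/42"))  # http://users-svc:8080
print(upstream_for("/api/orders/7"))  # http://monolith:8080
```

Migrating one more capability is then a one-line routing change, and rolling back is deleting that line, which is what makes the incremental approach fast.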

- An analysis of the strengths and weaknesses of Apache Arrow: As long as you are performing in-memory analytics where your workloads are typically scanning through a few attributes of many entities, I do not see any reason not to embrace the Arrow standard. The time is right for database systems architects to agree on and adhere to a main memory data representation standard. The proposed Arrow standard fits the bill, and I would encourage designers of main memory data analytics systems to adopt the standard by default unless they can find a compelling reason that representing their data in a different way will result in a significantly different performance profile (for example, Arrow’s attribute-contiguous memory layout is not ideal if your workloads are typically accessing multiple attributes from only a single entity, as is common in OLTP workloads).
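The trade-off in that last sentence is about layout: attribute-contiguous (columnar) buffers favor scans over one attribute, while row layout favors fetching a whole entity. A sketch of both layouts side by side; it does not use Arrow's format or the pyarrow API, and Python objects won't reproduce real cache behavior, so the point is only which buffers each access pattern touches.

```python
from array import array
from typing import List, NamedTuple


class Trade(NamedTuple):
    symbol: str
    price: float
    qty: int


# Row layout (array of structs): each record's attributes sit together.
rows: List[Trade] = [Trade("ABC", 10.0, 5), Trade("XYZ", 20.0, 1), Trade("ABC", 11.0, 2)]

# Columnar layout (struct of arrays): each attribute is one contiguous buffer,
# in the spirit of Arrow's attribute-contiguous layout.
columns = {
    "symbol": [t.symbol for t in rows],
    "price": array("d", (t.price for t in rows)),
    "qty": array("q", (t.qty for t in rows)),
}

# Analytic scan over one attribute: columnar touches only the 'qty' buffer.
total_qty = sum(columns["qty"])

# OLTP-style point access: row layout fetches one entity from one place,
# while columnar has to gather a value from every buffer.
second_row = rows[1]
second_from_columns = Trade(*(columns[name][1] for name in Trade._fields))

print(total_qty, second_row == second_from_columns)  # 8 True
```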

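The layout trade-off in that last sentence can be seen in miniature. This is a conceptual Python sketch, not the Arrow format: real Arrow arrays are typed, contiguous buffers with validity bitmaps, while here the standard `array` module stands in for a column buffer, and the order fields are made up for illustration.

```python
"""Row-oriented vs. attribute-contiguous (columnar) layout, in plain Python.

Conceptual sketch only, not Apache Arrow. array.array stands in for a
contiguous typed column buffer; the order data is hypothetical.
"""
from array import array

# Row layout: each entity's attributes are stored together (OLTP-friendly).
rows = [
    (1, 19.99, 3),   # (order_id, price, quantity)
    (2, 5.00, 10),
    (3, 42.50, 1),
]

# Columnar layout: one typed buffer per attribute (analytics-friendly).
columns = {
    "order_id": array("q", [r[0] for r in rows]),
    "price": array("d", [r[1] for r in rows]),
    "quantity": array("q", [r[2] for r in rows]),
}


def total_quantity_rows(rows):
    # Scanning one attribute still walks every full row.
    return sum(r[2] for r in rows)


def total_quantity_columns(columns):
    # Scanning one attribute touches only that attribute's buffer.
    return sum(columns["quantity"])


def fetch_order_columns(columns, i):
    # Reassembling one entity must gather from every buffer:
    # the single-entity OLTP access pattern the quote warns about.
    return tuple(col[i] for col in columns.values())
```

Summing `quantity` reads a single buffer in the columnar version, while rebuilding one order has to visit every column, which is the OLTP case the quote flags as a poor fit.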
- Netflix/SimianArmy: Tools for keeping your cloud operating in top form. Chaos Monkey is a resiliency tool that helps applications tolerate random instance failures.

- danluu/post-mortems: A collection of postmortems. Broken out by category: Config Errors, Hardware/Power Failures, Conflicts, Time, Uncategorized. No company goes unscathed.

- LevInteractive/imageup: A high-speed image manipulation and storage microservice for Google Cloud Platform, written in Go.

- eve-lang: a programming language and IDE based on years of research into building a human-first programming platform. From code embedded in documents to a language without order, it presents an alternative take on what programming could be - one that focuses on us instead of the machine. (note: this is a dead project, yet still interesting)

- vesper-framework/vesper: Vesper is a NodeJS framework that helps you to create scalable, maintainable, extensible, declarative and fast GraphQL-based server applications.

- redisgraph.io: a graph database developed from scratch on top of Redis, using the new Redis Modules API to extend Redis with new commands and capabilities. Its main features include simple, fast indexing and querying; data stored in RAM, using memory-efficient custom data structures; on-disk persistence; tabular result sets; a simple and popular graph query language (Cypher); and data filtering, aggregation and ordering.

- Netflix/concurrency-limits: Java library that implements and integrates concepts from TCP congestion control to auto-detect concurrency limits to achieve optimal throughput with optimal latency.

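The TCP idea being borrowed is that a service can discover its own safe concurrency by probing upward until it sees signs of congestion, then backing off. A minimal additive-increase/multiplicative-decrease (AIMD) sketch in Python follows. It is hypothetical and does not mirror the library's Java API; the timeout-as-congestion signal, the 0.9 backoff ratio, and the "only grow when at least half the limit is in use" rule are assumptions chosen for illustration.

```python
"""Simplified AIMD concurrency limiter inspired by TCP congestion control.

Hypothetical sketch, not the Netflix/concurrency-limits API. Assumptions:
a dropped request or one slower than timeout_s signals overload; otherwise
the limit grows by one, but only while at least half of it is in use.
"""


class AimdLimiter:
    def __init__(self, initial=10, min_limit=1, max_limit=200,
                 backoff_ratio=0.9, timeout_s=0.5):
        self.limit = initial
        self.min_limit = min_limit
        self.max_limit = max_limit
        self.backoff_ratio = backoff_ratio
        self.timeout_s = timeout_s
        self.inflight = 0

    def try_acquire(self):
        # Reject new work once in-flight requests reach the current limit.
        if self.inflight >= self.limit:
            return False
        self.inflight += 1
        return True

    def release(self, latency_s, dropped=False):
        inflight_before_release = self.inflight
        self.inflight -= 1
        if dropped or latency_s > self.timeout_s:
            # Multiplicative decrease on a congestion signal.
            self.limit = max(self.min_limit,
                             int(self.limit * self.backoff_ratio))
        elif inflight_before_release * 2 >= self.limit:
            # Additive increase, only when the limit was actually being used,
            # so an idle service does not inflate its limit without evidence.
            self.limit = min(self.max_limit, self.limit + 1)
```

Multiplicative cuts paired with one-step increases produce TCP's familiar sawtooth, so the limit tends to hover near the level where latency starts to rise instead of being hard-coded as a pool size.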
- Snap Machine Learning: We [IBM] describe an efficient, scalable machine learning library that enables very fast training of generalized linear models. We demonstrate that our library can remove the training time as a bottleneck for machine learning workloads, opening the door to a range of new applications. For instance, it allows more agile development, faster and more fine-grained exploration of the hyper-parameter space, enables scaling to massive datasets and makes frequent re-training of models possible in order to adapt to events as they occur. Our library, named Snap Machine Learning (Snap ML), combines recent advances in machine learning systems and algorithms in a nested manner to reflect the hierarchical architecture of modern distributed systems. This allows us to effectively leverage available network, memory and heterogeneous compute resources. On a terabyte-scale publicly available dataset for click-through-rate prediction in computational advertising, we demonstrate the training of a logistic regression classifier in 1.53 minutes, a 46x improvement over the fastest reported performance.

- Programmable full-adder computations in communicating three-dimensional cell cultures: Synthetic biologists have advanced the design of trigger-inducible gene switches and their assembly into input-programmable circuits that enable engineered human cells to perform arithmetic calculations reminiscent of electronic circuits. By designing a versatile plug-and-play molecular-computation platform, we have engineered nine different cell populations with genetic programs, each of which encodes a defined computational instruction. When assembled into 3D cultures, these engineered cell consortia execute programmable multicellular full-adder logics in response to three trigger compounds.

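The function the cell consortia compute is the ordinary one-bit full adder: three input bits produce a sum bit and a carry bit. Treating the presence or absence of each trigger compound as an input bit (an assumption about the encoding; the paper realizes the logic with gene circuits, not code), the truth table can be generated in Python:

```python
from itertools import product


def full_adder(a, b, carry_in):
    """Return (sum, carry_out) for three input bits."""
    s = a ^ b ^ carry_in                          # odd number of 1s -> sum bit set
    carry_out = (a & b) | (carry_in & (a ^ b))    # at least two 1s -> carry set
    return s, carry_out


# Print the full truth table: inputs A, B, Cin and outputs S, Cout.
for a, b, cin in product((0, 1), repeat=3):
    s, cout = full_adder(a, b, cin)
    print(f"A={a} B={b} Cin={cin} -> S={s} Cout={cout}")
```

Every row satisfies S + 2*Cout = A + B + Cin, which is what makes the circuit an adder rather than arbitrary logic.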
- Murat on Anatomical similarities and differences between Paxos and blockchain consensus protocols: 1. Well, this entire post started as a result of a MAD question: "What if I try to map Blockchain protocol components to that of Paxos protocol components?" So I chalk that up as my first MAD question :-) 2. Now that we have this mapping, is there a way to leverage on this to synthesize a new insight? What is the next step? Sometimes providing such a mapping can help give someone a novel insight, which can lead to a new protocol.

- A Quantitative Analysis of the Impact of Arbitrary Blockchain Content on Bitcoin: Our analysis shows that certain content, e.g., illegal pornography, can render the mere possession of a blockchain illegal. Based on these insights, we conduct a thorough quantitative and qualitative analysis of unintended content on Bitcoin’s blockchain. Although most data originates from benign extensions to Bitcoin’s protocol, our analysis reveals more than 1600 files on the blockchain, over 99% of which are texts or images. Among these files there is clearly objectionable content such as links to child pornography, which is distributed to all Bitcoin participants.

- A Bit-Encoding Based New Data Structure for Time and Memory Efficient Handling of Spike Times in an Electrophysiological Setup: For the first time in the present context, [we combined] bit-encoding of spike data with a specific communication format for real time transfer and storage of neuronal data, synchronized by a common time base across all unit sources. We demonstrate that our architecture can simultaneously handle data from more than one million neurons and provide, in real time (< 25 ms), feedback based on analysis of previously recorded data. In addition to managing recordings from very large numbers of neurons in real time, it also has the capacity to handle the extensive periods of recording time necessary in certain scientific and clinical applications. Furthermore, the bit-encoding proposed has the additional advantage of allowing an extremely fast analysis of spatiotemporal spike patterns in a large number of neurons.
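
To make "bit-encoding of spike data" concrete: if each neuron's activity is recorded as one bit per time bin on a shared clock, spike-pattern questions reduce to shifts, ANDs, and bit counts. The Python sketch below is hypothetical and is not the paper's data structure or communication format; the 1 ms bin width, the one-spike-per-bin cap, and the use of Python integers as bit vectors are assumptions.

```python
"""Bit-per-bin encoding of spike trains on a shared time base.

Hypothetical sketch, not the paper's format. Assumptions: a common clock
split into fixed 1 ms bins, at most one spike counted per neuron per bin,
and Python ints as arbitrary-width bit vectors (bit t set means the
neuron spiked in bin t).
"""

BIN_MS = 1.0


def encode(spike_times_ms, n_bins, bin_ms=BIN_MS):
    # Set one bit per occupied bin; spikes outside the window are ignored.
    bits = 0
    for t in spike_times_ms:
        b = int(t // bin_ms)
        if 0 <= b < n_bins:
            bits |= 1 << b
    return bits


def spike_count(bits):
    # Number of occupied bins (population count).
    return bin(bits).count("1")


def coincidences(bits_a, bits_b, lag_bins=0):
    # Bins where neuron B spikes exactly lag_bins after neuron A.
    return spike_count((bits_a << lag_bins) & bits_b)
```

Because the time base is shared by all units, comparing two neurons at a given lag is a single shift and AND, which hints at why a bit-level encoding lends itself to the fast spatiotemporal pattern analysis the authors describe.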