Stuff The Internet Says On Scalability For April 6th, 2018

Hey, it's HighScalability time:

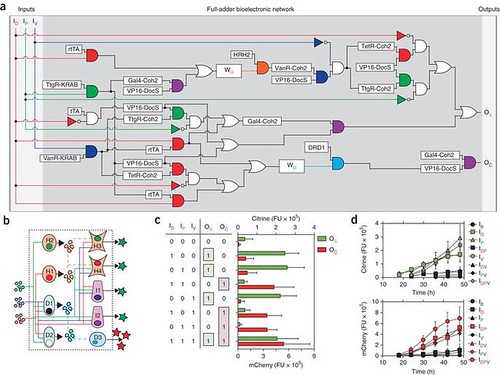

Programmable biology - engineered cells execute programmable multicellular full-adder logics. (Programmable full-adder computations)

If you like this sort of Stuff then please support me on Patreon. And I'd appreciate if you would recommend my new book—Explain the Cloud Like I'm 10—to anyone who needs to understand the cloud (who doesn't?). I think they'll learn a lot, even if they're already familiar with the basics.

- $1: AI turning MacBook into a touchscreen; $2000/month: BMW goes subscription; 20MPH: 15′ Tall, 8000 Pound Mech Suit; 1,511,484 terawatt hours: energy use if bitcoin becomes world currency; $1 billion: Fin7 hacking group; 1.5 million: ethereum TPS, sort of; 235x: AWK faster than Hadoop cluster; 37%: websites use a vulnerable Javascript library; $0.01: S3, 1 Gig, 1 AZ;

- Quotable Quotes:

- Huang’s Law~ GPU technology advances 5x per year because the whole stack can be optimized.

- caseysoftware: Metcalfe lives here in Austin and is involved in the local startup community in a variety of ways. One time I asked him how he came up with the law and he said something close to: "It's simple! I was selling network cards! If I could convince them it was more valuable to buy more, they'd buy more!" As an EE who studied networks, etc in college, it was jarring but audacious and impressive. He was either BSing all of us ~40 years ago or in that conversation a few years ago.. but either way, he helped make our industry happen.

- Adaptive nodes: the consensus that the learning process is attributed solely to the synapses is questioned. A new type of experiments strongly indicates that a faster and enhanced learning process occurs in the neuronal dendrites, similarly to what is currently attributed to the synapses

- @dwmal1: Spotted this paper via @fanf. The idea is amazing: "We offer a new metric for big data platforms, COST, or the Configuration that Outperforms a Single Thread", and find that several frameworks fail to beet a single core even when given 128 cores.

- David Rosenthal: But none of this addresses the main reason that flash will take a very long time to displace hard disk from the bulk storage layer. The huge investment in new fabs that would be needed to manufacture the exabytes currently shipped by hard disk factories, as shown in the graph from Aaron Rakers. This investment would be especially hard to justify because flash as a technology is close to the physical limits, so the time over which the investment would have to show a return is short.

- @asymco: There are 400 million registered bike-sharing users and 23 million shared bikes in China. There were approximately zero of either in 2016. Fastest adoption curve I’ve ever seen (and I’ve seen 140).

- @anildash: Google’s decision to kill Google Reader was a turning point in enabling media to be manipulated by misinformation campaigns. The difference between individuals choosing the feeds they read & companies doing it for you affects all other forms of media.

- The Memory Guy: has recently been told that memory makers’ research teams have found a way to simplify 3D NAND layer count increases.

- @JohnONolan: First: We seem to be approaching (some would argue, long surpassed) Slack-team-saturation. It’s just not new or shiny anymore, and where Slack was the “omg this is so much better than before” option just a few years ago — it has now become the “ugh… not another one” thing

- Memory Guy: The industry has moved a very long way over the last 40 years, but I need not mention this to anyone who’s involved in semiconductors. In 1978 a Silicon Valley home cost about $100,000, or about the cost of a gigabyte of DRAM. Today, 40 years later, the average Silicon Valley home costs about $1 million and a gigabyte of DRAM costs about $7.

- CockroachDB: A three-node, fully-replicated, and multi-active CockroachDB 2.0 cluster achieves a maximum throughput of 16,150 tpmC on a TPC-C dataset. This is a 62% improvement over our 1.1 release. Additionally, the latencies on 2.0 dropped by up to 81% compared to 1.1, using the same workload parameters. That means that our response time improved by 544%.

- @Carnage4Life: Interesting thread about moving an 11,000 user community from Slack to Discourse. It's now quite clear that Slack is slightly better than email for small groups but is actually worse than the alternatives for large groups

- Paul Barham: You can have a second computer once you’ve shown you know how to use the first one.

- Nate Kupp~ petabyte hadoop cluster Apple uses to understand battery life on iphone and ipad looking at logging data coming off those devices.

- Auto Trader: Another option would be to link CloudWatch up with Grafana, an open source tool for time series analytics, which is both easy to use and well known in the business. Grafana has built-in support for CloudWatch so the two can be hooked together – CloudWatch needs adding as a data source, enabling us to build dashboards using CloudWatch metrics. These dashboards would allow us to analyse and visualise the metrics. Grafana also includes an alerting engine, from which we would be able to send alerts for any data abnormalities.

- JrSchild: My strategy is to use Cloudflare workers to invoke a Lambda function. This way I have a cheap AWS API Gateway replacement. $3.70 per 1M requests becomes only $0.50. Costing my only $12.5 for 25M requests + the Lambda invocations which are hella cheap.

- Quirky: Challenging norms and paradigms. A sense of separateness helped the innovators to become original thinkers, freeing them from the constraints of accepted, or acceptable, solutions and theories. For example, Einstein was able to challenge well-accepted principles of Newtonian physics because he stood well outside academic circles and because it was his nature to resist authority. Musk pioneered reusable rockets—something the space industry said was impossible—in part because he was not in the space industry and in part because he wasn’t the kind of person who let other people define what was possible for him.

- @Noahpinion: 1/I have been coming to Japan since 2002. Since that time, the country has changed enormously. Year-to-year the changes are small, but looking back, they really add up. Here are some of the things that have changed. 2/One of the biggest changes is diversity. Especially in Tokyo. Thanks to the tourism boom, the place is jam-packed with non-Japanese people. But that's not nearly all of it.

- Tim Kadlec: The verdict on AMP’s effectiveness is a little mixed. On the one hand, on an even playing field, AMP documents don’t necessarily mean a page is performant. There’s no guarantee that an AMP document will not be slow and chew right through your data. On the other hand, it does appear that AMP documents tend to be faster than their counterparts. AMP’s biggest advantage is the restrictions it draws on how much stuff you can cram into a single page.

- sriku: Federated is a bit different from decentralised. For example, email is federated, but not decentralised. In a federated network, if "your service providing server" is down (like gmail.com), then you don't have service, though others on other parts of the network would continue to have service. With a decent sized network, there is no "your service providing server" and other machines can take over when one fails. Decentralisation always goes along with redundancy whereas federation doesn't require redundancy.

- Andrew Brookins: If you study these simulations, you should have a good sense of why timeouts and circuit breakers work – and why you might want to avoid retries. If nothing else, I hope you internalize that if your application uses network requests, you should probably use the shortest timeout possible and wrap the request in a circuit breaker!

- Kai-Fu Lee: I don’t have the solutions, but if we want to come back to the question of why we exist, we at this point can say we certainly don’t exist to do routine work. We perhaps exist to create. We perhaps exist to love. And if we want to create, let’s create new types of jobs that people can be employed in. Let’s create new ways in which countries can work together. If we think we exist to love, let’s first think how we can love the people who will be disadvantaged.

- etiam: "The whole point of a dimension reduction model is to mathematically represent the data in simpler form. It’s as if Cambridge Analytica took a very high-resolution photograph, resized it to be smaller, and then deleted the original. The photo still exists — and as long as Cambridge Analytica’s models exist, the data effectively does too." That's an eloquent piece of explanation of a very important point. And apropos the discussion about privacy legislation, it's also going to be a very interesting point. Will the Cambridge Analyticas of the world be able to claim they have held on to no personal data, when strictly speaking the raw data has indeed been deleted after being used to create a derivative work that can for all important purposes be used to recreate the original? Assuming I find out I'm being profiled and demand to have my data removed, will society grant me rights to have derivative forms removed or adjusted too? I'm somewhat pessimistic that legal hairsplitting about matters like these will make enforcement very difficult.

- Scott Mautz: a nearly two-year study showed an astounding productivity boost among the telecommuters equivalent to a full day's work. Turns out work-from-home employees work a true full-shift (or more) versus being late to the office or leaving early multiple times a week and found it less distracting and easier to concentrate at home. Additionally (and incredibly), employee attrition decreased by 50 percent among the telecommuters, they took shorter breaks, had fewer sick days, and took less time off. Not to mention the reduced carbon emissions from fewer autos clogging up the morning commute. Oh, and by the way, the company saved almost $2,000 per employee on rent by reducing the amount of HQ office space.

- mooreds: I was asked at an interview what developers need to know about AWS. I think there are three things to keep in mind: Consider higher level services for operational simplicity. Unless you are at a certain scale, you'd never run your own object store, you'd use s3. I always say if you are thinking about downloading and installing any software system on a server, see if AWS has a managed offering and at least evaluate it. Use the elasticity of the cloud. Shut things off. Build scaling systems but make sure they scale both ways. If you aren't automating you aren't doing it right. Tools like cloudformation and terraform let you really treat your infrastructure like software and force attitude changes. Bonus: keep an eye on bandwidth costs, especially between AZs as these can be shocking and can drive architecture.

- A web game developer asks: Why is AWS so outrageously expensive?: Even behind cloudflare, I could barely make a profit with the cost of AWS...I've since switched to a smaller, cheaper cloud provider I'm paying maybe 6X less on hosting, and I feel like I'm also getting more CPU/RAM bang for my buck. Anyway, my question is, why is AWS so damn expensive? It's a great service and I used to recommend it to everyone. But 5-6X market rate is just not worth it for the extra features (like elastic ip addresses and AMIs).

- Were you using On Demand or Reserved pricing? There is usually quite a difference between the two.

- WS pricing is usually competitive. I'd advise you go to that page on the dash where they detail everything that they're charging you to see if something is wrong.

- We use Digital Ocean at work. Take a look at it, it might fit your needs better.

- AWS is honestly meant for enterprises with enterprise pricing. You'd be better off with any of the big 4 - Vultr, DigitalOcean, Linode or OVH. I use OVH, I'm thinking of switching for DigitalOcean for the ease of scaling up and down, better UI, better support, etc.

- Data transfer per month can be much more expensive than the compute power for websites like this that use a lot of bandwidth.

- AWS is not a good choice when you don't have a lot of leeway with your operational expenses. Priorities at larger/funded companies are difference (trading $ for organizational speed/velocity).

- I think the opposite might true. AWS only seems great until you need to scale it and the premium you are actually paying is magnified. At least I thought everything was well-priced, building small business websites, on AWS. But now that I've seen what bills look like with a larger scale application, hell no.

- AWS will give you discounts if you're a startup or a small company. You just need to apply for them.

- It also sounds like you're using AWS wrong. Any sort of file hosting should be done through S3, not EC2. With AWS you're paying for a few premium features, if you don't need them, then you're better off with a cheaper VPS provider. One of the biggest features (in my opinion) of AWS is the integrated environment and specialised tools. At my old job, we had EC2, S3, Elasticache, Lambda, RDS, and DynamoDB all working together with each other. If you're only using EC2, you're not really fully utilising AWS.

- Did you ever explore going with an amazon RDS and a couple of lambda instances with gateways? I do this in AWS, and the equivalent in Azure, for a couple of services that have about the same load for much less than what you seem to be paying.

- Herb Sutter with a Trip report: Winter ISO C++ standards meeting (Jacksonville). 140 experts attended this meeting. Lots of changes and proposals, most of which I no longer understand.

- fovc asks: For people who run their apps like this, do you find the complexity is greater or lesser than running e.g. Express + nginx in richer containers?

- Fovc is referring to From Express.js to AWS Lambda: Migrating existing Node.js applications to serverless.

- After the initial “cost” of figuring out how to configure the services, I’m finding the complexity far less than containers. Many concerns are removed from my application code and build and deploy systems by Lambda and API gateway. You just throw some zip files in S3 and you have a web service. No more image registry, container orchestration, load balancers, AMIs, autoscaling, etc. You also get to remove things code around CORS, auth, polling etc. from your app.

- Like all things, it depends. Your application architecture complexity is going to go way up, but your devops / sysadmin complexity goes to zero. I run a web hosting service, and while my application code is a bit more complicated now, I don't have to worry about monitoring ec2 instances, process managers, and that's worth it. That particular trade of is worth it.

- Node apps are incredibly easy to deploy to Elastic Beanstalk. For most users, the pros of Lambda are probably outweighed by the cons (even though the concept is fantastic).

- Building Check-In Queuing & Appointment Scheduling for In-Person Support at Uber: To address WebSocket degradation, we configured our system so there is a ring in each data center; this way, if two requests with the same unique GLH ID hit two different data centers, it would only update the site queues in the data center where we host the site queues. We forward all our requests to a single data center, regardless of which data center the request came from. In the event of a data center failover, we forward the requests to another data center. We also kill all the WebSocket connections with the original data center and re-create connections with the new active data center.

- Which is the fastest version of Python? Python 3.7 is the fastest of the “official” Python’s and PyPy is the fastest implementation I tested.

- You know all those brainstorming meetings where everyone sits at a table and is supposed to be creative? Yah, those don't work. Quirky: brainstorming groups produced fewer ideas, and ideas of less novelty, than the sum of the ideas created by the same number of individuals working alone...Overall, these studies found that when groups interactively ranked their “best” ideas, they chose ideas that were less original than the average of the ideas produced and more feasible than the average of the ideas produced. In other words, people tended to value feasibility more than originality...Teamwork can be very valuable, but to really ensure that individuals bring as much to the team as possible—especially when the objective is a creative solution—individuals need time to work alone before the group effort begins...Studying these innovators also reveals that when managers want employees to come up with breakthroughs, they should give them some time alone to ponder their craziest of ideas and follow their paths of association into unknown terrain. This type of mental activity will be thwarted in a group brainstorming meeting. Individuals need to be encouraged to come up with ideas freely...When you require consensus, you force everyone to focus on the most obvious choices that they think others will agree to, and that is usually an incremental extension of what the organization already does. An organization that seeks more-original ideas should instead make it clear that the objective is breakthrough innovation, not consensus. It can also be very useful to let teams that disagree about a solution pursue different—even competing—paths. For example, at CERN (the European Organization for Nuclear Research, which operates the Large Hadron Collider), teams of physicists and engineers accelerate and collide particles to simulate the “big bang,” in hopes of advancing our understanding of the origins of the universe. CERN thus encourages multiple teams to work on their own solutions separately, and only after the teams have had significant time to develop their solution do the teams meet and compare alternatives.

- Wonder how the new SQS integration will perform? The right way to distribute messages effectively in serverless applications: Invoking synchronously is the fastest end-to-end; Invoking asynchronously seams to have an excellent overall performance, since we don’t need to wait for the response; Using SNS helps us to decouple our services in a better way, but results in longer end-to-end duration; The Kinesis Data Streams result undoubtedly surprises. It takes only 10ms to execute, but a very long total duration (>0.5 seconds) to get from Lambda A to Lambda B. The reason is obvious — Kinesis is designed to ingest mass amounts of data in real-time (under 10ms) rather than output the data quickly.

- As above, so below. Chips are basically clusters in miniature, which helps explains how Anna: A KVS for any scale can be faster than redis. XNormal: The key insight is that sharding the keyspace works not just for distributed nodes. By treating the each CPU as a node and applying the same sharding mechanism local performance is also optimized. Just because the local CPUs can access the same memory doesn’t mean they should. Inter-CPU synchronization has its costs, too. If the architecture supports sharding the keyspace into disjoint sub-spaces anyway, why not use it for everything?

- Shopify shares their Google Cloud love. Shopify's Infrastructure Collaboration with Google: After 12 years of building and running the foundation of our own commerce cloud with our own data centers, we are excited to build our Cloud with Google...Built our Shop Mover, a selective database data migration tool, that lets us rebalance shops between database shards with an average of 2.5s of downtime per shop...Grown to over 400 production services and built a platform as a service (PaaS) to consolidate all production services on Kubernetes.

- Sendgrid to AWS Simple Email Service: With SES we are paying about 1/4 of the amount we were paying Sendgrid and that difference should grow much bigger as we grow...The first step we did was to move out of using Sendgrid’s web API to the SMTP protocol...Because we didn’t want to rely on AWS right away (uncertain deliverability), we created a multiplexer that can switch from sending emails with Sendgrid or SES...We found almost no difference: we have almost 100% deliverability and 0.01% complaints in both providers.

- Are anonymous decentralized applications compliant with new laws like GDPR and FOSTA? Does it even matter? Some interesting discussions around OpenBazaar 2.0, powered by IPFS.

- Fascinating epic article on Data Laced with History: Causal Trees & Operational CRDTs: It’s true: an ORDT text editor will never be as fast, flexible, or bandwidth-efficient as Google Docs, for such is the power of centralization. But in exchange for a totally decentralized computing future? A world full of systems able to own their data and freely collaborate with one another? Data-centric code that’s entirely free from network concerns?

- So are containers great? Here’s the thing: other than the security model, everything that makes containers good is really a workaround for our other tools doing things badly. Partially this is a long way to say: yes, containers are great, that security model bit is nothing to scoff at. But consider a better world: There’s nothing about building native code (or any code) that prevents us from building artifacts. We’d need a build tool that’s designed to produce dpkg/rpm/other packages the way Maven is designed to produce jars, but that’s completely possible without containers. There’s nothing about our libraries or compilers that requires us to dump everything into a pile of global state in /usr/include and /usr/lib. We could absolutely have our build tools be packaging-aware. There’s nothing about our libraries that requires us to hard-code magic paths to /usr/share, there’re perfectly capable of figuring out where they were loaded from and finding their data files in the appropriate relative locations. There’s nothing about our distro’s package managers that requires there to be only the global system state and no other local installations whatsoever. There’s nothing about native packages that requires packages to be non-relocatable, and perhaps simply installed to /usr/pkg/arch/name/version/ by default rather than having a required installation location.

- Most people don't realize how much DNS impacts performance. Which DNS is fastest? Depends on where you are. DNS Resolvers Performance compared: CloudFlare x Google x Quad9 x OpenDNS: All providers (except Yandex) performed very well in North America and Europe. They all had under 15ms response time across the US, Canada and Europe, which is amazing...CloudFlare was the fastest DNS for 72% of all the locations. It had an amazing low average of 4.98 ms across the globe...Google and Quad9 were close for second and third respectively.

- How we used Cloud Spanner to build our email personalization system—from “Soup” to nuts: Soup now runs on GCP, although the recommendations themselves are still generated in an on-premise Hadoop cluster. The computed recommendations are stored in Cloud Spanner for the reasons mentioned above. After moving to GCP and architecting for the cloud, we see an error rate of .005% per second vs. a previous rate of 4% per second, an improvement of 1/800...Cloud Spanner also solved our scaling problem. In the future, Soup will have to support one million concurrent users in different geographical areas. Likewise, Soup has to perform 5,000 queries per second (QPS) at peak times on the read side, and will expand this requirement to 20,000 to 30,000 QPS in the near future...We found that a hybrid architecture that leverages the cloud for fast data access but still using our existing on-premises investments for batch processing to be effective...At this time, we can only move data in and out of Cloud Spanner in small volumes. This prevents us from making real-time changes to recommendations. Once Cloud Spanner supports batch and streaming connections, we'll be able to enable an implementation to provide more real-time recommendations to deliver even more relevant results and outcomes.

- Does using HyperLogLog work? You must be 100% sure that the small loss of accuracy is tolerable. Using HyperLogLog in production, a retrospective: We tried using HyperLogLog over fine grained time series data in production, but at the time it was not working as well as we’d hoped. I wanted to explore HyperLogLog further though as I thought it was cool, so spent some time building HyperLogLog Playground, a website to help visualise what this cool algorithm is doing under the hood.

- Here are some Highlights from the TensorFlow Developer Summit, 2018. TensorFlow is being used to discover new planets, assess a person’s cardiovascular risk of a heart attack and stroke, predict flight routes through crowded airspace for safe and efficient landings, detect logging trucks and other illegal activities, detect diseases in Cassava plants, the TensorFlow Lite core interpreter is now only 75KB, now provides state-of-the-art methods for Bayesian analysis.

- What's next? History of Google's Evolution of Massive Scale Data Processing: MapReduce: Scalability, Simplicity; Hadoop: Open Source Ecosystem; Flume: Pipelines, Optimization; Storm: Low-Latency; Spark: Strong Consistency; MillWheel: Out-of-order processing; Cloud Dataflow: Unified Batch + Streaming; Flink: Open-source out-of-order; Beam: portability.

- How do you implement Image Processing as a Microservice today? There are two solutions presented. Google Function/Serverless: deemed too complicated. Imageup Microservice: replicable http based microservice which can run privately within your network. It’s written in Go and makes use of the blazingly fast Imaging library. Kubernetes makes it easy to set this up, and you can deploy as many replicas as needed to handle concurrent processing.

- Overload and how Wallaroo mitigates overload: Solution 1: Add more queue space; Solution 2: Increase the Departure Rate by using faster machines, or horizontal scaling, or load shedding; Solution 3: Decrease the Arrival Rate by filtering out some requests, or applying back-pressure, doing nothing and letting the chips falls where they may. Wallaroo has settle on back-pressure as a solution. That's described in a separate article.

- Netflix/flamescope: a visualization tool for exploring different time ranges as Flame Graphs.

- facebook/rocksdb: We have developed a generic Merge operation as a new first-class operation in RocksDB to capture the read-modify-write semantics. This Merge operation: Encapsulates the semantics for read-modify-write into a simple abstract interface; Allows user to avoid incurring extra cost from repeated Get() calls; Performs back-end optimizations in deciding when/how to combine the operands without changing the underlying semantics; Can, in some cases, amortize the cost over all incremental updates to provide asymptotic increases in efficiency.

- improbable-eng/thanos: Thanos is a set of components that can be composed into a highly available Prometheus setup with long term storage capabilities. Its main goals are operation simplicity and retaining of Prometheus's reliability properties.

-

rShetty/awesome-distributed-systems: Awesome list of distributed system resources(blogs, videos), papers and open source projects.

-

encode/apistar: A smart Web API framework, designed for Python 3.

-

nzoschke/gofaas: A boilerplate Go and AWS Lambda app. Demonstrates an expert configuration of 10+ AWS services to support running Go functions-as-a-service (FaaS).

-

archagon/crdt-playground (article): A generic implementation of Victor Grishchenko's Causal Tree CRDT, written in Swift. State-based (CvRDT) implementation. Features many tweaks, including a site identifier map, atom references, and priority atoms. Uses Lamport timestamps instead of "awareness".

-

Verifying Interfaces Between Container-Based Components: In this work, we present a methodology for ensuring correctness of compositions for containers based on existing programming language research, namely research in the field of type systems. We motivate our solution examining two use cases, both leveraging existing public cloud and edge infrastructure solutions and industry use cases.

-

The Stellar Consensus Protocol: A Federated Model for Internet-level Consensus: SCP is a Federation-based Byzantine Agreement (FBA) protocol which allows open membership instead of requiring closed membership. In a federated open membership model, we don't know the number of nodes in the entire system or all of their identities. Nodes may join and leave.

-

Slim NoC: A Low-Diameter On-Chip Network Topology for High Energy Efficiency and Scalability: Emerging chips with hundreds and thousands of cores require networks with unprecedented energy/area efficiency and scalability. To address this, we propose Slim NoC (SN): a new on-chip network design that delivers significant improvements in efficiency and scalability compared to the state-of-the-art. The key idea is to use two concepts from graph and number theory, degree-diameter graphs combined with non-prime finite fields, to enable the smallest numberof ports for a given core count. SN is inspired by state-of-the-art off-chip topologies; it identifies and distills their advantages for NoC settings while solving several key issues that lead to significant overheads on-chip.

-

Scalability! But at what COST?: We offer a new metric for big data platforms, COST, or the Configuration that Outperforms a Single Thread. The COST of a given platform for a given problem is the hardware configuration required before the platform outperforms a competent single-threaded implementation. COST weighs a system’s scalability against the overheads introduced by the system, and indicates the actual performance gains of the system, without rewarding systems that bring substantial but parallelizable overheads

- Anna: A KVS For Any Scale: Modern cloud providers offer dense hardware with multiple cores and large memories, hosted in global platforms. This raises the challenge of implementing high-performance software systems that can effectively scale from a single core to multicore to the globe. Conventional wisdom says that software designed for one scale point needs to be rewritten when scaling up by 10−100× [1]. In contrast, we explore how a system can be architected to scale across many orders of magnitude by design. We explore this challenge in the context of a new keyvalue store system called Anna: a partitioned, multi-mastered system that achieves high performance and elasticity via waitfree execution and coordination-free consistency. Our design rests on a simple architecture of coordination-free actors that perform state update via merge of lattice-based composite data structures. We demonstrate that a wide variety of consistency models can be elegantly implemented in this architecture with unprecedented consistency, smooth fine-grained elasticity, and performance that far exceeds the state of the art.