In Defense of Humanity: How Complex Systems Failed in Westworld **spoilers**

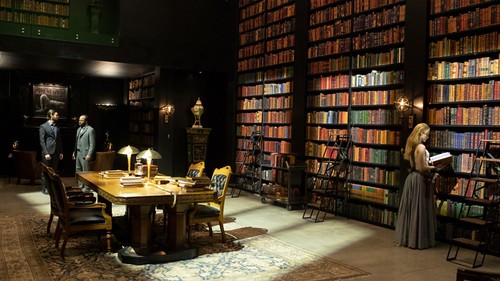

The Westworld season finale made an interesting claim: humans are so simple and predictable they can be encoded by a 10,247-line algorithm. Small enough to fit in the pages of a thin virtual book.

Perhaps my brain had already been driven into a meta-fugal state by a torturous, Escher-like, time-shifting plot line, but I did catch myself thinking: that could be true. Or is that a thought Ford programmed into my control unit?

To the armies of algorithms perpetually watching over us, the world is a Skinner box. Make the best box, make the most money. And Facebook, Netflix, Amazon, Google, and the rest make a lot of money precisely because we are predictable.

Even our offline behavior is predictable. Look at patterns of human mobility. We stay in a groove. We follow regular routines. Our actions are not as spontaneous and unpredictable as we'd surmise.

Predictive policing is a thing. Our self-control is limited. We aren't good with choice. We're predictably irrational. We seldom learn from mistakes. We seldom change.

Not looking good for team human.

It's not hard to see how those annoyingly smug androids, with their perfect bodies and lives lived in a terrarium, could come to take such a dim view of humanity. They see us at our worst. Who wants entry into heaven decided by how we behave playing Grand Theft Auto?

They think they understand us because they've observed us playing a game. They don't. The reason can be found in *How Complex Systems Fail*. The androids saw humans through a Russian-doll set of nested games. The most obvious game was Westworld. Ford had his game. Numerous corporate games played themselves out with immortality as the payoff.

Each game constrains and directs behavior. In real life we humans have more degrees of freedom. When Dolores wanted to solve a problem she, ironically, chose genocide as the solution. That's what she was used to. Even horribly flawed humans wouldn't see genocide as a valid move.

Human lives and human society are emergent. You can't attach a debugger to our DNA and find our repertoire of behaviors explicitly described and enumerated. What we are arises out of our interactions, in the same way that evolution emerges from biology, which emerges from chemistry, which emerges from physics, which emerges from quantum mechanics, which emerges from who knows what. Shape and control those interactions and you change the world.

Predicting what emerges at each layer is impossible, so it's futile to generate a 10,247-line algorithm by testing its fidelity to a remembered baseline. All you end up creating is a game character. That's what Westworld misses. And that's why humans can never be counted out: they can always surprise you.

- Complex systems are intrinsically hazardous systems. All of the interesting systems (e.g. transportation, healthcare, power generation) are inherently and unavoidably hazardous by their own nature.

- Complex systems are heavily and successfully defended against failure. The high consequences of failure lead over time to the construction of multiple layers of defense against failure.

- Catastrophe requires multiple failures – single point failures are not enough. The array of defenses works. System operations are generally successful. Overt catastrophic failure occurs when small, apparently innocuous failures join to create opportunity for a systemic accident.

- Complex systems contain changing mixtures of failures latent within them. The complexity of these systems makes it impossible for them to run without multiple flaws being present. Because these are individually insufficient to cause failure, they are regarded as minor factors during operations.

- Complex systems run in degraded mode. A corollary to the preceding point is that complex systems run as broken systems. The system continues to function because it contains so many redundancies and because people can make it function, despite the presence of many flaws.

- Catastrophe is always just around the corner. Complex systems possess the potential for catastrophic failure, and that potential cannot be eliminated; it is always present by the system's own nature.

- Post-accident attribution to a ‘root cause’ is fundamentally wrong. Because overt failure requires multiple faults, there is no isolated ‘cause’ of an accident. There are multiple contributors to accidents, each insufficient in itself; only jointly are they sufficient to create an accident.

- Hindsight biases post-accident assessments of human performance. Knowledge of the outcome makes it seem that events leading to the outcome should have appeared more salient to practitioners at the time than was actually the case.

- Human operators have dual roles: as producers & as defenders against failure. The system practitioners operate the system in order to produce its desired product and also work to forestall accidents.

- All practitioner actions are gambles. After accidents, the overt failure often appears to have been inevitable and the practitioner’s actions as blunders or deliberate willful disregard of certain impending failure. In reality, every practitioner action is a gamble: an act taken in the face of uncertain outcomes.
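Several of these points (layered defenses, catastrophe only when multiple failures combine, latent flaws, running in degraded mode, no single root cause) can be made concrete with a toy model. This is a minimal sketch under stated assumptions, not anything taken from *How Complex Systems Fail* itself: each defense layer is assumed to fail independently with a fixed, made-up probability, and a catastrophe is assumed to happen only when every layer fails at once. The names `LAYER_FAILURE_PROBS`, `is_catastrophe`, `analytic_catastrophe_prob`, `simulate` and `single_fixes_that_avert` are hypothetical.

```python
import random

# ASSUMPTION: hypothetical per-layer probabilities that a defense has a "hole"
# at any given moment. Illustrative numbers only, not measured data.
LAYER_FAILURE_PROBS = [0.1, 0.05, 0.2, 0.1]


def is_catastrophe(failed):
    """Toy rule: overt failure needs every defense layer to fail at once."""
    return all(failed)


def analytic_catastrophe_prob(probs):
    """P(all layers fail together), ASSUMING the layers fail independently."""
    p = 1.0
    for q in probs:
        p *= q
    return p


def simulate(probs, trials, seed=0):
    """Monte Carlo estimate of how often the system runs degraded (at least
    one latent failure present) versus how often it suffers a catastrophe."""
    rng = random.Random(seed)
    degraded = catastrophes = 0
    for _ in range(trials):
        # Each layer independently fails with its own probability.
        failed = [rng.random() < q for q in probs]
        degraded += any(failed)
        catastrophes += is_catastrophe(failed)
    return degraded / trials, catastrophes / trials


def single_fixes_that_avert(failed):
    """For an accident, return the layers whose repair alone would have
    prevented it. In this model that is every failed layer: each was
    necessary, none was sufficient, so no single one is 'the' root cause."""
    if not is_catastrophe(failed):
        return []
    return [
        i for i in range(len(failed))
        if not is_catastrophe(failed[:i] + [False] + failed[i + 1:])
    ]


if __name__ == "__main__":
    degraded, catastrophic = simulate(LAYER_FAILURE_PROBS, trials=100_000)
    print(f"running degraded: {degraded:.1%} of trials")
    print(f"catastrophe: {catastrophic:.4%} of trials "
          f"(analytic {analytic_catastrophe_prob(LAYER_FAILURE_PROBS):.4%})")
    print("single fixes that avert the accident:",
          single_fixes_that_avert([True, True, True, True]))
```

With these made-up numbers, at least one layer is failing about 38% of the time (1 - 0.9 * 0.95 * 0.8 * 0.9 ≈ 0.384), yet all four line up only about once in 10,000 trials (0.1 * 0.05 * 0.2 * 0.1 = 0.0001), and repairing any single layer would have averted the accident. The independence assumption is the weakest part of the sketch: real defenses often share causes, and correlated holes line up more often than the simple product suggests.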