Stuff The Internet Says On Scalability For August 2nd, 2019

Wake up! It's HighScalability time—once again:

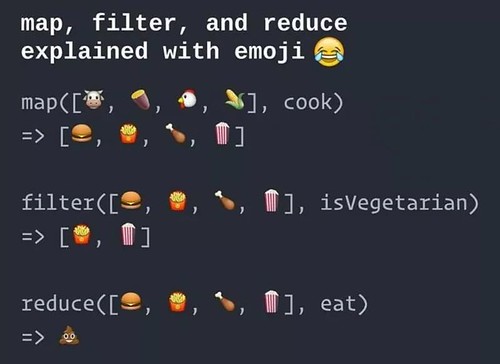

That's pretty good. (@shrutikapoor08)

Do you like this sort of Stuff? I'd greatly appreciate your support on Patreon. I wrote Explain the Cloud Like I'm 10 for people who need to understand the cloud. And who doesn't these days? On Amazon it has 52 mostly 5 star reviews (121 on Goodreads). They'll learn a lot and hold you in even greater awe.

Number Stuff:

- $9.6B: games investment in last 18 months, equal to the previous five years combined.

- $3 million: won by a teenager in the Fortnite World Cup.

- 100,000: issues in Facebook's codebase fixed from bugs found by static analysis.

- 106 million: Capital One IDs stolen by a former Amazon employee. (complaint)

- 2 billion: IoT devices at risk because of 11 VxWorks zero-day vulnerabilities.

- 2.1 billion: parking spots in the US, taking 30% of city real estate, totaling 34 billion square meters, the size of West Virginia, valued at 60 trillion dollars.

- 2.1 billion: people use Facebook, Instagram, WhatsApp, or Messenger every day on average.

- 100: words per minute from Facebook's machine-learning algorithms capable of turning brain activity into speech.

- 51%: Facebook and Google's ownership of the global digital ad market space on the internet.

- 56.9%: Raleigh, NC was the top U.S. city for tech job growth.

- 20-30: daily CPAN (Perl) uploads. 700-800 for Python.

- 476 miles: LoRaWAN (Low Power, Wide Area (LPWA)) distance world record broken using 25mW transmission power.

- 74%: Skyscanner savings using spot instances and containers on the Kubernetes cluster.

- 49%: say convenience is more important than price when selecting a provider.

- 30%: Airbnb app users prefer a non-default font size.

- 150,000: number of databases migrated to AWS using the AWS Database Migration Service.

- 1 billion: Google photos users, @MikeElgan: same size as Instagram but far larger than Twitter, Snapchat or Pinterest

- 300M: Pinterest monthly active users with revenue of $261 million, up 64% year-over-year, on losses of $26 million for the second quarter of 2019.

- 7%: of all dating app messages were rated as false.

- $100 million: Goldman Sachs spend to improve stock trades from hundreds of milliseconds down to 100 microseconds while handling more simultaneous trades. The article mentions using microservices and event sourcing, but it's not clear how that's related.

Quotable Stuff:

- Josh Frydenberg, Australian Treasurer: Make no mistake, these companies are among the most powerful and valuable in the world. They need to be held to account and their activities need to be more transparent.

- Neil Gershenfeld: Fabrication merges with communication and computation. Most fundamentally, it leads to things like morphogenesis and self-reproducing an assembler. Most practically, it leads to almost anybody can make almost anything, which is one of the most disruptive things I know happening right now. Think about this range I talked about as for computing the thousand, million, billion, trillion now happening for the physical world, it's all here today but coming out on many different length scales.

- Alan Kay: Marvin and Seymour could see that most interesting systems were crossconnected in ways that allowed parts to be interdependent on each other—not hierarchical—and that the parts of the systems needed to be processes rather than just “things”

- Lawrence Abrams: Now that ransomware developers know that they can earn monstrous payouts from local cities and insurance policies, we see a new government agency, school district, or large company getting hit with a ransomware attack every day.

- @tmclaughbos: A lot of serverless adoption will fail because organizations will push developers to assume more responsibility down the stack instead of forcing them to move up the stack closer to the business.

- Lightstep: Google Cloud Functions’ reusable connection insertion makes the requests more than 4 times faster [than S3] both in region and cross region.

- Henry A. Kissinger, Eric Schmidt, Daniel Huttenlocher: The evolution of the arms-control regime taught us that grand strategy requires an understanding of the capabilities and military deployments of potential adversaries. But if more and more intelligence becomes opaque, how will policy makers understand the views and abilities of their adversaries and perhaps even allies? Will many different internets emerge or, in the end, only one? What will be the implications for cooperation? For confrontation? As AI becomes ubiquitous, new concepts for its security need to emerge. The three of us differ in the extent to which we are optimists about AI. But we agree that it is changing human knowledge, perception, and reality, and, in so doing, changing the course of human history. We seek to understand it and its consequences, and encourage others across disciplines to do the same.

- minesafetydisclosures: Visa's business is all about scale. That's because the company's fixed costs are high, but the cost of processing a transaction is essentially zero. Said more simply, it takes a big upfront investment in computers, servers, personnel, marketing, and legal fees to run Visa. But those costs don't increase as volume increases; i.e., they're “fixed”. So as Visa processes more transactions through their network, profit swells. As a result, the company’s operating margin has increased from 40% to 65%. And the total expense per transaction has dropped from a dime to a nickel; of which only half of a penny goes to the processing cost. Both trends are likely to continue.

- noobiemcfoob: Summarizing my views: MQTT seems as opaque as WebSockets without the benefits of being built on a very common protocol (HTTP) and being used in industries beyond just IoT. The main benefits proponents of MQTT argue for (low bandwidth, small libraries) don't seem particularly true in comparison to HTTP and WebSockets.

- erincandescent: It is still my opinion that RISC-V could be much better designed; though I will also say that if I was building a 32 or 64-bit CPU today I'd likely implement the architecture to benefit from the existing tooling.

- Director Jon Favreau~ the plan was to create a virtual Serengeti in the Unity game engine, then apply live action filmmaking techniques to create the film — the “Lion King” team described this as a “virtual production process.”

- Alex Heath: In confidential research Mr. Cunningham prepared for Facebook CEO Mark Zuckerberg, parts of which were obtained by The Information, he warned that if enough users started posting on Instagram or WhatsApp instead of Facebook, the blue app could enter a self-sustaining decline in usage that would be difficult to undo. Although such “tipping points” are difficult to predict, he wrote, they should be Facebook’s biggest concern.

- jitbit: Well, to be embarrassingly honest... We suck at pricing. We were offering "unlimited" plans to everyone until recently. And the "impressive names" like you mention, well, they mostly pay us around $250 a month - which used to be our "Enterprise" pricing plan with unlimited everything (users, storage, agents etc.) So I guess the real answer is - we suck at positioning and we suck at marketing. As the result - profits were REALLY low (Lesson learned - don't compete on pricing). P.S. Couple of years ago I met Thomas from "FE International" at some conference, really experienced guy, who told me "dude, this is crazy, dump the unlimited plan like right now" so we did. So I guess technically we can afford a PaaS now...

- 1e-9: The markets are kind of like a massive, distributed, realtime, ensemble, recursive predictor that performs much better than any one of its individual component algorithms could. The reason why shaving a few milliseconds (or even microseconds) can be beneficial is because the price discovery feedback loops get faster, which allows the system to determine a giant pricing vector that is more self-consistent, stable, and beneficial to the economy. It's similar to how increasing the sample rate of a feedback control system improves performance and stability. Providers of such benefits to the markets get rewarded through profit.

- @QuinnyPig: There’s something else afoot too. I fix cloud bills. If I offer $10k to save $100k people sign off. If I offer $10 million to save $100 million people laugh me out of the room. Large numbers are psychologically scary.

- mrjn: Is it worth paying $20K for any DB or DB support? If it would save you 1/10th of an engineer per year, it becomes immediately worth. That means, can you avoid 5 weeks of one SWE by using a DB designed to better suit your dataset? If the answer is yes (and most cases it is), then absolutely that price is worth. See my blog post about how much money it must be costing big companies building their graph layers. Second part is, is Dgraph worth paying for compared to Neo or others? Note that the price is for our enterprise features and support. Not for using the DB itself. Many companies run a 6-node or a 12-node distributed/replicated Dgraph cluster and we only learn that much later when they're close to pushing it into production and need support. They don't need to pay for it, the distributed/replicated/transactional architecture of Dgraph is all open source. How much would it cost if one were to run a distributed/replicated setup of another graph DB? Is it even possible, can it execute and perform well? And, when you add support to that, what's the cost?

- @codemouse: It’s halfway to 2020. At this point, if any of your strategy is continued investment into your data centers you’re doing it wrong. Yes migration may take years, but you’re not going to be doing #cloud or #ops better than @awscloud

- hermitdev: Not Citibank, but previously worked for a financial firm that sold a copy of its back office fund administration stack. Large, on site deployment. It would take a month or two to make a simple DNS change so they could locate the services running on their internal network. The client was a US depository trust with trillions on deposit. No, I won't name any names. But getting our software installed and deployed was as much fun as extracting a tooth with a dull wood chisel and a mallet.

- Insikt Group: Approximately 50% of all activity concerning ransomware on underground forums are either requests for any generic ransomware or sales posts for generic ransomware from lower-level vendors. We believe this reflects a growing number of low-level actors developing and sharing generic ransomware on underground forums.

- Facebook: For classes of bugs intended for all or a wide variety of engineers on a given platform, we have gravitated toward a "diff time" deployment, where analyzers participate as bots in code review, making automatic comments when an engineer submits a code modification. Later, we recount a striking situation where the diff time deployment saw a 70% fix rate, where a more traditional "offline" or "batch" deployment (where bug lists are presented to engineers, outside their workflow) saw a 0% fix rate.

- Andy Rachleff: Venture capitalists know that the thing that causes their companies to go out of business is lack of a market, not poor execution. So it's a fool's errand to back a company that proposes to do a ride-hailing service or renting a room or something as crazy as that. Again--how would you know if it’s going to work? So the venture industry outsourced that market risk to the angel community. The angel community thinks they won it away from the venture community, but nothing could be further from the truth, because it's a sucker bet. It's a horrible risk/reward. The venture capitalists said, "Okay, let the angels invest at a $5 million valuation and take all of that market risk. We'll invest at a $50 million valuation. We have to pay up if it works." Now they hope the company will be worth $5 billion to make the same return as they would have in the old model. Interestingly, there now are as many companies worth $5 billion today as there were companies worth $500 million 20 years ago, which is why the returns of the premier venture capital firms have stayed the same or even gone up.

- imagetic: I dealt with a lot of high traffic live streaming video on Facebook for several years. We saw interaction rates decline almost 20x in a 3 year period but views kept increasing. Things just didn't add up when the dust settled and we'd look at the stats. I wouldn't be the least bit surprised if every stat FB has fed me was blown extremely out of proportion.

- prism1234: If you are designing a small embedded system, and not a high performance general computing device, then you already know what operations your software will need and can pick what extensions your core will have. So not including a multiply by default doesn't matter in this case, and may be preferred if your use case doesn't involve a multiply. That's a large use case for risc-v, as this is where the cost of an arm license actually becomes an issue. They don't need to compete with a cell phone or laptop level cpu to still be a good choice for lots of devices.

- oppositelock: You don't have time to implement everything yourself, so you delegate. Some people now have credentials to the production systems, and to ease their own debugging, or deployment, spin up little helper bastion instances, so they don't have to use 2FA each time to use SSH or don't have to deal with limited-time SSH cert authorities, or whatever. They roll out your fairly secure design, and forget about the little bastion they've left hanging around, open to 0.0.0.0 with the default SSH private key every dev checks into git. So, any former employee can get into the bastion.

- Lyft: Our tech stack comprises Apache Hive, Presto, an internal machine learning (ML) platform, Airflow, and third-party APIs.

- Casey Rosenthal: It turns out that redundancy is often orthogonal to robustness, and in many cases it is absolutely a contributing factor to catastrophic failure. The problem is, you can’t really tell which of those it is until after an incident definitively proves it’s the latter.

- Colm MacCárthaigh: There are two complementary tools in the chest that we all have these days, that really help combat Open Loops. The first is Chaos Engineering. If you actually deliberately go break things a lot, that tends to find a lot of Open Loops and make it obvious that they have to be fixed.

- @eeyitemi: I'm gonna constantly remind myself of this everyday. "You can outsource the work, but you can't outsource the risk." @Viss 2019

- Ben Grossman~ this could lead to a situation where filmmaking is less about traditional “filmmaking or storytelling,” and more about “world-building”: “You create a world where characters have personalities and they have motivations to do different things and then essentially, you can throw them all out there like a simulation and then you can put real people in there and see what happens.”

- cheeze: I'm a professional dev and we own a decent amount of perl. That codebase is by far the most difficult to work in out of anything we own. New hires have trouble with it (nobody learns perl these days). Lots of it is next to unreadable.

- Annie Lowrey: All that capital from institutional investors, sovereign wealth funds, and the like has enabled start-ups to remain private for far longer than they previously did, raising bigger and bigger rounds. (Hence the rise of the “unicorn,” a term coined by the investor Aileen Lee to describe start-ups worth more than $1 billion, of which there are now 376.) Such financial resources “never existed at scale before” in Silicon Valley, says Steve Blank, a founder and investor. “Investors said this: ‘If we could pull back our start-ups from the public market and let them appreciate longer privately, we, the investors, could take that appreciation rather than give it to the public market.’ That’s it.”

- alexis_fr: I wonder if the human life calculation worked well this time. As far as I see, Boeing lost more than the sum of the human lives; they also lost reputation for everything new they’ve designed in the last 7 years being corrupted, and they also engulfed the reputation of FAA with them, whose agents would fit the definition of “corrupted” by any people’s definition (I know, they are not, they just used agents of Boeing to inspect Boeing because they were understaffed), and the FAA showed the last step of failure by not admitting that the plane had to be stopped until a few days after the European agencies. In other words, even in financial terms, it cost more than damages. It may have cost the entire company. They “de Havilland”’ed their company. Ever heard of de Havilland? No? That’s probably to do with their 4 successive disintegrating planes that “CEOs have complete trust in.” It just died, as a name. The risk is high.

- Neil Gershenfeld: computer science was one of the worst things ever to happen to computers or science, why I believe that, and what that leads me to. I believe that because it’s fundamentally unphysical. It’s based on maintaining a fiction that digital isn’t physical and happens in a disconnected virtual world.

- @benedictevans: Netflix and Sky both realised that a new technology meant you could pay vastly more for content than anyone expected, and take it to market in a new way. The new tech (satellite, broadband) is a crowbar for breaking into TV. But the questions that matter are all TV questions

- @iamdevloper: Therapist: And what do we do when we feel like this? Me: buy a domain name for the side project idea we've had for 15 seconds. Therapist: No

- @dvassallo: Step 1: Forget that all these things exist: Microservices, Lambda, API Gateway, Containers, Kubernetes, Docker. Anything whose main value proposition is about “ability to scale” will likely trade off your “ability to be agile & survive”. That’s rarely a good trade off. 4/25 Start with a t3.nano EC2 instance, and do all your testing & staging on it. It only costs $3.80/mo. Then before you launch, use something bigger for prod, maybe an m5.large (2 vCPU & 8 GB mem). It’s $70/mo and can easily serve 1 million page views per day.

- PeteSearch: I believe we have an opportunity right now to engineer-in privacy at a hardware level, and set the technical expectation that we should design our systems to be resistant to abuse from the very start. The nice thing about bundling the audio and image sensors together with the machine learning logic into a single component is that we have the ability to constrain the interface. If we truly do just have a single pin output that indicates if a person is present, and there’s no other way (like Bluetooth or WiFi) to smuggle information out, then we should be able to offer strong promises that it’s just not possible to leak pictures. The same for speech interfaces, if it’s only able to recognize certain commands then we should be able to guarantee that it can’t be used to record conversations.

- Murat: As I have mentioned in the previous blog post, MAD questions, Cosmos DB has operationalized a fault-masking streamlined version of replication via nested replica-sets deployed in fan-out topology. Rather than doing offline updates from a log, Cosmos DB updates database at the replicas online, in place, to provide strong consistent and bounded-staleness consistency reads among other read levels. On the other hand, Cosmos DB also maintains a change log by way of a witness replica, which serves several useful purposes, including fault-tolerance, remote storage, and snapshots for analytic workload.

- grauenwolf: That's where I get so frustrated. Far too often I hear "premature optimization" as a justification for inefficient code when doing it the right way would actually require the same or less effort and be more readable.

- Murat: Leader - I tell you Paxos joke, if you accept me as leader. Quorum - Ok comrade. Leader - Here is joke! (*Transmits joke*) Quorum - Oookay... Leader - (*Laughs* hahaha). Now you laugh!! Quorum - Hahaha, hahaha.

- Manmax75: The number of stories I've heard from SysAdmins who jokingly try to access a former employer's network with their old credentials only to be shocked they still have admin access is a scary and boggling thought.

- @dougtoppin: Fargate brings significant opportunity for cost savings and to get the maximum benefit the minimal possible number of tasks must be running to handle your capacity needs. This means quickly detecting request traffic, responding just as quickly and then scaling back down.

- @evolvable: At a startup bank we got management pushback when revealing we planned to start testing in production - concerns around regulation and employees accessing prod. We changed the name to “Production Verification”. The discussion changed to why we hadn’t been doing it until now.

- @QuinnyPig: I’m saying it a bit louder every time: @awscloud’s data transfer pricing is predatory garbage. I have made hundreds of thousands of consulting dollars straightening these messes out. It’s unconscionable. I don’t want to have to do this for a living. To be very clear, it's not that the data transfer pricing is too expensive, it's that it's freaking inscrutable to understand. If I can cut someone's bill significantly with a trivial routing change, that's not the customer's fault.

- @PPathole: Alternative Big O notations: O(1) = O(yeah) O(log n) = O(nice) O(nlogn) = O(k-ish) O(n) = O(ok) O(n²) = O(my) O(2ⁿ) = O(no) O(n^n) = O(f*ck) O(n!) = O(mg!)

- Brewster Kahle: There's only a few hackers I've known like Richard Stallman, he'd write flawless code at typing speed. He worked himself to the bone trying to keep up with really smart former colleagues who had been poached from MIT. Carpal tunnel, sleeping under the desk, really trying hard for a few years and it was killing him. So he basically says I give up, we're going to lose the Lisp machine. It was going into this company that was flying high, it was going to own the world, and he said it was going to die, and with it the Lisp machine. He said all that work is going to be lost, we need a way to deal with the violence of forking. And he came up with the GNU public license. The GPL is a really elegant hack in the classic sense of a hack. His idea of the GPL was to allow people to use code but to let people put it back into things. Share and share alike.

Useful Stuff:

- It's probably not a good idea to start a Facebook poll on the advisability of your pending nuptials a day before the wedding. But it is very funny and disturbingly plausible. Made Public. Another funny/sad one is using a ML bot to "deal with" phone scams. The sad part will be when both sides are just AIs trying to socially engineer each other and half the world's resources become dedicated to yet another form of digital masturbation. Perhaps we should just stop the MADness?

- Urgent/11: 11 Zero Day Vulnerabilities Impacting VxWorks, the Most Widely Used Real-Time Operating System (RTOS). I read this with special interest because I've used VxWorks on several projects. Not once do I ever remember anyone saying "I wonder if the TCP/IP stack has security vulnerabilities?" We looked at licensing costs, board support packages, device driver support, tool chain support, ISR service latencies, priority inversion handling, task switch determinacy, etc. Why did we never think of these kinds of potential vulnerabilities? One reason is social proof. Surely if all these other companies use VxWorks, it must be good, right? Another reason is VxWorks is often used within a secure perimeter. None of the network interfaces are supposed to be exposed to the internet, so remote code execution is not part of your threat model. But in reality you have no idea if a customer will expose a device to the internet. And you have no idea if later product enhancements will place the device on the internet. Since it seems all network devices expand until they become a router, this seems a likely path to Armageddon. At that point nobody is going to requalify their entire toolchain. That just wouldn't be done in practice. VxWorks is dangerous because everything is compiled into a single image that boots and runs, much like a unikernel. At least when I used it that was the case. VxWorks is basically just a library you link into your application that provides OS functionality. You write the boot code, device drivers, and other code to make your application work. So if there's a remote code execution bug it has access to everything. And a lot of these images are built into ROM, so they aren't upgradeable. And even if the images are upgradeable in EEPROM or flash, how many people will actually do that? Unless you pay a lot of money you do not get the source to VxWorks. You just get libraries and header files. So you have no idea what's going on in the network stack.
I'm surprised VxWorks never tested their stack against a fuzzing kind of attack. That's a great way to find bugs in protocols. Though nobody can define simplicity, many of the bugs were in the handling of the little-used TCP Urgent Pointer feature. Anyone surprised that code around this is broken? Who uses it? It shouldn't be in the stack at all. Simple to say, harder to do.

- JuliaCon 2019 videos are now available. You might like Keynote: Professor Steven G. Johnson and The Unreasonable Effectiveness of Multiple Dispatch.

- CERN is Migrating to open-source technologies. Microsoft wants too much for their licenses so CERN is giving MS the finger.

- Memory and Compute with Onur Mutlu:

- The main problem is that DRAM latency is hardly improving at all. From 1999 to 2017, DRAM capacity has increased by 128x, bandwidth by 20x, but latency only by 1.3x! This means that more and more effort has to be spent tolerating memory latency. But what could be done to actually improve memory latency?

- You could “easily” get a 30% latency improvement by having DRAM chips provide a bit more precise information to the memory controller about actual latencies and current temperatures.

- Another concept to truly break the memory barrier is to move the compute to the memory. Basically, why not put the compute operations in memory? One way is to use something like High-Bandwidth Memory (HBM) and shorten the distance to memory by stacking logic and memory.

- Another rather cool (but also somewhat limited) approach is to actually use the DRAM cells themselves as a compute engine. It turns out that you can do copy, clear, and even logic ops on memory rows by using the existing way that DRAMs are built and adding a tiny amount of extra logic.
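
To put the DRAM numbers above in perspective, here is a small back-of-the-envelope sketch that converts the quoted 1999 to 2017 improvement factors into compound annual rates. The only inputs are the three factors from the talk; the helper name `annual_rate` is made up for this example.

```python
def annual_rate(total_factor: float, years: int) -> float:
    """Compound annual improvement rate that yields total_factor after `years`."""
    return total_factor ** (1.0 / years) - 1.0


YEARS = 2017 - 1999  # 18 years, per the figures quoted above

# Improvement factors as quoted from the talk.
improvements = {"capacity": 128.0, "bandwidth": 20.0, "latency": 1.3}

rates = {name: annual_rate(factor, YEARS) for name, factor in improvements.items()}

if __name__ == "__main__":
    for name, rate in rates.items():
        print(f"{name:>9}: {rate:.1%} per year")
```

Under these assumptions capacity compounds at roughly 30% a year while latency improves by less than 2% a year, which is the gap the talk is pointing at.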

- Want to make something in hardware? Like Pebble, Dropcam, or Ring. Who you gonna call? Dragon Innovation. Listen to how on The Amp Hour podcast episode #451 – An Interview with Scott Miller.

- Typical customers build between 5k and 1 million units, but will talk with you at 100 units. Customers usually start small. They've built a big toolbox for IoT, so they don't need to reinvent the wheel every time. They have designs for sensing, processing, electronics on the edge, radios, and all the different security layers. They can deploy quickly with little customization.

- Dragon is moving into doing the design, manufacturing, packaging, issuing of all POs, and installation support. They call this Product as a Service (PaaS), a full end-to-end provider model. Say you have a sensor to determine when avocados are ripe: you would pay per sensor per month, or maybe per avocado, instead of a one-time sale. With more non-traditional companies getting into the IoT space with different revenue models, Dragon has an opportunity to innovate on their business model.

- Consumer is dying and industrial is growing. A trend they are seeing in the US is a constriction of business-to-consumer startups in the hardware space, but an expansion of industrial IoT. There have been a bunch of high profile bankruptcies in the consumer space (Anki, Jibo).

- Europe is growing. Overall huge growth in industrial startups across Europe. Huge number of capable factories in the EU. They get feet on the ground to find and qualify factories. They have over 2000 factories in their database. 75% in China, increasingly more in the EU and the US.

- Factories are going global. Seeing a lot of companies driven out of China by the 25% tariffs, moving into Asia-Pacific countries like Taiwan, Singapore, Vietnam, Indonesia, Malaysia. Coming up quickly, but not up to China's level yet. Dragon will include RFQs on a global basis, including factories from the US, China, EU, Indonesia, Vietnam, to see what the landed cost is as a function of geography.

- Factories are different in different countries. In China factories are vertically integrated. Mold making, injection molding, final assembly and test and packaging, all under one roof. Which is very convenient. In the US and Europe factories are more horizontal. It takes a lot more effort to put together your supply chain. As an example of the degree of vertical integration, one factory in China would make their own paint and cardboard.

- Automation is huge in China. Chinese labor rates are on average 5 to 6 dollars an hour, depending on region, factory, training. Focus is on automation. One factory they worked with had 100,000 workers; now they have 30,000 because of automation.

- Automation is different in China. Automation in China is bottom-up. They'll build a simple robot that attaches to a soldering iron and will solder the leads. In the US it is top-down. Build a huge fully functioning worker that can do anything instead of a task-specific robot. China is really good at building stuff so they build task-specific robots to make their processes more efficient. Since products are always changing this allows them to stay nimble.

- Also Strange Parts, Design for Manufacturing Course, How I Made My Own iPhone - in China.

- BigQuery best practices: Controlling costs: Query only the columns that you need; Don't run queries to explore or preview table data; Before running queries, preview them to estimate costs; Using the query validator; Use the maximum bytes billed setting to limit query costs; Do not use a LIMIT clause as a method of cost control; Create a dashboard to view your billing data so you can make adjustments to your BigQuery usage. Also consider streaming your audit logs to BigQuery so you can analyze usage patterns; Partition your tables by date; If possible, materialize your query results in stages; If you are writing large query results to a destination table, use the default table expiration time to remove the data when it's no longer needed; Use streaming inserts only if your data must be immediately available.
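
Why "query only the columns you need" and "don't rely on LIMIT" go together is easier to see with a toy model. The sketch below is not the BigQuery API. It is a hypothetical, simplified model of column-based billing, where cost depends on the total size of the columns a query touches. The table, column sizes, price, and function names are all invented for illustration, and the model ignores cases such as clustered tables where a LIMIT can reduce the bytes read.

```python
from typing import Iterable, Optional

GIB = 2 ** 30
TIB = 2 ** 40

# Hypothetical table: stored bytes per column (made-up numbers).
TABLE_COLUMN_BYTES = {
    "user_id": 40 * GIB,
    "ts": 60 * GIB,
    "payload": 900 * GIB,
}

# Assumed on-demand price per TiB scanned; check current pricing before relying on it.
PRICE_PER_TIB_USD = 5.0


def estimate_bytes(columns: Iterable[str], limit: Optional[int] = None) -> int:
    """Bytes scanned in this toy model: the full size of every referenced column.

    `limit` is accepted but deliberately ignored, because in this model a LIMIT
    trims the rows returned, not the data read.
    """
    return sum(TABLE_COLUMN_BYTES[c] for c in columns)


def estimate_cost_usd(columns: Iterable[str], limit: Optional[int] = None) -> float:
    """Estimated query cost under the assumed per-TiB price."""
    return estimate_bytes(columns, limit) / TIB * PRICE_PER_TIB_USD


def check_max_bytes_billed(columns: Iterable[str], max_bytes_billed: int) -> int:
    """Mimic a 'maximum bytes billed' guard: refuse to run if the estimate is too big."""
    needed = estimate_bytes(columns)
    if needed > max_bytes_billed:
        raise ValueError(f"query would scan {needed} bytes, cap is {max_bytes_billed}")
    return needed


if __name__ == "__main__":
    everything = list(TABLE_COLUMN_BYTES)
    print(f"SELECT *            : ${estimate_cost_usd(everything):.2f}")
    print(f"SELECT * LIMIT 10   : ${estimate_cost_usd(everything, limit=10):.2f}")
    print(f"SELECT user_id, ts  : ${estimate_cost_usd(['user_id', 'ts']):.2f}")
```

In this model the LIMIT version costs exactly as much as the full scan, while dropping the wide `payload` column cuts the estimate by 90%. A real dry run or query validator plays the role of `estimate_bytes` here.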

- Boeing has changed a lot over the years. Once upon a time I worked on a project with Boeing and the people were excellent. This is something I heard: "The changes can be attributed to the influence of the McDonnell family who maintain extremely high influence through their stock shares resulting from the merger. It has been gradually getting better recently but still a problem for those inside who understand the real potential impact."

- Maybe we are all just random matrices? What Is Universality? It turns out there are deep patterns in complex correlated systems that lie somewhere between randomness and order. They arise from components that interact and repel one another. Do such patterns exist in software systems? Also, Bubble Experiment Finds Universal Laws.

- PID Loops and the Art of Keeping Systems Stable:

- I see a lot of places where control theory is directly applicable but rarely applied. Auto-scaling and placement are really obvious examples, we're going to walk through some, but another is fairness algorithms. A really common fairness algorithm is how TCP achieves fairness. You've got all these network users and you want to give them all a fair slice. Turns out that a PID loop is what's happening. In system stability, how do we absorb errors, recover from those errors?

- Something we do in CloudFront is we run a control system. We're constantly measuring the utilization of each site and depending on that utilization, we figure out what's our error, how far are we from optimized? We change the mass or radius of effect of each site, so that at our really busy time of day, really close to peak, it's servicing everybody in that city, everybody directly around it drawing those in, but that at our quieter time of day can extend a little further and go out. It’s a big system of dynamic springs all interconnected, all with PID loops. It's amazing how optimal a system like that can be, and how applying a system like that has increased our effectiveness as a CDN provider.

- A surprising number of control systems are just like this, they're just Open Loops. I can't count the number of customers I've gone through control systems with and they told me, "We have this system that pushes out some states, some configuration and sometimes it doesn't do it." I find that scary, because what it's saying is nothing's actually monitoring the system. Nothing's really checking that everything is as it should be. My classic favorite example of this as an Open Loop process, is certificate rotation. I happened to work on TLS a lot, it's something I spent a lot of my time on. Not a week goes by without some major website having a certificate outage.

- We have two observability systems at AWS, CloudWatch, and X-Ray. One of the things I didn't appreciate until I joined AWS - I was a bit going on like Charlie and the chocolate factory, and seeing the insides. I expected to see all sorts of cool algorithms and all sorts of fancy techniques and things that I just never imagined. It was a little bit of that, there was some of that once I got inside working, but mostly what I found was really mundane, people were just doing a lot of things at scale that I didn't realize. One of those things was just the sheer volume of monitoring. The number of metrics we keep on, every single host, every single system, I still find staggering.

- Exponential Back-off is a really strong example. Exponential Back-off is basically an integral, an error happens and we retry, a second later if that fails, then we wait. Rate limiters are like derivatives, they're just rate estimators and what's going on and deciding what's to let in and what to let out. We've built both of these into the AWS SDKs. We've got other back pressure strategies too, we've got systems where servers can tell clients, "Back off, please, I'm a little busy right now," all those things working together. If I look at system design and it doesn't have any of this, if it doesn't have exponential back-off, if it doesn't have rate-limiters in some place, if it's not able to fight some power-law that I think might arise due to errors propagating, that tells me I need to be a bit more worried and start digging deeper.
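
A minimal sketch of the retry pattern described here, in plain Python. The function names, default values, and the random jitter are assumptions added for illustration. This is not the AWS SDK's implementation.

```python
import random
import time

def backoff_delays(base=0.1, factor=2.0, cap=10.0, retries=5):
    """Yield the maximum wait before each retry: base, base*factor, ... up to cap."""
    delay = base
    for _ in range(retries):
        yield min(delay, cap)
        delay *= factor

def retry(operation, delays, sleep=time.sleep, rng=random.random):
    """Call operation; after each failure wait a random fraction of the next delay."""
    for delay in delays:
        try:
            return operation()
        except Exception:
            # Jitter (an addition, not from the talk) spreads retries out so
            # that many clients do not all come back at the same instant.
            sleep(rng() * delay)
    return operation()  # final attempt; any error now propagates to the caller
```

`retry(fetch, backoff_delays())` would try a hypothetical `fetch` up to six times, with the ceiling on each wait doubling from 0.1 seconds. `sleep` and `rng` are parameters only so the behavior can be checked without actually waiting.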

- I like to watch out for edge triggering in systems, it tends to be an anti-pattern. One reason is because edge triggering seems to imply a modal behavior. You cross the line, you kick into a new mode, that mode is probably rarely tested and it's now being kicked into at a time of high stress, that's really dangerous. Your system has to be idempotent, if you're going to build an idempotent system, you might as well make a level-triggered system in the first place, because generally, the only benefit of building an edge-triggered system is it doesn't have to be idempotent.
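
To make the level-triggered idea concrete, here is a small sketch of a reconcile step that looks only at current state (desired versus actual) rather than at the events that led there. The dictionary-based state and the function names are hypothetical.

```python
def reconcile(desired, actual):
    """Return the actions that would make `actual` match `desired`."""
    actions = []
    for key, value in desired.items():
        if actual.get(key) != value:
            actions.append(("set", key, value))
    for key in actual:
        if key not in desired:
            actions.append(("delete", key, None))
    return actions

def apply_actions(actions, actual):
    """Apply actions in place. Repeating them is harmless (idempotent)."""
    for op, key, value in actions:
        if op == "set":
            actual[key] = value
        else:
            actual.pop(key, None)
    return actual
```

Because each pass recomputes the difference from scratch, a missed or duplicated notification does no harm: running `reconcile` again on converged state returns an empty list. Running it on a timer also closes the loop that the open-loop systems described earlier leave open.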

- There is definitely tension between stability and optimality, and in general, the more finely-tuned you want to make a system to achieve absolute optimality, the more risk you run of driving it into an unstable state. There are people who do entire PhDs on nothing else than finding that balance for one system. Oil refineries are a good example, where the oil industry will pay people a lot of money just to optimize that, even very slightly. Computer Science, in my opinion, and distributed systems, are nowhere near that level of advanced control theory practice yet. We have a long way to go. We're still down at the baby steps of, “We’ll at least measure it.”
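
For readers who have not met one, this is roughly what a textbook discrete PID loop looks like. It is a sketch under stated assumptions: the gains, the setpoint, and the toy "plant" (a value that simply moves by whatever the controller outputs) are illustrative choices, not anything taken from CloudFront.

```python
class PID:
    """Textbook PID: output = kp*error + ki*integral(error) + kd*d(error)/dt."""

    def __init__(self, kp, ki, kd, setpoint):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.integral = 0.0
        self.previous_error = None

    def update(self, measurement, dt):
        error = self.setpoint - measurement            # how far from the target
        self.integral += error * dt                    # accumulated past error
        if self.previous_error is None:
            derivative = 0.0                           # no trend on first sample
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(controller, start, steps, dt=1.0):
    """Toy plant (assumption): the measured value changes by output * dt."""
    value = start
    history = []
    for _ in range(steps):
        value += controller.update(value, dt) * dt
        history.append(value)
    return history
```

With `PID(kp=0.5, ki=0.1, kd=0.1, setpoint=10.0)` and `start=0.0`, this toy plant overshoots the target, oscillates, and then settles on it. Raising the gains makes it react faster and eventually makes it unstable, which is the stability versus optimality tension in miniature.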

- Re:Inforce 2019 videos are now available.

- Top Seven Myths of Robust Systems: The number one myth we hear out in the field is that if a system is unreliable, we can fix that with redundancy; rather than trying to simplify or remove complexity, learn to live with it. Ride complexity like a wave. Navigate the complexity; The adaptive capacity to improvise well in the face of a potential system failure comes from frequent exposure to risk; Both sides — the procedure-makers and the procedure-not-followers — have the best of intentions, and yet neither is likely to believe that about the other; Unfortunately it turns out catastrophic failures in particular tend to be a unique confluence of contributing factors and circumstances, so protecting yourself from prior outages, while it shouldn’t hurt, also doesn’t help very much; Best practices aren’t really a knowable thing; Don’t blame individuals. That’s the easy way out, but it doesn’t fix the system. Change the system instead.

- They grow up so slow. What’s new in JavaScript: Google I/O 2019 Summary.

- From a rough calculation we saw about 40% decrease in the amount of CPU resources used. Overall, we saw latency stabilize for both avg and max p99. Max p99 latency also decreased a bit. Safely Rewriting Mixpanel’s Highest Throughput Service in Golang. Mixpanel moved from Python to Go for their data collection API. They had already migrated the Python API to use the Google Load Balancer to route messages to a kubernetes pod on Google Cloud where an Envoy container load-balanced between eight Python API containers. The Python API containers then submitted the data to a Google Pubsub queue via a pubsub sidecar container that had a kestrel interface. To enable testing against live traffic, we created a dedicated setup. The setup was a separate kubernetes pod running in the same namespace and cluster as the API deployments. The pod ran an open source API correctness tool, Diffy, along with copies of the old and new API services. Diffy is a service that accepts HTTP requests, and forwards them to two copies of an existing HTTP service and one copy of a candidate HTTP service. One huge improvement is we only need to run a single API container per pod.
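
Why two copies of the existing service? A plausible reading is that differences between the two old copies reveal fields that vary on their own (timestamps, random IDs). Those fields can then be ignored when comparing old against new. The sketch below shows that idea on already-parsed responses. It is not Diffy's code, and the function names are hypothetical.

```python
_MISSING = object()  # distinguishes "field absent" from "field is None"

def differing_fields(left, right):
    """Names of top-level fields whose values differ between two responses."""
    return {
        key
        for key in set(left) | set(right)
        if left.get(key, _MISSING) != right.get(key, _MISSING)
    }

def regressions(primary, secondary, candidate):
    """Fields where the candidate differs from the old service beyond noise.

    primary and secondary are two copies of the existing service; anything
    that differs between them is treated as nondeterministic noise.
    """
    noise = differing_fields(primary, secondary)
    raw = differing_fields(primary, candidate)
    return raw - noise
```

For example, if the two old copies disagree only on a `ts` field, and the candidate returns `"7"` where the old service returned `7` for `id`, the result is `{"id"}`. The timestamp difference is filtered out as noise.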

- Satisfactory: Network Optimizations: It would be a big gain to stop replicating the inventory when it’s not viewed, which is essentially what we did, but the method of doing so was a bit complicated and required a lot of rework...Doing this also helps to reduce CPU time, as an inventory is a big state to compare, and look for changes in. If we can reduce that to a maximum of 4x the number of players it is a huge gain, compared to the hundreds, if not thousands, that would otherwise be present in a big base...There is, of course, a trade-off. As I mentioned there is a chance the inventory is not there when you first open to view it, as it has yet to arrive over the network...In this case the old system actually varied in size but landed around 48 bytes per delta, compared to the new system of just 3 bytes...On top of this, we also reduced how often a conveyor tries to send an update to just 3 times a second compared to the previous of over 20...the accuracy of item placements on the conveyors took a small hit, but we have added complicated systems in order to compensate for that...we’ve noticed that the biggest issue for running smooth multiplayer in large factories is not the network traffic anymore, it’s rather the general performance of the PC acting as a server.

- MariaDB vs MySQL Differences: MariaDB is fully GPL licensed while MySQL takes a dual-license approach. Each handles thread pools in a different way. MariaDB supports a lot of different storage engines. In many scenarios, MariaDB offers improved performance.

- Our pySpark pipeline churns through tens of billions of rows on a daily basis. Calculating 30 billion speed estimates a week with Apache Spark: Probes generated from the traces are matched against the entire world’s road network. At the end of the matching process we are able to assign each trace an average speed, a 5 minute time bucket and a road segment. Matches on the same road that fall within the same 5 minute time bucket are aggregated to create a speed histogram. Finally, we estimate a speed for each aggregated histogram which represents our prediction of what a driver will experience on a road at a given time of the week...On a weekly basis, we match on average 2.2 billion traces to 2.3 billion roads to produce 5.4 billion matches. From the matches, we build 51 billion speed histograms to finally produce 30 billion speed estimates...The first thing we spent time on was designing the pipeline and schemas of all the different datasets it would produce. In our pipeline, each pySpark application produces a dataset persisted in a hive table readily available for a downstream application to use...Instead of having one pySpark application execute all the steps (map matching, aggregation, speed estimation, etc.) we isolated each step to its own application...We favored normalizing our tables as much as possible and getting to the final traffic profiles dataset through relationships between relevant tables...Partitioning makes querying part of the data faster and easier. We partition all the resulting datasets by both a temporal and spatial dimension.
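
The bucketing and aggregation step can be sketched in a few lines of plain Python (not PySpark). The tuple layout, the 5 km/h bin width, and the weighted-mean estimator are assumptions made for illustration, since the article does not say how the final speed is derived from a histogram.

```python
from collections import Counter, defaultdict

BUCKET_SECONDS = 5 * 60
WEEK_SECONDS = 7 * 24 * 3600

def week_bucket(timestamp):
    """Index of the 5-minute bucket within a week (0..2015).

    Uses seconds since the Unix epoch, so bucket 0 starts on a Thursday.
    """
    return (timestamp % WEEK_SECONDS) // BUCKET_SECONDS

def build_histograms(matches, bin_width=5):
    """Group (segment, timestamp, speed) matches by (segment, time bucket)."""
    histograms = defaultdict(Counter)
    for segment, timestamp, speed in matches:
        histograms[(segment, week_bucket(timestamp))][int(speed // bin_width)] += 1
    return histograms

def estimate_speed(histogram, bin_width=5):
    """Placeholder estimator (assumption): count-weighted mean of bin midpoints."""
    total = sum(histogram.values())
    return sum((b + 0.5) * bin_width * n for b, n in histogram.items()) / total
```

Keying by `(segment, bucket)` is also a natural partitioning scheme: it carries one spatial and one temporal dimension, matching how the article says the resulting datasets are partitioned.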

- Do not read this unless you can become comfortable with the feeling that everything you've done in your life is trivial and vainglorious. Morphogenesis for the Design of Design:

- One of my students built and runs all the computers Facebook runs on, one of my students used to run all the computers Twitter runs on—this is because I taught them to not believe in computer science. In other words, their job is to take billions of dollars, hundreds of megawatts, and tons of mass, and make information while also not believing that the digital is abstracted from the physical. Some of the other things that have come out from this lineage were the first quantum computations, or microfluidic computing, or part of creating some of the first minimal cells.

- The Turing machine was never meant to be an architecture. In fact, I'd argue it has a very fundamental mistake, which is that the head is distinct from the tape. And the notion that the head is distinct from the tape—meaning, persistence of tape is different from interaction—has persisted. The computer in front of Rod Brooks here is spending about half of its work just shuttling from the tape to the head and back again.

- There’s a whole parallel history of computing, from Maxwell to Boltzmann to Szilard to Landauer to Bennett, where you represent computation with physical resources. You don’t pretend digital is separate from physical. Computation has physical resources. It has all sorts of opportunities, and getting that wrong leads to a number of false dichotomies that I want to talk through now. One false dichotomy is that in computer science you’re taught many different models of computation and adherence, and there’s a whole taxonomy of them. In physics there’s only one model of computation: A patch of space occupies space, it takes time to transit, it stores state, and states interact—that’s what the universe does. Anything other than that model of computation is physics and you need epicycles to maintain the fiction, and in many ways that fiction is now breaking.

- We did a study for DARPA of what would happen if you rewrote from scratch a computer software and hardware so that you represented space and time physically.

- One of the places that I’ve been involved in pushing that is in exascale high-performance computing architecture, really just a fundamental do-over to make software look like hardware and not to be in an abstracted world.

- Digital isn’t ones and zeroes. One of the hearts of what Shannon did is threshold theorems. A threshold theorem says I can talk to you as a wave form or as a symbol. If I talk to you as a symbol, if the noise is above a threshold, you’re guaranteed to decode it wrong; if the noise is below a threshold, for a linear increase in the physical resources representing the symbol there’s an exponential reduction in the fidelity to decode it. That exponential scaling means unreliable devices can operate reliably. The real meaning of digital is that scaling property. But the scaling property isn’t one and zero; it’s the states in the system.

- If you mix chemicals and make a chemical reaction, a yield of a part per 100 is good. When the ribosome (the molecular assembler that makes your proteins) elongates, it makes an error of one in 10^4. When DNA replicates, it adds one extra error-correction step, and that makes an error in 10^-8, and that’s exactly the scaling of threshold theorem. The exponential complexity that makes you possible is by error detection and correction in your construction. It’s everything Shannon and von Neumann taught us about codes and reconstruction, but it’s now doing it in physical systems.
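
One way to get a feel for this scaling is the simplest possible code: repeat a symbol n times and take a majority vote. This is a stand-in chosen for illustration, not the construction in Shannon's proofs, and it assumes each copy fails independently.

```python
from math import comb

def majority_error(p, n):
    """Probability that a majority of n independent copies are wrong (n odd).

    p is the chance that any single copy is wrong.
    """
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(n // 2 + 1, n + 1)
    )
```

With a per-copy error of 0.1, three copies give an error of 0.028 and five copies about 0.0086. Each linear step in resources cuts the error by a roughly constant factor. Above the threshold (a per-copy error over 0.5) the same vote makes things worse, which is the other half of the statement.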

- One of the projects I’m working on in my lab that I’m most excited about is making an assembler that can assemble assemblers from the parts that it’s assembling—a self-reproducing machine. What it's based on is us.

- If you look at scaling coding construction by assembly, ribosomes are slow (they run at one hertz, one amino acid a second), but a cell can have a million, and you can have a trillion cells. As you were sitting here listening, you’re placing 10^18 parts a second, and it’s because you can ring up this capacity of assembling assemblers. The heart of the project is the exponential scaling of self-reproducing assemblers.
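
The parts-per-second figure is just the product of the three quantities quoted, taking "a million" ribosomes per cell and "a trillion" cells at face value:

```latex
\underbrace{1\ \text{part/s}}_{\text{per ribosome}}
\times \underbrace{10^{6}}_{\text{ribosomes per cell}}
\times \underbrace{10^{12}}_{\text{cells}}
= 10^{18}\ \text{parts per second}
```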

- As we work on the self-reproducing assembler, and writing software that looks like hardware that respects geometry, they meet in morphogenesis. This is the thing I’m most excited about right now: the design of design. Your genome doesn’t store anywhere that you have five fingers. It stores a developmental program, and when you run it, you get five fingers. It’s one of the oldest parts of the genome. Hox genes are an example. It’s essentially the only part of the genome where the spatial order matters. It gets read off as a program, and the program never represents the physical thing it’s constructing. The morphogenes are a program that specifies morphogens that do things like climb gradients and symmetry break; it never represents the thing it’s constructing, but the morphogens then following the morphogenes give rise to you.

- What’s going on in morphogenesis, in part, is compression. A billion bases can specify a trillion cells, but the more interesting thing that’s going on is almost anything you perturb in the genome is either inconsequential or fatal. The morphogenes are a curated search space where rearranging them is interesting—you go from gills to wings to flippers. The heart of success in machine learning, however you represent it, is function representation. The real progress in machine learning is learning representation.

- We're at an interesting point now where it makes as much sense to take seriously that scaling as it did to take Moore’s law scaling in 1965 when he made his first graph. We started doing these FAB labs just as outreach for NSF, and then they went viral, and they let ordinary people go from consumers to producers. It’s leading to very fundamental things about what is work, what is money, what is an economy, what is consumption.

- Looking at exactly this question of how a code and a gene give rise to form. Turing and von Neumann both completely understood that the interesting place in computation is how computation becomes physical, how it becomes embodied and how you represent it. That’s where they both ended their life. That’s neglected in the canon of computing.

- If I’m doing morphogenesis with a self-reproducing system, I don’t want to then just paste in some lines of code. The computation is part of the construction of the object. I need to represent the computation in the construction, so it forces you to be able to overlay geometry with construction.

- Why align computer science and physical science? There are at least five reasons for me. Only lightly is it philosophical. It’s the cracks in the matrix. The matrix is cracking. 1) The fact that whoever has their laptop open is spending about half of its resources shuttling information from memory transistors to processor transistors even though the memory transistors have the same computational power as the processor transistors is a bad legacy of the EDVAC. It’s a bit annoying for the computer, but when you get to things like an exascale supercomputer, it breaks. You just can’t maintain the fiction as you push the scaling. The resource in very largescale computing is maintaining the fiction so the programmers can pretend it’s not true is getting just so painful you need to redo it. In fact, if you look down in the trenches, things like emerging ways to do very largescale GPU program are beginning to inch in that direction. So, it’s breaking in performance.

- What’s interesting is a lot of the things that are hard—for example, in parallelization and synchronization—come for free. By representing time and space explicitly, you don’t need to do the annoying things like thread synchronization and all the stuff that goes into parallel programming.

- Communication degraded with distance. Along came Shannon. We now have the Internet. Computation degraded with time. The last great analog computer work was Vannevar Bush's differential analyzer. One of the students working on it was Shannon. He was so annoyed that he invented our modern digital notions in his Master’s thesis to get over the experience of working on the differential analyzer.

- When you merge communication with computation with fabrication, it’s not there’s a duopoly of communication and computation and then over here is manufacturing; they all belong together. The heart of how we work is this trinity of communication plus computation and fabrication, and for me the real point is merging them.

- I almost took over running research at Intel. It ended up being a bad idea on both sides, but when I was talking to them about it, I was warned off. It was like the godfather: "You can do that other stuff, but don’t you dare mess with the mainline architecture." We weren't allowed to even think about that. In defense of them, it’s billions and billions of dollars investment. It was a good multi-decade reign. They just weren’t able to do it.

- Again, the embodiment of everything we’re talking about, for me, is the morphogenes—the way evolution searches for design by coding for construction. And they’re the oldest part of the genome. They were invented a very long time ago and nobody has messed with them since.

- Get over digital and physical are separate; they can be united. Get over analog as separate from digital; there’s a really profound place in between. We’re at the beginning of fifty years of Moore’s law but for the physical world. We didn’t talk much about it, but it has the biggest impact of anything I know if anybody can make anything.

Soft Stuff:

- paypal/hera (article): Hera multiplexes connections for MySQL and Oracle databases. It supports sharding the databases for horizontal scaling. It is a data access gateway that PayPal uses to scale database access for hundreds of billions of SQL queries per day. Additionally, HERA improves database availability through sophisticated protection mechanisms and provides application resiliency through transparent traffic failover. HERA is now available outside of PayPal as an Apache 2-licensed project.

- zerotier/lf: a fully decentralized fully replicated key/value store. LF is built on a directed acyclic graph (DAG) data model that makes synchronization easy and allows many different security and conflict resolution strategies to be used. One way to think of LF's DAG is as a gigantic conflict-free replicated data type (CRDT). Proof of work is used to rate limit writes to the shared data store on public networks and as one thing that can be taken into consideration for conflict resolution.
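
Proof of work as a write rate limiter can be sketched generically: a writer must find a nonce that makes the record's hash start with enough zero bits, which is expensive to find and cheap to verify. The sketch below is a hashcash-style illustration using SHA-256. It is not LF's actual work function or record format.

```python
import hashlib

def leading_zero_bits(digest):
    """Number of zero bits at the start of a byte string."""
    value = int.from_bytes(digest, "big")
    return len(digest) * 8 - value.bit_length()

def _digest(record, nonce):
    return hashlib.sha256(record + nonce.to_bytes(8, "big")).digest()

def solve(record, difficulty_bits):
    """Search for a nonce; expected cost is about 2**difficulty_bits hashes."""
    nonce = 0
    while leading_zero_bits(_digest(record, nonce)) < difficulty_bits:
        nonce += 1
    return nonce

def verify(record, nonce, difficulty_bits):
    """Checking a claimed nonce costs a single hash."""
    return leading_zero_bits(_digest(record, nonce)) >= difficulty_bits
```

Each extra bit of difficulty doubles the writer's expected work while verification stays at one hash. That asymmetry is what lets an open network throttle writes without accounts or quotas.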

- pahud/fargate-fast-autoscaling: This reference architecture demonstrates how to build AWS Fargate workload that can detect the spiky traffic in less than 10 seconds followed by an immediate horizontal autoscaling.

- ailidani/paxi: Paxi is the framework that implements WPaxos and other Paxos protocol variants. Paxi provides most of the elements that any Paxos implementation or replication protocol needs, including network communication, state machine of a key-value store, client API and multiple types of quorum systems.
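
As a rough illustration of what a "quorum system" has to guarantee, the sketch below brute-forces the intersection property for size-based quorums on a small cluster. It is written in Python for consistency with the other examples on this page and does not reflect Paxi's own API. The function names are hypothetical.

```python
from itertools import combinations

def is_majority_quorum(acks, nodes):
    """True if the acknowledging nodes form a strict majority of the cluster."""
    return len(set(acks) & set(nodes)) > len(nodes) // 2

def quorums_intersect(nodes, q1_size, q2_size):
    """True if every set of q1_size nodes shares a node with every set of q2_size.

    This is the safety condition for pairing a phase-1 quorum size with a
    phase-2 quorum size; it holds exactly when q1_size + q2_size > len(nodes).
    """
    return all(
        set(first) & set(second)
        for first in combinations(nodes, q1_size)
        for second in combinations(nodes, q2_size)
    )
```

On five nodes, sizes 3 and 3 (plain majorities) intersect, and so do 4 and 2, which trades a larger phase-1 quorum for a smaller and cheaper phase-2 quorum. Sizes 2 and 2 do not intersect.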

Pub Stuff:

- The original Libra (MIT Fellow Says Facebook ‘Lifted’ His Ideas for Libra Cryptocurrency). Digital trade coin: towards a more stable digital currency.

- Secure Firmware Development Best Practices: This document was produced by the Cloud Security Industry Summit (CSIS). CSIS is a group of Cloud Service Providers, with a mission to align on a vision and approach to developing best-of-breed security solutions. The group includes members from top Cloud Service Providers, partnering as an industry team and evolving a coordinated approach for improving cloud security from component to system to solution. Intel facilitates the group.

- Dissecting performance bottlenecks of strongly-consistent replication protocols: In this work, we study the performance of Paxos protocols. We take a two-pronged approach, and provide both analytic and empirical evaluations which corroborate and complement each other. We then distill these results to give back-of-the-envelope formulas for estimating the throughput scalability of different Paxos protocols.