Facebook and Site Failures Caused by Complex, Weakly Interacting, Layered Systems

Facebook has been so reliable that when a site outage does occur it's a definite learning opportunity. Fortunately for us we can learn something, because in More Details on Today's Outage, Facebook's Robert Johnson gave a pretty candid explanation of what caused a rare 2.5-hour period of downtime for Facebook. It wasn't a simple problem. The root causes were feedback loops and transient spikes caused ultimately by the complexity of weakly interacting layers in modern systems. You know, the kind everyone is building these days. Problems like this are notoriously hard to fix and finding a real solution may send Facebook back to the whiteboard. There's a technical debt that must be paid.

The outline of what happened, along with my interpretation (reading between the lines), is:

- Remember that Facebook caches everything. They have 28 terabytes of memcached data on 800 servers. The database is the system of record, but memory is where the action is. So when a problem happens that involves the caching layer, it can and did take down the system.

- Facebook has an automated system that checks for invalid configuration values in the cache and replaces them with updated values from the persistent store. We are not told what the configuration property was, but since configuration information is usually important centralized data that is widely shared by key subsystems, this helps explain why there would be an automated background check in the first place.

- A change was made to the persistent copy of the configuration value which then propagated to the cache.

- Production code thought this new value was invalid, which caused every client to delete the key from the cache and then try to get a valid value from the database. Hundreds of thousands of queries a second that would normally have been served at the caching layer went to the database, which crushed it utterly. This is an example of the Dog Pile Problem. It's also an example of the age-old reason why having RAID is not the same as having a backup. On a RAID system when an invalid value is written or deleted, it's written everywhere, and only valid data can be restored from a backup.

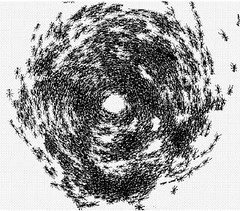

- When a database fails to serve a request, applications will often simply retry, which spawns even more requests, which makes the problem exponentially worse. CPU is consumed, memory is used up, long locks are taken, networks get clogged. The end result is something like the Ant Death Spiral picture at the beginning of this post. Bad juju.

- A feedback loop had been entered that didn’t allow the databases to recover. Even if a valid value had been written to that database it wouldn't have mattered. Facebook's own internal clients were basically running a DDOS attack on their own database servers. The database was so busy handling requests no reply would ever be seen from the database, so the valid value couldn't propagate. And if they put a valid value in the cache that wouldn't matter either because all the clients would still be spinning on the database, unaware that the cache now had a valid value.

- What they ended up doing was: fix the code so the value would be considered valid; take down everything so the system could quiet down and restart normally.
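The sequence above can be sketched as a toy simulation. This is only an illustration under my own assumptions, not Facebook's code: `CountingDatabase`, `client_read`, `simulate`, the `"config"` key, and the validity rule are all hypothetical names made up for the example. It shows how a value that clients consider invalid turns every read into a database query, because the "refreshed" copy is the same value the clients rejected in the first place.

```python
class CountingDatabase:
    """Hypothetical stand-in for the persistent store; counts queries that reach it."""

    def __init__(self, value):
        self.value = value
        self.queries = 0

    def read(self, key):
        self.queries += 1
        return self.value


def client_read(cache, db, key, is_valid):
    """One client request: trust the cached value only if it looks valid."""
    value = cache.get(key)
    if value is not None and is_valid(value):
        return value
    # Missing or judged invalid: delete the key and fall back to the database.
    cache.pop(key, None)
    value = db.read(key)
    cache[key] = value  # the refreshed copy is whatever the database holds
    return value


def simulate(requests, db_value, is_valid):
    """Run `requests` sequential client reads and return how many hit the database."""
    db = CountingDatabase(db_value)
    cache = {}
    for _ in range(requests):
        client_read(cache, db, "config", is_valid)
    return db.queries


if __name__ == "__main__":
    def looks_valid(v):
        return v <= 10  # assumed validity rule, purely illustrative

    print(simulate(1000, 5, looks_valid))   # value accepted: only the first read misses
    print(simulate(1000, 50, looks_valid))  # value rejected: every read goes to the database
```

The toy runs requests one after another and has no retries, so it understates the real problem. With many concurrent clients and retry loops on top, the load on the database grows even faster.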

This kind of thing happens in complex systems as abstractions leak all over each other at the most inopportune times. So the typical Internet reply to every failure of "how could they be so stupid, that would never happen to someone as smart as me", doesn't really apply. Complexity kills. Always.

Based on nothing but pure conjecture, what are some of the key issues here for system designers?

- The Dog Pile Problem has a few solutions. Perhaps Facebook will add one of them, but perhaps their system has been so reliable it hasn't been necessary. Should they take the hit or play the percentages that it won't happen again or that other changes can mitigate the problem? A difficult ROI calculation when you are committed to releasing new features all the time.

- The need for a caching layer in the first place, with all the implied cache coherency issues, is largely a function of the inability of the database to serve as both an object cache and a transactional data store. Will the need for a separate object cache change going forward? Cassandra, for example, has added a caching layer that, along with its key-value approach, may reduce the need for external caches for database-type data (as opposed to HTML fragment caches and other transient caches).

- How did invalid data get into the system in the first place? My impression from the article was that maybe someone did an update by hand so that the value did not go through a rigorous check. This happens because integrity checks aren't centralized in the database anymore, they are in code, and that code can often be spread out and duplicated in many areas. When updates don't go through a central piece of code it's an easy thing to enter a bad value. Yet the article also seemed to imply that the value entered was valid, it's just that the production software didn't think it was valid. This could argue for an issue with software release and testing policies not being strong enough to catch problems. But Facebook makes a hard push for getting code into production as fast as possible, so maybe it's just one of those things that will happen? Also, data is stored in MySQL as a BLOB, so it wouldn't be possible to do integrity checks at the database level anyway. This argues for using a database that can handle structured value types natively.

- Background validity checkers are a great way to slowly bring data into compliance. They're usually applied, though, to data with a high potential to be unclean, like when there are a lot of communication problems and updates get wedged or dropped, or when transactions aren't used, or when attributes like counts and relationships aren't calculated in real-time. Why would configuration data be checked when it should always be solid? Another problem is again that the validation logic in the checker can easily be out of sync with validation logic elsewhere in the stack, which can lead to horrendous problems as different parts of the system fight each other over who is right.

- The next major issue is how the application code hammered the database server. Now I have no idea how Facebook structures their code, but I've often seen this problem when applications are in charge of writing error recovery logic, which is a very bad policy. I've seen way too much code like this: `while (1) { slam_database_with_another_request(); sleep(1); }`. This system will never recover when the sh*t really hits the fan, but it will look golden on trivial tests. Application code shouldn't decide policy because this type of policy is really a global policy. It should be moved to a centralized network component on each box that is getting fed monitoring data that can tell what's happening with the networks and services running on the networks. The network component would issue up/down events that communication systems running inside each process would know to interpret and act upon. There's no way every exception handler in every application can carry this sort of intelligence, so it needs to be moved to a common component and out of application code. Application code should never ever have retries. Ever. It's just inviting the target machine to die from resource exhaustion. In this case a third-party application is used, but with your own applications it's very useful to be able to explicitly put back pressure on clients when a server is experiencing resource issues. There's no need to go Wild West out there. This would have completely prevented the DDOS attack.
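To make the last point a bit more concrete, here is a minimal sketch of the idea of taking retry policy out of application code. It uses the well-known circuit breaker pattern as a stand-in for the up/down events described above, which is my substitution and not something the Facebook article describes. The names `CircuitBreaker`, `ServiceDown`, `failure_threshold`, and `cooldown_seconds` are hypothetical, and a real version would be shared per box and fed by monitoring data rather than living inside one process.

```python
import time


class ServiceDown(Exception):
    """Raised instead of sending a request while the target is marked down."""


class CircuitBreaker:
    """Shared gatekeeper: callers never retry, they ask the breaker to make the call."""

    def __init__(self, failure_threshold=3, cooldown_seconds=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock          # injectable so the behavior can be tested
        self.failures = 0
        self.opened_at = None       # None means the target is considered up

    def call(self, request):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown_seconds:
                # Target is marked down: fail fast without touching it.
                raise ServiceDown("target marked down; not sending request")
            # Cooldown elapsed: let this request through as a probe.
        try:
            result = request()
        except Exception:
            self.failures += 1
            # A failed probe, or too many consecutive failures, marks the target down.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success: the target is up again, so reset the counters.
        self.failures = 0
        self.opened_at = None
        return result
```

An application would wrap each database call as `breaker.call(lambda: run_query(...))`, where `run_query` is whatever hypothetical function issues the query, and treat `ServiceDown` as "give up for now" rather than looping. The point of the design is that the decision to stop sending traffic is made in one place, so a struggling database gets quiet time to recover instead of a wall of retries.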

There are a lot of really interesting system design issues here. To what degree you want to handle these types of issues depends a lot on your SLAs, resources, and so on. But as systems grow in complexity there's a lot more infrastructure code that needs to be written to keep it all working together without killing each other. Much like a family :-)