Stuff The Internet Says On Scalability For April 30th, 2021

Hey, HighScalability is back!

This channel is the perfect blend of programming, hardware, engineering, and crazy. After watching, you’ll feel inadequate, but in an entertained sort of way.

Love this Stuff? I need your support on Patreon to keep this stuff going.

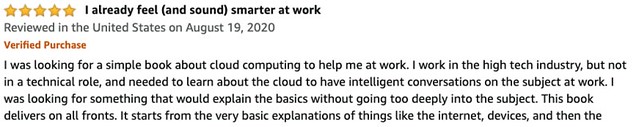

Do employees at your company need to know about the cloud? My book will teach them all they need to know: Explain the Cloud Like I'm 10. On Amazon it has 285 mostly 5-star reviews. Here's a 100% bipartisan review:

Number Stuff:

- $54bn: expected AWS revenue for 2021. Closing in on IBM. 200 million Prime subscribers.

- 932,884: number of earths that will really fit inside the sun.

- 77%: increase in the number of components in the average application. 84% of codebases have at least one vulnerability.

- 2 million: requests per second forwarded by HAProxy over HTTP on a single Arm-based AWS Graviton2 instance.

- 50+%: of Chipotle's sales were through digital orders in the first quarter. Online sales grew 133.9%. Chipotle attributed much of its growth to Chipotlanes, the fast-casual chain's version of drive-thrus.

- 25%: of visitors to Stack Overflow copy code. Over 40 million copies were seen in the two weeks' worth of activity analyzed. The majority of copies are coming from anonymous users.

- 1 trillion: DNS lookups by Comcast customers in 2020. Peak internet traffic rose 32% over pre-pandemic levels. In the span of 4 months in the wake of pandemic lockdowns, Comcast’s network experienced almost two years' worth of traffic growth.

- ~12,000: developers on GitHub who contributed to the open source software used in Ingenuity’s software.

- $430B: Apple investment in US manufacturing.

- €30 million: GDPR fines issued across EU countries in Q1 2021.

- 2x: likelihood that a 50-year-old founder will succeed compared to a 30-year-old. Among the very fastest-growing new tech companies, the average founder was 45 at the time of founding.

- $125B: record global venture funding in Q1. A 50 percent increase quarter over quarter and a whopping 94 percent increase year over year.

- 30,000: malfunctions caused by cosmic rays in Japan.

- $6.01 billion: YouTube advertising revenue for the quarter. Up from $4 billion a year ago, for a growth rate of 49%. On pace to match Netflix. So when you get an ad every 15 seconds, at least you know it’s going to a good cause.

- 98%: reduction in lithium battery costs over three decades.

- 78Mbps: average Starlink download speed for one user in Walnut Creek. Latency of 36ms, which is not bad at all. [Also Dishy (Starlink) Custom Mount on Standing Seam Metal Roof]

- 13%: expected semiconductor CapEx growth in 2021. Capital expenditures grew 9.2% in 2020 to US$112.1 billion, which is $14.1 billion higher than the spring 2020 forecast.

- 1,000: hours the Phoebus cartel declared incandescent lights should last. Planned obsolescence FTW.

- 4.5 billion: words generated per day by GPT-3

- 62%: streaming's share of global recorded music revenue, or $13.4 billion, an all-time high.

- 62.5%: of indie iOS developers make zip, nada, nothing. 30.3% make less than $10,000. 0.2% make more than $1 million.

- 5,200: metric tons of micrometeorites fall to Earth every year.

- ~75%: of calories remain in fecal matter.

- thirty-four billion billion: cortical spikes over your lifetime.

Quotable Stuff:

- Larry Page and Sergey Brin: we expect that advertising-funded search engines will be inherently biased towards the advertisers and away from the needs of the consumers...we believe the issue of advertising causes enough mixed incentives that it is crucial to have a competitive search engine that is transparent and in the academic realm

- Frank Pasquale (The New Laws of Robotics): Robotic systems and AI should complement professionals, not replace them. Robotic systems and AI should not counterfeit humanity. Robotic systems and AI should not intensify zero-sum arms races. Robotic systems and AI must always indicate the identity of their creator(s), controller(s), and owner(s).

- @Werner: We introduced a new component into the S3 metadata subsystem to understand if the cache’s view of an object’s metadata was stale. This component acts as a witness to writes, notified every time an object changes. This new component acts like a read barrier during read operations allowing the cache to learn if its view of an object is stale. The cached value can be served if it’s not stale, or invalidated and read from the persistence tier if it is stale.

- @Sommer: Them: “Oh you work at Apple. It must be awesome to get to code in Swift all the time." Me: [self hatesToBreakItToYouBut:NO];

- Google: Whenever you access your favorite storage services, the same three building blocks are working together to provide everything you need. Borg provisions the needed resources, Spanner stores all the metadata about access permissions and data location, and then Colossus manages, stores, and provides access to all your data.

- Professor Carl Hewitt: The reason that in practice that an Actor can be hundreds of times faster is that in order to carry out a concurrent computation, the parallel lambda expression must in parallel gather together the results of the computation step of each core and then in parallel redistribute the communicated messages from each core to other cores. The slowdown comes from the overhead of a lambda expression spanning out from a core to all the other cores, performing a step on each core, and then gathering and sorting the results of the steps

- @benedictevans: Memo to Apple: talking about how many apps are on your platform, and saying that people are free to switch to other platforms, will work precisely as well for you today as it did for Microsoft 20 years ago. That is to say - don’t bother.

- shishy: One thing I’ve run into with GraphQL in the past that was a pain was handling authorization when reading specific elements. Previously I used row level security policies in postgres to manage this, but it’s always felt tricky

- They Hacked McDonald’s Ice Cream Machines—and Started a Cold War: The secret menu reveals a business model that goes beyond a right-to-repair issue, O’Sullivan argues. It represents, as he describes it, nothing short of a milkshake shakedown: Sell franchisees a complicated and fragile machine. Prevent them from figuring out why it constantly breaks. Take a cut of the distributors’ profit from the repairs. “It’s a huge money maker to have a customer that’s purposefully, intentionally blind and unable to make very fundamental changes to their own equipment," O’Sullivan says.

- Robert K. Wittman: Insider thieves are everywhere: In Illinois, a shipping clerk arranged the theft of three Cézanne paintings from the Art Institute of Chicago, then threatened to kill the museum president’s child if his demands were not met. In Baltimore, a night watchman stole 145 pieces from the Walters Art Museum, taking the pieces one by one over eight months—each night, while making his rounds, he pried open a display case, pinched an Asian artifact or two, then rearranged the rest of the pieces so the display wouldn’t look suspicious. In Russia, a veteran curator in Saint Petersburg systematically looted the world-renowned Hermitage, removing more than $5 million worth of czarist treasures over fifteen years

- Data Center Frontier: This apparently didn’t deter Seth Aaron Pendley, a 28-year-old Texas resident, who was charged with plotting to blow up an Amazon Web Services facility in Ashburn, Virginia. Pendley was arrested after allegedly attempting to obtain an explosive device from an undercover FBI employee in Fort Worth, saying he planned to attack a data center and “kill off about 70 percent of the Internet." If convicted, Pendley faces up to 20 years in federal prison.

- n-gate: A webshit writes a Lovecraftian horror story about a simple web service sprouting tendrils from hellish dimensions unknowable to human experience. Hackernews argues about the proper selection of free hosting tiers amongst a diverse selection of webshit services to ensure availability approaching a ten-dollar virtual machine. Other Hackernews appreciate the dozens -- possibly hundreds -- of awful decisions that led to the concluding monstrosity in the article, and are grateful for the advice.

- @SwiftOnSecurity: So I came up with a really mean insult that's probably too devastating to actually use at work. "This is architected like a McDonald's ice cream machine."

- cpeterso: I was talking with a Netflix product manager a few years ago and, IIUC, he said Netflix re-encodes their entire catalog monthly to take advantage of new encoder optimizations. Disk is cheap. They want to optimize network transfers to both save money and improve user experience.

- systemvoltage: vertical scaling is severely underrated. You can do a lot and possibly everything ever for your company with vertical scaling. It would apply to 99% of the companies or even more.

- SatvikBeri: Several years ago, I was chatting with another engineer from a close competitor. He told me about how they'd set up a system to run hundreds of data processing jobs a day over a dozen machines, using docker, load balancing, a bunch of AWS stuff. I knew these jobs very well, they were basically identical for any company in the space. He then mentioned that he'd noticed that somehow my employer had been processing thousands of jobs, much faster than his, and asked how many machines we were using. I didn't have the heart to tell him we were running everything manually on my two-year-old macbook air.

- ok123456: A lot of modern tooling has come from people using microservices instead of making libraries. If you actually just write libraries it turns out you don't need to solve distributed systems problems, orchestration and configuration management as part of your normal development cycle.

- Canada: Internet service was down for about 900 customers in Tumbler Ridge, B.C., after a beaver chewed through a crucial fibre cable, causing "extensive" damage.

- GigaOm Research: The final three-year Total Cost of Ownership (TCO) finds the cost of serverless Cassandra to be $740,087, compared to a cost of $3,174,938 for a self-managed OSS Cassandra deployment.

- @benedictevans: When Apple has finished rolling out Apple Silicon, it will no longer sell any computer that quotes a MHz rating. End of an era. Now it segments and differentiates by form factor and experience, not feeds and speeds.

- GitHub: This idea has been used since at least the 1970s to speed up queries in relational databases. In Git, reachability bitmaps can provide dramatic speed-ups when walking objects that reside in the same pack: walking all of the objects in the Linux kernel repository took more than 33 seconds without bitmaps, but only 1.57 seconds to perform the same traversal with bitmaps.

- jillesvangurp: All of that failing 100% at the same time is simply not a thing. Not even close. It's not something grid operators plan for. It might dip by 20-30% but it might also peak by that much. And it's likely to average out over time in a very predictable way. All that means is that we need to have a little more capacity. 2x would be overkill. 1.2 to 1.3x plus some battery will probably do the trick. Batteries on the grid are intended for and used exclusively for absorbing short-term peaks and dips in both supply and demand. Short term as in hours/minutes; not days or weeks. They are very good at that. This is why modest amounts of lithium-ion batteries are being used successfully in various countries. These batteries can provide large amounts of power (MW/GW) for typically not more than a few hours. The reason that is cost effective (despite the cost of these batteries) is that taking e.g. gas peaker plants online for a few hours/minutes and then offline again is expensive and slow. And of course with cheaper wind and solar providing cheap power most of the time, gas plants are increasingly pushed in that role because they are more expensive per kWh to operate. Gas plants on standby still cost money. And turning them on costs more money. Batteries basically enable grids to have fewer (and eventually none) of those plants. These gravity-based batteries have the same role. It's a cheaper alternative to lithium-ion batteries.

- hpcjoe: should add to this comment, as it may give the impression that I'm anti cloud. I'm not. Quite pro-cloud for a number of things. The important point to understand in all of this is, is that there are crossover points in the economics for which one becomes better than the other. Part of the economics is the speed of standing up new bits (opportunity cost of not having those new bits instantly available). This flexibility and velocity is where cloud generally wins on design, for small projects (well below 10PB).

- ajcp: I work for one of the biggest supermarket chains in the US as part of the team implementing an invoice processing capability for the enterprise to utilize. We literally take in thousands of paper/non-digitized invoices a day, and in our testing have found Azure's Form Recognizer (AFR) to be very dependable and confidently accurate.

- Spotify: 2020 was the year for us to try something new. We decided to take a different approach to organizing our teams. Each team would become responsible for one piece of our Search stack — meaning one team would be responsible for getting data into Search, another team would be responsible for the quality of personalized Search results, another team would be responsible for our Search APIs, another team for insights, and finally, a team would be focused on our company bet, podcast Search. You might be wondering... In order to accomplish that, we created two groups inside our Search area — one focused on our personalized core Search experience, with Spotify end user satisfaction as the measure of success, and the other aiming to improve Spotify developer happiness, encouraging experimentation while maintaining the services SLOs. Below you can see what our Search organization looks like today.

- @jonty: In short: Right now NFT's are built on an absolute house of cards constructed by the people selling them. It is likely that _every_ NFT sold so far will be broken within a decade. Will that make them worthless? Hard to say

- As a flautist, if you could only be in the orchestra by playing a violin, would you join the orchestra? Question for software engineers: Would you leave your job because you didn't like the tech stack? For some reason it doesn’t seem like the same thing. Programmers tend to be generalists rather than specialists.

- Anne Larson: Our [Corellian Software, Inc.] cloud provider was slow and blocking certain ports, creating latency and email issues and hindering our product from performing to its full potential. And despite the cloud’s reputation for affordability, to get the performance and features we needed, the cost became unreasonable. After years as a cloud-first company, we realized the public cloud was no longer for us

- jrockway: The biggest problem I found with promotions is that people wanted one because they thought they were doing their current job well. That isn't promotion, that's calibration, and doing well in calibration certainly opens up good raise / bonus options. Promotion is something different -- it's interviewing for a brand new job, by proving you're already doing that job. Whether or not that's fair is debatable, but the model does make a lot of sense to me.

- Michael I. Jordan: “I think that we’ve allowed the term engineering to become diminished in the intellectual sphere," he says. The term science is used instead of engineering when people wish to refer to visionary research. Phrases such as just engineering don’t help. “I think that it’s important to recall that for all of the wonderful things science has done for the human species, it really is engineering—civil, electrical, chemical, and other engineering fields—that has most directly and profoundly increased human happiness."

- @RMac18: A big takeaway from this is the idea that these contracted content moderators do not have a regular way of communicating with people who craft policy. The people seeing the shit can't talk about the shit they're seeing to the people who make decisions. That's a disconnect.

- Jorge Luis Borges: I think that when we write about the fantastic, we're trying to get away from time and to write about everlasting things. I mean we do our best to be in eternity, though we may not quite succeed in our attempt.

- ztbwl: Our blockchain based AI platform makes it possible to save you time & material by using the cloud for your enterprise process. By applying machine learning to your augmented reality workflow you are able to cut onpremise costs by more than half. The intelligent cryptographic algorithm makes sure all your teammates collaborate through our quantum computing pipeline. The military grade encrypted vertical integration into the tokenized world leverages extended reality to hold down the distributed cloud latency for your success. With our 5G investment we make sure to have enough monitoring-as-a-service resource pools ready for you. The Git-Dev-Sec-Ops model ensures maximum throughput and ROI on your serverless lambda cyber edge computing clusters. Our business intelligence uses hyperautomation to deploy chatbots into the kubernetes mesh to support your customers with great UX. Opt in now for more information on our agile training range and lock in future profits on our NFT‘s. Don’t miss out: 3 billion IoT devices run our solution already.

- Adrian Tchaikovsky: evolution had gifted them [octopus] with a profoundly complex toolkit for taking the world apart to see if there was a crab hiding under it.

- @QuinnyPig: Let me help you spread the message, @azure ! These workloads are up to 5x more expensive on @awscloud because you play shithead games with your licensing and customers are being strong-armed. Don’t pretend it’s some underlying economic Azure feature; net new on MSSQL is horrid.

- @jackclarkSF: Facebook is deploying multi-trillion parameter recommendation models into production, and these models are approaching computational intensity of powerful models like BERT. Wrote about research here in Import AI 245. The significant thing here: recommendation models are far more societally impactful than models that get more discussion (e.g., GPT3, BERT). Recommender models drive huge behavioral changes across FB's billions of users. Papers like this contain symptoms of advancing complexity.

- hn_throwaway_99: We use it [node.js] for a mission-critical backend in TypeScript. After 20 years (was previously primarily a Java programmer for the first part of my career) I feel I have finally hit environment "nirvana" with having our front-end in React/TypeScript, backend in Node/TypeScript with API in Apollo GraphQL, DB is Postgres. Having the same language across our entire stack has huge, enormous, gargantuan benefits that shouldn't be underestimated, especially for a small team. Being able to easily move between backend and frontend code bases has had a gigantic positive impact on team productivity. Couple that with auto-generating client and server-side typescript files from our GraphQL API schema definition has made our dev process pretty awesome.

- Stuff Made Here: There’s a finite amount of ways to fail so eventually you’ll get it. I optimized it so hard it didn't compute anything. I could make a much worse one for FREE, I just need thousands of dollars in equipment.

- kqr: it's also worth mentioning that even in a system where technically tail latencies aren't a big problem, psychologically they are. If you visit a site 20 times and just one of those is slow, you're likely to associate it mentally with "slow site" rather than "fast site".

- mmaroti: This is blatantly false. People are using formal verification tools in hardware design, and they do work and they do compose. Here is a simple verilog code which accomplishes some of what he wants.

- seemaze: Often overlooked, the core tenet of engineering is public safety. This is why professional engineers are required to obtain a license, register in their practicing jurisdiction, and are held liable if their work fails to achieve a prescribed consensus of acceptability. Imagine if software was held to the same level of scrutiny..? Software is carpentered, some is designed, and little is actually engineered. Engineers don't fail forward, early, fast, or often. They don't move fast and break things. Not engineering software is great, because if you had to pull permits to build your software, the web would still look like 1996 and my phone would have a keypad.

- jmull: The problem is, publishing an API is making a promise: you're promising that it's safe to build on the API because it will be maintained into the future and will avoid breaking compatibility. These promises can be expensive to maintain, restrict changes and if you break the promise, it's worse than if you had not made the promise in the first place. Imagine Peloton did open their API and some third-party did create Apple Watch support. Every time they do a software update or release a new machine, they may break something in the third-party integration, which leaves the third-party to scramble to fix the issue. To mitigate this, Peloton would have to communicate changes and provide beta releases -- that is, start and run a developer relations program. This is not small potatoes. Opening an API that will actually be useful is a big, on-going deal.

- gehen88: We use Lambda@Edge to build our own CDN on top of S3 with authentication, so our customers have reliable, fast and secure data access. We use a bunch of edge lambdas which serve thousands of requests each minute, so I suspect we'll see a nice cost reduction with this. Some of the stuff we do: - rewrite urls - auth and permissions check - serve index.html on directory indexes - inject dynamic content in served html files - add CORS headers. We use S3.getObject to fetch it and create a custom response.

- dormando: I've yet to see anyone who really needs anything higher than a million RPS. The extra idle threads and general scalability help keep the latency really low, so they're still useful even if you aren't maxing out rps.

- wakatime: My one-person SaaS architecture with over 250k users: * Flask + Flask-Login + Flask-SQLAlchemy [1] * uWSGI app servers [2] * Nginx web servers [3] * Dramatiq/Celery with RabbitMQ for background tasks * Combination of Postgres, S3, and DigitalOcean Spaces for storing customer data [4] * SSDB (disk-based Redis) for caching, global locks, rate limiting, queues and counters used in application logic, etc [5]. I spend about $4,000/mo on infra costs. S3 is $400/mo, Mailgun $600/mo, and DigitalOcean is $3,000/mo. Our scale/server load might be different, but I'm still interested in what the costs would be with your setup.

- Theo Schlossnagle: We've witnessed more than exponential growth in the volume and breadth of that data. In fact, by our estimate, we've seen an increase by a factor of about 10^12 over the past decade. Obviously, compute platforms haven't kept pace with that. Nor have storage costs dropped by a factor of 10^12. Which is to say the rate of data growth we've experienced has been way out of line with the economic realities of what it takes to store and analyze all that data. So, the leading reason we decided to create our own database had to do with simple economics. Basically, in the end, you can work around any problem but money. It seemed that, by restricting the problem space, we could have a cheaper, faster solution that would end up being more maintainable over time.

- @swardley: I know one canny CIO used AWS as a backup for a new TV service. When the data centres failed on the day of launch they switched onto the backup service which has been running it ever since. The board was delighted. PS. The data centres failed because the CIO never built them.

- ChuckMcM: I found the news of Intel releasing this chip quite encouraging. If they have enough capacity on their 10nm node to put it into production then they have tamed many of the problems that were holding them back. My hope is that Gelsinger's renewed attention to engineering excellence will allow the folks who know how to iron out a process to work more freely than they did under the previous leadership.

- ytene: Just in case anyone comes along and reads the above and concludes that Oracle purchased Sun Microsystems in order to get the rights to JAVA...The reason that Oracle bought Sun was because at the time, Sun Microsystems were basically bankrupt. They had lost their hardware edge and companies were abandoning the Solaris platform as though it had suddenly become toxic. Unfortunately for Oracle, the combination of "Oracle on Solaris" was *the* configuration to run Oracle's RDBMS for 95% of commercial customers, so the death of Solaris and Sun hardware would have been a crippling - though not fatal - blow to Oracle Corp. To give you an idea of just how heavily these two products were integrated, on Solaris you didn't format partitions for Oracle, you left them raw, and the database managed partitioning and formatting of drives to its own design, optimized for performance.

- Doug Morton: manufacturing processes are kept within the constraints of making sense to the people overseeing the design and operation, even though the thing that we really care about in manufacturing is efficient and robust output. If we could liberate ourselves from the constraints of having to make sense to someone, we could then unlock the potential which lies in the spaces between things, the gaps in time, and the natural variations.

- @databasescaling: OH: "Mars has a higher proportion of Linux devices with working audio than any other known planet in the universe."

- Mads Hartmann: But perhaps the most valuable thing we’ve gotten out of this journey is a shift in perspective. Now that we have deeper visibility into our production systems, we’re much more confident carrying out experiments. We’ve started releasing smaller changes, guarded by feature flags, and observing how our systems behave. This has reduced the blast radius of code changes: We can shut down experiments before they develop into full-fledged incidents. We think these practices, and the mindset shift they’ve prompted, will benefit our systems and our users—and we hope our learnings will benefit you, too.

- Veritasium: It wasn't people walking in sync that got the bridge to wobble; it was the wobbling bridge that got people to walk in sync.

- @WiringTheBrain: Life is not a state or a property; it's a #process, like a storm, or flame, or tornado. Where a living system differs is in the boundary that makes it into an entity that persists over time.

- bkanber: I posted that pledge to the site a year ago. Total donations: $80. There are a couple of hundred people who use the site for hours per day and post on the forums that they love it, but haven't donated a fiver. Asking for donations doesn't work.

- @clemmihai: My heart goes out to all backend engineers. Frontend engineers get praised for beautiful UIs. Product managers get credited for awesome products. Backend engineers get no recognition, except when a service goes down for 5 minutes—then they're called garbage engineers.

- @alexbdebrie: 6/ With DynamoDB, you have two choices for requests that don't have the partition key: - Add a secondary index - Use a Scan (not recommended). This fits what I call DynamoDB's guiding principle: "Do not allow operations that won't scale." 8/ MongoDB's guiding philosophy is a little different. I described it as: "Make it easy* for developers." It could also be "Sure, why not?"

- @matthlerner: PayPal’s B2B revenue is quite concentrated: ~90% of revenue comes from ~10% of their merchants. So we focused on revenue churn rather than account churn. That narrowed the problem considerably! - maybe a couple hundred merchants per year.

- @rashiq: it's incredible to me that a $5 DigitalOcean box can serve traffic for 200k unique visitors and yet there's startups out there who deploy their landing pages with kubectl

- Geoff Huston: There is a massive level of inertial mass in TCP these days, and proposals to alter TCP by adding new options or changing the interpretation of existing signals will always encounter resistance. It is unsurprising that BBR stayed away from changing the TCP headers and why QUIC pulled the end-to-end transport protocol into the application. Both approaches avoided dealing with this inertial stasis of TCP in today’s Internet. It may be a depressing observation, but it’s entirely possible that this proposal to change ECN in TCP may take a further 5 or more years and a further 14 or more revisions before the IETF is ready to publish this specification as a Standards Track RFC.

- Tim Bray: Why is Rust faster? Let’s start by looking at the simplest possible case, scanning the whole log to figure out which URL was retrieved the most. The required argument is the same on both sides: -f 7. Here is output from typical runs of the current Topfew and Dirkjan’s Rust code. Go: 11.01s user 2.18s system 668% cpu 1.973 total. Rust: 10.85s user 1.42s system 1143% cpu 1.073 total. The two things that stick out are that Rust is getting better concurrency and using less system time. This Mac has eight two-thread cores, so neither implementation is maxing it out...It’s complicated, but I think the message is that Go is putting quite a bit of work into memory management and garbage collection.

- Gareth Corfield: Agile methodology has not succeeded in speeding up deliveries of onboard software for the F-35 fighter jet, a US government watchdog has warned in a new report.

- @swardley: X : We're adopting a cloud first policy. Me : Good on you. X : Just good? Me : You don't have a choice - punctuated equilibrium. You are being forced to adopt a cloud first policy, it's not a choice, it's a survival mechanism. Choice ended around 2012, you're waking up to this. X : We think we can make a difference in the container space. Me : Ah, so your grand plan is to wake up late, go to the fight almost a decade after it's over and say "we're ready to rumble" ... sounds like a reenactment society. Do you get dressed up in period costumes? X : What do you suggest? Me : Serverless is where the action is. X : Build a serverless environment? Me : No, that battle is almost over, you'll just get crushed by the big guns. There are places you can attack though. Time limited.

- Andrew Haining: The thing that really dooms cloud gaming however is thermodynamics, the cutting edge of datacentre research is all about how to keep the servers storing the data cool, cooling datacentres is the vast majority of the cost of running them.

- Dwayne Lafleur: Both sites are measurably worse on their AMP versions. The amount of data transferred is higher, the script execution is worse, and the page takes longer to load. So much for the promise of speed and a better user experience

- I thought it would be taller. Internet Archive storage: 750 servers, some up to 9 years old. 1,300 VMs. 30K storage devices. More than 20K spinning disks (in paired storage), a mix of 4, 8, 12, and 16TB drives, with about 40% of the bytes on 16TB drives. Almost 200PB of raw storage. The archive is growing more than 25% per year, adding 10-12PB of raw storage per quarter. With 16TB drives it would need 15 racks to hold a copy; it is currently running ~75 racks. Currently serving about 55GB/s, planning for ~80GB/s soon.

- Lewis-Jones: That’s what true explorers do, change the way we see and understand the world.

- John Mark Bishop: In this paper, foregrounding what in 1949 Gilbert Ryle termed a category mistake, I will offer an alternative explanation for AI errors: it is not so much that AI machinery cannot grasp causality, but that AI machinery – qua computation – cannot understand anything at all.

- Eldad A. Fux: Moving From Nginx+FPM to Swoole Has Increased Our PHP API Performance by 91%

- daxfohl: With 5G that last mile between the metro hub and the user is only 5ms. This is critical for things like factory automation, autopilot, etc. Deploy your workload to AWS Wavelength or Azure Edge Zone 5G, and stick a 5G card in your factory tools or drones or whatever, and you're done. So IMO cloud providers having presence in all the big telco hubs is the future of "edge". Or at least a future.

- @PierB: Firebase allows you to go from 0 to 10 very easily, but makes going from 10 to 100 pretty much impossible. Fauna is a real database that will go with you 100% of the way. From small indie projects to full blown enterprise. So, my biggest problem with Firebase is that querying is pretty much useless for anything serious. It's essentially a KV database for JSONs with very limited filtering capabilities.

- @mp3michael: Google is a huge money loser for 96.5% of its click buying customers. There are very few Google customers generating a profit by buying clicks on Google. When the avg click costs about $1.50 few businesses have big ticket items with big enough profit margin to generate a profit.

- @varcharr: Today I heard one of the worst engineering takes to exist. “Documentation shouldn’t be necessary if the engineers are competent in what they’re doing." No. Documentation is for future you, for new teammates, and for anyone who can’t memorize everything they ever do.

- Julian Barbour: Complexity doesn’t just give time its direction – it literally is time

- m12k: IMO the Burst compiler that can "jobify" and multi-thread your code relatively painlessly is the most impressive piece of tech Unity has produced (disclosure: used to work there, though this was made after my time there). It's not that hard to fit into its constraints, and it often provides 2x-200x speedups. It's still mind-boggling to me that it's possible to get (a constrained subset of) C# to beat hand-optimized C++.

- @jthomerson: One advantage of EventBridge is subscribing to events based on attributes of the event, which is easier than with SNS/SQS. downstream services can easily filter to just the events they need

- Slack: We are now within sight of our final goal of standardization on Envoy Proxy for both our ingress load balancers and our service mesh data plane, which will significantly reduce cognitive load and operational complexity for the team, as well as making Envoy’s advanced features available throughout our load balancing infrastructure. Since migrating to Envoy, we’ve exceeded our previous peak load significantly with no issues.

- @JoeEmison: The real low/no-code benefit is (a) allowing business leads to run the software that runs their divisions (e.g., SaaS!), and (b) outsourcing stuff to managed services (AWS! Twilio!). Not trying to get software development done through a crappy high-level language by BAs.

- Brent Ozar~ “We’re just letting people run queries and light money on fire." 2021-2030: Welcome to Sprawl 2.0. Data lives everywhere, in redundant copies, none of which speak to each other, and all of which cost us by the byte, by the month. Performance sucks everywhere, and every query costs us by the byte of data read.

- Josh Barratt: So, in a broad sense, these results are a big +1 for the serverless stack. To be able to get typical round trip times of around 100ms for working with a stateful backend with this little effort and operational overhead? Yes please. However, if we’re talking about latency-sensitive gaming backends, it’s not as good. You really really wouldn’t want occasional 1 second freezes in the middle of an intense battle. The long request times are avoidable, in part, by using Provisioned Concurrency, which specifically is built to prevent this. It keeps lambdas ready to go to handle your requests at whatever concurrency you desire – an interesting hybrid serverless and servery.

- Small Datum: The truthier claim is that with an LSM there will be many streams of IO (read & write) that benefit from large IO requests. The reads and writes done by compaction are sequential per file, but there is much concurrency and the storage device will see many concurrent streams of IO.

- Steve Blank: RYAN amplified the paranoia the Soviet leadership already had. The assumptions and beliefs of people who create the software shape the outcomes. Using data to model an adversary’s potential actions is limited by your ability to model its leadership’s intent. Your planning and world view are almost guaranteed not to be the same as those of your adversary. Having an overwhelming military advantage may force an adversary into a corner. They may act in ways that seem irrational.

- Mikael Ronstrom: What we derive from those numbers is that a Thread Pipeline and a Batch Pipeline have equal efficiency. However, the Thread Pipeline provides a lot of benefits. For receive threads it means that the application doesn’t have to find the thread where data resides. This simplifies the NDB API greatly. I have made experiments where the NDB API actually had this possibility and where the transaction processing and data owning part was colocated in the same thread. This had a small improvement of latency at low loads, but at higher loads the thread pipeline was superior in both throughput and latency and thus this idea was discarded.

- Sean M. Carroll: Thinking of the world as represented by simply a vector in Hilbert space, evolving unitarily according to the Schrödinger equation governed by a Hamiltonian specified only by its energy eigenvalues, seems at first hopelessly far away from the warm, welcoming, richly-structured ontology we are used to thinking about in physics. But recognizing that the latter is plausibly a higher-level emergent description, and contemplating the possibility that the more fundamental vocabulary is the one straightforwardly suggested by our simplest construal of the rules of quantum theory, leads to a reconstruction program that appears remarkably plausible. By taking the prospect of emergence seriously, and acknowledging that our fondness for attributing metaphysical fundamentality to the spatial arena is more a matter of convenience and convention than one of principle, it is possible to see how the basic ingredients of the world might be boiled down to a list of energy eigenvalues and the components of a vector in Hilbert space.

- @retailgeek: I attended a Williams-Sonoma meeting when Howard Lester told me "no one wants to buy table-top online". Today W-S is a digital first retailer with 70% of their revenue online

Useful Stuff:

- We tend to think of programming as only involving code. I define programming as taking steps to bring about a desired result. Here’s an example of what might be called passive programming. This Unstoppable Robot Could Save Your Life.

- A soft robot finds the path through a human trachea by its design. There’s no processor or code; the solution is found in the design, the nature of the materials, and its operation.

- Another example: Xenobots: have no nerve cells and no brains. Yet xenobots — each about half a millimeter wide — can swim through very thin tubes and traverse curvy mazes.

- Also Why My Slime Mold Is Better Than Your Hadoop Cluster.

- jodrellblank: What do you define as "a computation"? If water runs down a hill following a windy path to the lowest point, is there any computation happening as it "chooses" its way? If you throw a ball up, is there any computation as it "decides" when to turn around and fall down? If a mirror reflects light rays, is it computing anything as it does so? When a fire burns, is there computation in its flames? When you drop string and it falls into a tangle, is it computing how to land? It seems to me that you could model some factors of those processes in a computer, but they are not in themselves computations. Do you define computation so broadly as to be "anything matter and energy does"?

- This is how Uber implements feature flags, allowlists/denylists, experiments, geographical or time based targeting, incremental rollout, networking configuration, circuit breaking, and emergency operational control. I love the very specific use case examples, you usually don’t get that sort of thing. Flipr: Making Changes Quickly and Safely at Scale:

- Flipr manages over 350K active properties, with approximately 150K changes per week. This configuration data is used by over 700 services at Uber across 50K+ hosts, generating around 3 million QPS for our backend systems.

- In Flipr we refer to these key and value pairs as properties. Flipr properties can have multiple values per key (i.e., a multimap instead of a map), depending on the runtime context. Example API call: client.get("property_key", context) => value. Context is stuffed with the data attributes necessary to figure out the configuration value.

- Example use cases: “Feature #456 is enabled" “The character size limit of the ‘name’ textbox is 256 characters" “The URL of the map service API is http://mapservice:8080/v2" “Enable this feature #456 for all members of the ‘admin’ group who are currently making a request from New York" “Lockdown the property API for requests, with a ‘temporarily unavailable’ error message, between 4pm-5pm on Wednesday"

- Flipr is just a service, with a UI and a client library for users. Customers predominantly interact via the UI. Flipr supports an extensive API so that other services can use Flipr programmatically. This API is exposed using the standard Uber software networking stack.

- Scaling out to so many servers could put a lot of pressure on the backend service, but because most use cases are read-only, we can use a fan-out cache of gateway services that keep up-to-date copies cached, so the backend doesn’t get directly hit with traffic.

- The replication to gateways is asynchronous, and so making changes and reading via the client is eventually consistent. In practice the eventually-consistent model with a fan-out cache has proven to be scalable, reliable and performant, even for fleet-wide config at Uber scale.

- Flipr is also moving to a new distribution system that is based on a subscription model to achieve this same scale, but with a more efficient use of resources. I think subscription is a better model because an application can react to changes. Then you can plug these events into state machines, which can really improve program structure.

- Peer reviews are required for most Flipr changes and this is enforced via standardized code review tools and custom UI in Flipr. There are access control and permissions systems to ensure that only authorized users can view and update config. Rollouts are performed incrementally across physical dimensions, so that engineers can make changes gradually and safely, rather than applying changes globally, which reduces the blast radius of any unintended consequences.
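Here is a minimal, hypothetical sketch of the context-dependent lookup described above, in the spirit of client.get("property_key", context). It is not Uber's implementation. The names (`Rule`, `PropertyStore`, `in_rollout`), the first-match-wins rule order, and the hash-based percentage bucketing used for incremental rollout are all assumptions chosen for illustration.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]


@dataclass
class Rule:
    # Predicate over the request context; the value applies when it returns True.
    matches: Callable[[Context], bool]
    value: Any


@dataclass
class PropertyStore:
    # Hypothetical multimap: one key -> an ordered list of candidate values, plus a default.
    rules: Dict[str, List[Rule]] = field(default_factory=dict)
    defaults: Dict[str, Any] = field(default_factory=dict)

    def get(self, key: str, context: Context) -> Any:
        # First matching rule wins; otherwise fall back to the default (None if unset).
        for rule in self.rules.get(key, []):
            if rule.matches(context):
                return rule.value
        return self.defaults.get(key)


def in_rollout(key: str, user_id: str, percent: int) -> bool:
    # Stable bucketing: a given user always lands in the same bucket (0-99) for a key.
    digest = hashlib.sha256(f"{key}:{user_id}".encode()).hexdigest()
    return int(digest, 16) % 100 < percent


store = PropertyStore()
store.defaults["feature_456_enabled"] = False
store.rules["feature_456_enabled"] = [
    # "Enable feature #456 for admins who are making a request from New York"
    Rule(lambda c: c.get("group") == "admin" and c.get("city") == "New York", True),
    # Incremental rollout to 10% of everyone else
    Rule(lambda c: in_rollout("feature_456_enabled", c.get("user_id", ""), 10), True),
]
```

Hashing the key together with the user ID keeps each user's bucket stable across requests. As a result, raising the percentage only adds users to a rollout and never flips existing ones back.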

- Rust for real-time application - no dependencies allowed:

- RecoAI can process up to 100,000 events per second, which is 2 orders of magnitude faster than Python. Rust is much faster than Python (100-200x in some cases). All data is stored in memory in pure Rust data structures.

- Memory safety and minimal generalization give us a comfort that could not be achieved with Python.

- No dependencies on external services (yes, not even a database). All the state is handled by in-memory data structures: HashMap, Vector, HashSet, etc. You might think that this is a bad idea, but if the system is real-time and must respond in milliseconds to provide accurate recommendations, it is a price worth paying.

- No REST API. The only way to communicate with the application is through predefined events. Because of this we can recreate the state of the application at any point, which is important because it is a Machine Learning application and the ability to go back in time is very important for tasks like this. In our system, there are about 30 types of events you can send to the system, and every event type has a predefined schema. Generating JSON schema from Rust structs is very straightforward, which made it possible to create an SDK for our system with almost no additional work.

- No cloud (maybe apart from BigQuery). The application is just a single binary. Everything is a library. The only cloud service we rely on is BigQuery and Datastudio for reporting.

- Why did we switch to Rust? Lack of static types, performance, and the lack of a good multithreaded model. Rust is not perfect. Some places in our code seem very verbose, especially pattern matching dispatchers. Compilation times are also pretty bad: it takes around 1 minute to compile our project for testing.

- Also Scalability! But at what COST? [Configuration that Outperforms a Single Thread] - Contrary to the common wisdom that effective scaling is evidence of solid systems building, any system can scale arbitrarily well with a sufficient lack of care in its implementation
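RecoAI's ability to "recreate the state of the application at any point" is event sourcing. The sketch below illustrates the idea in Python to match the other examples here; it is not RecoAI's Rust code. The event types (`view`, `purchase`) and the shape of `RecoState` are hypothetical. All state lives in in-memory maps, and replaying the event log up to a cutoff timestamp rebuilds the state as it was at that moment.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from typing import Dict, Iterable, Optional, Set


@dataclass(frozen=True)
class Event:
    ts: int     # event timestamp, e.g. seconds since epoch
    kind: str   # hypothetical event types: "view" or "purchase"
    user: str
    item: str


@dataclass
class RecoState:
    # All state is plain in-memory structures; no external database.
    views: Dict[str, Counter] = field(default_factory=lambda: defaultdict(Counter))
    purchases: Dict[str, Set[str]] = field(default_factory=lambda: defaultdict(set))

    def apply(self, e: Event) -> None:
        # Each event type has a fixed effect on state (its "schema" is the Event fields).
        if e.kind == "view":
            self.views[e.user][e.item] += 1
        elif e.kind == "purchase":
            self.purchases[e.user].add(e.item)
        else:
            raise ValueError(f"unknown event type: {e.kind}")


def replay(log: Iterable[Event], until: Optional[int] = None) -> RecoState:
    # Rebuild state from scratch by applying events in timestamp order,
    # optionally stopping at a cutoff to "go back in time".
    state = RecoState()
    for e in sorted(log, key=lambda e: e.ts):
        if until is not None and e.ts > until:
            break
        state.apply(e)
    return state
```

Because the event log is the only input, the same replay serves both recovery after a restart and point-in-time reconstruction for training or debugging a model.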

- Under the competition is good category there’s Announcing Cloudflare Workers Unbound for General Availability.

- While I used the previous version in production, I have not used the Unbound product yet. The biggest change is the CPU limit of up to 30 seconds, which opens it up to a vast number of use cases that couldn’t fit under the previous 50ms limit. In the forums there was quite a lot of confusion over whether serving images violates the terms of service, because, as you might imagine, this would make a good image serving platform, especially now that egress pricing was lowered to $0.045 per GB, though that’s not as good as free. It seems as if image serving is within the ToS, but video is not. The lack of full Node.js support makes development more difficult. Zero millisecond cold-start time is a big win, but the forums are full of questions because the environment is not “standard." Because it’s Cloudflare, you don’t pay for DDoS attacks. A major limitation was the lack of WebSocket support, but they have that now: Introducing WebSockets Support in Cloudflare Workers. When you add in Durable Objects support you get a solid foundation to build stuff on. The idea of a long-lasting Actor object at the edge that can terminate WebSockets is appealing.

- You can read more about durable objects in Durable Objects in Production. For a description of why serverless + edge is synergistic goodness you might like Why Serverless will enable the Edge Computing Revolution.

- cosmie: I think much of Cloudflare's capabilities are underappreciated and underestimated...It fixed the analytics stuff that was my headache, and made me happy. It fixed the constant breakage on other stuff, which made the devs happy. It left the legacy infrastructure in place, which made enterprise IT happy. It provided a legitimate business justification to introduce Cloudflare in a minor and targeted manner to this stodgy environment, which made the enterprise networking team I had to work with excited and collaborative. It was minimally invasive and cheap, making the entire process legitimately feasible vs. a dead in the water pipedream. It solved all kinds of "unsolvable" problems that had plagued that site for years, which made the marketing client with power over our agency contract happy.

- Also Vercel Serverless Functions vs Cloudflare Workers. Also also Edge Computing.

- Do not multiply services unnecessarily. Disasters I've seen in a microservices world:

- Disaster #1: too small services.

- Disaster #2: development environments.

- Disaster #3: end-to-end tests.

- Disaster #4: huge, shared database.

- Disaster #5: API gateways.

- Disaster #6: Timeouts, retries, and resilience.

- microservices are a good solution for organizational problems. However, the problems come when we think about failures as "edge cases" or things that we think will never happen to us. These edge cases become the new normal at a certain scale, and we should cope with them.

- How do you fix these problems? Countering microservice disasters:

- each new service is clearly owned by one team

- One of the main reasons why we have a) encouraging of copy-pasting data b) no sharing of persistence c) strong bias towards asynchronous communication in the Principles is the fact those services can typically be spun up on their own.

- We tend to avoid anything that resembles a transaction spanning multiple services, requiring an E2E test.

- This is outright banned in one of our principles — persistence shall not be shared.

- We do operate a Kong API Gateway exactly for the reasons mentioned — simplify and ensure consistent authentication, rate limiting, and logging.

- Also Fail at Scale
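Disaster #6 and the Fail at Scale link both come down to disciplined retries. The sketch below shows the common pattern: a bounded number of attempts, capped exponential backoff with full jitter, and an overall deadline so retries never outlive the caller's timeout. `call_with_retries` and its defaults are hypothetical, and the sketch assumes the wrapped operation is idempotent.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(
    op: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.1,   # upper bound on the first backoff, in seconds
    max_delay: float = 2.0,    # cap on any single backoff
    deadline: float = 5.0,     # total time budget for all attempts, in seconds
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call op, retrying on any exception with capped exponential backoff and full jitter.

    Assumes op is idempotent, i.e. safe to run more than once.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng if rng is not None else random.Random()
    start = time.monotonic()
    for attempt in range(max_attempts):
        try:
            return op()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Full jitter: a uniform delay up to the exponential cap, so clients
            # that failed together don't all retry in lockstep.
            delay = rng.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            if time.monotonic() - start + delay >= deadline:
                raise  # waiting would blow the caller's time budget
            sleep(delay)
    raise AssertionError("unreachable")
```

Injecting `sleep` and `rng` keeps the function testable. Retrying at only one layer of the call stack matters as much as the backoff itself. If each of three layers makes up to 4 attempts, the bottom service can see 4 × 4 × 4 = 64 calls for a single user request.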

- It takes a village to manage AWS costs. Our [Airbnb] Journey Towards Cloud Efficiency:

- In the nine months that ended on Sept. 30, Airbnb saw a $63.5 million year-over-year decrease in hosting costs, which contributed to a 26% decline in Airbnb’s cost of revenue.

- AWS announced their Savings Plan in late 2019. We have realized its benefits and now have most of our compute resources covered under this arrangement. We monitor our Savings Plan utilization regularly to minimize On Demand charges and maximize usage of purchased Savings Plan.

- Today we have a set of prepared responses which move certain workloads on and off Savings Plan to keep utilization healthy. We enhanced the capability of our Continuous Integration environment to leverage spot instances. With a small configuration change, we can easily dial up our use of spot instances if we observe On Demand charges. When we are under-utilizing our Savings Plan, we move over EBS in our data warehouse to EC2 to ensure we stay close to maximum utilization of our savings plan.

- We purchase a 3 year convertible savings plan to give us flexibility to migrate to new instance types. For us, this flexibility offsets the potential savings from instance specific savings plan purchases. In addition to Savings Plan for our compute capacity, we leverage Reserved Instances for RDS & ElastiCache.

- With tagging and attribution maturing, we were able to identify our highest areas of spend. Amazon S3 Storage costs have historically been one of our top areas of spend, and by implementing data retention policies, leveraging more cost effective storage tiers, and cleaning up unused warehouse storage, we have brought our monthly S3 costs down considerably.

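- So while Glacier is only 10% the cost of Standard storage class, the total cost can be higher than simply storing the data in Standard. With lots of small objects, per-object charges are the usual culprit. Each lifecycle transition into Glacier is billed as a request, and each archived object carries about 40 KB of billable metadata overhead (8 KB at Standard rates, 32 KB at Glacier rates).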

- As part of this modernization we are moving to Kubernetes. During our effort to eliminate waste, we found a number of large services not using horizontal-pod-autoscaler (HPA), and services that were using HPA, but in a largely sub-optimal way such that it never effectively scaled the services (high minReplicas or low maxReplicas).

- we have a small group of people with broad subject matter expertise who meet weekly to review the entire cost footprint.

- Our approach to consumption attribution was to give teams the necessary information to make appropriate tradeoffs between cost and other business drivers to maintain their spend within a certain growth threshold. With visibility into cost drivers, we incentivize engineers to identify architectural design changes to reduce costs, and also identify potential cost headwinds.

- Much of our success to date has been around executing on efficiency projects, and reducing the response time on cost incidents. This is reactionary, though, and we need to move toward more proactive management of our costs.
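The Kubernetes documentation gives the HPA scaling rule:

desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric)

The result is clamped to [minReplicas, maxReplicas]. No change is made when the ratio is within a tolerance, which is 0.1 by default. That rule makes the HPA misconfiguration Airbnb describes easy to see. The sketch below ignores stabilization windows, pod readiness, and multiple metrics, and the example numbers are hypothetical.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,   # e.g. average CPU utilization across pods, in percent
    target_metric: float,    # the HPA target for that metric
    min_replicas: int,
    max_replicas: int,
    tolerance: float = 0.1,  # default HPA tolerance: ignore small deviations
) -> int:
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: don't scale
    else:
        desired = math.ceil(current_replicas * ratio)
    # The clamp is where a high minReplicas or low maxReplicas neuters autoscaling.
    return max(min_replicas, min(max_replicas, desired))
```

Two cases show the problem. With minReplicas set to 50, a service idling at a third of its CPU target would want 17 pods but is pinned at 50. With maxReplicas at 4, a service running at 1.5x its target wants 6 pods but stays at 4.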

- Triage is an old strategy for deciding where to spend scarce resources. Facebook uses ML to decide how much effort to put into video encoding based on a video's projected popularity. How Facebook encodes your videos: A video consumes computing resources only the first time it is encoded. Once it has been encoded, the stored encoding can be delivered as many times as requested without requiring additional compute resources. A relatively small percentage (roughly one-third) of all videos on Facebook generate the majority of overall watch time. Facebook’s data centers have limited amounts of energy to power compute resources. We get the most bang for our buck, so to speak, in terms of maximizing everyone’s video experience within the available power constraints, by applying more compute-intensive “recipes" and advanced codecs to videos that are watched the most. Doing this has shifted a large portion of watch time to advanced encodings, resulting in less buffering without requiring additional computing resources.
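The sketch below illustrates the triage idea; it is not Facebook's model. It assumes a popularity model gives each video a predicted watch time and that every video first gets a cheap encoding. The remaining fixed compute budget is then spent upgrading the most-watched videos to an expensive advanced recipe. `Video`, `plan_encodings`, and the cost figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Video:
    video_id: str
    predicted_watch_hours: float  # hypothetical output of a popularity model


def plan_encodings(
    videos: List[Video],
    compute_budget: float,
    basic_cost: float = 1.0,      # hypothetical compute units for a cheap encoding
    advanced_cost: float = 10.0,  # hypothetical compute units for an advanced codec recipe
) -> Dict[str, str]:
    # Every video gets the cheap encoding so it can be played at all.
    budget = compute_budget - basic_cost * len(videos)
    if budget < 0:
        raise ValueError("budget does not cover basic encodings")
    plan = {v.video_id: "basic" for v in videos}
    upgrade_cost = advanced_cost - basic_cost
    # The benefit of an upgrade scales with how much the video is watched,
    # so spend the remaining compute on the most-watched videos first.
    for v in sorted(videos, key=lambda v: v.predicted_watch_hours, reverse=True):
        if budget < upgrade_cost:
            break
        plan[v.video_id] = "advanced"
        budget -= upgrade_cost
    return plan
```

Because encoding is a one-time cost and delivery is repeated, the compute spent on a heavily watched video is amortized over every view. That is why ranking by predicted watch time pays off.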

- You aren’t anyone these days if you’re not building your own custom microprocessors. YouTube is now building its own video-transcoding chips. Amazon created an ARM server Graviton chip. Apple’s M1. And now Google says the system on a chip is the new motherboard. Also Foundry Wars Begin.

- Ask HN: How would you store 10PB of data for your startup today? Excellent discussion of the usual buy vs build tradeoffs. It’s a bit disappointing cloud pricing still makes this a difficult decision.

- skynet-9000: At that kind of scale, S3 makes zero sense. You should definitely be rolling your own. 10PB costs more than $210,000 per month at S3, or more than $12M after five years.

- maestroia: There are four hidden costs which not many have touched upon. 1) Staff: You'll need at least one, maybe two, to build, operate, and maintain any self-hosted solution. A quick peek on Glassdoor and Salary show the unloaded salary for a Storage Engineer runs $92,000-130,000 US. Multiply by 1.25-1.4 for the loaded cost of an employee (things like FICA, insurance, laptop, facilities, etc). Storage Administrators run lower, but still around $70K US unloaded. Point is, you'll be paying around $100K+/year per storage staff position...2) Facilities (HVAC, electrical, floor loading, etc)...3) Disaster Recovery/Business Continuity...4) Cost of money: With rolling-your-own, you're going to be doing CAPEX and OPEX.

- cmeacham98: I've run the math on this for 1PB of similar data (all pictures), and for us it was about 1.5-2 orders of magnitude cheaper over the span of 10 years (our guess for depreciation on the hardware). Note that we were getting significantly cheaper bandwidth than S3 and similar providers, which made up over half of our savings.

- throwaway823882: I run cloud infra for a living. Have been managing infrastructure for 20 years. I would never for one second consider building my own hosting for a start-up. It would be like a grocery delivery company starting their own farm because seeds are cheap.

- amacneil: At that level of data you should be negotiating with the 3 largest cloud providers, and going with whoever gives you the best deal. You can negotiate the storage costs and also egress.
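skynet-9000's figures hold up under an assumption about pricing. The sketch below applies tiered S3 Standard storage prices, assumed here to be the 2021 us-east-1 list prices: $0.023/GB-month for the first 50 TB, $0.022 for the next 450 TB, and $0.021 beyond that. Check current pricing before relying on these numbers. The sketch treats 10 PB as 10,000,000 GB and covers storage only, not requests or egress.

```python
# Assumed S3 Standard storage tiers: (size of tier in GB, USD per GB-month).
# Prices are an assumption (2021 us-east-1 list prices); check current pricing.
TIERS = [(50_000, 0.023), (450_000, 0.022), (float("inf"), 0.021)]


def s3_monthly_cost(gb: float) -> float:
    """Monthly storage cost for `gb` gigabytes under tiered pricing."""
    cost, remaining = 0.0, gb
    for tier_size, price in TIERS:
        chunk = min(remaining, tier_size)  # bytes billed at this tier's rate
        cost += chunk * price
        remaining -= chunk
        if remaining <= 0:
            break
    return cost


monthly = s3_monthly_cost(10_000_000)  # 10 PB expressed in (decimal) GB
five_years = monthly * 60
```

That works out to about $210,550 per month and about $12.6M over 60 months, matching the numbers quoted. For comparison, maestroia's staffing estimate of $92,000-130,000 per engineer, multiplied by 1.25-1.4, gives a loaded cost of roughly $115,000-182,000 per person per year.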

- Lots and lots of great detail. There's so much you need to build and run a system, but it really all can be done by one person skilled in the dark arts. The Architecture Behind A One-Person Tech Startup.

- Fast forward six months, a couple of iterations, and even though my current setup is still a Django monolith, I'm now using Postgres as the app DB, ClickHouse for analytics data, and Redis for caching. I also use Celery for scheduled tasks, and a custom event queue for buffering writes. I run most of these things on a managed Kubernetes cluster (EKS). It may sound complicated, but it's practically an old-school monolithic architecture running on Kubernetes.

- Also MY INFRASTRUCTURE AS OF 2020

- This is a long and detailed interview about how hard it is to use ML to control robots in “real life". I’ve extracted just one interesting section of the talk, but you’ll probably enjoy the whole thing. How Forza's Racing AI Uses Neural Networks To Evolve | War Stories | Ars Technica~ On our original Xbox and the Xbox 360, our Drivatar system was local to the hard drive, and on Forza Motorsport 5 we switched that to being on servers. So these were Thunderhead servers for Xbox Live. This was a pretty cool change and we had a whole new set of problems. We had Drivatar pretty well tamed on the local box because it wasn't being infected by other people's data. It was really about local Drivatars we trained and your own Drivatar, which dramatically reduced the number of variables coming into the user's experience. When we went to Thunderhead, all of a sudden it was a massive amount of data, which is awesome. It's great for training a neural net; data is your friend when you're training learning networks. However, I feel like we've had a tiger by the tail ever since. Your friends are coming into your game and their behaviors are showing up in your game. So technologically it was really cool. And from a user experience it's had a couple of sparklers that are incredibly great user experiences, but it's also had some irritants that we've had to look at. How do we shackle and how do we tame and how do we change the user experience?...All of a sudden we had millions of data points for it to classify. So the AI got very fast and it got very weird in spots and it did things we didn't expect. And we had to start clamping that. We didn't want it to do this behavior. The problem is it's a bit of a black box. You don't know why it's doing the behavior. That's what AI is. You feed in data and it feeds out what it wants to do. It doesn't tell you why. It's like a two year old. You have to take the output and then you can put a restrictor on it and have it do less of that or modify its output in some fashion. So the data was a huge boon. We started learning about how players drive. We started getting laps we didn't expect. Things got fast in ways we didn't expect; they got slow in ways we didn't expect. And weird inaccuracies showed up in our system that we really didn't expect.

- DBS Bank: Scalable Serverless Compute Grid on AWS:

- Migrated from their own servers to the cloud and saved 80%.

- Duplicated Lambda functions for high, medium, and low priority SQS queues so they could manage provisioned concurrency separately, which improved performance.

- Use EFS over S3 because they have very large financial models. Mounting EFS saves cold start time.

- Use DynamoDB to enforce idempotency on function execution. Everything is put into Elasticsearch, which is used with Grafana for end-to-end monitoring of task computation.

- They now execute 3-4k Lambda invocations per minute. With increases in Lambda’s capabilities they want to try microbatching, so one Lambda function can execute multiple calculations in parallel. They have a goal of executing 40 million calculations per day.
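The summary doesn't say how DBS uses DynamoDB for idempotency. The usual approach is a conditional write: a PutItem with a ConditionExpression such as `attribute_not_exists(task_id)` fails with ConditionalCheckFailedException if the task ID was already recorded. The sketch below is a minimal version of that claim-then-run pattern. It uses an in-memory stand-in for the table (the hypothetical `IdempotencyStore`) so it runs without AWS. A real version would also put a TTL on claims so a crashed worker cannot block a task forever.

```python
import threading
from typing import Any, Callable, Dict, Optional


class AlreadyClaimed(Exception):
    """Raised when a task_id has already been recorded (a duplicate delivery)."""


class IdempotencyStore:
    """In-memory stand-in for a DynamoDB table keyed by task_id (hypothetical)."""

    def __init__(self) -> None:
        self._items: Dict[str, Dict[str, Any]] = {}
        self._lock = threading.Lock()

    def put_if_absent(self, task_id: str, item: Dict[str, Any]) -> None:
        # Atomic check-and-set, like a conditional write with attribute_not_exists.
        with self._lock:
            if task_id in self._items:
                raise AlreadyClaimed(task_id)
            self._items[task_id] = dict(item)

    def update(self, task_id: str, **attrs: Any) -> None:
        with self._lock:
            self._items[task_id].update(attrs)

    def delete(self, task_id: str) -> None:
        with self._lock:
            self._items.pop(task_id, None)

    def get(self, task_id: str) -> Optional[Dict[str, Any]]:
        with self._lock:
            item = self._items.get(task_id)
            return dict(item) if item is not None else None


def run_once(store: IdempotencyStore, task_id: str, compute: Callable[[], Any]) -> Any:
    """Run compute at most once per task_id, even if the message is delivered twice."""
    try:
        store.put_if_absent(task_id, {"status": "IN_PROGRESS"})
    except AlreadyClaimed:
        # Duplicate delivery: return the stored result (None if still in progress).
        item = store.get(task_id)
        return item.get("result") if item else None
    try:
        result = compute()
    except Exception:
        store.delete(task_id)  # release the claim so a retry can run the task
        raise
    store.update(task_id, status="DONE", result=result)
    return result
```

SQS delivers messages at least once, so the same calculation request can reach two Lambda invocations. The conditional claim is what turns that into exactly-once execution of the expensive computation.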

- Note: This benchmark was generated by Upstash. Latency Comparison Among Serverless Databases: DynamoDB vs FaunaDB vs Upstash.

- Results: DynamoDB: 76 ms | FaunaDB: 1692 ms | Upstash: 13 ms

- And of course there are always complaints about any sort of benchmark. On HackerNews.

- Logica: organizing your data queries, making them universally reusable and fun (GitHub): We [Google] present Logica, a novel open source Logic Programming language. A successor to Yedalog (a language developed at Google earlier) it is a Datalog-like logic programming language. Logica code compiles to SQL and runs on Google BigQuery (with experimental support for PostgreSQL and SQLite), but it is much more concise and supports the clean and reusable abstraction mechanisms that SQL lacks. It supports modules and imports, it can be used from an interactive Python notebook and it even makes testing your queries natural and easy.

- The problem with the on-demand async world is the witheringly cold penalty of startup costs. You fix that by warming things up. Usually warmth follows the laws of thermodynamics, which always has a cost, but I don’t see the cost mentioned here. Scaling your applications faster with EC2 Auto Scaling Warm Pools: This is a new feature that reduces scale-out latency by maintaining a pool of pre-initialized instances ready to be placed into service. EC2 Auto Scaling Warm Pools works by launching a configured number of EC2 instances in the background, allowing any lengthy application initialization processes to run as necessary, and then stopping those instances until they are needed (customers can also opt to configure Warm Pool instances to be kept in a running state to reduce further scale-out latency). When a scale-out event occurs, EC2 Auto Scaling uses the pre-initialized instances from the Warm Pool rather than launching cold instances.
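The toy model below (not the EC2 Auto Scaling API) shows why a warm pool cuts scale-out latency. Instances that were launched and initialized ahead of time are resumed first. Only when the pool runs dry does a scale-out pay the full boot-plus-initialization cost. The timings are hypothetical.

As for the cost the quote wonders about: stopped warm-pool instances don't accrue instance-hour charges, but their attached EBS volumes are still billed. Instances kept in a running state are billed like any other running instance.

```python
from collections import deque
from typing import Deque, List, Tuple

# Hypothetical timings in seconds; real values depend on AMI, instance type and app.
BOOT_SECONDS = 60.0      # launch a fresh instance and boot the OS
INIT_SECONDS = 240.0     # lengthy application initialization
RESUME_SECONDS = 20.0    # start a stopped, already-initialized instance


class WarmPool:
    """Toy model of scale-out with a warm pool (not the EC2 Auto Scaling API)."""

    def __init__(self, size: int) -> None:
        # These instances were launched and initialized ahead of time, then stopped.
        self.stopped: Deque[str] = deque(f"warm-{i}" for i in range(size))
        self.cold_launches = 0

    def scale_out(self, n: int) -> List[Tuple[str, float]]:
        """Return (instance name, seconds until in service) for n new instances."""
        placed = []
        for _ in range(n):
            if self.stopped:
                # Pre-initialized: only the resume time is on the critical path.
                placed.append((self.stopped.popleft(), RESUME_SECONDS))
            else:
                # Pool exhausted: fall back to a cold launch plus full initialization.
                self.cold_launches += 1
                placed.append((f"cold-{self.cold_launches}", BOOT_SECONDS + INIT_SECONDS))
        return placed
```

Sizing the pool is the trade-off. Each extra warm instance costs storage (or full running cost) all the time, in exchange for removing the initialization time from the next burst.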

- Connection limits aren’t just for servers. Balancing act: the current limits of AWS network load balancers: AWS’s advice was to attempt to support no more than 400K simultaneous connections on a single NLB. This is a dramatically more significant constraint than the target group limit; if each server instance is handling 10K connections, that effectively limits each NLB to supporting 40 fully-loaded server instances. This has impact on cost, certainly, but also imposes a significant elasticity constraint. Absorbing 1 million unexpected connections on demand is no longer possible if sufficient NLB provisions aren’t made ahead of time. Even below the 400K connection threshold we would still occasionally see situations where some 20% of the connections spontaneously dropped.
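The small sketch below spells out the arithmetic behind the NLB constraint. Using the quoted guidance of 400K connections per NLB, it computes how many 10K-connection servers fit behind one NLB. It also computes how many NLBs must be provisioned ahead of time to absorb a given number of connections. The `target_utilization` headroom parameter is a hypothetical addition.

```python
import math

NLB_CONNECTION_GUIDANCE = 400_000  # AWS's advice per NLB, as quoted above


def servers_per_nlb(conns_per_server: int, per_nlb: int = NLB_CONNECTION_GUIDANCE) -> int:
    # How many fully loaded servers one NLB can front.
    return per_nlb // conns_per_server


def nlbs_needed(total_conns: int, per_nlb: int = NLB_CONNECTION_GUIDANCE,
                target_utilization: float = 1.0) -> int:
    # NLBs to provision ahead of time; target_utilization < 1.0 leaves headroom.
    return math.ceil(total_conns / (per_nlb * target_utilization))
```

At 100% utilization, absorbing 1 million connections takes 3 NLBs. Leaving 20% headroom, given the spontaneous drops reported even below the threshold, takes 4.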

- Events have been around forever, but ease of use is a capability of its own. MatHem.se Scales Grocery Delivery App with Amazon EventBridge:

- By moving to a serverless architecture, MatHem.se was able to speed up new feature releases by 5-10 times.

- In March 2020, MatHem.se saw a huge surge in customer demand almost overnight. The number of concurrent users trying to place a grocery order increased by 800% day-over-day. Since the MatHem.se team had previously taken on a project to rewrite their entire application stack to be entirely serverless and event-driven, they were able to handle this increase in load without needing to make any significant changes.

- They decided to give customers the option to reserve a delivery time slot before adding groceries to the cart. Are you listening vaccine shot schedulers?

- MatHem.se adopted a DynamoDB-to-EventBridge fan-out pattern with AWS Lambda in between. Whenever a DynamoDB item changes, DynamoDB Streams delivers the change to a Lambda function, which forwards it to EventBridge. This gives MatHem.se a simple way to react to and process those events by triggering consumers such as Lambda and AWS Step Functions.

- The team had used AWS SNS/AWS SQS for their event processing needs before turning to EventBridge. While the SNS to SQS publish/subscribe pattern has its benefits, in MatHem.se’s case, it led to a tight coupling between services and teams. A service subscribing to events from the publisher needed to know exactly which SNS topic the event was published to, and relied on documentation to understand the schema.

- Also Making Strides Toward Serverless
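The sketch below shows the Lambda in the middle of the DynamoDB-to-EventBridge fan-out. It assumes boto3's EventBridge client, whose `put_events` accepts at most 10 entries per call. The `Source` and `DetailType` naming and the bus name are hypothetical, not MatHem.se's. Stream images are forwarded as-is, in DynamoDB's typed JSON format. The client is injectable, so the transformation can be tested without AWS.

```python
import json
from typing import Any, Dict, List, Optional

EVENT_BUS = "default"        # hypothetical: the account's default event bus
SOURCE = "mathem.items"      # hypothetical event source name
MAX_ENTRIES_PER_CALL = 10    # PutEvents accepts at most 10 entries per request


def to_entry(record: Dict[str, Any]) -> Dict[str, Any]:
    """Turn one DynamoDB Streams record into an EventBridge PutEvents entry."""
    change = record["dynamodb"]
    detail = {
        "keys": change.get("Keys"),
        "newImage": change.get("NewImage"),  # absent for REMOVE
        "oldImage": change.get("OldImage"),  # absent for INSERT
    }
    return {
        "Source": SOURCE,
        # INSERT / MODIFY / REMOVE -> ItemInsert / ItemModify / ItemRemove
        "DetailType": "Item" + record["eventName"].title(),
        "Detail": json.dumps(detail),
        "EventBusName": EVENT_BUS,
    }


def handler(event: Dict[str, Any], context: Any, client: Optional[Any] = None) -> Dict[str, int]:
    if client is None:
        import boto3  # only needed when running for real inside Lambda
        client = boto3.client("events")
    entries: List[Dict[str, Any]] = [to_entry(r) for r in event.get("Records", [])]
    failed = 0
    for i in range(0, len(entries), MAX_ENTRIES_PER_CALL):
        response = client.put_events(Entries=entries[i:i + MAX_ENTRIES_PER_CALL])
        failed += response.get("FailedEntryCount", 0)
    if failed:
        # Fail the invocation so Lambda retries the stream batch.
        raise RuntimeError(f"{failed} events were not accepted by EventBridge")
    return {"forwarded": len(entries)}
```

Raising on failed entries makes Lambda retry the whole stream batch, so entries that did succeed may be delivered twice; consumers should be idempotent. Consumers subscribe with EventBridge rules that match on `source`, `detail-type`, or fields inside `detail`. That attribute-based filtering is what removes the need to know which SNS topic an event went to.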

- Interesting use of technology in a fitness studio. Orangetheory Fitness: Taking a Data-Driven Approach to Improving Health and Wellness. They have a million members across the globe. The usual boxes connect Cognito, Lambda, Aurora, S3, EC2, DynamoDB, etc. The interesting bit is you have a number of geographically distributed studios, each with users, sensors, and fitness equipment. Where do you store the data? Where do you put the processing? Where do you put the networking? Users in each studio wear heart rate monitors that connect over a local network. Each piece of fitness equipment has a specially designed tablet. Both collect data on a customer that is first stored locally. The local server acts as a point of resiliency, so a studio can function if connectivity to the backend is lost. A studio can complete classes all on its own, without backend connectivity. A mobile app lets users book classes, see results from previous workouts, and perform out-of-studio workouts with a heart rate monitor. This data is collected and is also sent to the backend. The local server connects to the backend via API Gateway and authenticates with Cognito. Users also authenticate using Cognito. Second-by-second telemetry is sent to Kinesis Firehose and then into a data lake. Serverless allowed them to scale up and down. There’s a big screen in the studio with each user’s stats (heart rate, calories burned, percent of maximum heart rate, splat points) while they exercise. That’s all calculated locally in real time and displayed to the user and the coach. They can also tell users how they are improving over time by looking at historical stats.
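A studio that keeps running classes offline is a store-and-forward design. The sketch below models the local server's side. Telemetry is always accepted into a bounded local queue. A flush then forwards it in order and in batches, stopping at the first failed send so nothing is lost while the backend is unreachable. `StudioBuffer` and `send_batch` are illustrative names. The real system sends to API Gateway and Kinesis Firehose, which this sketch does not model.

```python
from collections import deque
from itertools import islice
from typing import Any, Callable, Deque, Dict, List

Reading = Dict[str, Any]


class StudioBuffer:
    """Hypothetical store-and-forward buffer for a studio's local server."""

    def __init__(self, send_batch: Callable[[List[Reading]], bool],
                 max_batch: int = 500, max_buffer: int = 100_000) -> None:
        self.send_batch = send_batch  # returns True if the backend accepted the batch
        self.max_batch = max_batch
        # Bounded: during a very long outage the oldest readings are dropped first.
        self.queue: Deque[Reading] = deque(maxlen=max_buffer)

    def record(self, reading: Reading) -> None:
        # Always succeeds locally, so classes keep running without the backend.
        self.queue.append(reading)

    def flush(self) -> int:
        """Forward buffered readings in order; stop at the first failed send."""
        sent = 0
        while self.queue:
            batch = list(islice(self.queue, self.max_batch))
            if not self.send_batch(batch):
                break  # backend unreachable: keep everything and retry later
            for _ in batch:
                self.queue.popleft()
            sent += len(batch)
        return sent
```

Real-time displays such as the in-studio screen read from local data, so they never wait on the flush. Only the historical view depends on the backend having caught up.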

- Keenan Wyrobek discusses how Zipline's AI-drones are reshaping Africa. Fascinating story of how Zipline, which delivers medical supplies in Africa using drones, got off the ground. It’s not what you might expect. It’s not even really an AI-first company. They first found customers and worked with them to design a product that fit their needs exactly. They didn’t buy an off-the-shelf quadcopter and stuff it with ML. They created their own airplane-style drone and a clever aircraft-carrier-style catapult and capture system for takeoff and landing. The goal was to make the drone as reliable as possible while being capable of flying in rain and bad weather, something drones usually can’t do. The idea was to move complexity and cost out of the drone and into offline training and the equipment at each location. A lot of hard work and a lot of smart decisions. The most exciting prediction: expect flying cars in the next 20 years.

- What fruit flies could teach scientists about brain imaging. As programmers we are used to working with dumb stuff. Dumb data is stored and manipulated by smart stuff residing elsewhere. That’s not how life does it. Cells are the building blocks of biology and cells are smart. This experiment directly measures how individual neurons use energy inside intact brains using new sensors that measure ATP levels. When you increase the activity level of neurons wouldn’t you expect ATP levels to go down? Work requires energy. That’s not what happens. The cell is smarter than that. The cell increases energy production. It’s guessing how much energy it will need in the future, so it increases its energy production to meet expected future energy needs. Brains are not computers, they are implemented by cells. We’ve taken dumb stuff programming as far as it will go. We need to program with smarter stuff.

- How [Lenskart] saved over 30% on our Firebase expenses!:

- Change backup schedules to store 1/30th the data.

- Set up an LRU cache that stored only the 80 most recently used widgets. This reduced the load from 300 reads/s to 30 reads/s, a 10x improvement.

- Use a single function to service all the requests, and route APIs internally using a routing framework. We rearchitected the entire codebase of over 120 APIs into 8 logically grouped functions à la microservices.
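A cache that keeps only the N most recently used entries is easy to sketch. The following is a minimal LRU cache built on `OrderedDict`, assuming a hypothetical `loader` callable that stands in for the Firebase read. It illustrates the technique and is not Lenskart's code.

```python
from collections import OrderedDict
from typing import Callable, Hashable


class LRUCache:
    """Keeps only the `capacity` most recently used entries in memory."""

    def __init__(self, capacity: int, loader: Callable[[Hashable], object]) -> None:
        self.capacity = capacity
        self.loader = loader      # hypothetical stand-in for a Firebase read
        self.backend_reads = 0    # counts cache misses that hit the backend
        self._data: "OrderedDict[Hashable, object]" = OrderedDict()

    def get(self, key: Hashable) -> object:
        if key in self._data:
            self._data.move_to_end(key)      # mark as most recently used
            return self._data[key]
        value = self.loader(key)             # miss: read from the backend once
        self.backend_reads += 1
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)   # evict the least recently used entry
        return value


if __name__ == "__main__":
    widgets = LRUCache(80, loader=lambda widget_id: {"id": widget_id})
    for _ in range(10):                  # 10 rounds of requests...
        for widget_id in range(50):      # ...over 50 hot widgets
            widgets.get(widget_id)
    print(widgets.backend_reads)         # 50 backend reads for 500 requests
```

When the values are plain function results, Python's built-in `functools.lru_cache(maxsize=80)` does the same job with less code.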

- Amazing how often traffic shifts can ruin your day. RCA- Intermittent 503 errors accessing Azure Portal (Tracking ID HNS6-1SZ): Root Cause: The Azure portal frontend resources in UK South were taken out of rotation for maintenance the previous day, at 2021-04-19 19:08 UTC. For operational reasons related to an issue with that maintenance, the region was left out of rotation for longer than anticipated. This shifted traffic from UK South to UK West. This scenario was within acceptable operational limits, as the volume of Azure Portal traffic for that part of the world was declining at the end of the working day there. The next day, the increase in traffic caused our instances in UK West to automatically scale up, and they soon reached the maximum allowed number of instances and stopped scaling up further. The running instances became overloaded, causing high CPU and disk activity, to a point where the instances became unable to process requests and began returning HTTP 503 errors.

- Atlas: Our [Dropbox] journey from a Python monolith to a managed platform:

- serve more than 700M registered users in every time zone on the planet who generate at least 300,000 requests per second.

- We found that product functionality at Dropbox could be divided into two broad categories: large, complex systems, like all the logic around sharing a file; and small, self-contained functionality, like the homepage.

- Small, self-contained functionality doesn’t need independently operated services.

- Large components should continue being their own services.

- Atlas is a hybrid approach. It provides the user interface and experience of a “serverless” system like AWS Fargate to Dropbox product developers, while being backed by automatically provisioned services behind the scenes.

- In our view, developers don’t care about the distinction between monoliths and services, and simply want the lowest-overhead way to deliver end value to customers.

- 422: Sell a Third Box. Interesting tidbit: Overcast spends ~$5000 a month on servers.

- You want to save time by reusing cached data structures? Just say no. Nothing good ever comes of it. How we found and fixed a rare race condition in our session handling: In summary, if an exception occurred at just the right time and if concurrent request processing happened in just the right sequence across multiple requests, we ended up replacing the session in one response with a session from an earlier response. Returning the incorrect cookie only happened for the session cookie header and as we noticed before, the rest of the response body, such as the HTML, was all still based on the user who was previously authenticated. This behavior lined up with what we saw in our request logs and we were able to clearly identify all of the pieces that made up the root cause of this race condition.

- 5 lessons I’ve learned after 2 billion lambda executions: Don’t expect things in order. Idempotency is instrumental. Control your executions. Monitoring and alerting is key. CI/CD rules apply.
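"Don't expect things in order" and "idempotency is instrumental" go together: if events can arrive late or twice, the handler must tolerate duplicates. Here is a minimal sketch of deduplicating by a unique event ID. The `id` field and the in-memory set are assumptions; a real Lambda would need a durable store (for example a conditional write to a database), because memory does not survive between invocations.

```python
from typing import Callable, Dict, Set


class IdempotentHandler:
    """Processes each event at most once per handler instance, keyed by event ID."""

    def __init__(self, process: Callable[[Dict], None]) -> None:
        self._process = process
        # Assumption: an in-memory set for the sketch; production code needs durable storage.
        self._seen: Set[str] = set()

    def handle(self, event: Dict) -> bool:
        """Return True if the event was processed, False if it was a duplicate."""
        event_id = event["id"]          # assumes every event carries a unique "id"
        if event_id in self._seen:
            return False                # duplicate delivery: skip the side effect
        self._process(event)
        self._seen.add(event_id)        # recorded only after success, so failures get retried
        return True
```

Recording the ID after processing means a crash between the two steps leads to a retry, so this gives at-least-once processing with deduplication rather than exactly-once. The sketch is also not thread-safe.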

- A good example of multi-cloud: use the strengths of different clouds to save you money. Using a disk-based Redis clone to reduce AWS S3 bill:

- WakaTime hosts programming dashboards on DigitalOcean servers. That’s because DigitalOcean servers come with dedicated attached SSDs, and overall give you much more compute bang for the buck than EC2. DigitalOcean’s S3 compatible object storage service (Spaces) is very inexpensive, but we couldn’t use DigitalOcean Spaces for our primary database because it’s much slower than S3.

- Data storage in S3 is cheap; it’s the transfer and operations that get expensive. Also, S3 comes with built-in replication and reliability, so we want to keep using S3 as our database.

- To reduce costs and improve performance, we tried caching S3 reads with Redis. However, with the cache size limited by RAM it barely made a dent in our reads from S3. The amount of RAM we would need to cache multiple terabytes of S3 data would easily cost more than our total AWS bill.

- Using SSDB to cache S3 has significantly reduced the outbound data transfer portion of our AWS bill! 🎉 With performance comparable to Redis and using the disk to bypass Redis’s RAM limitation, SSDB is a powerful tool for lean startups.
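The pattern here is a read-through cache whose capacity is limited by disk rather than RAM. Here is a minimal sketch using Python's standard `shelve` module as the disk-backed store. This swaps in `shelve` for SSDB purely for illustration, and `fetch` is a hypothetical callable standing in for an S3 GET.

```python
import shelve
from typing import Callable


class DiskReadThroughCache:
    """Read-through cache persisted on local disk, in front of a pay-per-request store."""

    def __init__(self, path: str, fetch: Callable[[str], bytes]) -> None:
        self._db = shelve.open(path)   # disk-backed dict: bounded by disk size, not RAM
        self._fetch = fetch            # hypothetical stand-in for an S3 GET
        self.origin_reads = 0          # how many times we paid for an origin read

    def get(self, key: str) -> bytes:
        if key in self._db:
            return self._db[key]       # hit: served from local disk, no S3 transfer
        value = self._fetch(key)       # miss: one read from the origin
        self.origin_reads += 1
        self._db[key] = value          # persist so later reads (and restarts) hit disk
        return value

    def close(self) -> None:
        self._db.close()
```

Because the cache lives on disk, it survives restarts and can hold far more than a RAM-bound Redis instance, which is the trade-off the WakaTime post describes.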

- The great Adrian Cockcroft talks about Petalith architectures. Take a monolith, break it into individual pieces that are independently deployable microservices, and run it all on one of these new giant machines. This lets the microservices share memory, which avoids the serialization/deserialization costs of going over the wire. You get the agility of microservices with the performance of a monolith. How big are these new machines? Big. Machines now have a terabyte of RAM. Even GPUs have 40GB of memory. GPUs can have 7,000 cores. Internal memory bandwidth is 1.2 terabytes per second. So on a GPU you could do a full scan of 40GB with 7,000 cores at a ludicrous rate. You can have a cluster of 8 GPUs together for 320 GB, connected over a 600 gigaBYTE-per-second link. And you can connect 4,000 of these in an EC2 cluster. What problems can you solve that you couldn't solve before? You can, for example, deploy all your containers on one machine so they can talk to each other at memory speed. Having used shared memory in the past, I think it has some problems compared to a distributed, unshared model, because you get into all sorts of locking, deadlock, and memory corruption issues.
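To put "ludicrous rate" into numbers, here is a back-of-the-envelope estimate from the figures quoted above. It assumes the full 1.2 TB/s peak bandwidth is achievable (real scans will see less) and uses decimal units (1 TB = 1,000 GB):

```latex
t_{\text{scan}} \approx \frac{40\ \text{GB}}{1.2\ \text{TB/s}}
  = \frac{40\ \text{GB}}{1200\ \text{GB/s}} \approx 0.033\ \text{s} \approx 33\ \text{ms},
\qquad
8 \times 40\ \text{GB} = 320\ \text{GB}
```

So one GPU could, in principle, scan its entire memory about 30 times per second.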

Soft Stuff:

- How we scaled the GitHub API with a sharded, replicated rate limiter in Redis. They even give you the code.
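For readers who want the shape of the idea before reading GitHub's code, here is a minimal fixed-window rate limiter sketch. It is not GitHub's implementation. Plain dicts stand in for Redis instances, each key is routed to a shard by hashing it (CRC32 is an assumption), and per-window counters mimic `INCR` on a key that would carry an `EXPIRE` in Redis. Replication is left out.

```python
import time
import zlib
from typing import Callable, Dict, List, Tuple


class ShardedFixedWindowLimiter:
    """Fixed-window limiter; each key's counter lives on the shard its hash picks."""

    def __init__(self, shards: int, limit: int, window_s: int,
                 clock: Callable[[], float] = time.time) -> None:
        self.limit = limit
        self.window_s = window_s
        self.clock = clock
        # Each shard maps (key, window_start) -> request count (dicts stand in for Redis).
        self.shards: List[Dict[Tuple[str, int], int]] = [{} for _ in range(shards)]

    def _shard_for(self, key: str) -> Dict[Tuple[str, int], int]:
        return self.shards[zlib.crc32(key.encode()) % len(self.shards)]

    def allow(self, key: str) -> bool:
        now = int(self.clock())
        window_start = now - now % self.window_s
        shard = self._shard_for(key)
        # Drop counters from past windows (Redis would expire them automatically).
        for old in [k for k in shard if k[1] < window_start]:
            del shard[old]
        count = shard.get((key, window_start), 0) + 1   # like INCR
        shard[(key, window_start)] = count
        return count <= self.limit
```

Routing by key hash means all requests for one client hit the same shard, so its counter never has to be coordinated across shards.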

- Stateful Functions 3.0.0: Remote Functions Front and Center: The Apache Flink community is happy to announce the release of Stateful Functions (StateFun) 3.0.0! Stateful Functions is a cross-platform stack for building Stateful Serverless applications, making it radically simpler to develop scalable, consistent, and elastic distributed applications.

- circonus-labs/libmtev: a toolkit for building high-performance servers. Several of libmtev's shipped subsystems including the eventer, logging, clustering, network listeners and the module system rely on the configuration system.

- Goblins: a transactional, distributed actor model environment: a quasi-functional distributed object system, mostly following the actor model. Its design allows for object-capability security, allowing for safe distributed programming environments. Also CapTP - a protocol to allow "distributed object programming over mutually suspicious networks, meant to be combined with an object capability style of programming".

- aws-samples/serverless-patterns: This repo contains serverless patterns showing how to integrate services using infrastructure-as-code (IaC). You can use these patterns to help develop your own projects quickly.

Pub Stuff:

- Warehouse-scale video acceleration: co-design and deployment in the wild: This paper describes the design and deployment, at scale, of a new accelerator targeted at warehouse-scale video transcoding. We [Google] present our hardware design including a new accelerator building block – the video coding unit (VCU) – and discuss key design trade-offs for balanced systems at data center scale and co-designing accelerators with large-scale distributed software systems. We evaluate these accelerators “in the wild” serving live data center jobs, demonstrating 20-33x improved efficiency over our prior well-tuned non-accelerated baseline.

- SWEBOK 3.0, Guide to the Software Engineering Body of Knowledge: describes generally accepted knowledge about software engineering. Its 15 knowledge areas (KAs) summarize basic concepts and include a reference list pointing to more detailed information.

- Building the algorithm commons: Who Discovered the algorithms that underpin computing in the modern enterprise?: Analyzing this “Algorithm Commons” reveals that the United States has been the largest contributor to algorithm progress, with universities and large private labs (e.g., IBM) leading the way, but that U.S. leadership has faded in recent decades.

- Building Distributed Systems With Stateright: Stateright is a model checker for distributed systems. It is provided as a Rust library, and it allows you to verify systems implemented in Rust.

- The Future of Low-Latency Memory: We compare DDR against HBM (High Bandwidth Memory) and a newer standard called OMI, the Open Memory Interface, that is used by IBM’s POWER processors. DDR suffers from loading issues that limit its speed. HBM provides significantly greater speed, but has capacity limitations. OMI provides near-HBM speeds while supporting larger capacities than DDR.

- Unikraft: Fast, Specialized Unikernels the Easy Way: Our evaluation using off-the-shelf applications such as nginx, SQLite, and Redis shows that running them on Unikraft results in a 1.7x-2.7x performance improvement compared to Linux guests. In addition, Unikraft images for these apps are around 1MB, require less than 10MB of RAM to run, and boot in around 1ms on top of the VMM time (total boot time 3ms-40ms). Unikraft is a Linux Foundation open source project.

- How can Highly Concurrent Network-Bound Applications benefit from modern multi-core CPUs?: The evaluated results demonstrate that the shared-nothing database Redis Cluster delivers the best results for throughput of all test applications. Generally, the tests indicate that the less data is shared in-between processes and the less overhead the algorithms for parallel processing introduce, the better the performance in regards to throughput in uniform load scenarios. However, this study also demonstrates that the shared-nothing approach might not be the optimal strategy for lowering tail latencies. Sharing data in-between processes, e.g. by leveraging single-queue, multi-server models and dynamic scheduling strategies, which typically add overhead, can deliver better results in regards to tail latencies, especially when workloads are skewed.
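Why can a shared single queue beat shared-nothing on tail latency under skew? Here is a toy deterministic model, not the paper's benchmark, that ignores the coordination overhead a shared queue adds. Jobs are `(arrival_time, service_time)` pairs sorted by arrival. "Shared-nothing" statically pins job *i* to server *i mod n*, while the single queue hands each job to whichever server frees up first.

```python
import heapq
from typing import List, Tuple

Job = Tuple[float, float]  # (arrival_time, service_time); jobs sorted by arrival


def per_server_queues(jobs: List[Job], servers: int) -> List[float]:
    """Shared-nothing: job i is pinned to server i % servers, each with its own FIFO."""
    free_at = [0.0] * servers
    latencies = []
    for i, (arrival, service) in enumerate(jobs):
        s = i % servers
        start = max(arrival, free_at[s])   # wait for *this* server, even if another is idle
        free_at[s] = start + service
        latencies.append(free_at[s] - arrival)
    return latencies


def single_queue(jobs: List[Job], servers: int) -> List[float]:
    """Single FIFO queue, multiple servers: each job goes to the earliest-free server."""
    free_at = [0.0] * servers              # min-heap of times when servers become idle
    heapq.heapify(free_at)
    latencies = []
    for arrival, service in jobs:
        earliest = heapq.heappop(free_at)
        start = max(arrival, earliest)
        heapq.heappush(free_at, start + service)
        latencies.append(start + service - arrival)
    return latencies


if __name__ == "__main__":
    # Skewed workload: one slow request, then five fast ones, one per time unit.
    jobs = [(0.0, 10.0)] + [(float(t), 1.0) for t in range(1, 6)]
    print(per_server_queues(jobs, servers=2))  # [10.0, 1.0, 9.0, 1.0, 8.0, 1.0]
    print(single_queue(jobs, servers=2))       # [10.0, 1.0, 1.0, 1.0, 1.0, 1.0]
```

In the pinned model, fast requests stuck behind the slow one wait 8 to 9 time units. With the single queue, only the slow request itself is slow.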

- FoundationDB: A Distributed Unbundled Transactional Key Value Store: FoundationDB is an open source transactional key value store created more than ten years ago. It is one of the first systems to combine the flexibility and scalability of NoSQL architectures with the power of ACID transactions (a.k.a. NewSQL). FoundationDB adopts an unbundled architecture that decouples an in-memory transaction management system, a distributed storage system, and a built-in distributed configuration system.

- Segcache: a memory-efficient and scalable in-memory key-value cache for small objects: Evaluation using production traces shows that Segcache uses 22-60% less memory than state-of-the-art designs for a variety of workloads. Segcache simultaneously delivers high throughput, up to 40% better than Memcached on a single thread. It exhibits close-to-linear scalability, providing a close to 8× speedup over Memcached with 24 threads. It has been shown at Twitter, Facebook, and Reddit that most objects stored in in-memory caches are small. Among Twitter’s top 100+ Twemcache clusters, the mean object size has a median value less than 300 bytes, and the largest cache has a median object size around 200 bytes. In contrast to these small objects, most existing solutions have relatively large metadata per object. Memcached has 56 bytes of metadata per key, Redis is similar, and Pelikan’s slab storage uses 39 bytes. This means more than one third of the memory goes to metadata for a cache where the average object size is 100 bytes.
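The "more than one third" claim checks out with simple arithmetic. This assumes the metadata is stored in addition to each 100-byte object and ignores allocator overhead and fragmentation:

```latex
\frac{56}{100 + 56} \approx 0.36 > \tfrac{1}{3} \quad \text{(Memcached)},
\qquad
\frac{39}{100 + 39} \approx 0.28 \quad \text{(Pelikan slab storage)}
```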