Stuff The Internet Says On Scalability For August 17th, 2018

Hey, it's HighScalability time:

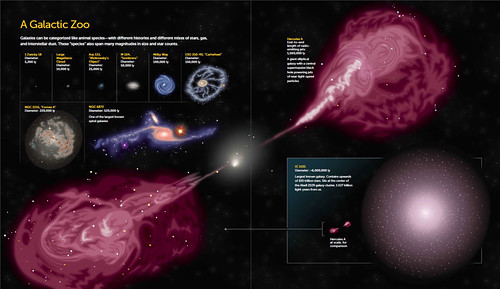

The amazing Zoomable Universe from 10^27 meters—about 93 billion light-years—down to the subatomic realm, at 10^-35 meters.

Do you like this sort of Stuff? Please lend me your support on Patreon. It would mean a great deal to me. And if you know anyone looking for a simple book that uses lots of pictures and lots of examples to explain the cloud, then please recommend my new book: Explain the Cloud Like I'm 10. They'll love you even more.

- 2.24x10^32: joules needed by the Death Star to obliterate Alderaan, which would liquefy everyone in the Death Star; 13 of 25: highest paying jobs are in tech; 70,000+: paid Slack workspaces; 13: hours the average American sits a day; $13.5 million: lost in ATM malware hack; $1.5 billion: cryptocurrency gambling ring busted in China; $8.5B: Auto, IoT, Security startups; 10x: infosec M&A; 1,000: horsepower needed to fly a jet suit; 30%: Google's energy savings from AI control of datacenters;
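That Death Star number is consistent with the gravitational binding energy of an Earth-sized planet, which for a uniform-density sphere is U = 3GM^2/(5R). Here is a quick sketch, assuming Alderaan has Earth's mass, mean radius, and uniform density. That is our assumption; the source doesn't say which model produced the figure.

```python
# Gravitational binding energy of a uniform-density sphere: U = 3 G M^2 / (5 R).
# Assumption: Alderaan has Earth's mass and mean radius.
G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24  # kg
R_EARTH = 6.371e6   # m

def binding_energy(mass_kg: float, radius_m: float) -> float:
    """Joules needed to disperse every piece of the sphere to infinity."""
    return 3 * G * mass_kg ** 2 / (5 * radius_m)

u = binding_energy(M_EARTH, R_EARTH)
print(f"{u:.3g} J")  # 2.24e+32 J
```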

- Quotable Quotes:

- The Jury Is In: From the security point of view, the monolithic OS design is flawed and a root cause of the majority of compromises. It is time for the world to move to an OS structure appropriate for 21st century security requirements.

- @coryodaniel: Rewrote an #AWS APIGateway & #lambda service that was costing us about $16000 / month in #elixir. It's running in 3 nodes that cost us about $150 / month. 12 million requests / hour with sub-second latency, ~300GB of throughput / day. #myelixirstatus !#Serverless...No, it’s not Serverless anymore; it’s running in a few containers on a kubernetes cluster

- @cablelounger: OH: "To use AWS offerings, you really need in-house dev-ops expertise, whereas with GCP, they make dev ops transparent to you." I've a lot of experience with AWS and mostly agree with the first point. I haven't really used GCP in earnest. I'd love to hear experiences from people who have?

- @allspaw: engineer: “Unless you’re familiar with Lamport, Brewer, Fox, Armstrong, Stonebraker, Parker, Shapiro...(and others) you don’t know distributed systems.” also engineer: “I read ‘Thinking Fast and Slow’ therefore I know cognitive psychology and decision-making theory.”

- alankay1: To summarize here, I said I love "Rocky's Boots", and I love the basic idea of "Robot Odyssey", but for end-users, using simple logic gates to program multiple robots in a cooperative strategy game blows up too much complexity for very little utility. A much better way to do this would be to make a "next Logo" that would allow game players to make the AI brains needed by the robots. So what I actually said, is that doing it the way you are doing it will wind up with a game that is not successful or very playable. Just why they misunderstood what I said is a bit of a mystery, because I spelled out what could be really good for the game (and way ahead of what other games were doing). And of course it would work on an Apple II and other 8 bit micros (Logo ran nicely on them, etc.)

- Michael Malone: Nolan was the first guy to look at Moore’s law and say to himself: You know what? When logic and memory chips get to be under ten bucks I can take these big games and shove them into a pinball machine.

- @hichaelmart: To be honest, I think the main lesson from this is that API Gateway is expensive – 100% agree. We have a GAE app doing a very similar thing, billions of impressions/mth – and *much* cheaper than if it were on API Gateway.

- dbsmasher: Distributed systems hinge nearly entirely on partial failures and degraded modes. This post is a great illustration of how our healthchecks should also follow that nuance.

- Sasha Klizhentas: So imagine you’ve deployed Postgres inside Kubernetes. Before you had tools, pgsql, pgtop, and all that stuff. And let’s say you want to build a replica. You use ansible to build your replicas. But if you put this whole thing inside Kubernetes, then first you have to either reinvent those tools or second, make them cluster-aware. Hey how do you connect to pg master now? How do you know, out of this deployment, which Postgres is the master and which one is the replica? There is no standard way - you have to build your own automation.

- @daveambrose: what was interesting now, after a few years since she started and making the "hard" decision to become profitable, was that her business started to look like it was really ready for funding: 1. she had repeatable sales 2. positive unit economics 3. great, growing customer base

- Broad Band: “Clinton and Gore were just going around everywhere talking about the information superhighway,” she remembers. Gore was lobbying for national telecommunications infrastructure (his father, a U.S. senator from Tennessee, had sponsored legislation to build the Interstate Highway System a generation previous) in the form of his High Performance Computing Act of 1991. When that legislation passed, it was instrumental in the development of many key Internet technologies.

- Cindy Sridharan: In my experience, unbounded concurrency has often been the prime factor that’s led to service degradation or sustained under-performance. Load balancing (and by extension, load shedding) often boils down to managing concurrency effectively and applying backpressure before the system can get overloaded.

- redm: There are of course two sides to every coin. The flip side is, cell carriers never signed up to be a secure identification mechanism. SMS wasn't designed for security, and there's little financial incentive for them to invest in those changes, i.e., they don't charge you more for secure authorization of 3rd party platforms. I think it's very akin to the US Social Security Number being used as a 'secure' identification in many cases.

- @uadmbg: I've worked with both, several years with AWS and ~1.5y with GCP. I find AWS to be much more complex, but it is also more mature. GCP is a breath of fresh air complexity-wise, and I think it's also less expensive as well. Nowadays I prefer working with GCP.

- Dr. Henri Jussila: We’re able to perform binary number calculations and show, for instance, how this nanostructure can carry out these functions just like a simple pocket calculator—except that instead of using electricity, the nanostructure uses only light in its operation

- Samuel K. Moore: Mythic is aiming for a mere 0.5 picojoules per multiply and accumulate, which would result in about 4 trillion operations per watt (TOPS/W). Syntiant is hoping to get to 20 TOPS/W. An Nvidia Volta V100 GPU can do 0.4 TOPS/W, according to Syntiant.
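The arithmetic behind those efficiency figures is simple if you count each multiply-accumulate (MAC) as two operations. That convention is what turns 0.5 pJ/MAC into about 4 TOPS/W, and it is an assumption here, not something the quote spells out. A small sketch that converts in both directions:

```python
# Convert energy per multiply-accumulate (MAC) to TOPS/W and back.
# Assumption: one MAC counts as 2 operations (one multiply, one add).
OPS_PER_MAC = 2

def tops_per_watt(pj_per_mac: float) -> float:
    joules_per_op = pj_per_mac * 1e-12 / OPS_PER_MAC
    ops_per_joule = 1.0 / joules_per_op   # 1 W sustained = 1 J per second
    return ops_per_joule / 1e12           # tera-ops per second per watt

def pj_per_mac(tops_w: float) -> float:
    return OPS_PER_MAC / tops_w           # algebraic inverse of tops_per_watt

print(f"Mythic target: {tops_per_watt(0.5):.1f} TOPS/W")
print(f"Syntiant target: {pj_per_mac(20):.2f} pJ/MAC")
print(f"Volta V100: {pj_per_mac(0.4):.1f} pJ/MAC")
```

Under the same two-ops-per-MAC assumption, Syntiant's 20 TOPS/W target works out to 0.1 pJ per MAC, and the V100's 0.4 TOPS/W works out to 5 pJ per MAC.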

- @kelseyhightower: After listening to people who ship and maintain products on top of Serverless platforms, you quickly realize FaaS plays a much smaller role than managed services. Things like billing, payment processing, image resizing, and user management are far more important.

- @joshsusser: In my 30+ year programming career, every single, bizarrely obscure bug that took weeks to chase down has ended up being a one-line fix.

- blacksmythe: The sweet spot for FPGAs is when the data is streaming. You can build hardware where the data is passed from one stage to another, and doesn't go to RAM at all. Particularly where the data is coming in fast, but with low resolution (huge FPGA advantage for fixed point instead of floating point). FPGAs are much more efficient for signal processing from data collected from an A/D converter (e.g. software defined radio) or initial video processing from an image sensor.

- gaius: In the mid-90’s it was common to run httpd via inetd. That lasted until HTTP 1.1 came along. I see people running websites out of Lambda now and just get a sense of deja vu. We realised this start-on-demand style didn’t scale for highly interactive use cases 20+ years ago!

- @kelseyhightower: The Serverless discussion requires a lot more pragmatism. Once you move past "Hello World" you'll quickly realize you've traded one set of problems for another.

- David Burkus: The power law is the explanation for why some people seem like they know everybody: some people really do know way more people than you. And the presence of those super-connectors skews the average higher than the size of your network.
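This effect is usually called the friendship paradox. Well-connected people show up in many friend lists, so the average number of friends your friends have, E[d^2]/E[d], is always at least the average number of friends people have, E[d]. Here is a tiny worked example on a made-up five-person network with one hub. It uses the friendship-weighted average, which is one of two common ways to define "your friends' average".

```python
from collections import Counter

# Hypothetical five-person network: person 0 is a hub connected to everyone.
edges = [(0, 1), (0, 2), (0, 3), (0, 4), (1, 2)]

degree = Counter()
for a, b in edges:
    degree[a] += 1
    degree[b] += 1

degrees = list(degree.values())
mean_degree = sum(degrees) / len(degrees)
# Following a random friendship lands on people in proportion to their degree,
# so the average friend has E[d^2] / E[d] friends.
mean_friend_degree = sum(d * d for d in degrees) / sum(degrees)

print(mean_degree, mean_friend_degree)  # 2.0 2.6
```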

- jgh: So I've been messing with Fn + Clojure + Graal Native Image and I'm seeing cold start times around 300-400ms and hot runs around 10-30ms. TLS adds something like 100-150ms on top of that. I was excited about seeing improved docker start times, but it seems like you guys are pretty much at the same place I am with it.

- Miriam Posner: How do you manage the complexity of a system that procures goods from a huge variety of locations? You make it modular: when you black-box each component, you don’t need to know anything about it except that it meets your specifications. Information about provenance, labor conditions, and environmental impact is unwieldy when the goal of your system is simply to procure and assemble goods quickly. “You could imagine a different way of doing things, so that you do know all of that,” said Russell, “so that your gaze is more immersive and continuous. But what that does is inhibit scale.” And scale, of course, is key to a globalized economy.

- Twirrim: The Bing team at Microsoft use FPGAs in production. I was chatting with one of their developers recently who is focussed on that space for them and he mentioned they find they get similar performance to GPUs, but with significantly less power consumption, while affording them the same levels of flexibility for their particular use case.

- joecot: This is extremely frustrating. One of the biggest use cases for wanting to use Aurora serverless is connecting to MySQL using lambda functions -- Function as a Service apps that can still use rdbms. The problem is that RDS endpoints only exist inside vpcs, and cold starting a lambda function with a vpc network interface can take 10+ seconds, making it useless for an API. I had hoped that, given they were making a serverless MySQL service, they'd make sure it actually plays well with lambda. Nope. Same problems regular RDS has. No better method of securing the connection beyond vpc firewall rules. Amazon, it's been a problem since lambda was launched and it hasn't been fixed. Either fix lambda vpc cold start times, or provide a better way to connect lambda to RDS. Just burying the problem only pisses off your customers when they try to buy into your hype.

- @jblefevre60: It took 200,000 years for us to reach 1 billion—and only 200 years to reach 7 billion!

- Bill Gates: What the book reinforced for me is that lawmakers need to adjust their economic policymaking to reflect these new realities. For example, the tools many countries use to measure intangible assets are behind the times, so they’re getting an incomplete picture of the economy. The U.S. didn’t include software in GDP calculations until 1999. Even today, GDP doesn’t count investment in things like market research, branding, and training—intangible assets that companies are spending huge amounts of money on.

- @ben11kehoe: I’m a little surprised by the number of people who seem bought in to Lambda’s isolated, ephemeral compute model, but are attached to the long-lived shared connection JDBC model for SQL queries

- Naghmeh Momeni: Based on our findings, it’s clear that cooperation does not require connections between each and every individual in the group. In the case of the fourth-grade class, the sparse connections served as olive branches that promoted an atmosphere of harmony and collaboration. In all of the examples, conjoining the groups through even just a few representatives, brokers or bridges had a tremendous effect on outcomes.

- Victor Bahl: So in terms of connecting the world, we’ve [Microsoft] gone into many dimensions here, but you asked specifically about the cloud. In the cloud, it’s all about scale. I mean, we have such massive scales. They… You know, we have something in the order of 54 regions, and these regions have multiple datacenters. We’ve got over 150 datacenters around the world, and they’re getting further built. We have tens of thousands of miles of fiber in the wide area. We have hundreds of thousands of miles of optics inside the datacenter. So now think about these millions of components that are connected to one another. And we rely on them so much, you don’t want them to fail ever.

- Victor Bahl: Today, cloud vendors sell you storage and they sell you compute. I want them to sell you latency as well. So that’s how we conceived of edge computing. It was actually conceived in 2009.

- Eastman: Just a comment regarding studios and game developers. I work in the industry and 90% of these facilities do run with Xeon workstations and ECC memory, either custom built or purchased from the likes of Dell or HP. So yes, there is a marketplace for workstations. No serious pro would do work on a mobile tablet or phone, even though that's where the huge market growth is. There is definitely a place for single 32-core CPUs. But among, say, 100 workstations there might be a place for only 4-5 of the 2990WX. Those would serve particle/fluid dynamics simulation. Most of the workload would be sent to render farms, sometimes offsite. Those render farms could use Epyc/Xeon chips. If I was a head of technology, I would seriously consider these CPUs [AMD Threadripper 2990WX] for my artists' workflow.

- You start out on a small server...performance tanks. The next step is often to move to something like Route53, CloudFront, and S3. Scaling your Static Site to a Global Market for a Fraction of the Cost on AWS: "After the migration was complete, the results were astounding. I had dropped my load time from around 1.5s — 2.5s down to a freaking 234ms as highlighted in the below screenshot. This is an insane performance improvement...Not only that, but the costs of hosting the site have been reduced from roughly $20/month down to around $7/month." Cloudflare would like you to know they are cheaper and better.

- How do you explain the unreasonable effectiveness of cloud security? AWS has an enormous attack surface. Why aren't there more security problems? One reason is that a huge company like Amazon can do crazy things like prove systems correct. Does your datacenter do that? I didn't think so. AWS does. Byron Cook gave an eye-opening talk on Formal Reasoning About the Security of Amazon Web Services.

- AWS quietly started using formal methods in 2015. Pre-launch protocol proof engagements are now growing exponentially. After initial developer skepticism, it's gaining acceptance internally.

- World-wide fleets of machines that use security protocols to share secrets are proved correct via reduction of the protocol to proofs in VCC.

- Testing more is almost meaningless. Testing isn't good enough. There are always cases missed in testing. Proofs are meaningful. Soundness is key. Soundness means they understand the semantics of the policy language and use constraint solving, which is sound, to prove it.

- During appsec reviews they are also trying to prove code correct—memory safety, protocol conformance, compliance—using tools like Boogie, Coq, CBMC, Dafny, HOL-light, Infer, OpenJML, SAW, and SMACK. The TLS code used in S3 has been proved.

- One issue with proofs is that code changes continuously, so how can the proofs keep up? For the TLS code they rerun the proofs on check-in. That's described in Continuous Formal Verification of Amazon s2n. A future goal is to be able to do this for everything.

- If you want to know why IAM is so complicated and hard to understand, it's because IAM policies are essentially FOL, first-order logic. IAM is all that stands between data and hackers. Get it wrong and it's a disaster. So it's important to get it right. Even Byron Cook had a hard time explaining IAM.

- VPC rules are encoded in logic and a constraint solver is used to prove them correct.

- Shouldn't things be simpler? Customers need these complex kinds of capabilities. Customers like this stuff. Proofs are an accelerator for adoption.

- Also How AWS uses automated reasoning to help you achieve security at scale, Object-Oriented Security Proofs, Model Checking Boot Code from AWS Data Centers, SideTrail: Verifying Time-Balancing of Cryptosystems, FLoC Olympic Games.
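To make the "IAM is logic" point concrete, here is a toy model of the core rule in IAM policy evaluation: an explicit Deny overrides any Allow, and anything not allowed is implicitly denied. The policy format, names, and resources are hypothetical. The "proof" is only a brute-force check over a tiny finite universe of requests. Per the talk, AWS instead encodes policies in logic and uses a constraint solver, which covers every possible request rather than a handful.

```python
from fnmatch import fnmatchcase
from itertools import product

# Hypothetical policy: read access to reports, with an explicit Deny on a secret prefix.
POLICY = [
    {"effect": "Allow", "actions": ["s3:Get*"], "resources": ["arn:aws:s3:::reports/*"]},
    {"effect": "Deny", "actions": ["s3:*"], "resources": ["arn:aws:s3:::reports/secret/*"]},
]

def matches(patterns, value):
    # fnmatch-style wildcards only approximate IAM's * and ? matching.
    return any(fnmatchcase(value, p) for p in patterns)

def is_allowed(policy, action, resource):
    """Explicit Deny beats any Allow; no matching Allow means implicit deny."""
    allowed = False
    for stmt in policy:
        if matches(stmt["actions"], action) and matches(stmt["resources"], resource):
            if stmt["effect"] == "Deny":
                return False
            allowed = True
    return allowed

# A tiny finite universe of requests to check exhaustively.
ACTIONS = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]
RESOURCES = ["arn:aws:s3:::reports/q1.csv",
             "arn:aws:s3:::reports/secret/keys.txt",
             "arn:aws:s3:::other/file.txt"]

def never_exposes_secrets(policy):
    return not any(is_allowed(policy, a, r)
                   for a, r in product(ACTIONS, RESOURCES) if "/secret/" in r)

print(never_exposes_secrets(POLICY))      # True
print(never_exposes_secrets(POLICY[:1]))  # False: without the Deny, secrets leak
```

Dropping the Deny statement makes the check fail. That is the kind of property you want a machine, not a reviewer, to confirm on every policy change.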

- And irony:

- HVAC techs beware. DeepMind and Google developed a safety-first AI system to autonomously manage cooling in Google's datacenters. You might notice a similarity with how AWS implements their proof system. Safety-first AI for autonomous data centre cooling and industrial control: we’re taking this system to the next level: instead of human-implemented recommendations, our AI system is directly controlling data centre cooling, while remaining under the expert supervision of our data centre operators. This first-of-its-kind cloud-based control system is now safely delivering energy savings in multiple Google data centres. Every five minutes, our cloud-based AI pulls a snapshot of the data centre cooling system from thousands of sensors and feeds it into our deep neural networks, which predict how different combinations of potential actions will affect future energy consumption. The AI system then identifies which actions will minimise the energy consumption while satisfying a robust set of safety constraints. Those actions are sent back to the data centre, where the actions are verified by the local control system and then implemented.
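The loop described above works like this: snapshot, predict, filter by safety constraints, pick the lowest predicted energy, verify locally, act. The sketch below walks through one iteration of that loop. Everything in it is a hypothetical stand-in. The `predict` function is a toy linear model in place of the deep neural networks, and the actions, limits, and numbers are made up for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    fan_speed: float         # fraction of maximum, 0..1
    chiller_setpoint: float  # degrees C

MAX_INLET_C = 27.0  # hypothetical safety limit on predicted inlet temperature

def predict(snapshot, action):
    """Toy stand-in for the learned model: returns (energy_kw, inlet_temp_c)."""
    energy = 100 * action.fan_speed + 5 * (25 - action.chiller_setpoint)
    temp = snapshot["it_load_kw"] / 50 + action.chiller_setpoint - 10 * action.fan_speed
    return energy, temp

def choose_action(snapshot, candidates):
    """Keep only actions predicted to be safe, then minimise predicted energy."""
    safe = [(predict(snapshot, a)[0], a) for a in candidates
            if predict(snapshot, a)[1] <= MAX_INLET_C]
    return min(safe, key=lambda pair: pair[0])[1] if safe else None

def local_verify(action):
    """Second, independent check by the on-site control system before actuation."""
    return 0.0 <= action.fan_speed <= 1.0 and 15.0 <= action.chiller_setpoint <= 24.0

def control_step(snapshot, candidates):
    """One iteration; per the post, the real system does this every five minutes."""
    action = choose_action(snapshot, candidates)
    if action is not None and local_verify(action):
        return action   # would be sent to the actuators
    return None         # otherwise leave the existing controls in charge

CANDIDATES = [Action(f, s) for f in (0.3, 0.6, 0.9) for s in (18.0, 21.0, 24.0)]
print(control_step({"it_load_kw": 500}, CANDIDATES))   # Action(fan_speed=0.3, chiller_setpoint=18.0)
print(control_step({"it_load_kw": 2000}, CANDIDATES))  # None: no candidate is predicted safe
```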

- What is the cost of containerizing a close-to-hardware, thread-per-core application? High, unless you specifically optimize to reduce overhead, and even then the penalty is 10%. The Cost of Containerization for Your Scylla: Scylla in a Docker container showed 69% reduction on write throughput using our default Docker image. While some performance reduction is expected, this gap is significant and much bigger than one would expect... For users who are extremely latency sensitive, we still recommend using a direct installation of Scylla. For users looking to benefit from the ease of Docker usage, the latency penalty is minimal...The first step is obvious: Scylla employs a polling thread-per-core architecture, and by pinning shards to the physical CPUs and isolating network interrupts the number of context switches and interrupts is reduced...We disabled security profiles by using the --security-opt seccomp:unconfined Docker parameter. Also, it is possible to manually move tasks out of cgroups by using the cgdelete utility. Executing the peak throughput tests again, we now see no difference in throughput between Docker and the underlying platform.

- Airbnb on their Capturing Data Evolution in a Service-Oriented Architecture. While they go into detail on the plumbing, this reminds me a lot of network management systems work, which is basically how IoT works. Things out in the world change. Those changes are emitted as property change events. Bits of code register for those changes and run the state machine to handle them as they occur. At an application level it's difficult. Programmers have to deal with event order, applying backpressure so too many events aren't generated, not blocking processing on large event streams, prioritizing events so that higher priority events get processed first, and so on.
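Here is a minimal sketch of the application-level concerns listed above. Handlers register for property changes. A bounded queue pushes back on producers when it is full. Lower priority numbers are processed first. `drain` handles events in batches, so one long stream can't monopolize the consumer. The `EventBus` class and its methods are hypothetical, not Airbnb's implementation.

```python
import heapq
import itertools
from collections import defaultdict

class EventBus:
    """Hypothetical property-change bus with priorities and backpressure."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._queue = []                   # heap of (priority, seq, prop, value)
        self._seq = itertools.count()      # keeps arrival order within a priority
        self._handlers = defaultdict(list)

    def register(self, prop, handler):
        self._handlers[prop].append(handler)

    def publish(self, prop, value, priority=10):
        """Lower number = higher priority. Returns False when full: back off."""
        if len(self._queue) >= self.capacity:
            return False
        heapq.heappush(self._queue, (priority, next(self._seq), prop, value))
        return True

    def drain(self, max_events):
        """Handle at most max_events, so a large stream can't block the caller."""
        handled = 0
        while self._queue and handled < max_events:
            _, _, prop, value = heapq.heappop(self._queue)
            for handler in self._handlers.get(prop, []):
                handler(prop, value)
            handled += 1
        return handled

seen = []
bus = EventBus(capacity=3)
for prop in ("listing.price", "listing.status"):
    bus.register(prop, lambda p, v: seen.append((p, v)))
bus.publish("listing.price", 100)
bus.publish("listing.price", 120)
bus.publish("listing.status", "delisted", priority=0)
print(bus.publish("listing.price", 130))  # False: queue is full, producer should back off
bus.drain(max_events=10)
print(seen)  # [('listing.status', 'delisted'), ('listing.price', 100), ('listing.price', 120)]
```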

- We've all experienced it. That inexplicable random long pause while editing in Chrome. Machines are powerful these days. What's going on? In a moment Chrome springs back to life and we go on with our lives. Bruce Dawson had had enough. He set out to solve the mystery, brilliantly. 24-core CPU and I can’t type an email (part one). It's what you might expect in a way you might not expect it. bcaa7f3a8bbc: It is describing how a web browser, a piece of software with extremely high inherent complexity, interacts with the memory allocator of the operating system, another piece of software with high inherent complexity, combined with a rarely used feature from Gmail, can trigger complex and complicated interactions and cause major problems due to hidden bugs in various places. This type of apparent "simple" lockup requires "the most qualified people to diagnose".

- Looking for a different serverless platform? Serverless Docker Beta: A 10x-20x improvement in cold boot performance; sub-second cold boot; Maximum execution time (defaulting to 5 minutes, with a maximum of 30 minutes); Support for HTTP/2.0 and WebSocket connections; recent GitHub integration makes it possible to deploy a Docker container in the cloud solely by creating a Dockerfile; a function automatically scales with a 1:1 mapping of requests to resource allocations. A request comes in, a new function is provisioned or an existing one is re-used.

- Slack was attacked by a giant JSON blob that ate cache space. Re-architecting Slack’s Workspace Preferences: How to Move to an EAV Model to Support Scalability. Slack grew to have 160+ preference settings in a JSON blob stored in a table at a size of ~55 kB. This is enough to stress cache space, especially when usually only a few of the preferences are needed at one time. So each preference was moved to its own row in the database. Then data was migrated using double writes: any time a workspace updated its preferences in the existing workspaces table, that data was also written to the new table. Dark mode was used to test reads: any time a preference was read, it was read both the new and the old way, and the results were compared as a form of testing. Each preference is now cached individually.
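Here is a minimal sketch of the migration pattern, with dicts standing in for the two tables. Writes go to both the old JSON blob and the new one-row-per-preference store. Reads are served from the old path while the new path is compared in the dark. Table shapes and function names are hypothetical, not Slack's code. Keying the new store by `(workspace_id, pref_name)` is also what makes it possible to cache each preference individually.

```python
import json

# Dicts standing in for the two tables (hypothetical shapes).
legacy_workspaces = {}  # workspace_id -> JSON blob holding every preference
prefs_eav = {}          # (workspace_id, pref_name) -> value: one row per preference
mismatches = []

def write_prefs(workspace_id, updates):
    """Double write: update the old blob (still authoritative) and the new rows."""
    blob = json.loads(legacy_workspaces.get(workspace_id, "{}"))
    blob.update(updates)
    legacy_workspaces[workspace_id] = json.dumps(blob)
    for name, value in updates.items():
        prefs_eav[(workspace_id, name)] = value

def read_pref(workspace_id, name):
    """Dark read: serve the old path, compare against the new path, log any diff."""
    old = json.loads(legacy_workspaces.get(workspace_id, "{}")).get(name)
    new = prefs_eav.get((workspace_id, name))
    if old != new:
        mismatches.append((workspace_id, name, old, new))
    return old

write_prefs("T1", {"theme": "dark", "locale": "en-US"})
legacy_workspaces["T2"] = json.dumps({"theme": "light"})  # written before double writes began
print(read_pref("T1", "theme"), read_pref("T2", "theme"))  # dark light
print(mismatches)  # [('T2', 'theme', 'light', None)]
```

The mismatch for `T2` shows why double writes alone aren't enough. Rows written before the double writes started need a backfill before the new path can be trusted.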

- Scaling at Instacart: Distributing Data Across Multiple Postgres Databases with Rails. Scaling by creating datamarts per property is a great strategy. The problem is that copying all the data around to different databases while keeping everything consistent is a lot of work. Instacart gives an in-depth explanation of how they created an application-level asynchronous data pump across multiple Postgres databases.

- Cost of a Join. Fun analysis. The question is: do you really need to denormalize data? Are joins really that expensive? The conclusion is no: But we can see that an additional join certainly can be quite cheap, and isn't necessarily a good reason to avoid normalizing your data.
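One reason an extra join can be cheap is that a database can use a hash join, whose cost is linear in the size of both inputs. Here is a minimal pure-Python sketch of that algorithm. It is not the benchmark from the linked post, and real query planners may pick nested-loop or merge joins instead.

```python
from collections import defaultdict

def hash_join(left, right, left_key, right_key):
    """Inner equi-join of two lists of dicts in O(len(left) + len(right)) time."""
    # Build phase: hash the smaller input on its join key.
    if len(left) <= len(right):
        build, probe, build_key, probe_key, build_is_left = left, right, left_key, right_key, True
    else:
        build, probe, build_key, probe_key, build_is_left = right, left, right_key, left_key, False
    table = defaultdict(list)
    for row in build:
        table[row[build_key]].append(row)
    # Probe phase: one hash lookup per row of the larger input.
    out = []
    for row in probe:
        for match in table.get(row[probe_key], ()):
            left_row, right_row = (match, row) if build_is_left else (row, match)
            out.append({**left_row, **right_row})
    return out

users = [{"user_id": 1, "name": "ann"}, {"user_id": 2, "name": "bob"}]
orders = [{"order_id": 10, "user_id": 1}, {"order_id": 11, "user_id": 1},
          {"order_id": 12, "user_id": 3}]
rows = hash_join(users, orders, "user_id", "user_id")
print([(r["name"], r["order_id"]) for r in rows])  # [('ann', 10), ('ann', 11)]
```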

- Not exactly true. For example, YouTube adds jitter. There's randomness in backoff algorithms. A Math Theory for Why People Hallucinate: Researchers say the fact that stochastic Turing processes appear to be at work in these two biological contexts adds plausibility to the theory that the same mechanism occurs in the visual cortex. The findings also demonstrate how noise plays a pivotal role in biological organisms. “There is not a direct correlation between how we program computers” and how biological systems work, Weiss said. “Biology requires different frameworks and design principles. Noise is one of them.”
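The deliberate randomness mentioned above (jitter, randomized backoff) looks like this in practice. With exponential backoff and "full jitter", each retry sleeps a random time between zero and an exponentially growing, capped ceiling. Clients that failed together then don't all retry in lockstep. This is a sketch, and the base and cap values are arbitrary assumptions.

```python
import random

def backoff_delays(attempts, base=0.1, cap=10.0, rng=None):
    """Exponential backoff with full jitter: attempt n sleeps U(0, min(cap, base * 2**n))."""
    if rng is None:
        rng = random.Random()
    return [rng.uniform(0, min(cap, base * 2 ** n)) for n in range(attempts)]

# Seeded only so the example is repeatable.
for n, delay in enumerate(backoff_delays(8, rng=random.Random(42))):
    print(f"retry {n}: sleep {delay:.3f}s")
```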

- Optimizing Backend Operations with Fragment Caching: Comparing the Memcached test to the second and third runs of the cache-free test, Memcached resulted in a performance increase of over 12%.
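Fragment caching stores rendered pieces of a page so repeat requests skip the rendering work. Here is a minimal sketch with a dict standing in for Memcached. The versioned-key scheme is a common pattern that we assume for illustration; the linked article doesn't describe it, and `FragmentCache` and `product_card` are hypothetical names.

```python
import time

class FragmentCache:
    """In-process stand-in for Memcached: key -> (expires_at, rendered fragment)."""

    def __init__(self, ttl_seconds=300.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}
        self.hits = 0
        self.misses = 0

    def fetch(self, key, render):
        """Return the cached fragment, or render it, store it, and return it."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1
            return entry[1]
        self.misses += 1
        fragment = render()
        self._store[key] = (now + self.ttl, fragment)
        return fragment

def product_card(cache, product):
    # Versioned key: editing the product changes updated_at, so a fresh key is
    # used and the stale fragment is simply never read again.
    key = f"product_card/{product['id']}-{product['updated_at']}"
    return cache.fetch(key, lambda: f"<div>{product['name']}</div>")

cache = FragmentCache()
widget = {"id": 7, "name": "Widget", "updated_at": 1}
product_card(cache, widget)      # miss: rendered and stored
product_card(cache, widget)      # hit
widget["updated_at"] = 2         # an edit produces a new cache key
product_card(cache, widget)      # miss again
print(cache.hits, cache.misses)  # 1 2
```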

- High-Performance Memory At Low Cost Per Bit. AI, fraud detection, and edge computing all crave more memory capacity with high performance, low cost and low power. The problem is that DRAM scaling is slowing down along with Moore’s law. There are emerging memories: enhanced Fast Flash, RRAM (Resistive RAM), STT-MRAM (Spin Torque Transfer Magnetic RAM) and PCM (Phase Change Memory). For now, the practical solution is a hybrid memory architecture that combines a small amount of DRAM with a large proportion of emerging memory. These emerging memories come with their own set of challenges: high read and write latencies compared to DRAM, low bandwidth, low endurance, high write energy and, in some cases, large access-size granularity. The solution is smart management via software.
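Here is a minimal sketch of what "smart management via software" could look like: a small DRAM tier in front of a large, slower tier, with hot pages kept in DRAM. The LRU policy is our assumption, since the article doesn't name one. The `HybridMemory` class is hypothetical.

```python
from collections import OrderedDict

class HybridMemory:
    """Toy software-managed tiering: a small LRU "DRAM" tier over a large slow tier."""

    def __init__(self, dram_pages):
        self.dram_pages = dram_pages
        self.dram = OrderedDict()  # page -> data, least recently used first
        self.slow = {}             # stand-in for the large emerging-memory tier
        self.dram_hits = 0
        self.slow_hits = 0

    def read(self, page):
        if page in self.dram:
            self.dram.move_to_end(page)  # mark as most recently used
            self.dram_hits += 1
            return self.dram[page]
        self.slow_hits += 1
        data = self.slow[page]           # KeyError if the page was never written
        self._place_in_dram(page, data)  # promote hot data
        return data

    def write(self, page, data):
        # Writes land in DRAM, so repeated writes to a hot page never reach the
        # slow tier; it only sees evictions (less write energy, less wear).
        self._place_in_dram(page, data)

    def _place_in_dram(self, page, data):
        self.dram[page] = data
        self.dram.move_to_end(page)
        while len(self.dram) > self.dram_pages:
            victim, victim_data = self.dram.popitem(last=False)  # evict LRU page
            self.slow[victim] = victim_data

mem = HybridMemory(dram_pages=2)
for p in range(4):
    mem.write(p, f"page-{p}")  # pages 0 and 1 end up evicted to the slow tier
for p in [3, 3, 2, 0]:
    mem.read(p)
print(mem.dram_hits, mem.slow_hits)  # 3 1
```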

- Takeaways from the ServerlessConf SF 2018: In a nutshell: a functions-centric approach is out and an application-centric approach is where the industry is heading.

- If you're looking for a time-series database, this is a good overview. TimescaleDB vs. InfluxDB: purpose built differently for time-series data. Seems fair, but this is from Timescale, so you have to keep the source in mind. NickBusey: As someone who is struggling with issues with InfluxDB in production environments, this just moved my `Investigate replacing Influx with Timescale` issue higher up my priority list. Many of the problems with InfluxDB pointed out in the article are indeed real-world pain points for us. pauldix: Creator & CTO of InfluxDB here...Much of this comparison is the technology equivalent of an argument through appeal to authority. The old "nobody ever got fired for buying Big Blue" argument...However, I wouldn't discount a technology simply because it's new. We take data loss very seriously and strive to create a storage engine that is safe for data...Ultimately, we've chosen to create from scratch. We've also chosen to create a new language rather than piggybacking on SQL. We've made these choices because we want to optimize for this use case and optimize for developer productivity. It's true that there are benefits to incremental improvement, but there are also benefits to rethinking the status quo. I've heard many times from our users that they liked the project because of how easy it was to get started and have something up and running. a012: TimescaleDB vs. PostgreSQL: 20x higher inserts, 2000x faster deletes, 1.2x-14,000x faster queries, additional functions for time-series analytics (e.g., first(), last(), time_bucket()).
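TimescaleDB's `time_bucket()`, `first()` and `last()` are SQL functions. To show what they compute, here is a pure-Python analogue that groups time-ordered readings into fixed-width buckets and keeps the first and last value in each. One assumption to flag: these buckets are aligned to the Unix epoch, and TimescaleDB's own default origin may differ.

```python
from datetime import datetime, timedelta, timezone

# Assumption: buckets are aligned to the Unix epoch.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def time_bucket(width, ts):
    """Floor ts down to the start of its width-sized bucket."""
    return EPOCH + ((ts - EPOCH) // width) * width

def first_last_by_bucket(rows, width):
    """rows: time-ordered (timestamp, value) pairs -> {bucket_start: (first, last)}."""
    out = {}
    for ts, value in rows:
        bucket = time_bucket(width, ts)
        first = out[bucket][0] if bucket in out else value
        out[bucket] = (first, value)
    return out

t0 = datetime(2018, 8, 17, 12, 0, tzinfo=timezone.utc)
rows = [(t0 + timedelta(minutes=m), v) for m, v in [(0, 1.0), (2, 1.5), (4, 2.0), (6, 0.5)]]
for bucket, (first, last) in first_last_by_bucket(rows, timedelta(minutes=5)).items():
    print(bucket.strftime("%H:%M"), first, last)
# 12:00 1.0 2.0
# 12:05 0.5 0.5
```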

- A Kubernetes vs Serverless smackdown? No, it's a civilized interview in which not much was decided, but much was discussed. DevOps Cafe Episode 79 - Joseph Jacks and Ben Kehoe. The biggest issue seems to be lock-in. With k8s you aren't locked in to the dozens of AWS services you inevitably end up using; you can bundle your own. Ben counters by consistently asking: what business value does that add? Good discussion of Envoy and Istio. The sweet spot for k8s is large companies with sunk costs in heterogeneity and multiple datacenters. Multi-cloud is still 3 to 5 years out, but it's on the horizon because of the primitives k8s gives us.

- Removing locks makes things faster. Not always, especially when it causes another lock to fire more often. Excellent analysis. The perf optimization that cost us: So I fixed it. I changed the code so there would be no sharing of data between the write and read transactions, which eliminated the need for the lock. The details are fairly simple: I moved the structure being modified to a single class and then atomically replaced the pointer to this class. Easy, simple and much, much cheaper. This also had the side effect of slowing us down by a factor of 4.
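The technique in that quote is a copy-on-write snapshot. Readers grab the current immutable structure without a lock. A writer builds a new one and swaps the reference in a single step. Here is a minimal Python sketch of the pattern. It is not the original code, and `SnapshotStore` is a hypothetical name. It also relies on attribute rebinding being effectively atomic in CPython; other runtimes would use an explicit atomic reference.

```python
import threading
from types import MappingProxyType

class SnapshotStore:
    """Readers never lock; writers build a new immutable snapshot and swap it in."""

    def __init__(self):
        self._snapshot = MappingProxyType({})
        self._write_lock = threading.Lock()  # writers still serialize among themselves

    def read(self):
        # A single reference read: readers see either the old or the new
        # snapshot, never a half-updated one.
        return self._snapshot

    def update(self, key, value):
        with self._write_lock:
            new = dict(self._snapshot)              # copy-on-write: every update copies
            new[key] = value
            self._snapshot = MappingProxyType(new)  # the "pointer swap"

store = SnapshotStore()
before = store.read()
store.update("tx", 42)
print(dict(before), dict(store.read()))  # {} {'tx': 42}
```

The trade-off is visible in `update`: every write now allocates and copies the whole structure. Whether that beats the lock it replaced depends on the workload, which is exactly what the linked analysis digs into.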

- What could possibly go wrong? China Is Building a Fleet of Autonomous AI-Powered Submarines. Here Are the Details.

- 5G is trickling out and Samsung takes the lead from Qualcomm. Samsung Releases Exynos Modem 5100 - First Multi-Mode 5G Modem: it promises to achieve LTE category 19 download speeds of up to 1.6Gbps, 5G sub-6GHz speeds of up to 2Gbps and 5G mmWave speeds of up to 6Gbps. For 5G NR this means we’re working with 8x 100MHz channels summing up to 800MHz of bandwidth, versus 8x 20MHz / 160MHz for LTE radios.

- Just imagine what happens when normal people have access to "secret/private/classified" data. Ask HN: What is the most unethical thing you've done as a programmer?

- Or how to keep the CPU busy in Go. Building a Resilient Stream Processor in Go. Nicely done.

- Nebo15/annon.api: a configurable API gateway that acts as a reverse proxy with a plugin system. Plugins are reducing boilerplate that must be done in each service, making overall development faster. Also it stores all requests, responses and key metrics, making it easy to debug your application. Inspired by Kong.

- DataDog/kafka-kit (article): a collection of tools that handle partition to broker mappings, failed broker replacements, storage based partition rebalancing, and replication auto-throttling. The two primary tools are topicmappr and autothrottle. These tools cover two categories of our Kafka operations: data placement and replication auto-throttling.

- ray-project/ray (paper): a flexible, high-performance distributed execution framework.

- airbnb/SpinalTap (article): a general-purpose reliable Change Data Capture (CDC) service, capable of detecting data mutations with low-latency across different data sources, and propagating them as standardized events to downstream consumers.

- When Coding Style Survives Compilation: De-anonymizing Programmers from Executable Binaries: We show that programmers who would like to remain anonymous need to take extreme countermeasures to protect their privacy.

- The Jury Is In: Monolithic OS Design Is Flawed: Our results provide very strong evidence that operating-system structure has a strong effect on security. 96% of critical Linux exploits would not reach critical severity in a microkernel-based system, 57% would be reduced to low severity, the majority of which would be eliminated altogether if the system was based on a verified microkernel. Even without verification, a microkernel-based design alone would completely prevent 29% of exploits.

- F1 Query: Declarative Querying at Scale: In this paper, we have demonstrated that it is possible to build a query processing system that covers a significant number of data processing and analysis use cases on data that is stored in any data source. By combining support for all of these use cases in a single system, F1 Query achieves significant synergy benefits compared to the situation where many separate systems exist for different use cases. There is no duplicated development effort for features that would be common in separate systems like query parsing, analysis, and optimization, ensuring improvements that benefit one use case automatically benefit others. Most importantly, having a single system provides clients with a one-stop shop for their data querying needs and removes the discontinuities or “cliffs” that occur when clients hit the boundaries of the supported use cases of more specialized systems. We believe that it is the wide applicability of F1 Query that lies at the foundation of the large user base that the product has built within Google.