Stuff The Internet Says On Scalability For February 1st, 2021

Hey, it's HighScalability time once again!

Amazon converts expenses into revenue by transforming needs into products. Take a look at a fulfillment center and you can see the need for Outposts, machine learning, IoT, etc., all dogfooded. Willy Wonka would be proud.

Do you like this sort of Stuff? Without your support on Patreon this Stuff won't happen.

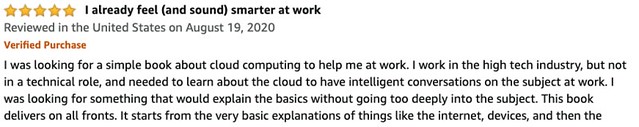

Know someone who could benefit from becoming one with the cloud? I wrote Explain the Cloud Like I'm 10 just for them. On Amazon it has 238 mostly 5-star reviews. Here's a review that has not been shorted by a hedge fund:

Number Stuff:

- 217,000 terabytes: monthly Zoom bandwidth usage. On AWS that would cost $11,186,406.55 per month and $1,843,630 on Oracle Cloud. Zoom surpassed 300 million daily meeting participants.

- $86 billion: Facebook’s revenue for 2020.

- $111.4 billion: Apple’s first quarter revenue for 2021. Up 21%. International sales accounted for 64% of revenue. Double-digit growth in each product category.

- 58%: of developer time spent figuring out code.

- 7,500: nodes in a single Kubernetes cluster. Not for the fainthearted.

- 200 million+: Netflix subscribers. $25 billion in annual revenue. Now generates more cash than it needs, and no longer borrows money to fuel growth.

- $100 billion: spent on app stores in 2020. Apple's App Store captured the majority of spending between the App Store and the Google Play Store, with 68.4 percent of spending, up 35.2 percent year-on-year. As with the global marketplace, mobile games generated the most revenue in the U.S., increasing 26.4 percent from $69 million in 2019 to $87.2 million. Other apps grew 72.2 percent from $24.8 million to $42.7 million.

- 1.65 billion: Apple installed devices. 1 billion+ iPhones.

- 100+ million: DuckDuckGo search queries in one day. Google is said to handle 5 billion searches per day.

- 50%: reduction in Azure Active Directory CPU costs by moving to .NET Core 3.1.

- 80x: how much more energy-efficient the first adiabatic superconductor microprocessor is than state-of-the-art semiconductor electronic devices.

- 10^27: the proposed (unofficial) prefix hella-, as in "you have 4 hellabytes of data."

- 1.4602 milliseconds: how much shorter than the standard 86,400 seconds the shortest day ever recorded was. The Earth is spinning faster.

- $2.5 billion: amount Boeing agreed to pay to resolve a 737 MAX fraud conspiracy charge.

- $11,500: saved by compressing messages by 30%.

- $100 million: Uber discovered they’d been defrauded out of 2/3 of their ad spend.

- 580 terabytes: of data stored on a magnetic storage tape invented by Fujifilm.

- 10^15: queries per day handled by Facebook’s graph.

- 7.2 million: peak simultaneous Facebook Live broadcast streams.

- 2.5 petabytes: estimated memory capacity of the human brain.
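The Zoom numbers imply an average per-gigabyte rate for each provider. Here is a back-of-the-envelope sketch of that division, assuming decimal units (1 TB = 1,000 GB) and that the quoted monthly totals are purely bandwidth charges. AWS actually bills egress in tiers, so the result is a blended average, not a list price:

```python
# Implied blended bandwidth rate from the Zoom bullet.
# Assumptions: decimal units (1 TB = 1,000 GB) and that the quoted
# monthly totals are pure data-transfer charges.
MONTHLY_TB = 217_000
QUOTED_MONTHLY_COST_USD = {
    "AWS": 11_186_406.55,
    "Oracle Cloud": 1_843_630.00,
}

def blended_rate_per_gb(monthly_cost_usd: float, monthly_tb: float) -> float:
    """Average dollars per GB implied by a monthly bill."""
    return monthly_cost_usd / (monthly_tb * 1_000)

for provider, cost in QUOTED_MONTHLY_COST_USD.items():
    print(f"{provider}: ${blended_rate_per_gb(cost, MONTHLY_TB):.4f}/GB")

ratio = QUOTED_MONTHLY_COST_USD["AWS"] / QUOTED_MONTHLY_COST_USD["Oracle Cloud"]
print(f"AWS / Oracle Cloud cost ratio: {ratio:.1f}x")
```

Under those assumptions AWS works out to roughly $0.05 per GB and Oracle Cloud to under $0.01, a gap of about 6x.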
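For a sense of where the hella- prefix would sit, here is a small formatter that expresses a byte count with the largest decimal prefix that fits. Including "hella" (10^27) in the table is an assumption for illustration, since it is a proposal rather than an official SI prefix:

```python
# Format a byte count with decimal (SI-style) prefixes.
# Assumption: "hella" (10**27) is included only to illustrate the
# proposal; it is not part of the official SI prefix list.
PREFIXES = [
    (10**27, "hella"),
    (10**24, "yotta"),
    (10**21, "zetta"),
    (10**18, "exa"),
    (10**15, "peta"),
    (10**12, "tera"),
    (10**9, "giga"),
    (10**6, "mega"),
    (10**3, "kilo"),
]

def format_bytes(n: int) -> str:
    """Return n using the largest prefix that keeps the value >= 1."""
    for factor, name in PREFIXES:
        if n >= factor:
            return f"{n / factor:g} {name}bytes"
    return f"{n} bytes"

print(format_bytes(4 * 10**27))    # → 4 hellabytes
print(format_bytes(580 * 10**12))  # → 580 terabytes (the Fujifilm tape bullet)
```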

Quotable Stuff:

- Akin: Any run-of-the-mill engineer can design something which is elegant. A good engineer designs systems to be efficient. A great engineer designs them to be effective.

- @turtlesoupy: 1/n I'm an engineer who wrote Instagram's feed algorithm. Twitter needs to lower the click-probability weighting in its ranking algorithm. A thread. 2/n made you look? Seriously, that's the problem. You are infinitely more likely to "click" on something that has a little thread 🧵 icon in it as opposed to a vanilla tweet. 3/n that also applies to people posting spicy hot takes where you click into the thread to see outrage. It is rubbernecking. 4/n weighting on P(click), 🧵 posts dominate others and get ranked to the top of feed. But that encourages you to /post/ more 🧵 content so you get to the top of other people's feeds. 5/n that's a "network effect" where a small ranking change has a huge effect on online discourse. Look at me! I'm writing a thread right now and I hate them.

- Geoff Huston: NATs are the reason why in excess of 20 billion connected devices can be squeezed into some 2 billion active IPv4 addresses. Applications that cannot work behind NATs are no longer useful and no longer used. However, the pressures of this inexorable growth in the number of deployed devices in the Internet mean that even NATs cannot absorb these growth pressures forever. NATs can extend the effective addressable space by up to 32 ‘extra’ bits, and they enable the time-based sharing of addresses. Both of these are effective measures in stretching the address space to encompass a larger device pool, but they do not transform the address space into an infinitely elastic resource. The inevitable outcome of this process is that either we will see the fragmenting of the IPv4 Internet into a number of disconnected parts, so that the entire concept of a globally unique and coherent address pool layered over a single coherent packet transmission realm will be foregone, or we will see these growth pressures motivate the further deployment of IPv6, and the emergence of IPv6-only elements of the Internet as it tries to maintain a cohesive and connected whole.

- Robert J. Sawyer: While the first successful alchemist was undoubtedly God, I sometimes wonder whether the second successful one may not have been the Devil himself.

- Michael Wayland: Ford and Google are entering into a six-year deal, which the automaker said is worth hundreds of millions of dollars. The tie-up will make Google responsible for much of the automaker’s growing in-vehicle connectivity, as well as cloud computing and other technology services. Google is expected to assist Ford with everything from in-car infotainment systems and remote, or over-the-air, updates to using artificial intelligence

- @samnewman: So a few people have asked why I have this snarky response. What is my problem with this service? Well, to be clear, it’s not an issue with GraphQL, it’s an issue with direct coupling with underlying datasources

- Percona: There were not many cases where the ARM instance becomes slower than the x86 instance in the tests we performed. The test results were consistent throughout the testing of the last couple of days. While the ARM-based instance is 25 percent cheaper, it is able to show a 15-20% performance gain in most of the tests over the corresponding x86-based instances. So ARM-based instances are giving conclusively better price-performance in all aspects.

- Pat Helland: The word consistent is not consistent. I pretty much think it means nothing useful. Eventual consistency means nothing both now AND later. It does, however, confuse the heck out of a lot of people.

- Steren: My stack requires no maintenance, has perfect Lighthouse scores, will never have any security vulnerability, is based on open standards, is portable, has an instant dev loop, has no build step and… will outlive any other stack. It’s not LAMP, WordPress, Rails, MEAN, Jamstack... I don’t do CSR (Client-side rendering), SSR (Server Side Rendering), SSG (Static Site Generation)... My stack is HTML+CSS. And because my sources are in git, pushed to GitHub, GitHub Pages is my host.

- jandrewrogers: This implies that average data models today are a million times larger than data models a decade ago. Exabyte scale working data models have been something I've needed to consider in designs for at least a few years. We are surprisingly close to overflowing 64-bit integers in real systems.

- @DanRose999: I was at Amzn in 2000 when the internet bubble popped. Capital markets dried up & we were burning $1B/yr. Our biggest expense was datacenter -> expensive Sun servers. We spent a year ripping out Sun & replacing with HP/Linux, which formed the foundation for AWS. The backstory: My first week at Amzn in '99 I saw McNealy in the elevator on his way to Bezos' office. Sun Microsystems was one of the most valuable companies in the world at that time (peak market cap >$300B). In those days, buying Sun was like buying IBM: "nobody ever got fired for it". Our motto was "get big fast." Site stability was critical - every second of downtime was lost sales - so we spent big $$ to keep the site up. Sun servers were the most reliable so all internet co's used them back then, even though Sun's proprietary stack was expensive & sticky. Then something even more interesting happened. As a retailer we had always faced huge seasonality, with traffic and revenue surging every Nov/Dec. Jeff started to think - we have all this excess server capacity for 46 weeks/year, why not rent it out to other companies? Around this same time, Jeff was also interested in decoupling internal dependencies so teams could build without being gated by other teams. The architectural changes required to enable this loosely coupled model became the API primitives for AWS. These were foundational insights for AWS. I remember Jeff presenting at an all-hands, he framed the idea in the context of the electric grid. In 1900, a business had to build its own generator to open a shop. Why should a business in 2000 have to build its own datacenter? Amzn nearly died in 2000-2003. But without this crisis, it's unlikely the company would have made the hard decision to shift to a completely new architecture. And without that shift, AWS may never have happened. Never let a good crisis go to waste!

- Adam Leventhal: Maybe AWS will surprise us, but I’m not holding my breath. Outposts are not just expensive but significantly overpriced — despite the big drop and by quite a bit...Outposts pricing remains at least mildly rapacious.

- boulos: is a big reason for why I cared about making GKE on-prem / bare metal a thing: I don’t believe (most) customers on-prem want to buy new hardware from a cloud provider. They mostly want to have consistent API-driven infrastructure with their hybrid cloud setup, and don’t want to burn their millions of dollars of equipment to the ground to do so.

- @copyconstruc: The 2010s began with the idea that scaling infinitely wasn’t possible with an RDBMS. In 2020, it’s possible to scale “infinitely” using strong relational data models (MySQL with Vitess, Postgres with CockroachDB, Aurora, and custom solutions like Cloud Spanner), which is cool.

- LeifCarrotson: If you're a veteran with Javascript, and Angular, and Node, and MySQL, you will scarcely be able to add something as simple as a "Birthday" field to the user profile pages of your new employer's SPA on your first day or first week on the job. That background will give you an idea of how you would have done that on previous applications, and give a slight speed boost as you try to skim the project for tables and functions that are related to user profiles, but the new application is almost certainly different.

- Geoff Huston: The incidence of BGP updates appears to be largely unrelated to changes in the underlying model of reachability, and more related to the adjustment of BGP to match traffic engineering policy objectives. The growth rates of updates are not a source of any great concern at this point in time.

- Kevin Mitchell: The inherent indeterminacy of physical systems means that any given arrangement of atoms in your brain right at this second, will not lead, inevitably, to only one possible specific subsequent state of the brain. Instead, multiple future states are possible, meaning multiple future actions are possible. The outcome is not determined merely by the positions of all the atoms, their lower-order properties of energy, charge, mass, and momentum, and the fundamental forces of physics. What then does determine the next state? What settles the matter?

- Drew Firment: The verdict: cloud maturity correlates closely with stronger growth. Not surprisingly, transformational-stage companies are more than 15 percentage points ahead of tactical-stage companies. When you show an obvious commitment to cloud, you see a very real return.

- @theburningmonk: Given all the excitement over Lambda's per-ms billing change today, some of you might be thinking about how much money you can save by shaving 10ms off your function. Fight that temptation 🧘‍♂️ until you can prove the ROI on doing the optimization.

- @forrestbrazeal: "We are not close to being done investing in and inventing Graviton" - @ajassy #reinvent. Graviton is threatening to take the place of DynamoDB On-Demand Capacity as my go-to example for how the cloud gets better at no cost to you.

- Cory Doctorow: Forty years ago, we had cake and asked for icing on top of it. Today, all we have left is the icing, and we’ve forgotten that the cake was ever there. If code isn’t licensed as “free,” you’d best leave it alone.

- @justinkan: One of my investments is trying to decide between Google Cloud and AWS. AWS seems to have a ton of support for startups but haven't heard from anyone from Google. Does anyone at Google Cloud work on startups?

- @pixprin: 1/ Our [Pixar] world works quite a bit differently than VFX in two major ways: we spend a lot of man hours on optimization, and our scheduler is all about sharing. Due to optimization, by and large we make the film fit the box, aka each film at its peak gets the same number of cores.

- Small Datum: On average, old Postgres was slower than old MySQL while new Postgres is faster than new MySQL courtesy of CPU regressions in new MySQL (for this workload, HW, etc.). The InnoDB clustered PK index hurts throughput for a few queries when the smaller Postgres PK index can be cached, but the larger InnoDB PK index cannot. The InnoDB clustered PK index helps a few queries where Postgres must do extra random IO to fetch columns not in the PK index.

- @jevakallio: GraphQL is great because instead of writing boring APIs I can just mess about doing clever schema introspection metaprogramming all day long and get paid twice what a normal API programmer does because nobody else understands what is going on

- motohagiography: The last year has illustrated a new kind of "platform risk," where I think we always tried to diversify exposure to them in architecture, security, and supply chains, but it's as though it has finally trickled down to individuals.

- B.N. Frank: McDonald’s restaurants are putting cameras in their dumpsters and trash containers in an effort to improve their recycling efforts and save money on waste collection. Nordstrom department stores are doing this as well

- pelle: AltaVista itself largely died, because in a misguided attempt to manage the innovator's dilemma they just tried to rebrand everything network-oriented they had as AltaVista. People only remember the search engine now and for good reason. But we had AltaVista firewalls, gigabit routers, network cards, mail servers (both SMTP and X.400 (!!!)) and a bunch of other junk without a coherent strategy. Everything that had anything to do with networking got the AltaVista logo on it. The focus became selling their existing junk using the now hip AltaVista brand, but the AltaVista search itself was not given priority.

- @randybias: I’m sorry. There is no “open cloud” future. That ship sailed. It’s a “walled garden cloud” future.

- eternalban: So this is the reality of modern software development. Architecture is simply not valued at the extremities of tech organizations: the bottom ranks are young developers who simply don't know enough to appreciate thoughtful design. And the management is facing incentives that simply do NOT align with thoughtful design and development.

- @benedictevans: Facebook has 2bn users posting 100bn times a day. The global SMS system had 20-25bn messages a day. So is this a publisher? A platform? A telco? No. We don’t really know what we think about speech online, nor how to think about it, nor who should decide.

- @brokep: The Pirate Bay, the most censored website in the world, started by kids, run by people with problems with alcohol, drugs and money, is still up after almost 2 decades. Parler and Gab etc. have all the money around but no skills or mindset. Embarrassing. The most ironic thing is that TPB's enemies include not just the US government but also many European governments and the Russian one. Compare that to Gab/Parler, which are supported by the current president of the US and probably liked by the Russian one too.

- Jessitron: Complexity in software is nonlinear. It goes up way, way faster than value. This integrated architecture looks lovely in the picture, but implementing it is going to be ugly. And every modification forever is going to be ugly, too.

- @Suhail: We have 1 virtual machine on GCP and Google is making us talk to sales. It's the holidays. Which sales person will care about our account against their quota?

- Forrest Brazeal: Using a combination of traditional reserved instances to cover our platform costs and savings plans for the resources our learners create, we’ve been able to get our reserved instance mix up to about 80% of our total EC2 spend — a KPI that should reduce our EC2 bill by about 30% over the next 12 months at no impact to our users.

- @MohapatraHemant: By 2008, Google had everything going for it w.r.t. Cloud and we should’ve been the market leaders, but we were either too early to market or too late. What did we do wrong? (1) bad timing (2) worse productization & (3) worst GTM... Common thread: engineering hubris. We had the best tech, but had poor documentation + no “solutions mindset.” A CxO at a large telco once told me, “you folks just throw code over the fence.” Cloud was seen as the commercial arm of the most powerful team @ Google: TI (tech infra)... My google exp reinforced a few learnings for me: (1) consumers buy products; enterprises buy platforms. (2) distribution advantages overtake product / tech advantages and (3) companies that reach PMF & then under-invest in S&M risk staying niche players or worse: get taken down.

- Simon Sharwood: Amazon Web Services is tired of tech that wasn’t purpose built for clouds and hopes that the stuff it’s now building from scratch will be more appealing to you, too...AWS is all about small blast radii, DeSantis explained, and in the past the company therefore wrote its own UPS firmware for third-party products.

- danluu: We've seen that, if we look at the future, the fraction of complexity that might be accidental is effectively unbounded. One might argue that, if we look at the present, these terms wouldn't be meaningless. But, while this will vary by domain, I've personally never worked on a non-trivial problem that isn't completely dominated by accidental complexity, making the concept of essential complexity meaningless on any problem I've worked on that's worth discussing.

- @ben11kehoe: sigh...every year for Christmas Day operations there turns out to be a single thing we didn’t provision high enough that keeps us from handling the massive influx of new robots entirely hands off keyboard. #ServerlessProblems One year it was a Kinesis stream that needed to be upsharded. Last year it was a Firehose account limit. This year it was one DDB table’s throughput. Always so close!

- Mary Poppendieck: People have become way too comfortable with backlogs. It’s just a bad, bad concept.

- jamescun: This post touches on "innovation tokens". While I agree with the premise of "choose boring technology", it feels like a process smell, particularly of a startup whose goal is to innovate a technology. Feels demotivating as an engineer if management says our team can only innovate an arbitrary N times.

- ormkiqmike: I've been building systems for a very long time. Managed services are a complete game changer for me and I would need some incredible reason not to use them. Especially for enterprise, for me it's a no-brainer. Given the time and effort enterprises have to spend to keep their systems patched/updated, managed services are way cheaper.

- qeternity: 4 years old. The world is completely different today. We run a number of HA Postgres setups on k8s and it works beautifully. Local NVMe access with elections backed using k8s primitives.

- ram_rar: I worked in a startup that was eventually acquired by Cisco. We had the same dilemma back then. AWS and GCP were great, but also fairly expensive until you get locked in. Oracle's bare metal cloud sweetened the deal so much that it was a no-brainer to go with them. We were very heavy on using all open source tech stuff, but didn't rely on any cloud service like S3 etc. So the transition was a no-brainer. If your tech stack is not reliant on cloud services like S3, etc., you're better off with a cloud provider who can give you those sweet deals. But you'll need in-house expertise to deal with big data.

- @stuntpants: The premise here is wrong. arm64 is the Apple ISA, it was designed to enable Apple’s microarchitecture plans. There’s a reason Apple’s first 64 bit core (Cyclone) was years ahead of everyone else, and it isn’t just caches. Arm64 didn’t appear out of nowhere, Apple contracted ARM to design a new ISA for its purposes. When Apple began selling iPhones containing arm64 chips, ARM hadn’t even finished their own core design to license to others. Apple planned to go super-wide with low clocks, highly OoO, highly speculative. They needed an ISA to enable that, which ARM provided. M1 performance is not so because of the ARM ISA, the ARM ISA is so because of Apple core performance plans a decade ago.

- Eric Drexler: imagine magnifying the nanoscale world by a factor of ten million, stretching nanometer-scale objects to centimeters. In such a world, a typical atom becomes a sphere about 3 mm in diameter, the size of a small bead or a capital O in a medium-large font.

- @swardley: Hmmm...DevOps, it's culture not cloud. Agile, it's culture not project methodology. Open Source, it's culture not sharing code. Cloud Native, it's culture not containers. Ok, define culture. No hand waving allowed.

- Layal Liverpool: Harris Wang at Columbia University in New York and his team took this one step further, using a form of CRISPR gene editing to insert specific DNA sequences that encode binary data – the 1s and 0s that computers use to store data – into bacterial cells. By assigning different arrangements of these DNA sequences to different letters of the English alphabet, the researchers were able to encode the 12-byte text message “hello world!” into DNA inside E. coli cells. Wang and his team were subsequently able to decode the message by extracting and sequencing the bacterial DNA.

- fxtentacle: FYI, in situations like this the best way is to: 1. Register your videos and images with the USCO. It'll cost <$100. 2. You can now file DMCA takedowns. Send one to Apple with the USCO registration ID and a copy of the image and a link to the app in question. 3. Apple will either immediately remove that fake app, or be liable for up to $350k in punitive damages for wilful infringement and lose all DMCA protection. 4. If Apple didn't react a week later, approach a lawyer. They'll likely be willing to work purely for 50% commission, because it'll be a slam dunk in court. 5. Repeat the same with Facebook / Youtube if they advertise there with your images or videos. Take Screenshots and write down the url and date and time.

- @aschilling: What is necessary to run #Parler? 40x 64 vCPUs, 512 GB, 14 TB NVMe; 70-100 vCPUs, 768 GB, 4 TB NVMe; 300-400 various other instances (8-16 vCPUs, 32-64 GB); 300-400 GB/min internal traffic; 100-120 GB/min external traffic.

- @Snowden: For those wondering about @SignalApp's scaling, #WhatsApp's decision to sell out its users to @Facebook has led to what is probably the biggest digital migration to a more secure messenger we've ever seen. Hang in there while the Signal team catches up.

- halfmatthalfcat: I use Akka Cluster extensively with Persistence. It's an amazing piece of technology. Before I went this route, I tried to make Akka Cluster work with RabbitMQ however I realized (like another poster here) that you're essentially duplicating concerns since Akka itself is a message queue. There's also a ton of logistics with Rabbit around binding queues, architecting your route patterns, etc that add extra cognitive overhead. I'm creating a highly distributed chat application where each user has their own persistent actor and each chatroom has their own persistent actor. At this point, it doesn't matter where the user or chatroom are in the cluster it literally "just works". All I need to do is emit a message to the cluster from a user to chatroom or vice versa, even in a cluster of hundreds of nodes, and things just work. Now there's some extra care you need to take at the edge (split-brain via multi-az, multi-datacenter) but those are things you worry about at scale.

- rsdav: I've done pretty extensive work in all three major cloud providers. If you were to ask me which one I'd use for a net new project, it would be GCP -- no question. Nearly all of their services I've used have been great with a feeling that they were purposefully engineered (BigQuery, GKE, GCE, Cloud Build, Cloud Run, Firebase, GCR, Dataflow, Pub/Sub, Dataproc, Cloud SQL, goes on and on...). Not to mention almost every service has a Cloud API, which really goes a long way towards eliminating the firewall and helps you embrace the Zero Trust/BeyondCorp model. And BigQuery. I can't express enough how amazing BigQuery is. If you're not using GCP, it's worth going multi-cloud for BigQuery alone.

- d_silin: Consumer-grade Optane SSDs are not competitive with flash memory, simple as that. On performance and write endurance, flash-based SSDs are "good enough", while also much better on cost-per-GB vs Optane. This reduces available market for Optane to gaming enthusiasts and similar customers - a very small slice of the total PC market. Now, for enterprise markets, best-in-class performance is always in demand, and the product can be (over)priced much higher than on the PC market.

- ckiehl: Things I now believe, which past me would've squabbled with: Typed languages are better when you're working on a team of people with various experience levels. Clever code isn't usually good code. Clarity trumps all other concerns. Designing scalable systems when you don't need to makes you a bad engineer. In general, RDBMS > NoSql.

- Mary Catherine Bateson: It turns out that the Greek religious system is a way of translating what you know about your sisters, and your cousins, and your aunts into knowledge about what’s happening to the weather, the climate, the crops, and international relations, all sorts of things. A metaphor is always a framework for thinking, using knowledge of this to think about that. Religion is an adaptive tool, among other things. It is a form of analogic thinking.

- General John Murray: When you are defending against a drone swarm, a human may be required to make that first decision, but I am just not sure any human can keep up. How much human involvement do you actually need when you are [making] nonlethal decisions from a human standpoint?

- berthub: So in the BioNTech/Pfizer vaccine, every U has been replaced by 1-methyl-3’-pseudouridylyl, denoted by Ψ. The really clever bit is that although this replacement Ψ placates (calms) our immune system, it is accepted as a normal U by relevant parts of the cell. In computer security we also know this trick - it sometimes is possible to transmit a slightly corrupted version of a message that confuses firewalls and security solutions, but that is still accepted by the backend servers - which can then get hacked.

- Jakob: At the core of these machines are spiked cylinders that determine which notes to play. Basically, a program stored in read-only memory, at a level of expression rather far from the musical notation used to describe the original piece of music...The process of going from a piece of music to the grid definitely counts as programming for me. It is a rather complicated transformation from a specification to an implementation in something that is probably best seen as an analogy to microcode or very long instruction word-style processors.

- @gregisenberg: A startup idea isn't "one" idea. It's 10,000 ideas, 100,000 decisions and 1,000,000 headaches.

- Neil Thompson: When you look at 3D (three-dimensional) integration, there are some near-term gains that are available. But heat-dissipation problems get worse when you place things on top of each other. It seems much more likely that this will turn out to be similar to what happened with processor cores. When multicore processors appeared, the promise was to keep doubling the number of cores. Initially we got an increase, and then got diminishing returns.

- Ravi Subramanian: For some applications, memory bandwidth is limiting growth. One of the key reasons for the growth of specialized processors, as well as in-memory (or near-memory) computer architectures, is to directly address the limitations of traditional von Neumann architectures. This is especially the case when so much energy is spent moving data between processors and memory versus energy spent on actual compute.

- @atlasobscura: Did your college math textbooks have moving parts? From 1524 on, a famous German cosmography book came with 5 volvelles! Peter Apian (later knighted for a different book) opted for a novel, tactile-visual teaching style for all earthly and heavenly measurements. The first 3 diagrams do all sorts of things: the boat-shaped dial relates polar height to the horizon. The second dial is static, but requires an index string to calculate the position of the sun in the zodiac; the third tells time, here with the help of an original lead weight.

- Akin: Design is based on requirements. There's no justification for designing something one bit "better" than the requirements dictate.

- Todd Younkin: Hyperdimensional, or HD, computing looks to tackle the information explosion facing us in the years ahead by emulating the power of the human brain in silicon. To do that, hyperdimensional computing employs much larger data sizes. Instead of 32- or 64-bit computing, an HD approach would have data containing 10,000 bits or more.

- chubot: Summary: scale has at least 2 different meanings. Scaling in resources doesn't really mean you need Kubernetes. Scaling in terms of workload diversity is a better use case for it.

- warent: An app called Robinhood, named after the archetype of a man who took from the rich and gave to the poor, now blocking people from taking from the rich. I hope the irony is not lost on the general public.

- Charles Leiserson: Let's get real about investing in performance engineering. We can't just leave it to the technologists to give us more performance every year. Moore's Law made it so they didn't have to worry about that so much, but the wheel is turning.

- Andrea Goldsmith: The next generation of wireless networks needs to support a much broader range of applications. The goal of each generation of cellular has always been getting to higher data rates, but what we're looking at now are low-latency applications like autonomous driving, and networks so far have not really put hard latency constraints into their design criteria.

- linuxftw: I think the problem boils down to 'product' vs 'project.' Elasticsearch is very much a product, it's owned by a company, not a foundation. FOSS developers should contribute to projects and not products. Non-copyleft licenses seem to just be code for corporations to build upon, providing them free labor while getting little in return. At least with the GPL, you are getting a promise that they will make available their sources. Consider carefully your expectations when you license your software.

- jacobr1: we migrated to k8s not because we needed better scaling (EC2 auto scaling groups were working reasonably well for us) but because we kept inventing our own way to do rolling deploys or run scheduled jobs, and had a variety of ways to store secrets. On top of that developers were increasingly running their own containers with docker-compose to test services talking to each other. We migrated to k8s to A) have a way to standardize how to run containerized builds and get the benefit of "it works on my laptop" matching how it works in production (at least functionally) and B) have a common set of patterns for managing deployed software.

- klomparce: My point of discussion is: as organization's data needs grow over time, obviously there's no single solution for every use case, so there's a need of composition of different technologies, to handle the different workloads and access patterns. But is it possible to compose these systems together with a unifying, declarative interface for reading and writing data, without having to worry about them becoming inconsistent with each other, and also not putting that burden on the application that is using these systems?

- Natalie Silvanovich: investigated the signalling state machines of seven video conferencing applications and found five vulnerabilities that could allow a caller device to force a callee device to transmit audio or video data. All these vulnerabilities have since been fixed. It is not clear why this is such a common problem, but a lack of awareness of these types of bugs as well as unnecessary complexity in signalling state machines is likely a factor. Signalling state machines are a concerning and under-investigated attack surface of video conferencing applications, and it is likely that more problems will be found with further research.

- UseStrict: We had an (admittedly more complex) monolith application for customer contracts and billing. It wasn't ideal, and was getting long in the tooth (think Perl Catalyst and jQuery), so the powers that be wanted to build a new service. But instead of decomposing the monolith into a few more loosely integrated services, they went way overboard with 20+ microservices, every DB technology imaginable (Oracle RDBMS, Mongo, MariaDB), a full message bus via RabbitMQ, and some crazy AWS orchestration to manage it all. What could've been an effort to split the existing service into manageable smaller services and rewrite components as needed turned into a multi-year ground-up effort. When I left they were nowhere near production ready, with significant technical debt and code rot from already years out of date libraries and practices.

- fishtoaster: I've talked to a number of bootstrapped and non-profit companies who are all-in on cloud and I think there are a few use-cases you're missing beyond just "we value dev velocity over cost savings." The biggest one is ease of scaling vs something like colocation. I talked to a non-profit with incredibly spiky traffic based around whenever they get mentioned in the news. Since every dollar matters for them, being able to scale down to a minimal infrastructure between spikes is key to their survival. Another company I talked to has traffic that's reliably 8x larger during US business hours vs night time and uses both autoscaling and on-demand services (dynamodb, aurora serverless) to pay ~1/3 of what they'd have to if they needed to keep that 8x capacity online all the time.

- bane: And that's it. I know there are teams that go all in. But for the dozen or so teams I've personally interacted with this is it. The rest of the stack is usually something stuffed into an EC2 instance instead of using an AWS version and it comes down to one thing: the difficulty of estimating pricing for those pieces. EC2 instances are drop-dead simple to price out 6 months, 12 months or longer. Amazon is probably leaving billions on the table every year because nobody can figure out how to price things so their department can make their yearly budget requests. The one time somebody tries to use some managed service that goes over budget by 3000%, and the after action figures out that it would have been within the budget by using EC2, they just do that instead -- even though it increases the staff cost and maintenance complexity. In fact just this past week a team was looking at using SageMaker in an effort to go all "cloud native", took one look at the pricing sheet and noped right back to Jupyter and scikit-learn in a few EC2 instances. An entirely different group I'm working with is evaluating cloud management tools and most of them just simplify provisioning EC2 instances and tracking instance costs. They really don't do much for tracking costs from almost any of the other services.
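fishtoaster's "~1/3" figure is easy to sanity-check with capacity-hours. This is back-of-the-envelope arithmetic, not anyone's actual bill: it assumes a baseline of 1 capacity unit, 8 units for 10 business hours on each of 5 weekdays, the same unit price for both approaches, and instant scale-down off-peak.

```python
# Back-of-the-envelope: autoscaled capacity-hours vs. keeping peak capacity online.
# Assumptions (not from the quote): baseline = 1 unit, peak = 8 units for
# 10 business hours on 5 weekdays, identical unit price, instant scale-down.
HOURS_PER_WEEK = 24 * 7
PEAK_HOURS_PER_WEEK = 10 * 5
PEAK_MULTIPLIER = 8


def autoscaled_cost_ratio(peak_hours: int = PEAK_HOURS_PER_WEEK,
                          multiplier: float = PEAK_MULTIPLIER,
                          hours: int = HOURS_PER_WEEK) -> float:
    """Capacity-hours paid when autoscaling, divided by capacity-hours paid
    when peak capacity stays online all week."""
    off_peak_hours = hours - peak_hours
    autoscaled = peak_hours * multiplier + off_peak_hours * 1
    always_on = hours * multiplier
    return autoscaled / always_on


if __name__ == "__main__":
    # (50 * 8 + 118 * 1) / (168 * 8) = 518 / 1344
    print(f"{autoscaled_cost_ratio():.2f}")  # 0.39
```

Under these assumptions the autoscaled bill is about 39% of the always-on bill, close to the ~1/3 quoted; a shorter peak window or a lower night-time floor pushes it lower.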

Useful Stuff:

- Scalability doesn’t matter—until it does. Then it’s not a good problem to have. It’s not a glorious opportunity for iteration. It’s just a problem and sometimes those problems really matter.

- @stilkov: Yesterday, the German state I live in (NRW) launched its website for vaccination registration. Predictably, it broke down. After all, there’s no way for a poor country like Germany to create a website that can handle 700 requests/second. That’s black magic.

- @manuel_zapf: Similar to Bavaria where the online schooling platform mebis kept breaking down, and the official recommendation was (still is?) to stagger usage. With the number of students and time of usage pretty much exactly known, this is the simplest case to scale and yet we fail.

- Locally, Kaiser Permanente made people wait on hold for hours and hours to schedule a COVID vaccine shot—or learn they weren’t eligible. Why not a simple UI to do all the obvious things? Apparently their system couldn’t handle the load. We’ve known vaccines were coming for a year.

- Also Why Parler Can’t Rebuild a Scalable Cloud Service from Scratch. Also also What went wrong with America’s $44 million vaccine data system?

- Everyone loves big hardware. The Next Gen Database Servers Powering Let's Encrypt. Let's Encrypt bought a new database server:

- 225M sites with a renewal period of 90 days. The average is low, but we don’t know the peak.

- 2U, 2x AMD EPYC 7542, 64 cores / 128 threads, 2TB 3200MT/s, 24x 6.4TB Intel P4610 NVMe SSD 3200/3200 MB/s read/write.

- Before the upgrade, we turned around the median API request in ~90 ms. The upgrade decimated that metric to ~9 ms!

- The new AMD EPYC CPUs sit at about 25%; the old ones sat at 90%. The average query response time (from INFORMATION_SCHEMA) used to be ~0.45ms. Queries now average three times faster, about 0.15ms.

- How much did it cost? They didn’t say. On the Dell site this server would be in the $200k range. Many on HN said in reality it would be far less.

- What would such a system look like in the olden days? dan-robertson: For a comparison, you could get a 64 processor, 256Gb ram Sun Starfire around 20 years ago[1]. Wikipedia claims these cost well over a million dollars ($1.5 million in 2021 dollars). This machine was enormous (bigger than a rack), would have had more non-uniform memory access to deal with, and the processors were clocked at something like 250-650MHz. It weighed 2000lbs, and was 38 inches wide, or basically 2 full racks.

- A natural question these days is what would this cost in the cloud? jeffbee: That's the wrong way to think about the cloud. A better way to think about it would be "how much database traffic (and storage) can I serve from Cloud Whatever for $xxx". Then you need to think about what your realistic effective utilization would be. This server has 153600 GB of raw storage. That kind of storage would cost you $46000 (retail) in Cloud Spanner every month, but I doubt that's the right comparison. The right math would probably be that they have 250 million customers and perhaps 1KB of real information per customer. Now the question becomes why you would ever buy 24×6400 GB of flash memory to store this scale of data.

- tanelpoder: Also worth noting that scalability != efficiency. With enough NVMe drives, a single server can do millions of IOPS and scan data at over 100 GB/s. A single PCIe 4.0 x4 SSD on my machine can do large I/Os at 6.8 GB/s rate, so 16 of them (with 4 x quad SSD adapter cards) in a 2-socket EPYC machine can do over 100 GB/s. You may need clusters, duplicated systems, replication, etc for resiliency reasons of course, but a single modern machine with lots of memory channels per CPU and PCIe 4.0 can achieve ridiculous throughput...
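The numbers in this section can be reproduced with a few lines of arithmetic. The $0.30 per GB-month storage rate is an assumption about Cloud Spanner's 2021 list price, chosen because it reproduces jeffbee's ~$46,000 figure; the other inputs come straight from the quotes above. Note that the 225M-sites figure only yields an average rate; as the post says, the peak is unknown.

```python
# Back-of-the-envelope checks for the Let's Encrypt numbers quoted above.
SECONDS_PER_DAY = 86_400


def avg_issuance_per_second(sites: int, renewal_days: int) -> float:
    """Average rate if every site renews exactly once per renewal period."""
    return sites / (renewal_days * SECONDS_PER_DAY)


def raw_storage_gb(drives: int, gb_per_drive: int) -> int:
    return drives * gb_per_drive


def monthly_storage_usd(gb: float, usd_per_gb_month: float) -> float:
    return gb * usd_per_gb_month


def aggregate_read_gb_per_s(drives: int, gb_per_s_per_drive: float) -> float:
    """Ideal sequential throughput, ignoring PCIe lane, memory, and CPU limits."""
    return drives * gb_per_s_per_drive


if __name__ == "__main__":
    print(f"{avg_issuance_per_second(225_000_000, 90):.1f} certs/s on average")
    print(raw_storage_gb(24, 6_400), "GB raw")  # 153600
    print(f"${monthly_storage_usd(153_600, 0.30):,.0f}/month (assumed $0.30/GB-month)")
    print(f"{aggregate_read_gb_per_s(16, 6.8):.1f} GB/s")
```

About 29 issuances per second on average is why the post calls the average low; the hardware is sized for peaks and query latency, not for that mean.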

- When you run a scalable service on top of services that don't scale, mayhem ensues. Slack’s Outage on January 4th 2021

- The spike of load from the simultaneous provisioning of so many instances under suboptimal network conditions meant that provision-service hit two separate resource bottlenecks (the most significant one was the Linux open files limit, but we also exceeded an AWS quota limit)

- While we were repairing provision-service, we were still under-capacity for our web tier because the scale-up was not working as expected. We had created a large number of instances, but most of them were not fully provisioned and were not serving. The large number of broken instances caused us to hit our pre-configured autoscaling-group size limits, which determine the maximum number of instances in our web tier.

- A little architectural background is needed here: Slack started life, not so many years ago, with everything running in one AWS account. As we’ve grown in size, complexity, and number of engineers we’ve moved away from that architecture towards running services in separate accounts and in dedicated Virtual Private Clouds (VPCs). This gives us more isolation between different services, and means that we can control operator privileges with much more granularity. We use AWS Transit Gateways (TGWs) as hubs to link our VPCs.

- On January 4th, one of our Transit Gateways became overloaded. The TGWs are managed by AWS and are intended to scale transparently to us. However, Slack’s annual traffic pattern is a little unusual: Traffic is lower over the holidays, as everyone disconnects from work (good job on the work-life balance, Slack users!). On the first Monday back, client caches are cold and clients pull down more data than usual on their first connection to Slack. We go from our quietest time of the whole year to one of our biggest days quite literally overnight.

- AWS assures us that they are reviewing the TGW scaling algorithms for large packet-per-second increases as part of their post-incident process. We’ve also set ourselves a reminder (a Slack reminder, of course) to request a preemptive upscaling of our TGWs at the end of the next holiday season.

- Also Building the Next Evolution of Cloud Networks at Slack
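Slack's write-up names the Linux open files limit as the most significant bottleneck in provision-service. A preflight check like the one below, using Python's standard `resource` module (Unix only), makes that limit visible and raises the soft limit where the hard limit allows. It is a minimal sketch, not Slack's fix, which the post does not describe in code; `ensure_nofile_limit` and the 65,536 target are hypothetical, and a systemd unit's `LimitNOFILE=` or `/etc/security/limits.conf` is the more usual place to set this.

```python
import resource  # Unix-only standard library module


def ensure_nofile_limit(desired: int) -> tuple[int, int]:
    """Raise the soft open-files limit toward `desired`, never above the hard limit.

    Returns the (soft, hard) RLIMIT_NOFILE values in effect afterwards."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    # Unprivileged processes may raise the soft limit only up to the hard limit.
    ceiling = desired if hard == resource.RLIM_INFINITY else min(desired, hard)
    if soft != resource.RLIM_INFINITY and soft < ceiling:
        try:
            resource.setrlimit(resource.RLIMIT_NOFILE, (ceiling, hard))
        except (ValueError, OSError):
            pass  # the OS refused (e.g. a system-wide cap); keep the current limit
    return resource.getrlimit(resource.RLIMIT_NOFILE)


if __name__ == "__main__":
    before = resource.getrlimit(resource.RLIMIT_NOFILE)
    after = ensure_nofile_limit(65_536)  # hypothetical target for a busy service
    print(f"RLIMIT_NOFILE before={before} after={after}")
```

This addresses only the first bottleneck; it does nothing for the second failure mode above, where half-provisioned instances counted against the autoscaling group's maximum size.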

- GCP Outpaces Azure, AWS in the 2021 Cloud Report:

- GCP delivered the most throughput (i.e. the fastest processing rates) on 4/4 of the Cloud Report’s throughput benchmarks

- AWS has performed best in network latency for three years running.

- Azure’s ultra-disks are worth the money

- GCP’s general purpose disk matched performance of advanced disk offerings from AWS and Azure

- In our benchmark simulation of real-world OLTP workloads, cheaper machines with general purpose disks won for both AWS and GCP.

- Also Google Cloud vs AWS in 2021 (Comparing the Giants). A very detailed comparison.

- In Good Will Hunting Matt Damon said: You wasted $150,000 on an education you coulda got for $1.50 in late fees at the public library. The modern equivalent is an Entire Computer Science Curriculum in 1000 YouTube Videos. Of course, your education as a programmer will still be on-the-job training (OJT).

- It should be noted you do not have to create a complex workflow with AWS. All the work can be done with a couple of in-line function calls. Moving my serverless project to Ruby on Rails:

- When the building blocks are too simple, the complexity moves into the interaction between the blocks.

- the interactions between the serverless blocks happen outside my application. A lambda publishes a message to SNS, another one picks it up and writes something to DynamoDB, the third one takes that new record and sends an email….

- I was clearly not enjoying the serverless. So I decided to rewrite it. After all, it is a side project I’m doing for fun. The tech stack of choice — Ruby on Rails. It was like driving a Tesla after years of making my own scrappy cars.

- Serverless is like a black hole. It promised exciting adventures, but gravity sucked me in and I spent most of my efforts dealing with its complexity, instead of focusing on my product.

- Also Serverless applications are Hyperobjects… and it really matters: Too many times, I’ve heard the response “I can’t visualise the system” or “I don’t know what it looks like” when I talk about serverless applications. I genuinely find this frustrating because most people couldn’t tell me what their applications really look like. What they mean is that they can’t visualise the “architecture”, because they are so used to putting code on a “server” and having some form of data layer (or layers) that they don’t see the actual software application as something that needs visualising, when in fact it is just as complex, if not more so than many serverless systems.

- Rationalism? The Reality of Multi-Cloud. Descartes failed to doubt a few key precepts, but the conclusion is sound: for most products and teams, the result of such analysis would be opting for either strategy #1 (single cloud for all jobs) or strategy #2 (right cloud for the job).

- How chess.com scaled their database in the time of COVID and Queen's Gambit. Your legacy database is outgrowing itself.

- 4M unique daily users and over 7B queries hitting all our MySQL databases combined. Went from under 1M unique users a day a year ago to 1.3M in March, to over 4M at the moment, with over 8M games played each day

- We do use caching, extensively. If we didn’t we wouldn’t survive a day. We do use Redis, often pushing it to its extremes. We’ve tested MongoDB and Vitess, but they didn’t do it for us.

- We benchmark almost anything we’ve never done before, and see how it turns out, before actually implementing it. We know these work for us.

- We expected to see a drop in activity after interest in the show faded, but we're still hitting records day after day. Crazy.

- we have between 20TB and 25TB of data, combined across all MySQL databases, and somewhere in the region of 60B to 80B records.

- The biggest problem we were facing at that point in time was that altering almost any table required taking half the hosts out of rotation, running the alter there, putting them back in rotation, altering the other half, and putting those back in. We had to do it off-peak, as taking half the hosts out during the peak time would probably result in the other half crashing. As the website was growing, old features got new improvements, so we had to run alters a lot (if you’re in the business, you know how it goes).

- That made our goal clear: decrease number of writes going to the main cluster, and do it as quickly as possible!

- It took just one week to move logs into their own dedicated database, running on two hosts, which gave us some breathing space, as logs had by far the most writes. One more month to move games (which was oh so scary, as any mistake there would be a disaster, considering that’s the whole point of the website), and puzzles took over 2 months. After these, we were well under 80% of the replication capacity in the peak, which meant we had time to regroup, and plan upcoming projects a bit better.

- One of things that has really helped our DB scale is the fact that we’ve moved a lot of sorting, merging, filtering from the DB into the code itself.

- dbmikus: If you have 1m clients making DB requests and are hitting performance constraints you could do any of: 1. Add more ram, CPU, etc to the DB host 2. Create DB read replicas for higher read volume 3. Shard the DB (reads and writes) 4. Offload logic to stateless clients, who you can easily scale horizontally So option 4 is reasonable if you don't want to or can't do the other options.

- ikonic89: I can break it down quickly, here: - Scaling web servers is much easier. Queries are (mostly, on a single host) executed one after another, so if one is slow, you suddenly have a queue of queries waiting to be executed. The goal is to execute them as quickly as possible. - 2 separate queries, both using perfect indexes, will be much faster (insanely faster on this scale) than 1 query with a join. So, we just join them in code. - Sorting is often a problem, since in MySQL only one index can be used per query. - No foreign keys increases insert/update query speeds, and decreases server load. - Etc, etc.
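ikonic89's "join them in code" point, together with moving sorting out of the database, can be illustrated with a small sketch. SQLite stands in for MySQL, and the `users`/`games` schema and `recent_white_games` function are hypothetical; chess.com's actual tables and code are not described in the post. Whether two indexed queries beat one join depends on the engine, the indexes, and the load, so treat this as a pattern, not a benchmark.

```python
import sqlite3

# Hypothetical schema and data; SQLite stands in for MySQL here.
SCHEMA = """
CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT NOT NULL);
CREATE TABLE games (id INTEGER PRIMARY KEY, white_id INTEGER NOT NULL,
                    black_id INTEGER NOT NULL);
CREATE INDEX games_by_white ON games (white_id);
INSERT INTO users VALUES (1, 'alice'), (2, 'bob'), (3, 'carol');
INSERT INTO games VALUES (10, 1, 2), (11, 3, 1), (12, 2, 3), (13, 1, 3);
"""


def recent_white_games(conn: sqlite3.Connection, player_id: int) -> list[tuple[int, str, str]]:
    """Join and sort in application code instead of in SQL.

    Query 1 uses the games_by_white index; query 2 is a primary-key lookup.
    Neither query has a JOIN or an ORDER BY."""
    games = conn.execute(
        "SELECT id, white_id, black_id FROM games WHERE white_id = ?",
        (player_id,),
    ).fetchall()
    if not games:
        return []
    user_ids = sorted({uid for _, white, black in games for uid in (white, black)})
    placeholders = ",".join("?" * len(user_ids))  # only "?" markers; values are bound
    names = dict(conn.execute(
        f"SELECT id, username FROM users WHERE id IN ({placeholders})", user_ids
    ).fetchall())
    # The "join": look up each player's name in a dict built from query 2.
    rows = [(gid, names[white], names[black]) for gid, white, black in games]
    # The "ORDER BY id DESC", done in code.
    return sorted(rows, key=lambda row: row[0], reverse=True)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    print(recent_white_games(conn, 1))  # [(13, 'alice', 'carol'), (10, 'alice', 'bob')]
```

The trade-off: each query does less work on the database, and the merge and sort run on horizontally scalable app servers, at the cost of an extra round trip and more application code.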

- What Joel Goldberg learned in 45 years in the software industry:

- Beware of the Curse of Knowledge. When you know something it is almost impossible to imagine what it is like not to know that thing. This is the curse of knowledge, and it is the root of countless misunderstandings and inefficiencies. Smart people who are comfortable with complexity can be especially prone to it!

- Focus on the Fundamentals. Here are six fundamentals that will continue to be relevant for a long time: Teamwork; Trust; Communication; Seek Consensus; Automated Testing; Clean, understandable, and navigable code and design.

- Simplicity.

- Seek First to Understand.

- Beware Lock-in.

- Be Honest and Acknowledge When You Don’t Fit the Role.

- Also My Engineering Axioms.

- People are still trying to come to terms with entropy. Order is a function of work. Stop the work and chaos reigns. This is why good must always strive to overcome evil. Nothing good ever stays good without work. Software is drowning the world. The many lies about reducing complexity part 2: Cloud.

- Jeremy Burton on A Cloud-First Look Ahead for 2021.

- Cloud first means all your engineers can work on the latest release all the time. It’s a big deal because you don’t have many versions of a product out with your customers, you have one. Everyone is present on the latest release.

- Because it’s an online service you can get very close to your customers. You can see who uses a feature, how often, and for how long. That can be very humbling. You don’t want anything to get in the way of brutal honest feedback from the customer.

- CI/CD. Start work on something Monday and release it Friday. It’s exciting to get that instant gratification from customer feedback. It’s very motivating.

- Early in a startup you don’t know what the customer wants so you have to change directions quickly. You can change very quickly in a cloud first / saas product and set yourself up for success based on what the customer wants instead of what the last head of sales wanted.

- Say no more than you say yes. Keep roadmaps short; a couple of quarters at most. Focus on two or three big features (epics). This communicates to the team what is important. Focus the team on what matters most. Prevents feature creep. Keeps focus on what the customer wants. Unless it comes from the customer it doesn’t go on the roadmap. In a cloud first company you can get closer to your customer.

- Product marketing is dying. It’s no longer authentic. The content isn’t deep enough. Buyers are now more educated. Goal is to produce high quality technical content. The model is a news room setup where freelance and internal people write high quality technical content. Push the content out so you educate the buyer, establish credibility, and earn the right for their business. The world is becoming inverted. Go all digital with domain experts producing high quality content first instead of sales materials, power points, PDFs, and value props to support the sales force. Establish trust through education. Then you earn the right to sell.

- The world of deals for high value license fees is over. The world now is do a deal for $5k, prove out value in the product, and have the customer come back and buy more. If they like the product they’ll come back, if they don’t they won’t. The nice thing is you can tap into smaller teams, rather than selling into the top of an organization.

- Set up a usage-based pricing model that makes sense for customers. Buy a little bit now and if you like it, come back for more.

- With cloud first you don’t need to build much of the stack—datacenters, database, etc—so developers can work on the application, which is what customers actually care about. You can go fast. You can get close to your customers and see what they actually think about your product. There’s no running away from your customer if the average session time is declining and your churn is increasing. You can respond quickly to problems.

- Here's a simple explanation of how the massive SolarWinds hack happened. As someone who has worked on more than their fair share of build systems, I was wondering how a hacker could compromise a SolarWinds build server and get away with it. Their build process must be seriously broken. For any kind of sensitive product (security, privacy, reliability, etc): 1) the build server should only pick up changes that have been approved against some sort of change request id or bug id. Random changes should never be built. 2) Every line of code should be reviewed by three sets of eyes before it’s merged into whatever you are calling the production branch. These code reviews can be perspective-based reviews, looking for security flaws, performance issues, memory leaks, lock problems, or whatever. Each reviewer should be from a different group. That way if one group is compromised, you have built-in redundancy. 3) Before distribution the image should be verified against a manifest detailing exactly what should be in the build. A digital signature says nothing about what’s inside a package. If you can’t audit and verify the contents of a package, you are mass distributing a weapon, not a product.
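Point 3 is the easiest of the three to mechanize. A minimal sketch, assuming the manifest (relative path to SHA-256 digest) is produced from reviewed source by a separate, trusted build rather than by the build server being audited; the function names are hypothetical. It reports files that are missing, unexpected, or modified, which a signature check alone does not.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_file(path: Path) -> str:
    """Hash a file in 64 KiB chunks so large build artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_against_manifest(root: Path, manifest: dict[str, str]) -> list[str]:
    """Compare every file under `root` with a manifest of relative path -> SHA-256.

    Returns a list of problems; an empty list means the contents match exactly."""
    actual = {p.relative_to(root).as_posix(): p for p in root.rglob("*") if p.is_file()}
    problems = [f"missing: {rel}" for rel in sorted(manifest.keys() - actual.keys())]
    problems += [f"unexpected: {rel}" for rel in sorted(actual.keys() - manifest.keys())]
    problems += [
        f"modified: {rel}"
        for rel in sorted(manifest.keys() & actual.keys())
        if sha256_file(actual[rel]) != manifest[rel]
    ]
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "app.dll").write_bytes(b"reviewed build output")
        manifest = {"app.dll": hashlib.sha256(b"reviewed build output").hexdigest()}
        print(verify_against_manifest(root, manifest))  # []
        (root / "app.dll").write_bytes(b"tampered build output")
        print(verify_against_manifest(root, manifest))  # ['modified: app.dll']
```

Hashes only help if the manifest and the artifacts come from independent pipelines; a compromised build server that generates both will verify cleanly.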

- Organize your next company like a digital insurgency. Ilkka Paananen – Superpowering Teams.

- Supercell, one of the most successful mobile game developers in the world, is based in Finland. Supercell has built hugely successful games like Clash of Clans and Clash Royale, which have each reached over a hundred million daily active users.

- The whole idea about Supercell, and what is at the core of our culture, is this idea of small and independent teams that we call Cells, and these independent game teams are sacred inside Supercell. The way to think about them is as smaller startups within the greater company.

- let's look at the best games that our company has put out. What is the common denominator of these games? One is that they had really amazing people and great teams behind the games, but interestingly, the other thing was that most of these games somehow had nothing to do with all of these fantastic processes that we had designed. The usual story was that we just didn't have anything else for these guys to do, so there are some that are sitting somewhere in the corner of the office and they were just doing whatever they wanted to do. There are some flying under the radar, so to speak, and then the next thing you know, this amazing game comes out. Then I start to think that "Wow, these amazing games may come out not because of me, or the process that I put together. They come out despite all the things that we've done here."

- then they took the idea further and, instead of thinking about freedom and responsibility of individuals, they started to talk about freedom and responsibility of teams. That led to the idea of Supercell.

- we call it the passion for excellence. Just meaning that we try to identify how high is the bar for this individual? Does this individual know what great looks like? That is so important that they really understand and you can set the bar as high as possible. That is the basis for everything. Other thing is we call them proactive doers, meaning is this individual able to get to that bar and figure out a way? The third criterion is that you just have to be great to work with. Doesn't mean that they have to be friends or anything outside work. We like people to be humble and of course trustworthy and those types of things.

- If there's one process that actually does make a lot of sense, it's probably the recruitment process. In that process, you want to accumulate all the learnings through the years that you got. The number one lesson that they learned over the years is, don't try to shortcut the process. Every single time they've tried to shortcut it, in one way or another, it has come back to bite us.

- One of the best pieces of advice that I've ever gotten about recruiting is that if you can try to imagine the imaginary average quality of a person at the company and then there's some new candidate who is about to come in, ask yourself the question that "Is this new person, is she or he going to raise the average quality of the company?"

- This probably is the most difficult part of my and our jobs, just putting together a great games team. There's so much that goes into it. I wish there was a formula for how you put it together, but I think it's quite a bit of just trial and error. What doesn't work in our experience is just putting together a random group of absolutely great individuals. In a great team, the core team members complement each other.

- once you have found a great team, the bar for redistributing that team should be very high. That said, there's a lot of value in rotating people between teams because that's how you maximize the learning in an organization. We are huge believers in this idea of these independent teams, but the flip side of the coin is that if you have siloed teams and there are no shared learnings, and no synergies between any of the teams, that isn't great either. The way to get those learnings going is actually rotating some of the team members.

- Think about the three most recent games that they've done: Boom Beach, Clash Royale, and Brawl Stars. Every one of those three games actually faced quite big internal opposition, and there were a lot of people at Supercell who thought that we shouldn't ever develop those games, shouldn't continue to develop them, or shouldn't release them.

- we've always taken the other path, just to trust the team, let's have them release the game, and let's see what happens.

- one in 10 games that do make it out

- It truly is their call to either kill the game or release the game. That actually makes them care so much, and this huge feeling of responsibility comes in. I think that probably is the secret. If it would be my call, for example, to either release or kill the game, then the responsibility would shift from a team to me and then they probably wouldn't care as much. But because it's completely their responsibility, they really, really care.

- we felt that it's really important to promote this culture of risk-taking and that's why we also "Celebrate" the failure, celebrate the learnings coming from those failures.

- The Shadow Request: Troubleshooting OkCupid’s First GraphQL Release.

- we released what we called The Shadow Request. On our target page, the user loaded the page’s data from the REST API as normal and displayed the page. Then, the user loaded the same data from GraphQL, measured that call’s timing, and discarded the data.

- we discovered that our first release of the GraphQL API took about double the time — 1200ms versus 600ms — of the REST API.

- The first thing that we realized was that I accidentally released a build with the NODE_ENV set to development.

- We were also using an unoptimized Docker base image for this initial deploy.

- We then pluck out the user IDs and call our batch API endpoint, getUsers. That change sliced almost 275ms off the call.

- we decided to just try serving it from www.okcupid.com/graphql, and the 300ms [CORS overhead] vanished

- Shouldn’t the REST API be faster now too?
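The 275ms win is the classic N+1 fix: instead of fetching each user separately, collect the IDs and make one batch call. A minimal sketch; `UserService`, `get_user`, and `get_users` are hypothetical stand-ins (only the endpoint name getUsers comes from the post), and a round-trip counter takes the place of real network latency. In a Node GraphQL server this pattern is commonly implemented with the DataLoader library.

```python
from typing import Iterable


class UserService:
    """Hypothetical backend that counts network round trips."""

    def __init__(self, users: dict[int, str]):
        self.users = users
        self.round_trips = 0

    def get_user(self, user_id: int) -> str:
        self.round_trips += 1
        return self.users[user_id]

    def get_users(self, user_ids: Iterable[int]) -> dict[int, str]:
        """Batch endpoint: one round trip for any number of IDs."""
        self.round_trips += 1
        return {uid: self.users[uid] for uid in user_ids}


def resolve_one_by_one(svc: UserService, ids: list[int]) -> list[str]:
    # N+1 style: one call per ID, including duplicates.
    return [svc.get_user(uid) for uid in ids]


def resolve_batched(svc: UserService, ids: list[int]) -> list[str]:
    # Pluck out the unique IDs (order preserved), make one call, then re-expand.
    unique_ids = list(dict.fromkeys(ids))
    by_id = svc.get_users(unique_ids)
    return [by_id[uid] for uid in ids]


if __name__ == "__main__":
    users = {1: "ana", 2: "ben", 3: "cy"}
    ids = [1, 2, 3, 2, 1]
    slow, fast = UserService(users), UserService(users)
    assert resolve_one_by_one(slow, ids) == resolve_batched(fast, ids)
    print(slow.round_trips, fast.round_trips)  # 5 1
```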

- Moving 100,000 customers from co-lo data centres to the cloud. With zero downtime. Excellent story of the herculean effort needed to migrate to the cloud and how the architecture looks different in the cloud.

- How We Reduced Skyrocketing CPU Usage. The upshot: move to Python 3. An extensive read on Python revealed that there is an inherent issue with the way subprocesses are forked in Python 2.

- With security generally FUBARed in the industry, here’s an example of what actually considering security in your design would look like: Security Overview of AWS Lambda. Here’s a TLDR version. Some fun facts:

- Runs on EC2 Nitro ⇒ Nitro Hypervisor, Nitro Security Chip, Nitro Cards and Enclaves

- All payloads are queued for processing in an SQS queue.

- Queued events are retrieved in batches by Lambda’s poller fleet. The poller fleet is a group of EC2 instances whose purpose is to process queued event invocations which have not yet been processed.

- The Lambda service is split into the control plane and the data plane. Each plane serves a distinct purpose in the service. The control plane provides the management APIs

- The data plane is Lambda's Invoke API that triggers the invocation of Lambda functions. When a Lambda function is invoked, the data plane allocates an execution environment on an AWS Lambda Worker
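The asynchronous path described above (payloads queued first, then pulled in batches by a poller fleet that hands them to the data plane) can be modeled in a few lines. This is a toy, in-process sketch: a `deque` stands in for SQS, a single loop stands in for the EC2 poller fleet, and the class and function names are hypothetical. The batch size of 10 mirrors the SQS ReceiveMessage per-call maximum; Lambda's internal batch size is not stated in the source.

```python
from collections import deque
from typing import Callable


class AsyncInvokeQueue:
    """Stand-in for the queue that holds asynchronous invocation payloads."""

    def __init__(self) -> None:
        self._events: deque[dict] = deque()

    def enqueue(self, payload: dict) -> None:
        # The caller is acknowledged once the payload is queued, not when it runs.
        self._events.append(payload)

    def receive_batch(self, max_batch: int) -> list[dict]:
        batch = []
        while self._events and len(batch) < max_batch:
            batch.append(self._events.popleft())
        return batch


def poll_until_empty(queue: AsyncInvokeQueue,
                     invoke: Callable[[dict], None],
                     max_batch: int = 10) -> int:
    """Pull batches and hand each event to the data-plane `invoke` callback.

    Returns the number of batches retrieved."""
    batches = 0
    while batch := queue.receive_batch(max_batch):
        batches += 1
        for event in batch:
            invoke(event)
    return batches
```

Decoupling the caller from execution this way is what lets the service absorb bursts: the queue grows, and the pollers drain it at whatever rate the workers can sustain.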

- We rendered a million web pages to find out what makes the web slow: Make as few requests as you can. Number of requests matters more than number of kilobytes transferred. For the request you have to make, do them over HTTP2 or greater if you can. Strive to avoid render blocking requests when you can, prefer async loading when possible.

- After all these years we’re still worrying about connections. From 15,000 Database Connections to Under 100

- Initially, all the hypervisors/servers had a direct connection to the database. We setup a proxy that polled the database on behalf of the servers and forwarded the requests to the appropriate server. We also made it so all the services that were publishing events to the database did so via an API instead of directly inserting into the database.

- therealgaxbo: I do agree with your point, but the Stack Exchange example is slightly unfair. Although they do only have 1 primary DB server, they also have a Redis caching tier, an Elasticsearch cluster, and a custom tag engine - all of which infrastructure exists to take load off the primary DB and aid scalability.
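The fix in the connections story above is a fan-in/fan-out proxy: one poller holds the database connection and forwards each row to the server it is addressed to, instead of thousands of hypervisors each polling on their own connection. A minimal sketch, assuming an `events` table with an increasing `id` and a `server_id` column (hypothetical, not the article's schema); SQLite stands in for the real database, and appending to an in-memory inbox stands in for forwarding over the network.

```python
import sqlite3
from collections import defaultdict


class EventProxy:
    """One connection and one polling loop on behalf of many servers."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.last_id = 0  # high-water mark: only fetch rows newer than this
        self.inboxes: dict[str, list[str]] = defaultdict(list)

    def poll_once(self) -> int:
        rows = self.conn.execute(
            "SELECT id, server_id, payload FROM events WHERE id > ? ORDER BY id",
            (self.last_id,),
        ).fetchall()
        for event_id, server_id, payload in rows:
            self.inboxes[server_id].append(payload)  # "forward" to the target server
            self.last_id = event_id
        return len(rows)


def make_demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE events (id INTEGER PRIMARY KEY, server_id TEXT, payload TEXT)"
    )
    conn.executemany(
        "INSERT INTO events (server_id, payload) VALUES (?, ?)",
        [("hv-1", "start vm"), ("hv-2", "stop vm"), ("hv-1", "resize vm")],
    )
    return conn
```

The article applies the same idea on the write side, routing event publishers through an API instead of letting them insert into the database directly.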

- Not doing something is your lowest cost option. But how do you know what not to do? Machine learning. It’s not all that much different than how Facebook generates a news feed. Dropbox: How ML saves us $1.7M a year on document previews:

- some of that pre-generated content was never viewed. If we could effectively predict whether a preview will be used or not, we would save on compute and storage by only pre-warming files that we are confident will be viewed.

- we built a prediction pipeline that fetches signals relevant to a file and feeds them into a model that predicts the probability of future previews being used

- we replaced an estimated $1.7 million in annual pre-warm costs with $9,000 in ML infrastructure per year

- This is perhaps the biggest takeaway: ML for infrastructure optimization is an exciting area of investment.
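The saving comes from a gate in front of the pre-warm step. A minimal sketch, assuming the prediction pipeline has already produced a probability per file; `FileSignals`, the threshold value, and the on-demand fallback for skipped files are assumptions, not Dropbox's code. Raising the threshold skips more pre-warming but sends more previews down the slower on-demand path, so the threshold is a cost versus latency knob.

```python
from dataclasses import dataclass


@dataclass
class FileSignals:
    file_id: str
    p_preview: float  # model output: predicted probability the preview is used


def select_for_prewarm(files: list[FileSignals], threshold: float) -> list[str]:
    """Pre-warm only files whose predicted preview probability clears the threshold.

    Files below the threshold are not pre-generated; this sketch assumes their
    previews would be rendered on demand if anyone does open them."""
    return [f.file_id for f in files if f.p_preview >= threshold]


def skipped_fraction(files: list[FileSignals], threshold: float) -> float:
    """Share of pre-warm work (compute and storage) avoided at this threshold."""
    if not files:
        return 0.0
    return 1 - len(select_for_prewarm(files, threshold)) / len(files)
```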

- But it seems difficult for people to identify use cases where ML can be used to save money. setib: I work in an innovation ML-oriented lab, and we have a hard time identifying use cases with real added values.

- Then there are concerns on the ROI. spullara: This doesn't seem like enough savings to justify paying a team to maintain it.

- Datto didn’t have much luck using ML to predict hard drive failures. Predicting Hard Drive Failure with Machine Learning

- When you're all laying claim to being the next Silicon Valley, remember that what California has besides great weather is that it does not enforce non-compete agreements. Do not underestimate how much innovation is driven by this single glorious worker superpower. VMware is suing its former COO who joined competitor Nutanix.

- Partial failures are always the most difficult to diagnose and fix. Heaven and hell have always been entwined in the human soul. Evidence of collapse is everywhere. Evidence for a techno-utopia is everywhere. Few have ever recognized they are living in the middle of a collapse. Few have ever recognized they live in a golden age. Humans muddle on. A 25-Year-Old Bet Comes Due: Has Tech Destroyed Society?

- How machine learning powers Facebook’s News Feed ranking algorithm:

- Query inventory. Collect all the candidate posts. Unread bumping: fresh posts that were ranked in an earlier session but not yet seen are eligible again. Action bumping: posts already seen that have since sparked an interesting conversation may also be included.

- Score each candidate post for Juan on each prediction ($Y_{ijt}$). Each post is scored using multitask neural nets. There are many, many features ($x_{ijtc}$) that can be used to predict $Y_{ijt}$, including the type of post, embeddings (i.e., feature representations generated by deep learning models), and what the viewer tends to interact with. To calculate this for more than 1,000 posts, for each of billions of users, all in real time, we run these models for all candidate stories in parallel on multiple machines, called predictors.

- Calculate a single score out of many predictions: $V_{ijt}$. The outputs of many predictors are combined into a single score. Multiple passes are needed to save computational power and to apply rules that depend on an initial ranking score, such as content-type diversity (i.e., content types should be varied so that viewers don't see redundant content, such as multiple videos one after another). First, certain integrity processes are applied to every post. These are designed to determine which integrity detection measures, if any, need to be applied. Eligible posts are ordered by score.

- Pass 1 is the main scoring pass, where each story is scored independently and then all ~500 eligible posts are ordered by score. Pass 2 is the contextual pass. Here, contextual features, such as content-type diversity rules, are added.

- Most of the personalization happens in pass 1. We want to optimize how we combine the $Y_{ijtk}$ into $V_{ijt}$. For some, the score may be higher for likes than for commenting, as some people like to express themselves more through liking than commenting. For simplicity and tractability, we score our predictions together in a linear way, so that $V_{ijt} = w_{ijt1}Y_{ijt1} + w_{ijt2}Y_{ijt2} + \dots + w_{ijtk}Y_{ijtk}$.
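The linear combination $V_{ijt} = \sum_k w_{ijtk} Y_{ijtk}$ and the two passes can be sketched in a few lines. The prediction names, weights, and the "never two posts of the same content type in a row" rule are hypothetical stand-ins, not Facebook's actual models or rules, and pass 2 here is a simple greedy reordering chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    content_type: str
    predictions: dict[str, float]  # Y_ijtk: predicted probability of each action k


def value_score(post: Post, weights: dict[str, float]) -> float:
    """V_ijt = sum over k of w_ijtk * Y_ijtk, with per-viewer weights."""
    return sum(weights.get(k, 0.0) * y for k, y in post.predictions.items())


def rank(posts: list[Post], weights: dict[str, float]) -> list[Post]:
    # Pass 1: score each post independently, then order by score.
    ordered = sorted(posts, key=lambda p: value_score(p, weights), reverse=True)
    # Pass 2 (contextual): greedily avoid repeating a content type back to back,
    # falling back to the best remaining post when no other type is left.
    result: list[Post] = []
    remaining = ordered[:]
    while remaining:
        last_type = result[-1].content_type if result else None
        pick = next((p for p in remaining if p.content_type != last_type), remaining[0])
        result.append(pick)
        remaining.remove(pick)
    return result


weights = {"like": 1.0, "comment": 3.0}  # hypothetical viewer who values commenting
posts = [
    Post("a", "video", {"like": 0.9, "comment": 0.1}),  # V = 1.2
    Post("b", "video", {"like": 0.5, "comment": 0.2}),  # V = 1.1
    Post("c", "photo", {"like": 0.3, "comment": 0.1}),  # V = 0.6
]
print([p.post_id for p in rank(posts, weights)])  # ['a', 'c', 'b']
```

Pass 1 alone would show two videos back to back (a, b, c); the contextual pass trades a little score for variety.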

- Who Will Control the Software That Powers the Internet? There have been a lot of articles lately gnashing teeth over apps that ban users who violate their terms of service. Are you saying they should not? Worse is the conflation of apps that run on the internet with the internet itself. It's the equivalent of saying recalling cars for mechanical problems will ruin the highway system. Or not approving a toaster because it's a fire hazard would ruin the electrical system. No. They are two different things. If Twitter, Facebook, Google, Apple, etc. dropped from the face of the earth tomorrow, the internet wouldn't even notice. If you don't want to get banned from a social network don't violate the ToS, but please quit lying about the internet.

- Give me a good UI over an interleaved stream of jumbled messages any day. Improving how we deploy GitHub.

- One of the main issues that we experienced with the previous system was that deploys were tracked across a number of different messages within the Slack channel. This made it very difficult to piece together the different messages that made up a single deploy.

- A 2x increase in folks contributing to the core software exposed some problems in terms of tooling. Tooling that worked for us a year ago no longer functioned in the same capacity.

- we had a canary stage but the stage would only deploy to up to 2% of GitHub.com traffic. This meant that there were a whole slew of problems that would never get caught in the canary stage before we rolled out to 100% of production, and would have to instead start an incident and roll back.

- At the end of this project, we were able to have two canary stages. The first is at 2% and aims to capture the majority of the issues. This low percentage keeps the risk profile at a tolerable level as such a small amount of traffic would actually be impacted by an issue. A second canary stage was introduced at 20% and allows us to direct to a much larger amount of traffic while still in canary stages.

- What resulted was a state machine backed deploy system with a first party UI component. This was combined with the traditional chatops with which our developers were already familiar. Below, you can see an overview of deploys which have recently been deployed to GitHub.com. Rather than tracking down various messages in a noisy Slack channel, you can go to a consolidated UI. You can see the state machine progression mentioned above in the overview on the right.

- cytzol: Something I found surprising is that a change to the GitHub codebase will be run in canary, get deployed to production, and then merged. I would have expected the PR to be merged first before it gets served to the public, so even if you have to `git revert` and undeploy it, you still have a record of every version that was seen by actual users, even momentarily.

- bswinnerton: This is known as "GitHub Flow" (https://guides.github.com/introduction/flow/). I was pretty surprised by it when I first joined GitHub but I've grown to love it. It makes rolling back changes much faster than having to open up a revert branch, get it approved, and deploy it. Before every deploy your branch has master merged into it. There's some clever work by Hubot while you're in line to deploy to create a version of your branch that has the potential new master / main branch. If conflicts arise you fix them before it's your turn to deploy.
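The 2% canary, 20% canary, then 100% production progression GitHub describes maps naturally onto a small state machine. This is a minimal sketch under stated assumptions: the `Stage` and `Deploy` names, the boolean `healthy` signal, and the single rollback state are hypothetical, since the post doesn't describe GitHub's implementation at this level.

```python
from enum import Enum


class Stage(Enum):
    # Value is the percentage of traffic served by the new code (hypothetical model).
    CANARY_2 = 2
    CANARY_20 = 20
    PRODUCTION = 100
    ROLLED_BACK = 0


# Allowed forward transitions; any in-flight stage may roll back instead.
NEXT_STAGE = {
    Stage.CANARY_2: Stage.CANARY_20,
    Stage.CANARY_20: Stage.PRODUCTION,
}


class Deploy:
    def __init__(self, ref: str):
        self.ref = ref
        self.stage = Stage.CANARY_2
        self.history = [self.stage]  # every state visited, e.g. for a deploy UI

    def advance(self, healthy: bool) -> Stage:
        """Promote to the next stage if the current one is healthy, else roll back."""
        if self.stage in (Stage.PRODUCTION, Stage.ROLLED_BACK):
            raise ValueError(f"deploy already finished in {self.stage.name}")
        self.stage = NEXT_STAGE[self.stage] if healthy else Stage.ROLLED_BACK
        self.history.append(self.stage)
        return self.stage


good = Deploy("feature-branch")
good.advance(healthy=True)
good.advance(healthy=True)
print([s.value for s in good.history])  # [2, 20, 100]

bad = Deploy("risky-branch")
print(bad.advance(healthy=False).name)  # ROLLED_BACK
```

Keeping the full `history` on the deploy object is what lets a single UI show where every deploy is, instead of reconstructing it from scattered chat messages.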

- Akari shares a complex serverless example using Azure. Moving from servers to server(less).

- serverless for us is now a first consideration before we write any code. There are significant cost and operational benefits for a new organization or start-up to pick a cloud platform and move directly into the cloud-native space.

- know what you want to build, or more especially, know what you want your minimum viable product to be.

- don’t be too concerned about retrying or rebuilding. The architecture above was stable if costly, and did provide us with an active platform.

- we made the decision to slim down AVA and focus on some key areas around the value of the data being collected. Across a couple of hackathons we developed a pipeline model for extracting chatbot messages into meaningful content.

- App Services should live on the same App Service Plan unless there is a critical or feature-based reason not to.

- All data services should be consolidated into one key highly available resource such as multi-region Cosmos DB.

- AI and cognitive services should be consolidated as much as the platform will allow, for example all LUIS apps on one resource.

- we strongly considered using containers for building out the skills and core components of the bot. With a fair amount of research we decided against it, as we didn't need the portability of containers for the stack we were using.

- The two key parts of AVA are the bot and the management portal. The management portal is a React frontend authenticated with Azure Active Directory.

- The API tier is serverless through Azure Functions and allows for communication with the chatbot, as well as access to data primarily stored within Cosmos DB.

- The key framework is .NET Core through the Bot Framework, which allows for the immediate integration with Teams and use of features such as adaptive dialog and adaptive cards for displaying data within the chatbot/Teams interface. The core bot operates on an App Service that communicates separately to the Function App.

- As we only utilize Microsoft Azure for our deployments, monitoring cost is straightforward and we can make use of Azure Cost Management. This allows us to see cost growth based on a strict set of tags that we apply to each resource and resource group. We have a split production/development subscription set per product with tagging per tier alongside other metadata fitting into this. A lesson from day one is: monitor costs and set budgets.

- Also Event-Driven on Azure: Part 1 – Why you should consider an event-driven architecture.

- Also also Azure for Architects, Third Edition
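AVA's cost lesson (tag every resource, group spend by tag, monitor costs and set budgets) reduces to a group-by and a comparison. This sketch does not call Azure Cost Management; the records, the `tier` tag key, and the budget figures are hypothetical stand-ins for an exported cost report.

```python
from collections import defaultdict

# Hypothetical cost records, e.g. rows exported from a billing report.
records = [
    {"resource": "bot-app", "cost": 120.0, "tags": {"product": "ava", "tier": "bot"}},
    {"resource": "api-func", "cost": 35.5, "tags": {"product": "ava", "tier": "api"}},
    {"resource": "cosmos", "cost": 210.0, "tags": {"product": "ava", "tier": "data"}},
    {"resource": "stray-vm", "cost": 80.0, "tags": {}},
]

BUDGETS = {"bot": 150.0, "api": 50.0, "data": 200.0}  # hypothetical monthly budgets


def cost_by_tag(records: list[dict], key: str) -> dict[str, float]:
    """Sum cost per value of one tag; untagged resources are surfaced, not dropped."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        totals[r["tags"].get(key, "<untagged>")] += r["cost"]
    return dict(totals)


def over_budget(totals: dict[str, float], budgets: dict[str, float]) -> list[str]:
    """Return the tag values whose spend exceeds their budget."""
    return sorted(t for t, spent in totals.items() if t in budgets and spent > budgets[t])


totals = cost_by_tag(records, "tier")
print(totals)                        # {'bot': 120.0, 'api': 35.5, 'data': 210.0, '<untagged>': 80.0}
print(over_budget(totals, BUDGETS))  # ['data']
```

Surfacing an explicit `<untagged>` bucket is the design choice that makes the "strict set of tags" enforceable: anything missing a tag shows up as spend nobody owns.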

- Rust in Production: 1Password:

- We’ve been using Rust in production at 1Password for a few years now. Our Windows team was the frontrunner on this effort to the point where about 70% of 1Password 7 for Windows is written in Rust. We also ported the 1Password Brain – the engine that powers our browser filling logic – from Go to Rust at the end of 2019 so that we could take advantage of the speed and performance of deploying Rust to WebAssembly in our browser extension.

- We are using Rust to create a headless 1Password app that encompasses all of the business logic, cryptography, database access, server communication, and more wrapped in a thin UI layer that is native to the system on which we’re deploying.

- One of the main things that drew us to Rust initially was the memory safety; it definitely excites us knowing that Rust helps us maximize our confidence in the safety of our customers’ secrets.

- There is a significant performance benefit to the lack of a traditional runtime; we don't have to worry about the overhead of a garbage collector.

- Carefully aligning application logic with Rust’s strong type rules makes APIs difficult to use incorrectly and results in simpler code that’s free from runtime checking of constraints and invariants; the compiler can guarantee there are no invalid runtime code paths that will lead your program astray before it executes.

- Another very powerful (and often overlooked) feature of Rust is its procedural macro system, which has allowed us to write a tool that automatically shares types defined in Rust with our client-side languages (Swift, Kotlin, and TypeScript).

- It did fall short for us in one key area: We were hoping WebAssembly would take us further in the browser and our browser extension than it has.

- CACHE MANAGEMENT LESSONS LEARNED: One of the major improvements we made to slapd-bdb/hdb caching was to replace this LRU mechanism with CLOCK in 2006. Logically it is the same strategy as LRU, but the implementation uses a circularly linked list. Instead of popping pages out of their position and tacking them onto the end, there is a clock-hand pointer that points to the current head/tail, and a Referenced flag for each entry. When an entry is referenced, the hand is just advanced to the next entry in the circle. This approach drastically reduces the locking overhead in reading and updating the cache, removing a major bottleneck in multi-threaded operation.
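For comparison, here is a minimal sketch of the textbook CLOCK (second-chance) policy: a hit only sets the entry's Referenced flag, and the hand sweeps only when a slot must be reclaimed, clearing flags as it passes. That is why reads never reorder the ring. This is a generic single-threaded illustration, not slapd's code; `ClockCache` is a hypothetical name.

```python
class ClockCache:
    """Fixed-capacity cache with CLOCK (second-chance) eviction."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.keys: list = [None] * capacity      # circular ring of slots (None = empty)
        self.values: list = [None] * capacity
        self.referenced = [False] * capacity     # one Referenced flag per slot
        self.index: dict = {}                    # key -> slot position
        self.hand = 0                            # the clock hand

    def get(self, key):
        slot = self.index.get(key)
        if slot is None:
            return None
        self.referenced[slot] = True  # a hit just sets the flag; nothing moves
        return self.values[slot]

    def put(self, key, value):
        slot = self.index.get(key)
        if slot is not None:
            self.values[slot] = value
            self.referenced[slot] = True
            return
        # Sweep: referenced entries get a second chance (flag cleared, hand moves on).
        while self.keys[self.hand] is not None and self.referenced[self.hand]:
            self.referenced[self.hand] = False
            self.hand = (self.hand + 1) % self.capacity
        victim = self.keys[self.hand]
        if victim is not None:
            del self.index[victim]
        self.keys[self.hand] = key
        self.values[self.hand] = value
        self.referenced[self.hand] = False
        self.index[key] = self.hand
        self.hand = (self.hand + 1) % self.capacity


cache = ClockCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # marks "a" as referenced
cache.put("c", 3)   # "a" gets a second chance, so "b" is evicted
print(cache.get("a"), cache.get("b"), cache.get("c"))  # 1 None 3
```

In a multi-threaded cache the win is that a hit is a single flag write rather than an unlink-and-relink of a list node under a lock.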

- What is differential dataflow? Differential dataflow lets you write code such that the resulting programs are incremental e.g. if you were computing the most retweeted tweet in all of twitter or something like that and 5 minutes later 1000 new tweets showed up it would only take work proportional to the 1000 new tweets to update the results. It wouldn't need to redo the computation across all tweets. Unlike every other similar framework I know of, Differential can also do this for programs with loops / recursion which makes it more possible to write algorithms. Beyond that, as you've noted it parallelizes work nicely.
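To make "work proportional to the 1000 new tweets" concrete, here is a hand-rolled incremental view for the simplest case: an insert-only stream of retweets where counts only grow. This is not differential dataflow, which also handles retractions, joins, and iterative (looping) computations; it only illustrates the incremental idea. `MostRetweeted` and `apply` are hypothetical names.

```python
from collections import Counter
from typing import Optional, Tuple


class MostRetweeted:
    """Incrementally maintained 'most retweeted tweet' over an insert-only stream."""

    def __init__(self):
        self.counts: Counter = Counter()
        self.best: Optional[Tuple[int, str]] = None  # (count, tweet_id)

    def apply(self, retweeted_ids: list) -> Optional[str]:
        # Cost is O(len(retweeted_ids)): old tweets are never rescanned.
        # This shortcut is only valid because counts never decrease.
        for tweet_id in retweeted_ids:
            self.counts[tweet_id] += 1
            count = self.counts[tweet_id]
            if self.best is None or count > self.best[0]:
                self.best = (count, tweet_id)
        return self.best[1] if self.best else None


view = MostRetweeted()
print(view.apply(["t1", "t2", "t1"]))  # t1
print(view.apply(["t2", "t2"]))        # t2
```

Supporting deletions (un-retweets) would break the shortcut, since the current leader could drop below another tweet; handling that kind of change in general is exactly what differential dataflow's model of collections-as-differences is for.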

- Always-on Time-series Database: Keeping Up Where There's No Way to Catch Up

- We've witnessed more than exponential growth in the volume and breadth of that data. In fact, by our estimate, we've seen an increase by a factor of about $1 \times 10^{12}$ over the past decade.

- sradman: ACM Queue interview of Theo Schlossnagle, founder and CTO of Circonus, about IRONdb [1], an alternative time-series platform to Prometheus/InfluxDB. Some high-level architecture choices: - CRDT to avoid coordination service - LMDB (B-Tree) for read optimized requests - RocksDB (LSM-tree) for write optimized requests - Modified consistent hashing ring split across two Availability Zones - OpenZFS on Linux - Flatbuffers for Serialization-Deserialization - Information Lifecycle Management for historical data - circllhist; HDR [high dynamic range] log-linear quantized histograms
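A log-linear histogram keeps bins linear within each power of ten and logarithmic across them, so relative error stays bounded across many orders of magnitude. The sketch below assumes bins keyed by two significant decimal digits, which is our reading of how circllhist works; treat it as an illustration, not Circonus's code. It handles only positive values, and the function and class names are hypothetical.

```python
from collections import Counter
from decimal import Decimal


def log_linear_bin(x: float) -> tuple[int, int]:
    """Map a positive value to (two-digit mantissa 10..99, decimal exponent e).

    The bin covers [m * 10**(e-1), (m+1) * 10**(e-1)).
    """
    if x <= 0:
        raise ValueError("this sketch only handles positive values")
    d = Decimal(repr(x))      # decimal digits as printed, avoiding binary rounding
    e = d.adjusted()          # exponent of the most significant digit
    m = int(d.scaleb(1 - e))  # keep two significant digits (truncating)
    return m, e


def bin_bounds(m: int, e: int) -> tuple[float, float]:
    width = 10.0 ** (e - 1)
    return m * width, (m + 1) * width


class LogLinearHistogram:
    def __init__(self):
        self.bins: Counter = Counter()

    def record(self, x: float) -> None:
        self.bins[log_linear_bin(x)] += 1

    def merge(self, other: "LogLinearHistogram") -> None:
        # Fixed, data-independent bin edges make cross-node merging a plain sum.
        self.bins.update(other.bins)


print(log_linear_bin(1234.5), bin_bounds(12, 3))  # (12, 3) (1200.0, 1300.0)
```

Because every node uses the same bin edges, histograms from different nodes or time windows can be merged exactly, which is what makes this structure a good fit for a distributed time-series store.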

- Uber’s Real-Time Push Platform. Push don't pull in mobile real-time systems.

- Uber went from 80% of requests made to the backend API gateway being polling calls to using a push model. Polling is responsive but uses a lot of resources—faster battery drain, app sluggishness, and network-level congestion. As the number of features with real-time dynamic data needs increased, this approach was infeasible as it would continue to add significant load on the backend.

- The difficulty is knowing when to push a message and who to push it to. As an example, when a driver accepts an offer, the driver and trip entity state changes. This change triggers the Fireball service. Then, based on a configuration, Fireball decides what type of push messages should be sent to the involved marketplace participants. Very often, a single trigger would warrant sending multiple message payloads to multiple users.

- Each push message has various configurations defined for optimizations: priority, TTL, dedupe. The system provides an "at-least-once" guarantee for delivery.

- To provide a reliable delivery channel we had to utilize a TCP based persistent connection from the apps to the delivery service in our datacenter. For an application protocol in 2015, our options were to utilize HTTP/1.1 with long polling, WebSockets, or Server-Sent Events (SSE).

- The client starts receiving a message on a first HTTP request /ramen/receive?seq=0 with a sequence number of 0 at the beginning of any new session. The server responds with HTTP 200 and ‘Content-Type: text/event-stream’ for maintaining the SSE connection. As the next step, the server sends all pending messages in the descending order of priority and associates incremental sequence numbers. Since the underlying transport protocol is a TCP connection, if a message with seq#3 is not delivered, then the connection should have disconnected, timed out, or failed.

- We use Netty, Apache ZooKeeper, Apache Helix, Redis, and Apache Cassandra.

- We have operated this system with more than 1.5M concurrent connections, pushing over 250,000 messages per second.

- In late 2019 we started developing the next generation of the RAMEN protocol to address the above shortcomings. After a lot of consideration, we chose to build this on top of gRPC.
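The session start Uber describes (a `/ramen/receive?seq=0` request, a `text/event-stream` response, then pending messages sent in descending priority with incremental sequence numbers) can be sketched as a function that formats the SSE body. `PushMessage` and `sse_stream` are hypothetical names, and continuing numbering from a client-supplied last sequence number is an assumption for illustration, not a detail from the post.

```python
import json
from dataclasses import dataclass


@dataclass
class PushMessage:
    priority: int
    payload: dict


def sse_stream(pending: list[PushMessage], last_seq: int = 0) -> str:
    """Serialize pending messages as Server-Sent Events.

    Messages go out in descending priority (ties keep arrival order), each
    tagged with an incremental sequence number continuing from last_seq.
    """
    ordered = sorted(pending, key=lambda m: m.priority, reverse=True)
    events = []
    for seq, msg in enumerate(ordered, start=last_seq + 1):
        # SSE wire format: an "id:" field, a "data:" field, then a blank line.
        events.append(f"id: {seq}\ndata: {json.dumps(msg.payload)}\n\n")
    return "".join(events)


msgs = [PushMessage(1, {"type": "eta"}), PushMessage(5, {"type": "offer"})]
print(sse_stream(msgs), end="")
# id: 1
# data: {"type": "offer"}
#
# id: 2
# data: {"type": "eta"}
```

Sequence numbers are what make the at-least-once guarantee workable over a single TCP stream: if a client never saw seq 3, the connection must have broken, and the client can tell the server where it left off.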

Soft Stuff: