Stuff The Internet Says On Scalability For January 24th, 2020

Wake up! It's HighScalability time:

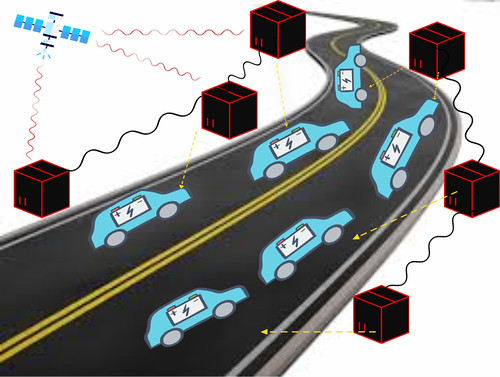

Instead of turning every car into a rolling, sensor-studded supercomputer, roads could be festooned with stationary edge command-and-control pods that offload compute, sensing, and traffic management. Cars become mostly remote-controlled pleasure palaces. That solves compute, latency, and interop.

Do you like this sort of Stuff? Your support on Patreon is appreciated more than you can know. I also wrote Explain the Cloud Like I'm 10 for everyone who needs to understand the cloud (which is everyone). On Amazon it has 87 mostly 5 star reviews (149 on Goodreads). Please recommend it. You'll be a real cloud hero.

Number Stuff:

- 204 billion: app downloads in 2019, up 6% since 2018, up 45% since 2016. $120 billion spent on apps (up 2.1x from 2016). Growth is in emerging markets like India, Brazil and Indonesia.

- $100 billion: mobile game spending in 2020. Arcade and puzzle games are the most downloaded games globally.

- 82%: of remote workers have no plans to return to an in-office setting.

- 579: active Facebook open source repositories. 170 new in 2019. 82,000 commits.

- 0.5%: IPv4 allocation growth rate, less than 1/10 of the 2010 growth rate.

- 44%: growth in UK tech investment in 2019, faster than the US and China.

- 100 million: daily phishing attacks blocked by Google. In a bad mood? You're less likely to fall for an attack.

- 2.8 billion: people who use a Facebook property. 11 datacenters in the US, 3 in Europe, 1 in Asia. Facebook datacenters use 38% less electricity and 80% less water than average because of their full-stack optimization approach.

- 15%: of Basecamp's operations budget is spent on Ruby/Rails application servers.

- 2.5%: projected 2020 global economic growth rate. 1.4% in advanced economies and 4.1% in developing economies.

Quotable Stuff:

- @molly_struve: Developer accused of unreadable code refuses to comment

- James Hamilton: Your core premise is that cloud computing is more efficient and, because it’s more efficient, a form of Jevons Paradox kicks in and consumption goes up. It’s certainly the case that lower costs do make more problems amenable to computing solutions. So, no debate there. But if the cloud operators closed their doors tomorrow, would the world’s compute loads reduce? My thinking is probably not, on the argument that IT expenses in most industries are a tiny part of their overall expense. Basically, I’m arguing the world is underusing IT-based solutions now, and they are held back much more by the complexity of solving problems than by the cost. Skills rather than costs are the most common blockers of new IT workloads. Which is to say, if machine learning, as just one workload example, keeps getting easier (it will), then computing usage will continue to go up rapidly. Increased computing is, in many if not most cases, better for the environment.

- @slava_pestov: Typical CPU clock rate: 1984: 8 MHz; 2002: 2.8 GHz; 2020: 2.8 GHz. Of course there were many other advancements in the last 18 years, but kids these days never knew “the megahertz wars”

- @kellan: The drop-in replacement fallacy promises that you can make technical improvements to a system without having to understand the business and product problems the system is solving, without having to talk with people who aren’t like you, and without making hard decisions

- bert hubert: In a modern telecommunications service provider, new equipment is deployed, configured, maintained and often financed by the vendor. Just to let that sink in, Huawei (and their close partners) already run and directly operate the mobile telecommunication infrastructure for over 100 million European subscribers.

- @GeeWengel: I unfortunately managed to burn several thousand dollars on #Azure Application Insights, by making a (pretty easy to make) configuration mistake, and then going on Christmas vacation... management was not pleased

- @postwait: One of the greatest achievements we pulled off in the design and implementation of @Circonus IRONdb was its simplicity. No leaders, coordinators, consensus protocols, or durable pipeline required in front. Just one process per node. Just run it. Just submit data.

- Geoff Huston: The BGP update activity is growing in both the IPv4 and IPv6 domains, but the growth levels are well below the growth in the number of routed prefixes. The ‘clustered’ nature of the Internet, where the diameter of the growing network is kept constant while the density of the network increases, has implied that the dynamic behaviour of BGP, as measured by the average time to reach convergence, has remained very stable in IPv4 and bounded by an upper limit in IPv6.

- @stOrmz: We’ve been touting the idea of using AWS cost alerts as an innovative way to detect nefarious activity. Totally cool to hear @swagitda_ also touting the same. #S4x20

- Acquisti: “What we found was that, yes, advertising with cookies (so, targeted advertising) did increase revenues, but by a tiny amount. Four per cent. In absolute terms the increase in revenues was $0.000008 per advertisement,” Acquisti told the hearing. “Simultaneously we were running a study, as merchants, buying ads with a different degree of targeting. And we found that for the merchants sometimes buying targeted ads over untargeted ads can be 500% times as expensive.”

- Nathan Myhrvold: 1: software is like a gas – it expands to fill its container. 2: software grows until it is limited by Moore’s law. 3: software growth makes Moore’s law possible – people buy new hardware because the software requires it. And, finally, 4: software is only limited by human ambition and expectation.

- Trilby Beresford: And as the new decade begins, analysts at App Annie predict that smartphones will not only function as the base to which all other technological devices are connected, but they will serve as the primary interface through which users interact with the world.

- Don Pettit: If the gravity of earth was only slightly greater, we couldn't leave it.

- Jessitron: Maybe you want to store JSON blobs and the high-scale solution is Mongo. But is that the “best” for your situation? Consider: does anyone on the team know how to use and administer it? are there good drivers and client libraries for your language? does it run well in the production environment you already run? do the frameworks you use integrate with it? do you have diagnostic tools and utilities for it?

- @bartdenny: So much innovation still to come as our phones become more like magic wands

- @OldManKris: “Serverless” means you have servers, but can’t configure/customize them. “No-code” means there is code, but you can’t change it. “NoSQL” means you have to learn a new query language and implement your own transaction mechanisms.

- @alasdairrr: We have over a Petabyte of data stored on ZFS at work, and over the last 10 years we’ve lost zero bytes of customer data despite all sorts of exceptional hardware and software failures. The idea of putting it on btrfs is beyond laughable...

- fafhrd91: Being a maintainer of a large open source project is not a fun task. You always face rudeness and hate, everyone knows better how to build software, nobody wants to do their homework, read the docs and think a bit, and very few provide any help. It seems everyone believes there is a large team behind actix with unlimited time and budget. (Btw, thanks to everyone who provided PRs and other help!) For example, async/await took a three-week stint of 12-hour days, quite exhausting, and what happened after the release? I started to receive complaints that the docs were not updated and I had to go fix my shit. Encouraging. You could notice that after each unsafe shitstorm, I started to spend less and less time with the community. You feel betrayed after you put in so much effort and then hear all these shit comments, even if you understand that that is usual internet behavior. Anyway, removing the issue was a stupid idea. But I was pissed off by the last two personal comments, especially while sitting and thinking about how to solve the problem. I am sorry for doing that.

- @chetanp: Salesforce’s cumulative cash burn to IPO was $30M: 1999: $0 revenue / burned $5M; 2000: $5M revenue / burned $33M; 2001: $22M revenue / burned $14M; 2002: $48M revenue / +$3M free cash flow; 2003: $86M revenue / +$19M free cash flow; IPO 2004. Scaling SaaS companies can be cash efficient.
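The $30M figure is the net of the yearly numbers in the tweet: the three loss-making years minus the two cash-flow-positive ones. A quick check, with the figures copied straight from the tweet (in $M, negative meaning cash burned):

```python
# Yearly (revenue, cash flow) in $M as listed in the tweet; negative = burn.
salesforce = {
    1999: (0, -5),
    2000: (5, -33),
    2001: (22, -14),
    2002: (48, 3),
    2003: (86, 19),
}

def cumulative_cash_flow(years):
    """Sum of yearly cash flows; a negative total is net cash burned."""
    return sum(flow for _revenue, flow in years.values())

net = cumulative_cash_flow(salesforce)
print(f"Net cash burned before the 2004 IPO: ${-net}M")  # $30M
```

Gross burn in the three loss-making years was $52M; the next two years of positive free cash flow paid back $22M of it.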

- @etherealmind: "Anyone can buy bandwidth. But latency is from the gods" - Unknown.

- Deborah M Gordon: Changes in [ant] colony behaviour due to past events are not the simple sum of ant memories, just as changes in what we remember, and what we say or do, are not a simple set of transformations, neuron by neuron. Instead, your memories are like an ant colony’s: no particular neuron remembers anything although your brain does.

- Jordana Cepelewicz: The latest in a long line of evidence comes from scientists’ discovery of a new type of electrical signal in the upper layers of the human cortex. Laboratory and modeling studies have already shown that tiny compartments in the dendritic arms of cortical neurons can each perform complicated operations in mathematical logic. But now it seems that individual dendritic compartments can also perform a particular computation — “exclusive OR” — that mathematical theorists had previously categorized as unsolvable by single-neuron systems.
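The "unsolvable by single-neuron systems" claim refers to the classical result that a single linear threshold unit, the textbook model of one neuron, cannot compute XOR. The argument is short. Take a unit that outputs 1 when $w_1x_1 + w_2x_2 \ge \theta$ and 0 otherwise, and write down what XOR demands of it:

$$
\begin{aligned}
(0,0) \mapsto 0 &\implies 0 < \theta \\
(1,0) \mapsto 1 &\implies w_1 \ge \theta \\
(0,1) \mapsto 1 &\implies w_2 \ge \theta \\
(1,1) \mapsto 0 &\implies w_1 + w_2 < \theta
\end{aligned}
$$

Adding the middle two lines gives $w_1 + w_2 \ge 2\theta > \theta$ (because $\theta > 0$), which contradicts the last line. No choice of weights works, which is why XOR normally needs a second layer of units; the finding quoted above is that a dendritic compartment appears to supply that extra step inside a single neuron.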

- Amos Zeeberg: Now, an interdisciplinary team of researchers at the University of Colorado, Boulder, has created a rather different kind of concrete — one that is alive and can even reproduce. Minerals in the new material are deposited not by chemistry but by cyanobacteria, a common class of microbes that capture energy through photosynthesis. The photosynthetic process absorbs carbon dioxide, in stark contrast to the production of regular concrete, which spews huge amounts of that greenhouse gas.

- Cory Doctorow: Sauter’s insight in that essay: machine learning is fundamentally conservative, and it hates change. If you start a text message to your partner with “Hey darling,” the next time you start typing a message to them, “Hey” will beget an autosuggestion of “darling” as the next word, even if this time you are announcing a break-up. If you type a word or phrase you’ve never typed before, autosuggest will prompt you with the statistically most common next phrase from all users (I made a small internet storm in July 2018 when I documented autocomplete’s suggestion in my message to the family babysitter, which paired “Can you sit” with “on my face and”).

- steve chaney: The internet’s next major act will be augmented vision – smart glasses. Svelte, stylish, unnoticeably tech, and capable of delivering a new reality based on what is around you. Today, we are bound by a 6″ screen. Tomorrow, the internet will be all around us, absorbed visually – and invisibly – in ways that make smartphones seem like a camcorder of times past...The truth is that we are now living in a permissionless persistence world. Always-on perception and localization from cameras means we live in a new frontier where data persists without you always granting access. Whereas the localization happening with wireless is being designed in a way that is secure, opt-in, and will allow never-before-conceived trusted interactions. This is really important for user security and privacy.

- Matt Ranger: We automate tasks, not jobs. A job is made of a bundle of tasks. For example, O*NET defines the job of post-secondary architecture teacher as including 21 tasks like advising students, preparing course materials and conducting original research. Technology automates tasks, not jobs. Automating a task within a job doesn’t necessarily mean the job will stop existing. It’s hard to predict the effects: the number of workers employed and the wage of those workers can go up or down depending on various economic factors, as we’ll see later on.

- Craig A. Falconer: The safest way to sell a lie is to dress it up as a secret.

- @jamesurquhart: I like this short thread. K8S should not be evaluated on a per-app basis. It’s a system-of-apps platform. And even then, K8S is not developer-centric tech, but platform/infrastructure-centric. K8S itself is capacity mgmt, period. It will get hidden away from devs over time.

- @brandonsavage: You’re looking at a $200/mo SaaS but decide to build it yourself. It takes a $100,000/yr engineer 3 months to build, + 2 weeks a year to maintain. Congratulations. You saved $2400 but spent $28,846 building it yourself. The most expensive solution ever is writing your own code
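The $28,846 comes from pro-rating the salary over the build time plus one year of maintenance: 3 months (13 weeks) + 2 weeks = 15 weeks, or 15/52 of $100,000. A sketch of that first-year arithmetic, which, like the tweet, counts salary only and ignores benefits, overhead and opportunity cost:

```python
WEEKS_PER_YEAR = 52

def build_cost(salary_per_year, build_months, maintain_weeks_per_year):
    """First-year cost of building in-house, pro-rated from annual salary only."""
    build_weeks = build_months * WEEKS_PER_YEAR / 12  # 3 months = 13 weeks
    total_weeks = build_weeks + maintain_weeks_per_year
    return salary_per_year * total_weeks / WEEKS_PER_YEAR

def buy_cost(price_per_month):
    """First-year cost of the SaaS subscription."""
    return price_per_month * 12

build = build_cost(100_000, 3, 2)
buy = buy_cost(200)
print(f"buy: ${buy:,.0f}  build: ${build:,.0f}")  # buy: $2,400  build: $28,846
```

Every later year still costs 2 weeks of maintenance (about $3,846) against $2,400 of subscription, so building never catches up.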

- @kellabyte: Nobody believes me I 3x’d my salary by leaving .NET community and nobody thinks any of the .NET community is the actual reason. Let me tell you some stories because .NET is having its Java moment.

- Sarah Rose: In the grand tradition of governments everywhere, men shied at the responsibility of doing anything new. Training women was a big step, and no one actually wanted to be the one to send Yvonne to the field.

- Jeff Carter: Despite the recent improvements, there are still areas where Lambda and Fargate do not compete due to technical limitations. Things like long-running tasks or background processing aren’t as doable in Lambda. Some event sources, such as DynamoDB streams, cannot be directly processed by Fargate. Looking forward to 2020 and beyond, I believe the number of these technical limitations will continue to decrease as Lambda and Fargate continue to converge on feature sets. The new ECS CLI is already showing a lot of promise in bringing SAM style ease-of-deployments to Fargate. Also, Lambda has a history of increasing limits and supporting new runtimes. It will be interesting to see where these services continue to evolve.

- Joel Hruska: A group of UK scientists is basically claiming to have found one [a universal memory]. UK III-V (named for the elements of the periodic table used in its construction), would supposedly use ~1 percent the power of current DRAM. It could serve as a replacement for both current non-volatile storage and DRAM itself, though the authors suggest it would currently be better utilized as a DRAM replacement, due to density considerations. NAND flash density is increasing rapidly courtesy of 3D stacking, and UK III-V hasn’t been implemented in a 3D stacked configuration.

- James Gleick: Originality was his [Richard Feynman] obsession. He had to create from first principles—a dangerous virtue that sometimes led to waste and failure.

- @sogrady: have been asked about the term NoSQL a couple of times over the last few quarters. quantitative evidence suggests usage is in decline. what's not obvious, however, is what replaces it.

- John Naughton: As Moore’s law reaches the end of its dominion, Myhrvold’s laws suggest that we basically have only two options. Either we moderate our ambitions or we go back to writing leaner, more efficient code. In other words, back to the future.

- @kellabyte: I love C#, it’s my favourite language, but people don’t want runtimes installed anymore. People don’t want to talk about ASP for 15 years or ORMs for 15 years or DDD and ES for 15 years. I know I’m burning a lot of bridges here, but you want to know why people don’t do .NET in OSS?

- @dave_universetf: Oh boy, I get to take another trip through GCP's support experience! Let's see how hilariously bad it is this time. I'll livetweet for your entertainment.

- Geoff Huston: As the Internet continues to evolve, it is no longer the technically innovative challenger pitted against venerable incumbents in the forms of the traditional industries of telephony, print newspapers, television entertainment and social interaction. The Internet is now the established norm. The days when the Internet was touted as a poster child of disruption in a deregulated space are long since over, and these days we appear to be increasingly looking further afield for a regulatory and governance framework that can challenge the increasing complacency of the newly-established incumbents. It is unclear how successful we will be in this search. We can but wait and see.

- Rick Houlihan: In the end people who actually scale NoSQL databases learn fast that NoSQL is about simple queries that facilitate CPU conservation so performance is driven by proper denormalized data modeling and not fancy query APIs. Once developers realize this the platform debate quickly shifts from API functionality to a discussion of operational characteristics, and this is where the 'Born in the Cloud' services like DynamoDB start to shine.
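A minimal illustration of what "denormalized data modeling" buys you, assuming a DynamoDB-style key design with a partition key plus a sort key. A plain Python dict stands in for the database, and the `CUSTOMER#`/`ORDER#` key names are hypothetical conventions, not anything from the quote. A customer's profile and orders are written into the same partition, so "customer with orders" is one partition read with no join:

```python
from collections import defaultdict

# Stand-in for a key-value table: partition key -> {sort key -> item}.
table = defaultdict(dict)

def put(pk, sk, item):
    table[pk][sk] = item

def query(pk, sk_prefix=""):
    """One partition read: items whose sort key starts with sk_prefix, in sort-key order."""
    partition = table.get(pk, {})
    return [partition[sk] for sk in sorted(partition) if sk.startswith(sk_prefix)]

# Denormalize on write: profile and orders share the customer's partition.
put("CUSTOMER#42", "PROFILE", {"name": "Ada"})
put("CUSTOMER#42", "ORDER#2020-01-20", {"total": 30})
put("CUSTOMER#42", "ORDER#2020-01-22", {"total": 12})

orders = query("CUSTOMER#42", "ORDER#")
print(orders)  # [{'total': 30}, {'total': 12}]
```

The work moves to write time, when the layout is chosen to match the read, so each read stays a cheap key lookup plus a range scan.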

- sn: Things I’ve learned from using SSDs at prgmr: Since the firmware is more complicated than on hard drives, they are way more likely to brick themselves completely instead of degrading gracefully. Manufacturers can also have nasty firmware bugs. I’d recommend using a mix of SSDs at different lifetimes, and/or from different manufacturers, in a RAID configuration.

- Memory Guy: First of all, it’s a 16Gb DRAM, so it supports the same DIMM density with half as many chips as a DIMM based on 8Gb parts. This not only reduces power, but it halves the load on the processor’s memory channel, allowing the processor’s bus to run faster. Second, it’s a DDR5 part, which means that it supports higher memory bandwidths at lower power. Micron claims that this chip yields an 85% memory performance increase over its DDR4 counterpart. DDR5 provides a fresh look at what a DRAM interface should include. Bursts are twice as long as those in DDR4. Refresh granularity is improved, and banking can be done in groups. So it’s not simply a speed upgrade and power reduction, but it includes architectural improvements to increase overall system speed.

Useful Stuff:

- ARM has been the future of servers as long as desktop Linux has been the future of the client. It looks like one dream may come true. AWS Graviton2:

- I [James Hamilton] believe there is a high probability we are now looking at what will become the first high volume ARM Server. More speeds and feeds: >30B transistors in a 7nm process; 64KB icache, 64KB dcache, and 1MB L2 cache; 2TB/s internal, full-mesh fabric; each vCPU is a full non-shared core (not SMT); dual SIMD pipelines/core, including ML-optimized int8 and fp16; fully cache-coherent L1 cache; 100% encrypted DRAM; 8 DRAM channels at 3200 MHz.

- ARM Servers have been inevitable for a long time but it’s great to finally see them here and in customers’ hands in large numbers.

- The Google Tax is the new Amazon Tax. Make a market. Suck people into it by handing out free opium cookies. Get people hooked. Then make it pay to play baby. Unfortunately, unlike how Amazon has become the go to "buy things" search engine, TripAdvisor hasn't become the go to "go places" search engine, so they must pay the piper. TripAdvisor Cuts Hundreds of Jobs After Google Competition Bites: Alphabet Inc.’s Google has launched new travel search tools that compete with TripAdvisor, while adding its own reviews of hotels, restaurants and other destinations. Google has also crammed the top of its mobile search results with more ads. This has forced many companies, including TripAdvisor, to buy more ads from the search giant to keep online traffic flowing.

- Go forth and sin no more. Ten Commandments for Cloud Decision-Makers:

- Stop pretending that cloud is just a technical problem;

- Train from Day 1;

- Measure cloud as a value center;

- Start small, but stop kicking the tires;

- Trust your partners;

- Don’t choose technology based on familiarity bias;

- Your time is your most expensive resource—optimize it;

- Build in the cloud earlier. No, earlier than that;

- Stop solving fake problems;

- Create momentum by demonstrating value.

- More art than science. Doing Things That Scale.

- Things that don't scale: Only fix a problem for myself (and maybe a small group of others). Have to be maintained in perpetuity (by me).

- Things that scale: Fix the problem in a way that will just work™ for most people, most of the time. Are developed, used, and maintained by a wider community.

- The free software community tends to celebrate custom, hacky solutions to problems as something positive (“It’s so flexible!”), even when these hacks are only necessary because things are broken by default.

- If we want ethical technology to become accessible to more people, we need to invest our (very limited) time and energy in solutions that scale. This means good defaults instead of endless customization, apps instead of scripts, “it just works” instead of “read the fucking manual”. The extra effort to make proper solutions that work for everyone, rather than hacks just for ourselves, can seem daunting, but is always worth it in the long run.

- We're Reddit's Infrastructure team, ask us anything!

- We use Solr for our backend and run Fusion on top with custom query pipelines for Reddit's use cases. We run our own Solr and Fusion deployments in EC2. An internal service is used to provide business-level APIs. There are also some async pipelines to do real-time indexing updates for our collections. We primarily use AWS but do leverage some tools from other providers, such as Google BigQuery.

- We run clustered Solr and replicate shards across the cluster. We have backup jobs that can fully recreate our collections and indexes from existing database backups in a few hours if something catastrophic happens as well.

- We use both postgres and cassandra, and frequently have memcached in front of postgres.

- IDK what the biggest thing has been, but we've gone through a lot of effort over the past year or so to ensure that everything has proper and consistent cost allocation tagging. Considering how long Reddit's infrastructure has been around, it took some time to get things consistent.

- We've aggressively managed reserved instances, which helped make costs more predictable. That's all coupled with ongoing work to proactively manage capacity vs. utilization. Compute > memory > network > storage in order of decreasing impact on cost, so we try to pull in compute first, and care least about storage. We've got to keep all those cat GIFs somewhere.

- We have a pretty robust internal logging pipeline that we use for service health.

- We have some cost allocation tagging that goes to individual engineering teams who are responsible for the cost, but we haven't gone too heavy on enforcement yet as we're able to apply a lot of higher level cost optimizations (RIs, CDN savings) that apply across many different pillars of engineering.
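Cost allocation tagging boils down to grouping billing line items by a tag and making the untagged remainder visible. A sketch under assumed data: the line-item shape and the `team` tag key are hypothetical, not Reddit's schema or the AWS Cost and Usage Report format.

```python
from collections import defaultdict

# Hypothetical billing line items: cost in USD plus whatever tags the resource carries.
line_items = [
    {"resource": "i-search-1", "cost": 120.0, "tags": {"team": "search"}},
    {"resource": "i-search-2", "cost": 80.0, "tags": {"team": "search"}},
    {"resource": "db-main", "cost": 300.0, "tags": {"team": "storage"}},
    {"resource": "i-legacy", "cost": 50.0, "tags": {}},  # never tagged
]

def cost_by_tag(items, key="team"):
    """Sum cost per tag value; items missing the tag land in an 'untagged' bucket."""
    totals = defaultdict(float)
    for item in items:
        totals[item["tags"].get(key, "untagged")] += item["cost"]
    return dict(totals)

report = cost_by_tag(line_items)
print(report)  # {'search': 200.0, 'storage': 300.0, 'untagged': 50.0}
```

On long-lived infrastructure, the size of the `untagged` bucket is the number that making tagging "consistent" drives toward zero.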

- Our primary monitoring and alerting system for our metrics is Wavefront. I'll split up the answers for how metrics end up there based on use case. System metrics (CPU, mem, disk) - We run a Diamond sidecar on all hosts we want to collect system metrics on and those send metrics to a central metrics-sink for aggregation, processing, and proxying to Wavefront. Third-party tools (databases, message queues, etc.) - Diamond Collectors for these as well if a collector exists. We roll a few internal collectors and also some custom scripts as well. Internal Application metrics - Application metrics are reported using the statsd protocol and aggregated at a per-service level before being shipped to Wavefront. We have instrumentation libraries that all of our services use to automatically report basic request/response metrics.
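The statsd protocol mentioned above is a plain-text line format sent over UDP: `name:value|type`, where `c` marks a counter and `ms` a timer. A minimal standard-library client sketch; the class, the metric names, and the `localhost:8125` default (statsd's conventional port) are illustrative assumptions, not Reddit's instrumentation libraries.

```python
import socket

class StatsdClient:
    """Fire-and-forget statsd client: each call sends one UDP datagram."""

    def __init__(self, host="localhost", port=8125, prefix=""):
        self.addr = (host, port)
        self.prefix = prefix  # e.g. a per-service namespace like "myservice."
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)

    def _send(self, name, value, metric_type):
        line = f"{self.prefix}{name}:{value}|{metric_type}"
        # UDP: a dropped packet never blocks or fails the request being measured.
        self.sock.sendto(line.encode("ascii"), self.addr)
        return line

    def incr(self, name, count=1):
        """Counter, e.g. 'myservice.requests:1|c'."""
        return self._send(name, count, "c")

    def timing(self, name, millis):
        """Timer in milliseconds, e.g. 'myservice.latency:42|ms'."""
        return self._send(name, millis, "ms")
```

Usage: `StatsdClient(prefix="myservice.").incr("requests")`. Per-service aggregation then happens in the statsd daemon, not in the application.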

- We also use sentry, which is great for quickly understanding why something is breaking.

- We do blameless postmortems. Usually that means that after an incident we are able to identify and fix the cause.

- I can't think very far back, but one recent issue has been with RabbitMQ running out of file descriptors and crashing, and then when it comes back up its data is corrupted. That has messed up a lot of our async processing and also surprisingly broke some in-request things that depended on being able to publish messages to rabbit.

- We make heavy use of Terraform. Puppet is used heavily in our non-k8s environments. There's no shortage of pain points, but one annoyance that we've been dealing with lately is the boundary between our non-k8s and k8s worlds as it relates to things like service discovery etc.

- We do a lot of auto-scaling both using AWS cloud watch alarms and custom tooling. CPU is usually the metric we scale off of. And we target the p50 statistic.
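Scaling off p50 CPU can be sketched as a proportional target-tracking rule: take the median CPU across the pool and resize so the median moves toward a target. This is the same proportional formula Kubernetes documents for its Horizontal Pod Autoscaler; the 50% target, the bounds, and the function are assumptions for illustration, not Reddit's custom tooling or CloudWatch's exact algorithm.

```python
import math
import statistics

def desired_capacity(cpu_samples, current_instances, target_cpu=50.0,
                     min_instances=2, max_instances=100):
    """Proportional target tracking on the p50 (median) CPU of the pool."""
    p50 = statistics.median(cpu_samples)
    # A 75% median against a 50% target means 1.5x the instances.
    desired = math.ceil(current_instances * p50 / target_cpu)
    return max(min_instances, min(max_instances, desired))
```

For example, `desired_capacity([70, 75, 80, 20, 90], 10)` returns 15. One reason to prefer the median over the mean is that a single hot instance can't trigger a fleet-wide scale-out.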

- Best AWS feature: I think Cost Explorer has improved tremendously over the years. CloudTrail & AWS Config are great to figure out "who touched this resource last and what did they do?", and the Personal Health Dashboard has been very useful in figuring out if a particular AWS event is affecting us.

- We're effectively only AWS. What you define as "cloud infrastructure" is getting muddier every day, however.

- We use Drone for CI, and Spinnaker for k8s deployments. We host both of these ourselves. Non-k8s deployments are handled through an in-house tool, Rollingpin.

- All new services are deployed to k8s. We continue to migrate non-k8s services to k8s. We continue to use either self-managed postgres/C* clusters, or RDS, for databases and persistence. We have not attempted to run stateful services like DBs from k8s yet. We manage our own K8s clusters on EC2, we don't use EKS. The r/kubernetes AMA has some more comments on the reasoning there.

- Mostly reserved, some on demand, and very little spot at the moment. Historically we sometimes prescaled application server pools, but that is almost never required these days.

- Sonos will stop updating its 'legacy' products in May. A lot of anger over this one. If you have an API you'll eventually have to make a cut-over decision. When do you stop supporting old APIs? This is all tangled up with identity. When does something change so much that it's no longer what it was? Versioning APIs can only get you so far. The API for a bird is very different than that for a dinosaur, even though they are a long evolutionary version chain away from being the same. Though a lot of people are complaining that their older gear is legacy and will no longer be updated, the real anger is because newer gear connected to the older gear will also not be updated. At first glance this seems silly. Do you stop updating a new iPad because you have an old iPhone? But that's not a good analogy. The iPhone and iPad are standalone beasts. One can work fine without the other. The new Sonos gear would have to continue to use old APIs and protocols to talk to the old gear. That's a nightmare to develop and test. Where do you want to focus limited development and testing resources, on new or old stuff? Both sides have a valid point-of-view here. But if I had sunk thousands of dollars into gear I would be pissed too. The cost-benefit analysis by Sonos may not have focused enough on soft issues. People now actively hate the Sonos brand, much like people hate Google for always shutting down their favorite services. That hurts Google when it comes to GCP. It may hurt Sonos going forward as well.
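Why old gear drags new gear down: two devices can only talk using a protocol version both support. A sketch of the usual negotiation rule (pick the highest common version); the version numbers are hypothetical and this is not Sonos's actual protocol.

```python
def negotiate(local_versions, peer_versions):
    """Return the highest protocol version both sides support, or None."""
    common = set(local_versions) & set(peer_versions)
    return max(common) if common else None

new_speaker = [3, 4, 5]
old_speaker = [1, 2, 3]  # no more updates, so this list never grows
print(negotiate(new_speaker, old_speaker))  # 3
```

Dropping version 3 from the new speaker's list is the cut-over decision: `negotiate` returns `None` and the two stop talking. Keeping it means every new release must still be tested against version 3.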

- Jeff Dean interview: Machine learning trends in 2020: much more multitask learning and multimodal learning; more interesting on-device models (or sort of consumer devices, like phones or whatever) to work more effectively; AI-related principles-related work is going to be important; ML for chip design; ML in robots.

- A long and interesting tweet thread telling the story of scaling Etsy. Spoilers: the wolf eats Little Red Riding Hood.

- @mcfunley: So the first version of Etsy (2005) was PHP talking to Postgres, written by someone learning PHP as he was doing it, which was all fine. Postgres was kind of an idiosyncratic choice for the time, but it didn’t matter yet and anyway that’s a different story. I started in the long shadow of “v2,” a catastrophic attempt to rewrite it, adding a Python middle layer. They had asked a Twisted consultant what to do, and the Twisted consultant said they needed a Twisted middle layer (go figure)...and it goes on for many more tweets.

- PragmaticPulp: Nearly every tweet describes scenarios that can only happen when engineering management is M.I.A. or too inexperienced to recognize when something is a bad idea: attempting to solve problems with a rewrite in a different programming language; rewriting open-source software before you can use it; hiring consultants to confirm your decisions; “nobody was in charge”; multiple competing initiatives to solve the problem in different ways; the “drop-in replacement” team; allowing anyone to discuss “business logic” as if it’s somehow different work. I have to admit that when I was younger and less experienced, I plowed right into many of these same mistakes. These days, I’d do everything in my power to shut down the internal competition and aimless wandering of engineering teams.

- Somehow we've managed to centralize the decentralization of the edge.

- Dependability in Edge Computing

- Edge computing presents an exciting new computational paradigm that supports growing geographically distributed data integration and data processing for the Internet of Things and augmented reality applications. Edge devices and edge computing interactions reduce network dependence and support low latency, context-aware information processing in environments close to the client devices. However, services built around edge computing are likely to suffer from new failure modes, both hard failures (unavailability of certain resources) and soft failures (degraded availability of certain resources). Low-latency requirements combined with budget constraints will limit the fail-over options available in edge computing compared to a traditional cloud-based environment.

- Evolution of Edge @Netflix

- We trick clients. We put an intermediary in between client and our data center. We refer to this as a PoP. PoP is Point of Presence. Think about PoP as a proxy that accepts client's connection, terminates TLS and then over a backbone, another concept that we just introduced, sends the request to the primary data center or AWS region. What is backbone? Think about backbone as private internet connection between your Point of Presence and AWS. It's like your private highway. Everyone is stuck in traffic and you are driving on your private highway.

- How does this change the interaction and quality of client's experience if we put a Point of Presence in between them? It's a much more complicated diagram, but you can see that the connection or round-trip time between client and Point of Presence is lower. This means that TLS and TCP handshakes are happening faster. Therefore, in order to send my first bytes of my request, I need to wait only 90 milliseconds. Compare this to 300 milliseconds.
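The 90 ms vs 300 ms comparison fits a client that waits three round trips before it can send the first request byte: one for the TCP handshake and two for a full TLS 1.2 handshake. Under that assumption (the talk doesn't spell it out), the numbers imply roughly a 30 ms RTT to the PoP versus 100 ms to the distant region:

```python
TCP_HANDSHAKE_RTTS = 1
TLS12_HANDSHAKE_RTTS = 2  # full TLS 1.2 handshake; TLS 1.3 needs only 1

def time_to_first_request_byte(rtt_ms, tls_rtts=TLS12_HANDSHAKE_RTTS):
    """Milliseconds spent on connection setup before the request can be sent."""
    return (TCP_HANDSHAKE_RTTS + tls_rtts) * rtt_ms

print(time_to_first_request_byte(30))   # 90, via a nearby PoP (assumed 30 ms RTT)
print(time_to_first_request_byte(100))  # 300, straight to the region (assumed 100 ms RTT)
```

The PoP-to-region leg still costs a round trip per request, but it runs over an already-open connection, so none of the handshake round trips are paid again.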

- When we send a request to the Point of Presence, the Point of Presence already has a pre-established connection with our primary data center. It's already scaled and we're ready to reuse it. It's a good idea to also use protocols that allow you to multiplex requests.

- Scaling Patterns for Netflix's Edge

- Let's go back to what we have. We have cookies. That's how most of our devices talk to Netflix: our service gives each of them cookies. These are long-lived cookies. They sit on your box for a year, nine months, but in there is a little expiration date that we put in, and this expiration date tells us how long we can trust the cookie. When it comes into our system, we crack it open and look for its expiration. That's the dot in the middle that you see up here. If it has not expired, we're just going to trust it. This fits our business use case. At Netflix, if you're familiar with it, you're going to load it up, and maybe the last time you used the service was last night, so it's been eight hours. We're going to need to go make sure you're still a member. After that, we can give you a new cookie that's good for eight hours. For the rest of your TV-watching binge that night, we can trust that cookie, and that fits our use case. If you're a member at the beginning of the night, you're probably a member at the end of the night. Works great.
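The cookie scheme can be sketched as a signed token that carries its own expiry. If the signature checks out and the embedded time hasn't passed, the edge trusts it with no membership lookup. Otherwise it does the expensive check once and reissues a fresh cookie. The eight-hour window comes from the talk; the HMAC signing, the key, the `member_lookup` callback, and the `user|expiry|signature` layout are assumptions for illustration, not Netflix's actual format.

```python
import hashlib
import hmac
import time

SECRET = b"hypothetical-edge-signing-key"
TRUST_WINDOW_SECONDS = 8 * 60 * 60  # "a new cookie that's good for eight hours"

def _sign(payload):
    return hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()

def issue_cookie(user_id, now=None):
    now = time.time() if now is None else now
    payload = f"{user_id}|{int(now + TRUST_WINDOW_SECONDS)}"
    return f"{payload}|{_sign(payload)}"

def validate(cookie, member_lookup, now=None):
    """Return (user_id, cookie_to_send_back), or None if the user must re-authenticate."""
    now = time.time() if now is None else now
    try:
        user_id, expires, sig = cookie.rsplit("|", 2)
        expires_at = int(expires)
    except ValueError:
        return None  # malformed cookie
    if not hmac.compare_digest(sig.encode(), _sign(f"{user_id}|{expires}").encode()):
        return None  # tampered or forged
    if now < expires_at:
        return user_id, cookie  # cheap path: trust it, no backend call
    if member_lookup(user_id):  # expensive path: once per trust window
        return user_id, issue_cookie(user_id, now)
    return None
```

The backend membership service is hit at most once per user per eight hours, however many requests a binge generates.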

- That took a little bit of the five whys: "Why do you need this data?" It turns out they didn't need it for nine months. We started to actually embed it in the jar. We had a client library that we'd ship out, and we'd just put old data, whatever the data of the day was, in there, and we had compressed it small enough at this point that it really wasn't a big overhead. Now the data actually lives in the jar. You can still use PubSub and pump out updates, but if PubSub goes down, this highly critical data is available to everybody. It might be old, it might be nine months old, but that's fine.
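The cookie check described above can be sketched as follows. This is a minimal illustration, not Netflix's implementation: the cookie layout (a dict with `user_id` and `expires_at`), the `is_member` callback, and the eight-hour constant are assumptions for the example, and a real system would verify a signature or decrypt the cookie before trusting anything inside it.

```python
import time
from typing import Callable, Optional

# Assumed length of the short trust window embedded in the long-lived cookie.
TRUST_WINDOW_SECONDS = 8 * 60 * 60


def handle_cookie(
    cookie: dict,
    is_member: Callable[[str], bool],  # hypothetical (expensive) membership lookup
    now: Optional[float] = None,
) -> Optional[dict]:
    """Return the cookie to trust for this request, or None if access is denied."""
    now = time.time() if now is None else now
    if cookie["expires_at"] > now:
        # Not expired: trust it without calling the membership service.
        return cookie
    # Expired: do the expensive check once, then reissue for another window.
    if not is_member(cookie["user_id"]):
        return None
    return {"user_id": cookie["user_id"], "expires_at": now + TRUST_WINDOW_SECONDS}
```

The design trades freshness for load: membership is checked at most once per window per device, which suits a service where someone who is a member at the start of the evening is almost certainly still one at the end.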

- Dependability in Edge Computing

- Library of Congress Storage Architecture Meeting:

- HDD 75% of bits and 30% of revenue, NAND 20% of bits and 70% of revenue

- The Kryder rates for NAND Flash, HDD and Tape are comparable; $/GB decreases are competitive with all technologies.

- $/GB decreases are in the 19%/yr range and not the classical Moore’s Law projection of 28%/yr associated with areal density doubling every 2 years

- In 2026, is there demand for 7X more manufactured storage annually, and is there sufficient value in this storage to spend $122B more annually (2.4X) on it?

- Unlike HDD, tape magnetic physics is not the limiting issue, since tape bit cells are 60X larger than HDD bit cells ... The projected tape areal density in 2025 (90 Gbit/in²) is 13x smaller than today’s HDD areal density and has already been demonstrated in laboratory environments.

- Tape storage is strategic in public, hybrid, and private “Clouds”

- I have yet to see a cloud API that implements the technique published by Mehul Shah et al. twelve years ago, which allows the data owner to challenge the cloud provider with a nonce, thus forcing it to compute the hash of the nonce and the data at check time.
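The nonce challenge in the last bullet can be sketched with an ordinary hash function. In this minimal version, assumed for illustration, the owner precomputes (nonce, expected response) pairs before uploading, so it can audit later without keeping the data. Because each nonce is fresh, the provider cannot answer from a cached digest and has to read the stored bytes. SHA-256 and the function names are our choices, not taken from the paper.

```python
import hashlib
import hmac
import secrets
from typing import Callable, List, Tuple


def respond(nonce: bytes, stored_data: bytes) -> str:
    """Provider side: hash the fresh nonce together with the data it claims to hold."""
    return hashlib.sha256(nonce + stored_data).hexdigest()


def precompute_challenges(data: bytes, count: int) -> List[Tuple[bytes, str]]:
    """Owner side, before upload: generate (nonce, expected response) pairs."""
    pairs = []
    for _ in range(count):
        nonce = secrets.token_bytes(16)  # fixed length keeps nonce + data unambiguous
        pairs.append((nonce, respond(nonce, data)))
    return pairs


def audit(pair: Tuple[bytes, str], provider: Callable[[bytes], str]) -> bool:
    """Spend one unused challenge; True if the provider's answer matches."""
    nonce, expected = pair
    return hmac.compare_digest(provider(nonce), expected)
```

Each precomputed pair should be used only once, since a replayed nonce could be answered from a digest the provider saved the first time.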

- A lot of meat on this bone. Scaling React Server-Side Rendering:

- Given reasonable estimates, when we compound these improvements, we can achieve an astounding 1288x improvement in total capacity!

- What I found so interesting about this project was where the investigative trail led. I assumed that improving React server-side performance would boil down to correctly implementing a number of React-specific best practices. Only later did I realize that I was looking for performance in the wrong places.

- The idea was that the monolith would continue to render JSP templates, but would delegate some parts of the page to the React service. The monolith would send rendering requests to the React service, including the names of components to render, and any data that the component would require. The React service would render the requested components, returning embeddable HTML, React mounting instructions, and the serialized Redux store to the monolith. Finally, the monolith would insert these assets into the final, rendered template. In the browser, React would handle any dynamic re-rendering. The result was a single codebase which renders on both the client and server — a huge improvement upon the status quo.

- Our service would be deployed as a Docker container within a Mesos/Marathon infrastructure. Due to extremely complex and boring internal dynamics, we did not have much horizontal scaling capacity. We weren’t in a position to be able to provision additional machines for the cluster. We were limited to approximately 100 instances of our React service. It wouldn’t always be this way, but during the period of transition to isomorphic rendering, we would have to find a way to work within these constraints.

- First, upgrade your Node and React dependencies. This is likely the easiest performance win you will achieve. In my experience, upgrading from Node 4 and React 15, to Node 8 and React 16, increased performance by approximately 2.3x.

- Double-check your load balancing strategy, and fix it if necessary. This is probably the next-easiest win. While it doesn’t improve average render times, we must always provision for the worst-case scenario, and so reducing 99th percentile response latency counts as a capacity increase in my book. I would conservatively estimate that switching from random to round-robin load balancing bought us a 1.4x improvement in headroom.

- Implement a client-side rendering fallback strategy. This is fairly easy if you are already server-side rendering a serialized Redux store. In my experience, this provides a roughly 8x improvement in emergency, elastic capacity. This capability can give you a lot of flexibility to defer other performance upgrades. And even if your performance is fine, it’s always nice to have a safety net.

- Implement isomorphic rendering for entire pages, in conjunction with client-side routing. The goal here is to server-side render only the first page in a user’s browsing session. Upgrading a legacy application to use this approach will probably take a while, but it can be done incrementally, and it can be Pareto-optimized by upgrading strategic pairs of pages. All applications are different, but if we assume an average of 5 pages visited per user session, we can increase capacity by 5x with this strategy.

- Install per-component caching in low-risk areas. I have already outlined the pitfalls of this caching strategy, but certain rarely modified components, such as the page header, navigation, and footer, provide a better risk-to-reward ratio. I saw a roughly 1.4x increase in capacity when a handful of rarely modified components were cached.

- Finally, for situations requiring both maximum risk and maximum reward, cache as many components as possible. A 10x or greater improvement in capacity is easily achievable with this approach. It does, however, require very careful attention to detail.
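The headline 1288x figure is a product of the individual multipliers. One combination of the numbers quoted above that reproduces it exactly is 2.3 × 1.4 × 8 × 5 × 10, which leaves out the 1.4x low-risk caching step. Our reading, an assumption since the excerpt does not say so, is that caching as many components as possible subsumes the low-risk caching step.

```python
import math

# Capacity multipliers quoted in the bullets above (low-risk caching omitted,
# on the assumption that aggressive component caching subsumes it).
multipliers = {
    "upgrade Node/React": 2.3,
    "round-robin load balancing": 1.4,
    "client-side rendering fallback": 8,
    "isomorphic rendering, first page only": 5,
    "cache as many components as possible": 10,
}

total = math.prod(multipliers.values())
print(f"{total:.0f}x")
```

Multiplying like this treats each gain as independent; in practice a later optimization can be worth less once an earlier one has already changed where the time goes.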

- Looks like a good option. Deploy your side-projects at scale for basically nothing - Google Cloud Run. Fully managed. Cheap. Language agnostic. Scalable.

- @fatih: Can confirm. Cloud Run is the user experience people want instead of Kubernetes. Now they need to figure out how to handle persistent data and it’ll be a great product for many projects.

- ranciscop: I have used it for work-related reasons and indeed the service is quite nice. But I don't use Google Cloud Run for personal projects, for two reasons. First, there's no way of limiting the expenses AFAIK. I don't want the possibility of having a huge bill in my name that I cannot pay. This unfortunately applies to other clouds. Second, the risk of being locked out. For many, many reasons (including the above), you can get locked out of the whole ecosystem. I depend on Google for both Gmail and Android, so being locked out would be a disaster. To use Google Cloud, I'd basically need to migrate out of Google in other places, which is a huge initial cost.

- Choose different languages for different jobs. Why we’re writing machine learning infrastructure in Go, not Python:

- Concurrency is crucial for machine learning infrastructure; Building a cross-platform CLI is easier in Go; The Go ecosystem is great for infrastructure projects; Go is just a pleasure to work with; Python for machine learning, Go for infrastructure

- alebkaiser: We thought similarly to you about the relative ease of "pip install", which is why we originally wrote the CLI in Python. Pretty immediately, however, we heard back from users who experienced friction. With the Go binary, we're able to share a one-line bash command that users on Mac/Linux (Windows coming soon) can run to install the Cortex CLI, which removed the need for us to instruct users on how to configure their local environments. We've found that this one-line install works better for our users: https://www.cortex.dev/install Also, when we had the Python CLI, some of our users complained that in their CI systems which ran `cortex deploy`, they didn’t need Python/Pip in their images, and installing them was inconvenient.

- Does this mean we're in the early majority or late majority part of the technology adoption cycle? Nasdaq CIO Sees Serverless Computing as a 2020 Tech Trend.

- Can you run a bank on serverless? Temenos provides banking software for 41 of the world's top 50 banks. That encompasses 500,000,000 banking customers. So, yes. Temenos: Building serverless banking software at scale.

- Drivers: unpredictable workloads and ease of maintenance. They need to burst up and down. They need lights-out datacenters. Banks don't want the cost and the people associated with having an IT department.

- It uses a traditional architecture with an OLTP path and a query path; the difference is that managed services are used for both legs.

- API Gateway fronts the system. ELB routes to Fargate for transaction processing. Data is persisted to RDS. Events from the transaction go into Kinesis, which routes them to lambda. The lambda functions create a data model that's pushed into DynamoDB. Why two databases? RDS is the OLTP database that processes transactions.

- DynamoDB is optimized to handle queries. API Gateway can go directly to lambda which talks to DynamoDB.

- CloudFormation scripts spin up the architecture.

- Both Fargate and lambda scale up and down as needed.

- OLTP is at 12k TPS. Overall 50k+ TPS. Nothing special was needed to make lambda and DynamoDB work at high volumes.
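The write-path/read-path split described above is a CQRS-style design. The sketch below models it in-process so it runs anywhere: a list stands in for the RDS transaction store, a `queue.Queue` for Kinesis, a projector function for the Lambda consumers, and a dict for the DynamoDB read model. All names are hypothetical; it shows the data flow, not any AWS API.

```python
import queue
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Transaction:
    account_id: str
    amount_cents: int  # positive for credits, negative for debits


oltp_log: List[Transaction] = []           # stand-in for the RDS OLTP store
event_stream: queue.Queue = queue.Queue()  # stand-in for the Kinesis stream
balances: Dict[str, int] = {}              # stand-in for the DynamoDB read model


def process_transaction(txn: Transaction) -> None:
    """Command side: persist the transaction, then publish an event."""
    oltp_log.append(txn)
    event_stream.put(txn)


def project_events() -> None:
    """Query side: drain pending events and update the query-optimized view."""
    while not event_stream.empty():
        txn = event_stream.get()
        balances[txn.account_id] = balances.get(txn.account_id, 0) + txn.amount_cents


def get_balance(account_id: str) -> int:
    """Reads hit only the read model, never the OLTP store."""
    return balances.get(account_id, 0)
```

The read model is eventually consistent: until the projector runs, a balance query can lag the OLTP store, which is the usual price of scaling the two legs independently.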

- 12 QUICK TIPS FOR AWS LAMBDA OPTIMIZATION:

- Choose interpreted languages like Node.js or Python over languages like Java and C# if cold start time is affecting user experience;

- Keep in mind that Java and other compiled languages perform better than interpreted ones for subsequent requests;

- If you have to go with Java, you can use Provisioned Concurrency to address the cold start time issue;

- Go for Spring Cloud Function rather than the Spring Boot web framework;

- Use the default network environment unless you need a VPC resource with private IP. This is because setting up ENIs takes significant time and adds to the cold start time;

- Remove all unnecessary dependencies that are not required to run the function;

- Use Global/Static variables and singleton objects as these remain alive until the container goes down;

- Define your DB connections at the Global level so that they can be reused for subsequent invocations;

- If your Lambda in a VPC is calling an AWS resource, avoid DNS resolution as it takes significant time;

- If you are using Java, it is recommended to put your dependency .jar files in a separate /lib directory rather than alongside your function code.
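The global-variable and DB-connection tips can be sketched in Python using Lambda's `handler(event, context)` convention. `create_connection` is a hypothetical stand-in for a real database driver call, so the snippet runs without AWS. The point is that module-level code runs once per execution environment (at cold start), while the handler body runs on every invocation.

```python
import itertools

_connection_ids = itertools.count(1)


def create_connection() -> dict:
    """Hypothetical stand-in for an expensive database driver connect() call."""
    return {"id": next(_connection_ids)}


# Module-level (global) code runs once per execution environment, during the cold
# start, so every warm invocation handled by this environment reuses this object.
DB_CONNECTION = create_connection()


def handler(event, context):
    """Lambda-style entry point: uses the shared connection instead of reconnecting."""
    return {"connection_id": DB_CONNECTION["id"], "echo": event.get("value")}
```

A production version would also check that the cached connection is still alive and reconnect if it has been dropped between invocations.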

- We need better IO. From FLOPS to IOPS: The New Bottlenecks of Scientific Computing:

- the bottleneck for many scientific applications is no longer floating point operations per second (FLOPS) but I/O operations per second (IOPS).

- plasma simulation simulates billions of particles in a single run, but analyzing the results requires sifting through a single frame (a multi-dimensional array) that is more than 50 TB in size, and that is for only one timestep of a much longer simulation.

- two-photon imaging of a mouse brain yields up to 100 GB of spatiotemporal data per hour, and electrocorticography (ECoG) recordings yield 280 GB per hour.

- An idea that is seeing significant application traction is to transition away from block-based, POSIX-compliant file systems towards scalable, transactional object stores. The Intel Distributed Asynchronous Object Storage (DAOS) project is one such effort to reinvent the exascale storage stack. The main storage entity in DAOS is a container, which is an object address space inside a specific pool. Containers support different schemata, including a filesystem, a key/value store, a database table, an array and a graph. Each container is associated with metadata that describe the expected access pattern (read-only, read-mostly, read/write), the desired redundancy mechanism (replication or erasure code), and the desired striping policy.

- We need better distributed computing. Future Directions for Parallel and Distributed Computing.

- Existing parallel and distributed computing abstractions impose substantial complexity on the software development process, requiring significant developer expertise to extract commensurate performance from the aggregated hardware resources. An imminent concern is that the end of Dennard scaling and Moore’s law will mean that future increases in computational capacity cannot be obtained simply from increases in the capability of individual cores and machines in a system.

- new abstractions are needed to manage the complexity of developing systems at the scale and heterogeneity demanded by new computing opportunities. This applies at all levels of the computing stack

- Traditional boundaries between disciplines need to be reconsidered. Achieving the community’s goals will require coordinated progress in multiple disciplines within CS, especially across hardware-oriented and software-oriented disciplines.

- The end of Moore’s Law and Dennard scaling necessitate increased research efforts to improve performance in other ways, notably by exploiting specialized hardware accelerators and considering computational platforms that trade accuracy or reliability for increased performance.

- Ease of development, and the need to assure correctness, are fundamental considerations that must be taken into account in all aspects of design and implementation.

- Full-stack, end-to-end evaluation is critical. Innovations and breakthroughs in specific parts of the hardware/software stack may have effects and require changes throughout the system.

- 6 Lessons learned from optimizing the performance of a Node.js service:

- performance testing can give us confidence that we are not degrading performance with each release;

- by “cranking up” the load we can expose problems before they ever reach production;

- long load tests can surface different kinds of problems. If everything looks OK, try extending the duration of the test;

- don’t forget to consider DNS resolution when thinking about outgoing requests. And don’t ignore the record’s TTL: it can literally break your app;

- batch I/O operations! Even when async, I/O is expensive;

- before attempting any improvements, you should have a test whose results you trust.
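The DNS lesson can go wrong in either direction: never caching means a lookup on every outgoing request, while caching forever ignores the record's TTL and breaks when the address changes. A minimal TTL-respecting cache is sketched below in Python rather than Node.js. The resolver is injected as a callable returning `(address, ttl_seconds)`; that shape, and the class itself, are assumptions for illustration, not a real library API.

```python
import time
from typing import Callable, Dict, Tuple

Resolver = Callable[[str], Tuple[str, float]]  # hostname -> (address, ttl_seconds)


class TTLDnsCache:
    def __init__(self, resolve: Resolver, clock: Callable[[], float] = time.monotonic):
        self._resolve = resolve
        self._clock = clock
        # hostname -> (address, absolute expiry time on the injected clock)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def lookup(self, hostname: str) -> str:
        now = self._clock()
        cached = self._entries.get(hostname)
        if cached is not None and cached[1] > now:
            return cached[0]  # still within the record's TTL: no network lookup
        address, ttl = self._resolve(hostname)  # expired or missing: resolve again
        self._entries[hostname] = (address, now + ttl)
        return address
```

Injecting the clock keeps the expiry logic testable without sleeping, and injecting the resolver keeps the cache independent of any particular DNS client.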

- A thoughtful analysis of the tradeoffs. Emphasis is on using Azure Functions. To Serverless or Not To Serverless:

- The way I see it, Serverless doesn’t have many advantages in comparison to traditional applications when it comes to handling steady-state scaling, apart, potentially, from the peace of mind of knowing you don’t even have to think about it.

- While we haven’t encountered that many actual bugs (only one, having to do with dependency injection), we have encountered many times where we felt like the documentation was out-of-date, inaccurate or simply missing.

- You lose some control, and you’re more at the mercy of the bindings. For example, there’s some reasonably advanced options we’d like to configure in regards to how we read events from our Event Hubs. However these configurations aren’t exposed by the Azure Functions runtime, so if we end up using Functions, we’ll have to live with not being able to configure that.

- There’s a different skill set to learn when using functions. While much of the business logic might be the same, the way you architect systems doesn’t necessarily carry over.

- If you have a system that relies heavily on caches, that’s harder when using Functions. In regular applications you can cache things in-memory, but that’s not always possible with functions, as you have no knowledge of how often a function is discarded and re-created.

- These techniques are broadly applicable to different environments, but what's especially interesting is to see actual improvement numbers. Building a blazingly fast Android app, Part 2:

- Delaying expensive object initialization (-300ms app startup time);

- Optimizing class loading (-400ms app startup time);

- Lazily initializing objects (-70ms app startup time);

- Optimizing low-level calls (-50ms page load time for various pages);

- Proactive asynchronous view inflation (-115ms page load time for notifications page);

- Parallelization of server and cache requests (-208ms page load time for the “My Network” page);

- Preload data before onCreateView() (-102ms page load time for the “My Network” page);

- We leverage our A/B testing platform to control the ramp of the optimization experiment.
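The "parallelization of server and cache requests" item is a general pattern: if a page needs both a cached copy and a fresh server response, issuing the two at once makes latency roughly the slower of the two instead of their sum. The sketch uses Python's `asyncio` in place of the app's Android code; `fetch_from_cache`, `fetch_from_server`, and their delays are hypothetical.

```python
import asyncio


async def fetch_from_cache(page: str) -> str:
    await asyncio.sleep(0.05)  # simulated local cache read
    return f"cached:{page}"


async def fetch_from_server(page: str) -> str:
    await asyncio.sleep(0.2)  # simulated network round trip
    return f"fresh:{page}"


async def load_sequential(page: str) -> tuple:
    # One after the other: total time is roughly 0.05 s + 0.2 s.
    return await fetch_from_cache(page), await fetch_from_server(page)


async def load_parallel(page: str) -> tuple:
    # Both requests in flight at once: total time is roughly max(0.05 s, 0.2 s).
    cached, fresh = await asyncio.gather(fetch_from_cache(page), fetch_from_server(page))
    return cached, fresh


print(asyncio.run(load_parallel("my-network")))
```

In an app you would typically render the cached result as soon as it arrives and then swap in the fresh one, rather than waiting for both as this sketch does.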

- A Scientific Approach to Capacity Planning: From the analysis above, it should be evident that we cannot always solve scalability problems by merely adding more hardware. While the marginal cost of adding more hardware may be low in terms of actual dollar amounts, the benefits are almost entirely wiped out in our example scenario. If engineers do not implement a more precise, scientific way of approaching capacity planning, they will reach a point at which they will be throwing more money at the problem but will see diminishing returns due to the coherency penalty (β > 0). While the USL is not to be taken as gospel, it does give us a model to quickly analyze the system’s capacity trend. Capacity and scalability problems should be solved through a combination of hardware and software optimizations, not any one alone. With just a few data points obtained via load testing or from production systems, the USL can provide an estimate of what the optimum capacity needs to be at higher load levels. Additional load tests may be executed with the load input doubled as suggested by the USL to validate the model. The USL is still in its nascency at Wayfair as teams are just beginning to implement it for their capacity planning and measure its effectiveness.
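For reference, the Universal Scalability Law (Neil Gunther's model) gives relative capacity at concurrency N as C(N) = N / (1 + α(N − 1) + βN(N − 1)), where α is the contention penalty and β the coherency penalty mentioned above. Setting the derivative to zero gives the peak at N* = √((1 − α)/β). The coefficients below are hypothetical; in practice they are fitted from a few load-test or production data points.

```python
import math


def usl_capacity(n: float, alpha: float, beta: float) -> float:
    """Relative capacity C(N) under the Universal Scalability Law."""
    return n / (1 + alpha * (n - 1) + beta * n * (n - 1))


def usl_peak(alpha: float, beta: float) -> float:
    """Concurrency N* that maximizes C(N); requires beta > 0."""
    return math.sqrt((1 - alpha) / beta)


# Hypothetical coefficients: 5% contention, 0.1% coherency penalty.
alpha, beta = 0.05, 0.001
peak = usl_peak(alpha, beta)
for n in (1, 10, round(peak), 100, 200):
    print(n, round(usl_capacity(n, alpha, beta), 1))
```

With these coefficients, capacity peaks near N ≈ 31 at about 9x a single node, and going from 100 to 200 actually lowers throughput (roughly 6.3x down to 3.9x). That is the "throwing more money at the problem" regime the excerpt warns about.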

- Very rich and detailed articles. The Architecture of a Large-Scale Web Search Engine, circa 2019 and Indexing Billions of Text Vectors

- A great story. Mitigating a DDoS, or: how I learned to stop worrying and love the CDN. But pjc50 nailed my thoughts: Decentralisation fans take note: despite wanting to remain independent, the only effective solution was in this case to re-insert a giant global intermediary (Cloudflare) and block all the anonymous unaccountable Tor users. If a decentralised system is to stay decentralised, it needs to consider spammy bad actors.

Soft Stuff:

- fabiolb/fabio: a fast, modern, zero-conf load balancing HTTP(S) and TCP router for deploying applications managed by consul.

- Nexenta/edgefs (article): high-performance, low-latency, small memory footprint, decentralized data fabric system released under Apache License v2.0 developed in C/Go. EdgeFS is built around decentralized immutable metadata consistency to sustain network partitioning up to many days and yet provide consistent global data fabric namespace via unique versions reconciliation technique.

Pub Stuff:

- Plasticine: A Reconfigurable Architecture For Parallel Patterns: a new spatially reconfigurable architecture designed to efficiently execute applications composed of parallel patterns. Parallel patterns have emerged from recent research on parallel programming as powerful, high-level abstractions that can elegantly capture data locality, memory access patterns, and parallelism across a wide range of dense and sparse applications. Using a cycle-accurate simulator, we demonstrate that Plasticine provides an improvement of up to 76.9× in performance-per-Watt over a conventional FPGA over a wide range of dense and sparse applications.

- Part II: Control Theory for Buffer Sizing: This article describes how control theory has been used to address the question of how to size the buffers in core Internet routers. Control theory aims to predict whether the network is stable, i.e. whether TCP flows are desynchronized. If flows are desynchronized then small buffers are sufficient [14]; the theory here shows that small buffers actually promote desynchronization—a virtuous circle.

- Exploiting a Natural Network Effect for Scalable, Fine-grained Clock Synchronization: In this paper, we present HUYGENS, a software clock synchronization system that uses a synchronization network and leverages three key ideas. First, coded probes identify and reject impure probe data—data captured by probes which suffer queuing delays, random jitter, and NIC timestamp noise. Next, HUYGENS processes the purified data with Support Vector Machines, a widely-used and powerful classifier, to accurately estimate one-way propagation times and achieve clock synchronization to within 100 nanoseconds. Finally, HUYGENS exploits a natural network effect—the idea that a group of pair-wise synchronized clocks must be transitively synchronized—to detect and correct synchronization errors even further. We show that HUYGENS achieves synchronization to within a few 10s of nanoseconds under varying loads, with a negligible overhead upon link bandwidth due to probes.

- First-Hand: No Damned Computer is Going to Tell Me What to DO - The Story of the Naval Tactical Data System, NTDS: It was 1962. Some of the prospective commanding officers of the new guided missile frigates, now on the building ways, had found out that the Naval Tactical Data System (NTDS) was going to be built into their new ship, and it did not sit well with them. Some of them came in to our project office to let us know first hand that no damned computer was going to tell them what to do. For sure, no damned computer was going to fire their nuclear tipped guided missiles. They would take their new ship to sea, but they would not turn on our damned system with its newfangled electronic brain.

- Stop using entrails to predict costs. Try something slightly less esoteric. Predicting the Costs of Serverless Workflows: In this paper, we propose a methodology for the cost prediction of serverless workflows consisting of input-parameter sensitive function models and a monte-carlo simulation of an abstract workflow model. Our approach enables workflow designers to predict, compare, and optimize the expected costs and performance of a planned workflow, which currently requires time-intensive experimentation. In our evaluation, we show that our approach can predict the response time and output parameters of a function based on its input parameters with an accuracy of 96.1%. In a case study with two audio-processing workflows, our approach predicts the costs of the two workflows with an accuracy of 96.2%.
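The Monte Carlo half of that methodology can be sketched in a few lines: sample each function's duration from a distribution, compute the per-run cost, and aggregate over many simulated runs. Everything here is an assumption for illustration: the two-step workflow, the lognormal duration parameters, the memory sizes, and the per-GB-second price (check your provider's current rate). The paper additionally learns input-parameter-sensitive models for each function, which this sketch leaves out.

```python
import random
import statistics
from typing import Tuple

PRICE_PER_GB_SECOND = 0.0000166667  # hypothetical price; substitute your provider's rate

# Hypothetical sequential two-step audio workflow:
# (step name, memory in GB, lognormal mu, lognormal sigma of duration in seconds)
WORKFLOW = [
    ("transcode", 1.0, 0.5, 0.3),
    ("analyze", 2.0, 1.0, 0.4),
]


def simulate_run(rng: random.Random) -> float:
    """Cost of one simulated workflow execution."""
    cost = 0.0
    for _name, memory_gb, mu, sigma in WORKFLOW:
        duration_s = rng.lognormvariate(mu, sigma)
        cost += duration_s * memory_gb * PRICE_PER_GB_SECOND
    return cost


def predict_cost(runs: int = 10_000, seed: int = 42) -> Tuple[float, float]:
    """Return (mean, 95th percentile) cost per workflow execution."""
    rng = random.Random(seed)
    costs = [simulate_run(rng) for _ in range(runs)]
    return statistics.fmean(costs), statistics.quantiles(costs, n=20)[-1]
```

Reporting a tail percentile alongside the mean matters for budgeting, since duration distributions are usually right-skewed and the occasional slow run drives the bill.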