Stuff The Internet Says On Scalability For January 3rd, 2014

Hey, it's HighScalability time, can you handle the truth?

Should software architectures include parasites? They increase diversity and complexity in the food web.

- 10 Million: classic hockey stick growth pattern for GitHub repositories

- Quotable Quotes:

- Seymour Cray: A supercomputer is a device for turning compute-bound problems into IO-bound problems.

- Robert Sapolsky: And why is self-organization so beautiful to my atheistic self? Because if complex, adaptive systems don’t require a blue print, they don’t require a blue print maker. If they don’t require lightning bolts, they don’t require Someone hurtling lightning bolts.

- @swardley: Asked for a history of PaaS? From memory, public launch - Zimki ('06), BungeeLabs ('06), Heroku ('07), GAE ('08), CloudFoundry ('11) ...

- @neil_conway: If you're designing scalable systems, you should understand backpressure and build mechanisms to support it.

- Scott Aaronson: ...the brain is not a quantum computer. A quantum computer is good at factoring integers, discrete logarithms, simulating quantum physics, and modest speedups for some combinatorial algorithms; none of these have obvious survival value. The things we are good at are not the same things quantum computers are good at.

- @rbranson: Scaling down is way cooler than scaling up.

- @rbranson: The i2 EC2 instances are a huge deal. Instagram could have put off sharding for 6 more months, would have had 3x the staff to do it.

- @mraleph: often devs still approach performance of JS code as if they are riding a horse cart but the horse had long been replaced with fusion reactor.

- Now we know the cost of bandwidth: Netflix’s new plan: save a buck with SD-only streaming.

- Massively interesting Stack Overflow thread on Why is processing a sorted array faster than an unsorted array? Compilers may grant a hidden boon or turn traitor with a deep deceit. How do you tell? It's about branch prediction.

- Can your database scale to 1000 cores? Nope. Concurrency Control in the Many-core Era: Scalability and Limitations: We conclude that rather than pursuing incremental solutions, many-core chips may require a completely redesigned DBMS architecture that is built from ground up and is tightly coupled with the hardware.

- Not all SSDs are created equal. Power-Loss-Protected SSDs Tested: Only Intel S3500 Passes. With a follow up. If data on your SSD can't survive a power outage it ain't worth a damn.

- It's not just Moore's suggestion that pushes functionality to commodity processors, the chips themselves are adding more specialized features. Server Performance, Network Agents, Software Routers and Networking: I’ve seen information from Intel that clearly shows that current generations of server hardware can easily handle 10Gbps of traffic and probably more than 40Gbps with just a single CPU Core.

- Nick Heudecker: Innovations in the operational DBMS area have developed around flash memory, DRAM improvements, new processor technology, networking and appliance form factors. Flash memory devices have become faster, larger, more reliable and cheaper. DRAM has become far less costly and grown in size to greater than 1TB available on a server.

- BirdWatch: an open-source application that shows off some of the cool things developers can do with Elasticsearch. Tweets matching a selection of terms are consumed via the Twitter Streaming API and stored in Elasticsearch.

- Pat Helland with a classically great article on Idempotence. Fortunately, the article is not idempotent. Every time you read it your brain updates with something new.

- Maybe it's a Timey Wimey thing? Moore’s Law and the Origin of Life: As life has evolved, its complexity has increased exponentially, just like Moore’s law. In April, geneticists announced they had extrapolated this trend backwards and found that by this measure, life is older than the Earth itself.

- Attack the network, not the nodes. The neural basis of impaired self-awareness after traumatic brain injury: The impairment of self-awareness was not explained either by the location of focal brain injury, or the amount of traumatic axonal injury as demonstrated by diffusion tensor imaging. The results suggest that impairments of self-awareness after traumatic brain injury result from breakdown of functional interactions between nodes within the fronto-parietal control network.

- Batching is a powerful idea with bits or atoms. Contain yourself: It is somewhere around bag 273 that you start thinking about container shipping. The idea that, rather than move things one by one, you can put them into some kind of container and then let a series of very powerful pieces of equipment take over, moving the whole container from shipyard to ship, from ship to shipyard, from shipyard to truck, is immensely appealing.

- Yep, disks are best thought of as streaming IO devices, not random IO devices. Reducing the cost of writing to disk: we found out that the major cost of random writes in our tests was actually writing to disk. Writing 500K sequential items resulted in about 300 MB being written. Writing 500K random items resulted in over 2.3 GB being written.

- LinkedIn's Mobile Stack: node.js, HTTP APIs, backend services, Voldemort and LiX.
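To make the @neil_conway quote about backpressure a little more concrete, here is a minimal sketch, assuming a single-threaded simulation with a bounded queue. The names `offer` and `simulate` are hypothetical and do not come from any of the linked articles. A full queue rejects new work instead of buffering without limit, and that rejection is the signal a producer can use to slow down, retry later, or shed load.

```python
import queue


def offer(q, item):
    """Try to enqueue without blocking; return False when the queue is full."""
    try:
        q.put_nowait(item)
        return True
    except queue.Full:
        return False


def simulate(capacity, arrivals, drain_every):
    """Hypothetical simulation: the consumer removes one item after every
    `drain_every` arrivals, so it is slower than the producer."""
    q = queue.Queue(maxsize=capacity)
    accepted = rejected = 0
    for i in range(arrivals):
        if offer(q, i):
            accepted += 1
        else:
            # Backpressure signal: the caller learns the system is saturated.
            rejected += 1
        if (i + 1) % drain_every == 0 and not q.empty():
            q.get_nowait()
    return accepted, rejected, q.qsize()


if __name__ == "__main__":
    print(simulate(capacity=2, arrivals=10, drain_every=3))
```

The queue never grows past `capacity`, so memory stays bounded however fast work arrives. A real system would typically propagate the rejection upstream (for example as a retry-later response) rather than just count it.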

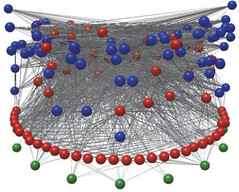

- A key idea in software architecture is creating separation, independence, and isolation. That creates parallelism and that creates speed and scalability. Maybe for a smarter world we need to rethink that notion going forward? In the Human Brain, Size Really Isn’t Everything: Human brains are different. As they got bigger, their sensory and motor cortices barely expanded. Instead, it was the regions in between, known as the association cortices, that bloomed. Our association cortices are crucial for the kinds of thought that we humans excel at. Among other tasks, association cortices are crucial for making decisions, retrieving memories and reflecting on ourselves. Association cortices are also unusual for their wiring. They are not connected in the relatively simple, bucket-brigade pattern found in other mammal brains. Instead, they link to one another with wild abandon. A map of association cortices looks less like an assembly line and more like the Internet, with each region linked to others near and far.

- I'm a state machine pimp. Replicant: Replicated State Machines Made Easy: The primary technique for masking failures is, of course, to replicate the state and functionality of the distributed system so that the service as a whole can continue to function even as some pieces may fail.

- The scale of things: For cities, West suggests that there are two interacting networks: people and infrastructure. Data from urban areas around the world, no matter the culture, geography or history, reveal that a doubling in population increases per capita advantages like wages and per capita troubles like crime by 15 percent while saving 15 percent on infrastructure like roads.

- Optimizing Time to First Byte is not just a goal for Vampires, it's also an untapped optimization path in browsers. Ilya Grigorik with a great explanation and how to: Optimizing NGINX TLS Time To First Byte (TTTFB): With all said and done, our TTTFB is down to ~1560ms, which is exactly one roundtrip higher than a regular HTTP connection. Now we're talking!

- Intriguing brain and software similarity. The brain’s visual data-compression algorithm: we have now demonstrated that the visual cortex suppresses redundant information and saves energy by frequently forwarding image differences. < Diffs aren't just a source control idea; they are also used to send only the properties that have changed in a data structure.

- Good advice on figuring out which of these 2 cases for MySQL server overload you have: MySQL gets more work than normal; MySQL processes the usual amount of work slower.

- Great explanation of Writing a full-text search engine using Bloom filters. Bill Cheswick sums it up: "Downloading pre-computed information to a small client program is a fine solution."

- Not as good as Santa's, but still good: CDA 5106 - Advanced Computer Architecture I reading list.

- For everything there is a season? Why Dataflow is not Popular: There was no reason for developers to switch to Dataflow because soon their slow application will run at an acceptable rate on a new, faster microprocessor. Now that we have come to the end of Moore’s Law the only way to get faster is by using multiple processors in parallel. Now everyone is looking around for alternatives to threads and locks. Also, An Asynchronous Dataflow Implementation – Part 2.

- Concurrency Models, Rust, and Servo: I am not strongly arguing in favor of synchronous message sending. There are some patterns in servo that are much easier with asynchronous sending. Beyond the profiler example earlier, we would also like for our resources parsers to be able to deliver incremental results without blocking the process of parsing. But, since we are already performing a context switch in >99% of the send calls today, it's hard to believe that we're taking advantage of the #1 advantage we currently have - doing other work in the original computation while we wait for another computation to receive the message.

- Network Coded TCP (CTCP) Performance over Satellite Networks: We show preliminary results for the performance of Network Coded TCP (CTCP) over large latency networks. While CTCP performs very well in networks with relatively short RTT, the slow-start mechanism currently employed does not adequately fill the available bandwidth when the RTT is large. Regardless, we show that CTCP still outperforms current TCP variants (i.e., Cubic TCP and Hybla TCP) for high packet loss rates (e.g., >2.5%).

- The Era of Cognitive Systems: An Inside Look at IBM Watson and How it Works: Natural language processing by helping to understand the complexities of unstructured data, which makes up as much as 80 percent of the data in the world today; hypothesis generation and evaluation by applying advanced analytics to weigh and evaluate a panel of responses based on only relevant evidence; dynamic learning by helping to improve learning based on outcomes to get smarter with each iteration and interaction.

- BIDMach: Large-scale Learning with Zero Memory Allocation: This paper describes recent work on the BIDMach toolkit for large-scale machine learning. BIDMach has demonstrated single-node performance that exceeds that of published cluster systems for many common machine-learning tasks. BIDMach makes full use of both CPU and GPU acceleration (through a sister library BIDMat), and requires only modest hardware (commodity GPUs).

- Nanocubes: Fast Visualization of Large Spatiotemporal Datasets: Nanocubes are a fast datastructure for in-memory data cubes developed at the Information Visualization department at AT&T Labs – Research. Nanocubes can be used to explore datasets with billions of elements at interactive rates in a web browser, and in some cases it uses sufficiently little memory that you can run a nanocube in a modern-day laptop.
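For the Bloom filter full-text search item above, here is a rough sketch of the general idea, not the linked article's implementation: build one small Bloom filter per document ahead of time, ship the filters to the client, and answer queries by testing each filter. The class and function names, the filter size, and the hashing scheme (salted SHA-256) are all assumptions chosen for illustration.

```python
import hashlib


class BloomFilter:
    """Tiny Bloom filter backed by a Python int used as a bit array."""

    def __init__(self, size_bits=1024, num_hashes=3):
        self.size = size_bits
        self.k = num_hashes
        self.bits = 0

    def _positions(self, word):
        # Derive k bit positions by salting SHA-256 with the hash index.
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{word}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, word):
        for pos in self._positions(word):
            self.bits |= 1 << pos

    def might_contain(self, word):
        # True can be a false positive; False is always correct.
        return all((self.bits >> pos) & 1 for pos in self._positions(word))


def build_index(docs):
    """Precompute one filter per document (the part done on the server)."""
    index = {}
    for name, text in docs.items():
        bf = BloomFilter()
        for word in text.lower().split():
            bf.add(word)
        index[name] = bf
    return index


def search(index, query):
    """Return documents whose filter may contain every query term."""
    terms = query.lower().split()
    return sorted(
        name for name, bf in index.items()
        if all(bf.might_contain(t) for t in terms)
    )


if __name__ == "__main__":
    docs = {
        "a.txt": "bloom filters make compact search indexes",
        "b.txt": "container shipping batches atoms like bits",
    }
    print(search(build_index(docs), "bloom filters"))
```

A Bloom filter never produces a false negative, so a document that contains every query term is always returned. It can occasionally return a document that does not, which is the trade accepted in exchange for a precomputed index small enough to download.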