Stuff The Internet Says On Scalability For January 5th, 2018

Hey, it's HighScalability time:

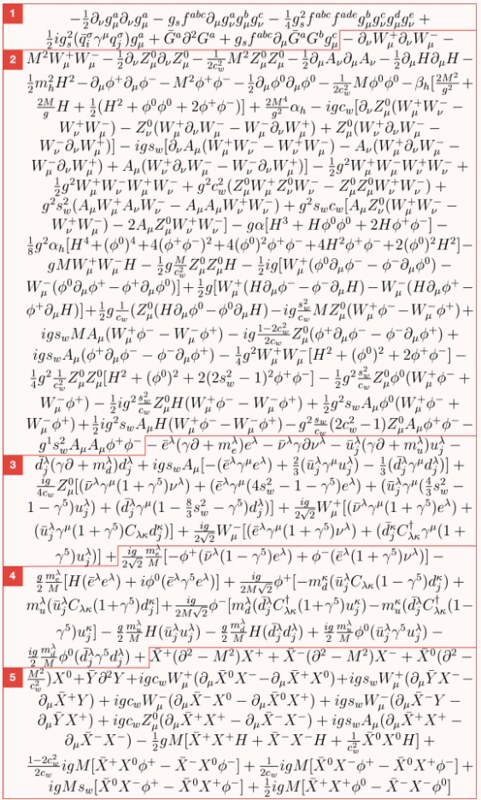

All we know about how the universe works: the Standard Model, plus a mind-blowing video.

If you like this sort of Stuff then please support me on Patreon. And I'd appreciate your recommending my new book—Explain the Cloud Like I'm 10—to anyone who needs to understand the cloud (who doesn't?). I think they'll like it. Now with twice the brightness and new chapters on Netflix and Cloud Computing.

- 15: datacenters not built because of Google's TPU; 5 billion: items shipped by Amazon Prime; 600: free online courses; 1.6 million: React downloads per week; 140 milliseconds: time Elon Musk's massive backup battery took to respond to a crisis at a power plant; 16: world-spanning Riot Games clusters; < $100 a kilowatt-hour: lithium-ion battery packs by 2025; 23%: OS performance penalty from fixing the Intel bug; 200k: pending Bitcoin transactions; 123 million: household data leak from marketing analytics company Alteryx; .67: hashes per day mining Bitcoin with pencil and paper; $21 million: projected cost of redundant power for Atlanta Airport; 62: nuclear test films; 10x: more galaxies in the universe; 55%: DuckDuckGo growth; 49%: increase in node downloads; 144 terabits/second: submarine cable from Hong Kong to L.A.; $100 million/day: spending on apps and advertising on the App Store.

- Quotable Quotes:

- @seldo: Two rounds of deep analysis of employee performance data at Google show that the top predictors of success are being a good communicator in a team where you feel emotionally safe

- @emilyrauhala: I'm on the Tianjin to Beijing train and the automated announcement just warned us that breaking train rules will hurt our personal credit scores!

- Laura M. Moy: Someone like me might be able to use cell-site location information to figure out where you live, where you work, where your kids go to school, whether you’re having an affair, and where you worship.

- Google: There has been speculation that the deployment of KPTI causes significant performance slowdowns. Performance can vary, as the impact of the KPTI mitigations depends on the rate of system calls made by an application. On most of our workloads, including our cloud infrastructure, we see negligible impact on performance.

- @cloud_opinion: 2010: AWS: Cloud is real Other vendors: Lol, bookseller, real money is in virtualisation 2017: AWS: Serverless is real Other vendors: Lol, real money is in Containers

- Peter Norvig: Understanding the brain is a fascinating problem but I think it’s important to keep it separate from the goal of AI which is solving problems. Each field can learn from the other, but if you conflate the two it’s like aiming at two mountain peaks at the same time—you usually end up in the valley between them.

- @bcrypt: IMO all software engineers should be required to program a laser machine to give themselves LASIK

- Errata Security: [Meltdown/Spectre] will force a redesign of CPUs and operating systems. While not a big news item for consumers, it's huge in the geek world. We'll need to redesign operating systems and how CPUs are made.

- Errata Security: the CPUs have no bug. The results are correct, it's just that the timing is different. CPU designers will never fix the general problem of undetermined timing.

- Linus Torvalds: I think somebody inside of Intel needs to really take a long hard look at their CPU's, and actually admit that they have issues instead of writing PR blurbs that say that everything works as designed.

- @mjg59: Your workload is almost certainly not syscall bound. Stop worrying about microbenchmarks.

- @BenedictEvans: 90% of adults on earth have a mobile phone now, and about 60% and growing have a smartphone.

- @vambenepe: WRT serverless data processing, I'm feeling pretty good about Google Cloud. Redshift: how big a cluster you want? BigQuery: just send queries EMR: how big a cluster you want? Dataflow: just send pipelines Kinesis: how many shards you want? Pub/Sub: just send events

- @mattiasgeniar: This is intense: AWS instances having 2x/3x the CPU load after the Intel patch got applied. Can your business afford a 3x increase in server spending? 😲

- @rsthau: 1) Vulnerability to cache timing attacks wasn't part of "correctness" criteria when this stuff was designed. 2) [For Intel] doing the access check in parallel with sequential execution saves time, with no "observable" effect if you ignore 1).

- Robert Sapolsky: When we stop fearing something, it isn’t because some amygdaloid neurons have lost their excitability. We don’t passively forget that something is scary. We actively learn that it isn’t anymore.

- RescueTime: Our data showed that we do our most productive work (represented by the light blue blocks) between 10 and noon and then again from 2-5pm each day. However, breaking it down to the hour, we do our most productive work on Wednesdays at 3pm.

- ForHackernews: Measurable: 8-12% - Highly cached random memory, with buffered I/O, OLTP database workloads, and benchmarks with high kernel-to-user space transitions are impacted between 8-12%. Examples include Oracle OLTP (tpm), MariaDB (sysbench), Postgres (pgbench), netperf (< 256 byte), fio (random IO to NVMe). Modest: 3-7% - Database analytics, Decision Support System (DSS), and Java VMs are impacted less than the “Measurable” category. These applications may have significant sequential disk or network traffic, but kernel/device drivers are able to aggregate requests to moderate level of kernel-to-user transitions. Examples include SPECjbb2005 w/ucode and SQL Server, and MongoDB.

- Dan Luu: It’s a bit absurd that a modern gaming machine running at 4,000x the speed of an apple 2, with a CPU that has 500,000x as many transistors (with a GPU that has 2,000,000x as many transistors) can maybe manage the same latency as an apple 2 in very carefully coded applications if we have a monitor with nearly 3x the refresh rate. It’s perhaps even more absurd that the default configuration of the powerspec g405, which had the fastest single-threaded performance you could get until October 2017, had more latency from keyboard-to-screen (approximately 3 feet, maybe 10 feet of actual cabling) than sending a packet around the world (16187 mi from NYC to Tokyo to London back to NYC, more due to the cost of running the shortest possible length of fiber).

- @michael_nielsen: A lot of important work comes from the constraint of having small compute. In ~2011 Ng et al attacked ImageNet with 1000+ CPUs. In 2012, Hinton et al did way better with 2 GPUs. In general, less compute often means people need better ideas. Which isn't a tragedy!

- shiny_ferret_smasher: Using the data above, average U.S. Netflix subscriber uses 208.36GB monthly. Average YouTube U.S. user uses 29.94GB monthly. As mentioned before, YouTube bitrate differs from that of Netflix, which affects data usage. Simply put, YouTube has a broader non-US market than Netflix does.

- Chris Mellor: 3D NAND bits surpassed 50 per cent of total NAND Flash bits supplied in 2017's third quarter, and are estimated to reach 85 per cent by the end of 2018.

- @cote: “direct expenditure on serverless can be cheaper than on VMs, but only where the number of times the code is executed is under about 500,000 executions per month” http://bit.ly/2CnMmms

- @odedia: "The Monolithic architecture is not an anti-pattern, it is a good choice for some applications". Excellent point by @crichardson

- David Rosenthal: Rakers agrees that in 2021 bulk data will still reside overwhelmingly on hard disk, with flash restricted to premium markets that can justify its higher cost.

- @tqbf: Or, “I kept 70 people late at the office 6 days a week for a year, then laid them off with 2 days notice 10 days before Christmas, and then bragged about it.”

- @krishnan: Most people will see increased infra costs from switching to AWS Fargate unless you are currently running many unused or underutilized EC2 instances under ECS. If you have already binpacked containers on your instances to get good utilization then self managed EC2 is cheaper

- @jasongorman: Always amazes me how few businesses see high-performing dev teams as assets. "Thanks John, Paul, George, Ringo. That 1st album sold gangbusters. Time to split you up."

- @copyconstruct: OH: "proxies are all the rage now but people keep forgetting what a huge single point of failure your proxy has now become. Soon enough this industry will be hiring PREs - proxy reliability engineers or SMRE - service mesh reliability engineer"

- AnandTech: For the most part, the Optane SSDs are holding to their MSRPs, leaving them more than twice as expensive per GB as the fastest NAND flash based SSDs. They're a niche product in the same vein as the extreme capacity models like Samsung's 2TB 960 PRO and 4TB 850 EVO. But where the benefits of expanded capacity are easy to assess, the performance benefits of the Optane SSD are more subtle. For most ordinary and even relatively heavy desktop workloads, high-end flash storage is fast enough that further improvements are barely noticeable.

- jospoortvliet: But, just like if you make one component (e.g., a piston) in a car engine 1000x faster the entire car won't drive 1000x faster - the other components also contribute to speed, as do external factors like, you know, wind, asphalt... So the car gets 10% faster as a whole. You see the same here: even if that one part is 1000x faster, flash controllers use a RAM cache and split data over a dozen channels to overcome the inherent limitations of flash, while the NVMe protocol and PCI Express put limits on latency improvements, so the end result is that the Optane PCIe devices are occasionally >10x faster than SSDs but generally a factor of 3-5 faster.

- @swardley: Levels of duplication such as 2x, 5x are the fantasy lands of startups for many corporates. I tend to more commonly see duplication at the 10x, 100x and 1000x level

- @5imian: "AWS is down." I looked to my coworker who was now tracing tribal glyphs on his face with a sharpie. "The machine is broken," he mused. "We must begin anew." Now fashioning a crude spear with tape and a plastic butter knife: "We will live off the land. We will find a better life."

- CDVagabundo: A Free tip from a friend Dev: DON'T SCALE FIRST. It means: at first, don't worry about scaling. Get your single server to work properly and players to have fun. Only scale when it becomes mandatory.

- Mark LaPedus: Momentum is building for a new class of ferroelectric memories that could alter the next-generation memory landscape[...]The industry has also been developing a different memory type called a ferroelectric FET (FeFET). Instead of using traditional FRAM materials, FeFETs and related technologies harness the ferroelectric properties in hafnium oxide, sometimes referred to as ferroelectric hafnium oxide. (FeFETs are different than a logic transistor type called finFETs).

- @SamGrittner: DAD: Pass the stuffing ME: *waits 20 minutes* DAD: What’s the hold up? ME: Give me $20 DAD: Are you out of your mind? ME: Do you want me to explain Net Neutrality or not?

- @mikegelhar: This quote is gold "Most benchmarks represent the first date experience not the marriage experience"

- matthelb: Maurice Herlihy and Jeanette Wing introduced linearizability back in 1990 [1]. It stipulates that the result of a concurrent execution of the system is equivalent to a result from a sequential execution where the order of the sequential execution respects the real-time order of operations in the concurrent execution.

- Abigail Beall: researchers used 15,000 signals to train the computers using a neural network and found it can identify planets with 96-percent accuracy. Overall, the project found 35,000 possible planetary signals in the data Kepler gathered over its four-year mission.

- @JoeEmison: The majority of benefit I get from serverless architectures is from cloud-specific services (lately: AppSync, Lex, Dynamo Streams, Connect). Lowest-Common-Denominator Cloud architectures are needlessly limiting. Would love for my competitors to use them.

- @securelyfitz: It seems google OCRs all the images in your inbox, which means Informed Delivery + gmail = text search of ads you get in the mail....

- Vamsee Juluri: the college curriculum should look at “meaning” as one of the main “needs” of a world undergoing rapid modernization and change. Society needs to equip itself with knowledge about how it came to be, what its present form means for different members, and where it is going. Education would need to include knowledge about one’s self, one’s place in the world, one’s relations to others, starting from parents and elders and moving on to children, and ultimately, to the living world of nature itself.

- @danluu: Is there a serious, undisclosed, CPU bug out there?

- Elliot Forbes: Serverless computing will have as big an impact on software development as Unity3D had on Game Development.

- alexebird: For some reason Nomad seems to get noticeably less publicity than some of the other Hashicorp offerings like Consul, Vault, and Terraform. In my opinion Nomad is right up there with them. The documentation is excellent. I haven’t had to fix any upstream issues in about a year of development on two separate Nomad clusters. Upgrading versions live is straightforward, and I rarely find myself in a situation where I can’t accomplish something I envisioned because Nomad is missing a feature. It schedules batch jobs, cron jobs, long running services, and system services that run on every node. It has a variety of job drivers outside of Docker.

- laurentl: We use Lambda + API GW to manage the glue between our different data/service providers. So for instance we expose a "services" API (API GW) that takes a request, does some business logic (lambda code), calls the relevant provider(s) and returns the aggregate response. That principle can (and probably will) be extended to hosting our own back-end / business logic.

- cle: The most painful part [Lambda] has been scaling up the complexity of the system while maintaining fault tolerance. At some point you start running into more transient failures, and recovering from them gracefully can be difficult if you haven't designed your system for them from the beginning. This means everything should be idempotent and retryable--which is surprisingly hard to get right. And there isn't an easy formula to apply here--it requires a clear understanding of the business logic and what "graceful recovery" means for your customers.

- @reneritchie: This stuff is complicated. Samsung got caught throttling everything except for benchmark apps. (LOL.) Balancing modern smartphone SoC vs. batteries is a huge challenge for vendors.

- sdfsaasa: You hit the nail on the head. Kubernetes does eat a lot of resources. Kubernetes eats up like 70% of a micro instance and leaves you with enough to run like 5 small pods. You end up having to upgrade from micro to small and the $20/mo you were expecting to pay for a side project jumps to $60/mo.

- David Rosenthal: Fifth, Young shows no understanding of the timescales required to bring a new storage technology into the mass market. Magnetic tape was first used for data storage more than 65 years ago. Disk technology is more than 60 years old. Flash will celebrate its 30th birthday next year. It is in many ways superior to both disk and tape, but it has yet to make a significant impact in the market for long-term bulk data storage. DNA and the other technologies discussed in this part will be lucky if they enter the mass market two decades from now.

- Matthew Green: Those fossilized printers confirmed a theory we’d developed in 2014, but had been unable to prove: namely, the existence of a specific feature in RSA’s BSAFE TLS library called “Extended Random” — one that we believe to be evidence of a concerted effort by the NSA to backdoor U.S. cryptographic technology.

- cloudonaut: While there is no considerable difference in network performance between m3 and m4 instances, there is a huge difference in networking performance between the fourth and fifth generation of general purpose instance types.

- Andrew Ng: Manufacturing touches nearly every part of our society by shaping our physical environment. It is through manufacturing that human creativity goes beyond pixels on a display to become physical objects. By bringing AI to manufacturing, we will deliver a digital transformation to the physical world.

- David Gerard: Bitcoin doesn’t have an economy as such. So there’s a lot of Bitcoin paper “wealth” that can never be realised. Bitcoin is not the bloodflow of a functioning economy — there’s no circular flow of income. You can buy very few things with bitcoins — even the drug market is abandoning it, as transaction fees go through the roof. Bitcoin doesn’t have its own economy.

- Mathias Lafeldt: The rollback button is a lie. That’s not only true for application deployments but also for fault injection, as both face the same fundamental problem: state. Yes, you might be able to revert the direct impact of non-destructive faults, which can be as simple as no longer generating CPU/memory/disk load or deleting traffic rules created to simulate network conditions.

- itcmcgrath: Improvements in consensus algos often have issues, correct implementation is hard, but it can make previously impossible things tenable which I find exciting...Our [Google] network definitely makes a big difference, but even we don't just use straight up vanilla Paxos.

- @adrianco: Totally agree. I've seen "Run what you wrote" work in lots of places, and it enables good habits like smaller more frequent updates, and encourages better automation.

- 013a: Nearly every trucking firm I've worked with has their own in-house shop, with mechanics and everything. Turns out, outsourcing the maintenance of vehicles that experience tens of thousands of miles every quarter is more expensive than just paying your own people to do it. Same with municipal fleets. Same with taxi fleets. There are many variables that enter into a cost-benefit calculation when thinking about this. But what it really comes down to is Scale. Projects on the low end of scaling will find it cheaper to outsource everything they can.

- illumin8: every sysadmin I've met (and I used to be one) would like to think they are managing Walmart's trucking fleet, when the reality is they are more like Joe's local furniture delivery.

- RhodesianHunter: My team (~20 people within a Fortune 50) could be considered a success story. We use Lambda for ETL type work, Healthchecks, Web Scraping, and infrastructure automation.

- Cade Metz: the TPU outperforms standard processors by 30 to 80 times in the TOPS/Watt measure, a metric of efficiency.

- Mark LaPedus: the foundry business is expected to see a solid year, growing 8% in 2018 over 2017.

- tzury: We at Reblaze push nearly 3.5 billion records to [BigQuery] every day. We use it as our infrastructure for big-data analysis and we get the results at the speed of light. Every 60 seconds, there are nearly 1K queries that analyze users' and IPs' behavior. Our entire ML is based on it, and there are half a dozen applications in our platform that make use of BQ, from detecting scrapers and analyzing user input to defeating DDoS and more. BQ replaced 2,160,000 monthly cores (250 instances * 12 cores each always on) and petabytes of storage and costs us several grand per month. This is perhaps one of the greatest hidden gems available today in the cloud sphere and I recommend everyone give it a try. A very good friend of mine replaced MixPanel with BigQuery and has saved nearly a quarter of a million dollars a year since.

- sturgill: We dual write event data to BigQuery and Redshift. BigQuery is incredibly cheap at the storage level, but can be pricey to run queries against large datasets. Personally I am not a fan of paying per-query.

- @swardley: Being told I'm wrong about K8s & containers, and how it isn't like OpenStack ... oh my, it's amazing how often patterns repeat in our economic system and people still fail to learn. Ok, by 2025 Kubernetes is a dead duck and very few in the development world will care.

- Riot Games: we’re running over 2,400 instances of various applications - we call these “packs”. This translates to over 5,000 Docker containers globally.

- @techpractical: i think i understand why all code that uses openssl is shit. it's not just their api is shit, it's that it takes so long to do anything useful that you'll ship anything just to get away from it.

- ughmanchoo: The strength of frameworks from my perspective is to take data from an API and display it without you worrying about keeping the DOM and your data in sync. Just worry about your model and your framework handles the rest. If you’ve ever had to write to the DOM without a framework and then update it when data changes you know the pain. Adding and removing elements and event listeners quickly becomes a delicate operation.

- Sarah Mei: Conventional wisdom says you need a comprehensive set of regression tests to go green before you release code. You want to know that your changes didn’t break something elsewhere in the app. But there are ways to tell other than a regression suite. Especially with the rise of more sophisticated monitoring and better understanding of error rates (vs uptime) on the operational side. With sufficiently advanced monitoring & enough scale, it’s a realistic strategy to write code, push it to prod, & watch the error rates. If something in another part of the app breaks, it’ll be apparent very quickly in your error rates. You can either fix or roll back. You’re basically letting your monitoring system play the role that a regression suite & continuous integration play on other teams.

- Antony Alappatt: Network applications should be developed with toolsets and languages that have communication as their foundation. Software based on computation has a good theoretical foundation, such as λ-calculus, on which the whole concept of programming is built. An application based on λ-calculus shuts out the world when the program executes, whereas a network application, by its very nature, has many agents interacting with each other on a network. This difference in the nature of the applications calls for a new foundation for the toolsets and languages for building network applications.

- Brian Bailey: One development that everyone missed is the increase in local processing. “While traditional mobile AI applications have performed all or most processing in the cloud, powerful chips are now able to handle compute-intensive processes right on consumers’ devices,” adds Moynihan. “Energy-efficient chipset designs have been critical in making this a reality, enabling local processing without a huge drain on battery life. Local neural net data processing is poised to help revolutionize the smartphone experience as OS-level processes are optimized through machine learning and smartphones predict what consumers want to do, delivering a more refined experience than ever before.”

- gizmo686: Last I checked, current market rates are about $30 for a typical transaction. To happen, transactions must be included in a fixed-size block in the global block chain. Currently, there is not enough room per block, so space is auctioned off on a per-byte basis. The size of a transaction is not related to the number of bitcoins involved, but the number of "utxo" involved. Essentially, if Alice sends you 1BTC and Bob sends you 1BTC, you do not simply have 2BTC, you have 2 utxo's each worth 1BTC. If you want to send more than 1.5BTC in a single transaction, you will need to include both utxo's, which will increase the size and therefore the fee. In contrast, if Carol sent you 2BTC in a single transaction, you can then send 1.5BTC at the same cost as 1BTC because only 1 utxo is involved. In a world with sufficient space in a block, you could still need fees because the miners might decide to leave empty space instead of including free-rider transactions. This does not actually save them money, but if they control a non-trivial amount of the hashrate, it could encourage people to increase their fees in order to decrease their confirmation time (especially if all the major miners do this).

- ChampTruffles: Chinese from China here. I live nowhere near these places (Xinjiang and Tibet), I can say I agree with you mostly, but I highly suspect my opinion is popular here. The level of surveillance is scary but as discussed above, it is probably not uncommon. What scared me more is that any of these is not up to discuss and to be acknowledged in China, like even though I am shocked by this article I would not share it on any of my social media for obvious reasons. And for people like most of my family and friends who live far away from there, if you personally don't know anyone from Xinjiang then big chance you don't know what's happening there as it never broadcast in any sort. News like this can only be read by passing the GFW and by people who know English, real journalism in China is dead. So when 99% of this massive population only fed on what the authority gave them, the authority has virtually no control and everyone just live at their mercy. So basically if someone agrees on the safety > liberty, then I say lucky you. For anyone who disagrees with the authority action, can only suck it up and accept the reality.

- John Naughton: the thought was that if Luther were around now, he would also be using Twitter. It seemed like a banal thought at the time, but then of course one of the things that stands out about Luther and his revolution immediately is that he understood, in a way that almost nobody at that time understood, the significance of the printing press. More importantly, he understood its mechanics and how effective it could be in getting his ideas across.
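jospoortvliet's car analogy is Amdahl's law in disguise (the framing is mine, not the commenter's): if a fraction p of the total time gets s times faster, the whole gets 1 / ((1 - p) + p/s) times faster. The minimal sketch below, with illustrative function names, shows that a 1000x faster part producing a 10% faster whole implies that part was only about 9% of the time to begin with.

```python
def amdahl_speedup(fraction: float, factor: float) -> float:
    """Overall speedup when `fraction` of the run time gets `factor` times faster."""
    if not 0.0 <= fraction <= 1.0 or factor <= 0:
        raise ValueError("fraction must be in [0, 1] and factor must be positive")
    return 1.0 / ((1.0 - fraction) + fraction / factor)


def speedup_ceiling(fraction: float) -> float:
    """Upper bound on overall speedup as the improved part approaches zero time."""
    if not 0.0 <= fraction < 1.0:
        raise ValueError("fraction must be in [0, 1)")
    return 1.0 / (1.0 - fraction)


# A part that was ~9.1% of the time, made 1000x faster, gives ~1.10x overall.
print(f"{amdahl_speedup(0.091, 1000):.2f}")  # 1.10
# Even an infinitely fast part could not push this much past 1.10x.
print(f"{speedup_ceiling(0.091):.2f}")       # 1.10
```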
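matthelb's definition of linearizability translates almost word for word into a brute-force checker: a concurrent history is linearizable if some sequential ordering of its operations both respects real-time order (an operation that returned before another was invoked must come first) and is legal for the object's sequential specification. The sketch below assumes a single read/write register and uses illustrative names (`Op`, `is_linearizable`). It tries every permutation, so it is only practical for tiny histories; serious checkers prune the search heavily.

```python
from itertools import permutations
from typing import NamedTuple, Optional, Sequence


class Op(NamedTuple):
    invoke: float         # time the client issued the call
    response: float       # time the call returned
    kind: str             # "write" or "read"
    value: Optional[int]  # value written, or value the read returned


def respects_real_time(order: Sequence[Op]) -> bool:
    """Reject orders that place b after a when b returned before a was invoked."""
    for i, earlier in enumerate(order):
        for later in order[i + 1:]:
            if later.response < earlier.invoke:
                return False
    return True


def legal_register_history(order: Sequence[Op], initial: Optional[int]) -> bool:
    """Replay the ops against a plain register; every read must see the last write."""
    current = initial
    for op in order:
        if op.kind == "write":
            current = op.value
        elif op.value != current:
            return False
    return True


def is_linearizable(history: Sequence[Op], initial: Optional[int] = None) -> bool:
    """Brute force: try every total order (factorial time, tiny histories only)."""
    return any(
        respects_real_time(order) and legal_register_history(order, initial)
        for order in permutations(history)
    )


# The read overlaps the write, so it may legally see the new value.
overlapping = [Op(0, 2, "write", 1), Op(1, 3, "read", 1)]
# The read starts after the write returned, yet sees the old value.
stale = [Op(0, 1, "write", 1), Op(2, 3, "read", None)]
print(is_linearizable(overlapping), is_linearizable(stale))  # True False
```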
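cle's advice that everything should be idempotent and retryable can be made concrete with two pieces: a retry loop with exponential backoff for transient failures, and a handler that records results by request ID so a retried request does not repeat its side effect. This is an illustrative sketch only: `TransientError`, `retry`, and `IdempotentLedger` are hypothetical names, and the in-memory dict stands in for the durable store a real Lambda-based system would need, one that commits the result atomically with the side effect.

```python
import time
from typing import Callable, Dict, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures that are safe to retry."""


def retry(fn: Callable[[], T], attempts: int = 5, base_delay: float = 0.1) -> T:
    """Call fn, retrying TransientError with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except TransientError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the failure
            time.sleep(base_delay * (2 ** attempt))
    raise AssertionError("unreachable")


class IdempotentLedger:
    """Applies each credit at most once, keyed by a caller-supplied request id."""

    def __init__(self) -> None:
        self._results: Dict[str, int] = {}
        self.balance = 0

    def credit(self, request_id: str, amount: int) -> int:
        if request_id in self._results:  # duplicate delivery: replay stored result
            return self._results[request_id]
        self.balance += amount           # the side effect happens only once
        self._results[request_id] = self.balance
        return self.balance


ledger = IdempotentLedger()
calls = {"n": 0}


def flaky_credit() -> int:
    """Simulates a response lost after the write already succeeded."""
    calls["n"] += 1
    result = ledger.credit("req-42", 100)
    if calls["n"] == 1:
        raise TransientError("response lost")
    return result


print(retry(flaky_credit, base_delay=0.01), ledger.balance)  # 100 100
```

Without the request-ID check, the retry would have credited the account twice.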
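Tzury's figure of 2,160,000 "monthly cores" only works out as core-hours: 250 always-on instances with 12 cores each are 3,000 cores, and 3,000 cores running for a 30-day (720-hour) month come to 2,160,000 core-hours. The sketch below just makes that arithmetic explicit; the 30-day month and the helper name are my assumptions.

```python
HOURS_PER_MONTH = 30 * 24  # assumption: a 30-day month, i.e. 720 hours


def monthly_core_hours(instances: int, cores_per_instance: int,
                       hours: int = HOURS_PER_MONTH) -> int:
    """Core-hours consumed by a fleet that is always on."""
    return instances * cores_per_instance * hours


cores = 250 * 12  # cores running at any given moment
print(f"{cores:,} cores -> {monthly_core_hours(250, 12):,} core-hours per month")
# 3,000 cores -> 2,160,000 core-hours per month
```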
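gizmo686's point that fees track transaction size, and size tracks the number of UTXOs spent, can be checked with a back-of-the-envelope model. This sketch assumes commonly quoted approximate sizes for a legacy (P2PKH) transaction (about 10 bytes of overhead, 148 bytes per input, 34 bytes per output), a hypothetical fee rate of 100 satoshis per byte, a naive largest-first coin selection that ignores the fee itself, and always two outputs (payment plus change). Real wallets and SegWit transactions differ.

```python
# Approximate legacy (P2PKH) transaction sizes in bytes; treat as assumptions.
TX_OVERHEAD_BYTES = 10
INPUT_BYTES = 148
OUTPUT_BYTES = 34
SATS_PER_BTC = 100_000_000


def select_utxos(utxos, amount):
    """Naive largest-first coin selection (fee ignored for simplicity)."""
    chosen, total = [], 0
    for value in sorted(utxos, reverse=True):
        if total >= amount:
            break
        chosen.append(value)
        total += value
    if total < amount:
        raise ValueError("insufficient funds")
    return chosen


def estimate_fee(num_inputs, num_outputs, sats_per_byte):
    """Fee is proportional to size, and size grows with every input spent."""
    size = TX_OVERHEAD_BYTES + num_inputs * INPUT_BYTES + num_outputs * OUTPUT_BYTES
    return size * sats_per_byte


def fee_for(utxos, amount, sats_per_byte=100):  # hypothetical fee rate
    inputs = select_utxos(utxos, amount)
    return len(inputs), estimate_fee(len(inputs), 2, sats_per_byte)


two_coins = [SATS_PER_BTC, SATS_PER_BTC]  # Alice's and Bob's 1 BTC each
one_coin = [2 * SATS_PER_BTC]             # Carol's single 2 BTC
print(fee_for(two_coins, 150_000_000))    # (2, 37400)
print(fee_for(one_coin, 150_000_000))     # (1, 22600)
print(fee_for(one_coin, 100_000_000))     # (1, 22600)
```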

- Meltdown/Spectre. In case you were wondering what would drive new processor sales for the next decade—unplanned obsolescence. The question: is it time to move on from virtualization? Virtualization is a business model; it's not necessary for building software. The system we have now is so complex we can only expect an emergent stream of new threats going forward. The past is holding us back.

- Reading privileged memory with a side-channel. Great thread by @nicoleperlroth: We're dealing with two serious threats. The first is isolated to #IntelChips, has been dubbed Meltdown, and affects virtually all Intel microprocessors. The patch, called KAISER, will slow performance speeds of processors by as much as 30 percent. The second issue is a fundamental flaw in processor design approach, dubbed Spectre, which is more difficult to exploit, but affects virtually ALL PROCESSORS ON THE MARKET (Note here: Intel stock went down today but Spectre affects AMD and ARM too), and has NO FIX.

- A Simple Explanation of the Differences Between Meltdown and Spectre.

- More details about mitigations for the CPU Speculative Execution issue.

- How the Meltdown Vulnerability Fix Was Invented.

- @HansHuebner: The whole idea of running code from different trust zones on the same execution engine is dead. Forget about multi-user, forget about open systems, forget about shared tenancy clouds. Our industry is bitten by its ignorance of fundamental security principles. What strikes me, though, is that our systems are running on the presumption from when computers were invented, that computers are expensive. All systems are architected around that presumption, and on the belief that it is even possible to compartmentalize running programs within one shared machine. There is no reason for that. There is also no good, contemporary reason for the complex virtual memory architectures that we're all using today, which were created to address the problem that memory was expensive. These virtual memory architectures are what the current incidents are about. This needs to stop. Computing needs to be re-thought. We need to have simple security models that can be completely analyzed and verified at any point in time, which requires architectures that allow such analysis. I'm not talking a fixed re-release of our current CPUs, which will only lead us to the next catastrophe. I'm talking redesign systems from the ground up, with the presumption that billions of transistors can be had.

- @aionescu: We built multi-tenant cloud computing on top of processors and chipsets that were designed and hyper-optimized for single-tenant use. We crossed our fingers that it would be OK and it would all turn out great and we would all profit. In 2018, reality has come back to bite us.

- @securelyfitz: Here's my layman's not-totally-accurate-but-gets-the-point-across story about how #meltdown & #spectre type attacks work: Let's say you go to a library that has a 'special collection' you're not allowed access to, but you want to read one of the books. 1/10

- @cshirky: I'm going to try explaining the Spectre attack with an analogy: Imagine a bank with safe deposit boxes. Every client has an ID card, and can request the contents of various boxes, which they can then take out of the vault.

- Overview of speculation-based cache timing side-channels: This whitepaper looks at the susceptibility of Arm implementations following recent research findings from security researchers at Google on new potential cache timing side-channels exploiting processor speculation. This paper also outlines possible mitigations that can be employed for software designed to run on existing Arm processors.

- Kubecon videos are now available.

- Dan Luu shows the Greeks were right, we're descending from the golden age [of latency] to the iron age [of latency]. Computer latency: 1977-2017: If we look at overall results, the fastest machines are ancient. Newer machines are all over the place. Fancy gaming rigs with unusually high refresh-rate displays are almost competitive with machines from the late 70s and early 80s, but “normal” modern computers can’t compete with thirty to forty year old machines...If we had to pick one root cause of latency bloat, we might say that it’s because of “complexity”. Of course, we all know that complexity is bad. If you’ve been to a non-academic non-enterprise tech conference in the past decade, there’s a good chance that there was at least one talk on how complexity is the root of all evil and we should aspire to reduce complexity.

- Serverless is garbage. Well, it helps make garbage collection more efficient. EMA Cloud Rant with GreenQ - Serverless Functions Enable the Internet of Garbage. One garbage truck collects up to 10,000 bins, so they decided to make the garbage trucks smarter, not the bins. A device installed on the truck measures the capacity of the bin and how much waste is in the bin. It also records time and location and provides visual verification of collection. They were able to get a 53% optimization (mileage, man hours, emissions, traffic) by learning the waste production patterns of the area and developing a smarter pickup schedule. For example, collecting 2 rather than 3 times a week. They were able to maintain service levels, make residents happy, and cut costs. This is a pitch for IBM Cloud Functions/OpenWhisk. They like it because it's open: they can understand it and contribute to it. Some customers want them to work on-premise, not on a cloud, so OpenWhisk gives them a choice while using the same architecture.

- Technology shapes and forms. A Roman war chariot could still ride to battle on a modern road. Songs are typically about 3 minutes long because a 78rpm record held about three minutes of sound per side. So it shouldn't be a surprise that streaming, a new technology for distributing music, also shapes music. How would artists respond to near-zero digital production costs, zero marginal distribution costs, and streaming's pay-per-play business model? Uniquely. WS More or Less: Why Albums are Getting Longer. Michael Jackson's Thriller album had nine tracks and runs just over 42 minutes. Chris Brown released Heartbreak on a Full Moon, an album that has 45 tracks and runs 2 hours and 38 minutes. Albums are getting longer. Why? Streaming. 1500 plays or streams of tracks from a single album count as a record sale. So the longer the album, the fewer people have to listen to the whole thing to increase record sales. Record sales are how chart rankings are determined. Doing better in the charts gets you more exposure, which gets you more streams, which gets you more exposure, which gets you more money. And so on. Game the system and rule the world. Streaming almost doubles every year. 251 billion songs were streamed last year. Hip Hop is leading the way. They're releasing more music per year. They work as a worldwide team. They have teams of producers and songwriters. More people help create more music, which is perfect for the digital streaming age. A traditional band can't hope to keep up. The structure of songs is also changing to game the system. For a song to count as a stream, you must listen for 30 seconds. Artists are doing everything they can to get your attention for 30 seconds. After that, who cares? It's no longer "get to the chorus, don't bore us." What's happening is that intros are becoming an audio emoji encoding of what to expect in the rest of the song.
For example, Despacito, a hit song approaching 5 billion views on YouTube, starts with a Puerto Rican guitar riff that let's you know it's a latin ballad, that's followed by shout-outs that tell you it will have some reggae tones, followed by crooned vocal melody that let's you know it's a pop song, followed by some electronic sounds that let's you know it's modern, and so on. Only after that does the song really start. It's like watching a movie trailer counting as watching the movie. In a Chainsmokers song the first 30 second verse never repeats, it just hooks you in. We'll see the shape of things to come. It's possible, like how Kindle Unlimited has changed book publishing for authors, we'll see non Hip Hop bands catch on and change how they produce music for the new age of streaming.
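The streaming arithmetic behind longer albums fits in a few lines. This is a simplified model: it assumes the 1,500-streams-per-sale figure above applies to every stream equally and ignores any other chart rules. It just counts how many complete front-to-back listens each album needs to add up to one sale.

```python
import math

STREAMS_PER_SALE = 1500  # streams that count as one album sale (figure from the item above)


def full_listens_per_sale(tracks: int) -> int:
    """Complete plays of an album needed to add up to one 'sale' (simplified model)."""
    # Each full play streams every track once, so it contributes `tracks` streams.
    return math.ceil(STREAMS_PER_SALE / tracks)


for name, tracks in [("Thriller", 9), ("Heartbreak on a Full Moon", 45)]:
    print(f"{name}: {tracks} tracks -> {full_listens_per_sale(tracks)} full listens per sale")
```

Under this model a 45-track album needs about a fifth as many complete listens per sale as a 9-track one.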

- Which is better: multicore or single instruction, multiple data (SIMD) instructions? Multicore versus SIMD instructions: the “fasta” case study: My belief is that the performance benefits of vectorization are underrated...the multicore version is faster (up to twice as fast) but it uses twice the total running time (accounting for all CPUs)...I decided to reengineer the single-threaded approach so that it would be vectorized. Actually, I only vectorized a small fraction of the code...I am about four times faster, and I am only using one core.

- If you're a largish company with a good business reason and a strong engineering team, then Kubernetes can be made to work for you, though you may not want to bother. Stripe has a great experience report on using Kubernetes to build a distributed cron job scheduling system that reliably runs 500 different cron jobs across around 20 machines, exactly the kind of thing a company would run internally. They don't seem unhappy with the experience, yet they don't seem overly enthused either. As evidence, they say they're watching "AWS’s release of EKS with interest." One of the authors, jvns, said: one of my primary goals in writing this post was to show that operating Kubernetes in production is complicated and to encourage people to think carefully before introducing a Kubernetes cluster into their infrastructure. Learning to operate Kubernetes reliably. Why Kubernetes? They wanted to build on top of a popular, active, existing open-source project. It already includes a cron scheduler. It's written in Go, so they could make bug fixes. How? They built the cluster from scratch so they could deeply integrate Kubernetes into their architecture and develop a deep understanding of its internals. Strategy? Talk to a lot of other companies about their experiences. Learned to prioritize working on the etcd cluster’s reliability. Read the code to understand how it works. Load test to see if it can handle the projected load. Incrementally migrate jobs to the cluster. Investigate and fix bugs. Test by releasing a little chaos on the cluster. Be able to migrate a job back to the old cluster if it doesn't work. Also, Lessons learned from moving my side project to Kubernetes.

- Obviously the person with the highest score should become the Supreme Leader. Confucius would love that. Big data meets Big Brother as China moves to rate its citizens: The Chinese government plans to launch its Social Credit System in 2020. The aim? To judge the trustworthiness – or otherwise – of its 1.3 billion residents.

- How going serverless helped us reduce costs by 70%: A big part of our product involved processing files in a batch process, and we had built our orchestration infrastructure on Simple Workflow and EC2 instances...Despite most being t2.micro instances, our AWS bills kept increasing, from ~$9,000 to ~$30,000 in the space of 4 months and we had over 1000 EC2 instances running...Throughput was another issue, for the costs we were spending, we weren’t processing as many as we should have...we began first by converting all of our micro services into Lambda functions, this was a fairly easy task but considering the number of services written, this took us about a month and a half to complete, at the same time we decided to convert one of our smallest workflows into a step function...Human resources in processing batches went from 24 hours to 16 hours and kept decreasing. Number of EC2 instances decreased 211 instances. Number of errors in SWF also began to decrease albeit never disappeared...the biggest impact for us has been, this has made us design future services in a completely micro service orientated way.

- Yes, there's such a thing as the GRAND stack: GraphQL, React, Apollo, and Neo4j Database. There's also a TICK stack for handling metrics and events: Telegraf, InfluxDB, Chronograf, and Kapacitor.

- Ironically, Etsy's beautifully crafted bespoke infrastructure may end up the same as all the beautifully crafted bespoke products on Etsy. And for the same reasons. An Elegy for Etsy: Corporate values of sustainability, such as those that Etsy tried to establish, are fundamentally incompatible with unbounded growth, or even double-derivative growth-of-growth (a concept that I have long derided as unnatural). It was obvious that Etsy’s values would have to change, and that is what is happening now. Indeed, this outcome was preordained the instant Etsy’s founders decided to take venture capital money way back in 2006 with the first $1M Series A; as David Heinemeier Hansson (dhh) says, “Etsy corrupted itself when it sold its destiny in endless rounds of venture capital funding. This wasn’t inevitable, it was a choice.”

- A bitcoin joke: A bunch of anarchocapitalists walk into a venture capitalist’s office wanting to perform their act. The venture capitalist asks what the act is and they reply: “A new block of transactions is created every ten minutes or so, with 12.5 bitcoins — BTC — reward — plus any fees on the transactions — attached. Bitcoin miners apply as much brute-force computing power as they can to take the prize in this block’s cryptographic lottery. Unprocessed transactions are broadcast across the Bitcoin network. A miner collects together a block of transactions and the hash of the last known block. They add a random ‘nonce’ value, then calculate the hash of the resulting block. If that number is lower than the current mining difficulty, they have mined a block! This successful block is then broadcast to the network, who can quickly check the block is valid. They get 12.5 BTC plus the transaction fees. If they failed, they pick another nonce value and try again. Since it’s extremely hard to pick what data will have a particular hash, guessing what value will give a valid block takes many calculations — as of October 2016 the Bitcoin network was running 1,900,000,000,000,000,000 — 1.9×10^18, or 1.9 quintillion — hashes per second, or 1.14×10^21 — 1.14 sextillion — per ten minutes. The difficulty is adjusted every two weeks — 2016 blocks — to keep the rate of solved blocks around one every ten minutes. The 1.14 sextillion calculations are thrown away, because the only point of all this is to show that you can waste electricity faster than everyone else.” The venture capitalist says: “Holy crap, that’s the worst idea I’ve ever heard! What’s the act called?” The anarchocapitalists reply: “The Blockchain!”
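The mining loop in the joke can be sketched in a few lines of Python. This is a toy. It assumes a simplified header (previous hash, a digest of the transactions, and a 4-byte nonce) rather than Bitcoin's real 80-byte header, and it expresses difficulty as a count of leading zero bits. The function names are hypothetical. It does show the asymmetry the joke relies on: finding a valid nonce takes about 2^difficulty_bits guesses, while checking one takes a single hash.

```python
import hashlib
import struct
from typing import List, Optional, Tuple


def double_sha256(data: bytes) -> bytes:
    """Bitcoin hashes block headers with SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def header_prefix(prev_hash: bytes, transactions: List[bytes]) -> bytes:
    # Toy header prefix: previous block hash + a digest standing in for the Merkle root.
    return prev_hash + double_sha256(b"".join(transactions))


def block_hash(prefix: bytes, nonce: int) -> bytes:
    return double_sha256(prefix + struct.pack("<I", nonce))  # nonce as 4 little-endian bytes


def mine(prev_hash: bytes, transactions: List[bytes], difficulty_bits: int) -> Optional[Tuple[int, bytes]]:
    """Try nonces until the hash, read as an integer, falls below the target."""
    target = 2 ** (256 - difficulty_bits)  # each extra bit halves the odds per guess
    prefix = header_prefix(prev_hash, transactions)
    for nonce in range(2 ** 32):
        h = block_hash(prefix, nonce)
        if int.from_bytes(h, "big") < target:
            return nonce, h
    return None  # nonce space exhausted; real miners then vary other header fields


def is_valid(prev_hash: bytes, transactions: List[bytes], nonce: int, difficulty_bits: int) -> bool:
    """Verification costs one hash, versus roughly 2**difficulty_bits hashes to mine."""
    h = block_hash(header_prefix(prev_hash, transactions), nonce)
    return int.from_bytes(h, "big") < 2 ** (256 - difficulty_bits)


# Hashes thrown away per ten-minute block at the quoted October 2016 rate.
hashes_per_block = 1.9e18 * 600
print(f"{hashes_per_block:.3g}")  # 1.14e+21
```

With `difficulty_bits=12` this finishes in a few thousand guesses. Every bit added doubles the expected work, which is the lever the two-week difficulty adjustment pulls.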

- It's a thousand years ago. To run an empire you need a census database. You don't have paper, let alone a computer. What do you do? Encode information in knots. That's what the Inca did, as rediscovered by Manny Medrano during his spring break. The College Student Who Decoded the Data Hidden in Inca Knots. Knots encode numbers. Color encodes first names. How pendant cords are tied to the top cord indicates social group. Brilliant! I didn't see a bits-per-string calculation. Also, How a Guy From a Montana Trailer Park Overturned 150 Years of Biology.

- The problem with software is most of the complexity isn't of the fun and challenging "this a complex problem to solve" type. Most complexity in software is simply soul crushing digitrivia. Mastering Digital: The State of Software Engineering Report 2017: By far the number 1 challenge across the board for the survey respondents can be classified as “complexity”. Complexity is an issue experienced everywhere. It is experienced when integrating different layers of the stack, using different protocols, languages and data constructs. It is experienced in the sheer volume of choices we have to make regarding our languages, platforms, tools, libraries, frameworks (yes, Javascript, your name did come up), as well as protocols, patterns, techniques, and the like… It is experienced in the integration of our software applications — some we control, some we don’t. It is also experienced as systems change over time — version upgrades, new features, deprecated components, dependencies, data model upgrades, etc.

- Awesome analysis by James Hamilton on how little it would have cost to prevent a massive power failure at the Atlanta Hartsfield-Jackson International Airport, simply by having a redundant power supply. When You Can’t Afford Not to Have Power: The argument I’m making is Atlanta International Airport could have power redundancy for only $21M. Using reasonable assumptions, the losses from failure to monetize 800 aircraft for 1 day would pay 78% of the redundancy cost. If there was a second outage during the life of the power redundancy equipment, the costs are much more than covered. If we are willing to consider non-direct outage losses, a single failure would be enough to easily justify the cost of the power redundancy. Perhaps the biggest stumbling block to adding the needed redundancy is the flight operators lost most of the money but it would be the airport operator that bears the expense of adding the power redundancy. Let’s ignore the argument above, that a single event might fully pay for the cost of power redundancy protection, and consider the harder-to-fully-account for broader impact. It seems sensible for the operators of the biggest airport in the world and the airlines that fly through that facility to collectively pay $21M for 10 years of protection and have power redundancy.
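Hamilton's numbers can be checked with a little arithmetic. This sketch assumes the 78% figure is measured against the full $21M and spreads the cost evenly over the 10-year life. It models only direct losses from idle aircraft.

```python
REDUNDANCY_COST = 21_000_000   # $21M for power redundancy (from the quote)
COVERAGE_PER_OUTAGE = 0.78     # one day's direct losses pay 78% of that cost
SERVICE_LIFE_YEARS = 10        # "10 years of protection"

# Direct loss implied by one day-long outage.
loss_per_outage = REDUNDANCY_COST * COVERAGE_PER_OUTAGE
# Cost per year if spread evenly over the equipment's life (an assumption).
cost_per_year = REDUNDANCY_COST / SERVICE_LIFE_YEARS


def net_after(outages: int) -> float:
    """Direct losses avoided by `outages` prevented outages, minus the redundancy cost."""
    return outages * loss_per_outage - REDUNDANCY_COST


for n in (1, 2):
    print(f"{n} outage(s) prevented: net ${net_after(n) / 1e6:+.2f}M")
```

Under these assumptions one outage costs about $16.4M in direct losses, roughly eight years of the $2.1M annualized cost. A second outage pushes avoided losses well past the price of the redundancy, which matches the quote.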

- Looking to spice up your database-life? A Look at Ten New Database Systems Released in 2017: TimescaleDB — A Postgres-based Time-Series Database with Automatic Partitioning; Microsoft Azure Cosmos DB — Microsoft’s Multi-Model Database; Cloud Spanner — Google’s Globally Distributed Relational Database; Neptune — Amazon’s Fully Managed Graph Database Service; YugaByte — An Open Source, Cloud-Native Database; Peloton — A “Self Driving” SQL DBMS; JanusGraph — A Java-based Distributed Graph Database; Aurora Serverless — An Instantly Scalable, “Pay As You Go” Relational Database on AWS; TileDB — Storage of Massive Dense and Sparse Multi-Dimensional Arrays; Memgraph — A High Performance, In-Memory Graph Database.

- Just how slow is storing JSON in PostgreSQL, MySQL, and MongoDB? All is revealed in this lovingly detailed post Jsonb: few more stories about the performance. pier25: Surprising that PG beat Mongo at its own game. We've been recently using Jsonb with PG as a nice-to-have but always assumed Mongo would be much faster with schemaless data. zmmmmm: This is a great post but sad that they addressed everything thoroughly except the most important question for me - query performance. I don't do all this mass updating of document oriented entities. They are stored and they stay stored. But we query the hell out of them.

- Would love to see design by contract get more first-class support. Seems a natural fit with fault injection and chaos engineering. Almost 15 Years of Using Design by Contract.
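Python has no built-in contracts, so here is a minimal decorator sketch of the idea. The `contract` decorator, `ContractViolation`, and the `withdraw` example are all hypothetical. Preconditions are checked on entry and postconditions on exit. That is part of why contracts pair well with fault injection: an injected fault that corrupts state would tend to trip a contract at the nearest function boundary instead of surfacing somewhere far away.

```python
import functools


class ContractViolation(AssertionError):
    """Raised when a precondition or postcondition does not hold."""


def contract(pre=None, post=None):
    """Attach a precondition (called with the args) and a postcondition (result, then args)."""
    def decorate(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            if pre is not None and not pre(*args, **kwargs):
                raise ContractViolation(f"precondition of {fn.__name__} failed")
            result = fn(*args, **kwargs)
            if post is not None and not post(result, *args, **kwargs):
                raise ContractViolation(f"postcondition of {fn.__name__} failed")
            return result
        return wrapper
    return decorate


@contract(
    pre=lambda balance, amount: 0 < amount <= balance,
    post=lambda result, balance, amount: result == balance - amount and result >= 0,
)
def withdraw(balance: int, amount: int) -> int:
    return balance - amount
```

`withdraw(100, 30)` returns 70, while `withdraw(100, 200)` raises `ContractViolation` before the body runs.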

- Block away some time for this one. Not just because it's long—it is—but it's also thoughtful and challenging. It's not often reading just one article changes my mind on a topic. Testing Microservices, the sane way: when it comes to testing (or worse, when developing) microservices, most organizations seem to be quite attached to an antediluvian model of testing all components in unison...Even with modern Operational best practices like infrastructure-as-code and immutable infrastructure, trying to replicate a cloud environment locally is tantamount to booting a cloud on a dev machine and doesn’t offer benefits commensurate with the effort required to get it off the ground and subsequently maintain it...Development teams are now responsible for testing as well as operating the services they author. This new model is something I find incredibly powerful since it truly allows development teams to think about the scope, goal, tradeoffs and payoffs of the entire spectrum of testing in a manner that’s realistic as well as sustainable...By and large, the biggest impediment to adopting a more comprehensive approach to testing is the required shift in mindset. 
Pre-production testing is something ingrained in software engineers from the very beginning of their careers whereas the idea of experimenting with live traffic is either seen as the preserve of Operations engineers or is something that’s met with alarm and/or FUD...Pushing regression testing to post-production monitoring requires not just a change in mindset and a certain appetite for risk, but more importantly an overhaul in system design along with a solid investment in good release engineering practices and tooling...A “top-down” approach to testing or monitoring by treating it as an afterthought has proven to be hopelessly ineffective so far...Developers need to get comfortable with the idea of testing and evolving their systems based on the sort of accurate feedback they can only derive by observing the way these systems behave in production...The writing and running of tests is not a goal in and of itself — EVER. We do it to get some benefit for our team, or the business. If you can find a more efficient way to get those benefits that works for your team & larger org, you should absolutely take it. Because your competitor probably is.

- Nice slice of history from James Hamilton. Four DB2 Code Bases?

- Frontend in 2017: The important parts: Frontend trends are starting to stabilize — popular libraries have largely gotten more popular instead of being disrupted by competitors — and web development is starting to look pretty awesome...React has won for now but Angular is still kicking. Meanwhile, Vue is surging in popularity...WebAssembly will change everything eventually, but it’s still pretty new...PostCSS is the preferred CSS preprocessor, but many are switching to CSS-in-JS solutions...Webpack is still by far the most popular module bundler, but it might not be forever...TypeScript is winning against Flow...Redux is still king, but look out for MobX and mobx-state-tree...GraphQL is gaining momentum.

- Ouch, that really sucks. They must feel horrible. All programmers can relate. Russian satellite lost after being set to launch from wrong spaceport - programmers gave the $45m device coordinates for Baikonur rather than Vostochny cosmodrome.

- Etsy is methodically planning their transition to Google Cloud. Here's how. Selecting a Cloud Provider: We started by identifying eight major projects, including the production render path for the site, the site’s search services, the production support systems such as logging, and the Tier 1 business systems like Jira. We then divided these projects further into their component projects—MySQL and Memcached as part of the production render path, for example. By the end of this exercise, we had identified over 30 sub-projects...To help gather all of these requirements, we used a RACI model to identify subproject ownership. The RACI model is used to identify the responsible, accountable, consulted, and informed people for each sub-project. We used the following definitions: Responsible, Accountable, Consulted, Informed...Ultimately, we held 25 architectural reviews for major components of our system and environments. We also held eight additional workshops for certain components we felt required more in-depth review...we began outlining the order of migration. In order to do this we needed to determine how the components were all interrelated. This required us to graph dependencies, which involved teams of engineers huddled around whiteboards discussing how systems and subsystems interact...we ran some of our Hadoop jobs on one of the cloud providers’ services, which gave us a very good understanding of the effort required to migrate and the challenges that we would face in doing so at scale...Over the course of five months, we met with the Google team multiple times. Each of these meetings had clearly defined goals, ranging from short general introductions of team members to full day deep dives on various topics such as using container services in the cloud...By this point we had thousands of data points from stakeholders, vendors, and engineering teams. We leveraged a tool called a decision matrix that is used to evaluate multiple-criteria decision problems. 
This tool helped organize and prioritize this data into something that could be used to impartially evaluate each vendor’s offering and proposal. Our decision matrix contained over 200 factors prioritized by 1,400 weights and evaluated more than 400 scores.
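The decision matrix Etsy mentions comes down to a weighted sum. You score each vendor on each criterion, multiply by that criterion's weight, and compare the totals. The criteria, weights, and scores below are invented for illustration; Etsy's actual matrix had over 200 factors.

```python
# Hypothetical criteria and weights (they sum to 1.0).
WEIGHTS = {"cost": 0.30, "performance": 0.25, "support": 0.20, "data_tools": 0.25}

# Hypothetical 1-5 scores per vendor per criterion.
SCORES = {
    "vendor_a": {"cost": 4, "performance": 3, "support": 5, "data_tools": 4},
    "vendor_b": {"cost": 3, "performance": 5, "support": 3, "data_tools": 3},
}


def weighted_total(scores: dict, weights: dict) -> float:
    """Sum of weight * score over every weighted criterion."""
    return sum(weights[c] * scores[c] for c in weights)


def rank(all_scores: dict, weights: dict) -> list:
    """Vendor names ordered from highest to lowest weighted total."""
    return sorted(all_scores, key=lambda v: weighted_total(all_scores[v], weights), reverse=True)


for vendor in rank(SCORES, WEIGHTS):
    print(vendor, round(weighted_total(SCORES[vendor], WEIGHTS), 2))
```

The value of the tool is mostly in forcing the weights to be written down before the scores come in. That is what makes the evaluation feel impartial.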

- Nicely put. NP-hard does not mean hard: When a problem is NP-hard, that simply means that the problem is sufficiently expressive that you can use the problem to express logic. By which I mean boolean formulas using AND, OR, and NOT...To reiterate, NP-hardness means that Super Mario has expressive power. So expressive that it can emulate other problems we believe are hard in the worst case. And, because the goal of mathematical “hardness” is to reason about the limitations of algorithms, being able to solve Super Mario in full generality implies you can solve any hard subproblem, no matter how ridiculous the level design...So the mathematical notions of hardness are quite disconnected from practical notions of hardness...And so this is what makes Mario NP-hard, because boolean logic satisfiability is NP-hard. Any problem in NP can be solved by a boolean logic solver, and hence also by a mario-level-player.
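The post grounds hardness in boolean satisfiability. A brute-force checker for formulas in conjunctive normal form (a standard encoding, assumed here) makes "express logic using AND, OR, and NOT" concrete. It also shows why the worst case is exponential: the checker tries all 2^n assignments.

```python
from itertools import product


def satisfiable(clauses, num_vars):
    """Brute-force SAT over a CNF formula.

    Each clause is a list of literals: +i means variable i is True, -i means NOT variable i
    (variables are 1-indexed). A clause is an OR of its literals; the formula is an AND of
    its clauses. Returns the first satisfying assignment as a tuple of bools, or None.
    """
    for bits in product([False, True], repeat=num_vars):  # 2**num_vars candidates
        if all(any(bits[abs(lit) - 1] == (lit > 0) for lit in clause) for clause in clauses):
            return bits
    return None


# (x1 OR NOT x2) AND (x2 OR x3) AND (NOT x1 OR NOT x3)
print(satisfiable([[1, -2], [2, 3], [-1, -3]], 3))  # (False, False, True)
```

A reduction like the Mario one builds level gadgets that behave like these clauses. A general-purpose level solver would therefore also be a SAT solver.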

- Google takes a broad view in What is Serverless? It includes not just App Engine and Cloud Functions, but anything accessed over an API.

- An excellent in-depth Introduction to modern network load balancing and proxying: Load balancers are a key component in modern distributed systems; There are two general classes of load balancers: L4 and L7; Both L4 and L7 load balancers are relevant in modern architectures; L4 load balancers are moving towards horizontally scalable distributed consistent hashing solutions; L7 load balancers are being heavily invested in recently due to the proliferation of dynamic microservice architectures; Global load balancing and a split between the control plane and the data plane is the future of load balancing and where the majority of future innovation and commercial opportunities will be found; The industry is aggressively moving towards commodity OSS hardware and software for networking solutions. I believe traditional load balancing vendors like F5 will be displaced first by OSS software and cloud vendors.
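Consistent hashing lets independent L4 balancer instances hash a connection's flow key (for example its 5-tuple) to the same backend without sharing state. Below is a minimal sketch of a classic hash ring with virtual nodes. MD5 and 100 virtual nodes per backend are arbitrary assumptions, and production balancers such as Google's Maglev use a different, table-based consistent hashing scheme.

```python
import bisect
import hashlib


def _point(key: str) -> int:
    """Map a string to a 64-bit position on the ring."""
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, backends, vnodes: int = 100):
        self.vnodes = vnodes
        self._points = []  # sorted ring positions
        self._owner = {}   # ring position -> backend
        for backend in backends:
            self.add(backend)

    def add(self, backend: str) -> None:
        # Several virtual nodes per backend spread its share of the ring evenly.
        for i in range(self.vnodes):
            p = _point(f"{backend}#{i}")
            self._owner[p] = backend
            bisect.insort(self._points, p)

    def remove(self, backend: str) -> None:
        self._points = [p for p in self._points if self._owner[p] != backend]
        self._owner = {p: b for p, b in self._owner.items() if b != backend}

    def lookup(self, flow_key: str) -> str:
        # The first ring position at or after the key's hash owns it; wrap past the end.
        i = bisect.bisect_left(self._points, _point(flow_key)) % len(self._points)
        return self._owner[self._points[i]]
```

Removing a backend only remaps the flows it owned, and every other flow keeps its backend. That property is what makes consistent hashing attractive for horizontally scaled L4 balancers.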

- Not sure if there's a practical take-away here, but maybe you can get something out of it. 18 Exponential Changes We Can Expect in the Year Ahead: international relations, the political economy, and governance will desperately need new design patterns as we enter a new phase of the digital revolution; While Silicon Valley leads, both innovation and scaling increasingly occur across the globe; More money will flow into technology but it will be concentrated at later stages; The AI software stack will continue to diverge from traditional software; and more.

- Here's How serverless scales an idea to 100K monthly users — at zero cost: How much does hosting a dozen Alexa skills that connect to over 100K unique users in 30 days with 1M function invocations cost? Zero. Zilch. Nada...what happens if your Alexa skills go viral and exceed 1M requests for the AWS Lambda service? For every 1M requests thereafter, you’ll get a charge of $0.20 to your bill...even with over 50 million Lambda requests serving 175K users per day, the sleep sound apps developed by Nick Schwab generates a frugal monthly bill under $30.
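The request side of that bill is easy to compute. This sketch uses only the two prices in the quote (1M free requests, then $0.20 per 1M). It reads "over 50 million Lambda requests" as a monthly total, which the quote leaves ambiguous. Lambda also bills compute duration separately, and that charge is not modeled here.

```python
FREE_REQUESTS_PER_MONTH = 1_000_000  # free requests each month (per the quote)
PRICE_PER_MILLION = 0.20             # $ per 1M requests beyond that (per the quote)


def monthly_request_charge(requests: int) -> float:
    """Dollar charge for Lambda requests in a month; duration charges are not included."""
    billable = max(0, requests - FREE_REQUESTS_PER_MONTH)
    return billable / 1_000_000 * PRICE_PER_MILLION


for monthly in (1_000_000, 50_000_000):
    print(f"{monthly:>11,} requests -> ${monthly_request_charge(monthly):.2f}")
```

Under that reading, request charges for 50M invocations come to about $9.80, which is consistent with a total bill under $30.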

- Brendan Gregg on How Netflix Tunes EC2: I love this talk as I get to share more about what the Performance and Operating Systems team at Netflix does, rather than just my work. Our team looks after the BaseAMI, kernel tuning, OS performance tools and profilers, and self-service tools like Vector.

- PostgreSQL benchmark on FreeBSD, CentOS, Ubuntu, Debian and openSUSE: Among the GNU/Linux distributions, CentOS 7.4 was the best performer, while Debian 9.2 was slowest. I was positively surprised by FreeBSD 11.1 which was more than twice as fast as the best performing Linux, despite the fact that FreeBSD used ZFS which is a copy-on-write file system. The results show the inefficiency of the Linux SW RAID (or ZFS RAID efficiency). CentOS 7.4 performance without SW RAID is only slightly better than FreeBSD 11.1 with ZFS RAID (for TPC-B and 100 concurrent clients).

- Practical and detailed. Building a Distributed Log from Scratch, Part 1: Storage Mechanics: The log is a totally-ordered, append-only data structure. It’s a powerful yet simple abstraction—a sequence of immutable events. It’s something that programmers have been using for a very long time, perhaps without even realizing it because it’s so simple. Building a Distributed Log from Scratch, Part 2: Data Replication: There are essentially two components to consensus-based replication schemes: 1) designate a leader who is responsible for sequencing writes and 2) replicate the writes to the rest of the cluster.
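Both ideas fit in a small in-process sketch: an append-only log addressed by offset, and a leader that sequences writes and commits them once a majority holds a copy. Followers here are plain `Log` objects the leader writes to directly, standing in for network RPCs. A `reachable` set simulates partitions. All names are hypothetical, and leader election, terms, retries, and durability are left out.

```python
class Log:
    """Append-only, totally ordered sequence of immutable records addressed by offset."""

    def __init__(self):
        self._records = []

    def append(self, record: bytes) -> int:
        self._records.append(record)
        return len(self._records) - 1  # offset of the new record

    def read(self, offset: int) -> bytes:
        return self._records[offset]

    def __len__(self) -> int:
        return len(self._records)


class Leader:
    """Sequences writes, replicates them, and commits once a majority holds a copy."""

    def __init__(self, followers):
        self.log = Log()
        self.followers = followers  # follower Logs (in-process stand-ins for replicas)
        self.commit_index = -1      # highest offset known to be on a majority

    def write(self, record: bytes, reachable=None) -> bool:
        offset = self.log.append(record)  # 1) the leader alone decides the order
        acks = 1                          # the leader's own copy counts
        for i, follower in enumerate(self.followers):
            if reachable is not None and i not in reachable:
                continue                  # simulated partition: this follower misses the write
            # 2) replicate: catch the follower up from its own end, then count its ack
            while len(follower) <= offset:
                follower.append(self.log.read(len(follower)))
            acks += 1
        if acks > (len(self.followers) + 1) // 2:
            self.commit_index = offset    # also commits any earlier uncommitted entries
            return True
        return False
```

A write that reaches only the leader stays uncommitted. It becomes committed when a later write reaches a majority and catches the lagging followers up.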

- teraflop: The basic idea [HyperLogLog] is that for each element X in your data, you compute a function f(X) which is equal to the number of leading zeros in a bitstring generated by hashing X. Then you take the maximum of this value across all elements in the dataset (which can be efficiently done on distributed or streaming datasets). For any given value of X, the probability that its hash contains N leading zeros is proportional to 1/2^N. We're taking the maximum, so if any one of the values has N leading zeros, then max(f(X)) >= N. If the number of distinct values observed is significantly greater than 2^N, it's very likely that at least one of them has N leading zeros. Conversely, if the number of distinct values is much less than 2^N, it's relatively unlikely. So the expected value of max(f(X)) is proportional to log(distinct values), with a constant factor that can be derived. This is the basic "probabilistic counting" algorithm of Flajolet and Martin (1985). Its major flaw is that it produces an estimate with very high variance; it only takes a single unusual bitstring to throw the result way off. You can get a much more reliable result by partitioning the input into a bunch of subsets (by slicing off a few bits of the hash function) and keeping a separate counter for each subset, then averaging all the results at the end. This improvement, plus a few other optimizations, gives you HyperLogLog.
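Here is a minimal Python sketch of the HyperLogLog idea teraflop describes. It assumes a 64-bit slice of SHA-1 as the hash and 2^10 registers, both arbitrary choices. The first b bits of the hash pick the register (the "subset"), and each register keeps the maximum rank (leading zeros plus one) it has seen. The registers are then combined with a bias-corrected harmonic mean, which is the specific kind of averaging HyperLogLog uses. The paper's large-range correction is omitted.

```python
import hashlib
import math


class HyperLogLog:
    """Minimal HyperLogLog: m = 2**b registers, each holding the max rank seen."""

    def __init__(self, b: int = 10):
        self.b = b
        self.m = 1 << b
        self.registers = [0] * self.m
        # Bias-correction constant from the HyperLogLog paper (valid for m >= 128).
        self.alpha = 0.7213 / (1 + 1.079 / self.m)

    def add(self, item: str) -> None:
        x = int.from_bytes(hashlib.sha1(item.encode()).digest()[:8], "big")  # 64-bit hash
        width = 64 - self.b
        idx = x >> width                # first b bits choose the register
        rest = x & ((1 << width) - 1)   # remaining bits feed the leading-zero count
        rank = width - rest.bit_length() + 1  # leading zeros in `rest`, plus one
        self.registers[idx] = max(self.registers[idx], rank)

    def count(self) -> float:
        # Harmonic mean of 2**register across registers, scaled by alpha * m**2.
        estimate = self.alpha * self.m ** 2 / sum(2.0 ** -r for r in self.registers)
        zeros = self.registers.count(0)
        if estimate <= 2.5 * self.m and zeros:
            # Small-range correction: fall back to linear counting of empty registers.
            estimate = self.m * math.log(self.m / zeros)
        return estimate
```

With 1,024 registers the standard error is about 1.04/sqrt(1024), roughly 3%, using 1,024 small integers regardless of how many distinct items are seen. Adding an item twice never changes a register, so duplicates are free.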

- Interesting analogy between serverless as a platform and Unity3D as a game development platform. How Serverless Computing will Change the World in 2018. By the end of 2016, thousands of games had been developed [on a game engine called Unity3D] and released onto the Steam platform. By abstracting away some of the key complexities of game development such as the graphics rendering system, game developers were able to make far further strides than they would have been should they have attempted to go it alone. Serverless computing will have as big an impact on software development as Unity3D had on Game Development.

- Good series. Microservices in Golang - Part 5 - Event brokering with Go Micro. Also, Unbounded Queue: A tale of woe.

- A long and fascinating meditation on How to Print Integers Really Fast (With Open Source AppNexus Code!): So, if you ever find yourself bottlenecked on s[n]printf...The correct solution to the “integer printing is too slow” problem is simple: don’t do that. After all, remember the first rule of high performance string processing: “DON’T.” When there’s no special requirement, I find Protobuf does very well as a better JSON. However, once you find yourself in this bad spot, it’s trivial to do better than generic libc conversion code.
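One common trick for beating generic conversion code is to emit two digits per division, using a 100-entry table of digit pairs. That halves the number of divisions. The sketch below shows the shape of the trick in Python. It is not the AppNexus code, and in Python the built-in `str()` will be faster, because the payoff only shows up in a compiled language like C. The function name `u64_to_str` is hypothetical.

```python
# 200-character table: characters 2*k and 2*k+1 are the two ASCII digits of k (00..99).
_DIGIT_PAIRS = "".join(f"{i:02d}" for i in range(100))


def u64_to_str(n: int) -> str:
    """Convert a non-negative integer to decimal, two digits per division step."""
    if n < 0:
        raise ValueError("non-negative integers only")
    if n == 0:
        return "0"
    buf = []  # digits collected least-significant first
    while n >= 100:
        n, r = divmod(n, 100)
        buf.append(_DIGIT_PAIRS[2 * r + 1])  # ones digit of the pair
        buf.append(_DIGIT_PAIRS[2 * r])      # tens digit of the pair
    if n >= 10:
        buf.append(_DIGIT_PAIRS[2 * n + 1])
        buf.append(_DIGIT_PAIRS[2 * n])
    else:
        buf.append(chr(ord("0") + n))
    return "".join(reversed(buf))
```

In C the same loop writes into a fixed buffer from the end backwards, so the final reversal disappears.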

- Very thorough and balanced overview of GAE. Covers development, deployment, monitoring, logging, data storage, and so on. It seems likely serverless will fill the PaaS role GAE was designed to fill. Composing systems from event triggered functions that use scalable services is more flexible than PaaS. 3 years on Google App Engine. An Epic Review: "All in all, I liked how App Engine allowed the development team to focus on actually building an application, making users happy and earning money. Google took a lot of hassle out of the operations work. But the 'old' App Engine is on its way out. I do not think it is a good idea to start new projects on it anymore." Not all agree. asamarin: Bear in mind this article was written back in March 2017; quite a few things have changed/improved in GAE-land since. sologoub: Within less than a day I had a prototype up and running that handled inbound requests, did some processing, offloaded the rest to background workers and returned the request. The workers asynchronously stream data to BigQuery for real-time reporting. The only thing I found baffling is that I could not load Google's own cloud sdk from within the GAE environment and had to "vendor" it in, doing a bunch of workarounds/patching to get it to run.

- github.com/kubeless: a Kubernetes-native serverless framework that lets you deploy small bits of code without having to worry about the underlying infrastructure plumbing. It leverages Kubernetes resources to provide auto-scaling, API routing, monitoring, troubleshooting and more.

- twitter/vireo: a lightweight and versatile video processing library that powers our video transcoding service, deep learning recognition systems and more. It is written in C++11 and built with functional programming principles. It also optionally comes with Scala wrappers that enable us to build scalable video processing applications within our backend services.

- capsule8/capsule8: performs advanced behavioral monitoring for cloud-native, containers, and traditional Linux-based servers. This repository contains the open-source components of the Capsule8 platform, including the Sensor, example API client code, and command-line interface.

- Stackvana/microcule: If you are using Amazon Lambda or other cloud function hosting services like Google Functions or hook.io, you might find microcule a very interesting option to remove your dependency on third-party cloud providers. microcule allows for local deployment of enterprise ready microservices. microcule has few dependencies and will run anywhere Node.js can run.

- darcius/rocketpool: a next generation decentralised Ethereum proof of stake (POS) pool currently in Alpha and built to be compatible with Casper. Features include Casper compatibility, smart nodes, decentralised infrastructure with automatic smart contract load balancing. Unlike traditional centralised POW pools, Rocket Pool utilises the power of smart contracts to create a self regulating decentralised network of smart nodes that allows users with any amount of Ether to earn interest on their deposit and help secure the Ethereum network at the same time.

- OpenMined/PySyft: The goal of this library is to give the user the ability to efficiently train Deep Learning models in a homomorphically encrypted state, without needing to be an expert in either. Furthermore, by understanding the characteristics of both Deep Learning and Homomorphic Encryption, we hope to find a very performant combination of the two.

- halide/Halide (article): We propose a new programming language for image processing pipelines, called Halide, that separates the algorithm from its schedule. Programmers can change the schedule to express many possible organizations of a single algorithm. The Halide compiler then synthesizes a globally combined loop nest for an entire algorithm, given a schedule. Halide models a space of schedules which is expressive enough to describe organizations that match or outperform state-of-the-art hand-written implementations of many computational photography and computer vision algorithms.

- Enzyme-free nucleic acid dynamical systems (video): An important goal of synthetic biology is to create biochemical control systems with the desired characteristics from scratch. Srinivas et al. describe the creation of a biochemical oscillator that requires no enzymes or evolved components, but rather is implemented through DNA molecules designed to function in strand displacement cascades. Furthermore, they created a compiler that could translate a formal chemical reaction network into the necessary DNA sequences that could function together to provide a specified dynamic behavior.

- acharapko/retroscope-lib (article): a comprehensive lightweight solution for monitoring and debugging of distributed systems. It allows users to query and reconstruct past consistent global states of an application. Retroscope achieves this by augmenting the system with Hybrid Logical Clocks (HLC) and streaming HLC-stamped event logs to storage and processing, where HLC timestamps are used for constructing global (or nonlocal) snapshots upon request.
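A Hybrid Logical Clock timestamp is a pair (l, c). Here l tracks the largest physical time seen so far and c breaks ties, so timestamps respect causality yet stay close to wall-clock time. The sketch below follows the published HLC update rules of Kulkarni et al. as we understand them; it is not Retroscope's code. The injectable `physical_clock` (integer milliseconds by default) is an assumption added for testing.

```python
import time


class HybridLogicalClock:
    """HLC timestamps (l, c): l is the max physical time seen, c is a logical counter."""

    def __init__(self, physical_clock=None):
        # Returns an integer physical time; defaults to wall-clock milliseconds.
        self.pt = physical_clock or (lambda: time.time_ns() // 1_000_000)
        self.l = 0
        self.c = 0

    def now(self):
        """Timestamp a local or send event."""
        prev_l = self.l
        self.l = max(prev_l, self.pt())
        self.c = self.c + 1 if self.l == prev_l else 0
        return (self.l, self.c)

    def update(self, msg_l, msg_c):
        """Merge a timestamp carried on a received message; return the receive timestamp."""
        prev_l = self.l
        self.l = max(prev_l, msg_l, self.pt())
        if self.l == prev_l == msg_l:
            self.c = max(self.c, msg_c) + 1
        elif self.l == prev_l:
            self.c += 1
        elif self.l == msg_l:
            self.c = msg_c + 1
        else:
            self.c = 0
        return (self.l, self.c)
```

For example, if node A's clock reads 100 and node B's reads 90, a message from A still gets a receive timestamp on B that sorts after the send timestamp. Ordering by (l, c) is what lets a tool like Retroscope assemble consistent snapshots from separate event logs.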

- Differentially private Bayesian learning on distributed data: We propose a learning strategy based on a secure multi-party sum function for aggregating summaries from data holders and the Gaussian mechanism for DP. Our method builds on an asymptotically optimal and practically efficient DP Bayesian inference with rapidly diminishing extra cost.

- Taking a Long Look at QUIC: QUIC generally outperforms TCP, but we also identified performance issues related to window sizes, re-ordered packets, and multiplexing a large number of small objects; further, we identify that QUIC’s performance diminishes on mobile devices and over cellular networks. Also, The QUIC Transport Protocol: Design and Internet-Scale Deployment.

- Twizzler: An Operating System for Next-Generation Memory Hierarchies: The introduction of NVDIMMs (truly non-volatile and directly accessible) requires us to rethink all levels of the system stack, from processor features to applications. Operating systems, too, must evolve to support new I/O models for applications accessing persistent data. We are developing Twizzler, an operating system for next-generation memory hierarchies. Twizzler is designed to provide applications with direct access to persistent storage while providing mechanisms for cross-object pointers, removing itself from the common access path to persistent data, and providing fine-grained security and recoverability. The design of Twizzler is a better fit for low-latency and byte-addressable persistent storage than prior I/O models of contemporary operating systems.

- Scalable eventually consistent counters over unreliable networks: This paper defines Eventually Consistent Distributed Counters (ECDCs) and presents an implementation of the concept, Handoff Counters, that is scalable and works over unreliable networks. By giving up the total operation ordering in classic distributed counters, ECDC implementations can be made AP in the CAP design space, while retaining the essence of counting. Handoff Counters are the first Conflict-free Replicated Data Type (CRDT) based mechanism that overcomes the identity explosion problem in naive CRDTs, such as G-Counters (where state size is linear in the number of independent actors that ever incremented the counter), by managing identities towards avoiding global propagation and garbage collecting temporary entries.
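For contrast, here is a minimal sketch of the naive state-based G-Counter that the paper improves on. It is not an implementation of Handoff Counters. Each replica increments only its own slot, and merging takes the entry-wise maximum, so replicas converge. The map still keeps one entry for every replica that ever incremented, which is the identity explosion the abstract describes. Replica ids here are hypothetical strings.

```python
class GCounter:
    """State-based grow-only counter CRDT: one slot per replica that ever incremented."""

    def __init__(self, replica_id: str):
        self.replica_id = replica_id
        self.counts = {}  # replica id -> increments made at that replica

    def increment(self, n: int = 1) -> None:
        if n < 0:
            raise ValueError("G-Counters only grow")
        self.counts[self.replica_id] = self.counts.get(self.replica_id, 0) + n

    def value(self) -> int:
        return sum(self.counts.values())

    def merge(self, other: "GCounter") -> None:
        # Entry-wise max is commutative, associative and idempotent, so replicas
        # converge no matter how often, or in what order, states are exchanged.
        for rid, c in other.counts.items():
            self.counts[rid] = max(self.counts.get(rid, 0), c)
```

Because merge is idempotent, states can be gossiped over an unreliable network with duplicates and reordering. The cost is state that grows with every actor ever seen.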

- Matters Computational: Ideas, Algorithms, Source Code. Almost 1000 pages of all things computation. Truly a labor of love.

- Superhuman AI for heads-up no-limit poker: Libratus beats top professionals: We present Libratus, an AI that, in a 120,000-hand competition, defeated four top human specialist professionals in heads-up no-limit Texas hold’em, the leading benchmark and long-standing challenge problem in imperfect-information game solving. Our game-theoretic approach features application-independent techniques: an algorithm for computing a blueprint for the overall strategy, an algorithm that fleshes out the details of the strategy for subgames that are reached during play, and a self-improver algorithm that fixes potential weaknesses that opponents have identified in the blueprint strategy.