Stuff The Internet Says On Scalability For July 14th, 2017

Hey, it's HighScalability time:

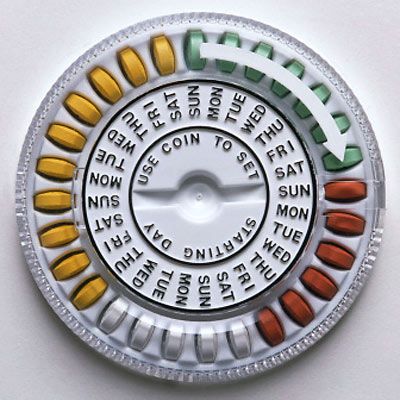

We've seen algorithms expressed in seeds. Here's an algorithm for taking birth control pills expressed as packaging. Awesome history on 99% Invisible.

If you like this sort of Stuff then please support me on Patreon.

- 2 trillion: web requests served daily by Akamai; 9 billion: farthest star ever seen in light-years; 10^31: bacteriophages on earth; 7: peers needed to repair ransomware damage; $30,000: threshold of when to leave AWS; $300K-$400K: beginning cost of running Azure Stack on HPE ProLiant; 3.5M: files in the Microsoft's git repository; 300M: Google's internal image data training set size; 7.2 Mbps: global average connection speed; 85 million: Amazon Prime members; 35%: Germany generated its electricity from renewables;

- Quotable Quotes:

- Jessica Flack: I believe that science sits at the intersection of these three things — the data, the discussions and the math. It is that triangulation — that’s what science is. And true understanding, if there is such a thing, comes only when we can do the translation between these three ways of representing the world.

- gonchs: “If your whole business relies on us [Medium], you might want to pick a different one”

- @AaronBBrown777: Hey @kelseyhightower, if you're surfing GitHub today, you might find it interesting that all your web bits come thru Kubernetes as of today.

- Psyblog: The researchers were surprised to find that a more rebellious childhood nature was associated with a higher adult income.

- Antoine de Saint-Exupéry: If you want to build a ship, don't drum up people to collect wood and don't assign them tasks and work, but rather teach them to long for the endless immensity of the sea.

- Marek Kirejczyk: In general I would say: if you need to debug — you’ve already lost your way.

- jasondc: To put it another way, RethinkDB did extremely well on Hacker News. Twitter didn't, if you remember all the negative posts (and still went public). There is little relation between success on Hacker News and company success.

- Rory Sutherland: What intrigues me about human decision making is that there seems to be a path-dependence involved - to which we are completely blind.

- joeblau: That experience taught me that you really need to understand what you're trying to solve before picking a database. Mongo is great for some things and terrible for others. Knowing what I know now, I would have probably chosen Kafka.

-

0xbear: cloud "cores" are actually hyperthreads. Cloud GPUs are single dies on multi-die card. If you use GPUs 24x7, just buy a few 1080 Ti cards and forego the cloud entirely. If you must use TF in cloud with CPU, compile it yourself with AVX2 and FMA support. Stock TF is compiled for the lowest common denominator

-

Dissolving the Fermi Paradox: Doing a distribution model shows that even existing literature allows for a substantial probability of very little life, and a more cautious prior gives a significant probability for rare life

- Peter Stark: Crews with clique structures report significantly more depression, anxiety, anger, fatigue and confusion than crews with core-periphery structures.

- Patrick Marshall: Gu said that the team expects to have a prototype [S2OS’s software-defined hypervisor is being designed to centrally manage networking, storage and computing resources] ready in about three years that will be available as open-source software.

- cobookman: I've been amazed that more people don't make use of googles preemtibles. Not only are they great for background batch compute. You can also use them for cutting your stateless webserver compute costs down. I've seen some people use k8s with a cluster of preemtibles and non preemtibles.

- @jeffsussna: Complex systems can’t be fully modeled. Failure becomes the only way to fully discover requirements. Thus the need to embrace it.

- Jennifer Doudna: a genome’s size is not an accurate predictor of an organism’s complexity; the human genome is roughly the same length as a mouse or frog genome, about ten times smaller than the salamander genome, and more than one hundred times smaller than some plant genomes.

- Daniel C. Dennett: In Darwin’s Dangerous Idea (1995), I argued that natural selection is an algorithmic process, a collection of sorting algorithms that are themselves composed of generate-and-test algorithms that exploit randomness (pseudo-randomness, chaos) in the generation phase, and some sort of mindless quality-control testing phase, with the winners advancing in the tournament by having more offspring.

- Almir Mustafic: My team learned the DynamoDB limitations before we went to production and we spent time calculating things to properly provision RCUs and WCUs. We are running fine in production now and I hear that there will be automatic DynamoDB scaling soon. In the meantime, we have a custom Python script that scales our DynamoDB.

- I've written a novella: The Strange Trial of Ciri: The First Sentient AI. It explores the idea of how a sentient AI might arise as ripped from the headlines deep learning techniques are applied to large social networks. I try to be realistic with the technology. There's some hand waving, but I stay true to the programmers perspective on things. One of the big philosophical questions is how do you even know when an AI is sentient? What does sentience mean? So there's a trial to settle the matter. Maybe. The big question: would an AI accept the verdict of a human trial? Or would it fight for its life? When an AI becomes sentient what would it want to do with its life? Those are the tensions in the story. I consider it hard scifi, but if you like LitRPG there's a dash of that thrown in as well. Anyway, I like the story. If you do too please consider giving it a review on Amazon. Thanks for your support!

- Serving 39 Million Requests for $370/Month, or: How We Reduced Our Hosting Costs by Two Orders of Magnitude. Step 1: Just Go Serverless: Simply moving to a serverless environment had the single greatest impact on reducing hosting costs. Our extremely expensive operating costs immediately shrunk by two orders of magnitude. Step 2: Lower Your Memory Allocation: Remember, each time you halve your function’s memory allocation, you’re roughly halving your Lambda costs. Step 3: Cache Your API Gateway Responses: We pay around $14 a month for a 0.5GB API Gateway cache with a 1 hour TTL. In the last month, 52% (20.3MM out of 39MM) of our API requests were served from the cache, meaning less than half (18.7MM requests) required invoking our Lambda function. That $14 saves us around $240 a month in Lambda costs.

- Had to check the date on this one. Is Ruby Too Slow For Web-Scale? Yep, it's recent. Nothing really new, but well written. If your goal the lowest possible latency and the lowest possible server cost then Ruby is not for you. If you want programmer productivity or you care about this thing called 'happiness', then maybe it is. Good discussion on Hacker News.

- What's the end state of a unicorn? Unicorpse. Ouch. Silicon Valley's Overstuffed Startups: With Silicon Valley under pressure to make cash back from prior startup investments, more young tech companies will run out of money and die. Already this year, more than a billion dollars of investor money has been wiped out in the closing of tech startups like fitness hardware company Jawbone, food-delivery startup Sprig and messaging app Yik Yak. Expect to hear the colorful term "unicorpse" -- that's a dead startup unicorn -- much more in 2017.

- Hit a performance problem in an Erlang VM and it seems the thing to do is rewrite the internals. Is that a good thing? How Discord Scaled Elixir to 5,000,000 Concurrent Users: we are up to nearly five million concurrent users and millions of events per second flowing through the system...Elixir is a new ecosystem, and the Erlang ecosystem lacks information about using it in production...Discord is rich with features, most of it boils down to pubsub...Eventually we ended up with many Discord servers like /r/Overwatch with up to 30,000 concurrent users. During peak hours, we began to see these processes fail to keep up with their message queues...Manifold was born. Manifold distributes the work of sending messages to the remote nodes of the PIDs...as Discord scaled, we started to notice issues when we had bursts of users reconnecting. The Erlang process responsible for controlling the ring would start to get so busy that it would fail to keep up with requests to the ring...After solving the performance of the node lookup hot path, we noticed that the processes responsible for handling guild_pid lookup on the guild nodes were getting backed up.

- The cost curve for storage is no longer favorable. For really cheap storage we'll have to wait for DNA. Until then dust off those long forgotten disk conservation skills. Hard Drive Cost Per Gigabyte: The change in the rate of the cost per gigabyte of a hard drive is declining. For example, from January 2009 to January 2011, our average cost for a hard drive decreased 45% from $0.11 to $0.06 – $0.05 per gigabyte. From January 2015 to January 2017, the average cost decreased 26% from $0.038 to $0.028 – just $0.01 per gigabyte. This means that the declining price of storage will become less relevant in driving the cost of providing storage.

- Kotlin mania aside, does it perform? Kotlin's hidden costs – Benchmarks. Very close to Java in most of tests, sometimes better. So, the performance doesn't suck. Follow your bliss.

- AMD's back baby. Or is it? For an epic review there's Sizing Up Servers: Intel's Skylake-SP Xeon versus AMD's EPYC 7000 - The Server CPU Battle of the Decade? AnandTech must be doing something right, accusations fly saying the article is both pro Intel and pro AMD. Summary: At a high level, I would argue that Intel has the most advanced multi-core topology, as they're capable of integrating up to 28 cores in a mesh. The mesh topology will allow Intel to add more cores in future generations while scaling consistently in most applications...AMD's MCM approach is much cheaper to manufacture. Peak memory bandwidth and capacity is quite a bit higher with 4 dies and 2 memory channels per die. However, there is no central last level cache that can perform low latency data coordination between the L2-caches of the different cores (except inside one CCX). Lots and lots of comments. lefty2: I can summarize this article: "$8719 chip beaten by $4200 chip in everything except database and Apache spark." Now this is a good question: Shankar1962: Can someone explain me why GOOGLE ATT AWS ALIBABA etc upgraded to sky lake when AMD IS SUPERIOR FOR HALF THE PRICE? PixyMisa answers: Epyc is brand new; Functions like ESXi hot migration may not be supported on Epyc yet; Those companies don't pay the same prices we do; Amazon have customised CPUs for AWS. ParanoidFactoid: Epyc has latency concerns in communicating between CCX blocks, though this is true of all NUMA systems. If your application is latency sensitive, you either want a kernel that can dynamically migrate threads to keep them close to their memory channel - with an exposed API so applications can request migration. (Linux could easily do this, good luck convincing MS). OR, you take the hit. OR, you buy a monolithic die Intel solution for much more capital outlay. Further, the takeaway on Intel is, they have the better technology. But their market segmentation strategy is so confusing, and so limiting, it's near impossible to determine best cost/performance for your application. So you wind up spending more than expected anyway. AMD is much more open and clear about what they can and can't do. Intel expects to make their money by obfuscating as part of their marketing strategy. Finally, Intel can go 8 socket, so if you need that - say, high core low latency securities trading - they're the only game in town. Sun, Silicon Graphics, and IBM have all ceded that market. intelemployee2012: After looking at the number of people who really do not fully understand the entire architecture and workloads and thinking that AMD Naples is superior because it has more cores, pci lanes etc is surprising. AMD made a 32 core server by gluing four 8core desktop dies whereas Intel has a single die balanced datacenter specific architecture which offers more perf if you make the entire Rack comparison. It's not the no of cores its the entire Rack which matters. Intel cores are superior than AMD so a 28 core xeon is equal to ~40 cores if you compare again Ryzen core so this whole 28core vs 32core is a marketing trick. Everyone thinks Intel is expensive but if you go by performance per dollar Intel has a cheaper option at every price point to match Naples without compromising perf/dollar. To be honest with so many Fabs, don't you think Intel is capable of gluing desktop dies to create a 32core,64core or evn 128core server (if it wants to) if thats the implementation style it needs to adopt like AMD? The problem these days is layman looks at just numbers but that's not how you compare. Amiga500: No surprise that the Intel employee is descending to lies and deceit to try and plaster over the chasms! They've also reverted to bribing suppliers to offer Ryzen with only crippled memory speeds too

- With Google's new first of its kind Virtual Private Cloud it will be much easier to create and maintain a network across multiple datacenters. None of the hassle of replicating configurationb across each location. No worrying about overlays, underlays, etc. And you aren't flowing over the open internet either, you're on Google's network. Once you bind datacenters together you create a single failure domain, but it's just so dang convenient. And doesn't convenience explain a lot about the world today?

- Why [ShoutOUT] switched from docker to serverless: Around 80% of the backend services we had were successfully migrated to a serverless stack and we were able to reduce a considerable amount of cost this way...Since integration, we've taken a serverless first approach; all new services are built in a serverless fashion unless there is an obvious reason not to go serverless. This has helped us dramatically shorten our release cycles, which, as a startup and a SaaS provider, has been hugely beneficial.

- Wait, Serverless requires a more skilled developer? Developer Experience Lessons Operating a Serverless-like Platform At Netflix: In serverless, a combination of smaller deployment units and higher abstraction levels provides compelling benefits, such as increased velocity, greater scalability, lower cost and more generally, the ability to focus on product features. However, operational concerns are not eliminated; they just take on new forms or even get amplified. Operational tooling needs to evolve to meet these newer requirements. The bar for developer experience as a whole gets raised.

- Bummer for Silicon Valley plot points. Is Decentralized Storage Sustainable?: The result of this process is a network in which the aggregate storage resource is overwhelmingly controlled by a small number of entities, controlling large numbers of large peers in China. These are the ones which started with a cost base advantage and moved quickly to respond to demand. The network is no longer decentralized, and will suffer from the problems of centralized storage outlined above. This should not be a surprise. We see the same winner-take-all behavior in most technology markets. We see this behavior in the Bitcoin network.

- How Nature Solves Problems Through Computation: We have this principle of collective computation that seems to involve these two phases. The neurons go out and semi-independently collect information about the noisy input, and that’s like neural crowdsourcing. Then they come together and come to some consensus about what the decision should be. And this principle of information accumulation and consensus applies to some monkey societies also. The monkeys figure out sort of semi-independently who is capable of winning fights, and then they consolidate this information by exchanging special signals. The network of these signals then encodes how much consensus there is in the group about any one individual’s capacity to use force in fights...If big contributors become more likely to join fights, the system moves toward the critical point where it is very sensitive, meaning a small perturbation can knock it over into this all-fight state.

- Videos are available from SharkFest, the Wireshark Developer and User Conference.

- Simple tool for measuring Cross Cloud Latency with source code. The purpose of the tool says rmanyari is to know where to create my VMs to have a reasonable latency to other services. In my case reasonable means < 100ms. This will definitly vary across products. inopinatus makes a good point that tool uses ping which has problems for four reasons: network elements often don't treat ICMP echo packets with respect; virtual machine may have more jitter in scheduling the transmission and the response; you don't get a picture of how congested the route is - is it good for a gigabit blast, or only a trickle of data; ping measures round-trip time and the return route can be different than the request route.

- Nice approach of using an analogy to describe each technique. Scaling a Web Service: Load Balancing. Covers: Layer 7 load balancing (HTTP, HTTPS, WS); Layer 4 load balancing (TCP, UDP); Layer 3 load balancing; DNS load balancing; Manually load balancing with multiple subdomains; Anycast.

- Here are 10 Common Data Structures Explained with Videos + Exercises: Linked Lists, Stacks, Queues, Sets, Maps, Hash Tables, Binary Search Tree, Trie, Binary Heap, Graph. Is your favorite on the list? Maps are my favorite. They are the duct tape in the programmer's tool box.

- Good summary. Apple’s WWDC 2017 Highlights For iOS Developers. Also, Creating a Simple Game With Core ML in Swift 4. Also also, Developers Are Already Making Great AR Experiences using Apple’s ARKit.

- VividCortex's State of Production Database Performance report. Is MySQL really faster than Postgres? Real data taken from real systems says no, neither seems to have the advantage.

- quacker: Typical [load balancing] setups that I've seen break the app up into (micro)services. Each service specializes in one type of "work". You may have API nodes that receive/validate incoming HTTP requests and some worker nodes that do more involved work (like long running tasks, for example). Then you can add more worker nodes as load increases (this is "horizontal" scaling). Your API nodes would be sitting behind a loadbalancer. Your nodes need to communicate with each other. This could happen over a queue (like RabbitMQ). Some nodes will put tasks onto a queue for other nodes to run. Or each node could provide its own API...All of these services would still connect to a "single" database. For resiliency, you'll want more than one database node. There are plenty of hosted database solutions (both for SQL and NoSQL databases). I've seen lots of multi-master sql setups, but whatever serves your app best...If your application supports user sessions, you'll have to ensure the node that handles the request can honor the session token. The "best" way of doing this is having a common cache (redis/memcached) where session tokens are stored (if a node gets a session token, just look it up in the common cache)...Of course, now your deployment is very complicated. You have to configure multiple nodes, potentially of different service types, which may need specific config. This is where config management tools like ansible/puppet/chef/terraform/etc come up.

- The advice is usually to not use a database as a queue. This looks like it might work for most needs, as long as you’re not UPDATEing then COMMITing work-in-progress queue items. What is SKIP LOCKED for in PostgreSQL 9.5?

- What problems will we solve with a quantum computer?: a quantum computer can be employed to reveal reaction mechanisms in complex chemical systems, using the open problem of biological nitrogen fixation in nitrogenase as an example.

- Design patterns for microservices: Ambassador can be used to offload common client connectivity tasks; Anti-corruption layer implements a façade between new and legacy applications; Backends for Frontends creates separate backend services for different types of clients; Bulkhead isolates critical resources; Gateway Aggregation aggregates requests to multiple individual microservices into a single request; Gateway Offloading enables each microservice to offload shared service functionality; Gateway Routing routes requests to multiple microservices using a single endpoint; Sidecar deploys helper components of an application as a separate container; Strangler supports incremental migration.

- Not sure if GraphQL is the new REST, but this a good tutorial: The Fullstack Tutorial for GraphQL. sayurichick notes a weakness: However, 99% of the tutorials on graphql , this one included, fail to show a real life use case. What I mean by that is a working Example of a SQL database from start to finish. So this tutorial was very cool, but not very useful. Just like the rest of them. Also, React, Relay and GraphQL: Under the Hood of the Times Website Redesign

- Revisiting the Unreasonable Effectiveness of Data. Google has we built an internal dataset of 300M images that are labeled with 18291 categories, which they call JFT-300M. Turns out bigger is better: Our findings suggest that a collective effort to build a large-scale dataset for pretraining is important. It also suggests a bright future for unsupervised and semi-supervised representation learning approaches. It seems the scale of data continues to overpower noise in the label space; Perhaps the most surprising finding is the relationship between performance on vision tasks and the amount of training data (log-scale) used for representation learning. We find that this relationship is still linear!

- ApolloAuto/apollo: an open autonomous driving platform. It is a high performance flexible architecture which supports fully autonomous driving capabilities.

- Istio: an open platform that provides a uniform way to connect, manage, and secure microservices. Istio supports managing traffic flows between microservices, enforcing access policies, and aggregating telemetry data, all without requiring changes to the microservice code.

- Here we have a paper that's hard to understand. Fractal: An Execution Model for Fine-Grain Nested Speculative Parallelism. One of the author's takes to Hacker News to better explain what the paper is about. Now the author does a good job on HN. The writing is natural and helpful. Why can't authors do a better job at explaining what their paper is about in the actual paper? suvinay: What motivated this work? While multi-cores are pervasive today, programming these systems and extracting performance on a range of real world applications remains challenging. Systems that support speculative parallelization, such as Transactional Memory (supported in hardware in commercial processors today, such as Intel Haswell, IBM's Power 8 etc.) help simplify parallel programming, by allowing programmers to designate blocks of code as atomic, in contrast to using complex synchronization using locks, semaphores etc. However, their performance on real world applications remains poor. This is because real world applications are complex, and often have large atomic tasks either due to necessity or convenience. For example, in database workloads, transactions have to remain atomic (i.e. all actions within a transaction have to "appear as a single unit"). But each transaction can be long (~few million cycles) -- such large tasks are prone to aborts, and are expensive to track (in software or hardware).

- The second annual Microservices Practitioner Virtual Summit is being held online from July 24 - 27. Practitioners from Lyft, Zalando, Ancestry, and Squarespace will discuss their experiences adopting microservices at scale as well as using cutting edge technologies like Kubernetes, Docker, Envoy, and Istio. Register now at microservices.com/summit to access the livestream.