Stuff The Internet Says On Scalability For July 26th, 2019

Wake up! It's HighScalability time—once again:

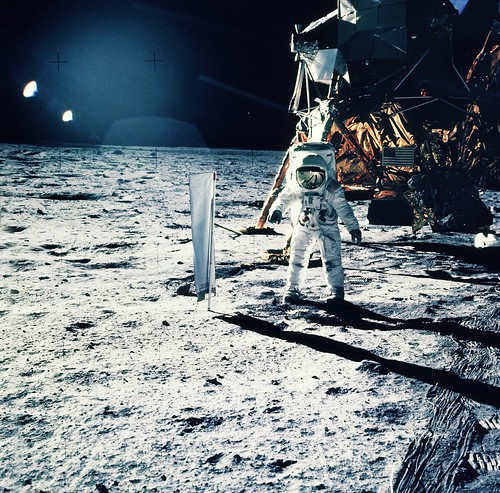

The Apollo 11 guidance computer repeatedly crashed on descent. On earth computer scientists had just 13 hours to debug the problem. They did. It was CPU overload because of a wrong setting. Some things never change!

Do you like this sort of Stuff? I'd greatly appreciate your support on Patreon. I wrote Explain the Cloud Like I'm 10 for people who need to understand the cloud. And who doesn't these days? On Amazon it has 52 mostly 5 star reviews (120 on Goodreads). They'll learn a lot and hold you in even greater awe.

Number Stuff:

- $11 million: Google fine for discriminating against not young people.

- 55,000+: human-labeled 3D annotated frames, a drivable surface map, and an underlying HD spatial semantic map in Lyft's Level 5 Dataset.

- 645 million: LinkedIn members with 4.5 trillion daily messages pumping through Kafka.

- 49%: drop in Facebook's net income. A fine result.

- 50 ms: repeatedly randomizing elements of the code that attackers need access to in order to compromise the hardware.

- 7.5 terabytes: hacked Russian data.

- 5%: increase in Tinder shares after bypassing Google's 30% app store tax.

- $21.7 billion: Apple's profit from other people's apps.

- 5 billion: records in 102 datasets in the UCR STAR spatio-temporal index.

- 200x: speediest quantum operation yet.

- 45%: US Fortune 500 companies founded immigrants and their kids.

- 70%: hard drive failures caused by media damage, including full head crashes.

- 12: lectures on everything Buckminster Fuller knew.

- 600,000: satellite images taken in a single day used to create a picture of Earth

- 149: hours between Airbus reboots needed to mask software problems.

Quotable Stuff:

- @mdowd: Now that Alan Turing is on the 50 pound note, they should rename ATMs to “Turing Machines”

- @hacks4pancakes: Another real estate person: “I tried using a password manager, but then my credit card was stolen and my annual payment for it failed - and they cut off access to all my passwords during a meeting.”

- @juliaferraioli: "Our programs were more complex because Go was so simple" -- @_rsc on reshaping #Golang at #gophercon

- Jason Shen: A YC founder once said to me that he found little correlation between the success of a YC company and how hard their founders worked. That is to say, among a group of smart, ambitious entrepreneurs who were all already working pretty hard, the factors that made the biggest difference were things like timing, strategy, and relationships. Which is why Reddit cofounder-turned-venture capitalist Alexis Ohanian now warns against the “utter bullshit” of this so-called hustle porn mentality.

- Dale Markowitz: A successful developer today needs to be as good at writing code as she is at extinguishing that code when it explodes.

- @allspaw: I’m with @snowded on this. Taleb’s creation of ‘antifragile’ is what the Resilience Engineering community has referred to as resilience all along.

- Timothy Lee: Heath also said that the interviewer assumed that the word "byte" meant eight bits. In his view, this also revealed age bias. Modern computer systems use 8-bit bytes, but older computer systems could have byte sizes ranging from six to 40 bits.

- General Patton: If everybody is thinking alike, somebody isn’t thinking

- panpanna: Architecturally, microkernels and unikernels are direct opposites. Unikernels strive to minimize communication complexity (and size and footprint but that is not relevant to this discussion) by putting everything in the same address space. This gives them many advantages among which performance is often mentioned but ease of development is IMHO equally important. However, the two are not mutually exclusive. Unikernels often run on top of microkernels or hypervisors.

- Wayne Ma: The team ultimately put a stop to most [Apple] device leaks—and discovered some audacious attempts, such as some factory workers who tried to build a tunnel to transport components to the outside without security spotting them.

- Dr. Steven Gundry: I think, uploaded most of our information processing to our bacterial cloud that lives in us, on us, around us, because they have far more genes than we do. They reproduce virtually instantaneously, and so they can do fantastic information processing. Many of us think that perhaps lifeforms on Earth, particularly animal lifeforms, exist as a home for bacteria to prosper on Earth.

- @UdiDahan: I've been gradually coming around to the belief that any "good" code base lives long enough for the environment around it to change in such away that its architecture is no longer suitable, making it then "bad". This would probably be as equally true of FP as of OOP.

- @DmitryOpines: No one "runs" a crypto firm Holger, we are merely the mortal agents through whose minor works the dream of disaggregated ledger currency manifests on this most unworthy of Prime Material Planes.

- Philip Ball: One of the most remarkable ideas in this theoretical framework is that the definite properties of objects that we associate with classical physics — position and speed, say — are selected from a menu of quantum possibilities in a process loosely analogous to natural selection in evolution: The properties that survive are in some sense the “fittest.” As in natural selection, the survivors are those that make the most copies of themselves. This means that many independent observers can make measurements of a quantum system and agree on the outcome — a hallmark of classical behavior.

- @mikko: Rarely is anyone thanked for the work they did to prevent the disaster that didn't happen.

- David Rosenthal: Back in 1992 Robert Putnam et al published Making democracy work: civic traditions in modern Italy, contrasting the social structures of Northern and Southern Italy. For historical reasons, the North has a high-trust structure whereas the South has a low-trust structure. The low-trust environment in the South had led to the rise of the Mafia and persistent poor economic performance. Subsequent effects include the rise of Silvio Berlusconi. Now, in The Internet Has Made Dupes-And Cynics-Of Us All, Zynep Tufecki applies the same analysis to the Web

- Diego Basch: So here is an obvious corollary. Like I just mentioned, if you have an idea for an interesting gadget you will move to a place like Shenzhen or Hong Kong or Taipei. You will build a prototype, prove your concept, work with a manufacturer to iterate your design until it’s mature enough to mass-produce. Either you will bootstrap the business or you will partner with someone local to fund it, because VCs won’t give you the time of the day. Now, let’s say hardware is not your cup of tea and you want to build services. Why be in Silicon Valley at all?

- Buckminster Fuller~ You derive data by segregating; You derive principles by integrating; Without data, you cannot calculate; Without calculations, you cannot generalize; Without generalizations, you cannot design; Without designs, you cannot discover; Without discoveries, you cannot derive new data...Segregation and integration are not opposed: they are complementary and interdependent. Striving to be a specialist OR a generalist is counterproductive; the aim is to be COMPREHENSIVE!

- @jessfraz: I see a lot of debates about “open core”. To me the premise behind it is “we will open this part of our software but you gotta take care of supporting it yourself.” Then they charge for support. Except the problem was some other people *cough* clouds *cough* beat them to it.

- @greglinden: Tech companies consistently get this wrong, thinking this is a simple black-and-white ML classification problem, spam or not spam, false or not false. Disinformation exploits that by being just ambiguous enough to not get classified as false. It's harder than that.

- Brent Ozar: The ultimate, #1, primary, existential, responsibility of a DBA – for which all other responsibilities pale in comparison – is to implement database backup and restore processing adequate to support the business’s acceptable level of data loss.

- Alex Hern: A dataset with 15 demographic attributes, for instance, “would render 99.98% of people in Massachusetts unique”. And for smaller populations, it gets easier: if town-level location data is included, for instance, “it would not take much to reidentify people living in Harwich Port, Massachusetts, a city of fewer than 2,000 inhabitants”.

- Memory Guy: Our forecasts find that 3D XPoint Memory’s sub-DRAM prices will drive that technology’s revenues to over $16 billion by 2029, while stand-alone MRAM and STT-RAM revenues will approach $4 billion — over one hundred seventy times MRAM’s 2018 revenues. Meanwhile, ReRAM and MRAM will compete to replace the bulk of embedded NOR and SRAM in SoCs, to drive even greater revenue growth. This transition will boost capital spending, increasing the spend for MRAM alone by thirty times to $854 million in 2029.

- @unclebobmartin: John told me he considered FP a failure because, to paraphrase him, FP made it simple to do hard things but almost impossible to do simple things.

- Dr. Neil J. Gunther: All performance is nonlinear.

- @mathiasverraes: I wish we'd stop debating OOP vs FP, and started debating individual paradigms. Immutability, encapsulation, global state, single assignment, actor model, pure functions, IO in the type system, inheritance, composition... all of these are perfectly possible in either OOP or FP.

- Troy Hunt: "1- All those servers were compromised. They were either running standalone VPSs or cpanel installations. 2- Most of them were running WordPress or Drupal (I think only 2 were not running any of the two). 3- They all had a malicious cron.php running"

- Gartner: AWS makes frequent proclamations about the number of price reductions it has made. Customers interpret these proclamations as being applicable to the company's services broadly, but this is not the case. For instance, the default and most frequently provisioned storage for AWS's compute service has not experienced a price reduction since 2014, despite falling prices in the market for the raw components.

- mcguire: Speaking as someone who has done a fair number of rewrites as well as watching rewrites fail, conventional wisdom is somewhat wrong. 1. Do a rewrite. Don't try to add features, just replace the existing functionality. Avoid a moving target. 2. Rewrite the same project. Don't redesign the database schema at the same time you are rewriting. Try to keep the friction down to a manageable level. 3. Incremental rewrites are best. Pick part of the project, rewrite and release that, then get feedback while you work on rewriting the next chunk.

- Atlassian: Isolating context/state management association to a single point is very helpful. This was reinforced at Re:Invent 2018 where a remarkably high amount of sessions had a slide of “then we have service X which manages tenant → state routing and mapping”.

- taxicabjesus: I have a ~77 year old friend who was recently telling me about going to Buckminster Fuller's lectures at his engineering university, circa 1968. He quoted Mr. Fuller as saying something like, "entropy takes things apart, life puts them back together."

- Daniel Abadi: PA/EC systems sound good in theory, but are not particularly useful in practice. Our one example of a real PA/EC system --- Hazelcast --- has spent the past 1.5 years introducing features that are designed for alternative PACELC configurations --- specifically PC/EC and PA/EL configurations. PC/EC and PA/EL configurations are a more natural cognitive fit for an application developer. Either the developer can be certain that the underlying system guarantees consistency in all cases (the PC/EC configuration) in which case the application code can be significantly simplified, or the system makes no guarantees about consistency at all (the PA/EL configuration) but promises high availability and low latency. CRDTs and globally unique IDs can still provide limited correctness guarantees despite the lack of consistency guarantees in PA/EL configurations.

- Simone de Beauvoir: Then why “swindled”? When one has an existentialist view of the world, like mine, the paradox of human life is precisely that one tries to be and, in the long run, merely exists. It’s because of this discrepancy that when you’ve laid your stake on being—and, in a way you always do when you make plans, even if you actually know that you can’t succeed in being—when you turn around and look back on your life, you see that you’ve simply existed. In other words, life isn’t behind you like a solid thing, like the life of a god (as it is conceived, that is, as something impossible). Your life is simply a human life.

- SkyPuncher: I think Netflix is the perfect example of where being data driven completely fails. If you listen to podcasts with important Netflix people everything you hear is about how they experiment and use data to decide what to do. Every decision is based on some data point. At the end of the day, they just continue to add features that create short term payoffs and long term failures. Pennywise and pound foolish.

- Frank Wilczek: I don't think a singularity is imminent, although there has been quite a bit of talk about it. I don't think the prospect of artificial intelligence outstripping human intelligence is imminent because the engineering substrate just isn’t there, and I don't see the immediate prospects of getting there. I haven’t said much about quantum computing, other people will, but if you’re waiting for quantum computing to create a singularity, you’re misguided. That crossover, fortunately, will take decades, if not centuries.

Useful Stuff:

- What an incredible series. Apollo 11 13 minutes to the Moon. Ep.05 The fourth astronaut tells the story of how the Apollo computer system was made. Apollo guidance computer weighed 30 kilos, was as big as a couple of shoe boxes, built by a team at MIT, was the worlds first digital, portable, general purpose computer. It was the first software system were people's lives depended on it. It was the first fly-by-wire system. Contract 1, the first contract of the Apollo program, was for the navigation computer. The MIT group used inertial navigation, first pioneered in Polaris missies. The idea is if you know where you start, direction, and acceleration, then you always know where you are and where you are going. Until this time flight craft were moved by manually pushing levers and flipping switches. Apollo couldn't affort the weight of these pulley based systems. They chose, for the first time ever (1964), to make a computer to control the flight of the space craft. They had to figure out everything. How would the computer communicate to all the different subsystems? Command a valve to open? Turn on an engine? Turn off an engine? Redirect an engine? Apollo is the moment when people stopped bragging about how big their computer was and started bragging about how small they were. Digital computers were the size of buildings at the time. At the time nobody trusted computers because they would only work a few hours or days at a time. They needed a computer to work for a couple weeks. They risked everything on a brand new technology called Integrated Circuits that were only in the labs. They made the very first computer with ICs. But they got permission to do it. A huge risk betting everything, but there was no alternative. There was no other way to build a computer with enough compute power. Use of ICs to build a digital computers is one of the lasting legacies of Apollo. Apollo bought 60% of the total chip output at the time, a huge boost to a fledgling computer industry. But the hardware needed software. Software was not even in the original 10 page contract. In 1967 they were afraid they wouldn't meet the end of the decade deadline because software is so complicated to build. And nothing has changed since. Margaret Hamilton joined the project in 1964. There were no rules for software at the time. There was no field of software development. You could get hired just for knowing a computer language. So again, not much has changed. Nobody knew what software was. You couldn't describe what you did to family. Very unregimented, very free environment. Don Eyles wrote the landing software on the AGC (Apollo Guidance Computer). The AGC was one square foot in size, weighed 70 pounds, 55 watts, 76kb of memory in the form of 16 bit words, only 4k was RAM, the rest was hard wired memory. Once written a program was printed out on paper and then converted to punch cards, by people key punch operators, that could be read directly into main frame computers, which translated them onto the AGC. Over 100 people worked on it at the end. All the cards had to be integrated together and submitted in one big run that executed overnight. Then the simulation would be run the next day to see if the code was OK. This was your debug cycle. The key punch operators had to go around at night and beat up on the prima donna programmers, who always wanted more time or do something over, to submit their jobs. Again, not much has changed. The key punch operators would go back to the programmers when they notice syntax errors. If the code wasn't right the program wouldn't go. It used core rope memory. Software was woven into the cores. If a wire went through one of the donut shaped cores of magnetic material that was a 0, if it went around a core that represented a 1. Software was hardware that was literally sewn into the structure of the computer, manually, by textile workers, by hand. Rope memory was proven tech at the time. It was bullet proof. There was no equivalent bullet proof approach to software, which is why Hamilton invented software engineering. There were no tools for finding errors at the time. They tried to find a way to build software so errors would not happen. Wrong time, wrong priority, wrong data, interface errors were the big source of errors. Nobody knew how to control a system with software. They came up with a verb and noun system that looked like a big calculator with buttons. The buttons had to be big and clear so they could be punched with gloves and seen through a visor. Verb, what do you want to do? Noun, what do you want to do it to? It used a simple little key board. There were three digital read outs, no text, it was all just numbers, three sets of numbers. To start initiating lunar landing program you would press noun, 63, enter. To start the program in 15 seconds you enter verb, 15, enter. A clock would start counting down. At zero it would start program 63 which initiated a large breaking burn to slow you down so you start dropping down to the surface of the moon. The astronauts didn't fly, they controlled programs. They ended 200 meters from where they intended to land. Flying manually would have taken a lot more fuel. The computer was always on and in operation. It was a balance of control, a partnership. The intention at first was to create a fully automated system with two buttons: go to the moon; go home. They ended up with 500 buttons. Again, things don't change.

- Why Some Platforms Thrive and Others Don’t: Some digital networks are fragmented into local clusters of users. In Uber’s network, riders and drivers interact with network members outside their home cities only occasionally. But other digital networks are global; on Airbnb, visitors regularly connect with hosts around the world. Platforms on global networks are much less vulnerable to challenges, because it’s difficult for new rivals to enter a market on a global scale...As for Didi and Uber, our analysis doesn’t hold out much hope. Their networks consist of many highly local clusters. They both face rampant multi-homing, which may worsen as more rivals enter the markets. Network-bridging opportunities—their best hope—so far have had only limited success. They’ve been able to establish bridges just with other highly competitive businesses, like food delivery and snack vending. (In 2018 Uber struck a deal to place Cargo’s snack vending machines in its vehicles, for instance.) And the inevitable rise of self-driving taxis will probably make it challenging for Didi and Uber to sustain their market capitalization. Network properties are trumping platform scale.

- James Hamilton: Where Aurora took a different approach from that of common commercial and open source database management systems is in implementing log-only storage. Looking at contemporary database transaction systems, just about every system only does synchronous writes with an active transaction waiting when committing log records. The new or updated database pages might not be written for tens of seconds or even minutes after the transaction has committed. This has the wonderful characteristic that the only writes that block a transaction are sequential rather than random. This is generally a useful characteristic and is particularly important when logging to spinning media but it also supports an important optimization when operating under high load. If the log is completing an I/O while a new transaction is being committed, then the commit is deferred until the previous log I/O has completed and the next log I/O might carry out tens of completed transactions that had been waiting during the previous I/O. The busier the log gets, the more transactions that get committed in a single write. When the system is lightly loaded each log I/O commits a single transaction as quickly as possible. When the system is under heavy load, each commit takes out tens of transaction changes at a slight delay but at much higher I/O efficiency. Aurora takes a bit more radical approach where it simply only writes log records out and never writes out data pages synchronously or otherwise. Even more interesting, the log is remote and stored with 6-way redundancy using a 4/6 write quorum and a 3/6 read quorum. Further improving the durability of the transaction log, the log writes are done across 3 different Availability Zones (each are different data centers). In this approach Aurora can continue to read without problem if an entire data center goes down and, at the same time, another storage server fails.

- Videos from DSConf 2019 are now available.

- Given Microsoft's purchase of LinkedIn three years ago, that LinkedIn is moving cloud to Azure should not be a big surprise. Have to wonder if there will be an Azure tax? Moving over from your own datacenters will certainly chew up a lot of cycles that could have went in to product.

- Best name ever: A grimoire of functions.

- ML helping programmers is becoming a thing. A GPT-2 model trained on ~2 million files from GitHub. Autocompletion with deep learning: TabNine is an autocompleter that helps you write code faster. We’re adding a deep learning model which significantly improves suggestion quality.

- It's hard to get a grasp on how EventBridge will change architectures. This article on using it as a new webhook is at least concrete. Amazon EventBridge: The biggest thing since AWS Lambda itself. Though with webhooks I just enter a url in a field on a form and I start receiving events. This works for PayPal, Slack, chatbots, etc. What's the EventBridge equivalent? The whole how to hook things up isn't clear at all. Also, Why Amazon EventBridge will change the way you build serverless applications.

- Tired of pumping all your data into a lake? Mesh it. The eternal cycle of centralizing, distributing, and then centralizing continues. How to Move Beyond a Monolithic Data Lake to a Distributed Data Mesh: "In order to decentralize the monolithic data platform, we need to reverse how we think about data, it's locality and ownership. Instead of flowing the data from domains into a centrally owned data lake or platform, domains need to host and serve their domain datasets in an easily consumable way." There's also an interview at Straining Your Data Lake Through A Data Mesh - Episode 90.

- The problem of false knowledge. Exponential Wisdom Episode 74: The Future of Construction. In this podcast there's a segment that extols the wonders of visiting the Sistine Chapel through VR instead of visiting in-person. Is anyone worried about the problem of false knowledge? If I show you a picture of a chocolate bar do you know what chocolate tastes like? Constricting all experience through only our visual senses is a form of false knowledge. The Sistine Chapel evoked in me a visceral feeling of awe tinged with sadness. Would I feel that through VR? I don't think so. I walked the streets. Tasted the food. Met the people. Saw the city. All experiences that can't be shoved through our eyes.

- Good to see Steve Balmer hasn't changed. Players players players. Developers developers developers.

- Never thought of this downside of open source before. SECURITY NOW 724 HIDE YOUR RDP NOW!. Kazakhstan is telling citizens to install a root cert into their browser so they can perform man- in-the-middle attacks. An interesting question is how browser makers should respond. More interesting is what if Kazakhstan responds by making their own browser based on open source, compromising it, and requring its use? Black Mirror should get on this. Software around us appears real, but has actually been replaced by pod-progs. Also, Open Source Could Be a Casualty of the Trade War

- Darkweb Vendors and the Basic Opsec Mistakes They Keep Making. Don't use email addresses that link to other accounts. Don't use the same IDs across accounts. Don't ship from the same area. Don't do stuff yourself so you can be photographed. Don't model your product using your own hands. Don't cause anyone to die. Don't sell your accounts to others. Don't believe someone when they offer to launder your money.

- Though it's still Electron. When a rewrite isn’t: rebuilding Slack on the desktop: The first order of business was to create the modern codebase: All UI components had to be built with React; All data access had to assume a lazily loaded and incomplete data model; All code had to be “multi-workspace aware”. The key to our approach ended up being Redux. The key to its success is the incremental release strategy that we adopted early on in the project: as code was modernized and features were rebuilt, we released them to our customers.

- Re-Architecting the Video Gatekeeper: We [Netflix] decided to employ a total high-density near cache (i.e., Hollow) to eliminate our I/O bottlenecks. For each of our upstream systems, we would create a Hollow dataset which encompasses all of the data necessary for Gatekeeper to perform its evaluation. Each upstream system would now be responsible for keeping its cache updated. With this model, liveness evaluation is conceptually separated from the data retrieval from upstream systems. Instead of reacting to events, Gatekeeper would continuously process liveness for all assets in all videos across all countries in a repeating cycle. The cycle iterates over every video available at Netflix, calculating liveness details for each of them. At the end of each cycle, it produces a complete output (also a Hollow dataset) representing the liveness status details of all videos in all countries.

- Should you hire someone who has already done the job you need to do? Not necessarily. Business Lessons from How Marvel Makes Movies: Marvel does something that is very counterintuitive. Instead of hiring people that are going to be really good at directing blockbusters, they look for people that have done a really good job with medium-sized budgets, but developing very strong storylines and characters. So, generally speaking, what they do is they looked to other genres like Shakespeare or horror. You can have spy films, comedy films, buddy cop films and what they do is they say, if I brought this director into the Marvel universe, what could they do with our characters? How could they shake up our stories and kind of reinvigorate them and provide new energy and new life?

- What is a senior engineer? A historian. EliRivers: I work on some software of which the oldest parts of the source code date back to about 2009. Over the years, some very smart (some of them dangerously smart and woefully inexperienced, and clearly - not at all their fault - not properly mentored or supervised) people worked on it and left. What we have now is frequently a mystery. Simple changes are difficult, difficult changes verge on the impossible. Every new feature requires reverse-engineering of the existing code. Sometimes literally 95% of the time is spent reverse-engineering the existing code (no exaggeration - we measured it); changes can take literally 20 times as long as they should while we work out what the existing code does (and also, often not quite the same, what it's meant to do, which is sometimes simply impossible to ever know). Pieces are gradually being documented as we work out what they do, but layers of cruft from years gone by from people with deadlines to meet and no chance of understanding the existing code sit like landmines and sometimes like unbreakable bonds that can never be undone. In our estimates, every time we have to rely on existing functionality that should be rock solid reliable and completely understood yet that we have not yet had to fully reverse-engineer, we mark it "high risk, add a month". The time I found that someone had rewritten several pieces of the Qt libraries (without documenting what, or why) was devastating; it took away one of the cornerstones I'd been relying on, the one marked "at least I know I can trust the Qt libraries". It doesn't matter how smart we are, how skilled a coder we are, how genius our algorithms are; if we write something that can't be understood by the next person to read it, and isn't properly documented somewhere in some way that our fifth replacement can find easily five years later - if we write something of which even the purpose, let alone the implementation, will take someone weeks to reverse engineer - we're writing legacy code on day one and, while we may be skilled programmers, we're truly Godawful software engineers.

- You always learn something new when you listen to Martin Thompson. Protocols and Sympathy With Martin Thompson. He goes into the many implications of the Universal Scalability Law which covers what can be split up and shared whlle considering coherence costs, which is the time it takes parties working together to reach agreement. The mathematics for systems and the mathematics for people are all very similar because it's just a system. Doubling the size of system doesn't mean doubling the amount of work done. You have to ask if the workload is decomposable. The workload needs to decompose and be done in parallel, but not concurrently. Parallelism is doing multiple things at the same time. Concurrency is dealing with multiple things at the same time. Concurrency requires coordination. Adding slack to a system reduces response time because it reduces utilization. If we constantly break teams up and reform them we end up spending more time on achieving coherence. If your team has become more efficient and reaches agreement faster than you can do more things at the same time with less overhead. You get more throughput by maximizing parallelism and minimizing coherency. Slow down and think more. Also, Understanding the LMAX Disruptor.

- Excellent explanation. Distributed Locks are Dead; Long Live Distributed Locks! and Testing the CP Subsystem with Jepsen.

- Atlassian on Our not-so-magic journey scaling low latency, multi-region services on AWS. Do you have something like this: "a context service which needed to be called multiple times per user request, with incredibly low latency, and be globally distributed. Essentially, it would need to service tens of thousands of requests per second and be highly resilient." They were stuck with a previous sharding solution so couldn't make a complete break as they moved to AWS. The first cut was CQRS with DynamoDB, which worked well until higher load hits and DynamoDB had latency problems. They used SNS to invalidate node level caches. They replaced ELBs with ALBs which increased reliability but the p99 latency went from 10ms to 20ms. They went with Caffeine instead of Guava for their cache. They added a sidecar as a local proxy for a service. A sidecar is essentially just another containerised application that is run alongside the main application on the EC2 node. The benefit of using sidecars (as opposed to libraries) is that it’s technology agnostic. Latencies fell drastically.

- Nike on Moving Faster With AWS by Creating an Event Stream Database: we turned to the Kinesis Data Firehose service...a service called Athena that gives us the ability to perform SQL queries over partitioned data...how does our solution compare to more traditional architectures using RDS or Dynamo? Being able to ingest data and scale automatically via Firehose means our team doesn’t need to write or maintain pre-scaling code...Data storage costs on S3($0.023 per GB-month) are lower when compared to DynamoDB($0.25 per GB-month) and Aurora($0.10 per GB-month)...In a sample test, Athena delivered 5 million records in seconds, which we found difficult to achieve with DynamoDB...One limitation is that Firehose batches out data in windows of either data size or a time limit. This introduces a delay between when the data is ingested to when the data is discoverable by Athena...Queries to Athena are charged by the amount of data scanned, and if we scan the entire event stream frequently, we could rack up serious costs in our AWS bill.

- It's not easy to create a broadcast feed. Here's how Hoststar did it. Building Pubsub for 50M concurrent socket connections. They went through a lot of different options. They ended up using EMQX, client side load balancing, and multiple clusters with bridges connecting them and a reverse bridge. Each subscriber could support 250k clients. With 200 subscribe nodes, the system can support 50M connections and more. Also, Ingesting data at “Bharat” Scale.

- Making Containers More Isolated: An Overview of Sandboxed Container Technologies: We have looked at several solutions that tackle the current container technology’s weak isolation issue. IBM Nabla is a unikernel-based solution that packages applications into a specialized VM. Google gVisor is a merge of a specialized hypervisor and guest OS kernel that provides a secure interface between the applications and their host. Amazon Firecracker is a specialized hypervisor that provisions each guest OS a minimal set of hardware and kernel resources. OpenStack Kata is a highly optimized VM with built-in container engine that can run on hypervisors. It is difficult to say which one works best as they all have different pros and cons.

Soft Stuff

- Nodes: In Nodes you write programs by connecting “blocks” of code. Each node – as we refer to them – is a self contained piece of functionality like loading a file, rendering a 3D geometry or tracking the position of the mouse. The source code can be as big or as tiny as you like. We've seen some of ours ranging from 5 lines of code to the thousands. Conceptual/functional separation is usually more important.

- Picat: Picat is a simple, and yet powerful, logic-based multi-paradigm programming language aimed for general-purpose applications. Picat is a rule-based language, in which predicates, functions, and actors are defined with pattern-matching rules. Picat incorporates many declarative language features for better productivity of software development, including explicit non-determinism, explicit unification, functions, list comprehensions, constraints, and tabling. Picat also provides imperative language constructs, such as assignments and loops, for programming everyday things.

- When the aliens find the dead husk of our civilization the irony is what will remain of history are clay cuneiform tablets. Something comforting knowing what's oldest will last longest. Cracking Ancient Codes: Cuneiform Writing.

- donnaware/AGC: FPGA Based Apollo Guidance Computer.

Pub Stuff

- Unikernels: The Next Stage of Linux’s Dominance (overview): In this paper, we posit that an upstreamable unikernel target is achievable from the Linux kernel, and, through an early Linux unikernel prototype, demonstrate that some simple changes can bring dramatic performance advantages. rwmj: The entire point of this paper is not to start over from scratch, but to reuse existing software (Linux and memcached in this case), and fiddle with the linker command line and a little bit of glue to link them into a single binary. If you want to start over from scratch using a safe language then see MirageOS.

- Linux System Programming. rofo1: The book is solid. I mentally place it up there with "Advanced programming in the UNIX Environment" by Richard Stevens.

- Checking-in on network functions: we need beter approaches to VERIFY and INTERACT with network functions and packet processing program properties. here, we provide a HYBRID-APPROACH and implementation for GRADUALLY checking and validating the arbitrary logic and side effects by COMBINING design by contract, static assertions and type-checking, and code generation via macros all without PENALIZING programmers at development time

- Are We Really Making Much Progress? A Worrying Analysis of Recent Neural Recommendation Approaches: In this work, we report the results of a systematic analysis of algorithmic proposals for top-n recommendation tasks. Specifically, we considered 18 algorithms that were presented at top-level research conferences in the last years. Only 7 of them could be reproduced with reasonable effort. For these methods, it however turned out that 6 of them can often be outperformed with comparably simple heuristic methods, e.g., based on nearest-neighbor or graph-based techniques. The remaining one clearly outperformed the baselines but did not consistently outperform a well-tuned non-neural linear ranking method.

- DistME: A Fast and Elastic Distributed Matrix Computation Engine using GPUs: We implement a fast and elastic matrix computation engine called DistME by integrating CuboidMM with GPU acceleration on top of Apache Spark. Through extensive experiments, we have demonstrated that CuboidMM and DistME significantly outperform the state-of-the-art methods and systems, respectively, in terms of both performance and data size.

- PARTISAN: Scaling the Distributed Actor Runtime (github, video, twitter): We present the design of an alternative runtime system for improved scalability and reduced latency in actor applications called PARTISAN. PARTISAN provides higher scalability by allowing the application developer to specify the network overlay used at runtime without changing application semantics, thereby specializing the network communication patterns to the application. PARTISAN reduces message latency through a combination of three predominately automatic optimizations: parallelism, named channels, and affinitized scheduling. We implement a prototype of PARTISAN in Erlang and demonstrate that PARTISAN achieves up to an order of magnitude increase in the number of nodes the system can scale to through runtime overlay selection, up to a 38.07x increase in throughput, and up to a 13.5x reduction in latency over Distributed Erlang.

- BPF Performance Tools (book): This is the official site for the book BPF Performance Tools: Linux System and Application Observability, published by Addison Wesley (2019). This book can help you get the most out of your systems and applications, helping you improve performance, reduce costs, and solve software issues. Here I'll describe the book, link to related content, and list errata.