Stuff The Internet Says On Scalability For June 15th, 2018

Hey, it's HighScalability time:

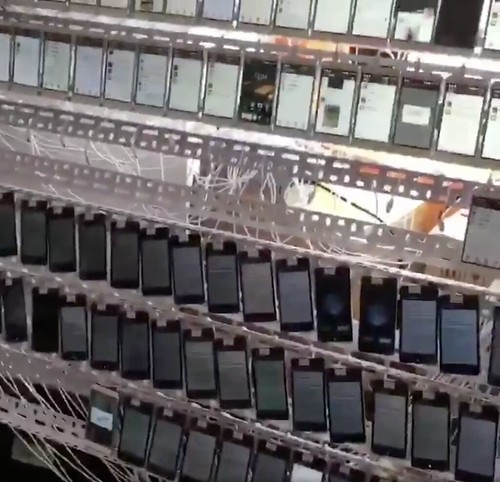

Scaling fake ratings: a 5-star, 10,000-phone Chinese click farm. (English Russia)

Do you like this sort of Stuff? Please lend me your support on Patreon. It would mean a great deal to me. And if you know anyone looking for a simple book that uses lots of pictures and lots of examples to explain the cloud, then please recommend my new book: Explain the Cloud Like I'm 10. They'll love you even more.

- 1.6x: better deep learning cluster scheduling on k8s; 100,000: videos in the Large-scale Diverse Driving Video Database; 3rd: Reddit's popularity ranking in the US; 50%: increase in Neural Information Processing Systems papers, AI bubble? 420 tons: leafy greens from robot farms; 75%: average unused storage on EBS volumes; 12TB: RAM on new Azure M-series VM; 10%: premium on Google's single-tenant nodes; $7.5B: Microsoft's cost of courting developers; 100th: flip-flop invention anniversary; 1 million: playlist dataset from Spotify; 38GB torrent: Stack Overflow public database; 85%: of teens use YouTube; 20-25%: cost savings using Aurora; 80%: of machine learning Ph.D.s work at Google or Facebook; 18: years of NASA satellite data; >1TB: Ethereum blockchain; 200,000 trillion: calculations per second by IBM's supercomputer.

- Quotable Quotes:

- Michael Pollan: “I have no doubt that all that Hubbard LSD all of us had taken had a big effect on the birth of Silicon Valley.”

- @hisham_hm: Strongly disagree. Most of us who started coding in the 80s started with BASIC. We turned out just fine. The first thing that your first language should teach you is the _joy of coding_.

- @bryanmikaelian: OH: GraphQL is SOAP for millennials

- @JoeEmison: Another thing that serverless architectures change: how you do software development. I find myself using a local dev environment to do infra config mgmt, but then often use the web consoles for writing functions and testing; so much faster that way.

- Jürgen Schmidhuber: And now we can see that all of civilisation is just a flash in world history. In just a flash, the guy who had the first agriculture was almost the same guy who had the first spacecraft in 1957. And soon we are going to have the first AIs that really deserve the name, the first true AIs.

- Dave Snowden~ A key principle of complex design is to shift a system to an adjacent possible. Once there's enough stability, more conventional approaches can be used. Architect for discovery before architecting for delivery. Starting with delivery misses the discovery phases, which misses opportunities as well as threats. Fractal engagement is the way to achieve change. People don't make decisions about what other people do. People make decisions about what they can do tomorrow within their own sphere of influence. The system as a whole orientates through multiple actions. You scale by decomposition and recombination, not through aggregation, and not by imitation.

- Alex Lindsay: When possibility is greater than circumstance you get action.

- Andrew Barron: These are all this family of traits that at one time were considered to be the thing that separated humans from all other animals, and then was slowly recognized to appear in primates and then large-brained mammals. And then suddenly we’re recognizing that something like a honey bee, with less than a million neurons, is able to do all of these things.

- John Hennessy & David Patterson~ We're entering a new golden age [in processors]. The end of Dennard Scaling and Moore's Law means architecture is where we have to innovate to improve performance, cost, and energy. Raising the level of abstraction using Domain Specific Languages makes it easier for programmers and architects to innovate. Domain Specific Architectures are getting 20x and 40x improvements, not just 5-10%.

- AI Jokester: What do you call a cat does it take to screw in a light bulb? They could worry the banana.

- Home Depot: So we put all over the store — all these shelves 20-foot high — we had these boxes and everybody thought, 'Oh my God! Look at all this merchandise!' But there was air in the boxes. There was no products. They just gave us empty boxes. And we struggled.

- @copyconstruct: The cost of maintenance of a system must scale sublinearly with the growth of the service. #velocityconf At Google, Ops work needs to be less than 50% of the total work done by SRE. Once you have an SLO that’s really not an SLO, since the users have come to expect better, you’re unable to take any risks. Systems that are *too* reliable can become problematic too. #VelocityConf Alerts need to be informed by both the SLO and the error budget. #velocityconf At Google, burning an error budget within 24 hours is worth an alert.

- John Lewis Gaddis: Hedgehogs, Berlin explained, “relate everything to a single central vision” through which “all that they say and do has significance.” Foxes, in contrast, “pursue many ends, often unrelated and even contradictory, connected, if at all, only in some de facto way.”

- Sargun Dhillon: If you’ve gotten this far, you’ll realize that [systems languages are] still terrible. If I want to implement anything at this layer of the system, my choices are largely still C and Go. I’m excited because a number of new participants have entered the ring. I’m unsure that I’m ever going to want to use Rust, unless they have a massive attitude adjustment. I’m excited to see Nim and Pony mature.

- Lone Ranger: the real value of OOP in the age of the internet is going to be massive amounts of data processing (exabytes) into elegant ontologies of knowledge. In other words: data itself is the gold we're looking for -- the fundamental value-unit.

- Facebook: Based on our testing in several different locations, we anticipate the SPLC system can reduce water usage by more than 20 percent for data centers in hot and humid climates and by almost 90 percent in cooler climates, in comparison with previous indirect cooling systems.

- @copyconstruct: The distributed real time telemetry challenge at NS1 - 5-700K data points per sec - avg of 200K data points per second (terabytes per day) - why an OpenTSDB approach failed (DDOS attack mitigation required more granular telemetry and per packet inspection) - why ELK made sense

- @Carnage4Life: There's a website that shows how much rented computing power it would take to attack a cryptocurrency network with a 51% attack and then double-spend cash on the network. Some of these numbers are scary low given the market cap of the coins in question

- Quirky: Outsiders are important to innovation; they often operate in fields where they are highly motivated to solve problems in which they are personally invested. They often look at problems in different ways from those who are well indoctrinated in the field, and they may question (or ignore) assumptions that specialists take for granted.

- @monadic: Only 320000 cores running 210 kubernetes clusters? Pshaw. Mind-blowing story from CERN at #KubeCon

- @asymco: Services now the second largest revenue segment. More than twice iPad and almost 60% more than Mac.

- @taotetek: The goal of an observability team is not to collect logs, metrics or traces. It is to build a culture of engineering based on facts and feedback, and then spread that culture within the broader organization.

- Paul Weinstein: While Microsoft’s acquisition of GitHub is major news, it is just another in a long line of illustrations of a basic truth about the primary value of most successful high-tech startups. Namely, building a self-sustaining business is the exception, not the rule. Strategic, not financial, value is what drives most successful outcomes. If you reorient your thinking around this thesis, making sense of the crazy world of Silicon Valley will be much easier.

- Rich Miller: Increases in rack density are being seen broadly, with 67 percent of data center operators seeing increasing densities, according to the recent State of the Data Center survey by AFCOM. With an average power density of about 7 kilowatts per rack, the report found that the vast majority of data centers have few problems managing their IT workloads with traditional air cooling methods.

- @tiffanycli: Reminder: When you give your DNA data to companies like http://Ancestry.com or 23andMe, you give up not only your own genetic privacy, but that of your entire family. (It’s in the terms & conditions.)

- StevenMercatante: I'll give you a real world example where I found [GraphQL] useful: I worked on a really large API that fed data to a bunch of clients. Many of the clients either needed more or less data than what was provided by the RESTful endpoints. This resulted in them either over-fetching data, or having to make extra calls to get all the data they needed (often times, both cases were true.) Deploying a GraphQL endpoint helped this by letting them ask for only the data they needed, without requiring us to write a whole bunch of custom endpoints. It helped them reduce code-complexity, and helped the server by reducing the amount of data it needed to send over the wire. GraphQL isn't a silver bullet - like any tech, it has its pros & cons. Plus, you can always support both GraphQL & RESTful endpoints for an application - it's not a "one or the other" situation.

- trout_*ucker: If you're talking frontend only, then "microsites" are the way to go, but how you cut them up will depend on your tech and what your app/site structure looks like. I've developed React apps where different site functions were treated like different apps and injected under a common header through code splitting. It can get tough to manage and really doesn't buy a whole lot. The most common and easiest way is to treat your main app like a portal for other applications. This way each app can have its own tech. Walmart.com does this. But each app needs to have its own self-contained functionality, because you will be switching the experience over to a new site. You can't have the user going from app1 to app2 and back to app1 constantly. This method relies on strict adherence to design standards and can mean a lot of reinventing the wheel if you don't have common UI component libraries, but it is by far the easiest thing to manage.

- Daniel: Who are you and what mental illnesses are you suffering from? AMD f*cked Intel up in 2017, are doing it again in 2018, and it seems like the only thing Intel has to offer against AMD for the future is a 28-core fantasy processor. Benchmarks are available. Pricing is available. Corporations are switching their servers over to Epyc. You're simply irrationally yelling from a point without facts - and AMD increased its market share by 40% in 2017 despite releasing a processor halfway through the year. That's *INCREDIBLE*. My computer is running Ryzen 1700+. My PC before that was running an i7 - I buy the best parts available and don't have brand loyalty.

- pnash: I worked at BBN from 1996 to 1999. BBN was an amazing place; if you had a question about a protocol, for example, you could track down one of the original authors of the RFC - sometimes they were located right down the hall. It always broke my heart when we were sold off to GTE to become GTE Internetworking. It eventually was bought by Verizon and spun off to become Genuity, which tanked. Level3 swooped in like vultures and picked over the remaining folks - you could keep your job if you moved to Denver or Atlanta from what I recall. Of the folks that moved, most of them were laid off in a few years. Sadly, BBN had ASN1, which Level3 scuttled in favor of their ASN - 3356.
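The @copyconstruct note that burning an error budget within 24 hours is worth an alert can be made concrete with burn-rate arithmetic, in the style Google's SRE Workbook describes. A minimal sketch, assuming a 30-day SLO window and made-up error ratios; real alerting setups usually combine several windows and thresholds.

```python
import math


def burn_rate(error_ratio: float, slo: float) -> float:
    """How many times faster than 'exactly on budget' the budget is being spent."""
    return error_ratio / (1.0 - slo)


def hours_to_exhaust(error_ratio: float, slo: float, window_days: float = 30) -> float:
    """Hours until the whole window's error budget is gone at the current ratio."""
    rate = burn_rate(error_ratio, slo)
    return math.inf if rate == 0 else window_days * 24 / rate


def should_alert(error_ratio: float, slo: float, window_days: float = 30,
                 horizon_hours: float = 24) -> bool:
    """Page if the budget would be fully burned within horizon_hours (assumed 24)."""
    return hours_to_exhaust(error_ratio, slo, window_days) <= horizon_hours


# Hypothetical: a 99.9% SLO currently failing 5% of requests.
hours_left = hours_to_exhaust(0.05, 0.999)  # roughly 14.4 hours
```

At a 5% error ratio against a 99.9% SLO the burn rate is about 50, so a 30-day budget is gone in roughly 14 hours, which is inside the 24-hour alerting horizon.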

- 88,000 tests. Nine drivers. Three cars. Twelve @Samsung Galaxy S9 phones. Two months of @saschasegan's life. FASTEST MOBILE NETWORKS 2018: Compared with 2017, we're seeing faster, more consistent LTE connections on all four major US wireless networks. Peak speeds have jumped from the 200Mbps range to the 300Mbps range, average download speeds have bumped up by 10Mbps or more, and latency has dropped by 10ms. Winners in order: Verizon, T-Mobile, AT&T, Sprint. T-Mobile's strength is in uploads, for the social-media, content-creator crowd. AT&T and Sprint have focused on download speeds, so they are best for content consumption, video streaming, and web browsing. If you're dissatisfied with your speeds and coverage, consider switching your phone before you switch your carrier.

- Great, your town got that new widget factory. Not so great: it only employs four people... to fix the robots. A giant new retail fulfillment center in China has only four employees.

- How do you perform a query that balances a two-sided market? Food Discovery with Uber Eats: Building a Query Understanding Engine: Once the ingest component of the offline pipeline transforms the data to fit our ontology, a set of classifiers are then run to de-duplicate the data and make cross-source connections in order to leverage the graph’s abstraction power...With an established graph, our next task involves leveraging it to optimize an eater’s search results. Offline, we can extensively annotate restaurants and menu items with very descriptive tags. Online, we can rewrite the eater’s query in real time to optimize the quality of the returned results. We combine both approaches to guarantee high accuracy and low latency...If it were handling a query for “udon,” the graph would use online query rewriting to expand the search to also include related terms such as “ramen,” “soba,” and “Japanese”; however, if restaurants are not properly tagged in our underlying database, it will be very difficult to ensure total recall of all relevant restaurants. This is where the offline tagging comes into play and surfaces restaurants that sell udon or udon-related dishes to the eater as quickly as possible.
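The two halves described in the Uber Eats excerpt, online query rewriting against a graph of related terms plus offline tags on restaurants, fit together roughly like this. A minimal sketch: the term graph, restaurant names, tags, and the "exact tag first" ranking rule are all hypothetical stand-ins, not Uber's engine.

```python
# Hypothetical toy data: related terms from a "food graph" and offline tags.
RELATED_TERMS = {
    "udon": {"ramen", "soba", "japanese"},
}

RESTAURANT_TAGS = {
    "Noodle House": {"udon", "japanese"},
    "Ramen Bar": {"ramen"},
    "Taco Stand": {"mexican", "tacos"},
    "Sushi Place": {"japanese", "sushi"},
}


def rewrite_query(query: str) -> set[str]:
    """Online step: expand the eater's query with related graph terms."""
    term = query.strip().lower()
    return {term} | RELATED_TERMS.get(term, set())


def search(query: str) -> list[str]:
    """Match the expanded query against offline-annotated restaurant tags.

    Restaurants tagged with the original term rank ahead of those that only
    match an expanded term; sorted() is stable, so ties keep insertion order.
    """
    original = query.strip().lower()
    expanded = rewrite_query(query)
    hits = [name for name, tags in RESTAURANT_TAGS.items() if tags & expanded]
    return sorted(hits, key=lambda name: original not in RESTAURANT_TAGS[name])
```

Without the offline tags, "Noodle House" would be invisible to an "udon" search no matter how good the rewriting is, which is the recall problem the excerpt describes.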

- OK, this is very slick and glossy. There's nothing much technical at all and they like to speak in high-level jargon, but if you have a lot of teams there's some good experience here. How we run bol.com with 60 autonomous teams. 1200 people. 350 engineers. 60 cross-functional teams. A Fleet consists of 4–5 closely related teams that share a common mission. A Space consists of several Fleets. They're data driven, using a process called CISL that favors creating minimum viable products as soon as possible and picking those that work. The process is Scrum, though not slavishly so. Teams are coordinated using a strategic plan for the year that can change and adapt as needed. Plans are pitched by individuals. People are not assigned to plans. People volunteer. If enough people volunteer it must be a good plan. Incidentally, this is how the Mongols worked. Also the Vikings. Warriors stayed with leaders who would bring in the booty. You Built It, You Run It, You Love It (YBIYRIYLI) is the policy for projects. Everything is automated. There's a common platform component. They use microservices, and each service is owned by a team.

- Videos from Uber's tech day are now available.

- FTL communication it's not. How fast is the Internet on the ISS? Clayton C. Anderson: I recently asked this question of one of my NASA “go-to guys,” Space Station Flight Director Ed Van Cise. Answer: The interface is still the same as you knew it - you're still interfacing with a computer at JSC and that computer is what's connecting to the Internet. Obviously the connection between that ground computer and the Internet is really quick, just like any other office PC. The bandwidth allocated for Crew Support LAN (CSL) isn't your supreme package from Xfinity but it's in the Mbps ballpark. That's not your bottleneck. The real slow-down is the interface between the onboard laptop and the ground computer, coupled with the transmission latency (500ms - 700ms). Compare that latency with a standard ping doing a 'speed test' on the ground, ~12-25 ms. It's those two factors (software providing the remote connection from laptop to desktop, and latency) that cause the slowdown. There's no real way to benchmark the speed but the PLUTOs (Mission Control Center flight controllers) think it's around 128 kbps - faster than a dialup connection (56 kbps) but way slower than home internet. Forget doing any streaming, etc. Also, BUILD YOUR OWN ARTHUR SATELLITE DISH FOR TRACKING THE ISS.
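A back-of-the-envelope model shows how the two quoted factors combine. It assumes the 500-700 ms figure is a round-trip time (600 ms used here) and takes the PLUTOs' ~128 kbps estimate as the effective bandwidth; the 100 KB page, the five round trips, and the ground-side link are hypothetical.

```python
def transfer_seconds(size_bytes: int, bandwidth_bps: float,
                     rtt_seconds: float, round_trips: int) -> float:
    """Crude model: time spent waiting on round trips plus time to push the bits."""
    latency_cost = round_trips * rtt_seconds
    serialization_cost = size_bytes * 8 / bandwidth_bps
    return latency_cost + serialization_cost


# Hypothetical 100 KB page needing 5 round trips.
iss = transfer_seconds(100_000, 128_000, 0.6, 5)          # 3.0 s waiting + 6.25 s of bits
ground = transfer_seconds(100_000, 100_000_000, 0.02, 5)  # hypothetical 100 Mbps, 20 ms link
```

The same page takes about 9.25 seconds on the station versus about a tenth of a second on the ground, and more chatty interactions (like a remote desktop session) multiply the latency term.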

- Etsy is moving to Google Cloud. An interesting part of the transition is that they made their Kubernetes clusters work on both Google Kubernetes Engine (GKE) and in their own data centers. Deploying to Google Kubernetes Engine. One big difference was their requirement that everything in GCP be provisioned via Terraform. Terraform is used to declare every possible aspect of their GCP infrastructure. The problem is Terraform can't do everything. Helm templates were used to create all of the common resources that were needed inside GKE. The result: a Jenkins deployment pipeline which can simultaneously deploy services to our on-premises Kubernetes cluster and to our new GKE cluster by associating a GCP service account with GKE RBAC roles.

- DRAM is getting more expensive. The dream? Use NVM as a complement. Does it work? Reducing DRAM footprint with NVM in Facebook. Yes, but it's not a drop-in replacement. Facebook did a lot of work to make it work. They did get results: We presented MyNVM, a system built on top of MyRocks, which utilizes NVM to significantly reduce the consumption of DRAM, while maintaining comparable latencies and QPS. We introduced several novel solutions to the challenges of incorporating NVM, including using small block sizes with partitioned index, aligning blocks with physical NVM pages, utilizing dictionary compression, leveraging admission control to the NVM, and reducing the interrupt latency overhead. Our results indicate that while it faces some unique challenges, such as bandwidth and endurance, NVM is a potentially lower-cost alternative to DRAM.

- Brendan Gregg says everyone using %CPU to measure performance is wrong. The kernel doesn't measure it very well. Thank you, Meltdown and Spectre.
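Gregg's broader argument, from his "CPU Utilization is Wrong" post, is that %CPU counts cycles stalled on memory as busy time, and that instructions per cycle (IPC), read from hardware counters with a tool such as `perf stat`, says more about what the CPU is actually doing. A minimal sketch of that interpretation; the counter values are invented and the 1.0 cutoff is his rough heuristic, not a hard rule.

```python
def ipc(instructions: int, cycles: int) -> float:
    """Instructions retired per CPU cycle, e.g. from hardware performance counters."""
    if cycles <= 0:
        raise ValueError("cycles must be positive")
    return instructions / cycles


def interpret(ipc_value: float) -> str:
    # Rough heuristic: below ~1.0 the "busy" CPU is probably waiting on memory;
    # above ~1.0 it is probably retiring instructions. Varies by processor.
    if ipc_value < 1.0:
        return "likely memory stalled"
    return "likely instruction bound"


# Hypothetical counter readings for a process that shows 100% CPU in top.
value = ipc(instructions=2_000_000_000, cycles=4_000_000_000)
```

Here the process looks pegged in `top`, but with an IPC of 0.5 much of that "utilization" is time spent stalled.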

- Watch out, Go, you have competition. Dropbox found Rust is more efficient than Go. Extending Magic Pocket Innovation with the first petabyte scale SMR drive deployment: We needed to overcome dealing with sequential writes of SMR disks, which we accomplished with a few key workarounds. For example, we use an SSD as a staging area for live writes, while flushing them to the disk in the background. Since the SSD has a limited write endurance, we leverage the memory as a staging area for background operations. Our implementation of the software prioritizes live, user-facing read/writes over background job read/writes. We improved performance by batching writes into larger chunks, which avoids flushing the writes too often. Moving from Go to Rust also allowed us to handle more disks, and larger disks, without increased CPU and memory costs by being able to directly control memory allocation and garbage collection.
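The staging-and-batching workaround can be illustrated with a toy model: small live writes land in a fast staging buffer, and a background job appends them to an append-only zone in large sequential chunks. Everything here (the class name, the chunk size, in-memory lists standing in for the SSD and the SMR disk) is hypothetical, it is not Dropbox's Rust code, and it omits the live-over-background prioritization.

```python
from dataclasses import dataclass, field


@dataclass
class StagedSequentialWriter:
    """Toy model: stage small live writes, flush them as large sequential chunks.

    `staging` stands in for the SSD staging area and `zone` for an append-only
    SMR zone; chunk_size is a hypothetical tuning knob.
    """
    chunk_size: int
    staging: list[bytes] = field(default_factory=list)
    staged_bytes: int = 0
    zone: list[bytes] = field(default_factory=list)

    def write(self, blob: bytes) -> None:
        # A live write is acknowledged once staged; the SMR zone is not touched.
        self.staging.append(blob)
        self.staged_bytes += len(blob)

    def background_flush(self, force: bool = False) -> int:
        """Append staged data to the zone in chunk_size pieces; return chunks written."""
        written = 0
        while self.staged_bytes >= self.chunk_size or (force and self.staged_bytes):
            buf = b"".join(self.staging)
            chunk, rest = buf[: self.chunk_size], buf[self.chunk_size:]
            self.zone.append(chunk)  # one large sequential append
            self.staging = [rest] if rest else []
            self.staged_bytes = len(rest)
            written += 1
        return written


w = StagedSequentialWriter(chunk_size=8)
for blob in (b"abc", b"defgh", b"ij"):
    w.write(blob)
```

Three small writes become one 8-byte sequential append on the first flush; the 2-byte leftover waits for more data unless a flush is forced.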

- We keep relearning the same lessons. MQTT vs REST from IoT Implementation perspective. It's more efficient to use an always-connected, bidirectional queue than to transfer data one request at a time.

- You go through all that effort to build a website, how do you get users to convert? Avinash has 6 nudges for you: show in-stock status; show how long the current price will last; display direct competitor comparisons like you'll save 34% over Costco; give delivery times based on geo/IP/mobile phone location; display social cues like x people have looked at this hotel today; include personalization prompts based on customer history. Pulling all of these off is not easy. You need a beefy back-end with a solid logistics system, machine learning component, and data gathering capability.

- Want a simple, comprehensible example of how an LSTM is implemented end-to-end in Python code? Minimal character-based LSTM implementation.
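For a feel of the forward pass, here is one LSTM time step in dependency-free Python, using the standard input, forget, and output gate equations and feeding characters as one-hot vectors. This is not the linked implementation: the weights are random and untrained, and the output softmax and training (backpropagation through time) are omitted.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Dense matrix-vector product on plain lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lstm_step(x, h, c, params):
    """One LSTM time step.

    params maps each gate name ("i", "f", "o", "g") to (W, U, b), where
    W is hidden x input, U is hidden x hidden, and b has one entry per unit.
    """
    def preact(gate):
        W, U, b = params[gate]
        return [wx + uh + bias for wx, uh, bias in zip(matvec(W, x), matvec(U, h), b)]

    i = [sigmoid(z) for z in preact("i")]    # input gate
    f = [sigmoid(z) for z in preact("f")]    # forget gate
    o = [sigmoid(z) for z in preact("o")]    # output gate
    g = [math.tanh(z) for z in preact("g")]  # candidate cell update
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def random_params(n_in: int, n_hidden: int, seed: int = 0):
    """Small random weights and zero biases (untrained, for illustration)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {gate: (mat(n_hidden, n_in), mat(n_hidden, n_hidden), [0.0] * n_hidden)
            for gate in "ifog"}


# Feed the characters of "hello" as one-hot vectors through the cell.
vocab = sorted(set("hello"))  # ['e', 'h', 'l', 'o']
params = random_params(len(vocab), n_hidden=3)
h, c = [0.0] * 3, [0.0] * 3
for ch in "hello":
    x = [1.0 if v == ch else 0.0 for v in vocab]
    h, c = lstm_step(x, h, c, params)
```

The cell state `c` carries information across steps through the forget gate, while `h` is a squashed, gated view of it; a full character model would map `h` to next-character probabilities.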

- Why the Future of Machine Learning is Tiny: I’m convinced that machine learning can run on tiny, low-power chips, and that this combination will solve a massive number of problems we have no solutions for right now...I spend a lot of time thinking about picojoules per op. This is a metric for how much energy a single arithmetic operation on a CPU consumes, and it’s useful because if I know how many operations a given neural network takes to run once, I can get a rough estimate for how much power it will consume. For example, the MobileNetV2 image classification network takes 22 million ops (each multiply-add is two ops) in its smallest configuration. If I know that a particular system takes 5 picojoules to execute a single op, then it will take (5 picojoules * 22,000,000) = 110 microjoules of energy to execute. If we’re analyzing one frame per second, then that’s only 110 microwatts, which a coin battery could sustain continuously for nearly a year. These numbers are well within what’s possible with DSPs available now, and I’m hopeful we’ll see the efficiency continue to increase. That means that the energy cost of running existing neural networks on current hardware is already well within the budget of an always-on battery-powered device, and it’s likely to improve even more as both neural network model architectures and hardware improve.
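The picojoules-per-op arithmetic can be checked directly. The 22 million ops, 5 pJ/op, and one frame per second come from the quote; the 225 mAh, 3 V coin cell is an assumption (typical of a CR2032), and the model ignores sensors, idle draw, and self-discharge.

```python
PICOJOULE = 1e-12
SECONDS_PER_DAY = 86_400


def energy_per_inference(ops: int, pj_per_op: float) -> float:
    """Joules for one run of a network that needs `ops` arithmetic operations."""
    return ops * pj_per_op * PICOJOULE


def average_power(joules_per_inference: float, inferences_per_second: float) -> float:
    """Watts = joules per inference x inferences per second."""
    return joules_per_inference * inferences_per_second


def battery_days(capacity_mah: float, volts: float, watts: float) -> float:
    """Days a battery lasts at a constant draw (ignores self-discharge, etc.)."""
    joules = capacity_mah / 1000 * volts * 3600  # mAh -> Ah -> Wh -> J
    return joules / watts / SECONDS_PER_DAY


e = energy_per_inference(22_000_000, 5)   # about 1.1e-4 J, i.e. 110 microjoules
p = average_power(e, 1)                   # about 1.1e-4 W, i.e. 110 microwatts
days = battery_days(225, 3.0, p)          # hypothetical 225 mAh, 3 V coin cell
```

With that assumed cell the answer is roughly 256 days, the same order as "nearly a year"; the result scales linearly with whatever battery capacity you plug in.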

- We keep relearning lessons for real-time systems too. Which one? Parallelism can actually slow you down. Is that contentious to say? Great detective story with lots of cool graphs, diagrams, and deep cut lessons learned. Go code refactoring : the 23x performance hunt: The optimal utilization of all the CPU cores consists in a few goroutines that each processes a fair amount of data, without any communication and synchronization until it’s done...Performance can be improved at many levels of abstraction, using different techniques, and the gains are multiplicative...Tune the high-level abstractions first: data structures, algorithms, proper decoupling. Tune the low-level abstractions later: I/O, batching, concurrency, stdlib usage, memory management...Big-O analysis is fundamental but often it’s not the relevant tool to make a given program run faster...Algorithms in Ω(n²) and above are usually expensive...Complexity in O(n) or O(n log n), or below, is usually fine...I/O is often a bottleneck: network requests, DB queries, filesystem...Regular expressions tend to be a more expensive solution than really needed...Memory allocation is more expensive than computations...An object in the stack is cheaper than an object in the heap...The hidden factors are not negligible! For example, all of the improvements in the article were achieved by lowering those factors, and not by changing the complexity class of the algorithm.
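The "a few goroutines that each process a fair amount of data, without communication until done" shape carries over to other languages. A minimal Python sketch using a made-up word-count job. Caveat: CPython's global interpreter lock means threads will not speed up this CPU-bound loop, so for real parallelism you would swap in `ProcessPoolExecutor` (same `map` interface); the point here is the structure, not the timing.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Optional


def count_words(lines: list[str]) -> dict[str, int]:
    """Worker job: builds a private result; no shared state, no locks."""
    counts: dict[str, int] = {}
    for line in lines:
        for word in line.split():
            counts[word] = counts.get(word, 0) + 1
    return counts


def parallel_word_count(lines: list[str], workers: Optional[int] = None) -> dict[str, int]:
    workers = workers or os.cpu_count() or 1
    # A few big contiguous chunks (one per worker) instead of one task per line.
    size = max(1, -(-len(lines) // workers))  # ceiling division
    chunks = [lines[i:i + size] for i in range(0, len(lines), size)]
    merged: dict[str, int] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # The only coordination: merging each worker's result once it is done.
        for partial in pool.map(count_words, chunks):
            for word, n in partial.items():
                merged[word] = merged.get(word, 0) + n
    return merged
```

Submitting one task per line instead would spend most of the time on scheduling and result handoff, which is the "parallelism can slow you down" trap.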

- A Neat Trick For Compressing Networked State Data. bjpbakker: tl;dr Send the full initial state, and then send deltas where each delta is the current state xor the previous state.
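The tl;dr translates almost line for line into code. A minimal sketch, assuming fixed-size serialized states and reliable, in-order delivery; the 64-byte state and the `zlib` comparison are illustrative choices, not from the article.

```python
import zlib


def xor_bytes(a: bytes, b: bytes) -> bytes:
    if len(a) != len(b):
        raise ValueError("this sketch assumes fixed-size serialized states")
    return bytes(x ^ y for x, y in zip(a, b))


def encode(states: list[bytes]) -> list[bytes]:
    """Sender: full first state, then current XOR previous for each update."""
    messages = states[:1]
    for prev, cur in zip(states, states[1:]):
        messages.append(xor_bytes(cur, prev))
    return messages


def decode(messages: list[bytes]) -> list[bytes]:
    """Receiver: XOR each delta onto the last reconstructed state."""
    states = messages[:1]
    for delta in messages[1:]:
        states.append(xor_bytes(delta, states[-1]))
    return states


# One byte of a 64-byte state changes between updates.
s0 = bytes(range(64))
s1 = s0[:10] + bytes([s0[10] ^ 0xFF]) + s0[11:]
msgs = encode([s0, s1])
sizes = (len(zlib.compress(s1)), len(zlib.compress(msgs[1])))  # full state vs delta
```

Unchanged bytes XOR to zero, so the delta is mostly zeros and a generic compressor shrinks it far more than the raw state.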

- When you move to the cloud does your team lose skills by using other people's services? Yes, but what that means is they're developing other skills. That's evolution, not deskilling. David Torgerson: After moving to Aurora, we don’t have to maintain that. We don’t have to worry about EBS volumes or pointing a replica back to a master’s replication point. We just don’t have to deal with that. Some of that knowledge and expertise is becoming a little lost among our developers who traditionally were very sharp when it came to database management. Again, Aurora makes things so easy that we’re getting a little stagnant in our skill set. That’s one of the drawbacks. But again, that’s probably also a pro for Aurora is you don’t have to know anything to use it.

- Algorithmic feeds are how you drive engagement at the expense of reach. Here's Instagram's non-technical explanation of their feed ranking criteria. Feed order is a function of Interest, Recency, Relationship, Frequency, Following, Usage. They say you can see everything, if you scroll down far enough. That's what they say.

- Why is Dropbox building a storage system when a bunch of NetApp EFs or EMC systems could be used? amwt: (Dropboxer heavily involved in these systems) This is one of the largest storage clusters in the world (exabytes), so you generally stitch together some custom distributed systems to manage this efficiently from a cost and operations perspective. Trying to have a vendor solve it for you with SANs or whatever would be... to put it mildly, prohibitively expensive. :-) You would definitely be their favorite customer, though! Just to give you a flavor of the kind of designs in these systems, typically you don’t even use machine-local RAID for these clusters because it’s too expensive and too limited. Instead you build in all of the replication and parity at a higher software layer, and that allows you to pursue extremely efficient storage overhead schemes and seamless failover at disk, machine, even rack level. There are some blog posts on our tech blog that go into the design of the system if you’re curious to know more.
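Putting parity in software rather than in machine-local RAID can be illustrated with the simplest erasure code: single XOR parity over blocks that would live on different disks or machines. A minimal sketch; the stripe contents and the 6+1 layout are hypothetical, and this is not Dropbox's actual scheme.

```python
def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks."""
    out = bytearray(len(blocks[0]))
    for block in blocks:
        if len(block) != len(out):
            raise ValueError("blocks must be the same length")
        for i, byte in enumerate(block):
            out[i] ^= byte
    return bytes(out)


def rebuild_missing(survivors: list[bytes], parity: bytes) -> bytes:
    """Recover the one lost data block: XOR of the survivors and the parity."""
    return xor_blocks(survivors + [parity])


def storage_overhead(data_blocks: int, redundant_blocks: int) -> float:
    """Raw bytes stored per byte of user data."""
    return (data_blocks + redundant_blocks) / data_blocks


# Hypothetical stripe: three data blocks on three different machines, plus parity.
data = [b"abcd", b"efgh", b"ijkl"]
parity = xor_blocks(data)
recovered = rebuild_missing([data[0], data[2]], parity)  # block 1's machine died
```

Three-way replication stores 3.0 bytes per user byte, while a 6+1 parity stripe stores about 1.17 but survives only one loss; production systems typically use erasure codes such as Reed-Solomon with several parity blocks spread across disks, machines, and racks.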

- How will cars talk to each other? 5G is an expensive option. Another is 802.11ax, also known as high efficiency wireless (HEW). Connecting Cars Reliably. It depends on what the control plane for the pan-car traffic OS will look like. Will it be centralized? Will the smarts be located at the edge and hop from fixed station to fixed station like cellular? Will the smarts be embedded in the car? Will cars coordinate peer-to-peer? If it's p2p then cars will need a way to communicate with each other. So why not Wi-Fi? Likely it will be some combo. Cars can coordinate locally, but system-wide packing algorithms will need a more global view to create optimal flows.

- Yes, people still build their own pub/sub. BrowserStack on Building A Pub/Sub Service In-House Using Node.js And Redis. 100M+ messages. Messages range from 2 bytes to 100+ MB. Runs on 6 t1.micro EC2 instances at a cost of about $70 per month. Why build instead of buy? Scales better for their needs; cheaper than outsourced services; full control over the overall architecture.
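At the core of any such service is channel-to-subscriber fan-out. A toy in-process sketch of that contract; a real deployment would put it behind network connections and a broker (Redis provides `PUBLISH`/`SUBSCRIBE` commands) and would need handling for slow consumers and large payloads, none of which is shown. The class and method names are hypothetical, not BrowserStack's.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, bytes], None]


class PubSub:
    """In-process channel -> subscribers fan-out (a toy, not a networked service)."""

    def __init__(self) -> None:
        self._subs: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Handler) -> Callable[[], None]:
        """Register a handler; returns a function that unsubscribes it."""
        self._subs[channel].append(handler)

        def unsubscribe() -> None:
            self._subs[channel].remove(handler)

        return unsubscribe

    def publish(self, channel: str, message: bytes) -> int:
        """Deliver to every current subscriber; return how many received it."""
        handlers = list(self._subs.get(channel, ()))  # copy so handlers may unsubscribe
        for handler in handlers:
            handler(channel, message)
        return len(handlers)
```

Returning the receiver count mirrors what Redis's `PUBLISH` reports, which is handy for noticing messages sent to channels nobody is listening on.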

- yandex/odyssey: Advanced multi-threaded PostgreSQL connection pooler and request router.

- improbable-eng/thanos: a set of components that can be composed into a highly available metric system with unlimited storage capacity. It can be added seamlessly on top of existing Prometheus deployments and leverages the Prometheus 2.0 storage format to cost-efficiently store historical metric data in any object storage while retaining fast query latencies.

- Snowflake to Avalanche: A Novel Metastable Consensus Protocol Family for Cryptocurrencies: This paper introduces a new family of consensus protocols, inspired by gossip algorithms: The system operates by repeatedly sampling the participants at random, and steering the correct nodes towards the same consensus outcome. The protocols do not use proof-of-work (PoW) yet achieve safety through an efficient metastable mechanism. So this family avoids the worst parts of traditional and Nakamoto consensus protocols. Similar to Nakamoto consensus, the protocols provide a probabilistic safety guarantee, using tunable security parameters to make the possibility of a consensus failure arbitrarily small. The protocols guarantee liveness only for virtuous transactions. Liveness for conflicting transactions issued by Byzantine clients is not guaranteed.
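The "repeatedly sample at random and steer toward one outcome" idea shows up clearly in a small simulation in the spirit of the paper's Snowflake protocol: each undecided node samples `k` peers, switches to any color held by at least `alpha` of them, and decides after `beta` consecutive samples agree with its color. This is a simplified, all-honest, round-based sketch with made-up parameters, not the paper's exact pseudocode, and it models no Byzantine nodes.

```python
import random


def snowflake(n: int = 100, k: int = 10, alpha: int = 8, beta: int = 12,
              max_rounds: int = 1_000, seed: int = 1) -> list:
    """Simulate Snowflake-style sampling among n honest nodes choosing R or B.

    Returns each node's decided color, or None if it never decided.
    """
    if not k // 2 < alpha <= k:
        raise ValueError("alpha must be a strict majority of k")
    rng = random.Random(seed)
    color = [rng.choice("RB") for _ in range(n)]  # random initial preferences
    streak = [0] * n                              # consecutive agreeing samples
    decided = [None] * n
    for _ in range(max_rounds):
        if all(d is not None for d in decided):
            break
        for u in range(n):
            if decided[u] is not None:
                continue  # decided nodes stop sampling but still answer queries
            peers = rng.sample([v for v in range(n) if v != u], k)
            reds = sum(1 for v in peers if color[v] == "R")
            if reds >= alpha:
                winner = "R"
            elif k - reds >= alpha:
                winner = "B"
            else:
                winner = None
            if winner is None:
                streak[u] = 0                    # no alpha-majority: streak broken
            elif winner != color[u]:
                color[u], streak[u] = winner, 0  # adopt the sampled majority
            else:
                streak[u] += 1
                if streak[u] >= beta:
                    decided[u] = color[u]        # accept after beta in a row
    return decided


result = snowflake()
```

Starting from a roughly even split, small random imbalances get amplified until one color dominates; because deciding requires `beta` consecutive strong majorities, a node almost never decides while the network is still split.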

- Faster: A Concurrent Key-Value Store with In-Place Updates: This paper presents Faster, a new key-value store for point read, blind update, and read-modify-write operations. Faster combines a highly cache-optimized concurrent hash index with a hybrid log: a concurrent log-structured record store that spans main memory and storage, while supporting fast in-place updates of the hot set in memory. Experiments show that Faster achieves orders-of-magnitude better throughput – up to 160M operations per second on a single machine – than alternative systems deployed widely today, and exceeds the performance of pure in-memory data structures when the workload fits in memory.
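The hybrid-log idea, in-place updates for recent records and copy-to-tail for older ones, can be shown with a single-threaded toy: a hash index points into a log whose newest `mutable_records` entries are updated in place, while updates to older, read-only entries append a fresh copy at the tail. The class and the `mutable_records` knob are hypothetical; real Faster is concurrent, uses epoch protection, and spills the read-only region to storage.

```python
class HybridLog:
    """Toy hash index over a log with an in-place-updatable mutable tail."""

    def __init__(self, mutable_records: int) -> None:
        self.log: list[list] = []        # each record is [key, value]
        self.index: dict[str, int] = {}  # key -> log address of latest record
        self.mutable_records = mutable_records

    @property
    def read_only_offset(self) -> int:
        """Addresses below this are read-only; at or above it they are mutable."""
        return max(0, len(self.log) - self.mutable_records)

    def read(self, key: str):
        addr = self.index.get(key)
        return None if addr is None else self.log[addr][1]

    def upsert(self, key: str, value) -> str:
        addr = self.index.get(key)
        if addr is not None and addr >= self.read_only_offset:
            self.log[addr][1] = value    # hot record: update in place
            return "in-place"
        self.log.append([key, value])    # cold or new: append a copy at the tail
        self.index[key] = len(self.log) - 1
        return "appended"

    def rmw(self, key: str, fn, initial) -> str:
        """Read-modify-write, e.g. incrementing a counter."""
        current = self.read(key)
        return self.upsert(key, fn(initial if current is None else current))
```

Hot keys that are updated repeatedly stay in the mutable tail and never grow the log, which is where the in-memory speed comes from; cold keys pay one append when they heat up again.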

- A Culture of Innovation: Insider Accounts of Computing and Life at BBN, A Sixty Year Report: In establishing BBN, the founders deliberately created an environment in which engineering creativity could flourish. The author describes steps taken to assure such an environment and a number of events that moved the company into the fledgling field of computing.