Stuff The Internet Says On Scalability For June 25th, 2021

Hey, it's HighScalability time!

Only listen if you want a quantum earworm for the rest of the day.

Not your style? This is completely different. No, it’s even more different than that.

Today in things that nobody stopped me from doing:

The AWS Elastic Load Balancer Yodel Rag. pic.twitter.com/ocyVLf8WlU (May 28, 2021)

Love this Stuff? I need your support on Patreon to help keep this stuff going.

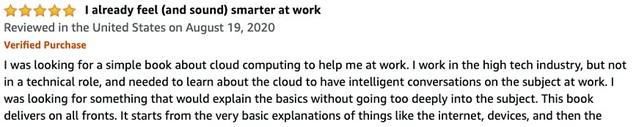

Do employees at your company need to know about the cloud? My book will teach them all they need to know. Explain the Cloud Like I'm 10. On Amazon it has 307 mostly 5-star reviews. Here's a 100% keto paleo low carb carnivore review:

Number Stuff:

- 60%: of people abandoned an app idea because they were concerned Apple wouldn’t approve it. 60% have held back features for the same reason. 69% say Apple is limiting innovation with their App Store policies.

- 130 million: connections in brain map featuring 50,000 cells based on a dataset of 1.4 petabytes. A mouse brain is only 1000 times bigger—an exabyte instead of a petabyte. A Browsable Petascale Reconstruction of the Human Cortex.

- 60x: more power than predicted was used by Atlas robot. Animals FTW!

- 1.8 EFLOPS: Tesla’s supercomputer that they’ll use to save a few bucks on sensors. More details in CVPR 2021 Workshop on Autonomous Vehicles.

- 15–20%: COVID-driven surge in internet traffic during the fall of 2020.

- 80%: of orgs that paid the ransom were hit again.

- 40%: productivity increase at Microsoft Japan from moving to a 4-day work week, reducing meetings to 30 minutes, and capping meetings at a max of 5 people. This is from 2019, but seems relevant post-COVID.

- 2.8 billion: API requests processed per day by GitHub. Peak 55k rps. 2 billion git operations per day on average. ~800M background jobs per day.

- 1 petabyte: per week data transfer between national labs.

- 1 billion: sparrows in the world.

- 90: seconds to build LLVM on top of Lambda. It took 2 minutes and 37 seconds to build on a 160-core ARM machine.

- 1 exabyte: data stored by LinkedIn across all Hadoop clusters.

- #1: Oracle is the most popular database and has been since 2006. MySQL is number 2.

- $5K: for a Chip Scale Atomic Clock the size of a grain of sand.

- 19 mph: speed at which seeds disperse from a plant.

- 80 million: IOPS on a standard 2U Intel® Xeon system using NVM Express SSDs.

- $55 million: cost for a seat on SpaceX.

- 28 billion: photos stored on Google Photos each week.

- 80,000: iterations needed to create the NFL’s schedule.

- 268: books sold more than 100,000 copies. That’s out of 2.6 million books sold online in 2020.

- 400 million: API calls EVERY SECOND handled by @AWSIdentity

- 26: new writing systems invented in West Africa.
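The GitHub figures in the list above mix a daily total with a peak rate. A quick back-of-the-envelope conversion, assuming the 2.8 billion daily API requests were spread evenly over a full 86,400-second day, shows the average request rate and how far the stated 55k rps peak sits above it:

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

def average_rps(requests_per_day: int) -> float:
    """Average requests per second if a day's traffic were perfectly flat."""
    return requests_per_day / SECONDS_PER_DAY

github_api_per_day = 2_800_000_000  # 2.8 billion API requests/day, from the list above
peak_rps = 55_000                   # stated peak

avg = average_rps(github_api_per_day)
print(f"average ~{avg:,.0f} rps; peak is ~{peak_rps / avg:.1f}x the average")
# → average ~32,407 rps; peak is ~1.7x the average
```

Capacity has to be sized for the peak, not the average, which is why daily totals alone understate what the infrastructure must handle.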

Quotable Stuff:

- nicoff: Genie: I’ll give you one billion dollars if you can spend 100M in a month. There are 3 rules: No gifting, no gambling, no throwing it away. SRE: Can I use AWS? Genie: There are 4 rules

- artichokeheart: It reminds me of that ancient joke: A QA engineer walks into a bar. He orders a beer. Orders 0 beers. Orders 99999999999 beers. Orders a lizard. Orders -1 beers. Orders a ueicbksjdhd. First real customer walks in and asks where the bathroom is. The bar bursts into flames, killing everyone.

- @drbarnard: Over dinner I told my wife one of my tweets ended up in a court case against Apple. Her first reaction, without even knowing what it said: “Is Apple going to retaliate?!” So yeah, for almost 13 years of making a living on the App Store, we’ve lived in fear of Apple.

- spamizbad: Innovation is the product manager coming to your desk and saying "Hey, [competitor] has feature X. How long would it take for us to build something like that?"

- @jamie_maguire1: As I look back on 20 years in tech, I should have moved every 2-4 years. That said, staying a bit longer meant I could see implementations go from inception right through to delivery into prod and gain the experience.

- Theo Schlossnagle: the leading reason we decided to create our own database had to do with simple economics. Basically, in the end, you can work around any problem but money. It seemed that by restricting the problem space, we could have a cheaper, faster solution that would end up being more maintainable over time.

- Giancarlo Rinaldi~ Lewis Fry Richardson derived many of the complex equations needed for weather prediction in the 1920s. However, the math was so difficult that to predict the weather six hours in advance, it took him six weeks to do the calculations.

- Rick Houlihan~ the cost of S3 is in the PUTs and GETs. If you're doing high-velocity PUTs and GETs on S3, make sure they are on large objects; otherwise, bundle smaller objects into larger objects to control costs.

- Agentlien: Near the beginning they talk about how targeting the PlayStation 5, which has an SSD, drastically changed how they went about making the game. In short, the quick data transfer meant they were CPU bound rather than disk bound and could afford to have a lot of uncompressed data streamed directly into memory with no extra processing before use.

- string: My primary use has been for serving image assets, switched over from Cloudfront and have seen probably a >80% cost reduction, and no noticeable performance reduction, but as I mentioned I'm operating at a scale where milliseconds of difference don't mean much.

- manigandham: All this focus on redundancy should be replaced with a focus on recovery. Perfect availability is already impossible. For all practical uses, something that recovers within minutes is better than trying to always be online and failing horribly.

- Mars Helicopter: On the sixth flight, 54 seconds into the flight, a single image did not arrive to be processed. Landmarks were in the wrong place from where they were predicted, and the helicopter adjusted speed and tilt to compensate. This was the reason for the constant oscillation, as every following image bore the wrong timestamp and showed the “wrong” landmarks for the calculated position.

- Riccardo Mori: Going through Big Sur’s user interface with a fine-tooth comb reveals arbitrary design decisions that prioritise looks over function, and therefore reflect an un-learning of tried-and-true user interface and usability mechanics that used to make for a seamless, thoughtful, enjoyable Mac experience.

- VMO: Risk is the amount of loss x the probability of that loss. The reason cyber risk is treated as high risk is that it has higher losses and the probability of occurrence is also really high. This is causing a lot of stress on insurers' profitability, as the premiums they earn are not sufficient to cover all payouts. A mere 5 payouts can wash out the entire annual premium earned from 250 companies that have at least $200MM in insurance. This is leading to a different dynamic in the industry.

- balabaster: A savvy engineer, like a spy, knows how to gain the confidence of the CEO long before they put a plan of "Gee, I think you should do this" in the CEO's ear. I've spent months ingratiating myself with the political circle before someone says "Hey Ben, what do you think?" and from there, it's game on.

- enesakar: Redis REST adds less than a millisecond of overhead compared to Redis TCP. But when edge caching is enabled, reads become much faster, as expected. Rough numbers we have seen: REST ~0.70 ms; REST (edge cached) ~0.12 ms.

- dkubb: I think the next generation of ORMs will be built on top of this approach. Being able to query and receive an entire object graph in a single round trip has changed how we develop apps at my company.

- Michelle Hampson: His team tested the new software in a series of simulations. “The results revealed that logic circuits could be flawlessly designed, simulated, and tested," says Marks, noting that they were able to use DNAr-Logic to design some synthetic biological circuits capable of generating up to 600 different reactions.

- @kellabyte: So basically it’s cheaper AND faster to store non-indexed data on S3 and pay for ridiculously cheap compute to buy enough machines to process it in parallel at blazing speeds? And just forget about optimized data structures and local SSDs?

- @johncutlefish: "Let’s start with a basic truth: Great products and services do NOT mainly come from hours worked. The real drivers are things like creativity, collaboration and good decision making." @IamHenrikM

- @LiveOverflow: 95% of all vulnerabilities can easily be found by scanners or inexperienced penetration testers. Skilled hackers probably cost 10x-100x and only get you another 2-3%. Nobody can guarantee 100%, so do not waste money on higher quality services #cisotips

- @matthlerner: Eventually, I discovered Jobs To Be Done. And here’s how I use it specifically for marketing. First, I interview people who recently signed up for my service or a competitor and ask questions like: 1. What were you hoping to do? 2. Why is that important to you? 3. Where did you look? 4. What else did you try?

- DSHR: In other words it is technically and economically infeasible to implement tests for both storage systems and CPUs that assure errors will not occur in at-scale use. Even if hardware vendors can be persuaded to devote more resources to reliability, that will not remove the need for software to be appropriately skeptical of the data that cores are returning as the result of computation, just as the software needs to be skeptical of the data returned by storage devices and network interfaces.

- staticassertion: The #1 assumption I always make sure to check now is "is the code that's running actually from the source code I'm looking at?" So many "impossible" bugs I run into are in fact impossible... in the codebase I'm looking at. And it turns out what's deployed is some other code where it is very much possible.

- @emileifrem: In my NODES keynote today, we ran a live demo of a social app with more people nodes than FB (!), backed by a trillion+ relationship graph sharded across more than 1,000 servers, executing deep, complex graph queries that return in <20 ms.

- @uhoelzle: Very excited about the new Tau VMs on GCP: 56% faster than Graviton2. 42% better price/performance. That's "leapfrog", not "better".

- @gurlcode: Raise your hand if you’ve taken down prod before 🙋‍♀️

- smueller1234: The larger your infrastructure, the smaller the relative efficiency win that's worth pursuing (duh, I know, engineering time costs the same, but the absolute savings numbers from relative wins go up). That's why an approach along the lines of "redundancy at all levels" (raid + x-machine replication + x-geo replication etc) starts becoming increasingly worth streamlining.

- Katy Milkman: My colleagues argue that their study highlights a common mistake companies make with gamification: Gamification is unhelpful and can even be harmful if people feel that their employer is forcing them to participate in “mandatory fun.” Another issue is that if a game is a dud, it doesn’t do anyone any good. Gamification can be a miraculous way to boost engagement with monotonous tasks at work and beyond, or an over-hyped strategy doomed to fail. What matters most is how the people playing the game feel about it.

- Robin George Andrews: Altogether, these simulations hint that magnetism may be partly responsible for the abundance of intermediate-mass exoplanets out there, whether they are smaller Neptunes or larger Earths.

- Nicole Hemsoth: The pair see the next major challenges and opportunities in the I/O realm revolving around computational storage, which they’ve laid the groundwork for in the new, fully refactored 2.0 spec, even if there is not enough common ground to directly build into the NVMe standard just yet.

- murat: Maybe [fail-silent Corrupt Execution Errors (CEEs) in CPU/cores] will lead to abandonment of complex deep-optimizing chipsets like Intel chipsets, and make simpler chipsets, like ARM chipsets, more popular for datacenter deployments. AWS has started using ARM-based Graviton cores due to their energy-efficiency and cost benefits, and avoiding CEEs could give a boost to this trend.

- James Hunter: “No,” the chief replied sternly. “Not more important. Just different. As a leader, you must learn that every part is equally important, and every job must be done just so, or things fall apart.”

- Jill Bolte Taylor: So, from a purely biological perspective, we humans are feeling creatures who think, rather than thinking creatures who feel. Neuroanatomically you and I are programmed to feel our emotions, and any attempt we may make to bypass or ignore what we are feeling may have the power to derail our mental health at this most fundamental level.

- Aleron Kong: That was a lesson he’d learned from the internet. As a compendium of all human knowledge, it should have made everyone smarter. Instead, it just gave a pulpit to every idiot who otherwise could have been ignored. The resulting noise was so loud it drowned out logic and good sense.

- Robert M. Sapolsky: The cortex and limbic system are not separate, as scads of axonal projections course between the two. Crucially, those projections are bidirectional—the limbic system talks to the cortex, rather than merely being reined in by it. The false dichotomy between thought and feeling is presented in the classic Descartes’ Error, by the neurologist Antonio Damasio of the University of Southern California

- Geoff Huston: We appear to be seeing a resurgence of expressions of national strategic interest. The borderless open Internet is now no longer a feature but a threat vector to such expressions of national interest. We are seeing a rise in the redefining of the Internet as a set of threats, in terms of insecurity and cyber-attacks, in terms of work force dislocation, in terms of expatriation of wealth to these stateless cyber giants, and many similar expressions of unease and impotence from national communities. It seems to be a disillusioning moment where we’ve had a brief glimpse of what will happen when we bind our world together in a frictionless highly capable universally accessible common digital infrastructure and we are not exactly sure if we really liked what we saw! The result appears to be that this Internet that we’ve built looks like a mixed blessing that can be both incredibly personally empowering and menacingly threatening at the same time!

- Tim Bray: In my years at Google and AWS, we had outages and failures, but very very few of them were due to anything as simple as a software bug. Botched deployments, throttling misconfigurations, cert problems (OMG cert problems), DNS hiccups, an intern doing a load test with a Python script, malfunctioning canaries, there are lots of branches in that trail of tears. But usually not just a bug. I can’t remember when precisely I became infected, but I can testify: Once you are, you’re never going to be comfortable in the presence of untested code.

- cjg: We are using Rust for backend web development and other things. For us, the safety is the critical reason to choose Rust - particularly the thread-safety. Also the relatively small memory footprint compared to something like Java. Performance hasn't driven our decision at all - the number of requests per second is very low. It's correctness that matters.

- @jordannovet: new: YouTube, the second largest internet site, is moving parts of its service from Google's internal infrastructure to the Google public cloud that external customers use. also, Google Workspace is now using Google public cloud

- Ivan: Smaller containers don't just mean faster builds and smaller disk and networking utilization, they mean a safer life.

- Surjan Singh: I think of the factor of safety as a modern-day version of the libation or offering. I’d rather keep pouring out the same amount of wine as my ancestors rather than skimping and risking offending the gods. That $1.5 billion price tag, which seemed absurd when I began writing this, now just looks like the cost of being human.

- Sanjay Mehrotra: Micron has now determined that there is insufficient market validation to justify the ongoing high levels of investments required to successfully commercialise 3D XPoint at scale to address the evolving memory and storage needs of its customers.

- @saranormous: the number of companies out there that won't pay $20K a year for off-the-shelf SaaS, but then spend unlimited engineering effort building internal tooling themselves and then complain they don't have enough engineering capacity

- @JannikWempe: DynamoDB tip: prefer KSUIDs over something like UUIDv4. KSUIDs include the current time and are sortable (and even more unique than UUIDv4). You can get an access pattern (sorted in chronological order) for free.

- boulos: Disclosure: I used to work on Google Cloud. Perlmutter seems like an awesome system. But, I think the “AI exaflops” is a “X GPUs times the NVIDIA peak rate”. The new sparsity features on A100 are promising, but haven’t been demonstrated to be nearly as awesome in practice (yet). It also all comes down to workloads: large-scale distributed training is a funny workload! It’s not like LINPACK. If you make your model compute intensive enough, then the networking need mostly becomes bandwidth (for which multi-hundred Gbps worth of NICs is handy) but even without it there are lots of ways to max out your compute. Similarly, storage is a serious need for say giant video corpora, but not for things like text! GPT-2 had like a 40 GiB corpus.

- hocuspocus: my employer is becoming an AWS partner and we'll contractually need to spend a lot of money, meaning it's better if we use their SaaS offerings directly rather than going through the marketplace or a 3rd party.

- Scott Aaronson: when information from the state (say, whether a qubit is 0 or 1, or which path a photon takes through a beamsplitter network) “leaks out” into the environment, the information effectively becomes entangled with the environment, which damages the state in a measurable way. Indeed, it now appears to an observer as a mixed state rather than a pure state, so that interference between the different components can no longer happen. This is a fundamental, justly-famous feature of quantum information that’s not shared by classical information.

- @tlipcon: One of the coolest things about Spanner is that you can scale more or less indefinitely with strong semantics. But previously the scaling was only in the up direction, and the price of entry was prohibitive for small use cases. No more!

- Orbital Index: A protoplanet lovingly named Theia is thought to have slammed into the early Earth 4.5 billion years ago, dislodging enough combined material to form the Moon and leaving Earth with a 23-degree tilt. A new paper (pdf) links this event to two continent-sized higher density regions of the Earth’s mantle that underlie Africa and the Pacific Ocean.

- @Nick_Craver: If you don't have worker space to complete that operation: you're using memory for the file buffer, things like a socket, if it's off-box, etc. until the completion can happen. If other tasks queued up are waiting on those resources: uh oh, deadlock city. That's no good! Let me give an example: When we had a load balancer bug at Stack Overflow assuming web servers were *up* going into rotation, and not *ready to be health checked*, it'd slam the app with 300-500 req/sec instantly. This caused our initial connection to Redis to take 2 minutes.

- @lil_iykay: Messed with a varying combination of stacks over the years. Here's what seems to be working super well for me. Context: building a FinTech mobile app that would be used across the country. Being FinTech, the must-haves for me are security, easy-to-rollback deployments (with < 1000ms downtime across all stacks in the worst-case scenario), and real-time updates visible to users. The mobile app connects over a bidirectional gRPC stream to the serverless gateway, which is several instances of Docker containers running on @flydotio. They leverage Firecracker VMs to deploy your containers to edge servers closest to your users that respond elastically to traffic 🔥. The database (data API, I should say) is @fauna, a truly excellent serverless semi-structured NoSQL database that doesn't depend on syncing clocks for transactions and data consistency. I'm currently using their streaming API on the JVM client for real-time data updates. For syncing and setting up hooks for communicating with our Banking Services platform (http://silamoney.com), I use @Cloudflare Workers: serverless functions that aren't isolated at the container level like Docker, but by Chrome V8 isolates. This gets rid of cold starts and the need to keep your functions "warm" (which isn't even deterministic at this scale), and they are super lightweight and orders of magnitude cheaper than AWS Lambda, Google Cloud Functions, etc. Oh, and the KV service from Cloudflare just comes in as a global cache for basically nothing. This stack makes me smile daily 😜 Happy to learn more on this journey.

- khanacademy: Yep, Go is more verbose in general than Python…But we like it! It’s fast, the tooling is solid, and it runs well in production

- @GergelyOrosz: Coinbase rewrote both their Android and iOS apps in React Native, and are happy with the end result. My takeaways from their writeup (thread) 1. It's hard to hire lots of good native mobile engineers. Heck, this is what was probably a major trigger for their whole transition.

- @dhh: It’s safe to say that Shopify is pushing the absolute frontier of what’s possible with Rails and Ruby. Their main majestic monolith is a staggering 2.8m lines of Ruby responsible for processing over $100m in sales per hour at peak 🤯. And they’re doing it running the latest Rails.

- Charles R. Martin: Flight software for rockets at SpaceX is structured around the concept of a control cycle. “You read all of your inputs: sensors that we read in through an ADC, packets from the network, data from an IMU, updates from a star tracker or guidance sensor, commands from the ground,” explains Gerding. “You do some processing of those to determine your state, like where you are in the world or the status of the life support system. That determines your outputs – you write those, wait until the next tick of the clock, and then do the whole thing over again.”

- @jrhunt: Summary: Functions are for quick request/response rewrites (< 1ms runtime and 2MB memory) Pricing: $0.10 per 1 million invocations ($0.0000001 per request) Lambda@Edge still exists for longer lived (> 1ms) functions that run at regional cache nodes.

- throwdbaaway: In the worst case, there can be a 20% variance on GCP. On Azure, the variance between identical instances is not as bad, perhaps about 10%, but there is an extra 5% variance between normal hours and off-peak hours, which further complicates things.

- Cal Newport: This way of collaborating, this hyperactive hive mind, took over much of knowledge work. Now my argument is, once you are collaborating using the hyperactive hive mind, any non-trivial amount of deep work becomes almost impossible to accomplish.

- Mikael Ronstrom: The results show clearly that AWS has the best latency numbers at low to modest loads. At high loads GCP gives the best results. Azure has similar latency to GCP, but doesn’t provide the same benefits at higher loads. These results are in line with similar benchmark reports comparing AWS, Azure and GCP.

- Michael Drogalis: Databases and stream processing will increasingly become two sides of the same coin. At this point in engineering history, the metal of those technologies is still melding together. But if it happens, 10 years from now the definition of a database will have expanded, and stream processing will be a natural part of it.

- papito: No, Stack Overflow's architecture is still a simple server farm with aggressively maintained code and a lite SQL ORM that lets them highly optimize queries. One of the architects talked about it on their pod a few weeks ago. She mentioned explicitly how they don't jump on new hotness and keep it classic, maintainable, and highly performant without spending tens of millions of dollars on FAANG alumni who seem to think that every shop needs a Google infrastructure because THIS IS THE WAY.

- rualca: it seems you're missing the forest for the trees. They put in the work to refactor their services so that they could be treated as cattle and also autoscale, and the author stated that after doing his homework he determined that for his company Kubernetes offered the most benefits. There isn't much to read from this.
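Rick Houlihan's S3 tip in the quotes above is about request counts: S3 bills per PUT/GET request as well as per byte stored, so a thousand tiny objects cost a thousand PUTs, while one bundle costs one. A minimal sketch of the bundling side, using Python's standard `tarfile` module to pack many small in-memory objects into a single payload that could then be uploaded with one PUT. The per-request price is an assumption for illustration only, and the upload call itself is deliberately left out:

```python
import io
import tarfile
from typing import Dict

# Assumed price for illustration only; check current S3 pricing for your region/class.
PUT_PRICE_PER_1000 = 0.005

def bundle(objects: Dict[str, bytes]) -> bytes:
    """Pack many small objects into one in-memory tar archive (one PUT instead of many)."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in objects.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()

def unbundle(blob: bytes) -> Dict[str, bytes]:
    """Recover the original objects from a bundle."""
    out = {}
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r") as tar:
        for member in tar.getmembers():
            out[member.name] = tar.extractfile(member).read()
    return out

def put_cost(num_puts: int) -> float:
    """Request cost only; storage and transfer are billed separately."""
    return num_puts / 1000 * PUT_PRICE_PER_1000

small = {f"events/{i}.json": b'{"n": %d}' % i for i in range(1_000)}
blob = bundle(small)
assert unbundle(blob) == small
print(f"1,000 PUTs: ${put_cost(len(small)):.4f} vs 1 PUT: ${put_cost(1):.6f}")
```

The trade-off is on the read side: fetching one small object now means fetching the bundle (or a byte range of it), so bundling pays off for data that is written and read together, such as log or event batches.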
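The Mars Helicopter anomaly quoted above is a classic alignment bug: once one frame was lost, every later image was paired with the wrong timestamp. The toy model below is hypothetical (it is not Ingenuity's flight software, and the frame fields are invented). It contrasts pairing images with timestamps by arrival position, the fragile approach, with carrying the capture time inside each frame:

```python
def pair_by_position(frames, timestamps):
    """Fragile: assumes the i-th frame that arrives belongs to the i-th timestamp."""
    return [(ts, frame["id"]) for ts, frame in zip(timestamps, frames)]

def pair_by_capture_time(frames):
    """Robust: each frame carries the time it was captured."""
    return [(frame["captured_at"], frame["id"]) for frame in frames]

# Hypothetical run: frames 0..5 captured at t=0..5, but frame 2 never arrives.
captured = [{"id": i, "captured_at": i} for i in range(6)]
arrived = [f for f in captured if f["id"] != 2]
timestamps = [0, 1, 2, 3, 4, 5]

print(pair_by_position(arrived, timestamps))
# [(0, 0), (1, 1), (2, 3), (3, 4), (4, 5)]  every frame after the drop is off by one
print(pair_by_capture_time(arrived))
# [(0, 0), (1, 1), (3, 3), (4, 4), (5, 5)]
```

A single dropped frame is enough to shift every subsequent pairing, which matches the "every following image bore the wrong timestamp" behavior described in the quote.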
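VMO's insurance argument in the quotes above can be made explicit. Expected loss is the size of a loss times its probability, and the claim that 5 payouts can wipe out a year of premiums from 250 insured companies implies an average payout of at least 250 / 5 = 50 times the average premium. The premium and payout figures below are hypothetical placeholders, not industry data:

```python
def expected_loss(loss: float, probability: float) -> float:
    """Risk as amount of loss x probability of that loss."""
    return loss * probability

def premiums_wiped_out(num_policies: int, avg_premium: float,
                       num_payouts: int, avg_payout: float) -> bool:
    """True when total payouts meet or exceed total premiums collected."""
    return num_payouts * avg_payout >= num_policies * avg_premium

# Break-even payout size for VMO's example: 5 payouts vs 250 premiums.
breakeven_multiple = 250 / 5
print(breakeven_multiple)                      # 50.0

# Hypothetical numbers: a $1M average premium and a $60M average payout.
print(premiums_wiped_out(250, 1e6, 5, 60e6))   # True
print(expected_loss(200e6, 0.02))              # hypothetical $200M loss at 2% probability
```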
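To make the KSUID tip in the quotes above concrete: Segment's open-source KSUID format is 20 bytes, a 32-bit timestamp in seconds since a custom epoch (Unix time 1,400,000,000) followed by a 128-bit random payload (UUIDv4 has 122 random bits), encoded as a fixed-width 27-character base62 string. Because the timestamp comes first and the encoding is fixed-width over an ASCII-ordered alphabet, string order follows creation order, which is what makes it handy as a DynamoDB sort key. This is a from-scratch sketch of that layout, not Segment's library; a maintained implementation is the safer choice in production:

```python
import os
import time
from typing import Optional

KSUID_EPOCH = 1_400_000_000  # custom epoch (May 2014) used by the KSUID format
BASE62 = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"  # ASCII order
ENCODED_LEN = 27  # 20 bytes always fit in 27 base62 characters

def ksuid(unix_seconds: Optional[int] = None, payload: Optional[bytes] = None) -> str:
    """Build a KSUID-style id: 4-byte big-endian timestamp + 16 random bytes, base62."""
    if unix_seconds is None:
        unix_seconds = int(time.time())
    if payload is None:
        payload = os.urandom(16)  # 128 random bits
    if len(payload) != 16:
        raise ValueError("payload must be 16 bytes")
    raw = (unix_seconds - KSUID_EPOCH).to_bytes(4, "big") + payload
    n = int.from_bytes(raw, "big")
    digits = []
    while n:
        n, rem = divmod(n, 62)
        digits.append(BASE62[rem])
    # Left-pad so every id has the same width; fixed width keeps string order == numeric order.
    return "".join(reversed(digits)).rjust(ENCODED_LEN, "0")

older = ksuid(1_600_000_000, b"\xff" * 16)
newer = ksuid(1_600_000_001, b"\x00" * 16)
print(older < newer)  # True: the timestamp dominates, whatever the random bytes
```

Sorting these strings lexicographically, as DynamoDB does for string sort keys, yields chronological order at one-second resolution; ids created within the same second are ordered by their random payload.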
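boulos's point about "AI exaflops" in the quotes above is easy to check with arithmetic: the headline figure is roughly GPU count times each GPU's peak rate, and the A100's headline FP16 tensor-core rate of 624 TFLOPS assumes 2:4 structured sparsity, twice its dense 312 TFLOPS. The GPU count below is an assumed round number for illustration, not Perlmutter's exact configuration:

```python
A100_FP16_DENSE_TFLOPS = 312    # NVIDIA's published FP16 tensor-core peak, dense
A100_FP16_SPARSE_TFLOPS = 624   # the same peak quoted with 2:4 structured sparsity

def peak_exaflops(num_gpus: int, tflops_per_gpu: float) -> float:
    """Headline-style number: GPU count x per-GPU peak, converted from TFLOPS to EFLOPS."""
    return num_gpus * tflops_per_gpu / 1_000_000

gpus = 6_000  # assumption: a round, illustrative GPU count
print(peak_exaflops(gpus, A100_FP16_SPARSE_TFLOPS))  # 3.744
print(peak_exaflops(gpus, A100_FP16_DENSE_TFLOPS))   # 1.872
```

If a model does not exploit sparsity, the headline halves, and sustained training throughput on a real workload is typically below either peak, which is the gap boulos is pointing at.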
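Nick Craver's "deadlock city" in the quotes above is thread-pool starvation: work that holds a pool worker while waiting on other work queued behind it in the same pool. He is describing .NET, but the mechanism is language-agnostic. A minimal Python sketch with `concurrent.futures`, where an outer task blocks on an inner task submitted to the same pool; with a single worker the inner task can never start, so the timeout (added here only so the demo terminates) is the only way out:

```python
from concurrent.futures import ThreadPoolExecutor, TimeoutError

def run_nested(workers: int) -> str:
    """Outer task waits on an inner task submitted to the same pool."""
    pool = ThreadPoolExecutor(max_workers=workers)

    def inner() -> str:
        return "done"

    def outer() -> str:
        # This worker now blocks until `inner` runs. With one worker, nothing can run it.
        return pool.submit(inner).result(timeout=1)

    try:
        return pool.submit(outer).result(timeout=5)
    except TimeoutError:
        return "starved"
    finally:
        pool.shutdown(wait=True)  # `inner` finally runs once `outer` gives up

print(run_nested(1))  # starved
print(run_nested(2))  # done
```

Adding workers only moves the cliff: under a burst like the 300-500 req/sec Nick describes, enough blocked callers can exhaust any fixed pool. The usual fixes are to avoid blocking a worker on work queued to the same pool (keep the whole path asynchronous) or to isolate such work in a separate pool.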
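The read-process-write-wait loop Gerding describes in the quotes above can be sketched as a fixed-rate loop. This is a hypothetical Python illustration of the pattern, not SpaceX code; the callback names are invented, and real flight software would run on a real-time system with hard deadlines rather than `time.sleep`:

```python
import time
from typing import Any, Callable, List

def run_control_cycle(read_inputs: Callable[[], Any],
                      update_state: Callable[[Any], Any],
                      write_outputs: Callable[[Any], None],
                      period_s: float,
                      ticks: int) -> int:
    """Run a fixed-rate read -> process -> write -> wait loop; return overrun count."""
    overruns = 0
    next_tick = time.monotonic()
    for _ in range(ticks):
        inputs = read_inputs()         # e.g. sensors, network packets, ground commands
        state = update_state(inputs)   # e.g. position estimate, subsystem status
        write_outputs(state)           # outputs are a function of the new state
        next_tick += period_s          # absolute schedule, so small delays don't accumulate
        remaining = next_tick - time.monotonic()
        if remaining > 0:
            time.sleep(remaining)      # wait for the next tick of the clock
        else:
            overruns += 1              # this cycle's work took longer than one period
    return overruns

# Usage with stand-in callbacks: a counter as the "sensor", doubling as the "processing".
sensor = iter(range(100))
log: List[int] = []
run_control_cycle(lambda: next(sensor), lambda x: x * 2, log.append, period_s=0.01, ticks=5)
print(log)  # [0, 2, 4, 6, 8]
```

Scheduling each tick against an absolute deadline, rather than sleeping a fixed period after the work, keeps the loop's rate stable even when individual cycles take varying amounts of time.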

Useful Stuff:

- Accidental Discoveries in CPU Performance: AMD vs Intel (2021 Cloud Report): Discovery #1: Intel Sweeps Across All Clouds in Single-Core CPU Benchmarks. Discovery #2: AMD & Graviton2 Take Top Spots in 16-Core CPU Benchmarks.

- Disasters I've seen in a microservices world. Not much new, but it did create quite a discussion.

- Disaster #1: too small services. Disaster #2: development environments. Disaster #3: end-to-end tests. Disaster #4: huge, shared database. Disaster #5: API gateways. Disaster #6: Timeouts, retries, and resilience.

- A little later we’re going to talk about how noise is a source of error. There’s a lot of error in building software simply because there’s no good way of building software. Give a problem to 5 different groups and they’ll come up with 12 different solutions. Each member of an organization is a source of noise in the development process. Each decision in a project can amplify the noise or dampen it. The end result is the usual complexity and error we are all so used to.

- There’s an unusually thoughtful discussion on HN. Even reading through those comments, it’s clear that as long as software is being created by people there is no ultimate solution, just different problems at higher levels of abstraction. What Will Programming Look Like In The Future?

- One approach is to reduce error by industrializing components. In this quote Google industrializes components by having a large dedicated group creating reliable infrastructure. For most of us, this same role has been outsourced to cloud providers. h43k3r: Google has a dedicated organisation for maintaining all the infrastructure required for and around microservices. We are talking about 500-1000 engineers just improving and maintaining the infrastructure. I don't have to worry about things like distributed tracing, monitoring, authentication, logs, logs search, logs parsing for exceptions, anomaly detection on logs, deployment, release management etc. They are already there and are glued together so well that 90 percent of engineers don't even have to spend more than a day to understand all that.

- Better education is never the entire solution, but it couldn’t hurt. throwawaaarrgh: I don't know anything about modern computer science education. But it appears that new developers have absolutely no idea how anything but algorithms work. It's like we taught them how hammers and saws and wrenches work, and then told them to go build a skyscraper. There are only two ways I know of for anyone today to correctly build a modern large-scale computer system: 1) Read every single book and blog post, watch every conference talk, and listen to every podcast that exists about building modern large-scale computing systems, and 2) Spend 5+ years making every mistake in the book as you try to build them yourself.

- Yah, no. You build solutions using predefined components, but this isn’t the same thing as building tech. By definition tech is always a level above whatever low code is at the moment. 80% of tech could be built outside IT by 2024, thanks to low-code tools.

- A number used for counting should always be 64 bits. I’ve had this conversation many times with the storage mafia. Even on constrained embedded systems the extra bytes are usually fine. Why bother? Because when an int rolls over, you will not have handled the rollover everywhere it needed to be handled. Once More Unto the Breach.

- A lot of good advice, most of which is difficult to disagree with, especially "Crap I'm out of wine." Drunk Post: Things I've learned as a Sr Engineer. But I don’t agree with “titles mostly don’t matter.” They do. If you want to ride the management track go to a small startup and grab the biggest baddest management title you can. That defines your base level as you progress in the game.

- Nice to see an example with code. Stateful Serverless workflows with Azure Durable Functions.

- Each day, more than 100k email notifications are sent to ASOS

- Durable Functions is an extension of Azure Functions that lets you write stateful functions in a serverless compute environment.

- Durable Entities allows us to aggregate event data from multiple sources over indefinite periods of time.

- Some triggers, or client functions (input). An entity (our state).

- Though this review of Azure did not fill one with confidence.

- Another player in the serverless edge space. It’s Akamai with EdgeWorkers.

- Akamai’s platform consists of more than 340,000 servers deployed in approximately 4,100 locations in approximately 130 countries. Akamai delivered more than 300 trillion API requests — a 53% year-over-year increase.

- I didn’t see anything on pricing. But the two tiers, Basic Compute for applications requiring lower CPU and memory consumption and Dynamic Compute for applications demanding higher levels, include free usage for a combined 60 million EdgeWorkers events per month, up to 30 million per tier.

- I have not used it yet, primarily because I’m not an Akamai user. It looks something like CloudFront’s initial offering in that there aren’t many services yet. You can write a small function and there’s a KV store. No WebSockets, for example. It’s based on V8, so it looks like you can use most of Node, which is convenient.

- Here’s a good video overview: Serverless Edge Computing with Akamai EdgeWorkers. Though they say it can be used more generally for microservices, the examples are, as you might expect, CDN related: cookies, request headers, load balancing, image optimization, that sort of thing.

- Like other edge platforms this is not a generic platform for running any code. There are quite a few limitations.

- Lots more documentation here: akamai/edgeworkers-examples

- On how giving PR answers to serious technical questions makes you look foolish. We have two cases.

- Case one: an interview with Apple’s Craig Federighi and Greg Joswiak in The Talk Show Remote From WWDC 2021. For Apple, I was interested in how they would answer developer concerns over their developer-unfriendly App Store policies.

- Case two: an interview with John Deere’s CTO. For John Deere I was interested in how they would address right to repair issues.

- Throughout both interviews I was wondering if the hosts would ask the questions I wanted answered. They waited until the end, but the questions were asked. Unfortunately, both answers were pure PR.

- Apple pretended to be baffled by all this bizarre talk, going so far as to say developer concerns were not founded in reality. They doubled down on how much they love and support developers. No specific issues were addressed at all. They seem to think bringing “energy” to developer relations was a response. It’s not. They closed by saying developer concerns were “crazy.” Condescending much?

- I didn’t know what to expect from John Deere’s CTO. The technical content of the episode was great. He obviously knows what he’s talking about and provided a compelling vision of how John Deere would support a future of farming. Until we got to the question. He doubled down on the security and safety argument for not allowing software changes. Somebody could make a crazy software change that would turn a giant combine into a weapon of mass destruction. In some ways I’m sympathetic. No doubt they see their product as a closed system. What they’re missing is that they’ve become a platform. John Deere needs to do all the same things Apple has done with iOS. Provide APIs and secure hardware so people can develop against a safe and secure sandbox. John Deere should have an app store. And so on. When you are used to creating embedded systems and highly integrated systems the change to becoming a platform is a big one, but it’s one customers want. Repeating all the same old safety and security arguments to maintain the status quo isn't recognizing what the future needs to look like.

- Also Steve Jobs Insult Response. Also also commoditizing your complement.

- Cloud repatriation sparked a lot of commentary. My first thought was this is a lot like horse owners telling car owners horses have a much lower TCO. That kind of misses the point. But cloud providers now think they are Apple and price doesn’t matter. By not continuing a drive towards lower costs for customers they will open themselves up to disruption. What form that will take I don’t know. I do know it won’t be a return to the good old days of on-premise architectures. Those days sucked.

- The Cost of Cloud, a Trillion Dollar Paradox. Let's Talk about Repatriation - The Cloudcast. Paradoxical Arguments of a16z. On HN. On reddit.

- How soon people forget. Back in the elder days one of the questions I would often get on HS was how to create a large site like Yahoo. When you think about the number of skills you had to master to create a site like Yahoo, it was mind-numbing. I was literally speechless because I realized that if you didn’t already know, there was no way to explain it. There was just too much. I don’t think people appreciate just how hard it is to build a complete system in a datacenter from scratch.

- Now anyone has a decent shot at building Yahoo. And the reason is the cloud. If you are pro repatriation it’s probably because you have the skills or can afford to hire those who have the skills to pull it off. That’s not 99.9999% of the world today.

- Is on-prem great for predictable workloads? Sure. If you have the skills to build it and the people to maintain it. In an era where that kind of expertise is in short supply, good luck on hiring.

- Is the cloud too expensive? Absolutely. We have oligopoly pricing. Some bottom-up disruption would be a good thing. We do have a few contenders such as Cloudflare, but so far the feature completeness of the big cloud providers is more attractive than even the cheapest partial solutions.

- Keep in mind VCs don’t care about you as a developer. They don’t care if you get stuck in a dead-end job. Valuation is what matters. Think about your career trajectory and where you want to end up.

- If anyone is going to repatriate it’s Dropbox. They have a very skilled team. They have a known problem. Minimizing costs makes sense for their life cycle phase. But you do have to wonder how many new features were not added because they were so focused on the repatriation effort.

- You can do a lot to lower costs before a move becomes your best option. Design for lower costs. Turn stuff off. Negotiate with your cloud provider and pit cloud providers against each other.

- kelp: I don't know how every company does it. But I led the datacenter and networking teams at Square from 2011 to 2017. In that time we went from 1 DC cage with 4 server racks to 4 US DCs, one in Japan and a couple of network pops. A bit more than 100 server racks total. My team had 2 guys doing SiteOps. They would travel to the various DCs in the Bay Area and Virginia and do all the maintenance, new installs, etc. And sometimes we'd lean on the colocation remote hands to do a few things. We had about 5 network engineers, that also handled the corporate network. (12 offices and a network backbone that connected east and west coast DCs, offices, etc). And maybe 2 SWEs who handled things like our host OS install system, etc. Basically the next layer above the hardware. So 9 people that were required to run all of that stuff. But really, NetEng ended up spending like 70% of their time on corporate network things because we'd add offices faster than datacenters. So if we focused on production only, we really needed about 6 or 7 people total. I did the math a few times (every single year) and compared our costs, including people, to the costs of moving to AWS 3 year reserve instances. Doing it ourselves was always half the price.

- paxys: This analysis completely skips over the "elastic" aspect of cloud infrastructure, which was a key motivator for using these providers since the very beginning and is as relevant today regardless of company size. My company (which is in fact part of the charts in the article) had every single metric across the board spike 15-20x basically overnight when the pandemic started last year in March. Our entire infrastructure burden was clicking a few buttons on the AWS console and making sure everything was provisioning and scaling as needed. If we had to send out people to buy hard drives and server racks at that time, there is no chance we would have been able to meet the extra demand.

- It’s always DNS. The stack overflow of death. How we [Bunny] lost DNS and what we're doing to prevent this in the future.

- In an effort to reduce memory, traffic usage, and Garbage Collector allocations, we recently switched from using JSON to a binary serialization library called BinaryPack. For a few weeks, life was great, memory usage was down, GC wait time was down, CPU usage was down, until it all went down.

- Turns out, the corrupted file caused the BinaryPack serialization library to immediately execute itself with a stack overflow exception, bypassing any exception handling and just exiting the process. Within minutes, our global DNS server fleet of close to a 100 servers was practically dead.

- We designed all of our systems to work together and rely on each other, including the critical pieces of our internal infrastructure. If you build a bunch of cool infrastructure, you're of course lured into implementing this into as many systems as you can.

- They did have a canary type rollout, but not for this. dejangp: So to add some details, we already use multiple deployment groups (one for each DNS cluster). We always deploy each cluster separately to make sure we're not doing something destructive. Unfortunately this deployment went to a system that we believed was not a critical part of infrastructure (oh look how wrong we were) and was not made redundant, since the rest of the code was supposed to handle it gracefully in case this whole system was offline or broken.

- Shite does happen, but there’s still a need to pick your infrastructure components with great care. Sergio0694: Hey, I'm the author of BinaryPack! This was very interesting to read, though I'm sad to hear about the issue. I can say that the issue could've easily been avoided if you had contacted me about the issue though. I haven't updated the library in 3 years now and I didn't really claim it was production-ready (and I would've told you so).

- I believe this conclusion is wrong: “The biggest flaws we discovered were the reliance on our own infrastructure to handle our own infrastructure deployments." If you can’t rely on your own infrastructure what makes you think the rest of your stuff works? All parts of your infrastructure have to be designed as first class components—fault tolerant turtles all the way down.

- lclarkmichalek: More specifically, the triggering event here, the release of a new version of software, isn't super novel. More discussion of follow ups that are systematic improvements to the release process would be useful. Some options: replay tests to detect issues before landing changes; canaries to detect issues before pushing to prod; gradual deployments to detect issues before they hit 100%; even better, isolated gradual deployments (i.e. deploy region by region, zone by zone) to mitigate the risk of issues spreading between regions. Beyond that, start thinking about all the changing components of your product, and their lifecycle. It sounds like here some data file got screwed up as it was changed. Do you stage those changes to your data files? Can you isolate regional deployments entirely, and control the rollout of new versions of this data file on a regional basis? Can you do the same for all other changes in your system?

- Also That Salesforce outage: Global DNS downfall started by one engineer trying a quick fix. Which is BS. If one engineer can take down the system then the system is broken.
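Bunny's outage started with a corrupted data file being loaded straight into every DNS server. One defensive pattern is to verify and parse a new file before swapping it in, and to keep serving the last known good version if anything is off. A minimal sketch, assuming the file ships with a SHA-256 digest computed when it was published and using JSON as a stand-in for BinaryPack; `publish` and `ConfigLoader` are hypothetical names. A checksum only catches corruption in transit or at rest; a file that was already bad when published still needs replay tests or a canary.

```python
import hashlib
import json


def publish(data: dict) -> tuple[bytes, str]:
    """Serialize a config and compute its SHA-256 digest at publish time."""
    payload = json.dumps(data, sort_keys=True).encode("utf-8")
    return payload, hashlib.sha256(payload).hexdigest()


class ConfigLoader:
    """Keeps the last known good config and only swaps in verified updates."""

    def __init__(self, initial: dict):
        self.current = initial
        self.rejected = 0

    def try_update(self, payload: bytes, expected_digest: str) -> bool:
        # Reject bytes that do not match the digest recorded at publish time.
        if hashlib.sha256(payload).hexdigest() != expected_digest:
            self.rejected += 1
            return False
        try:
            candidate = json.loads(payload)
        except ValueError:
            # Digest matched, but the content still fails to parse.
            self.rejected += 1
            return False
        self.current = candidate
        return True


loader = ConfigLoader({"zones": 1})
payload, digest = publish({"zones": 2})
loader.try_update(payload[:-3] + b"\x00\x00\x00", digest)  # rejected; still {"zones": 1}
loader.try_update(payload, digest)                          # accepted; now {"zones": 2}
```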

- Handling one million concurrent players with ease on AWS. These case studies are always hand-wavy and self-serving, but the big takeaway is that they were able to cut costs by 50%, do a lift and shift, and then transform the system into a scalable low-latency architecture.

- The end of an era. The Next Backblaze Storage Pod. Backblaze is no longer making their own storage pods. They are buying commodity hardware from Dell. That means Backblaze is a software company now. Hopefully they can keep their competitive advantage when they aren’t innovating over the whole stack.

- Daniel Kahneman — Noise: A Flaw in Human Judgment. Programming is all about removing error. There are processes we can use to help reduce errors.

- The main idea is that error has two sources: bias and noise. The good news is that algorithms can reduce error from noise because, given the same data, they make consistent decisions. Not so with us. Humans are noisy. Have a bad lunch and who knows what crappy decision we’ll make next? What they missed is that algorithms can make errors worse by encoding bias deep within the system. So deep we don’t even notice it is there. Which, come to think of it, is so very human after all.

- Another takeaway for software projects is the idea of reducing errors by reducing noise in decision making. You can do that by following decision hygiene rules. Averaging data reduces noise if judgments are independent of each other. Let people come up with their ideas on their own before the inevitable group meeting where the loudest, most persistent person usually wins. Another cool idea is to delay intuition. Do your research, load up on facts, then use your intuition. It will be more informed and less biased.
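Why independence matters for averaging: the private part of each person's error shrinks as you average more people, but any part they share (everyone anchoring on the loudest voice) does not. A small simulation sketch under those assumptions; the true value is 0, each estimate is a shared component plus a private component with standard deviation 1, and `judgment_error` is a hypothetical helper, not anything from the book.

```python
import random
import statistics


def judgment_error(n_judges: int, shared_noise: float,
                   trials: int = 2000, seed: int = 0) -> float:
    """Mean absolute error of the group's averaged estimate (true value is 0).

    Each estimate = shared component (std dev `shared_noise`, the same for
    every judge in a trial) + private component (std dev 1, independent).
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        shared = rng.gauss(0, shared_noise)
        estimates = [shared + rng.gauss(0, 1) for _ in range(n_judges)]
        errors.append(abs(statistics.fmean(estimates)))
    return statistics.fmean(errors)


for n, shared in [(1, 0.0), (25, 0.0), (25, 1.0)]:
    print(f"{n:>2} judges, shared noise {shared}: error {judgment_error(n, shared):.2f}")
```

With 25 independent judges the error drops by roughly a factor of five (1/√25); give them a shared anchor as large as their private noise and averaging removes almost nothing.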

- Heavy Networking 583: How Salesforce Evolved Its Branch Network With Prisma SD-WAN.

- The biggest idea is decoupling topology from policy. Traditionally topology and policy have been entwined. But you don’t have to trombone all your traffic through a datacenter. The datacenter security stack can be delivered as a massively distributed edge service by specifying policies at the edge for security, compliance, networking, etc. The system can specify policy and that policy can be enforced at the edge.

- Using SDN instead of traditional nailed up MPLS circuits gave Salesforce 5 to 10 times the bandwidth for the same money.

- Another advantage of SDN is—like the cloud—it’s permissionless. You don’t have to wait months for your MPLS provider to hook you up. You can use any available internet provider. Can you smell the network freedom?

- Network hose: Managing uncertain network demand with model simplicity: Instead of forecasting traffic for each (source, destination) pair, we perform a traffic forecast for total egress and total ingress traffic per data center, i.e., network hose. Instead of asking how much traffic a service would generate from X to Y, we ask how much ingress and egress traffic a service is expected to generate from X. Thus, we replace the O(N^2) data points per service with O(N). When planning for aggregated traffic, we naturally bake in statistical multiplexing in the forecast. By adopting a hose-based network capacity planning method, we have reduced the forecast complexity by an order of magnitude, enabled services to reason network like any other consumable entity, and simplified operations by eliminating a significant number of traffic surge–related alarms because we now track traffic surge in aggregate from a data center and not surge between every two data centers.
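The two claims in that quote, fewer series to forecast and statistical multiplexing for free, are easy to see with numbers. A minimal sketch with made-up traffic (Gbps over three time buckets) between hypothetical data centers A, B, and C: provisioning each (source, destination) pair for its own peak needs the sum of the peaks, while the hose only needs the peak of the aggregate. Ingress is the mirror image and is left out for brevity.

```python
def egress_hose(series: dict[tuple[str, str], list[float]], src: str) -> list[float]:
    """Sum every (src, dst) time series into a single egress series for src."""
    flows = [s for (a, _), s in series.items() if a == src]
    return [sum(bucket) for bucket in zip(*flows)]


def pairwise_capacity(series: dict[tuple[str, str], list[float]], src: str) -> float:
    """Egress capacity if every (src, dst) pair is provisioned for its own peak."""
    return sum(max(s) for (a, _), s in series.items() if a == src)


def forecast_counts(n_dcs: int) -> tuple[int, int]:
    """Series to forecast per service: directed DC pairs vs. hose (egress + ingress per DC)."""
    return n_dcs * (n_dcs - 1), 2 * n_dcs


# Hypothetical traffic in Gbps over three time buckets.
series = {
    ("A", "B"): [10, 2, 3],
    ("A", "C"): [1, 9, 2],
    ("B", "A"): [4, 4, 4],
}
print(max(egress_hose(series, "A")))   # 11: peak of the aggregate egress from A
print(pairwise_capacity(series, "A"))  # 19: sum of the per-pair peaks
print(forecast_counts(10))             # (90, 20)
```

The A→B and A→C peaks happen at different times, so the hose can be planned at 11 instead of 19; with 10 data centers a service needs 20 forecasts instead of 90.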

- Nice detective story. Fixing Magento 2 Core MySQL performance issues using Percona Monitoring and Management (PMM). Yes, it was a missing index, but how you find that out for horrendously complex queries is the trick. Result: CPU usage dropped to less than 25%; increased its QPS by 5x; query time dropped from the 20s to only 66ms. Also The Mysterious Gotcha of gRPC Stream Performance.
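The core of that detective work is reading the query plan before and after adding an index. The article uses MySQL and PMM; as a self-contained stand-in, here is the same idea with SQLite's `EXPLAIN QUERY PLAN` through Python's standard `sqlite3` module. The `orders` table and index name are hypothetical, and the exact plan text varies by SQLite version (older versions say `SCAN TABLE orders`).

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail text for a query."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(str(row[-1]) for row in rows)  # last column holds the detail


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 1000, i * 1.5) for i in range(10_000)],
)
lookup = "SELECT total FROM orders WHERE customer_id = 42"

before = query_plan(conn, lookup)  # a full scan, e.g. "SCAN orders"
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = query_plan(conn, lookup)   # e.g. "SEARCH orders USING INDEX idx_orders_customer (customer_id=?)"
print(before)
print(after)
```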

- No matter what you think of the cloud you have to admit it’s great for load testing. ONLINE TSUNAMI – A MICROSITE FACES MEGA LOADS.

- The largest test generated 1.5 million hits per minute from 500 load agents in the cloud.

- These were LAMP architecture servers running on a Debian instance and load-balanced by a customized version of NGINX. One of the key factors in being able to deliver things as quickly as necessary was the extensive use of memcache. That meant that we could take a firehose approach to database writes and the reads would all be coming out of memory. During our testing, we even discovered and reported a bug in memcache that had to be fixed for us to be able to deal with the high concurrency.
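At 1.5 million hits per minute (25,000 per second, or 50 per second from each of the 500 agents), the database only survives if reads never reach it. A minimal write-through sketch of "firehose writes, reads from memory", assuming plain dicts as stand-ins for memcached and the LAMP database tier; `WriteThroughCache` is a hypothetical name, and a real deployment would also need eviction and cache-failure handling.

```python
class WriteThroughCache:
    """Writes go to the database and the cache; reads are served from the cache."""

    def __init__(self, db: dict):
        self.db = db        # stand-in for the MySQL tier
        self.cache = {}     # stand-in for memcached
        self.db_reads = 0

    def put(self, key, value) -> None:
        # Write to the database, then refresh the cache so readers never miss.
        self.db[key] = value
        self.cache[key] = value

    def get(self, key):
        if key in self.cache:
            return self.cache[key]
        # Miss: fall through to the database once, then remember the value.
        self.db_reads += 1
        value = self.db.get(key)
        if value is not None:
            self.cache[key] = value
        return value


store = WriteThroughCache({})
for i in range(100):
    store.put(f"entry:{i}", i)
for _ in range(10):
    for i in range(100):
        store.get(f"entry:{i}")
print(store.db_reads)  # 0: a thousand reads, none of them hit the database
```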

- Episode #105: Building a Serverless Banking Platform with Patrick Strzelec. Everything is pretty much eventually consistent, so event-driven serverless microservices are a good fit. Use tokenization to protect private data: you get a token back instead of the data and use the token everywhere. Scale was never a concern. It’s all managed with very few people. Onboarding new developers is pretty easy. Innovation velocity has been really good because you can just try things out. One of the mistakes they made was spinning up a bunch of API gateways that would talk to each other, with a lot of REST calls going around a spider web of communication. They also consider fat Lambdas a mistake. Now one Lambda does one thing, and pieces of infrastructure stitch them together: EventBridge between domain boundaries, SQS for commands instead of API Gateway. They use a lot of Step Functions; being able to visualize a state machine has a lot of value, and you can see in the logs when a step fails. They created a shared boilerplate to standardize code. They landed on SNS to SQS fan-out, and it seems like fan-out first is the serverless way of doing a lot of things. They use DynamoDB Streams to SNS to SQS, with shared code packages to make those subscriptions very easy. They highly recommend Zod, a TypeScript-first object validation library. The next step of the journey is figuring out how to share code effectively without hiding away too much of serverless, especially because it's changing so fast. Only use ordering when you absolutely need it.
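Fan-out decouples a producer from its consumers: the publisher writes once to a topic, and every subscriber gets its own queue that it can drain and retry at its own pace. A minimal in-memory sketch of the shape of SNS to SQS fan-out; `Topic`, the queues, and the event are hypothetical stand-ins, not the AWS SDK.

```python
from collections import deque


class Topic:
    """In-memory stand-in for an SNS topic: each message is copied to every subscribed queue."""

    def __init__(self):
        self.queues: list[deque] = []

    def subscribe(self, queue: deque) -> None:
        self.queues.append(queue)

    def publish(self, message: dict) -> None:
        for queue in self.queues:
            queue.append(dict(message))  # each consumer gets its own copy


# Hypothetical consumers, each owning a queue (the SQS role).
ledger_queue, notify_queue = deque(), deque()
account_events = Topic()
account_events.subscribe(ledger_queue)
account_events.subscribe(notify_queue)
account_events.publish({"type": "AccountOpened", "account_id": "acct-1"})

# Adding a consumer later needs no change to the publisher.
audit_queue = deque()
account_events.subscribe(audit_queue)
account_events.publish({"type": "AccountClosed", "account_id": "acct-1"})
```

Because each consumer owns its queue, a slow or failing notification service backs up only its own queue instead of blocking the ledger.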

- In honor of the 10th anniversary of launching Twitch, I thought I’d share some of the lessons I learned along the way.

- Make something 10 people completely love, not something most people think is pretty good.

- If your product is for consumers, either it’s a daily habit, it’s used consistently in response to an external trigger, or it’s not going to grow.

- There are only five growth strategies that exist, and your product probably only fits one. Press isn’t a growth strategy, and neither is word of mouth.

- The five growth strategies are high-touch sales, paid advertising, intrinsic virality, intrinsic influencer incentives (Twitch!), and platform hacks.

- You know it’s a good article when they benchmark memcpy. How we achieved write speeds of 1.4 million rows per second: We reach maximum ingestion performance using four threads, whereas the other systems require more CPU resources to hit maximum throughput. QuestDB achieves 959k rows/sec with 4 threads. We find that InfluxDB needs 14 threads to reach its max ingestion rate (334k rows/sec), while TimescaleDB reaches 145k rows/sec with 4 threads. ClickHouse hits 914k rows/sec with twice as many threads as QuestDB. When running on 4 threads, QuestDB is 1.7x faster than ClickHouse, 6.4x faster than InfluxDB and 6.5x faster than TimescaleDB.
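Because the systems peak at different thread counts, dividing the quoted peak rates by the threads used gives a rough per-thread efficiency. This is only a normalization of the numbers above, assuming ClickHouse's "twice as many threads" means 8 and that throughput scales linearly with threads, which real systems rarely do.

```python
# Peak ingestion figures quoted above: (rows/sec, threads used to reach them).
results = {
    "QuestDB": (959_000, 4),
    "ClickHouse": (914_000, 8),
    "InfluxDB": (334_000, 14),
    "TimescaleDB": (145_000, 4),
}

per_thread = {name: rows / threads for name, (rows, threads) in results.items()}
for name, rate in sorted(per_thread.items(), key=lambda kv: -kv[1]):
    print(f"{name:12s} {rate:>10,.0f} rows/sec/thread")
```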

- A good explanation. Practical Reed-Solomon for Programmers.
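The core idea of Reed-Solomon erasure coding: treat k data symbols as a polynomial of degree below k, store its values at n > k points, and any k surviving values pin the polynomial down again. A minimal sketch, assuming arithmetic in the prime field GF(257) for readability; production codecs generally use GF(2^8) with table-driven arithmetic so every symbol fits in a byte (here a parity symbol can be 256). Function names are hypothetical.

```python
P = 257  # prime field GF(257); real byte-oriented codecs typically use GF(2^8)


def _interpolate_at(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial through `points` (Lagrange form, mod P)."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # Fermat inverse of den
    return total


def encode(data: list[int], n: int) -> list[tuple[int, int]]:
    """Systematic encoding: shares 0..k-1 equal the data, shares k..n-1 are parity."""
    assert n <= P and all(0 <= d < P for d in data)
    points = list(enumerate(data))  # the polynomial passes through (i, data[i])
    return [(x, _interpolate_at(points, x)) for x in range(n)]


def decode(shares: list[tuple[int, int]], k: int) -> list[int]:
    """Recover the k data symbols from any k surviving (x, y) shares."""
    points = shares[:k]
    return [_interpolate_at(points, x) for x in range(k)]


data = [72, 105, 33]                # three data symbols
shares = encode(data, 5)            # five shares: tolerates losing any two
survivors = [shares[1], shares[3], shares[4]]
print(decode(survivors, 3))         # [72, 105, 33]
```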

- An excellent description of how they build, test, and deploy software. THE LEGENDS OF RUNETERRA CI/CD PIPELINE. The LoR monorepo is ~100 GB and ~1,000,000 files, so there’s a lot going on.

- HA vs AlwaysOn: Thus to be called AlwaysOn a Database must have zero downtime solutions for at least the following events: Hardware Failure; Software Failure; Software Upgrade; Hardware Upgrade; Schema Reorganisation; Online Scaling; Major Upgrades; Major disasters. To reach AlwaysOn it is necessary that your database software can deliver solutions to all 8 problems listed above without any downtime (not even 10 seconds). Actually the limit on the availability is the time it takes to discover a failure, for the most part this is immediate since most failures are SW failures, but for some HW failures it is based on a heartbeat mechanism that can lead to a few seconds of downtime.
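The heartbeat point explains why even an AlwaysOn system has a small floor on availability: a node that dies just after sending a heartbeat is not declared failed until the timeout expires. A minimal timeout-based detector sketch; `HeartbeatDetector` is a hypothetical name, and time is passed in explicitly so the behavior is deterministic.

```python
class HeartbeatDetector:
    """Declares a node failed when no heartbeat has arrived within `timeout` seconds."""

    def __init__(self, timeout: float):
        self.timeout = timeout
        self.last_seen: dict[str, float] = {}

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now

    def failed_nodes(self, now: float) -> list[str]:
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout)


detector = HeartbeatDetector(timeout=3.0)
detector.heartbeat("node-1", now=0.0)
detector.heartbeat("node-2", now=0.0)
detector.heartbeat("node-1", now=2.0)  # node-2 went silent after t=0
print(detector.failed_nodes(now=2.5))  # []: nobody has exceeded the timeout yet
print(detector.failed_nodes(now=3.5))  # ['node-2']
```

Shrinking the timeout shortens that window but raises the risk of declaring a slow node dead, which is the tradeoff behind "a few seconds of downtime".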

- This is what industry is like, folks. I can remember when I was killing myself like that. It was a couple of years spent just like this. I can tell you from the perspective of history it’s not worth it. Uber's Crazy YOLO App Rewrite, From the Front Seat.

- Looks like fun. Zero to ASIC Course.

- If you want to ship product, here’s how you keep it real. The tools and tech I use to run a one-woman hardware company.

- Holy crap, there’s a lot of detail here. Love it! Extreme HTTP Performance Tuning: 1.2M API req/s on a 4 vCPU EC2 Instance: The main takeaway from this post should be an appreciation for the tools and techniques that can help you to profile and improve the performance of your systems. Should you expect to get 5x performance gains from your webapp by cargo-culting these configuration changes? Probably not. Many of these specific optimizations won't really benefit you unless you are already serving more than 50k req/s to begin with. On the other hand, applying the profiling techniques to any application should give you a much better understanding of its overall behavior, and you just might find an unexpected bottleneck.

- Ever wonder how they develop software at SpaceX? Here’s a Summary of SpaceX Software AMA. They keep it general, clearly not wanting to dive too deep into various details, but there’s still a lot to learn. Few jobs must combine as much pressure, fun, and pride.

- The most important thing is making sure you know how your software will behave in all different scenarios. This affects the entire development process including design, implementation and test. Design and implementation will tend towards smaller components with clear boundaries. This enables those components to be fully tested before they are integrated into a wider system.

- We want automated tests that exercise the satellite-to-ground communication links. We have HITL (hardware in the loop) testbeds of the satellites, and we can set up a mock ground station with a fixed antenna. We can run a test where we simulate the satellite flying over the ground station, but we have to override the software so that it thinks it is always in contact with our fixed antenna. This lets us test the full RF and network stack, but doesn't let us test antenna pointing logic. Alternatively we can run pure software simulations to test antenna pointing. We have to make sure that we have sufficient piecemeal testing of all the important aspects of the system.

- We don't separate QA from development - every engineer writing software is also expected to contribute to its testing... One unique thing about testing for a large satellite constellation is that we can actually use "canary" satellites to test out new features. We run regression tests on the software to ensure it won't break critical functionality, but then we can select a satellite, deploy the new feature, and monitor how it behaves with minimal risk to the constellation.

- We have a whole team dedicated to building CI/CD tooling as well as the core infrastructure for testing and simulation that our vehicles use. The demands of running high-fidelity physics simulations alongside our software for the sake of testing it make for some interesting challenges that most off-the-shelf CI/CD tools don't handle super well. They not only require a lot of compute, but can also be long duration (think: flying Dragon from liftoff to Docking), and we need to be able to run both "hardware out of the loop" simulations as well as "hardware in the loop".

- We use a mix of synchronous and asynchronous techniques, depending on the problem at hand. We aren't afraid to try new systems, strategies, standards, or languages, particularly early in the development of a new program.

- Sometimes the best place to put a control algorithm is an embedded system close to the thing being controlled. Other times, a process needs to be a lot more centralized. When you think about a vehicle as complex as Starship, there's not just a single control process, as you have to control engines, flaps, radio systems, etc.

- We approach this by building the software in modular components, writing defensive logic, and checking the status of each operation. If an operation we expect to complete fails, we have defined error handling paths and recovery strategies.

- Dragon is a fully autonomous vehicle, so it is capable of completing the trip to and from the ISS without any interaction from the crew.

- For the control interfaces, we aim for a 'quiet-dark' philosophy–if the vehicle is behaving nominally, the interface is streamlined and minimal, but still shows overall system status. This way, we visually prioritize off-nominal information, while allowing the operators and crew to maintain system context.

- Dragon is a very capable vehicle so much of the existing software will just work with the Inspiration4 mission design.

- We try to roll out new builds to our entire fleet of assets (satellites, ground stations, user terminals, and WiFi routers) once per week. Every device is periodically checking in with our servers to see if it's supposed to fetch a new build, and if one is available it will download and apply the update during the ideal time to minimize impact to users.

- The Starlink system is built to be super dynamic since our satellites are moving so fast (>7km/s) that a user isn't connected to the same satellite for more than a few minutes. Each user terminal can only talk to one satellite at a time, so our user to satellite links utilize electrically steered beams to instantaneously change targets from satellite to satellite, and we temporarily buffer traffic in anticipation of this "handover."

- We also chose to not keep all data but created a powerful system to aggregate information over time as well as age out information when it is no longer useful.

- gemmy0I: What stands out to me, both from this AMA and from everything we've heard over the years about how SpaceX operates internally, is that they understand - much more viscerally than most companies - that the culture of a company is shaped by its processes, structures, and hierarchies of responsibility and communication. So many companies unwittingly or well-intentionedly box their employees into approaching their jobs from a perspective of "I was hired as an expert in hammers, and that is what it says on my business card, so I must make all of my tasks look like a nail".
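The canary-satellite idea from the AMA generalizes to any fleet: push a build to a small slice first, check health, and only then continue. A minimal sketch, assuming hypothetical `deploy` and `healthy` callbacks; a real system would watch telemetry over a soak period and roll the canaries back on failure, while this sketch simply halts so the rest of the fleet keeps the old build.

```python
from typing import Callable


def canary_rollout(fleet: list[str], deploy: Callable[[str], None],
                   healthy: Callable[[str], bool], canaries: int = 1) -> list[str]:
    """Deploy to the first `canaries` nodes; continue only if they all stay healthy.

    Returns the nodes that received the new build.
    """
    updated = []
    for node in fleet[:canaries]:
        deploy(node)
        updated.append(node)
    if not all(healthy(node) for node in updated):
        return updated  # halt: the remaining nodes keep the old build
    for node in fleet[canaries:]:
        deploy(node)
        updated.append(node)
    return updated


fleet = ["sat-1", "sat-2", "sat-3"]  # hypothetical node names
good_log: list[str] = []
bad_log: list[str] = []
print(canary_rollout(fleet, good_log.append, lambda n: True))   # whole fleet
print(canary_rollout(fleet, bad_log.append, lambda n: False))   # ['sat-1'] only
```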

- Social networks these days are not monoliths. They are composed of subsets of audiences created, curated, and managed by an algorithm. Your experience of TikTok will be completely different from mine because the algorithm slots us into different slices of a demographic. Here’s how Instagram runs their algorithm. Shedding More Light on How Instagram Works: From there we make a set of predictions. These are educated guesses at how likely you are to interact with a post in different ways. There are roughly a dozen of these. In Feed, the five interactions we look at most closely are how likely you are to spend a few seconds on a post, comment on it, like it, save it, and tap on the profile photo. The more likely you are to take an action, and the more heavily we weigh that action, the higher up you’ll see the post. We add and remove signals and predictions over time, working to get better at surfacing what you’re interested in.
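The Instagram description boils down to scoring each post by predicted interactions, weighted by how much each interaction matters, then sorting. A minimal sketch of that shape, assuming a simple weighted sum over the five Feed interactions named above; the weights, the linear form, and the post data are all hypothetical, since Instagram has not published its formula or its roughly a dozen predictions.

```python
# Hypothetical weights: how much each predicted interaction counts toward rank.
WEIGHTS = {"dwell": 1.0, "comment": 4.0, "like": 2.0, "save": 3.0, "profile_tap": 1.5}


def score(predictions: dict[str, float]) -> float:
    """Weighted sum of predicted interaction probabilities for one post."""
    return sum(WEIGHTS[action] * p for action, p in predictions.items())


def rank(posts: dict[str, dict[str, float]]) -> list[str]:
    """Order post ids from highest to lowest score."""
    return sorted(posts, key=lambda post_id: score(posts[post_id]), reverse=True)


posts = {
    "cat_video": {"dwell": 0.9, "like": 0.5, "comment": 0.05, "save": 0.1, "profile_tap": 0.1},
    "recipe": {"dwell": 0.6, "like": 0.2, "comment": 0.02, "save": 0.4, "profile_tap": 0.05},
}
print(rank(posts))  # ['cat_video', 'recipe']
```

The recipe is far more likely to be saved, so raising the weight on saves is enough to flip the order; that is the "how heavily we weigh that action" lever in the quote.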

Soft Stuff:

- yatima-inc/yatima: A programming language for the decentralized web. Yatima is a pure functional programming language implemented in Rust with the following features: Content-Addressing; First-class types; Linear, affine and erased types; Type-safe dependent metaprogramming.

- nelhage/llama: A CLI for outsourcing computation to AWS Lambda.

- OpenTelemetry (article): An observability framework for cloud-native software.

- ClickHouse/ClickHouse: an open-source column-oriented database management system that allows generating analytical data reports in real time.

- microsoft/CyberBattleSim: an experimentation research platform to investigate the interaction of automated agents operating in a simulated abstract enterprise network environment. The simulation provides a high-level abstraction of computer networks and cyber security concepts.

Pod Stuff:

- The Downtime Project: a podcast on outages. You might like 7 Lessons From 10 Outages. Starts @ 5:30. The most common problem was circular dependencies. Tools you need to use to solve an outage turn out to depend on what failed. The next was that complex automations can fail in spectacular ways because they can make really dumb decisions when the environment changes. Next, don’t run high-variance queries on production databases. And more.
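Circular dependencies can be caught before an outage by walking the dependency graph of your tooling and looking for cycles. A minimal depth-first search sketch over a hypothetical service map, in which the deploy tool needs auth, auth needs DNS, and DNS is deployed by the deploy tool.

```python
from typing import Optional


def find_cycle(deps: dict[str, list[str]]) -> Optional[list[str]]:
    """Return one dependency cycle as a list of services, or None if there is none."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    color = {node: WHITE for node in deps}
    path: list[str] = []

    def visit(node: str) -> Optional[list[str]]:
        color[node] = GRAY
        path.append(node)
        for dep in deps.get(node, []):
            state = color.get(dep, WHITE)
            if state == GRAY:  # back edge: dep is already on the current path
                return path[path.index(dep):] + [dep]
            if state == WHITE:
                found = visit(dep)
                if found:
                    return found
        path.pop()
        color[node] = BLACK
        return None

    for node in deps:
        if color[node] == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


services = {
    "deploy-tool": ["auth"],
    "auth": ["dns"],
    "dns": ["deploy-tool"],
    "dashboard": ["metrics"],
}
print(find_cycle(services))  # ['deploy-tool', 'auth', 'dns', 'deploy-tool']
```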

Vid Stuff:

- A deep dive into a database engine internals: Oren Eini, founder of RavenDB, is going to take apart a database engine on stage. We are going to inspect all the different pieces that make for an industrial-grade database engine.

Pub Stuff:

- Exploiting Directly-Attached NVMe Arrays in DBMS: PCIe-attached solid-state drives offer high throughput and large capacity at low cost. Modern servers can easily host 4 or 8 such SSDs, resulting in an aggregated bandwidth that hitherto was only achievable using DRAM. In this paper we study how to exploit such Directly-Attached NVMe Arrays (DANA) in database systems.

- An I/O Separation Model for Formal Verification of Kernel Implementations: This paper presents a formal I/O separation model, which defines a separation policy based on authorization of I/O transfers and is hardware agnostic. The model, its refinement, and instantiation in the Wimpy kernel design, are formally specified and verified in Dafny. We then specify the kernel implementation and automatically generate verified-correct assembly code that enforces the I/O separation policies. Our formal modeling enables the disc

- TCMalloc hugepage paper: One of the key observations in the paper is that increasing hugepage coverage improves application performance, not necessarily allocator performance. That is, you can spend extra time in the allocator preserving hugepage coverage, and this improves the performance of the code that uses the memory. The net result is that you slightly increase time spent in the allocator and reduce time spent in the application.
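Part of why hugepage coverage speeds up the application rather than the allocator is TLB reach, the amount of memory the TLB can map without a page walk. Assuming, purely for illustration, a TLB with 1,536 entries (a hypothetical figure; real entry counts vary by CPU and page size) and the x86-64 sizes of 4 KiB base pages and 2 MiB huge pages:

$$
\text{reach}_{4\,\text{KiB}} = 1536 \times 4\,\text{KiB} = 6\,\text{MiB},
\qquad
\text{reach}_{2\,\text{MiB}} = 1536 \times 2\,\text{MiB} = 3\,\text{GiB}
$$

A 512x larger reach means far fewer TLB misses for a large heap, and that saving shows up in the application's own code.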

- DRESSING FOR ALTITUDE: Pressure suits, partial and full, can allow a pilot to survive in a vacuum for a limited period, but that is not their primary focus. The history of spacesuits has been well covered in literature; this book attempts to fill the void regarding aviation pressure suits.

- Reward is enough: In this article we hypothesise that intelligence, and its associated abilities, can be understood as subserving the maximisation of reward. Accordingly, reward is enough to drive behaviour that exhibits abilities studied in natural and artificial intelligence, including knowledge, learning, perception, social intelligence, language, generalisation and imitation.

- The Petascale DTN Project: High Performance Data Transfer for HPC Facilities: This paper describes the Petascale DTN Project, an effort undertaken by four HPC facilities, which succeeded in achieving routine data transfer rates of over 1PB/week between the facilities. We describe the design and configuration of the Data Transfer Node (DTN) clusters used for large-scale data transfers at these facilities, the software tools used, and the performance tuning that enabled this capability.
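To put "over 1PB/week" in link terms, assuming a decimal petabyte and transfers spread evenly over the week:

$$
\frac{1\,\text{PB}}{1\,\text{week}} = \frac{10^{15}\,\text{B}}{604{,}800\,\text{s}} \approx 1.65 \times 10^{9}\,\text{B/s} \approx 13.2\,\text{Gbit/s}
$$

That is a sustained average; actual transfers are burstier, so peak rates run higher.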

- Metastable Failures in Distributed Systems (article): We describe metastable failures—a failure pattern in distributed systems. Currently, metastable failures manifest themselves as black swan events; they are outliers because nothing in the past points to their possibility, have a severe impact, and are much easier to explain in hindsight than to predict. Although instances of metastable failures can look different at the surface, deeper analysis shows that they can be understood within the same framework.
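The classic metastable example is a retry storm: a brief trigger pushes load past capacity, failed requests get retried, and the retries keep the system overloaded after the trigger is gone. A toy discrete-time model of that loop, a simplification for illustration rather than the paper's model, assuming each failed request comes back as `retry_factor` requests on the next tick and total client load is capped at `max_load`.

```python
def simulate(base: float, capacity: float, retry_factor: float, spike: float,
             spike_tick: int, ticks: int, max_load: float = 1000.0) -> list[float]:
    """Return the number of failed requests at each tick."""
    failed = 0.0
    history = []
    for t in range(ticks):
        offered = base + retry_factor * failed  # fresh load plus retries of last tick's failures
        if t == spike_tick:
            offered += spike                    # one-tick trigger (e.g. a brief slowdown)
        offered = min(offered, max_load)        # finite client population
        failed = max(0.0, offered - capacity)
        history.append(failed)
    return history


small = simulate(base=90, capacity=100, retry_factor=2, spike=15, spike_tick=5, ticks=30)
large = simulate(base=90, capacity=100, retry_factor=2, spike=30, spike_tick=5, ticks=30)
print(small[-1], large[-1])  # 0.0 900.0
```

Both triggers last a single tick. The small one drains immediately; the large one pushes failures past the unstable point (capacity - base) / (retry_factor - 1) = 10, and the system settles into a sustained state where 900 of every 1,000 requests fail. Nothing in the steady load predicted it, which is what makes these failures look like black swans.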

- FoundationDB: A Distributed Unbundled Transactional Key Value Store: FoundationDB is the underpinning of cloud infrastructure at Apple, Snowflake and other companies, due to its consistency, robustness and availability for storing user data, system metadata and configuration, and other critical information. davgoldin: Only great things to say about FoundationDB. We've been using it for about a year now. Got a tiny, live cluster of 35+ commodity machines (started with 3 a year ago), about 5TB capacity and growing. Been removing and adding servers (on live cluster) without a glitch. We've got another 100TB cluster in testing now. Of all the things, we're actually using it as a distributed file system.

- The overfitted brain: Dreams evolved to assist generalization: The goal of this paper is to argue that the brain faces a similar challenge of overfitting and that nightly dreams evolved to combat the brain's overfitting during its daily learning. That is, dreams are a biological mechanism for increasing generalizability via the creation of corrupted sensory inputs from stochastic activity across the hierarchy of neural structures.

- Internet Computer Consensus: We present the Internet Computer Consensus (ICC) family of protocols for atomic broadcast (a.k.a., consensus), which underpin the Byzantine fault-tolerant replicated state machines of the Internet Computer. The ICC protocols are leader-based protocols that assume partial synchrony, and that are fully integrated with a blockchain.