Stuff The Internet Says On Scalability For November 10th, 2017

Hey, it's HighScalability time:

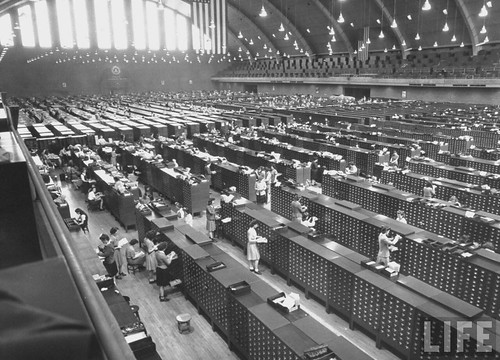

Ah, the good old days. This is how the FBI stored fingerprints in 1944. (Alex Wellerstein). How much data? Estimates range from 30GB to 2TB.

If you like this sort of Stuff then please support me on Patreon. Also, there's my new book, Explain the Cloud Like I'm 10, for complete cloud newbies.

- 1 million: times we touch our phones per year; 13 million: lines of Javascript @ Facebook; 256K: RAM needed for TensorFlow on a microcontroller; 2,502%: increase in the sale of ransomware on the dark web; 800 million: monthly Instagram users; 40%: VMs in Azure run Linux; 40%: improved GCP network latency from new SDN stack; 50%: fat content of a woolly mammoth;

- Quotable Quotes:

- Sean Parker: And that means that we [Facebook] need to sort of give you a little dopamine hit every once in a while, because someone liked or commented on a photo or a post or whatever. And that's going to get you to contribute more content, and that's going to get you ... more likes and comments

- David Gerard: I spent yesterday afternoon on Twitter and /r/buttcoin, giggling. It was a popcorn overload moment for every acerbic cryptocurrency sceptic who ever thought that immutable, unfixable smart contracts were an obviously stupid idea that would continue to end in tears and massive losses, as they so often had previously.

- @jessfraz: I remember now why I put everything into containers in the first place, it's because all software is 💩

- Amin Vahdat: What we have found running our applications at Google is that latency is as important, or more important, for our applications than relative bandwidth. It is not just latency, but predictable latency at the tail of the distribution. If you have a hundred or a thousand applications talking to one another on some larger task, they are chatty with one another, exchanging small messages, and what they care about is making a request and getting a response back quickly, and doing so across what might be a thousand parallel requests.

- @SteveBellovin: Why anyone with any significant programming experience--and hence experience with bugs--ever liked smart contracts is a mystery to me.

- Neha Bagri: Startups worship the young. But research shows people are most innovative when they’re older

- @manisha72617183: OH: I no longer tolerate complicated programming languages. My mental space is like Silicon Valley; rent is high and space is at a premium

- @atoonk: On days like today, we're yet again reminded that the Internet is held together with duct tape.. #rockSolid #BGP #comcast #outage

- @bradfitz: 0 days since last high impact bug in an experimental programming language on the Ethereum VM affecting millions of dollars.

- TheScientist: The genetic, molecular, and morphological diversity of the brain leads to a functional diversification that is likely necessary for the higher-order cognitive processes that are unique to humans.

- Woods' Theorem: As the complexity of a system increases, the accuracy of any single agent's own model of that system decreases rapidly.

- Carlos E. Perez: The brain performs compensation when it encounters something it does not expect. It learns how to correct itself through perturbative methods. That’s what Deep Learning systems also do, and it’s got nothing to do with calculating probabilities. It’s just a whole bunch of “infinitesimal” incremental adjustments.

- @erickschonfeld: “What can one expect of a few wretched wires?”—telegraph skeptic, 1841

- @ErikVoorhees: The average Bitcoin transaction fee ($10.17) is now more than twice the cost of Bitcoin itself when I first learned of it ($5) in 2011 :(

- LightShadow: StackOverflow should be one of the first internet companies to accept cryptocurrency micro payments. All they'd have to do is skim a small percentage from people tipping each other pennies for good answers

- @lworonowicz: I feel like I killed a family dog - had to decommission an old #Solaris server with uptime of 6519 days.

- Google: Andromeda 2.1 latency improvements come from a form of hypervisor bypass that builds on virtio, the Linux paravirtualization standard for device drivers. Andromeda 2.1 enhancements enable the Compute Engine guest VM and the Andromeda software switch to communicate directly via shared memory network queues, bypassing the hypervisor completely for performance-sensitive per-packet operations.

- iAfrikan News: The first-ever fiber optic cable with a route between the U.S. and India via Brazil and South Africa will soon be a reality. This is according to a joint provisioning agreement entered into by Seaborn Networks ("Seaborn") and IOX Cable Ltd ("IOX").

- @iamdevloper: 1969: -what're you doing with that 2KB of RAM? -sending people to the moon 2017: -what're you doing with that 1.5GB of RAM? -running Slack

- Eric Schmidt: Bob Taylor invented almost everything in one form or another that we use today in the office and at home.

- @ben11kehoe: I am so on board with CRDT-based data stores providing state to FaaS at the edge.

- VMG: “Code is Law” fails again.

- Paul Frazee: In Bitcoin, acceptance of a change is signaled by the miners - once some percent of the miners agree, the change is accepted. This means that hashing power is used as a measure of voting power, and so the political system is essentially plutocratic. How is that significantly better than the board of a publicly traded company?

- gtrubetskoy: Professor Tanenbaum is one of the most respected computer scientists alive, and for Intel to include Minix in their chip and not let him know is kind of unprofessional and not very nice to say the least. That is his only (and quite fair) point.

- jsolson: Both approaches have tradeoffs, although I think even with ENA AWS hits ~70µs typical round-trip-times while GCE gets down to ~40µs. Amazon's largest VMs in some families do advertise higher bandwidth than GCE does currently.

- @brendangregg: AWS put lots of work into optimizing Xen, including net & disk SR-IOV (direct metal access). But their new optimized KVM is even better.

- @wheremattisat: “Facebook and Google are proto-AIs and we are their microbiome. The objective function of those AIs today is to make more money” @timoreilly

- @ossia: "Weeks of programming can save you hours of planning." - Anonymous

- @sallamar: For kicks, we run over 6.2 billion requests a month on lambda (450% yoy) at @ExpediaEng. Still cheaper than renting an apartment for a year.

- @Joab_Jackson: At this point, IBM #openwhisk is the most viable #open source #serverless platform—@ryan_sb @thecloudcastnet #podcast

- Polvi: I think PaaS is dead. That's why you see OpenShift and Cloud Foundry and everyone pivoting to Kubernetes. What's going to happen is PaaS will be reborn as serverless on the other side of the Kubernetes transition.

- mmgutz: We're running our Debian farm on Azure thanks to startup perks. It's been up 100% for us the last 2.5 years. Azure service is no less or better than AWS.

- zzzeek: I switch between multiple versions of MySQL and MariaDB all day long. If you aren't using specific things like MySQL's JSON type or NDB storage engine or expecting CHECK constraints to enforce on MySQL (oddly omitted from this feature comparison!), there is nothing different at all from a developer point of view, beyond the default values of flags which honestly change more between MySQL releases than anything else.

- SEJeff: They [Azure] allow you to have native RDMA[1] for your VMs, something neither amazon or google will give you. As an oldhat Linux/Unix guy, it is somewhat amusing to think of Microsoft's cloud offering as the high perf one, but the facts don't lie. If you have true HPC style workloads such as bioinformatics, oil/natgas exploration, finance, etc, the extra node to node communication bits are necessary. The QDR fabric they have has a native speed of 40 Gbps. It is a shame they don't have FDR (56G) or EDR (100G), but still is quite impressive depending on your app. This also could be a game changer for large MPI jobs.

- johnnycarcin: I've honestly yet to see a customer moving to Azure who has more than 50% Windows based systems. Almost everyone I've worked with only uses Windows Server for their SQL Server services, outside of that it's RHEL, CentOS or Ubuntu.

- lurchedsawyer: So to answer your question as to what is needed for Azure to become a viable alternative to AWS: I would say about 10 years.

- @mjpt777: If Google thinks latency trumps bandwidth then they should look to software before hardware for the main source of latency.

- Ben Kehoe: Like so many things in life, serverless is not an all-or-nothing proposition. It’s a spectrum — and more than that, it has multiple dimensions along which the degree of serverlessness can vary

- zzzeek: If I was doing brand new development somewhere I'm sure I'd use Postgresql, since from a developer point of view it's the most consistent and flexible. While for the last few years I've worked way more with MySQL / MariaDB and at the moment the MySQL side of things is a bit more familiar to me, I still appreciate PG's vastly superior query planner and index features.

- Jdcrunchman: I remember the time when Woz got caught red handed with his box at a payphone by police, he told the cop it was a synthesizer. Because of the power of the blue box, we had the capability of jumping in on others calls during this time. An acquaintance who approached me regarding blue boxes was asking me some suspicious questions, so I tapped his line and learned he was an informant. I also tapped the FBI's line.

- Polvi: We've heard from our customers, if you cross $100,000 a month on AWS, they'll negotiate your bill down. If you cross a million a month, they'll no longer negotiate with you because they know you're so locked that you're not going anywhere. That's the level where we're trying to provide some relief.

- Jdcrunchman: Personally, I think Jobs was a jerk. He treated his programmers like shit but his driving force was really necessary to urge his team to produce.

- @erikcorry: Bitcoin is the answer to the question "What if you could never get banks to reverse a transaction, even if it was accidental or fraudulent?" Ethereum is "What if you could only reverse a transaction by reversing all worldwide transactions that week?"

- @allspaw: The quickest way to get me to stop using (or never use) your product or service? Use the phrase "human error" or "situation(al) awareness" in any of your marketing, blog, product description.

- Coding Horror: Battle Royale is not the game mode we wanted, it's not the game mode we needed, it's the game mode we all deserve. And the best part is, when we're done playing, we can turn it off.

- @gravislizard: almost everything on computers is perceptually slower than it was in 1983

- Parimal Satyal: The web is open by design and built to empower people. This is the web we're breaking and replacing with one that subverts, manipulates and creates new needs and addiction.

- FittedCloud: Machine Learning driven optimization was able to achieve 89% less cost over DynamoDB auto scaling

- Anonymous Coward: If you follow best practices for architecting a Serverless application, then you’ll break down your app into a series of micro services. Each of these has the potential to be ported without impacting the rest of the app. Yes, this takes time, but you balance that (hopefully low) risk against the benefits of agility and delivering services quickly on your chosen platform.

- @milesward: Sure, just lay a few thousand miles of fiber, snag a few atomic clocks, get GPS sorted, and yah, yer cool

- @krinndnz: "the cryptocurrency that's cross-promoting with the new Björk album" sure is a 2017 sentence.

- Dan Garisto: Scale is fascinating. Scientifically it’s a fundamental property of reality. We don’t even think about it. We talk about space and time—and perhaps we puzzle more over the nature of time than we do over the nature of scale or space—but it’s equally mysterious.

- @h0t_max: Game over! We (I and @_markel___ ) have obtained fully functional JTAG for Intel CSME via USB DCI. #intelme #jtag #inteldci

- Robert M. Sapolsky: Testosterone makes people cocky, egocentric, and narcissistic.

- @ewither: The Paradise and Panama papers wouldn't have been possible without the tech to connect the dots. We spoke to the man behind it @emileifrem @neo4j on @ReutersTV

- Daniel C. Dennett: Creationists are not going to find commented code in the inner workings of organisms, and Cartesians are not going to find an immaterial res cogitans “where all the understanding happens"

- John: Hey Marek, don't forget that REUSEPORT has an additional advantage: it can improve packet locality! Packets can avoid being passed around CPUs!

- harlows_monkeys: Block chains are today what microprocessor dryer timers were in the '70s.

- Aphyr: The fact that linearizability only has one unit of atomicity, and serializability has two, suggests that in order to compare them we need to map linearizable operations to serializable transactions. Since transactions operate on multiple keys, our linearizable operations need to operate on multiple keys as well. So instead of a system of linearizable registers, let’s have a single linearizable map, and operations on that map will be transactions composed of multiple reads, writes, etc. From this perspective, linearizability and strict serializability are the same thing.

- Martin Geddes: 5G is making the network far more dynamic, without having the mathematics, models, methods or mechanisms to do the "high-frequency trading". The whole industry is missing a core performance engineering skill: they can do (component) radio engineering, but not complete systems engineering. When you join all the bits, you don't know what you get until you turn it on!

- Tully Foote: We were going to build both state-of-the-art hardware and software, with the goal of being a LAMP stack for robotics: You’d be able to take its open-source software, put your business model on top, and you’d have a startup

- The Memory Guy: The Objective Analysis NVDIMM market model reveals that the market for NVDIMMs is poised to grow at a 105% average annual rate to nearly 12 million units by 2021.

- Polvi: Lambda and serverless is one of the worst forms of proprietary lock-in that we've ever seen in the history of humanity. It's seriously as bad as it gets. It's code that's tied not just to hardware – which we've seen before – but to a data center, you can't even get the hardware yourself. And that hardware is now custom fabbed for the cloud providers with dark fiber that runs all around the world, just for them. So literally the application you write will never get the performance or responsiveness or the ability to be ported somewhere else without having the deployment footprint of Amazon.

- Tilman Wolf: I argue that building systems that require large amounts of brute-force processing for normal operation (i.e., to support benign users performing valid transactions) is not only a terrible waste of resources; it is equivalent to computer programmers and engineers waving the proverbial white flag and surrendering any elegance in system and protocol design to a crude solution of last resort.

- leashless: Hi. I'm Vinay Gupta, the release coordinator for the Ethereum launch. Firstly, nobody believes that Proof of Work is here to stay - it's bleeding money from the currencies that use it at an astonishing rate, and as soon as Proof of Stake (or other algorithms) can replace it, they will. PoW is a direct financial drag on these economies, and it will not last long. Bitcoin will probably take longer to clean up its act than Ethereum, but that's largely for political reasons, not technical ones. Technically, it should be a lot easier to do than Ethereum, in fact. (It helps not being nearly Turing complete.) Secondly, brute force is how things begin. The Unix philosophy has always suggested using brute force first: premature optimization is the root of all evil, as they say. We are at the very earliest stages of designing global public heterogeneous parallel supercomputers, and we should not be surprised that the early approaches are brute force. It won't be that way for long.

- tedivm: Processors that have hyperthreading duplicate the parts of the CPU needed to maintain state, but they don't duplicate the parts that run the actual logic. So if you compare a single core hyperthreaded processor with a dual core processor without hyperthreading the dual core one will be faster. However it gets a bit more complicated than that. Oftentimes processors sit idle while waiting for the rest of the system to catch up- for instance it may have to wait for memory to get loaded from RAM. Processors with hyperthreading see a boost in performance here because they can run the second thread while waiting for the operations needed to run the first thread- thus making these processors about 30% more efficient. So while more cores are definitely better, if you are comparing two processors with the same number of cores and all else is equal the ones with hyperthreading will perform better. One thing to note is that AWS provides vCPUs in pairs, so even though they are giving you the number of threads you can always be sure you're getting individual cores along with them.

- The great migration. Charles-Axel Dein of Uber explains how they tamed their monolith. The great SOA migration. Uber went from a 450,000 line great ball of mud to over 1000 microservices. The 5 year process just finished this year. Since 2012 Uber grew from 10 cities to 600 cities and from 10 engineers to over 2000 today. In 2012 the architecture was simple. One dispatch engine (node.js) would keep track of the position of drivers and riders, and an API (Python) would handle payments, signup, and all the rest. The back-end database was PostgreSQL. One service per 2 engineers may seem too much, but there's a lot of complexity in the system, especially on the driver side. Why split? A large monolith slows down developers. Tests and deploys are slow. Refactoring is slow. It's hard to onboard new engineers. Monoliths suffer from the tragedy of the commons. Nobody really owns anything. When there's an outage who is responsible? You lose a lot of precious time. A monolith is very difficult to scale. It's also a single point of failure. They were running out of db connections, running out of memory on machines. And surprisingly, launching in all these new cities added a lot of new translations, which required a lot of memory. How split? Make a rough plan to know where you are going. Don't move to a distributed monolith. Design teams around the architecture. But don't over plan. Add business monitoring (monitor number of signups per device not CPU), feature flags, and a storage layer. Start with one microservice and one use case. Migrate data and keep it up to date using shadow writes. Now migrate reads by shadowing reads. Compare the new and old systems to make sure there's no error. Reads and writes are now to the new service, so delete the old service. Now migrate clients one by one, take the opportunity to redesign the UI. Uber tests on tenancies. If a trip has been marked as being in test mode, it will behave differently. This gives the benefits of testing in production without the risks. Give teams engineering and end product metrics. It's more important to be resilient than smart. Standardization promotes faster learning.
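The shadow-write and shadow-read steps can be sketched roughly as follows. This is a minimal illustration, not Uber's code: `ShadowMigrator` and the dict-backed stores are hypothetical stand-ins for the monolith's storage and the new microservice.

```python
class ShadowMigrator:
    """Hypothetical wrapper around an old and a new store during a migration."""

    def __init__(self, old_store, new_store):
        self.old = old_store
        self.new = new_store
        self.mismatches = []  # (key, old value, new value) where the stores disagreed

    def write(self, key, value):
        # Shadow write: the old store stays the source of truth,
        # and the new store receives a copy of every write.
        self.old[key] = value
        try:
            self.new[key] = value
        except Exception as exc:  # a failing shadow must not break production
            self.mismatches.append((key, "write failed", repr(exc)))

    def read(self, key):
        # Shadow read: serve the old answer, but compare it with the new one.
        expected = self.old.get(key)
        actual = self.new.get(key)
        if expected != actual:
            self.mismatches.append((key, expected, actual))
        return expected


old, new = {"rider:1": "alice"}, {}
m = ShadowMigrator(old, new)
m.write("rider:2", "bob")
assert m.read("rider:2") == "bob" and not m.mismatches
m.read("rider:1")       # this key was never backfilled into the new store
print(m.mismatches)     # [('rider:1', 'alice', None)]
```

An empty mismatch list over a long enough period is the signal that reads can be switched to the new service; the one mismatch above shows why the data backfill has to come before the comparison means anything.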

- An incredibly detailed review of 21 different microcontrollers. The Amazing $1 Microcontroller by Jay Carlson. This was a lot of work. The test code is available at jaydcarlson/microcontroller-test-code. Conclusion: there is no perfect microcontroller — no magic bullet that will please all users. What I did learn, however, is it’s getting easier and easier to pick up a new architecture you’ve never used before, and there have never been more exciting ecosystems to choose from. If you’re an Arduino hobbyist looking where to go next, I hope you realize there are a ton of great, easy-to-use choices. And for professional developers and hardcore hackers, perhaps there’s an odd-ball architecture you’ve noticed before, but never quite felt like plunging into — now’s the time.

- 10 Lessons from 10 Years of AWS: Embrace Failure; Local State is a Cloud Anti-Pattern: transient state does not belong in a database; Immutable Infrastructure: don't update in-place; Infrastructure as Code: use all of Amazon's complicated templating stuff; Asynchronous Event-Driven Patterns Help You Scale: drive events into Lambda; Don't Forget to Scale the Database: shard it; Measure, Measure, and Measure: set targets and make data driven decisions; Plan for the Worst, Prepare for the Unexpected: release your inner chaos monkey; You Build IT, You Run IT: DevOps; Be Humble, Learn From Others, Make History: then someday you could make your own top 10 list. Also, Keynote: 10 Lessons from 10 Years of EC2 by Chris Schlaeger

- A better way to handle smart contracts? Well, nearly anything is better than what we have now. Worse is better doesn't really fit when hundreds of millions of dollars are involved. A Formal Language for Analyzing Contracts: The author presents a mini-language for lawyers, legal scholars, economists, legal and economic historians, business analysts, accountants, auditors, and others interested in drafting or analyzing contracts. It is intended for computers to read, too. The main purpose of this language is to, as unambiguously and completely and succinctly as possible, specify common contracts or contractual terms. These include financial contracts, liens and other kinds of security, transfer of ownership, performance of online services, and supply chain workflow.

- Videos from the OpenZFS Developer Summit 2017 are now available. Dedupe doesn't have to Suck!

- Sampling is great. It is the only reasonable way to keep high value contextually aware information about your service while still being able to scale to a high volume. Instrumenting High Volume Services: Part 3. Constant Throughput: specify the maximum number of events per time period you want to send for analysis. The algorithm then looks at all the keys detected over the snapshot and gives each key an equal portion of the throughput limit. Average Sample Rate: With this method, we’re starting to get fancier. The goal for this strategy is to achieve a given overall sample rate across all traffic. However, we want to capture more of the infrequent traffic to retain high fidelity visibility. We accomplish both these goals by increasing the sample rate on high volume traffic and decreasing it on low volume traffic such that the overall sample rate remains constant. This gets us the best of both worlds - we catch rare events and still get a good picture of the shape of frequent events. Average Sample Rate with Minimum Per Key: To really mix things up, let’s combine two methods! Since we’re choosing the sample rate for each key dynamically, there’s no reason why we can’t also choose which method we use to determine that sample rate dynamically!
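A rough sketch of the Constant Throughput idea, under stated assumptions: a "sample rate" of N is read as "keep one event in N", and the function names and example keys are hypothetical, not taken from the article's library.

```python
import math
import random


def constant_throughput_rates(counts, max_events):
    """Give each key an equal share of max_events; return a 1-in-N rate per key."""
    if not counts:
        return {}
    share = max_events / len(counts)  # events each key may send per period
    return {key: max(1, math.ceil(n / share)) for key, n in counts.items()}


def keep(rate, rng=random):
    """Keep roughly one event in `rate`."""
    return rng.random() < 1.0 / rate


# Event counts per key seen in the last snapshot (made-up numbers).
counts = {"GET /home 200": 10_000, "POST /pay 500": 5}
rates = constant_throughput_rates(counts, max_events=100)
print(rates)  # {'GET /home 200': 200, 'POST /pay 500': 1}
```

The busy key is sampled at 1 in 200 while every one of the rare errors is kept. This simple version does not hand a rare key's unused share back to the busy keys, so the total sent can fall below the limit.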

- Not all domains are created equal. GetStream.io shares some hard won experience. Stop using .IO Domain Names for Production Traffic: about 20% of DNS resolutions for all .io domains were totally broken...NIC.IO isn’t equipped with the technical support and systems necessary to manage a top-level domain...Searching for .io on HN returns a long list of similar outages...Looking forward, our plan is to add a .org domain and find a DNS provider to manage the nameservers...DNS as a whole is one of those things that most take for granted but can easily cause serious downtime and trouble. Using a widely used TLD like .com/.net/.org is the best and easiest way to ensure reliability

- Excellent discussion. Imagining how blockchains work for applications is not easy. Why does UPS need block chain instead of using proven server database technologies? Isn’t the overhead a lot more inefficient compared to just running distributed servers? killerstorm: I can't say they really need a blockchain, because UPS is big enough to host this database in a central location and give access through API. arbitrary-fan: Looks like it is mostly going to be used for logistics integrity. This would mean the more loosely coupled parts of their business workflow can be more easily audited to identify inefficient (or corrupt) parts. munchbunny: I think the decentralized trust aspect of blockchain is what they're going for - if UPS has to subcontract or if someone else pays UPS, then the "paper trail" is clear and hard to falsify, and whichever entity is handling the package can update the record instead of going through UPS's API. Philluminati: This is where I think Blockchain's biggest test is. It has the potential to be a way to create a standardised database, owner-free, for a specific role. If people see blockchain as simply a drop in replacement for a regular database (like mongo became), and use it that way, it may start to receive negative connotations. killerstorm: No, private blockchains do not use computing power. Their integrity relies on the fact that it's unlikely for a majority of members (individual companies in the network) to become corrupt. This is known as proof-of-authority. Every block is signed by a supermajority of consortium members. If you have only one authority company (i.e. if UPS runs it without involving partners), then it's much weaker. It's still tamper-evident, i.e. if customer got a cryptographic proof from UPS he can then prove that he has original data in court if there's a dispute. But it's tamper-evident rather than tamper-resistant. And it's debatable if it can be called a blockchain. Isvara: Here's what I don't understand: if it's not being used for a crypto currency, who's doing the mining and what's their incentive?
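To make the "tamper-evident rather than tamper-resistant" distinction concrete, here is a minimal hash-chain sketch. It covers only the chaining, not the supermajority signatures of proof-of-authority, and the record fields and helper names are made up for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash, record):
    """Hash a record together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log, record):
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": entry_hash(prev, record)})


def verify(log):
    """Return True only if every entry still matches its stored hash and link."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != entry_hash(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True


log = []
append(log, {"package": "demo-123", "event": "picked up"})
append(log, {"package": "demo-123", "event": "delivered"})
assert verify(log)

log[0]["record"]["event"] = "lost"  # rewrite history in place
assert not verify(log)              # the edit is detected
```

A single operator who controls the whole log could recompute every hash after an edit, which is why the evidence only holds if someone else, such as the customer, kept a copy of an earlier hash.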

- Earth on AWS. Big public spatial datasets hosted at AWS. rburhum: The problem with dealing with datasets of this size is that just the mere collection and storage of it, is a problem of resources. This AWS link here is saying that they have grabbed all these datasets from various govt and non-profits and are hosting them in raw form so you can use them.

- The VM vs Container wars continue they do. My VM is lighter (and safer) than your container: In today’s paper choice the authors investigate the boundaries of Xen-based VM performance. They find and eliminate bottlenecks when launching large numbers of lightweight VMs (both unikernels and minimal Linux VMs). The resulting system is called LightVM and with a minimal unikernel image, it’s possible to boot a VM in 4ms. For comparison, fork/exec on Linux takes approximately 1ms. On the same system, Docker containers start in about 150ms. See sysml/lightvm

- Slack has a very wide API. Over 150 methods. They use OpenAPI 2.0, formerly known as Swagger, to describe and document it. Standard practice. The advantage of using a standard format is you can parse the API spec and generate useful things automatically. You can make the methods available in a GUI. Generate method tests. Format the docs differently. Generate mock servers. You know, stuff we've always done with interface definitions. The spec itself is generated by a script that spiders hundreds of files internally.
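As a small illustration of "parse the spec and generate useful things", the sketch below walks an OpenAPI 2.0 document that has already been loaded into a dict and lists its operations. The spec fragment is a hand-written stand-in, not Slack's published file, and `list_operations` is a hypothetical helper.

```python
# Operation keys allowed on a Swagger 2.0 path item.
HTTP_VERBS = {"get", "put", "post", "delete", "options", "head", "patch"}


def list_operations(spec):
    """Yield (verb, path, summary) for every operation in a Swagger 2.0 dict."""
    for path, item in sorted(spec.get("paths", {}).items()):
        for verb, op in sorted(item.items()):
            if verb in HTTP_VERBS:  # skip path-level keys such as "parameters"
                yield verb.upper(), path, op.get("summary", "")


# Illustrative fragment only; summaries are invented for the example.
spec = {
    "swagger": "2.0",
    "paths": {
        "/chat.postMessage": {"post": {"summary": "Sends a message to a channel."}},
        "/users.list": {"get": {"summary": "Lists all users in a team."}},
    },
}

for verb, path, summary in list_operations(spec):
    print(f"{verb:5} {path:20} {summary}")
```

The same loop is the starting point for the other uses mentioned above: emit one test stub, one doc page, or one mock route per tuple.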

- No, the answer is not move to Azure or AWS! 5 steps to better GCP network performance: Know your tools: iperf, netperf, traceroute; Put instances in the right zones; Choose the right core-count for your networking needs: the more virtual CPUs in a guest, the more networking throughput you get; Use internal over external IPs: achieve max performance by always using the internal IP to communicate. In many cases, the difference in speed can be drastic; Rightsize your TCP window: TCP window sizes on standard GCP VMs are tuned for high-performance throughput.
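The window-sizing tip rests on the bandwidth-delay product: a sender can only fill a path if it is allowed to keep roughly bandwidth times round-trip time in flight. The sketch below is generic TCP arithmetic, not something from the GCP article, and the link speed and RTT figures are assumptions chosen for illustration.

```python
def bdp_bytes(bits_per_second, rtt_microseconds):
    """Bandwidth-delay product: bytes that must be in flight to fill the path."""
    # bits/s * microseconds / 1e6 gives bits; divide by 8 for bytes.
    return bits_per_second * rtt_microseconds // 8_000_000


# Assumed figures, for illustration only.
same_zone = bdp_bytes(10_000_000_000, 40)          # 10 Gbit/s, 40 µs RTT
cross_region = bdp_bytes(10_000_000_000, 100_000)  # 10 Gbit/s, 100 ms RTT
print(same_zone, cross_region)  # 50000 125000000
```

With these numbers a window of about 50 KB is enough inside a zone, while a long-haul path at the same speed would need on the order of 125 MB in flight, which is why window size matters far more between regions than within one.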

- Instagram on Improving performance with background data prefetching: it becomes more and more important that our app can withstand diverse network conditions, a growing variety of devices, and non-traditional usage patterns...Most of the world does not have enough network connectivity...One of our solutions was to add the user’s connection type into our logging events...Offline is a status, not an error...The offline experience is seamless...At Instagram one of our engineering values is “do the simple thing first"...Currently we prefetch on background only once in between sessions. To decide at what time we will do this after you background the app, we compute your average time in between sessions (how often do you access Instagram?) and remove outliers using the standard deviation (to not take into account those times where you might not access the app because you went to sleep if you are a frequent user). We aim to prefetch right before your average time...What other benefits can background prefetching give you beyond breaking the dependency on network availability and reducing cellular data usage? If we reduce the sheer number of requests made, we reduce overall network traffic. And by being able to bundle future requests together, we can save overhead and battery life.
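The scheduling heuristic described there can be sketched in a few lines. This is a guess at the shape of the computation, not Instagram's implementation: the cutoff of one standard deviation (`k=1.0`) and the function name are assumptions, and the timestamps are made up.

```python
from statistics import mean, pstdev


def typical_gap(session_starts, k=1.0):
    """Average time between sessions, ignoring gaps more than k std devs from the mean."""
    gaps = [b - a for a, b in zip(session_starts, session_starts[1:])]
    if not gaps:
        return None  # need at least two sessions
    mu, sigma = mean(gaps), pstdev(gaps)
    kept = [g for g in gaps if abs(g - mu) <= k * sigma] or gaps
    return mean(kept)


# Session start times in minutes; the 480-minute gap is the user sleeping.
starts = [0, 60, 125, 180, 660, 720]
print(typical_gap(starts))  # 60
```

Without the outlier filter the average gap here would be 144 minutes; with it, the app would aim to prefetch a little under an hour after being backgrounded.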

- If you are interested in an overview of all the things GraphQL has to offer, then here's what you need: The GraphQL stack: How everything fits together.

- Why is epoll more performant than select and poll? The method to epoll’s madness (great diagrams!): The cost of select/poll is O(N), which means when N is very large (think of a web server handling tens of thousands of mostly sleepy clients), every time select/poll is called, even if there might only be a small number of events that actually occurred, the kernel still needs to scan every descriptor in the list. Since epoll monitors the underlying file description, every time the open file description becomes ready for I/O, the kernel adds it to the ready list without waiting for a process to call epoll_wait to do this. When a process does call epoll_wait, then at that time the kernel doesn’t have to do any additional work to respond to the call, but instead returns all the information about the ready list it’s been maintaining all along...As a result, the cost of epoll is O(number of events that have occurred) and not O(number of descriptors being monitored) as was the case with select/poll
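A small sketch of the "many sleepy clients, few events" case, using Python's standard `selectors` module, whose `DefaultSelector` is backed by epoll on Linux. Local socket pairs stand in for client connections, which is an assumption made to keep the example self-contained.

```python
import selectors
import socket

sel = selectors.DefaultSelector()  # epoll-backed on Linux

# Register 100 mostly idle "connections"; socketpair() stands in for clients.
pairs = [socket.socketpair() for _ in range(100)]
for i, (server_side, _client_side) in enumerate(pairs):
    server_side.setblocking(False)
    sel.register(server_side, selectors.EVENT_READ, data=i)

# Only two of the clients actually send anything.
pairs[7][1].sendall(b"hello")
pairs[42][1].sendall(b"world")

# select() reports only the ready descriptors, not all 100 registered ones.
ready = sorted(key.data for key, _events in sel.select(timeout=1))
print(ready)  # [7, 42]

for server_side, client_side in pairs:
    sel.unregister(server_side)
    server_side.close()
    client_side.close()
sel.close()
```

The application loop only touches the two ready sockets. With epoll underneath, the kernel side also avoids rescanning the other 98 on each call, which is the O(events) versus O(descriptors) difference described above.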

- Nice tutorial. From Zero to Production with Angular, Firebase, and GitLab CI. Also, What we’ve learned from four years of using Firebase.

- Not utterly convincing, but persuasive. Why native app developers should take a serious look at Flutter: Flutter is an app SDK (backed by Google) to build ‘modern mobile apps...Flutter not only helped me build fast, more importantly, it helped me actually finish. It was that much fun...[compared to React Native] generational leap forward in terms of the ideas it has implemented upon... same code can run on Android and iOS...It is all Dart...The way Flutter works, it needs to create and destroy a large number of short lived objects very fast. Dart’s ‘garbage collection’ is very well suited for such a use case...layouts are defined using Dart code only. There is no XML / templating language. There’s no visual designer/storyboarding tool either...changes you make in code can be hot reloaded instantly...I have been way more productive writing layouts in Flutter (Dart) than either Android/XCode...Flutter is reactive...There will be a listener to track changes in the TextField and the Dropdown values. On change we would be updating these values in the ‘global state’ and tell Flutter to repaint...In complex apps, this style of coding really starts to shine. It is much simpler to maintain and reason about...The architecture in Reactive tends to be more around figuring out how to manage State...Everything is a widget...Composition over Inheritance. It is powerfully applied throughout Flutter’s API and makes it really elegant and simple...Flutter apps compile to Native code, so the performance is as good as it gets.

- Virtual Memory Tricks. You can use virtual memory to allocate application-wide unique IDs, implement memory overwrite protection, reduce memory fragmentation, and build ring buffers.

- How Netflix works: the (hugely simplified) complex stuff that happens every time you hit Play: Hundreds of microservices, or tiny independent programs, work together to make one large Netflix service; Content legally acquired or licensed is converted into a size that fits your screen, and protected from being copied; Servers across the world make a copy of it and store it so that the closest one to you delivers it at max quality and speed; When you select a show, your Netflix app cherry-picks which of these servers it will load the video from; You are now gripped by Frank Underwood’s chilling tactics.

- DigitalOcean has moved much of their infrastructure to Go. Go at DigitalOcean. hardwaresofton: While rust is the safer, and more featureful language, I think Go is quite possibly the better pick for large corporations just due to its simplicity. Rust to me is the more interesting language, but it definitely offers more power and more choice -- they probably did the right thing by going for Go IMO.

- Top 10 Time Series Databases: All of the TSDBs are eventually consistent with the exception of Elasticsearch which tries to be consistent but fails in the true sense of the definition...Databases built from the ground up for time series data are significantly faster than those that sit on top of non purpose built databases like Riak KV, Cassandra and Hadoop...Now let's say you are crazy enough to actually be storing 3 million metrics per second. With DalmatinerDB that's 93TB of disk space needed for a year's worth of data. With Elasticsearch it's just over 2PB. On S3 that would be the difference of $3k per month vs $63k per month and unfortunately SSDs (which is what most time series databases run on) are magnitudes pricier than cloud storage. Now factor in replication and you're in a world of pain...DalmatinerDB is at least 2 - 3 times faster for writes than any other TSDB in the list...The DalmatinerDB storage engine, which was designed around the properties of the ZFS filesystem...DalmatinerDB is based on Riak Core, so you get all of the benefit of the riak command line utilities. The rest in order: InfluxDB; Prometheus; Riak TS; OpenTSDB; KairosDB; Elasticsearch; Druid; Blueflood; Graphite.
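
The storage figures above are easier to compare as bytes per data point. A rough back-of-the-envelope sketch follows, assuming the quoted sizes are decimal terabytes and petabytes, a 365-day year, and exactly one data point per metric per second. The helper name `bytes_per_point` is hypothetical.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: non-leap year
TB = 10**12  # assumption: decimal units
PB = 10**15


def bytes_per_point(total_bytes, points_per_second, seconds=SECONDS_PER_YEAR):
    """Average on-disk bytes per stored data point over the given period."""
    return total_bytes / (points_per_second * seconds)


# Figures quoted in the article: 3 million metrics per second for one year.
dalmatiner = bytes_per_point(93 * TB, 3_000_000)
elastic = bytes_per_point(2 * PB, 3_000_000)

if __name__ == "__main__":
    print(f"DalmatinerDB:  {dalmatiner:.2f} bytes/point")
    print(f"Elasticsearch: {elastic:.2f} bytes/point")
    print(f"ratio:         {elastic / dalmatiner:.1f}x")
```

Under those assumptions the quoted sizes work out to roughly 1 byte per point for DalmatinerDB versus roughly 21 bytes per point for Elasticsearch, which lines up with the roughly 21x gap between the quoted $3k and $63k monthly S3 bills.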

- Great series of articles. How JavaScript works: Deep dive into WebSockets and HTTP/2 with SSE [Server-Sent Events] + how to pick the right path. How to choose between WebSocket and HTTP/2? Say you want to build a Massively Multiplayer Online Game that needs a huge amount of messages from both ends of the connection. In such a case, WebSockets will perform much, much better. In general, use WebSockets whenever you need a truly low-latency, near realtime connection between the client and the server. If your use case requires displaying real-time market news, market data, chat applications, etc., relying on HTTP/2 + SSE will provide you with an efficient bidirectional communication channel while reaping the benefits from staying in the HTTP world.
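
Part of what "staying in the HTTP world" means for SSE is that the stream is plain text served with the `text/event-stream` content type: each event is a few `field: value` lines ending in a blank line, and the client sends anything upstream as ordinary HTTP requests. A minimal sketch of the server-side framing follows. The helper name `format_sse` is hypothetical, not taken from the article or any library.

```python
def format_sse(data, event=None, event_id=None):
    """Frame one Server-Sent Event as text for a text/event-stream response."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")
    if event is not None:
        lines.append(f"event: {event}")
    # A multi-line payload becomes one `data:` line per line of text.
    for chunk in data.split("\n"):
        lines.append(f"data: {chunk}")
    # A blank line terminates the event.
    return "\n".join(lines) + "\n\n"


if __name__ == "__main__":
    print(format_sse('{"symbol": "XYZ", "price": 10.5}', event="quote", event_id=1), end="")
```

A WebSocket, by contrast, upgrades the connection to its own framed binary protocol, which is what buys the lower per-message overhead in both directions.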

- A concise comparison. Cheap Cloud Hosting Price Comparison: DigitalOcean vs. Linode vs. Vultr vs. Amazon Lightsail vs. SSD Nodes vs. Many Others.

- Running Neuroimaging Applications on Amazon Web Services: How, When, and at What Cost? In this paper we describe how to identify neuroimaging workloads that are appropriate for running on AWS, how to benchmark execution time, and how to estimate cost of running on AWS. By benchmarking common neuroimaging applications, we show that cloud computing can be a viable alternative to on-premises hardware. We present guidelines that neuroimaging labs can use to provide a cluster-on-demand type of service that should be familiar to users, and scripts to estimate cost and create such a cluster.

- STELLA Report from the SNAFUcatchers Workshop on Coping With Complexity: A consortium workshop of high end techs reviewed postmortems to better understand how engineers cope with the complexity of anomalies (SNAFU and SNAFU catching episodes) and how to support them. These cases reveal common themes regarding factors that produce resilient performances. The themes that emerge also highlight opportunities to move forward.