Stuff The Internet Says On Scalability For October 5th, 2018

Hey, wake up! It's HighScalability time:

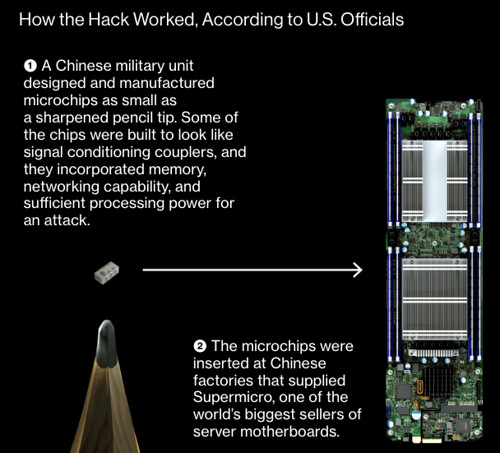

Halloween is early. Do you know what's hiding inside your computer? Probably not. (bloomberg)

Do you like this sort of Stuff? Please support me on Patreon. I'd really appreciate it. Know anyone looking for a simple book explaining the cloud? Then please recommend my well reviewed book: Explain the Cloud Like I'm 10. They'll love it and you'll be their hero forever.

- 127k: lines of code in first version of Photoshop; $15: Amazon's new minimum wage; 100,000: botnet hijacks Brazilian bank traffic; 3,000: miles per gallon efficiency of a bike; 1 billion: Reddit video views per month; 3: imposters found using face rekognition software; 24%: run their cloud database using RDS, DynamoDB, etc; 250+: decentralized exchanges in the world today; $9 billion: Apple charge to make Google default iOS search; $1.63B: EU fine for Facebook breach; 9 million: broken Wikipedia links rescued by Internet Archive; 1 million: people who rely on gig work; 6,531,230,326: Duck Duck Go queries;

- Quotable Quotes:

- DEF CON: A voting tabulator that is currently used in 23 states is vulnerable to be remotely hacked via a network attack. Because the device in question is a high-speed unit designed to process a high volume of ballots for an entire county, hacking just one of these machines could enable an attacker to flip the Electoral College and determine the outcome of a presidential election

- @antirez: "After 20 years as a software engineer, I've started commenting heavily. I used to comment sparingly. What made me change was a combination of reading the SQLite and Redis codebases" <3 false myth: code should be auto-explaining. Comments tell you about the state, not the code.

- @ben11kehoe: Uniquely at AWS, S3 bucket *names* are global despite the buckets themselves being regional, hence creation is routed through us-east-1. I would be surprised to see similar cross-region impacts for other services.

- @jpetazzo: "Windows 95: the best platform to run Prince of Persia... 4.5 millions lines of code, and today we can run it on top of a JavaScript emulator"—@francesc about the evergrowing size of codebases #VelocityConf

- @lizthegrey: and then you have the moment that the monolith doesn't scale. So you go into microservices, and a myriad of different storage systems. These are the problems: (1) one problem becomes many, (2) failures are distributed, (3) it's not clear who's responsible. #VelocityConf

- Tim Bray: I think the CloudEvents committee probably made a mistake when they went with the abstract-event formulation and the notion that it could have multiple representations. Because that’s not how the Internet works. The key RFCs that define the important protocols don’t talk about sending abstract messages back and forth, they describe actual real byte patterns that make up IP packets and HTTP headers and email addresses and DNS messages and message payloads in XML and JSON. And that’s as it should be. ¶

- romwell: The IT 'cowboys' didn't ride into sunset. They were fired by banks which are/were stupid enough to cut corners on people who know things about their infrastructure before migrating to more modern systems.

- ben stopford: The Streaming Way: Broadcast events; Cache shared datasets in the log and make them discoverable; Let users manipulate event streams directly (e.g., with a streaming engine like KSQL); Drive simple microservices or FaaS, or create use-case-specific views in a database of your choice

- phkahler: When you're really good you hide exploits in plain sight.

- specialp: I don't understand what the operational burden is. We literally do nothing to our K8s cluster, and it runs for many months until we make a new updated cluster and blow away the old one. We've never had an issue attributed to K8s in the 2 years we have been running it in production. If we ever did, we'd just again deploy a new cluster in minutes and switch over. Immutable infrastructure.

- arminiusreturns: It isn't just banks either. I'm a greybeard sysadmin type who has seen the insides of hundreds of companies, and for some reason in the late 2000s it seemed like everyone from law firms to insurance companies decided to fire their IT teams and then pay 3x the money for 1/16 the service from contractor/msp-types... and they wonder why they wallow in tech debt... I'd they even knew what it is, they did fire all the people telling them about it after all!

- Ivan Pepelnjak: we usually build oversubscribed leaf-and-spine fabrics. The total amount of leaf-to-spine bandwidth is usually one third of the edge bandwidth. Leaf-and-spine fabrics are thus almost never non-blocking, but they do provide equidistant bandwidth.

- Christine Hauser: Police Use Fitbit Data to Charge 90-Year-Old Man in Stepdaughter’s Killing

- pcwalton: "I've measured goroutine switching time to be ~170 ns on my machine, 10x faster than thread switching time." This is because of the lack of switchto support in the Linux kernel, not because of any fundamental difference between threads and goroutines. A Google engineer had a patch [1] that unfortunately never landed to add this support in 2013. Windows already has this functionality, via UMS. I would like to see Linux push further on this, because kernel support seems like the right way to improve context switching performance.

- @krishnan: I have a different view. Even though edge computing to cloud starts out as a "client - server" kinda model, we will eventually see an evolution towards P2P cloud. There are tons of unused capacity at the edge locations and there is an opportunity to build a more distributed cloud

- zie: For us, for most web stuff, that is mostly stateless, we scale by just starting more instances of the application. For stuff like Postgres, we scale hardware, versus doing something like citus or offloading reads to a hot-standby or whatever. It gets complicated quickly doing that stuff.

- @lindadong: I’m sorry NO. One of the worst parts of my time at Apple was the toxic culture this line of thinking bred. Swearing at and insulting people’s work is not okay and is never helpful. Criticism for criticism’s sake is a power move.

- @danielbryantuk: "Ops loves serverless. We've embraced this mode of working for quite some time with PagerDuty and Datadog There will always be the need for ops (and associated continuous testing). #NoOps is probably as impractical as #NoDev" @sigje #VelocityConf

- @danielbryantuk: "Bringing in containers to your organisation will require new headcount. You will also need someone with existing knowledge that knows where the bodies are buried and the snowflakes live" @alicegoldfuss #velocityconf

- yashap: 100% this. I think people who have not worked directly in marketing dramatically underestimate just how much of marketing at a big company is taking credit for sales that were going to happen anyways. People have performance driven bonuses/promotions/whatever, TRULY generating demand is very hard, taking credit for sales you didn’t drive is WAY easier, and most companies have very feeble checks and balances against this.

- @peteskomoroch: “[AutoML] sells a lot of compute hours so it’s good for the cloud vendors” - @jeremyphoward

- Implicated: I spent 3-4 years deep in the blackhat SEO world - it was my living, and it almost completely was dependent on free subdomains because they ranked _so much better_ than fresh purchased domains. Let's use a real world example. Insert free dynamic dns service here - you create a subdomain on one of their 25-100 domains, provide an IP address for that subdomain and.. whala, spam site. So let's say we've now got spam-site-100.free-dynamic-dns-service.com - it's a record is pointed to my host, I'm serving up super spammy affiliate pages on it. I don't build links to it, that takes too much time and investment... instead I just submit a sitemap to google and move on. That's the short story. The long story is that I built hundreds of thousands of these sub domains for each service of this type I could find, on every one of the domains they made available. Over the course of time it became clear that the performance (measured in google search visitors) was VASTLY different based on the primary domain... to the point that I stopped building for all of them and focused on only a handful of highly performant and profitable domains.

- Lyndsey Scott (model): I have 27418 points on StackOverflow; I'm on the iOS tutorial team for RayWendelich.com; I'm the lead iOS software engineer for @RallyBound, the 841st fastest growing company in the US according to @incmagazine

- @jpetazzo: "Containers are processes,born from tarballs, anchored to namespaces, controlled by cgroups" 💯 @alicegoldfuss #VelocityConf

- @BenZvan: If engineers were paid based on how well they document things they still wouldn't write documentation @rakyll #velocityconf

- @tleam: @rakyll getting into one of the most critical issues with scaling systems: scaling the knowledge of the systems beyond the initial creators. The bus factor is real and it can be *very* difficult to avoid. Documentation isn't really an answer. #VelocityConf

- @jschauma: You know, back in my day we didn’t call it “chaos engineering” but “committing code”; we didn’t need a “chaos day”, we called it Monday. #velocityconf

- hardwaresofton: I absolutely love Hetzner -- they're pricing is near unbeatable. To be a bit more precise, I believe that they offer cut rate pricing (which is not a bad thing if you're the consumer) but not cut-rate service -- there is just enough for a DIYer to be very productive and cost effective. This gets even easier if you use Hetzner Cloud directly, and they've got fantastic prices for beefy machines there too -- while a t2.micro on AWS is ~$10/month on hetzner cloud CX51 with 8 vcores and 32GB of RAM & and 250GB SSD with 20TB of traffic allowed is 29.90 gbp.

- halbritt: Scaling applications in k8s, updating, and keeping configs consistent are a great deal easier for me than using Ansible or any other config management tool. that (on top of k8s). As such, a developer can spin up a new environment with the click of a button, deploy whatever code they like, scale the environment, etc. with very little to no training. Those capabilities were a tremendous accelerator for my organization.

- @bridgetnomnom: How do you run your first Chaos Day? 1. Know what your critical systems are 2. Plan to monitor cost of down time 3. Try it on new products first 4. Stream it internally 5. Pick a good physical location 6. Invite the right people for the test #VelocityConf @tammybutow

- @stevesi: What are characteristics creating opportunity:• Cloud distribution, mobile usage • Articulated problem/soln • Solves problem right away • Network and/or viral component • Bundled solns bloated and horizontal (old tools do too much/not enough) • Min. Infrastruct Reqs

- @johncutlefish: The fix? Visualize the work. Measure lead times. Blameless retrospectives. Psyc safety. An awareness of the non-linear nature of debt and drag. Listen! And deeply challenge the notion that technical debt can be artfully managed. Maybe? Very, very hard (13/13) #leanagile #devops

- @lizthegrey: She set out to reduce outage frequency by 20% but instead had a 10x reduction in outage frequency in first 3 months by simulating and practicing until she got the big wins. 0 Sev0 incidents for 12 months after the 3 month period. #VelocityConf

- @stillinbeta: Systems at Dropbox were so reliable new oncall engineers were almost never paged - chaos engineering provided much-needed practise! #VelocityConf

- @chimeracoder: Hacker News: "I could build that in a weekend."

-

Yeah, but could you then operate it for the rest of your life? #velocityconf #velocity

- Mark Harris: Swildens' research uncovered several patents and books that seemed to pre-date the Waymo patent. He then spent $6,000 of his own money to launch a formal challenge to 936. Waymo fought back, making dozens of filings, bringing expert witnesses to bear, and attempting to re-write several of the patent's claims and diagrams to safeguard its survival.

- SEJeff: I'm a sysadmin who manages thousands of bare metal machines (A touch less than 10,000 Linux boxes). We have gotten to a point in some areas where you can't linearly scale out the app operations teams by hiring more employees so we started looking at container orchestration systems some time ago (I started working on Mesos about 3 years ago before Kubernetes was any good). As a result, I got to understand the ecosystem and set of tools / methodology fairly well. Kelsey Hightower convinced me to switch from Mesos to Kubernetes in the hallway at the Monitorama conference a few years back. Best possible decision I could have made in hindsight. Kubernetes can't run all of our applications, but it solves a huge class of problems we were having in other areas. Simply moving from a large set of statically provisioned services to simple service discovery is life changing for a lot of teams. Especially when they're struggling to accurately change 200 configs when a node a critical service was running has a cpu fault and panics + reboots. Could we have done this without kubernetes? Sure, but we wanted to just get the teams thinking about better ways to solve their problems that involved automation vs more manual messing around. Centralized logging? Already have that. Failure of an Auth system? No different than without Kubernetes, you can use sssd to cache LDAP / Kerberos locally. Missing logs? No different than without kubernetes, etc. For us, Kubernetes solves a LOT of our headaches. We can come up with a nice templated "pattern" for continuous delivery of a service and give that template to less technical teams, who find it wonderful. Oh, and we run it bare metal on premise. It wasn't a decision we took lightly, but having used k8s in production for about 9 months, it was the right one for us.

- Demis Hassabis (DeepMind founder): I would actually be very pessimistic about the world if something like AI wasn't coming down the road. The reason I say that is that if you look at the challenges that confront society: climate change, sustainability, mass inequality — which is getting worse — diseases, and healthcare, we're not making progress anywhere near fast enough in any of these areas. Either we need an exponential improvement in human behavior — less selfishness, less short-termism, more collaboration, more generosity — or we need an exponential improvement in technology. If you look at current geopolitics, I don't think we're going to be getting an exponential improvement in human behavior any time soon. That's why we need a quantum leap in technology like AI.

- Nitro is an improved custom built hypervisor that optimizes the entire AWS stack. The Nitro Project: Next-Generation EC2 Infrastructure. Excellent introduction, including a great explanation of what virtualization means at the instruction level. It's really a tour of how the hypervisor has evolved to support different instance types over time. The theme is offloading and customization. AWS does a lot of custom hardware that has moved the software hypervisor completely into hardware, which is now why you can now run securely on bare metal. They've built custom Nitro cards for storage, networking, management, monitoring, and security; plus a Nitro Security Chip built directly into the motherboard that provides physical security for the chip. X1 is a monster of instance type that has 2TB of RAM and 128 vcpus. It introduced a hardware networking device specifically for EC2 using a custom Nitro ASIC that created the elastic network adapter. For the I3 instance type a change was made to support NVMe. When you're talking to an NVMe drive on I3 storage you're really talking directly to the underlying hardware using the Nitro ASIC. Now that instance and EBS storage has been offloaded and networking has been offloaded, what's next? All the other software: the virtual machine monitor and management partition. The goal is to make all those resources available to customers. There's no need anymore to have a full blow traditional hypervisor in the stack. This led the C5 instance type and the full Nitro architecture. In the Nitro architecture there is no management partition anymore because they aren't running software device models on the x86 processors anymore. You have access to all the underlying CPUs and have access to nearly all memory on the platform. They based the new hypervisor on kvm and it consists of just the virtual machine monitor. It's called a "quiescent hypervisor" because it only runs when you're trying to do something on behalf of the instance. This led to the i3.metal instance. By adding a Nitro security processor they were able to implement security entirely in hardware which allows accessing the bare metal directly without going through a hypervisor. They will continue with an incremental stream of improvements based on customer feedback.

- A big list of references for transaction processing in NewSQL systems. Transaction Processing in NewSQL.

- Tim Berners-Lee has a Solid commercial venture to redecentralisation the web. How? Your data is stored in a Solid POD. You give people and your apps permission to read or write to parts of a pod. POD stands for personal online data store. What is pod? Hard to say. There's very little information on what they are. The site says a pod is "like secure USB sticks for the Web, that you can access from anywhere." What does that mean? No idea. Data appears to be stored as Linked Data that's accessed via the library RDFLIB.js. What's the storage capacity? Latency? Scalability? No idea. There's a Solid server written in Javascript based on node.js. There's a confusing example Yo app. Is this a good thing? Hard to tell with so few details. One take on it is How solid is Tim’s plan to redecentralize the web? which is concerned "ill-equipped to tackle the challenges of the data ownership space and deliver impact." The concerns are: the assumption that's there is a clear ownership of data; lack of clear value proposition; not broad enough in vision. Will it work? Hypertext was well developed long before the web won the day. So as unlikely as Solid appears to be, you can't count Tim out.

- Looking for a hosting alternative? Lot's of Hetzner love in this thread. Hetzner removes traffic limitation for dedicated servers (hetzner.de). Cheap, good infrastructure, good service, and now no traffic limits.

- Why not just provision a VM and configure your web app as a service? Because Kubernetes has solutions for all of the problems with that type of solution. Kubernetes: The Surprisingly Affordable Platform for Personal Projects: Kubernetes makes sense for small projects and you can have your own Kubernetes cluster today for as little as $5 a month...Kubernetes is Reliable...Kubernetes is No Harder to Learn than the Alternatives...Kubernetes is Open Source...Kubernetes Scales...we can have a 3 node Kubernetes cluster for the same price as a single Digital Ocean machine. There's a great step-by-step set of instructions on how to setup a web site on k8s on Google. wpietri: Personally, as somebody who is building a small Kubernetes cluster right now at home just for the fun of it: I think using Kubernetes for small projects is mostly a bad idea. So I appreciate the author warning people so they don't get misled by all the (justified) buzz around it. sklivvz1971: The list of things mentioned in the article to do and learn for a simple, personal project with k8s is absolutely staggering, in my opinion. We've tried to adopt k8s 3-4 times at work and every single time productivity dropped significantly without having significant benefits over normal provisioning of machines. mhink: From the application-developer side, I'd dispute this. I was told to use Docker + Kubernetes for a relatively small work project recently, and I was able to go from "very little knowledge of either piece of tech" to "I can design, build, and deploy my app without assistance" in about 1 week. Also, Kubernetes for personal projects? No thanks!, Unbabel migrated to Kubernetes and you won’t believe what happened next!

- How much it Costs to Run a Mass Emailing Platform built using AWS Lambda? $49.44/year for 4 Billion emails per year. On EC2 the cost was $198.72.

- Scalability is all about automation. Thinking in state machines is a powerful way to implement automation. eBay uses state machines to good effect in their Unicorn—Rheos Remediation Center. Unicorn includes a centralized place to manage operation jobs, the ability to handle alerts more efficiently, and the ability to store alert and remediation history for further analysis. It's event driven and consists of several tiers, but the heart of it is a event driven workflow engine. There are state machines to handle problems like disk sector error flows and Kafka partition reassign flow.

- Users are a priority, not a product, says Ben Thompson in Data Factories. Yes, users are a priority in the same way addicts are a priority to drug dealers. The dealer does everything they can to keep their users addicted. Priority does not mean in anyway their interests are aligned. As long as the pusher can keep users hooked the money will flow, which is exactly why the user really is a socially engineered product for sale up the value chain.

- 355E3B: I’ve listed what I commonly do for most web apps below I’m responsible for: Look for monotonicity: Plot the following: requests, CPU, Memory, Response Time, GC Free, GC Used. If you see sections of the graph which just keep going up with no stops, or sudden harsh drops, you may have a resource leak of some kind...Slow Queries: A lack of indexes or improperly written queries can be a major slowdown. Depending on your database EXPLAIN, will be able to guide you in the right direction...Cache things: If there are slow or expensive operations on your app, try to see if you can cache them...Deal with bad users: Do you track which users or IPs are making requests which always fail? Can you block them via something like fail2ban?...Fail early: Check access, validate parameters and ensure the request is valid before doing anything expensive.

- Notes from ICFP 2018 (The International Conference on Functional Programming).

- Yes, infrastructure does matter. Cisco Webex meltdown caused by script that nuked its host VMs. An automated script deleted the virtual machines hosting the service. This can happen. It's the machine equivalent of rm -rf *. The issue is why did it take so long to restore? lordgilman: I use Cisco WebEx Teams (formerly Cisco Spark) at work so I’ve been able to see the disaster first hand. The entire chat service was hard down for the first 24 hours with no ETA of resolution. When the service did come back up it still had small outages during business hours all week. Chatroom history is slowly being restored and many chatrooms are entirely glitched, unable to receive new messages or display old ones. Can you imagine the internet meltdown that would happen if Slack had this kind of outage? I feel bad for the ops employee: not only did they kill all the servers but they’ve almost certainly killed the business prospects of this giant, corporate, multi-year program with hundreds of contributors.

- Brief, but excellent. A Brief History of High Availability. Covers Active-Passive, Five 9s, Sharding, Active-Active, Multi-Active Availability, Multi-Active Availability, and Active-Active vs. Multi-Active.

- The Cloudcast #365 - Taking Spinnaker for a Spin. If you were afraid of looking at Spinnaker as cloud your control plane because it's Netflix only then be not afraid. A lot of companies use and contribute to Spinnaker. You won't be alone. The main point of the talk is Spinnaker is opening up its governance model as way to attract more users. What is it? A continuous delivery and infrastructure management platform. It solves the problem of how to move this asset (binary, container, image, etc) from your local environment to production as fast and reliably and securely as possible. It's essentially a pipeline tool. For example, Jenkins runs CI and would trigger a pipeline in Spinnaker to move the asset into AWS (or whever). It bakes it into a VM, deploy it into a test environment, and then use an a selectable apporach to put into production into the configured reasons. Stages can be added as part of the delivery process. It's pluggable so k8s is supported as are many other extensions like GCP and AppEngine, Azure, Oracle cloud, Pivital, DCoS, OpenStack, and webhooks.

- Don't want to use cloudfront? There is another. 99.9% uptime static site deployment with Cloudflare and AWS S3.

- What do people in cloud not think about? What happens in AWS when US-EAST-1 fails? There's no problem because AWS is diverse, right? Not unless you are paying for it. In the last hurricane everyone has all their stuff in Virginia and the next thing you know a hurricane was barrelling down on Virginia and everyone tried to move everything to Oregon. They couldn't. With that much data the links to Oregon were swamped. Weekly Show 409: The 100G Data Center And Other Roundtable Topics. Another big problem is once diverse circuits over time can be made and less diverse as carriers make changes.

- Disasters are hard; plan accordingly. Disaster Recovery Considerations in AWS: AWS regions have separate control planes...if us-west-2 has an issue, and your plan is to fail over to us-east-1, you should probably be aware that more or less everyone has similar plans...AWS regions can take sudden inrush of new traffic very well; they learned these lessons back in the 2012 era. That said, you can expect provisioning times to be delayed significantly...if you're going to need to sustain a sudden influx of traffic in a new region you're going to want those instances spun up and waiting

- Don't see many sharding articles these days. This is a good one. Sharding writes with MySQL and increment offsets: we shard writes and avoid conflicts using an increment offset on PKs; each data center contains local write servers; we use multi-source replication on read servers so they contain all the data from all data centers, partitioned by month. Also, Scaling PostgreSQL using Connection Poolers and Load Balancers for an Enterprise Grade environment.

- A lot of good practical details with code. Rate limiting for distributed systems with Redis and Lua: we implemented the token bucket and the leaky bucket algorithms in Redis scripts in Lua.

- What happens when you try for single code base nirvana? Good things. Porting a 75,000 line native iOS app to Flutter: the Flutter port will have half the lines of code of the native iOS original...I hadn’t realised what a huge proportion of my time was spent writing UI related code and futzing around with storyboards and auto layout constraints...I ended up following Eric’s advice and using an architecture pattern called Lifting State Up which is a first step along the way to Redux...there are many areas in which the ported Flutter app is faster than the native iOS app...the kicker [with Dart] for me is that I can implement functionality much faster (and strangely, more enjoyably) than I have in a long time...The Flutter UI is virtually indistinguishable from native Android and native iOS UIs...Because of the way Flutter UIs are constructed I’m able to build new functionality in Flutter more quickly than in native code...The code base shared between the iOS and Android versions of the app is currently greater than 90%.

- noufalibrahim: I worked at the Archive for a few years remotely. It permanently altered my view of the tech. world. Here are the notable differences. I think these would apply to several non-profits but this is my experience 1. There was no rush to pick the latest technologies. Tried and tested was much better than new and shiny. Archive.org was mostly old PHP and shell scripts (atleast the parts I worked on). 2. The software was just a necessity. The data was what was valuable. Archive.org itself had tons of kluges and several crude bits of code to keep it going but the aim was the keep the data secure and it did that. Someone (maybe Brewster himself) likened it to a ship traveling through time. Several repairs with limited resources have permanently scarred the ship but the cargo is safe and pristine. When it finally arrives, the ship itself will be dismantled or might just crumble but the cargo will be there for the future. 3. Everything was super simple. Some of the techniques to run things etc. were absurdly simple and purposely so to help keep the thing manageable. Storage formats were straightforward so that even if a hard disk from the archive were found in a landfill a century from now, the contents would be usable (unlike if it were some kind of complex filesystem across multiple disks). 4. Brewster, and consequently the crew, were all dedicated to protecting the user. e.g. https://blog.archive.org/2011/01/04/brewster-kahle-receives-.... There was code and stuff in place to not even accidentally collect data so that even if everything was confiscated, the user identities would be safe. 5. There was a mission. A serious social mission. Not just, "make money" or "build cool stuff" or anything. There was a buzz that made you feel like you were playing your role in mankinds intellectual history. That's an amazing feeling that I've never been able to replicate. Archive.org is truly only of the most underappreciated corners of the world wide web. Gives me faith in the positive potential of the internet.

- An Introduction to Probabilistic Programming: This document is designed to be a first-year graduate-level introduction to probabilistic programming. It not only provides a thorough background for anyone wishing to use a probabilistic programming system, but also introduces the techniques needed to design and build these systems. It is aimed at people who have an undergraduate-level understanding of either or, ideally, both probabilistic machine learning and programming languages.

- Stateless Load-Aware Load Balancing in P4: This paper presents SHELL, a stateless application-aware load-balancer combining (i) a power-of-choices scheme using IPv6 Segment Routing to dispatch new flows to a suitable application instance from among multiple candidates, and (ii) the use of a covert channel to record/report which flow was assigned to which candidate in a stateless fashion. In addition, consistent hashing versioning is used to ensure that connections are maintained to the correct application instance, using Segment Routing to “browse” through the history when needed.

- DEF CON 26 Voting Village: As was the case last year, the number and severity of vulnerabilities discovered on voting equipment still used throughout the United States today was staggering. Among the dozens of vulnerabilities found in the voting equipment tested at DEF CON, all of which (aside from the WINVote) are used in the United States today, the Voting Village found: