Prismatic Update: Machine Learning on Documents and Users

In an update to Prismatic Architecture - Using Machine Learning on Social Networks to Figure Out What You Should Read on the Web, Jason Wolfe, even in the face of deadening fatigue from long nights spent getting their iPhone app out, has gallantly agreed to talk a little more about Prismatic's approach to Machine Learning.

Documents and users are two areas where Prismatic applies ML (machine learning):

ML on Documents

- Given an HTML document:

- learn how to extract the main text of the page (rather than the sidebar, footer, comments, etc), its title, author, best images, etc

- determine features for relevance (e.g., what the article is about, topics, etc.)

- The setup for most of these tasks is pretty typical. Models are trained by big batch jobs on other machines that read data from S3 and save the learned parameter files back to S3. The ingest pipeline then reads (and periodically refreshes) the models from S3.

- All of the data that flows out of the system can be fed back into this pipeline, which helps learn more about what's interesting, and learn from mistakes over time.

- From a software engineering perspective, one of the most interesting frameworks Prismatic has written is the 'flop' library. It implements state-of-the-art ML training and inference code that looks very similar to nice, ordinary Clojure code, but compiles (using the magic of macros) down to low-level array manipulation loops, which are as close to the metal as you can get in Java without resorting to JNI.

- The code can be an order of magnitude more compact than the corresponding Java, and easier to read, while executing at basically the same speed.

- A lot of effort has gone into creating a fast running story clustering component.
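
The train-in-batch, read-and-refresh pattern described above can be sketched roughly as follows. This is a minimal illustration in Python, not Prismatic's code (theirs is Clojure). The `RefreshingModel` class, the `load_params` callable, and the linear scoring are all assumptions made for the example; in a real pipeline `load_params` would fetch the latest parameter file from S3.

```python
import time
from typing import Callable, Dict

Params = Dict[str, float]


class RefreshingModel:
    """Holds model parameters and reloads them once they are older than ttl_seconds."""

    def __init__(self, load_params: Callable[[], Params], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        # load_params is hypothetical: it stands in for "download the learned
        # parameter file that the batch job saved to S3".
        self._load_params = load_params
        self._ttl = ttl_seconds
        self._clock = clock
        self._params = load_params()
        self._loaded_at = clock()

    def params(self) -> Params:
        # Refresh lazily: reload only when the cached copy has expired.
        if self._clock() - self._loaded_at >= self._ttl:
            self._params = self._load_params()
            self._loaded_at = self._clock()
        return self._params

    def score(self, features: Dict[str, float]) -> float:
        # Assumed linear model: dot product of weights and feature values.
        p = self.params()
        return sum(p.get(name, 0.0) * value for name, value in features.items())
```

The ingest pipeline would keep one such object per model and call `score` on each incoming document, so a new batch-trained parameter file is picked up without restarting the pipeline.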

ML on Users

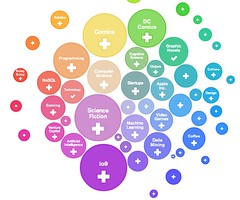

- Guess what users are interested in from social network data and refine these guesses using explicit signals within the app (+/remove).

- The problem of using explicit signals is interesting because user inputs should be reflected in their feeds very quickly. If a user removes 5 articles from a given publisher in a row, stop showing them articles from that publisher right now, not tomorrow. This means there isn't time to run another batch job over all the users. The solution is online learning: immediately update the model of a user with each observation they provide.

- The raw stream of user interaction events is saved. This allows rerunning the user interest ML over the raw events later, in case any data is lost, for example when a machine goes down and the slightly loose write-back caches on this data drop some updates. Drift in the online learning can then be corrected and more accurate models computed.
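
To make the online-learning idea concrete, here is a small Python sketch under stated assumptions. It uses logistic regression with one stochastic gradient step per event, which is a common choice for online learning but is not confirmed to be what Prismatic uses. The `OnlineUserModel` class, the feature strings such as `publisher:example`, and the `replay` helper are all hypothetical names for illustration.

```python
import math
from typing import Dict, Iterable, List, Tuple

# One interaction: (features of the article, 1 for "+" or 0 for "remove").
Event = Tuple[List[str], int]


class OnlineUserModel:
    """Per-user interest model updated immediately after every explicit signal."""

    def __init__(self, learning_rate: float = 0.5):
        self.weights: Dict[str, float] = {}
        self.learning_rate = learning_rate

    def predict(self, features: List[str]) -> float:
        # Probability that the user is interested in an article with these features.
        z = sum(self.weights.get(f, 0.0) for f in features)
        return 1.0 / (1.0 + math.exp(-z))

    def update(self, features: List[str], label: int) -> None:
        # One gradient step on the log loss for this single observation.
        error = label - self.predict(features)
        for f in features:
            self.weights[f] = self.weights.get(f, 0.0) + self.learning_rate * error


def replay(events: Iterable[Event], learning_rate: float = 0.5) -> OnlineUserModel:
    """Rebuild a user's model from the saved raw event stream."""
    model = OnlineUserModel(learning_rate)
    for features, label in events:
        model.update(features, label)
    return model
```

Usage might look like this: each removal lowers the predicted interest in that publisher right away, and replaying the saved events reproduces the same model if the live copy is lost.

```python
live = OnlineUserModel()
log = []
for _ in range(5):
    event = (["publisher:example"], 0)  # user removes an article
    live.update(*event)
    log.append(event)  # raw events are saved alongside the live update

rebuilt = replay(log)
print(live.predict(["publisher:example"]), rebuilt.weights == live.weights)
```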