How Wistia Handles Millions of Requests Per Hour and Processes Rich Video Analytics

This is a guest repost from Christophe Limpalair of his interview with Max Schnur, Web Developer at Wistia.

Wistia is a video hosting platform for businesses. It offers video analytics such as heatmaps, and it lets you add features like calls to action. I was really interested in learning how all the different components work and how they’re able to stream so much video content, so that’s what this episode focuses on.

What does Wistia’s stack look like?

As you will see, Wistia is made up of different parts. Here are some of the technologies powering these different parts:

- HAProxy

- Nginx

- MySQL (sharded)

- Ruby on Rails

- Unicorn (some services run on Puma)

- NSQ (they wrote its Ruby client library)

- Redis (caching)

- Sidekiq for async jobs

What scale are you running at?

Wistia has three main parts to it:

- The Wistia app (The ‘Hub’ where users log in and interact with the app)

- The Distillery (stats processing)

- The Bakery (transcoding and serving)

Here are some of their stats:

- 1.5 million player loads per hour (loading a page with one player on it counts as one load; a page with two players counts as two, and so on)

- 18.8 million peak requests per hour to their Fastly CDN

- 740,000 app requests per hour

- 12,500 videos transcoded per hour

- 150,000 plays per hour

- 8 million stats pings per hour
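For a sense of what these hourly figures mean at any given moment, here is a quick conversion to average per-second rates. It is plain arithmetic on the numbers above; actual per-second peaks within an hour can be higher than these averages.

```python
# Hourly figures quoted above; dividing by 3,600 gives average per-second rates.
HOURLY_STATS = {
    "player loads": 1_500_000,
    "peak CDN requests (Fastly)": 18_800_000,
    "app requests": 740_000,
    "videos transcoded": 12_500,
    "plays": 150_000,
    "stats pings": 8_000_000,
}

SECONDS_PER_HOUR = 3600


def per_second(hourly: int) -> float:
    """Average rate per second for a count measured over one hour."""
    return hourly / SECONDS_PER_HOUR


for name, hourly in HOURLY_STATS.items():
    print(f"{name:>28}: {per_second(hourly):8.1f}/s")
```

That works out to roughly 5,200 requests per second at the CDN during the peak hour and about 2,200 stats pings per second, versus only around 3.5 new transcodes per second.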

They are running on Rackspace.

What challenges come from receiving videos, processing them, then serving them?

- They want to balance quality and deliverability, which has two sides to it:

  - Encoding derivatives of the original video

  - Knowing when to play which derivative

Derivatives, in this context, are different versions of the same video. Having versions at different qualities matters because the lower-quality ones have smaller file sizes, and those smaller files can be served to users with less bandwidth, who would otherwise have to constantly buffer. Have enough bandwidth? Great! You can enjoy the higher quality (larger) files.

Having these different versions and knowing when to play which version is crucial for a good user experience.
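To make the derivative idea concrete, here is a minimal sketch of a bandwidth-based choice: keep the highest-bitrate derivative that fits within a fraction of the measured bandwidth. The ladder, the bitrates, and the 80% headroom factor are assumptions for illustration, not Wistia's actual settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Derivative:
    name: str
    height: int        # vertical resolution in pixels
    bitrate_kbps: int  # average video bitrate


# Hypothetical ladder for illustration; not Wistia's actual derivative set.
LADDER = [
    Derivative("224p", 224, 250),
    Derivative("360p", 360, 600),
    Derivative("540p", 540, 1200),
    Derivative("720p", 720, 2500),
]


def pick_derivative(bandwidth_kbps: float, headroom: float = 0.8) -> Derivative:
    """Return the highest-bitrate derivative that fits in a fraction of the
    measured bandwidth, falling back to the smallest one if nothing fits."""
    budget = bandwidth_kbps * headroom
    fitting = [d for d in LADDER if d.bitrate_kbps <= budget]
    if not fitting:
        return min(LADDER, key=lambda d: d.bitrate_kbps)
    return max(fitting, key=lambda d: d.bitrate_kbps)
```

With 1,000 kbps of measured bandwidth, the 800 kbps budget selects the 360p derivative; with very little bandwidth the function falls back to the smallest file rather than refusing to play.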

- Lots of I/O. When you have users uploading videos at the same time, you end up having to move many heavy files across clusters. A lot of things can go wrong here.

- Volatility. There is volatility in both the number of requests and the number of videos to process. They need to be able to sustain these changes.

- Of course, serving videos is also a major challenge. Thankfully, CDNs have different kinds of configurations to help with this.

- On the client side, there are a ton of different browsers, device sizes, etc. If you've ever had to make websites responsive or make them work on older IE versions, you know exactly what we're talking about here.

How do you handle big changes in the number of video uploads?

They have boxes which they call Primes. These Prime boxes are in charge of receiving files from user uploads.

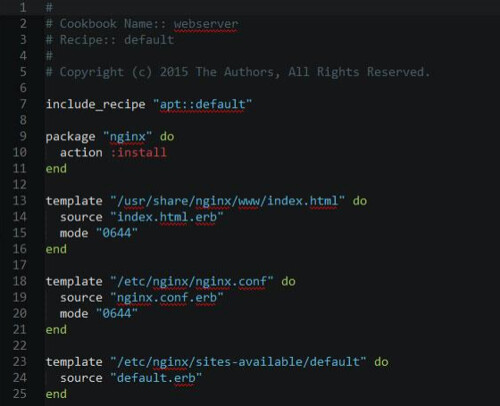

If uploads start eating up all the available disk space, they can just spin up new Primes using Chef recipes. It's a manual job right now, but they don't usually get anywhere close to the limit. They have lots of space.
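Since adding Primes is a manual decision, the trigger is essentially a disk-space check. The sketch below shows one such check using Python's `shutil.disk_usage`; the 20% threshold and the function names are hypothetical, and the actual provisioning step (the Chef recipes) is not shown.

```python
import shutil

# Hypothetical threshold: flag the box when less than 20% of its disk is free.
MIN_FREE_FRACTION = 0.20


def free_fraction(total_bytes: int, free_bytes: int) -> float:
    """Fraction of the disk that is still free."""
    return free_bytes / total_bytes


def needs_more_primes(path: str = ".", min_free: float = MIN_FREE_FRACTION) -> bool:
    """Check the filesystem holding `path` and report whether free space
    has dropped below the threshold (a cue for an operator to add a Prime)."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return free_fraction(usage.total, usage.free) < min_free
```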

What transcoding system do you use?

The transcoding part is what they call the Bakery.

The Bakery is made up of the Primes we just looked at, which receive and serve files, plus a cluster of workers. Workers process tasks and create derivative files from uploaded videos.

Beefy systems are required for this part, because it's very resource intensive. How beefy?

We’re talking about several hundred workers. Each worker usually performs two tasks at one time. They all have 8 GB of RAM and a pretty powerful CPU.
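Each worker running two tasks at a time amounts to a pool with two slots. Here is a minimal sketch using a thread pool capped at two concurrent jobs; `transcode` is a hypothetical stand-in for the real job. In practice the heavy lifting would happen in an external encoder process, so threads waiting on it would not be held back by Python's GIL.

```python
import time
from concurrent.futures import ThreadPoolExecutor

TASKS_PER_WORKER = 2  # each worker usually runs two transcoding tasks at once


def transcode(task_id: int) -> str:
    """Hypothetical stand-in for a real transcoding job."""
    time.sleep(0.01)  # pretend to do heavy work
    return f"task {task_id} done"


def run_worker(task_ids: list[int]) -> list[str]:
    """Process queued tasks, never running more than TASKS_PER_WORKER at once.
    Results come back in the same order the tasks were submitted."""
    with ThreadPoolExecutor(max_workers=TASKS_PER_WORKER) as pool:
        return list(pool.map(transcode, task_ids))
```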

What kinds of tasks and processing go on with workers? They primarily encode video with x264, a fast H.264 encoder. Videos can usually be encoded in about half to a third of the video's running time.

Videos must also be resized and they need different bitrate versions.

In addition, there are different encoding profiles for different devices, like HLS for iPhones. These encodings also need to be duplicated as Flash derivatives, which are x264 encoded as well.
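The combination of resolutions, bitrates, and per-device profiles multiplies quickly. This sketch only builds (does not run) ffmpeg command lines for a hypothetical three-rung ladder across an MP4 profile, an HLS profile, and a Flash (FLV) profile, all using `libx264` for video. The ladder, file names, and segment length are assumptions; the source does not say which tools Wistia's workers invoke.

```python
import shlex

# Hypothetical ladder of (height in pixels, video bitrate in kbps).
LADDER = [(360, 600), (540, 1200), (720, 2500)]

# Container-specific output options per hypothetical profile.
PROFILES = {
    "mp4": ["-f", "mp4"],
    "hls": ["-f", "hls", "-hls_time", "6", "-hls_playlist_type", "vod"],
    "flv": ["-f", "flv"],  # Flash derivative, still x264 video inside
}
EXTENSIONS = {"mp4": "mp4", "hls": "m3u8", "flv": "flv"}


def derivative_commands(src: str) -> list[list[str]]:
    """Build one ffmpeg command per (profile, rung) pair. Nothing is executed."""
    commands = []
    for profile, container_opts in PROFILES.items():
        for height, kbps in LADDER:
            out = f"{profile}_{height}p.{EXTENSIONS[profile]}"
            commands.append([
                "ffmpeg", "-i", src,
                "-c:v", "libx264", "-b:v", f"{kbps}k",
                "-vf", f"scale=-2:{height}",  # keep aspect ratio, even width
                "-c:a", "aac",
                *container_opts, out,
            ])
    return commands


for cmd in derivative_commands("upload.mov")[:2]:
    print(shlex.join(cmd))
```

Three rungs times three profiles already gives nine encodes per upload, which gives a sense of why this part of the system needs so many workers.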

What does the whole process of uploading a video and transcoding it look like?

Once a user uploads a video, it is queued and sent to workers for transcoding, and then slowly transferred over to S3.

Instead of sending a video to S3 right away, it is pushed to Primes in the clusters so that customers can serve videos right away. Then, over a matter of a few hours, the file is pushed to S3 for permanent storage and cleared from Prime to make space.
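The serve-from-Prime-first, move-to-S3-later flow can be sketched as a periodic sweep. The three-hour grace period, the `PrimeFile` record, and the injected `upload_to_s3` callable are all hypothetical; the source only says files move to S3 over a matter of a few hours.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Callable

GRACE_PERIOD = timedelta(hours=3)  # hypothetical; the source says "a few hours"


@dataclass
class PrimeFile:
    key: str
    stored_at: datetime
    on_prime: bool = True
    in_s3: bool = False


def migrate_due_files(
    files: list[PrimeFile],
    now: datetime,
    upload_to_s3: Callable[[str], None],
) -> list[str]:
    """Push files that have sat on the Prime longer than the grace period to S3,
    then mark their local copies as cleared. Returns the migrated keys."""
    migrated = []
    for f in files:
        if f.on_prime and now - f.stored_at >= GRACE_PERIOD:
            upload_to_s3(f.key)  # if this raises, the local copy is kept
            f.in_s3 = True
            f.on_prime = False   # clear only after the upload succeeded
            migrated.append(f.key)
    return migrated
```

Clearing the local copy only after the upload call returns means a failed upload leaves the file on the Prime, where it can still be served.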

How do you serve the video to a customer after it is processed?

When you request a video hosted on Wistia, you make a request to embed.wistia.com/video-url, which actually hits a CDN (they use a few different ones; I just tried it and mine went through Akamai). When the CDN doesn't have the file cached, it goes back to the origin, which is the Primes we just talked about.

Primes run HAProxy with a special balancer called Breadroute. Breadroute is a routing layer that sits in front of Primes and balances traffic.

Wistia might have the file stored locally in the cluster (which is faster to serve), and Breadroute is smart enough to know that. If so, Nginx serves the file directly from the file system. Otherwise, they proxy the request to S3.
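Breadroute's core decision can be illustrated as a lookup: if some Prime in the cluster holds the file, send the request there for Nginx to serve from disk; otherwise proxy to S3. The index structure, Prime names, and return values below are hypothetical, and a real balancer would also spread load across the Primes that hold a copy.

```python
# Hypothetical index: file key -> Primes in the cluster holding a local copy.
LOCAL_INDEX: dict[str, list[str]] = {
    "abc123.mp4": ["prime-01", "prime-03"],
}


def route(file_key: str, index: dict[str, list[str]] = LOCAL_INDEX) -> tuple[str, str]:
    """Return (backend, how_to_serve) for a request that reached the origin."""
    primes = index.get(file_key)
    if primes:
        # Served by Nginx straight from that Prime's file system.
        # A real balancer would spread load across all holders.
        return primes[0], "nginx-local"
    # Not in the cluster: proxy the request to S3.
    return "s3", "proxy-s3"
```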

How can you tell which video quality to load?

That's primarily decided on the client side.

Wistia will receive data about the user's bandwidth immediately after they hit play. Before the user even hits play, Wistia receives data about the device, the size of the video embed, and other heuristics to determine the best asset for that particular request.

Whether to switch to HD is only decided when the user goes into full screen. This lets Wistia avoid interrupting playback when a user first clicks play.
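Here is a rough sketch of the two-stage decision described above: before play, pick a non-HD asset from the device type and embed width; only on entering full screen, consider HD using the bandwidth measured during playback. The asset widths and the 3,000 kbps HD threshold are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    width: int          # frame width in pixels
    is_hd: bool = False


# Hypothetical asset set; only the last one counts as HD here.
ASSETS = [Asset("360p", 640), Asset("540p", 960), Asset("720p", 1280, is_hd=True)]

HD_MIN_KBPS = 3000  # hypothetical bandwidth needed before offering HD


def initial_asset(embed_width: int, is_mobile: bool) -> Asset:
    """Choose a starting asset before play from device and embed size.
    HD is never chosen at this stage."""
    non_hd = [a for a in ASSETS if not a.is_hd]
    if is_mobile:
        return non_hd[0]
    # Smallest non-HD asset at least as wide as the embed, else the largest non-HD one.
    wide_enough = [a for a in non_hd if a.width >= embed_width]
    return min(wide_enough, key=lambda a: a.width) if wide_enough else non_hd[-1]


def on_fullscreen(current: Asset, bandwidth_kbps: float) -> Asset:
    """Only on entering full screen is HD considered, using measured bandwidth."""
    hd = next(a for a in ASSETS if a.is_hd)
    return hd if bandwidth_kbps >= HD_MIN_KBPS else current
```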

How do you know when videos don't load? How do you monitor that?

They have a service they call pipedream, which they use within their app and within embed codes to constantly send data back.

When a user clicks play, they get information about the size of their window, where it was, and whether the video buffers (that is, if it's in a playing state but the playback time hasn't changed after a few seconds).
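The buffering rule (playing, but playback time unchanged for a few seconds) translates directly into a check over sampled player state. This is a minimal sketch; the sample format and the 3-second threshold standing in for "a few seconds" are assumptions.

```python
from dataclasses import dataclass

STALL_SECONDS = 3.0  # hypothetical value for "a few seconds"


@dataclass
class Sample:
    wall_time: float  # seconds since the page loaded
    state: str        # player state, e.g. "playing" or "paused"
    position: float   # current playback time in seconds


def is_buffering(samples: list[Sample], stall_seconds: float = STALL_SECONDS) -> bool:
    """True if the player stayed in the playing state while its playback
    position did not move for at least `stall_seconds` of wall-clock time."""
    stalled_since = None
    prev = None
    for s in samples:
        stuck = (
            prev is not None
            and prev.state == "playing"
            and s.state == "playing"
            and s.position == prev.position
        )
        if stuck:
            if stalled_since is None:
                stalled_since = prev.wall_time
            if s.wall_time - stalled_since >= stall_seconds:
                return True
        else:
            stalled_since = None  # playback moved (or paused): reset the timer
        prev = s
    return False
```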

A known problem with videos is slow load times. Wistia wanted to know when a video was slow to load, so they tried tracking metrics for that. Unfortunately, they could only tell that a load was slow if the user actually waited around for it to finish. Users with really slow connections might just leave before that happens.

The answer to this problem? Heartbeats. If they receive heartbeats and they never receive a play, then the user probably bailed.
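The heartbeat idea reduces to a simple classification per viewing session: heartbeats with no play means the viewer probably bailed. In this sketch, the event names and category labels are hypothetical.

```python
from collections import Counter


def classify_session(events: list[str]) -> str:
    """Classify one viewing session from the names of the events it sent."""
    if "play" in events:
        return "played"
    if "heartbeat" in events:
        # The page kept sending heartbeats, but playback never started.
        return "probably_bailed"
    return "no_data"


def summarize(sessions: dict[str, list[str]]) -> Counter:
    """Count sessions per category across all viewers."""
    return Counter(classify_session(events) for events in sessions.values())
```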

What other analytics do you collect?

They also collect analytics for customers.

These include plays, pauses, seeks, and conversions (entering an email or clicking a call to action). Some of these are shown in heatmaps. They also aggregate those heatmaps into graphs that show engagement, such as the parts of a video that people watched and rewatched.
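An engagement graph like this can be built by counting how many times each second of the video was watched, so rewatched sections stand out. The sketch assumes watch data arrives as (start, end) spans in seconds; that format is an assumption, not something the source describes.

```python
import math


def engagement(duration_s: int, segments: list[tuple[float, float]]) -> list[int]:
    """Count how many times each whole second of a video was watched.
    Each segment is a (start, end) span in seconds; overlapping spans from
    rewatches add up, and any partially watched second counts as watched."""
    counts = [0] * duration_s
    for start, end in segments:
        first = max(0, math.floor(start))
        last = min(duration_s, math.ceil(end))
        for second in range(first, last):
            counts[second] += 1
    return counts
```

For example, one full view of a 10-second video plus a rewatch of seconds 3 to 6 yields a count of 2 for seconds 3, 4, and 5 and 1 everywhere else.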

How do you get such detailed data?

When the video is playing, they use a video tracker, an object bound to play, pause, and seek events. This tracker collects events into a data structure. Once every 10-20 seconds, it pings back to the Distillery, which in turn figures out how to turn that data into heatmaps.
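A tracker that collects events and pings periodically is essentially a batching buffer. The sketch below is in Python for consistency with the other examples, although the real tracker runs in the browser; the 15-second interval (within the 10-20 second range above), the event shape, and the `send` callable are assumptions.

```python
import time
from typing import Callable


class VideoTracker:
    """Buffers player events and sends them in one ping per interval.
    The interval, event shape, and `send` callable are hypothetical."""

    def __init__(
        self,
        send: Callable[[list[dict]], None],
        interval_s: float = 15.0,
        clock: Callable[[], float] = time.monotonic,
    ):
        self.send = send
        self.interval_s = interval_s
        self.clock = clock
        self.buffer: list[dict] = []
        self.last_flush = clock()

    def record(self, kind: str, position: float) -> None:
        """Called from the play, pause, and seek handlers."""
        self.buffer.append({"event": kind, "position": position})
        if self.clock() - self.last_flush >= self.interval_s:
            self.flush()

    def flush(self) -> None:
        """Send everything buffered so far as one batch and start a new one."""
        if self.buffer:
            self.send(self.buffer)
            self.buffer = []
        self.last_flush = self.clock()
```

This version flushes when a new event arrives after the interval has elapsed; a browser implementation would more likely also flush on a timer so that quiet stretches of playback still produce a ping.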

Why doesn't everyone do this? I asked him that, because I don't even get this kind of data from YouTube. The reason, Max says, is that it's pretty intensive: the Distillery processes a ton of data and has a huge database.

What is scaling ease of use?

Wistia has about 60 employees. Once you start scaling up your customer base, it can be really hard to keep customer support good.

Their highest touch points are playback and embeds. These have a lot of factors that are out of their control, so they must make a choice:

- Don't help customers

- Help customers, but it takes a lot of time

Those options weren't good enough. Instead, they went after the source of the most customer support issues: embeds.

Embeds have gone through multiple iterations. Why? Because they often broke. Whether it was WordPress doing something weird to an embed, or Markdown breaking the embed, Wistia has had a lot of issues with this over time. To solve this problem, they ended up dramatically simplifying embed codes.

These simpler embed codes run into fewer problems on the customer’s end. While this adds complexity behind the scenes, it results in fewer support tickets.

This is what Max means by scaling ease of use. Make it easier for customers, so that they don't have to contact customer support as often. Even if that means more engineering challenges, it's worth it to them.

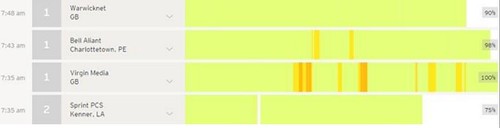

Another example of scaling ease of use is playback. Max is really interested in implementing a kind of client-side CDN load balancer that determines the lowest-latency server to serve content from (similar to what Netflix does).
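A client-side CDN picker could time a small probe against each candidate and choose the fastest. In this sketch the probes are injected callables (in a real player each would fetch a small object from one CDN), and the CDN names are hypothetical. Taking the median of a few attempts keeps a single slow outlier from skewing the choice.

```python
import statistics
import time
from typing import Callable


def measure(probe: Callable[[], None], attempts: int = 3) -> float:
    """Median wall-clock time in seconds for running `probe`."""
    timings = []
    for _ in range(attempts):
        start = time.perf_counter()
        probe()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def pick_fastest(probes: dict[str, Callable[[], None]], attempts: int = 3) -> str:
    """Return the name of the CDN with the lowest median probe latency."""
    return min(probes, key=lambda name: measure(probes[name], attempts))
```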

What projects are you working on right now?

Something Max is planning on starting soon is what they're calling the upload server. This upload server is going to give them the ability to do a lot of cool stuff around uploads.

As we discussed, and as with most upload services, you have to wait for the file to get to a server before you can start transcoding and doing things with it.

The upload server will make it possible to transcode while uploading. This means customers could start playing their video before it's even completely uploaded to Wistia's system. They could also get the still capture and embed almost immediately.
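Conceptually, transcoding while uploading means the transcoder consumes chunks as they arrive instead of waiting for the complete file. This producer/consumer sketch uses a queue and a thread, with a hypothetical stand-in for the actual encoding step. It deliberately ignores container details that make this hard in practice; for example, an MP4 file may keep its index at the end, which a real upload server would have to handle.

```python
import queue
import threading


def upload(chunks: list[bytes], q: queue.Queue) -> None:
    """Simulated uploader: hands each chunk over as soon as it arrives."""
    for chunk in chunks:
        q.put(chunk)
    q.put(None)  # sentinel: upload finished


def transcode_stream(q: queue.Queue, processed: list[int]) -> None:
    """Consumes chunks as they arrive instead of waiting for the whole file."""
    while (chunk := q.get()) is not None:
        processed.append(len(chunk))  # hypothetical stand-in for encoding this chunk


def run(chunks: list[bytes]) -> list[int]:
    """Run uploader and transcoder concurrently; return per-chunk results."""
    q: queue.Queue = queue.Queue()
    processed: list[int] = []
    consumer = threading.Thread(target=transcode_stream, args=(q, processed))
    consumer.start()
    upload(chunks, q)
    consumer.join()
    return processed
```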

Conclusion

I have to try and fit as much information in this summary as possible without making it too long. This means I cut out some information that could really help you out one day. I highly recommend you view the full interview if you found this interesting, as there are more hidden gems.

You can watch it, read it, or even listen to it. There's also an option to download the MP3 so you can listen on your way to work. Of course, the show is also on iTunes and Stitcher.

Thanks for reading, and please let me know if you have any feedback! - Christophe Limpalair (@ScaleYourCode)