Appknox Architecture - Making the Switch from AWS to the Google Cloud

This is a guest post by dhilipsiva, Full-Stack & DevOps Engineer at Appknox.

Appknox helps detect and fix security loopholes in mobile applications. Securing your app is as simple as submitting your store link. We upload your app, scan for security vulnerabilities, and report the results.

What's notable about our stack:

- Modular Design. We took modularity so far that we decoupled our front-end from our back-end. This architecture has many advantages that we'll talk about later in the post.

- Switch from AWS to Google Cloud. We made our code largely vendor-independent, so we were able to switch from AWS to Google Cloud easily.

Primary Languages

- Python & Shell for the Back-end

- CoffeeScript and LESS for Front-end

Our Stack

- Django

- Postgres (Migrated from MySQL)

- RabbitMQ

- Celery

- Redis

- Memcached

- Varnish

- Nginx

- Ember

- Google Compute

- Google Cloud Storage

Architecture

How does it work?

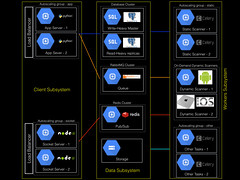

Our back-end architecture consists of 3 subsystems: Client, Data and Workers.

Client Subsystem

The client subsystem consists of two kinds of load-balanced, auto-scaling servers: App Servers and Socket Servers. This is where all user interactions take place. We took great care to avoid blocking calls here to keep latency as low as possible.

App Server: Each App server is a single Compute unit running Nginx and a Django-gunicorn server, managed by supervisord. User requests are served here. When a user submits the URL of their app, we put it on the RabbitMQ download queue and immediately let the user know that the URL has been submitted. When a user uploads an app instead, the browser fetches a signed URL from the server, uploads the data directly to S3 with that signed URL, and notifies the app server when it is done.
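The signed-URL idea can be sketched roughly as follows. This is a generic HMAC illustration, not the actual S3 or Google Cloud Storage signing algorithm, and every name in it (`SECRET_KEY`, `sign_upload_url`, `is_valid_upload`, the example host) is hypothetical. It only shows why the browser can upload directly without the app server handling the file: the URL itself carries an expiry time and a signature that the storage side can verify.

```python
import hashlib
import hmac
import time
from urllib.parse import urlencode

SECRET_KEY = b"demo-secret"  # hypothetical; a real key would come from configuration


def sign_upload_url(base_url, object_name, expires_at, key=SECRET_KEY):
    """Return an upload URL that carries an expiry time and an HMAC signature."""
    payload = f"PUT\n{object_name}\n{expires_at}".encode()
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    query = urlencode({"expires": expires_at, "signature": signature})
    return f"{base_url}/{object_name}?{query}"


def is_valid_upload(object_name, expires_at, signature, now=None, key=SECRET_KEY):
    """Check the signature and the expiry, as the storage side would."""
    now = time.time() if now is None else now
    payload = f"PUT\n{object_name}\n{expires_at}".encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, signature) and now < expires_at
```

A URL signed for one object name is rejected for any other name, and for any request that arrives after `expires_at`.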

Socket server: Each socket server is a single Compute unit running Nginx and a Node (socket-io) server. This server uses Redis as its adapter. And yes, of course, this is used for real-time updates.

Data subsystem

This subsystem is used for data storage, queuing and pub/sub. It is also what makes the decoupled architecture possible.

Database Cluster: We use Postgres. It goes without saying that it consists of a write-heavy master and a few read-heavy replicas.

RabbitMQ: The broker for our Celery workers. We have different queues for different workers, mainly download, validate, upload, analyse, report, mail and bot. The web server puts data into a queue, and the Celery workers pick it up and run it.
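One way to picture the one-queue-per-worker-type layout is a routing table. The sketch below uses plain Python and is shaped like Celery's `task_routes` setting (task name mapped to routing options). The queue names come from the list above; the `sherlock.tasks.*` task names and the `queue_for` helper are hypothetical.

```python
# Queue names come from the post; the task names are hypothetical.
QUEUES = ("download", "validate", "upload", "analyse", "report", "mail", "bot")

# Shaped like Celery's `task_routes` setting: task name -> routing options.
TASK_ROUTES = {f"sherlock.tasks.{name}": {"queue": name} for name in QUEUES}


def queue_for(task_name, routes=TASK_ROUTES, default="celery"):
    """Return the queue a task would be published to.

    Unrouted tasks fall back to "celery", the name of Celery's default queue.
    """
    return routes.get(task_name, {}).get("queue", default)
```

With a table like this, each worker group only needs to consume from the queues it is responsible for, so scanners and mailers can scale separately.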

Redis: This acts as the adapter for the socket-io servers. Whenever we want to notify a user of an update from any of our workers, we publish it to Redis, which in turn notifies all users through Socket.IO.
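The publishing side of this can be sketched in a few lines of Python. This is an illustration under assumptions, not the production code: the channel name, the payload fields and `notify_user` are hypothetical, and the real socket-io Redis adapter uses its own wire format that is not reproduced here. `FakePublisher` stands in for a Redis client; the redis-py client exposes a `publish(channel, message)` method with the same shape.

```python
import json


class FakePublisher:
    """Stand-in for a Redis client; records messages instead of sending them."""

    def __init__(self):
        self.sent = []

    def publish(self, channel, message):
        self.sent.append((channel, message))
        return 1


def notify_user(publisher, user_id, event, data, channel="notifications"):
    """Serialize one update as JSON, publish it, and return the message."""
    # Hypothetical payload layout: who the update is for, what happened, details.
    message = json.dumps(
        {"user_id": user_id, "event": event, "data": data}, sort_keys=True
    )
    publisher.publish(channel, message)
    return message
```

Because the worker only needs an object with a `publish` method, it never has to know which socket server, if any, the user is connected to.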

Worker Subsystem

This is where all the heavy lifting is done. All the workers get tasks from RabbitMQ and publish updates to users through Redis.

Static Scanners: This is an auto-scaling Compute unit group. Each unit consists of 4-5 Celery workers. Each Celery worker scans a single app at a time.

Other tasks: This is an auto-scaling Compute unit group. Each unit consists of 4-5 Celery workers, which do various tasks like downloading apps from stores, generating report PDFs, uploading report PDFs, sending emails, etc.

Dynamic Scanning: This is platform-specific. Each Android dynamic scanner is an on-demand Compute instance that has an Android emulator (with SDKs) and a script that captures data. This emulator is shown on a canvas in the browser for the user to interact with. Each iOS scanner is in a managed Mac Mini farm that has scripts and simulators supporting the iOS platform.

Reasons for Choosing the Stack

We chose Python because the primary libraries that we use to scan applications are in Python. Also, we love Python more than any other language that we know.

We chose Django because it embraces modularity.

Ember - We think that this is the most awesome front-end framework out there. Yes, the learning curve is steeper than any other, but once you climb that steep mountain, you will absolutely love Ember. It is very opinionated, so as long as you stick to its conventions, you write less to do more.

Postgres - Originally, we chose MySQL because it was the de facto choice. After Oracle purchased Sun Microsystems (the owner of MySQL), MySQL became stagnant. I guess we all expected it. So we decided to use MariaDB (a fork of MySQL maintained by the community). Later, we needed persistent key-value storage in a few places, which Postgres offers out of the box. It plays really well with Python. We use UUIDs as primary keys, and UUID is a native data type in Postgres. Also, the uuid-ossp module provides functions to generate and manipulate UUIDs at the database level, rather than creating them at the application level, which was costlier. So we switched to Postgres.

And the rest are de-facto. RabbitMQ for Task Queues. Celery for Task Management. Redis for Pub/Sub. Memcached & Varnish for caching.

Things that Didn't Go as Expected

One of the things that didn't go as expected is scaling sockets. We were using Django-socket.io initially. We realized that this couldn't be scaled to multiple servers, so we rewrote that part as a separate Node module. We used Node's socket-io library, which supports a Redis adapter. Clients are connected to the Node socket server. So we now publish to Redis from our Python code, and Node just pushes the notifications to clients. This can be scaled independently of the app server, which acts as a JSON endpoint to the clients.

Notable Stuff About Our Stack

We love modular design. We took modularity so far that we decoupled our front-end from our back-end. Yes, you read that right. All the HTML, CoffeeScript and LESS code is developed independently of the back-end. Front-end development does not require the server to be running. We rely on front-end fixtures for fake data during development.

Our back-end is named Sherlock. We detect security vulnerabilities in mobile applications. So the name seemed apt. Sherlock is smart.

And our front-end is named Irene. Remember Irene Adler? She is beautiful, colorful and tells our users what's wrong.

And our Admin is named Hudson. Remember Mrs. Hudson? Sherlock's landlady? Come to think of it, we should probably have given a role to poor Dr. Watson. Maybe we will.

So Sherlock does not serve any HTML/CSS/JS files. I repeat, it does not serve a single static file or HTML file. Sherlock and Irene are developed independently. Each has its own deployment process and its own test cases. We deploy Sherlock to Compute instances and we deploy Irene to Google Cloud Storage.

The advantages of such an architecture are:

- The front-end team can work independently of the back-end team without stepping on each other's toes.

- Heavy lifting like rendering pages is taken off the server.

- We can open-source the front-end code, which makes it easy to hire front-end guys: just ask them to fix a bug in the repo and they are hired. After all, front-end code can be read by anyone even if you don't open-source it, right?

Our Deployment Process

The code is auto-deployed from the master branch. We follow Vincent Driessen's Git branching model. Jenkins builds commits to the develop branch. If the build succeeds, we do another round of manual testing, just to be sure, then merge it into the master branch, and it gets auto-deployed.

We initially used AWS. We decided to switch to Google Cloud for 3 reasons.

- We liked the `Project`-based approach for managing resources for different applications. It made accessing infrastructure more pragmatic. It also made identifying instances easier, which matters given the complexity of our `Dynamic Scanning` feature.

- It had awesome documentation, and we got private 1:1 help from Google engineers when we were stuck.

- We received some significant Google credits, which help us cut costs at this early stage.

I always stayed away from special services offered by IaaS providers. For instance, we did not use Amazon RDS or SQS. We configured our own DB servers, RabbitMQ and Redis instances. The reasons for doing so are that those services were comparatively slower (and costlier), and that relying on them makes your product vendor-dependent. We abstracted all of these to be vendor-independent. The one thing that we forgot to abstract was storage.

We consumed S3 directly, which was a small hiccup when we tried to migrate to Google Cloud. So when we decided to migrate to Google Storage, we abstracted the storage layer and followed the Google Storage migration docs. And everything worked just fine. Now the code base can be hosted on both Google Cloud and AWS with no code change. Of course, you will have to change the configuration, but not the code.
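An abstracted storage layer along these lines might look like the sketch below. It is an assumption-laden illustration rather than Sherlock's actual code: `Storage`, `InMemoryStorage`, `BACKENDS`, `storage_from_config` and the `STORAGE_BACKEND` key are all hypothetical names, and the in-memory backend stands in for real S3 or Google Cloud Storage clients.

```python
from abc import ABC, abstractmethod


class Storage(ABC):
    """Minimal interface the rest of the code base would depend on."""

    @abstractmethod
    def save(self, name, data):
        """Store `data` (bytes) under `name`."""

    @abstractmethod
    def load(self, name):
        """Return the bytes stored under `name`."""


class InMemoryStorage(Storage):
    """Test double; a real backend would wrap the S3 or Google Cloud Storage client."""

    def __init__(self):
        self._objects = {}

    def save(self, name, data):
        self._objects[name] = bytes(data)

    def load(self, name):
        return self._objects[name]


# Hypothetical registry; "s3" and "gcs" backends would be registered here too.
BACKENDS = {"memory": InMemoryStorage}


def storage_from_config(config):
    """Pick the backend named in configuration, so switching vendors is a config change."""
    return BACKENDS[config["STORAGE_BACKEND"]]()
```

Application code calls only `save` and `load`, so moving between providers means registering another backend and changing one configuration value.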