Machine Learning Driven Programming: A New Programming for a New World

If Google were created from scratch today, much of it would be learned, not coded. Around 10% of Google's 25,000 developers are proficient in ML; it should be 100% -- Jeff Dean

Like the weather, everybody complains about programming, but nobody does anything about it. That’s changing, and like an unexpected storm, the change comes from an unexpected direction: Machine Learning / Deep Learning.

I know, you are tired of hearing about Deep Learning. Who isn’t by now? But programming has been stuck in a rut for a very long time and it's time we do something about it.

Lots of silly little programming wars continue to be fought that decide nothing. Functions vs objects; this language vs that language; this public cloud vs that public cloud vs this private cloud vs that ‘fill in the blank’; REST vs unrest; this byte level encoding vs some different one; this framework vs that framework; this methodology vs that methodology; bare metal vs containers vs VMs vs unikernels; monoliths vs microservices vs nanoservices; eventually consistent vs transactional; mutable vs immutable; DevOps vs NoOps vs SysOps; scale-up vs scale-out; centralized vs decentralized; single threaded vs massively parallel; sync vs async. And so on ad infinitum.

It’s all pretty much the same shite, different day. We are just creating different ways of calling functions that we humans still have to write. The real power would be in getting a machine to write the functions. And that’s what Machine Learning can do: write functions for us. Machine Learning just might be some different kind of shite for a different day.

Machine Learning Driven Programming

I was first introduced to Deep Learning as a means of programming in a talk Jeff Dean gave. I wrote an article on the talk: Jeff Dean On Large-Scale Deep Learning At Google. Definitely read the article for a good intro to Deep Learning. The takeaway from the talk, at least for this topic, is how Deep Learning can effectively replace human coded programs:

What Google has learned is they can take a whole bunch of subsystems, some of which may be machine learned, and replace them with a much more general end-to-end machine learning piece. When you have lots of complicated subsystems there’s usually a lot of complicated code to stitch them all together. Google likes that you can replace all that with data and very simple algorithms.

Machine Learning as an Agile Tool for Software Engineering

Peter Norvig, Research Director at Google, has a whole talk on this subject: Deep Learning and Understandability versus Software Engineering and Verification. Here’s the overall idea:

Software. You can think of software as creating a specification for a function and implementing a function that meets that specification.

Machine Learning. Given some example (x,y) pairs, you try to guess the function that takes x as an argument and produces y as a result, and that generalizes well to novel values of x.

Deep Learning. You are still given example (x,y) pairs, but it’s a kind of learning where the representations you form have several levels of abstraction rather than a direct mapping from input to output. The learned function should again generalize well to novel values of x.

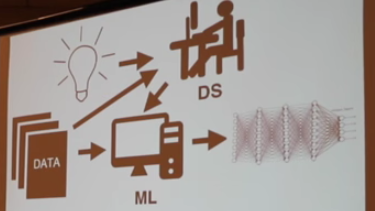

The software engineer is out of the loop. Instead, data flows into a machine learning component and a representation flows out.

The representation doesn’t look like code. This new kind of program is not a human-understandable functional decomposition; it looks like a pile of parameters.
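To make the idea concrete, here is a minimal sketch of learning a function from example (x,y) pairs. It is an illustration only, not anything shown in the talk: the example data (Celsius and Fahrenheit readings), the choice of a straight-line model, and the names `fit_line` and `learned` are all assumptions made for this sketch.

```python
# Hypothetical example data: the only "specification" is a handful of (x, y) pairs.
examples = [(0, 32.0), (10, 50.0), (20, 68.0), (30, 86.0), (100, 212.0)]


def fit_line(pairs):
    """Closed-form least squares fit of y = w * x + b."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var_x = sum((x - mean_x) ** 2 for x, _ in pairs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b


# The learned "program" is nothing more than these two numbers.
w, b = fit_line(examples)


def learned(x):
    """Apply the learned parameters to a value of x not seen in the examples."""
    return w * x + b


print("parameters:", (w, b))
print("learned(37) =", learned(37))
```

Nobody wrote the conversion formula by hand. The examples determined the parameters, and the parameters are the program. A deep network works the same way in principle, only with vastly more parameters and several layers of intermediate representation.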

In Machine Learning Driven Programming (MLDP), there can still be a human in the loop; we just won’t call them a programmer anymore. They are more like Data Scientists.

- Can we learn parts of programs from examples? Yes.
  - The example is given of a 2000+ line open source spelling program that still doesn’t get some stuff right. A 17 line Naive Bayes classifier version of the program has about the same performance, with a 100x reduction in the amount of code, and gives better answers.
  - Another example is AlphaGo where the structure of the program is written by hand and all the parameters are learned.
- Can we learn entire programs just from examples? Yes, for short programs. No, for big traditional programs (yet).
  - In Jeff Dean’s talk he goes into some detail on Sequence to Sequence Learning with Neural Networks. Using this approach it was possible to build a state of the art machine translation program from scratch, a full end-to-end learned system, without a bunch of hand coded or machine learned models for sub pieces of the problem.
  - Another example is learning how to play Atari games, most of which the machine can play at a human level or better. If there’s a long term planning component the performance is not as good.
  - Neural Turing Machines are an attempt at learning how to program big programs, but Peter doesn’t think it will go very far.

As you might expect from Mr. Norvig, it’s a great and visionary talk that is well worth watching.
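For a feel of what the spelling example mentioned above is getting at, here is a minimal sketch of a data-driven corrector, in the spirit of the short Python spelling corrector Norvig has published. It is not the 17 line program from the talk. The tiny `CORPUS` is a stand-in invented for this sketch (a real corrector would count words from a large body of text), and the simple rule of preferring known words at the smallest edit distance is a simplifying assumption.

```python
import re
from collections import Counter

# Tiny stand-in corpus (an assumption for illustration only).
CORPUS = """
the quick brown fox jumps over the lazy dog
spelling is hard and correct spelling takes practice
the machine learns the spelling of words from the data
"""

# All the "knowledge" lives in these counts, not in hand-written rules.
WORDS = Counter(re.findall(r"[a-z]+", CORPUS.lower()))
TOTAL = sum(WORDS.values())


def probability(word):
    """Relative frequency of a word in the corpus (0 if unseen)."""
    return WORDS[word] / TOTAL


def edits1(word):
    """All strings one delete, transpose, replace, or insert away from word."""
    letters = "abcdefghijklmnopqrstuvwxyz"
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [left + right[1:] for left, right in splits if right]
    transposes = [left + right[1] + right[0] + right[2:]
                  for left, right in splits if len(right) > 1]
    replaces = [left + c + right[1:] for left, right in splits if right for c in letters]
    inserts = [left + c + right for left, right in splits for c in letters]
    return set(deletes + transposes + replaces + inserts)


def known(words):
    """Keep only the candidates that appear in the corpus."""
    return {w for w in words if w in WORDS}


def correction(word):
    """Most frequent known word at the smallest edit distance (0, 1, then 2)."""
    candidates = (known([word])
                  or known(edits1(word))
                  or known(e2 for e1 in edits1(word) for e2 in edits1(e1))
                  or {word})
    return max(candidates, key=probability)


print(correction("speling"))
print(correction("teh"))
```

Improving this corrector mostly means feeding it more and better text, not writing more code, which is the point of the example.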

Google is Gathering Data in the form of Open Source Code from GitHub

Deep Learning needs data, so if you want to build an AI that learns how to program from examples you would need lots of programs/examples to learn from.

It turns out Google and GitHub have got together with the goal of Making open source data more available. Uploaded into BigQuery from the GitHub Archive is a 3TB+ dataset that contains activity data for more than 2.8 million open source GitHub repositories, including more than 145 million unique commits and over 2 billion different file paths.

One might presume this is a good source of training data.

Is it all Peaches and Cream?

Of course not. The black box nature of neural networks makes them difficult to work with. It’s like trying to interrogate the human unconscious for the reasons behind our conscious actions.

Jeff Dean talks about the hesitation of the Search Ranking Team at Google in using a neural net for search ranking. With search ranking you want to be able to understand the model, you want to understand why it is making certain decisions. When the system makes a mistake they want to understand why it is doing what it is doing.

Debugging tools had to be created and enough understandability had to be built into the models to overcome this objection.

It must have worked. RankBrain, machine learning technology for search results, was launched in 2015. It’s now the third most important search ranking signal (out of hundreds). More information at: Google Turning Its Lucrative Web Search Over to AI Machines.

Peter goes into more details by talking about problems covered in a paper: Machine Learning: The High Interest Credit Card of Technical Debt.

Lack of Clear Abstraction Layers. If there's a bug you don't know where it is.

Changing Anything Changes Everything. It's difficult to make corrections and predict what the result of the corrections will be.

Feedback Loops. When a machine learning system generates data it can create a feedback loop as reactions to the data feed back into the system.

Attractive Nuisance. Once a system is made everyone may want to use it but it may not work in a different context.

Non-stationarity. Data changes over time so you have to figure out what data you want to use. There's no clear cut answer.

Configuration dependencies. Where did the data come from? Is it accurate? Have there been changes in other systems that will change the data so what I see today is not what I saw yesterday? Is the training data different than production data? When the system gets loaded is some of the data dropped?

Lack of tooling. There are great tools for standard software development, this is all new so we don't have the tooling yet.
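One of these questions, whether the training data differs from the production data, is easy to illustrate. The following is a rough sketch and not a technique from the paper or the talk: the function names, the sample values, and the threshold of 2.0 standard deviations are all hypothetical choices made for this example.

```python
from statistics import mean, stdev


def mean_shift(train_values, prod_values):
    """How far the production mean has moved, in training standard deviations."""
    spread = stdev(train_values)
    if spread == 0:
        raise ValueError("training feature has no variance")
    return abs(mean(prod_values) - mean(train_values)) / spread


def has_drifted(train_values, prod_values, threshold=2.0):
    """Flag a feature whose production mean moved more than `threshold` deviations.

    The default threshold is an arbitrary assumption, not a recommended value.
    """
    return mean_shift(train_values, prod_values) > threshold


# Hypothetical values of one numeric feature.
train = [10.1, 9.8, 10.3, 9.9, 10.0, 10.2]
same = [10.0, 10.1, 9.9, 10.2]     # production looks like training
moved = [12.0, 12.3, 11.8, 12.1]   # production has shifted

print(has_drifted(train, same))
print(has_drifted(train, moved))
```

A check like this only catches one crude kind of change in one feature. It is the sort of tooling that standard software development takes for granted and that machine learning systems still largely have to build for themselves.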

Is MLDP the Future?

This is not an “oh my god the world is going to end” kind of article. It’s a “here’s something you may not have heard about and isn’t it cool how the world is changing again” kind of article.

It’s still very early days. Learning parts of programs is doable now. Learning big complex programs is not yet practical. But when we see article titles like How Google is Remaking Itself as a “Machine Learning First” Company, it may not be clear what this really means. It’s not just about making systems like AlphaGo; the end game is a lot deeper than that.

Related Articles

Google Fellow Talks Neural Nets, Deep Learning

Google’s vision of machine-learning: all software engineering to use it, will change humanity

Session with Peter Norvig Research Director at Google

Deep Learning and Understandability versus Software Engineering and Verification by Peter Norvig