Part 3 of Thinking Serverless: Dealing with Data and Workflow Issues

This is a guest repost by Ken Fromm, a 3x tech co-founder — Vivid Studios, Loomia, and Iron.io. Here's Part 1 and 2.

This post is the third of a four-part series that will dive into developing applications in a serverless way. These insights are derived from several years working with hundreds of developers while they built and operated serverless applications and functions.

Iron.io, AWS Lambda, Google Cloud Functions, Azure Functions, OpenWhisk

Serverless Processing — Data Diagram

Thinking Serverless! The Data

If you are more data and security-minded than the average developer, serverless processing will cause you to think more about data handling than you might with a legacy and/or web application. The reason is that with a more monolithic application, data concerns are typically isolated to a limited set of tiers: for example, there is often an application tier, a caching layer, and a database tier. In a traditional legacy/web architecture, all these tiers are relatively self-contained, clustered in one or more zones and/or regions.

In a serverless world, processing tends to be far more deconstructed, and data handling and storage far more distributed and segmented.

Physical and virtual resource boundaries can be much larger, more disparate, and often not even readily apparent. The reason is that serverless platforms can spread workloads across a much wider range of infrastructure. Additional components, also distributed in their own right, can be used in conjunction with this type of processing.

In the email use case referenced in Part 1 of this series, processing an email as part of a legacy application via a serialized workflow would typically result in the processing and the data handling taking place within a fixed number of servers for the entire sequence of processing. With a serverless approach, though, each task might run on a different server across a number of clusters, or even in a different zone or data center. And if a serverless platform uses multiple infrastructure providers, data payloads could even cross multiple clouds.

Processing an image, for example, might be performed in different clusters specifically set up to handle the higher memory requirements needed for these types of tasks. Different portions of an email message might be distributed across a wider topology either as payloads for tasks or within key-value stores or databases that the tasks access. Consequently, developers have to think about not just different types of data stores but also different locations and even different types and states of data: input data, output data, temporary data, config data, and more.

Below is a general breakdown of the types of data encountered and where they may be stored and/or transmitted within a serverless processing environment.

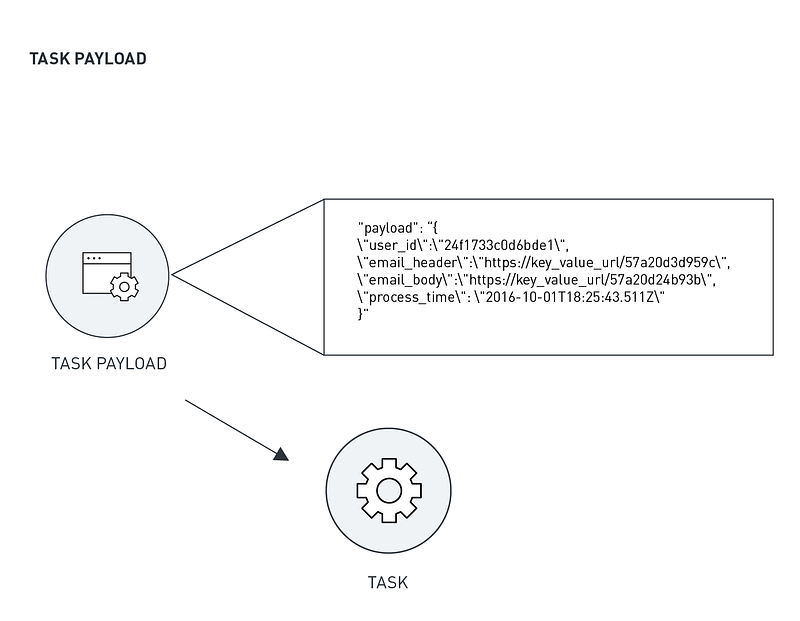

Task Payloads

Keeping in mind that tasks should be stateless, everything that a task needs for processing should therefore be provided at runtime. Typically, a task payload serves as the primary mechanism for providing the workload that the serverless task should use. Much like functional processing, data is passed to tasks, tasks operate on the data, tasks store or return the data, and then the tasks exit or terminate.

In certain situations, tasks may not have payloads per se as they may pull in data from a queue, database, key-value store, or other data source. In this case, tasks may use config data to provide the location of the data and then as the first order of business, pull the data in and process it.

Data Payload for a Task

As with functional processing, developers will want to pay attention to what gets passed to tasks. A task should only receive the data that it will operate on, meaning that developers will want to send only the portion of a data record that is pertinent to that task as opposed to the full data record.

Although platforms may allow you to pass large amounts of data within a task payload, it is usually not wise to do so, for reasons of both efficiency and security. Less data means less transmission and less storage, hence greater efficiency. Fewer copies of the data and fewer locations translate into greater security. To reduce this overhead, it is better to pass IDs to data instead of the data itself, especially when the data is large and/or unstructured.
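
As a rough illustration of passing IDs rather than data, the sketch below builds a small payload that references a record by key and names only the fields the task needs. The names `RECORD_STORE`, `build_payload`, and `run_task` are hypothetical stand-ins for illustration, not part of any platform's API.

```python
import json

# Hypothetical stand-in for a database or object store holding full records.
RECORD_STORE = {
    "email-123": {
        "subject": "Quarterly report",
        "body": "A long message body. " * 1000,
        "attachments": ["report.pdf"],
    }
}


def build_payload(record_id: str, fields: list[str]) -> str:
    """Build a small JSON payload that points at the data instead of embedding it."""
    return json.dumps({"record_id": record_id, "fields": fields})


def run_task(payload: str) -> dict:
    """Task side: resolve the ID and read only the fields this task operates on."""
    request = json.loads(payload)
    record = RECORD_STORE[request["record_id"]]
    return {name: record[name] for name in request["fields"]}
```

The payload stays a few dozen bytes regardless of how large the underlying record is, and only one copy of the record exists.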

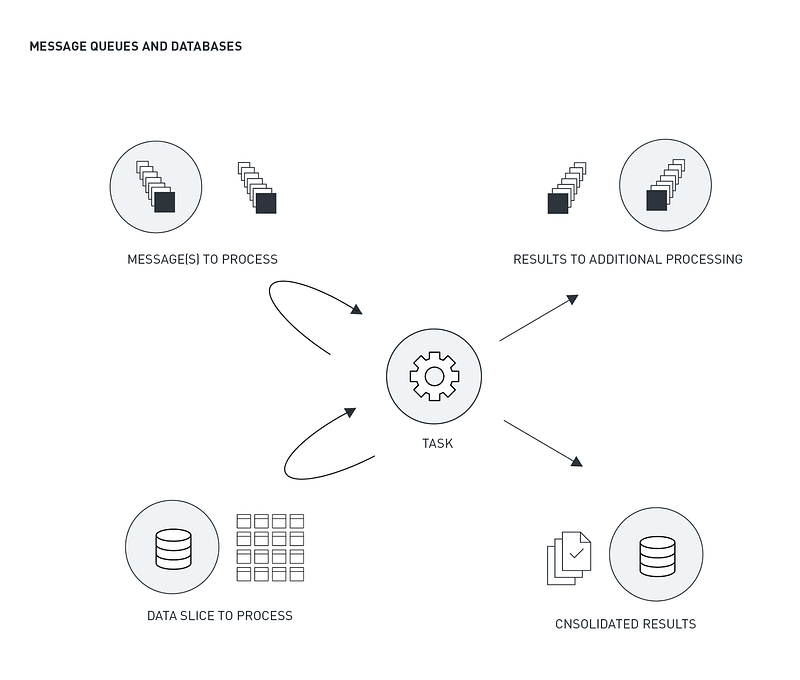

Message Queues and Databases

In some cases, serverless tasks may get the workloads they are processing from a message queue or a database. This is commonly seen in certain event-driven loops as well as when scheduled tasks come into play within a particular workflow.

In the first example, events might be placed on a queue, and after they reach a certain threshold, tasks might get triggered to run. Alternatively, scheduled tasks might run at regular intervals. Each task might take one or more messages from the queue, perform its operations, and then exit.


In the case of a scheduled task, it may get data from a queue or a database. An example here might be the consolidation and post-processing of sensor data. Data from any number of devices might be placed in a queue or stored in a database at a regular frequency. Tasks could be set up to run on a particular schedule, taking this data from the queue or database and consolidating it and/or performing other analytical operations for storage or presentation.
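
The sensor example could look something like the following sketch, which assumes an in-process `queue.Queue` as a stand-in for a real message queue and a hypothetical message shape of `{"device": ..., "value": ...}`. A scheduled task drains a bounded batch, consolidates it, and exits.

```python
import queue
from statistics import mean


def consolidate_readings(sensor_queue: queue.Queue, max_messages: int = 100) -> dict:
    """Drain up to max_messages readings and return the average value per device."""
    readings: dict[str, list[float]] = {}
    for _ in range(max_messages):
        try:
            message = sensor_queue.get_nowait()
        except queue.Empty:
            break  # nothing left to process, so the task can exit
        readings.setdefault(message["device"], []).append(message["value"])
    return {device: mean(values) for device, values in readings.items()}
```

Capping the batch size keeps each run short and predictable; anything left on the queue is picked up by the next scheduled run.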

Temporary File Storage — internal task processing

Most serverless platforms provide a certain amount of non-persistent file storage that is available for a task to read from and write to. In AWS Lambda, for example, this temporary storage is provided via the /tmp directory. In IronWorker, it is accessed via the current working directory “.”. This file storage is accessible only by the task and cannot be written to or read from by other tasks. It will also be erased when a task completes.

As with any temp file storage, it can be used to process large amounts of data by reading and writing slices of the data, to store temporary results for use by other functions within the task, or to perform other memory-intensive operations.
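
A minimal sketch of the slice-by-slice pattern, assuming the task receives its input as a stream of byte chunks (for example from a download). The function name and the line-counting job are illustrative only; the point is that memory use stays bounded by `read_size`.

```python
import os
import tempfile
from typing import Iterable


def count_lines_via_temp_file(chunks: Iterable[bytes], read_size: int = 64 * 1024) -> int:
    """Spool incoming chunks to a temp file, then count newlines one slice at a time."""
    # mkstemp uses the default temp directory (typically /tmp on Linux).
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as handle:
            for chunk in chunks:
                handle.write(chunk)
        total = 0
        with open(path, "rb") as handle:
            while piece := handle.read(read_size):
                total += piece.count(b"\n")
        return total
    finally:
        os.remove(path)  # clean up explicitly rather than relying on the platform
```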

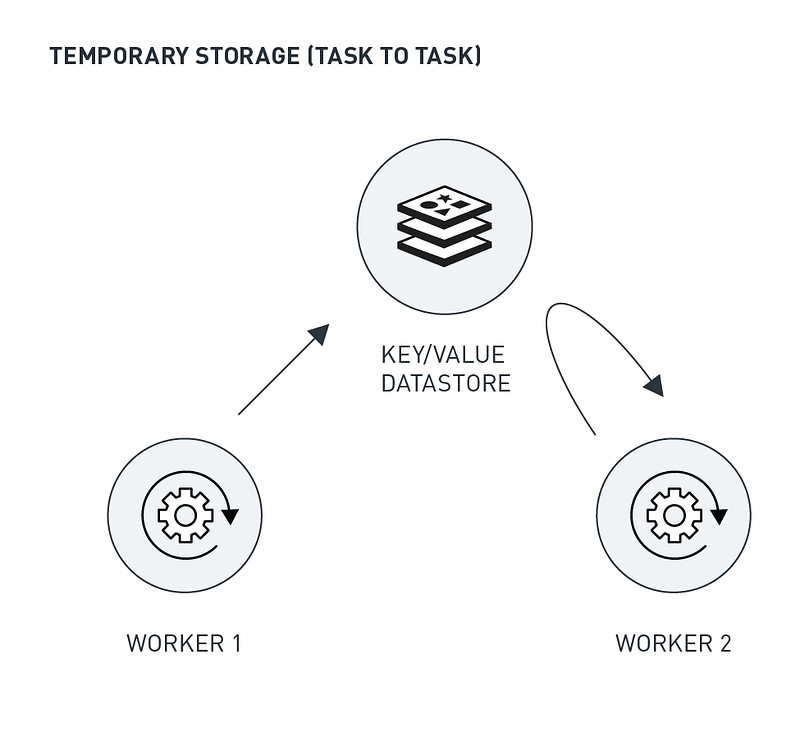

Temporary Storage — task to task data sharing

Another form of temporary data storage that is useful in serverless processing is storage that can be shared between separate tasks in a processing workflow. Certain events might involve a sequence of tasks which in turn might require some element of data sharing between the tasks.

In the case of email processing, one type of task might parse the body of an email message, separating it into text, links, and other objects. Other tasks might take these data objects and process them. Text might go through natural language processing for contextual analysis. Links might get analyzed for page ranking or spam detection.

Temporary Data Storage — Useful for Task-to-Task Data Sharing

In order to reduce the amount of data replication as well as provide an easy mechanism for data sharing, key-value datastores are well-suited for storing these shared data objects. The key to the value may be placed on a queue or within the task payload. The receiving task then uses the key to get the data and perform its operation.

More depth on key-value datastores is provided below but one of the nice things about this type of datastore is that you can set expiration times for the data elements. This means that you do not have to consciously delete entries as you might have to do with a database.
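
To make the hand-off concrete, here is a small sketch using an in-memory class as a stand-in for a key-value datastore with expiration. `ExpiringStore`, `parse_task`, and `link_task` are hypothetical names; a hosted store would replace the class, but the flow of "store the value, pass the key" would look similar.

```python
import time
import uuid


class ExpiringStore:
    """In-memory stand-in for a key-value datastore with per-key expiration."""

    def __init__(self, clock=time.monotonic):
        self._items = {}
        self._clock = clock

    def put(self, value, ttl_seconds: float) -> str:
        key = uuid.uuid4().hex
        self._items[key] = (value, self._clock() + ttl_seconds)
        return key

    def get(self, key: str):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]  # lazily drop expired entries
            return None
        return value


def parse_task(store: ExpiringStore, body: str) -> dict:
    """First task: extract links, store them, and hand on only the key."""
    links = [word for word in body.split() if word.startswith("http")]
    return {"links_key": store.put(links, ttl_seconds=3600)}


def link_task(store: ExpiringStore, payload: dict) -> int:
    """Second task: look the links up by key and process them (here, just count)."""
    links = store.get(payload["links_key"]) or []
    return len(links)
```

Because entries expire on their own, neither task needs cleanup logic for the shared data.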

Third-Party Data

Another form of data is third-party data. Serverless tasks may make HTTPS or other network calls or, as mentioned above, reach out to a queue or database to pull in data. The general rules and guidelines around data access calls will also apply within a task-processing environment. These include encryption, authorization protocols, timeouts, and exception handling.

One difference from long-running applications, however, is that within an application, you will likely have credentials for access to databases and third party sites provided as part of the global memory of the application. These are likely read into memory from a config file at startup time. Within a serverless processing environment, however, tasks are largely stateless and do not have any sense of application awareness. The question then becomes how do you get URIs and/or access credentials for these tasks to use.

Developers get around this in a couple of ways. In some cases, a serverless platform might provide a global config capability (as in the case of environment variables within IronWorker). In this case, developers are able to load config information into the system independently from any task, thereby allowing any task access to this information at run-time. In other cases, the credentials could be passed in with the data payload. This is less advisable because it means many copies of the credentials may exist.
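
Where a platform exposes config through environment variables, a task might read it along the lines of the sketch below. The variable names `DATABASE_URL`, `API_TOKEN`, and `REQUEST_TIMEOUT` are assumptions chosen for illustration.

```python
import os


def load_task_config(environ=os.environ) -> dict:
    """Read connection settings from the environment instead of the task payload."""
    try:
        return {
            "database_url": environ["DATABASE_URL"],
            "api_token": environ["API_TOKEN"],
            # Optional setting with a default of 10 seconds.
            "timeout_seconds": float(environ.get("REQUEST_TIMEOUT", "10")),
        }
    except KeyError as missing:
        # Fail fast with a clear message rather than partway through processing.
        raise RuntimeError(f"missing required config variable: {missing.args[0]}") from None
```

Accepting the environment as a parameter keeps the function easy to test without touching the real process environment.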

Twelve-Factor App

By this I mean it is not much different from changing a config file in a long-running app. There, you change a single file and upload it, with the added step of having to restart the app. On a serverless platform, you don’t need to restart, because any code change within a task will trigger use of the updated task. As such, handling config data will likely take some thought (given it will largely be platform-dependent) as well as some discipline, given the ease of combining code with data.

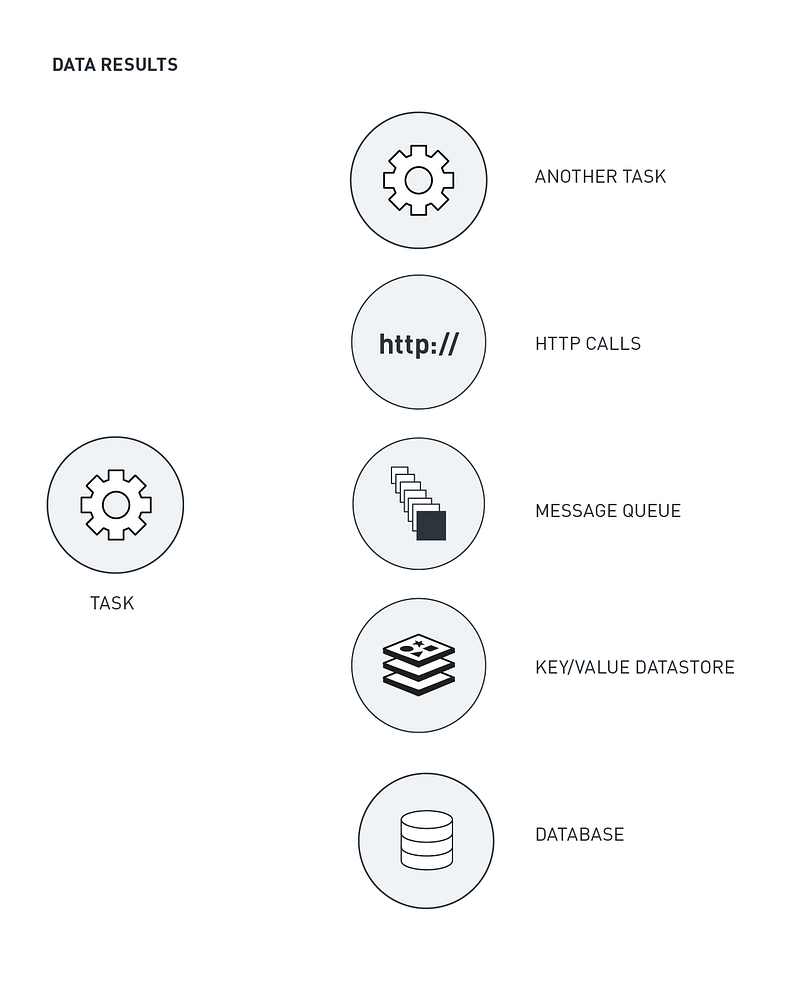

Data Results

Handling data results in a serverless platform differs depending on whether tasks are synchronous or asynchronous. In the case of synchronous processing, data could be returned to the calling task. In the case of asynchronous processing, data results will need to be stored, temporarily or permanently, in order for another process or entity to make use of them.


Data results might be placed on a queue, in a key-value datastore, in an SQL or NoSQL database, written to block storage, or even pushed out via a webhook.

A couple of points to consider are ensuring that:

- a transaction completes successfully and the resulting data gets written to some form of persistent storage

- actions are idempotent, meaning that transactions do not get duplicated and data does not get overwritten with different results

In the first case, it is critical that writes get verified and committed before a task ends. In the second case, it is a matter of checking whether another task performed an operation on the same data. In both cases, you’ll need to insert logic as well as exception-handling to address these processing and storage concerns.
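
One way to sketch both concerns together is with SQLite from the Python standard library, used here purely as a stand-in for whatever persistent store a task writes to. The table layout and the `store_result` helper are assumptions for illustration.

```python
import sqlite3


def store_result(conn: sqlite3.Connection, task_key: str, result: str) -> bool:
    """Write a result once. Returns True if this call stored it, False if it already existed."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS results (task_key TEXT PRIMARY KEY, result TEXT)"
    )
    # INSERT OR IGNORE leaves an existing row untouched, so a retry cannot overwrite it.
    cursor = conn.execute(
        "INSERT OR IGNORE INTO results (task_key, result) VALUES (?, ?)",
        (task_key, result),
    )
    conn.commit()  # commit before the task exits
    # Read back to verify that a row for this key is actually present.
    row = conn.execute(
        "SELECT result FROM results WHERE task_key = ?", (task_key,)
    ).fetchone()
    if row is None:
        raise RuntimeError(f"result for {task_key} was not persisted")
    return cursor.rowcount == 1
```

Using a key derived from the input (for example a message ID plus the task name) means a duplicate or retried task leaves the first result in place.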

One point to mention has to do with writing to databases. Some architectures may place limits on access to databases, requiring processes to be behind particular firewalls, come from certain IP whitelists, or follow other restrictive measures.

If the cloud processing takes place outside of these requirements, then there will be difficulty in updating a database. In this type of situation, one answer might be to have a process behind the firewall pull in results from a queue or datastore and store the data. This setup would uphold the access restrictions while still allowing for flexible and scalable processing via a serverless platform.

Another point about databases is that they may have hard connection limits and so developers will need to take concurrency levels into account so as not to exceed these limits. Concurrency is mentioned as a platform-level issue in Part 2 of this series.

The Value of Key-Value Stores

One of the key insights when starting to use a serverless platform is the value that a good key-value datastore provides. As mentioned above, there are a number of data sharing use cases whether it’s accessing global config data or sharing data between tasks.

It is somewhat ironic in that while serverless tasks should be stateless, a fair amount of “state” needs to be preserved as part of a processing pipeline or workflow.

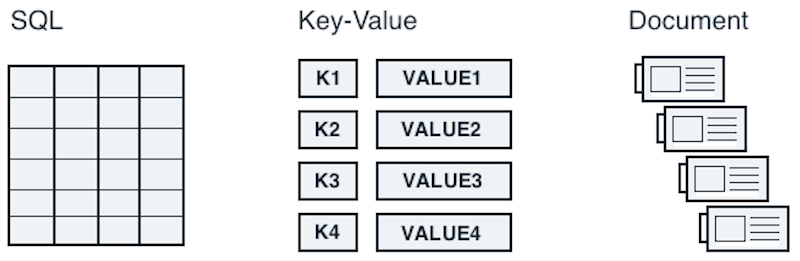

The reason key-value datastores are highlighted as opposed to SQL databases or document stores is that while these others can be used, key-value stores have a number of advantages to them.

- Key-value datastores are fast. They are designed to do a limited set of actions really well — read and write key-value pairs.

- Key-value pairs map well to the needs of task processing — here’s the key, and here’s the data. Whether it is storing a data set that needs to be processed or storing data between tasks, passing keys instead of large blocks of data provides more flexibility and can also improve performance. (Less copying and transferring of data within the system.)

- Key-value datastores typically scale better. They are usually designed and structured to scale horizontally and so there are fewer concerns with resource limits, locks, and bottlenecks.

I don’t have a recommendation to make as to what key-value datastore to use (i.e. internally hosted, cloud-based, managed service, or otherwise) but do recommend that high availability and scalability factor high in the requirements. Any third-party dependency that can block processing needs to be designed to be fail-safe and scalable.

Idempotency

Key-value stores can also be used to ensure idempotency. By using the appropriate key, it can be possible to a) determine if you need to process data or b) determine if you need to write data to a data store.

For example, in the case of a web crawling application, a big part of the process is to grab all the links that are available within a site. By using a key-value store to contain the links of pages to process and doing so in a way that uses the links as keys, you can avoid the need to store duplicate links. Likewise, by checking to see if a key for a certain data result already exists, you can also avoid processing.
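
The crawler case can be sketched with a plain dictionary standing in for the key-value store. `enqueue_new_links` is a hypothetical helper; with a shared store you would likely want an atomic set-if-absent operation so that two concurrent tasks cannot both claim the same link.

```python
def enqueue_new_links(seen: dict, links: list[str]) -> list[str]:
    """Return only the links not yet recorded, recording each one as a key along the way."""
    fresh = []
    for link in links:
        key = link.strip()
        if key in seen:
            continue  # already queued or processed, so skip it
        seen[key] = "pending"
        fresh.append(key)
    return fresh
```

Duplicates within a single page and across pages are both filtered, because the link itself is the key.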

Data Store Types (attribution)

Thinking Serverless! The Workflow

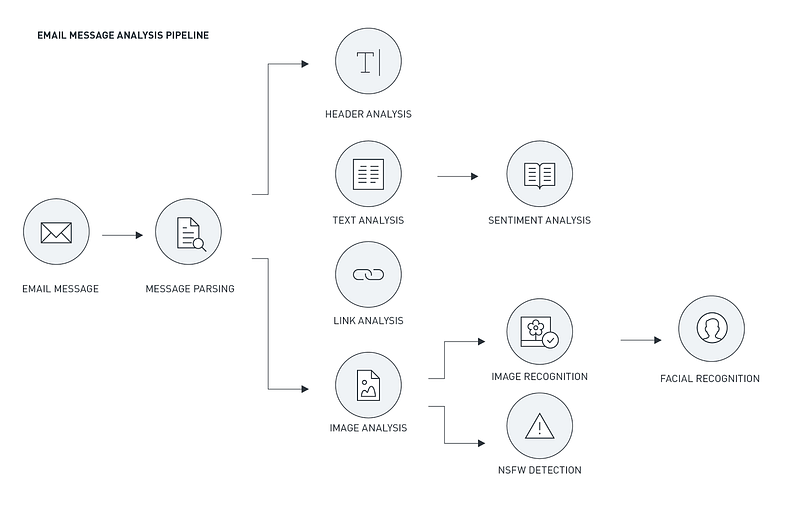

With serverless processing, some tasks might run independent of others, perform their work, and terminate without additional processing. Other workflows might entail a sequence of tasks.

The email use case mentioned in this series is an example of this. One task might parse an email message, others might process data objects within the email, and still others might perform actions based on the results of the data analysis.

This is why orchestration and workflow issues need to be addressed as part of building and deploying serverless applications. Here is a rundown of the issues developers might come across.

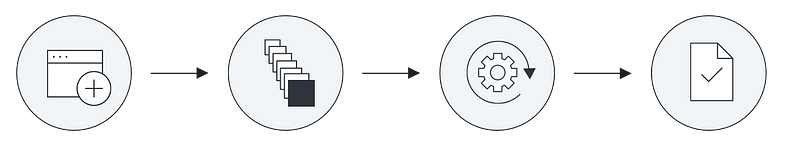

Synchronous and Asynchronous

Serverless workloads can be processed either synchronously or asynchronously. With synchronous processing, connections are maintained throughout the invocation of the serverless task, which means the calling task will block until the serverless task is processed and returns a response.

Because of this, synchronous tasks should be fast and operate in an environment that imposes strict time limits. Use cases for synchronous processing can be transactional in nature or other situations where real-time response is critical.

Note that synchronous processing may or may not provide strict First-In-First-Out (FIFO) processing, so if this is critical, developers might want to address it via the use of a message queue with FIFO capabilities in combination with asynchronous processing.

Asynchronous tasks, on the other hand, do not maintain a connection and therefore can have more relaxed SLAs regarding latency as well as increased limits on processing time. In all but the most time-sensitive situations, asynchronous processing should address most application needs.

Synchronous processing is less forgiving, requiring greater attention be paid to task performance and load capacities. Asynchronous processing provides a lot more flexibility and fails more gracefully (tasks may be delayed when run asynchronously as opposed to creating blocks that might ripple through other parts of an application creating resource or processing overloads).
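
The difference between the two styles can be simulated locally. In the sketch below a thread pool stands in for the serverless platform and an in-process queue stands in for its work buffer; `invoke_sync`, `invoke_async`, and `pending_work` are hypothetical names, not a real platform client.

```python
import queue
import uuid
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

_executor = ThreadPoolExecutor(max_workers=4)  # stand-in for the platform
pending_work: queue.Queue = queue.Queue()      # stand-in for the platform's buffer


def invoke_sync(task, payload, timeout_seconds: float):
    """Block the caller until the task returns or the time limit is hit."""
    future = _executor.submit(task, payload)
    try:
        return future.result(timeout=timeout_seconds)
    except FutureTimeout:
        raise TimeoutError(f"task exceeded {timeout_seconds}s") from None


def invoke_async(task_name: str, payload) -> str:
    """Queue the work and return a task ID at once; results are stored elsewhere."""
    task_id = uuid.uuid4().hex
    pending_work.put({"id": task_id, "task": task_name, "payload": payload})
    return task_id
```

The synchronous caller has to handle the timeout itself, while the asynchronous caller only ever sees a task ID and must look results up later.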

Synchronous Processing

Asynchronous Processing

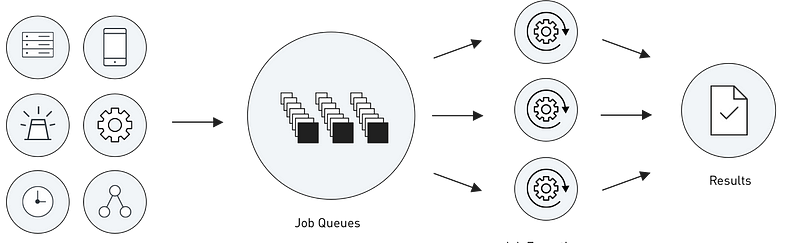

Sequencing and Processing Pipelines

Serverless processing lends itself to working on problems in a deconstructed and loosely coupled way. By focusing on building single-purpose functions and tasks, developers are able to build a system that can scale easily and be modified and extended in a much more flexible manner than if the processing were contained within a larger, more monolithic application framework.

When constructing sequences and pipelines, developers can make use of message queues as a way to buffer workloads between tasks. This traditional approach offers the advantage of allowing introspection as to the size and throughput of queues so as to better understand the flow of events through the system. Some serverless platforms might provide the ability to invoke or trigger tasks directly from another task. Under the hood of the serverless platforms, message queues may be used to buffer the work, so in the end it may be an arbitrary choice.

For complex applications, message queues do make sense as they provide an added layer of visibility and inspection. For simpler applications and workflows, external queues may introduce unnecessary complexity.

For serverless tasks invoking serverless tasks, developers need to be extremely conscious about recursion and take measures to put a limit on the number of levels of recursion. For example, an application that analyzes links might hit some form of a recursive pattern. Recursive tasks are a more common occurrence than you might think.

In the early development of IronWorker, developers were quickly forced to put measures in to prevent recursive calls beyond a certain threshold. Rather than rely on any platform limits, however, serverless apps that run the risk of recursion should make their own efforts to prevent things from getting out of hand.
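
An application-level guard can be as simple as carrying a depth counter in the payload. The sketch below assumes a hypothetical `crawl_task` whose `fetch_links` and `enqueue` callables are supplied by the caller, and an arbitrary `MAX_DEPTH` of 3.

```python
MAX_DEPTH = 3  # assumed limit; tune for the application


def crawl_task(payload: dict, fetch_links, enqueue) -> int:
    """Process one URL and queue child tasks unless the depth limit has been reached.

    Returns the number of child tasks queued.
    """
    depth = payload.get("depth", 0)
    if depth >= MAX_DEPTH:
        return 0  # stop here instead of spawning more tasks
    children = fetch_links(payload["url"])
    for url in children:
        # Each child carries its own depth, so the limit survives across tasks.
        enqueue({"url": url, "depth": depth + 1})
    return len(children)
```

Even if a page links back to itself, the chain of tasks ends after `MAX_DEPTH` levels because the counter travels with the work rather than living in any one task's memory.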

Email Message Analysis Processing Pipeline

Task Invocation — Triggers, Webhooks, and Push vs Pull

How tasks get created, when they run, and how much they process is entirely dependent on the event, the workflow, and the serverless platform you’re using. With an internal project, a task might be invoked by making a direct HTTP call to a serverless platform. With external applications, it might be via a webhook, which essentially provides a shell around an API call to the platform.

In other cases, events may need to be processed on a regular schedule or when a certain number of events happen. In these cases, tasks could be triggered to run by a queue that can push messages to the serverless platform. Each message could contain data for a single event or for multiple events. In other situations, a scheduled task could be used to pick up one or more messages from a queue or one or more records from a database.

Fortunately for developers, more and more use cases and patterns are being published around serverless computing and so developers will have a growing supply of models that they can work from. Serverless platforms are also likely to offer an increasing number of hooks and internal capabilities around sequencing and workflow issues.

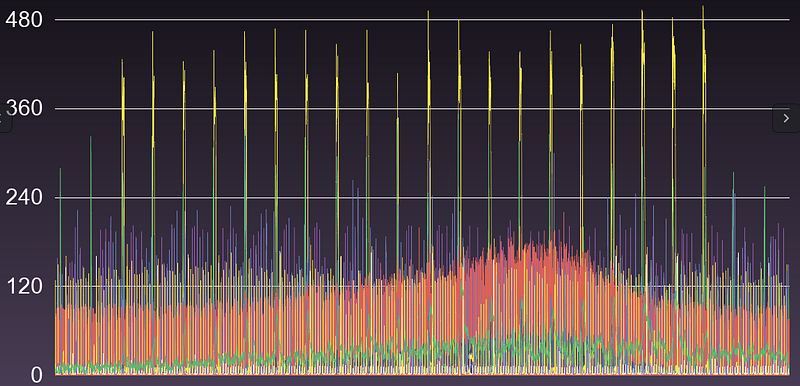

The graph below depicts a segment of users and their task concurrencies over a 24-hour period within a serverless platform. Many of the spikes occur on the hourly mark and illustrate the processing that is taking place as part of a regular schedule.

Task Concurrencies by User: Spikes Are the Result of Scheduled Jobs

Retries

It goes without saying that in computing, things break. Whether it is a legacy system, a large web application, or a serverless architecture, internal or external system failures will occur. Developers, therefore, need to assume that tasks will fail and plan accordingly.

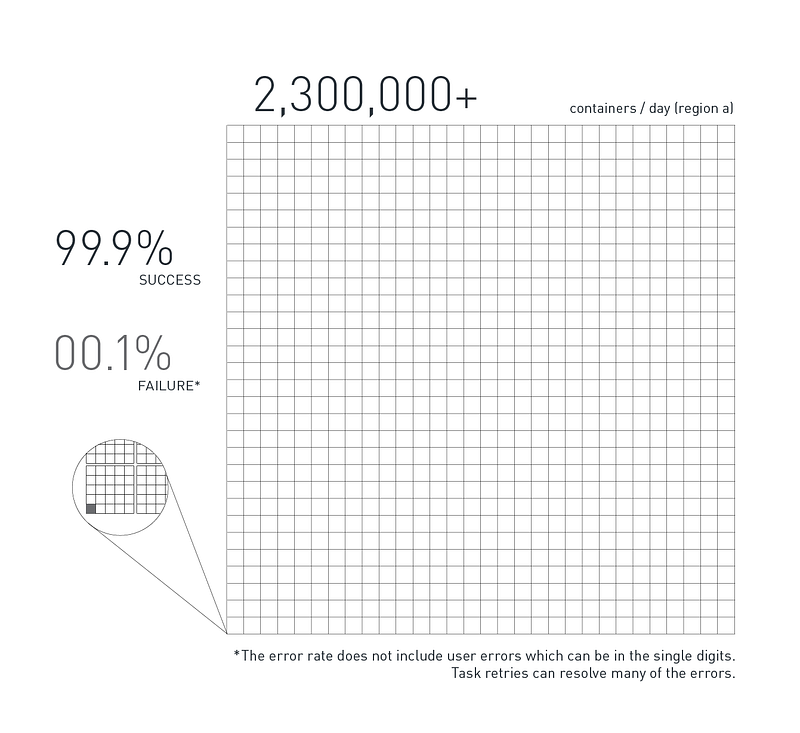

Most serverless platforms provide, as a feature, the ability to automatically retry failed tasks. Provided that developers have written their tasks to be idempotent, any retry will go largely unnoticed, much like retries on disk writes go unnoticed.

Sample Task Error Rate

It is important, however, to monitor failed tasks, either through the platform or via log files. High error rates may indicate a system conflict when a task uses too many resources such as RAM, storage space, or CPU. Or the high error rates could signify an external issue such as exceeding database connections or errors with access calls.

The ability to develop microservices and executable functions and move them quickly into production and run them at high concurrency means that many errors may not surface in testing. Analyzing error rates and resulting log files will help identify and address resource issues or external conflicts.

Exception Handling

Even when running in systems designed to be highly available and with the ability to retry tasks, tasks are still at risk of failing, whether from running into memory limits, third-party read/access issues, network outages, versioning conflicts, or even just bad code.

Some remedies can be enabled at the platform level, either via APIs that allow access to failed task lists or dead-letter queues that might put failed workloads on a queue.

It may still be left as an exercise for developers to put in the logic to address failed tasks. One of the great things about serverless processing, however, is that it is relatively easy to write new features and put them into production quickly.

This benefit makes it so that you can build in exception handling capabilities from the start or as an additional hardening phase. In doing so, you can make it independent from the primary processing sequences to serve as either a check and balance or as a clean-up or oversight process.

- One method to address failed tasks might be to retry them, under the assumption that they failed because of a third-party outage or block.

- Another might be to go through a series of tests to isolate the issue and highlight it for automated and/or manual post-processing.

- A third approach might be to automatically parse through log files and post the resulting data to a chat room or other alert forum.
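
The first approach, combined with setting aside work that keeps failing, might be sketched as follows. `run_with_retries` is a hypothetical helper and the plain list used for `dead_letters` stands in for a dead-letter queue; the delay values are arbitrary.

```python
import time


def run_with_retries(task, payload, dead_letters: list, max_attempts: int = 3,
                     base_delay: float = 0.5, sleep=time.sleep):
    """Retry a failing task with exponential backoff; park the payload if every attempt fails."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return task(payload)
        except Exception as error:  # broad on purpose: any failure counts as a failed attempt
            last_error = error
            if attempt < max_attempts:
                sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ...
    # Every attempt failed: keep the workload and the reason for later inspection.
    dead_letters.append({"payload": payload, "error": repr(last_error)})
    return None
```

This only behaves well when the task itself is idempotent, since a task that failed partway through will be run again from the start.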

Logging and Audit Trails

On the outside looking in, making sense of log files within a serverless processing environment can be more difficult than in a legacy application. The reason is that the work units might be much smaller and far more distributed. A greater number of components might also be involved: tasks, server configurations, zones, regions, clouds, storage facilities, data access methods, and more. All this involves more data, more noise, and more difficulty in following what was processed, when, and where.

With a solid logging strategy, added processing complexity does not necessarily have to translate into added logging complexity, especially with today’s logging technologies.

Whether you are using a logging service or hosting something like ELK (Elasticsearch, Logstash, Kibana) on your own, the first order of business is to get access to logs from any task or sequence of tasks. The second component is when and what to log. The third involves how to search and retrieve the data, and the fourth is how to create automated queries, triggers, and other alerting mechanisms.

Here are some recommendations when it comes to logging:

- LogFmt — Use a standard well-formulated logging format such as logfmt. Logfmt is a way to use key-value pairs that make logs human readable as well as machine processable.

- Log all events, but use different log levels and labels to inform or direct action. For example, one set of labels is warn/error/info/debug, used to indicate the severity or actionable nature of the event.

- Some serverless platforms will allow logging to STDOUT/STDERR which in turn will redirect to various services. (IronWorker, for example, provides this capability which lets developers make use of a number of real-time logging services.)

- Capture versions as well as timestamps, down to the nanosecond and with time zone. Inputs are important too, although see the note below regarding log files as a source of data breach.

- Capture process IDs and server instances as well as any other location/system data. Although task-based processing is designed to be transparent across servers and infrastructures, this may not always be the case. In rare instances where you need to track down system-level conflicts, having system-level data will provide the only way to do so.

- Logging levels can help with recursive function calls, but since tasks operate independently, you’ll need to maintain the level via an external variable (although it will need to be unique to the thread).
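
Several of these recommendations can be combined in a small helper. The sketch below is a simplified take on logfmt-style output rather than a complete implementation of the format, and the field names (`task_id`, `version`) are assumptions.

```python
import sys
from datetime import datetime, timezone


def logfmt(level: str, msg: str, **fields) -> str:
    """Render one log line as space-separated key=value pairs."""
    # isoformat() gives microsecond precision with a UTC offset; time.time_ns()
    # could be added as a separate field if nanoseconds are required.
    pairs = {"ts": datetime.now(timezone.utc).isoformat(), "level": level, "msg": msg, **fields}
    parts = []
    for key, value in pairs.items():
        text = str(value)
        if text == "" or any(ch in text for ch in ' "='):
            # Quote values that would otherwise be ambiguous to a parser.
            text = '"' + text.replace("\\", "\\\\").replace('"', '\\"') + '"'
        parts.append(f"{key}={text}")
    return " ".join(parts)


def log(level: str, msg: str, **fields) -> None:
    """Write to STDOUT and flush at once so the line is not lost if the task dies."""
    print(logfmt(level, msg, **fields), file=sys.stdout, flush=True)
```

A call such as `log("warn", "disk almost full", task_id="abc", version="1.4.2")` produces a single line that is readable by eye and trivially split into fields by a log processor.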

One key issue to note is how to preserve logs in the event of a task failure or a logging service outage. In the case of the former, when a task fails, local file storage will not be accessible after the termination of the task. And so even if a task logged locally to disk, as you might do with a persistent application, the log data will likely not be accessible after the fact.

With real-time logging to external services, this risk is reduced as data will be persisted in the third-party service. Note that STDOUT and STDERR might need to be periodically flushed in order to make sure logging data gets transmitted to the third-party service.

At the same time, external logging can introduce its own costs and/or points of failure. If you need to guarantee that logs get written and persisted outside of a running task, you may be introducing certain performance hits. If the logging service is delayed or is unavailable, it can cause a block on the task.

Even with asynchronous logging, an outage of the logging service can mean the absence of logs. If logging is paramount, then you may need to consider alternative strategies to address task failures and/or service outages.

One concern about logs is that because they can have a long duration of persistence (e.g. 7 days, 30 days, or indefinitely), they can be a significant source of data breaches. Developers need to give hard thought as to what they log and how/where logs are stored.

Developers will want to take the same precautions when logging data with respect to data segmenting, data anonymizing, and possibly even data encryption as they do in the original processing of the workloads and the transmission of the data payloads.
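
As one possible form of anonymizing before logging, the sketch below replaces sensitive values with a keyed hash so that log entries can still be correlated without exposing the raw value. The `SENSITIVE_KEYS` set and the `scrub` helper are assumptions for illustration; the secret would come from config, not from the payload.

```python
import hashlib
import hmac

SENSITIVE_KEYS = {"email", "password", "api_token"}  # assumed list; adjust per application


def scrub(fields: dict, secret: bytes) -> dict:
    """Return a copy of fields with sensitive values replaced by a short keyed hash."""
    clean = {}
    for key, value in fields.items():
        if key in SENSITIVE_KEYS:
            digest = hmac.new(secret, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
            clean[key] = f"hmac:{digest[:12]}"  # same input and secret give the same token
        else:
            clean[key] = value
    return clean
```

A keyed hash is used rather than a plain one because low-entropy values such as email addresses are otherwise easy to guess and confirm.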

For more information on logging, an easily digestible set of anti-patterns can be found here on StackExchange.