How we implemented the video player in Mail.Ru Cloud

We’ve recently added a video streaming service to Mail.Ru Cloud. We conceived the new feature as an all-purpose “Swiss Army knife” that would play files of any format and work on any device where the Cloud is available. Video content uploaded to the Cloud mostly falls into one of two categories: “movies/series” and “users’ videos”. The latter are videos that users shoot with their phones and cameras, and they are the most diverse in terms of formats and codecs. For many reasons, it is often a problem to watch these videos on other end-user devices without prior normalization: a required codec is missing, the file is too big to download, and so on.

In this article, I’ll explain in detail how video playback works in Mail.Ru Cloud, how we made the Cloud player “omnivorous”, and how we ensured support on as many end-user devices as possible.

Storing and Caching: two approaches

A number of services (for example, YouTube and social networks) convert users’ videos into appropriate formats after upload. The videos become available for playback only after conversion. Mail.Ru Cloud uses a different approach: the original file is converted as it’s played. Unlike some specialized video hosting sites, we can’t change the original file. Why have we chosen this option? Mail.Ru Cloud is primarily a cloud storage service, and users would be unpleasantly surprised if, on downloading their files, they found that the quality had deteriorated or the file size had changed even slightly. On the other hand, we can’t afford to store pre-converted copies of all the files: that would require too much space. We would also do lots of unnecessary work, as some of the stored files will never be watched, not even once.

Another advantage of on-the-fly conversion is that if we decide to change the conversion settings or, for example, add a new feature, we won’t have to reconvert old videos (which wouldn’t always be possible with conversion on upload, since the original video may already be gone). The changes apply automatically.

How it works

We use HLS (HTTP Live Streaming), a format created by Apple for online video streaming. The idea behind HLS is that every video file is cut into small fragments (called “media segment files”), which are listed in a playlist, with a name and a duration in seconds specified for every fragment. For example, a two-hour movie cut into ten-second fragments comes as a series of 720 media segment files. Depending on the moment from which the user wants to start watching, the player requests the corresponding fragment from the playlist. One of the benefits of HLS is that the user doesn’t have to wait for the video to start while the player reads the file header (the wait could be significant for a full-length movie over slow mobile Internet).
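To make the playlist idea concrete, here is a minimal Python sketch that builds an HLS media playlist for a file of a given length and finds the fragment for a seek position. It is an illustration, not our production code: the function names and the `fragment_{index}.ts` naming pattern are made up for the example.

```python
import math


def build_media_playlist(duration_s: float, segment_s: int = 10,
                         name_pattern: str = "fragment_{index}.ts") -> str:
    """Return the text of a VOD media playlist covering duration_s seconds.

    name_pattern is a hypothetical naming scheme used only in this sketch.
    """
    if duration_s <= 0 or segment_s <= 0:
        raise ValueError("duration and segment length must be positive")
    count = math.ceil(duration_s / segment_s)
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        f"#EXT-X-TARGETDURATION:{segment_s}",
        "#EXT-X-MEDIA-SEQUENCE:0",
        "#EXT-X-PLAYLIST-TYPE:VOD",
    ]
    for index in range(count):
        # The last fragment may be shorter than the others.
        length = min(segment_s, duration_s - index * segment_s)
        lines.append(f"#EXTINF:{length:.3f},")
        lines.append(name_pattern.format(index=index))
    lines.append("#EXT-X-ENDLIST")
    return "\n".join(lines) + "\n"


def segment_index_for(position_s: float, segment_s: int = 10) -> int:
    """Return the zero-based index of the fragment that contains position_s."""
    return int(position_s // segment_s)
```

With ten-second fragments, `build_media_playlist(7200)` lists 720 entries, matching the two-hour example above, and a seek to 1:35 maps to `segment_index_for(95)`, the tenth fragment.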

Another important capability of this format is adaptive streaming, which allows the quality to change on the fly depending on the user’s Internet speed. For example, you start watching in 360p on 3G, but after your train moves into an LTE area, you continue in 720p or 1080p. It’s implemented quite simply in HLS: the player gets the “master playlist”, which lists alternate playlists for different bandwidths. After loading a fragment, the player evaluates the current speed and decides on the quality of the next fragment: same, lower or higher. We currently support 240p, 360p, 480p, 720p and 1080p.
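The following Python sketch shows roughly what a master playlist and the player’s quality decision look like. The resolutions are the ones we support, but the bitrates, the `{height}p/playlist.m3u8` paths and the 0.8 safety factor are assumptions made up for the example; real players use their own heuristics.

```python
# (height, bandwidth in bits per second). The bandwidth values are
# hypothetical; the article only names the supported resolutions.
VARIANTS = [
    (240, 400_000),
    (360, 800_000),
    (480, 1_400_000),
    (720, 2_800_000),
    (1080, 5_000_000),
]


def build_master_playlist(variants=VARIANTS) -> str:
    """Return a master playlist that points to one media playlist per quality."""
    lines = ["#EXTM3U"]
    for height, bandwidth in variants:
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth}")
        lines.append(f"{height}p/playlist.m3u8")  # hypothetical path layout
    return "\n".join(lines) + "\n"


def pick_quality(measured_bps: float, variants=VARIANTS, safety: float = 0.8) -> int:
    """Pick the highest quality whose bitrate fits into the measured speed.

    Only a fraction (safety) of the measured speed is budgeted, so that a
    small drop in throughput does not stall playback. Falls back to the
    lowest quality when nothing fits.
    """
    ordered = sorted(variants, key=lambda v: v[1])
    budget = measured_bps * safety
    best = ordered[0][0]
    for height, bandwidth in ordered:
        if bandwidth <= budget:
            best = height
    return best
```

The player would call something like `pick_quality` after every downloaded fragment, using the speed it observed for that download.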

The backend

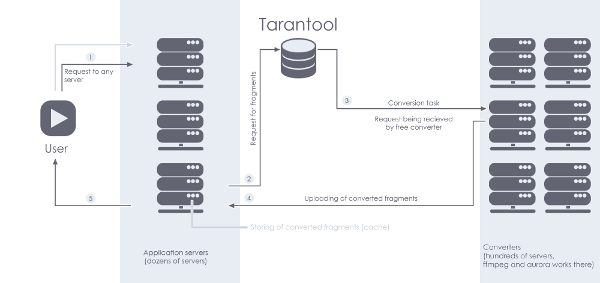

The Mail.Ru Cloud video service consists of three groups of servers. The first group, the application servers, accepts video stream requests: these servers create an HLS playlist and send it back, serve converted fragments, and set up conversion tasks. The second group, a database with embedded logic (Tarantool), stores video information and manages the conversion queue. The third group, the converters, takes tasks from the queue in Tarantool and records task completion back in the database. Upon receiving a request for a video file fragment, we first check the database for a converted, ready-to-use fragment of the requested quality on one of our servers. Two scenarios are possible here.

First scenario: we do have a converted fragment. In this case, we send it back right away. If you or somebody else has requested it recently, the fragment will already exist. This is the first caching level, which works for all converted files. It’s worth mentioning that we also use another caching level, where frequently requested files are distributed across several servers to avoid network interface overload.

Second scenario: we do not have a converted fragment. In this case, a conversion task is created in the database, and we wait for it to be completed. As we said earlier, Tarantool (a very fast open-source NoSQL database that lets you write stored procedures in Lua) is in charge of storing video information and managing the conversion queue. Communication between the application servers and the database works as follows. An application server sends a request: “I need the second fragment of the file movie.mp4 in 720p quality; ready to wait no longer than 4 seconds”, and within 4 seconds it receives either information on where to get the fragment or an error message. So the database client doesn’t care how its task is carried out, whether right away or via a chain of complicated actions: it uses a very simple interface that allows it to send a request and receive what was requested.

We provide database fault tolerance through master-replica failover. A database client sends requests only to the master server. If there are problems with the current master server, we mark one of the replicas as the master, and the client is redirected to the new master. Such a master-replica switch is transparent to the client, as the client simply continues interacting with a master.
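From the client’s point of view, the failover can be pictured with the following toy Python model. Everything in it is hypothetical (the `Node` and `Cluster` classes, the `send` helper, and the rule of promoting the first healthy replica); it only illustrates that the client always talks to whichever server is currently marked as the master.

```python
class Node:
    """A stand-in for a database server that can be switched off."""

    def __init__(self, name: str, alive: bool = True):
        self.name = name
        self.alive = alive

    def call(self, request: str) -> str:
        if not self.alive:
            raise ConnectionError(f"{self.name} is unreachable")
        return f"{self.name}: {request}"


class Cluster:
    """Keeps track of which node is currently marked as the master."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.master = self.nodes[0]

    def promote_replica(self) -> Node:
        # Assumption for this sketch: promote the first healthy replica.
        for node in self.nodes:
            if node is not self.master and node.alive:
                self.master = node
                return node
        raise RuntimeError("no healthy replica to promote")


def send(cluster: Cluster, request: str) -> str:
    """Send a request to the master, retrying once after a failover."""
    try:
        return cluster.master.call(request)
    except ConnectionError:
        cluster.promote_replica()
        return cluster.master.call(request)
```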

Besides application servers, who else can act as a database client? The converter servers, which are ready to start converting fragments and need a parametrized HTTP link to a source video file. Communication between the converters and Tarantool is similar to the interface for application servers described above. A converter sends a request: “Give me a task, I’m ready to wait for 10 seconds”, and if a task appears within these 10 seconds, it’s given to one of the waiting converters. We used IPC channels in Lua inside Tarantool to easily implement client-to-converter task forwarding. Channels allow communication between different requests. Here is some simplified code for requesting a fragment and for signaling that its conversion is complete:

    local fiber = require('fiber')
    local msgpack = require('msgpack')

    function get_part(file_hash, part_number, quality, timeout)
        -- Trying to select the requested fragment
        -- (get returns nil when nothing is found; assumes "main" is a unique index)
        local t = v.fragments_space.index.main:get{file_hash, part_number, quality}
        -- If it exists, returning immediately
        if t ~= nil then
            return t
        end

        -- Creating a key to identify the requested fragment, and an ipc channel, then writing it
        -- in a table in order to receive a “task completed” notification later
        local table_key = msgpack.encode{file_hash, part_number, quality}
        local ch = fiber.channel(1)
        v.ctable[table_key] = ch

        -- Creating a record about the fragment with the status “want to be converted”
        v.fragments_space:insert{file_hash, part_number, quality, STATUS_QUEUED}

        -- If we have idle workers, let’s notify them about the new task
        if s.waitch:has_readers() then
            s.waitch:put(true, 0)
        end

        -- Waiting for task completion for no more than “timeout” seconds
        local body = ch:get(timeout)
        if body ~= nil then
            if body == false then
                -- Couldn’t complete the task: return error
                return box.tuple.new{RET_ERROR}
            else
                -- Task completed, selecting and returning the result
                return v.fragments_space.index.main:get{file_hash, part_number, quality}
            end
        else
            -- Timeout error is returned
            return box.tuple.new{RET_ERROR}
        end
    end

    -- Executed when a converter reports the task as done:
    -- waking up the request that is waiting for this fragment
    local table_key = msgpack.encode{file_hash, part_number, quality}
    v.ctable[table_key]:put(true, 0)

The real code is slightly more complex: for example, it handles the case where the fragment is already in the “being converted” status at the moment of a request. Thanks to this scheme, converters are immediately notified of a new task, and the client is immediately notified of task completion. That’s very important because the longer a user sees the video loading spinner, the more likely they are to leave the page before the video even starts playing.
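For readers who don’t use Tarantool, the same hand-off can be modeled with standard Python queues. This is an analogy, not a port of our code: the `FragmentBroker` class and its method names are invented for the example, a thread stands in for a converter, and, like the simplified Lua above, it assumes only one request waits for a given fragment at a time.

```python
import queue
import threading


class FragmentBroker:
    """Toy model of the database side: a task queue plus per-fragment waiters."""

    def __init__(self):
        self._tasks = queue.Queue()   # conversion tasks for idle converters
        self._waiters = {}            # fragment key -> one-slot queue
        self._done = {}               # fragment key -> fragment location
        self._lock = threading.Lock()

    def get_part(self, key, timeout):
        """Return the fragment location, or None if it is not ready in time."""
        with self._lock:
            if key in self._done:
                return self._done[key]          # already converted
            waiter = queue.Queue(maxsize=1)
            self._waiters[key] = waiter
        self._tasks.put(key)                    # wakes up a waiting converter
        try:
            return waiter.get(timeout=timeout)
        except queue.Empty:
            return None                         # timed out
        finally:
            with self._lock:
                self._waiters.pop(key, None)

    def take_task(self, timeout):
        """Called by a converter: wait up to timeout seconds for a task."""
        try:
            return self._tasks.get(timeout=timeout)
        except queue.Empty:
            return None

    def complete(self, key, location):
        """Called by a converter when the fragment is ready."""
        with self._lock:
            self._done[key] = location
            waiter = self._waiters.get(key)
        if waiter is not None:
            waiter.put_nowait(location)         # wakes up the waiting request
```

A converter thread would loop over `take_task(10)` and call `complete` after each conversion, while an application server calls `get_part(key, 4)` and either gets the location or gives up after four seconds.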

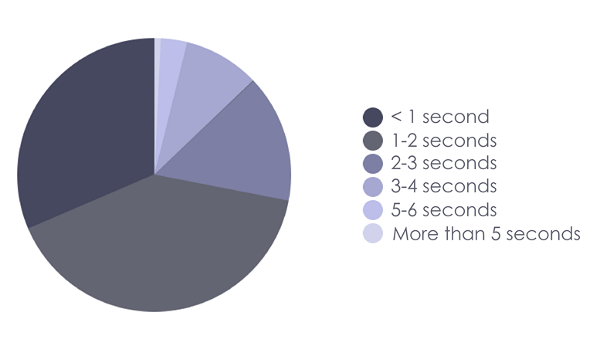

As you can see in the diagram above, the majority of conversions and, consequently, the wait times don’t last longer than a couple of seconds.

Conversion

For conversion, we use FFmpeg, which we’ve modified for our needs. Our initial plan was to use FFmpeg’s built-in tools for HLS conversion; however, they didn’t work well for our use case. If you ask FFmpeg to convert a 20-second file to HLS with 10-second fragments, you get two files and a playlist that plays them with no problems. But if you convert the same file in two steps, first seconds 0 to 10 and then seconds 10 to 20 (launching another instance of the FFmpeg converter), you’ll hear an obvious click at the transition from one file to the other (at approximately the 10th second). We spent several days trying out different FFmpeg settings, but with no success. So we had to get inside FFmpeg and write a small patch. It adds a command line argument that fixes the “click” bug, which is rooted in the nuances of encoding audio and video tracks.

We also used some other available patches that were not included in upstream FFmpeg at the time; for example, a patch for the known issue of slow conversion of MOV files (videos shot on an iPhone). A daemon called “Aurora” controls the process of getting tasks from the database and starting up FFmpeg. The “Aurora” daemon, as well as the daemon located on the other side of the database, is written in Perl and works asynchronously with the EV event loop and various useful modules, such as EV-Tarantool and Async::Chain.
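To illustrate the “separate FFmpeg instance per fragment” setup, here is a hedged Python sketch that builds a command line for one fragment using stock FFmpeg options only. It does not include our patch or its argument, so fragments produced this way would still show the click described above. The function name, the output file naming, and the codec choices (`libx264`, `aac`) are assumptions made for the example.

```python
def segment_command(source_url: str, index: int, height: int,
                    segment_s: int = 10) -> list:
    """Build an FFmpeg argument list that converts one fragment of a source file.

    The output file name is a hypothetical naming scheme for this sketch.
    """
    start = index * segment_s
    output = f"fragment_{index}_{height}p.ts"
    return [
        "ffmpeg",
        "-ss", str(start),                 # seek in the input to the fragment start
        "-i", source_url,
        "-t", str(segment_s),              # write at most one fragment's worth
        "-vf", f"scale=-2:{height}",       # target height, width kept even
        "-c:v", "libx264",
        "-c:a", "aac",
        "-output_ts_offset", str(start),   # keep timestamps continuous across fragments
        "-f", "mpegts",
        output,
    ]
```

For instance, `segment_command("http://storage.example/movie.mp4", 1, 720)` describes the second ten-second fragment in 720p; the list can be passed to `subprocess.run` on a machine with FFmpeg installed.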

Interestingly, no extra servers were installed for the new video streaming service in Mail.Ru Cloud: conversion (the most resource-intensive part) runs on our storage servers in a specially isolated environment. Logs and graphs show that our capacity allows for several times greater load than what we have now. For reference: since the launch of our video streaming service at the end of June 2015, more than 5 million unique videos have been requested; 500–600 unique files are watched every minute.

The frontend

Nowadays, almost everyone has a smartphone. Or two. It’s no big deal to make a short video for your friends and family. That’s why we were ready for the scenario where a person uploads a video from their phone or tablet to Mail.Ru Cloud and deletes it from their device right away to free up space. If the user wants to show this video to somebody, they can simply open it with a Mail.Ru Cloud app, or start up the player in the Cloud web version on their desktop. You no longer have to keep all your video clips on your phone, and you still have access to them on any device. The streaming bitrate is reduced on mobile Internet and, consequently, so is the amount of data transferred.

Furthermore, when playing a video on a mobile platform, we use the native Android and iOS libraries. That’s why the video plays on “out-of-the-box” smartphones and tablets, including in mobile browsers: we don’t need to create an extra player for the format we use. As in the web version on desktop computers, the adaptive streaming mechanism is activated, and the image quality is dynamically adapted to the current bandwidth.

One of the main differences between our player and our competitors’ is that our video player is independent of the user’s environment. Most of the time, developers create two different players: one with a Flash interface, and another (for browsers with native HLS support, for example, Safari) that is exactly the same but implemented in HTML5, and then load the appropriate one. We only have one player, and we wanted its interface to be easy to change. Therefore, our player looks very similar for both video and audio: all the icons, layout, etc., are written in HTML5. The player doesn’t depend on the technology used for playing the video.

We use Flash for drawing the video, but the whole interface is built in HTML; therefore, we don’t encounter version synchronization problems, since there is no need to support particular Flash versions. An open-source library was enough to play HLS. We wrote a shim to translate the HTML5 video element interface to Flash. That’s why we could write our whole interface on the assumption that we would always work with HTML5. If the browser doesn’t support this format, we simply replace the native video element with our own one that implements the same interface.

If the user’s device doesn’t support Flash, the video plays in HTML5 with HLS support (so far, this is only implemented in Safari). On Android 4.2+ and iOS, HLS is played using the native tools. If neither Flash nor native HLS playback is available, we offer the user a file download.
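The fallback chain from this section fits in a few lines. The sketch below is in Python only to keep it short (the real player runs in the browser); `Playback` and `choose_playback` are hypothetical names, and trying Flash before native HLS is an assumption based on the order in which the options are described here.

```python
from enum import Enum


class Playback(Enum):
    FLASH = "flash"            # Flash draws the video behind the HTML5 interface
    NATIVE_HLS = "native_hls"  # the browser or OS plays HLS itself
    DOWNLOAD = "download"      # no playback possible, offer the file instead


def choose_playback(has_flash: bool, has_native_hls: bool) -> Playback:
    """Pick a playback technology; the interface on top stays the same."""
    if has_flash:
        return Playback.FLASH
    if has_native_hls:
        return Playback.NATIVE_HLS
    return Playback.DOWNLOAD
```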

***

If you have experience implementing a video player, welcome to the comment section: I’m very keen to know how you would break a video into fragments, how you would choose between storage and caching, and what other challenges you have faced. All in all, let’s share our experience.