Stuff The Internet Says On Scalability For October 7th, 2016

Hey, it's HighScalability time:

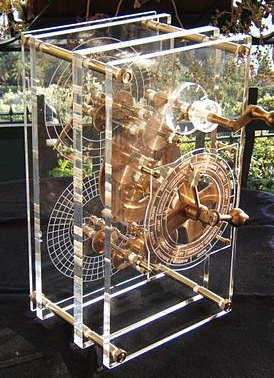

The world's oldest analog computer, from 87 BC: the otherworldly Antikythera mechanism.

If you like this sort of Stuff then please support me on Patreon.

- 70 billion: facts in Google's knowledge graph; 80 million: monthly visitors to walmart.com; 50%: lower cost for sending a container from Shanghai to Europe; 6 billion: Docker Hub pulls every 6 weeks; 5x: impact reduction from a new airbag helmet; 400: nodes in a Cassandra + Spark cluster on Azure; 66%: loss of installs when apps exceed 100MB; 223GB: self-driving car data open sourced by Udacity.

- Quotable Quotes:

- rfrey: The success of many companies, and probably all of the unicorns, has nothing to do with technology. The tech is necessary, of course, but so are desks and an accounting department. Internalizing that has been difficult for me as an engineer.

- @mza: 72 new features/services released last month on #AWS. 706 so far this year (up 42.9% YoY).

- Marc Andreessen: To me the problem is clear: The problem is insufficient technological adoption, innovation, and disruption in these high-escalating price sectors of the economy. My thesis is that we're not in a tech bubble — we’re in a tech bust. Our problem isn't too much technology or people being too excited about technology. The problem is we don't have nearly enough technology. These cartel-like legacy industries are way too hard to disrupt.

- @mfdii: What did the NSA agent say when it got access to all the email? Yahoo!

- Ben Thompson~ [Google's Pixel event] was a huge event; you rarely see a company change business models.

- @kerryb: News just in: databases to be “named and shamed” if they use foreign keys without trying to train local British keys first.

- kazagistar: The biggest use of REST in our system (and I suspect a lot of large newer systems) is not "web client to backend server" but "microservice to microservice". And for this, GraphQL is severely immature.

- @amcafee: Tesla software update: good braking "even if a UFO were to land on the freeway in zero visibility conditions."

- evanelias: Facebook uses MySQL for countless other critical OLTP use-cases, and (for better or worse) even a few OLAP use-cases. It's the primary store of Facebook, across the entire company. It's the storage layer for ad serving, payments, async task persistence, internal tooling, many many other things. Most of these use-cases make full use of SQL and the relational model.

- @rakamaric: Deschutes Brewery using light-weight formal methods (white-box fuzzing) to find bugs in their code! #soarlab

- @tottinge: "Crowdsourcing is the tyranny of the herd, not the wisdom of crowds" @snowded #lascot16

- @pedrolopesme: @toddlmontgomery "Your API is a protocol. Treat it like one." #qconnyc 2016

- Rodrick Brown: A pattern today many use to accomplish this [logging] is using a kafka logging library that hooks into their microservice and use something like spark to consume the logs from Kafka into elasticsearch. We're doing hundreds of thousands of events/sec on a tiny ~8 node ES cluster.

- @dominicad: "The way people make decisions is key to understanding company culture. Instead of system analysis, record decisions." @snowded #lascot16

- Hugh E. Williams: Engineers irrationally avoid hash tables because of the worst-case O(n) search time. In practice, that means they’re worried that everything they search for will hash to the same value.

- @JoeEmison: That's just not accurate. I've spent the last year trying to run on GCP and keep going back to AWS. It's not just perception.

- boulos: Where I do agree is networking egress. The big three providers all have metered bandwidth rates that are way above the "all inclusive" fee you pay to Hetzner, OVH, DO, and others. The cheapest way to host an FTP server that serves 20 TB per month is certainly on one of these (today). None of these providers will let you serve 1 PB / month this way, but if you're in their sweet spot and they can make it work out on average, it's a good fit.

- @DDDBE: "If you have a magical genie, you still have the problem of trying to explain what you want. That is domain complexity." @malk_zameth

- avitzurel: The networking on AWS needs to be better. I don't want the strongest machine just to have a better transfer rate. It makes complete sense to have a micro machine for some services, but if those services are accessed or access other HTTP/s services, it will be unnecessarily slow.

- Alan Huang: the number of [Internet] hops can be reduced by 2X by converting the network into a toroid. The number of hops can be further reduced by recasting the network into N-dimensional hypercube or into a multistage network, such as a Perfect Shuffle or Banyan.

- @jessfraz: Can we go back to ncurses apps instead of these memory hogging bullshits?

- Russ White: The reality is we shouldn’t need DevOps for configuration at all. This is a bit of a revolution in my thinking in the last two or three years, but what I’m trying to do is to simply make DevOps, as it’s currently constituted, obsolete. DevOps should be about understanding how the network is working and making the network work better.

- Software is eating the world, but software is also eating software. Laugh. Cry. Shake your head and then your fist, but it's a satire that's all true: How it feels to learn JavaScript in 2016. Epic. Once you wipe away the tears you may also realize this is a great tutorial on all the different frameworks and how they fit together. You won't find better.

- Videos are available for Full Stack Fest 2016, held in Barcelona, with topics ranging from Docker, IPFS & GraphQL to Reactive Programming, Immutable Interfaces & Virtual Reality.

- Great analogy by paulddraper on cloud pricing: "Restaurant prices are ridiculous ... made the comparison between groceries and menu offerings of McDonalds, Taco Bell, Burger King ... Olive Garden (SO EXPENSIVE) and you pay 5 times at a restaurant for the same." You're not paying just for hardware. You're paying for hardware, expertise, services, and convenience. On-prem or colocation may be a good choice, but limiting your comparison to raw computing power mischaracterizes the decision.

- Interesting discussion on HackerNews as to why RethinkDB is shutting down. A lot of people praise the technical prowess of RethinkDB (it just works, many say), yet it did not succeed. Why? formula1: RethinkDB failed because, despite its sweet name, it wasn't solving that important of problems. pluma: But the company was not able to turn any of those numbers into revenue. Downloads, GitHub stars and Twitter mentions don't pay to keep the lights on. A successful open source project does not equal a successful commercial enterprise. tensor: I think it failed because it failed to gain popularity for social reasons. It solved a lot of very important correctness problems. On the other hand MongoDB is successful despite having many serious problems. jkarneges: If I had to speculate, I'd say that they spent a long time in development before monetizing, longer than investors were willing to entertain. It's hard for a B2B company to raise a Series B without a thoroughly proven revenue engine. jnordwick: Time to man up. Performance really was crappy? That only having a float for your ONLY number type really was kind of dumb? Having no native datetime was bad? Saddling timezones on the pseudo datetime was a brainfart? Milliseconds only was shortsighted? Horizon was a bad idea too soon? Document databases are the new object databases - forever 5 years away? Aggregates were poorly done? Nobody really knew how to do stationary time series properly. _benedict: Having worked for a successful(ish) database vendor, I can say that user stupidity (ignorance) is a major factor in database software success.

- You will not believe the three simple tests that can prevent most critical failures: error handlers that ignore errors; error handlers with “TODO” or “FIXME” in the comment; and error handlers that catch an abstract exception type (e.g. Exception or Throwable in Java) and then take drastic action such as aborting the system. From The Morning Paper: Simple testing can prevent most critical failures.

- Someone said once that academic debates are vicious because the stakes are so low. Computer science is not free of these arguments. Here are some of them: Vigorous Public Debates in Academic Computer Science. One interesting bit is "while I cannot find online copies of their rebuttals, Knight and Leveson’s reply to the criticisms includes plenty of quotes." Reminds me of how we often only have quotes from writers who were being accused of heresy by theologians trying to establish Church orthodoxy. Their heterodox writings have long since been purged. Only the quotes remain.

- Interesting kind of software flow for hardware development. Epiphany-V: A 1024-core 64-bit RISC processor: "The Epiphany-V was designed using a completely automated flow to translate Verilog RTL source code to a tapeout ready GDS, demonstrating the feasibility of a 16nm “silicon compiler”. The amount of open source code in the chip implementation flow should be close to 100% but we were forbidden by our EDA vendor to release the code. All non-proprietary RTL code was developed and released continuously throughout the project as part of the “OH!” open source hardware library.[20] The Epiphany-V likely represents the first example of a commercial project using a transparent development model pre-tapeout." Isn't this inefficient? adapteva: Yes, we are leaving 2X on the table in terms of peak frequency compared to well staffed chipzilla teams. Not ideal, but we have a big enough of a lead in terms of architecture that it kind of works.

- Sounds good. Computer Scientists Close In on Perfect, Hack-Proof Code. But UncleMeat says not so fast: It's very true that formal methods have improved dramatically in the last decade. SMT solvers are finally good enough for real world use. But the idea of using something like this to prevent Heartbleed is still a dream. It's still far far far too hard to write complex systems with proof assistants in the real world.

- Plug your ears against the siren song of the database that does everything. NoPlsql vs ThickDB: which one requires a bigger database server? The article advocates a thick database approach, where all business logic is written in PL/SQL and runs inside the database. This is old school. And it works great...until it doesn't, and then you are totally without options. And who wants to program in SQL?

- Social engineering may work just as well on AIs. How to steal the mind of an AI: Machine-learning models vulnerable to reverse engineering: Taking advantage of the fact that machine learning models allow input and may return predictions with percentages indicating confidence of correctness, the researchers demonstrate "simple, efficient attacks that extract target ML models with near-perfect fidelity for popular model classes including logistic regression, neural networks, and decision trees."

- Tumblr made smart use of proxies to move database masters between data centers. Juggling Databases Between Datacenters: When performing the cutover, our workflow was as follows. In each data center, there was a config which pointed to a local read slave, a remote master, and a local proxy with the master (remote or local) as a backend. When moving masters between datacenters, our database automation, Jetpants (new release coming soon!), reparented all replicas, and our automation updated the proxy backend to point to the new master. This resulted in seconds of read-only state per database pool and minimal user impact.

- New, lower Azure pricing: cuts of between 11% and 50%, depending on what you use. Good to see.

- Open source giveth and it taketh away. Brace yourselves—source code powering potent IoT DDoSes just went public: "Both Mirai and Bashlight exploit the same IoT vulnerabilities, mostly or almost exclusively involving weakness involving the telnet remote connection protocol in devices running a form of embedded Linux known as BusyBox." These bots are smart enough to not totally commandeer a device. The device will keep on working, perhaps a bit slower, all the while it's performing nefarious deeds.

- A modern version of the civil service examination system in Imperial China. google-interview-university: A complete daily plan for studying to become a Google software engineer.

- InfraKit, for creating and managing declarative, self-healing infrastructure. As explained by Docker founder shykes: "InfraKit is a higher-level component, it focuses on production deployment and "self-healing" state reconciliation. You can actually use terraform as a backend for infrakit. Another difference is that Infrakit is not a standalone tool, it's a component designed to be embedded in a higher-level tool. For example we're embedding it in the Docker platform for built-in infrastructure management."

- Here's an excellent overview of InfraKit and where it fits in the scheme of things by Jesse White: Docker Kata 006: Using components called plugins, InfraKit is at the core a set of collaborating processes. Implemented as servers running over HTTP and communicating through unix sockets, plugins define an interface that provide the behavior of three types of primitives: groups, instances and flavors.

- If you've wondered how you might run your own private cloud, then go shopping at Walmart. Really. Walmart releases a lot of open source code. Application Deployment on OpenStack via OneOps. OneOps is a multi-cloud, open-source orchestration platform for DevOps. A big win is that it supports deployment on major public and private clouds, and on bare metal. The article is a nice step-by-step showing how to deploy MySQL on OpenStack via OneOps. See also Electrode: a platform for building universal React/Node.js applications with standardized structure, best practices, and modern technologies baked in.

- Another thing we always knew deep in our hearts. Practice Doesn’t Make Perfect: But for education and professions like computer science, military-aircraft piloting, and sales, the effect ranged from small to tiny. For all of these professions, you obviously need to practice, but natural abilities matter more.

- Yahoo scanned all of its users’ incoming emails on behalf of U.S. intelligence officials. Consider how a secret command could transform your Echo from family friend to betrayer. An opened microphone would hear everything. The only fix is design. No device should have a command to turn on a microphone and transmit data to the Enemy.

- Neuroscientists Have a New Computational Model for Memory: Rather than just a single dial, what if synapses could be imagined as arrays of multiple dials? It would be as if the same synapses could simultaneously serve as both RAM (fast, volatile memory) and hard-drive memory (slow, long-term). "This form of synaptic complexity allows extended memory lifetimes without sacrificing the initial memory strength," the paper explains, "accounting for our remarkable ability to remember for long times a large number of details even when memories are learned in one shot."

- Great story on A Technical Follow-Up: How We Built the World’s Prettiest Auto-Generated Transit Maps. Lots of interesting comparisons with Google and Apple maps. First they built a compression algorithm that shrinks transit schedules to as much as 100 times smaller than the zip files transit agencies provide. They implemented a special sparse image library that could deal with these very large monochromatic images with relatively few white areas. Then they created an algorithm to draw transit shapes on a giant black-and-white canvas representing the whole world, where every pixel is equivalent to one square meter.

- Yep, this sort of hubris affects many a programmer/godling: "I ran across some people who claimed that Lucene was going to surpass Google’s capabilities any day now." Dan Luu meditates on how people can be so tragically wrong. Why's that company so big? I could do that in a weekend: "When people imagine how long it should take to build something, they’re often imagining a team that works perfectly and spends 100% of its time coding. But that’s impossible to scale up. The question isn’t whether or not there will be inefficiencies, but how much inefficiency. A company that could eliminate organizational inefficiency would be a larger innovation than any tech startup, ever. But when doing the math on how many employees a company “should” have, people usually assume that the company is an efficient organization." Great discussion on HackerNews.

- Why we moved from Amazon Web Services to Google Cloud Platform?: Amazon’s awesome, but Google Cloud is built by developers, for developers, and you see it right away...GAE just works, has auto-scaling, load-balancers and free memcache all built in...Google lets you create custom machine types with any cpu/memory configuration...Google connects each and every VM to its super-fast, low-latency networking...Google bills by the minute (not hour) and apply AUTOMATIC DISCOUNTS for long-running workloads...GCP has only Pub/Sub which just works and is insanely scalable...Google BigQuery is nicely priced by the GB stored and TB queried...Amazon has one of the most confusing IAM...

- Ronald Bradford on Improving MySQL Performance with Better Indexes. It's a slide deck, but you can still get the gist of the techniques, which reduced query times from 175ms to 10ms and eliminated 100 AWS EC2 servers. There's a 6-step process: capture, identify, confirm, analyze, optimize, verify. Examples and commands are given for each step.

- If you are downplaying the Chinese silicon ecosystem, then you are making a mistake, says Charlie Demerjian. For example: Phytium shows off 64-core ARM server systems: we left Hot Chips deeply impressed by what Phytium has accomplished. They have two homegrown ARM cores, three CPUs/SoCs from 4-64 cores, and they run. Not only that, they are not delicate demo systems, they just work.

- Good discussion: Ask HN: Alternatives to AWS? Lots of different options and opinions on those options. No conclusions of course, but lots to think about.

- An early example of how an installed base creates a strategy tax on the future. The Longbox: CDs had been around for a few years, but record stores still didn’t have a good way to display them, because their shelves were formatted to display 12” vinyl LPs. The solution was to package CD jewel cases inside of cardboard boxes that were just as tall as a vinyl album but half as wide. This allowed the shelves to fit two “longbox” CDs side-by-side on an LP rack.

- Eventsourcing: Why Are People Into That?: What makes the combination of eventsourcing, Domain Driven Design and CQRS so attractive is that it can greatly simplify building software which keeps subsystems cleanly separated and independently maintainable as more features are added over time, akin to what we have learned to do for cars, spacecraft and toasters. See also Developing Transactional Microservices Using Aggregates, Event Sourcing and CQRS - Part 1.

- Hostinger with a good description of their automation infrastructure. The Cost Of Infrastructure Automation: For network automation we use Ansible because it has flexible template mechanism...configurations are pushed using our first-class citizen Chef and some parts are handled with Consul + consul-template...Why not run a single tool for all things? Because every tool has its own limitations...We make changes on Github by creating a pull request and Jenkins pulls these changes...we do changes locally on personal laptop first, later push the code to Github as Pull Request...When we provision a new server, it is automatically detected by our monitoring platform Prometheus...Network automation is done using primary tool Ansible. We use Cumulus network operating system which allows us to have a fully automated network where we reconfigure the network including BGP neighbors, firewall rules, ports, bridges, etc. on changes...We internally use Slack and it’s common sense to do work as much as possible in the chat.

- yahoo/open_nsfw: This repo contains code for running Not Suitable for Work (NSFW) classification deep neural network Caffe models. Please refer to our blog post, which describes this work and experiments in more detail.

- Graph Query Engine Benchmark: compare the ability of varying graph frameworks in a reproducible manner. We hope that this in turn will churn up interesting discussion about improvements that need be addressed at both the individual and overall level.

- Full Resolution Image Compression with Recurrent Neural Networks: We compare to previous work, showing improvements of 4.3%-8.8% AUC (area under the rate-distortion curve), depending on the perceptual metric used. As far as we know, this is the first neural network architecture that is able to outperform JPEG at image compression across most bitrates on the rate-distortion curve on the Kodak dataset images, with and without the aid of entropy coding.
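Hugh E. Williams's quote is about the gap between the worst case and the typical case for hash tables. A minimal sketch, assuming a separate-chaining table and using SHA-256 purely as a convenient deterministic stand-in for a well-mixed hash (real tables use much faster functions): with 1,024 keys in 1,024 buckets the longest chain stays short, and only a degenerate hash that sends every key to one bucket produces the O(n) chain engineers worry about. The key names are hypothetical.

```python
import hashlib

def strong_hash(key: str) -> int:
    """Deterministic, well-mixed hash: the first 8 bytes of SHA-256 as an integer."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

def longest_chain(keys, num_buckets, hash_fn):
    """Build a separate-chaining table and return the length of its longest bucket."""
    buckets = [[] for _ in range(num_buckets)]
    for key in keys:
        buckets[hash_fn(key) % num_buckets].append(key)
    return max(len(bucket) for bucket in buckets)

keys = [f"user:{i}" for i in range(1024)]               # hypothetical keys
typical = longest_chain(keys, 1024, strong_hash)        # a handful of keys at most
worst = longest_chain(keys, 1024, lambda key: 42)       # every key collides: O(n) lookups
print(typical, worst)
```

The worst case needs every key to land in the same bucket, which is what an adversarial or broken hash does, not what a reasonable hash does on ordinary keys.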
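Alan Huang's point about toroids and hypercubes can be checked with a small breadth-first search. This sketch compares three hypothetical 64-node topologies: an 8 x 8 mesh, the same grid with wraparound links (a torus), and a 6-dimensional hypercube where each hop flips one address bit. It reports the average hop count over all ordered source/destination pairs (including a node to itself) and the diameter (worst-case hops).

```python
from collections import deque
from itertools import product

def hop_stats(nodes, neighbors):
    """BFS from every node; return (average hops over all ordered pairs, diameter)."""
    total = pairs = diameter = 0
    for src in nodes:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            cur = queue.popleft()
            for nxt in neighbors(cur):
                if nxt not in dist:
                    dist[nxt] = dist[cur] + 1
                    queue.append(nxt)
        total += sum(dist.values())
        pairs += len(dist)
        diameter = max(diameter, max(dist.values()))
    return total / pairs, diameter

def grid(k, wrap):
    """Neighbor function for a k x k mesh, or a k x k torus when wrap=True."""
    def neighbors(node):
        x, y = node
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nx, ny = x + dx, y + dy
            if wrap:
                yield nx % k, ny % k          # wraparound links close each row/column
            elif 0 <= nx < k and 0 <= ny < k:
                yield nx, ny
    return neighbors

def hypercube(dim):
    """Neighbor function for a dim-dimensional hypercube: flip one address bit per hop."""
    return lambda v: (v ^ (1 << b) for b in range(dim))

cells = list(product(range(8), repeat=2))     # 64 nodes
mesh = hop_stats(cells, grid(8, wrap=False))
torus = hop_stats(cells, grid(8, wrap=True))
cube = hop_stats(range(64), hypercube(6))     # also 64 nodes
print(mesh, torus, cube)                      # (5.25, 14) (4.0, 8) (3.0, 6)
```

Wrapping the mesh into a torus nearly halves the worst-case path (14 hops to 8), while the average improves less (5.25 to 4.0); the hypercube cuts both further at the cost of more links per node.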
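One of jnordwick's RethinkDB complaints, "only having a float for your ONLY number type", has a concrete consequence worth seeing. Assuming, as the quote says, that every number is stored as an IEEE 754 double, integers above 2**53 can no longer all be represented exactly. The ID value below is hypothetical.

```python
# An IEEE 754 double has a 53-bit significand, so not every integer above
# 2**53 fits exactly; conversion rounds to the nearest representable value.
user_id = 2**53 + 1                  # a hypothetical large 64-bit ID
stored = float(user_id)              # what a double-only store would keep
print(user_id, int(stored))          # 9007199254740993 9007199254740992
print(float(2**53) == stored)        # True: two distinct IDs now compare equal
```

This is why systems with large integer IDs (for example, 64-bit identifiers) usually want a native integer type or store such IDs as strings.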
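The three checks from Simple testing can prevent most critical failures are simple enough to automate. The paper's checker targeted Java; this is only a rough Python analog built on the standard `ast` module (needs Python 3.8+ for `end_lineno`). Assumptions: bare `except:`, `Exception`, and `BaseException` count as "abstract" types, and calls named `exit`, `_exit`, or `abort` count as drastic actions. The sample code it scans is hypothetical.

```python
import ast

ABSTRACT_TYPES = {"Exception", "BaseException"}   # assumed analog of Exception/Throwable
DRASTIC_CALLS = {"exit", "_exit", "abort"}         # e.g. sys.exit, os._exit, os.abort

def is_noop(stmt):
    """True for `pass` or a bare `...` statement."""
    return isinstance(stmt, ast.Pass) or (
        isinstance(stmt, ast.Expr)
        and isinstance(stmt.value, ast.Constant)
        and stmt.value.value is Ellipsis
    )

def call_name(call):
    """Name of the called function: `exit` for both `exit()` and `sys.exit()`."""
    func = call.func
    return func.attr if isinstance(func, ast.Attribute) else getattr(func, "id", None)

def check_handlers(source):
    """Return (line, problem) pairs for except-blocks matching the three patterns."""
    lines = source.splitlines()
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.ExceptHandler):
            continue
        # Comments are not in the AST, so scan the handler's raw source lines.
        text = "\n".join(lines[node.lineno - 1:node.end_lineno])
        if all(is_noop(s) for s in node.body):
            findings.append((node.lineno, "ignores the error"))
        if "TODO" in text or "FIXME" in text:
            findings.append((node.lineno, "TODO/FIXME in handler"))
        caught = node.type.id if isinstance(node.type, ast.Name) else None
        if node.type is None or caught in ABSTRACT_TYPES:
            calls = [n for n in ast.walk(node) if isinstance(n, ast.Call)]
            if any(call_name(c) in DRASTIC_CALLS for c in calls):
                findings.append((node.lineno, "abstract catch then abort"))
    return findings

SAMPLE = """
import sys
try:
    load()
except ValueError:
    pass
try:
    save()
except KeyError as e:
    log(e)  # TODO: handle properly
try:
    run()
except Exception:
    sys.exit(1)
"""
print(check_handlers(SAMPLE))
```

Each of the three handlers in the sample trips exactly one check, which is the point of the paper: these patterns are cheap to find mechanically.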
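The model-extraction item says returned confidence scores make attacks easy. For logistic regression, one equation-solving approach is easy to show: the logit of a returned probability is exactly `w·x + b`, so querying the origin reveals the bias and querying each unit vector reveals one weight, for d + 1 queries in total. This is a minimal sketch; the "victim" below is a hypothetical local function standing in for a prediction API, and its weights are made up.

```python
import math

def make_victim(weights, bias):
    """Hypothetical black-box logistic-regression API returning P(class = 1)."""
    def predict_proba(x):
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return predict_proba

def logit(p):
    """Inverse of the sigmoid: recovers w.x + b from a confidence score."""
    return math.log(p / (1.0 - p))

def extract(predict_proba, d):
    """Recover (weights, bias) with d + 1 queries by inverting the sigmoid."""
    bias = logit(predict_proba([0.0] * d))            # query the origin: logit = b
    weights = []
    for i in range(d):
        e_i = [0.0] * d
        e_i[i] = 1.0                                  # query unit vector: logit = w_i + b
        weights.append(logit(predict_proba(e_i)) - bias)
    return weights, bias

victim = make_victim([0.8, -1.5, 2.0], 0.3)
w, b = extract(victim, 3)
print([round(x, 6) for x in w], round(b, 6))
```

Returning only the class label instead of a probability removes this shortcut, although the paper reports that label-only attacks remain possible with more queries.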
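Tumblr's cutover works because applications never point at a master directly; they point at a local proxy whose backend can be swapped. The sketch below models only that indirection, not Tumblr's actual proxy or Jetpants: `DbProxy`, the host names, and the `reparent_replicas` callback are all hypothetical, and "forwarding" is faked by returning a string.

```python
import threading

class DbProxy:
    """Hypothetical local proxy: apps always connect here; it forwards to the current master."""

    def __init__(self, master):
        self._lock = threading.Lock()
        self._master = master
        self._read_only = False

    def write(self, query):
        with self._lock:
            if self._read_only:
                raise RuntimeError("pool is read-only during cutover")
            return f"{self._master} <- {query}"   # stands in for forwarding the query

    def set_read_only(self, flag):
        with self._lock:
            self._read_only = flag

    def repoint(self, new_master):
        with self._lock:
            self._master = new_master

def cut_over(proxies, new_master, reparent_replicas):
    """Move the master with only a brief read-only window; app config never changes."""
    for proxy in proxies:
        proxy.set_read_only(True)
    reparent_replicas(new_master)   # the step Tumblr's automation performed
    for proxy in proxies:
        proxy.repoint(new_master)
        proxy.set_read_only(False)

proxies = [DbProxy("master.dc1"), DbProxy("master.dc1")]   # one proxy per data center
cut_over(proxies, "master.dc2", reparent_replicas=lambda m: None)
print(proxies[1].write("UPDATE posts ..."))   # master.dc2 <- UPDATE posts ...
```

The read-only window lasts only as long as reparenting takes, which matches the "seconds of read-only state per database pool" described in the post.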
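InfraKit's "self-healing state reconciliation" boils down to a loop that compares declared state with observed state and acts on the difference. This is a generic sketch of one pass of such a loop, not InfraKit's plugin API: `reconcile`, `provision`, and the instance dictionaries are hypothetical, with `provision` loosely playing the role of an instance plugin and the desired size that of a group's spec.

```python
from itertools import count

def reconcile(desired_size, observed, provision, destroy):
    """One pass of a self-healing loop: converge observed instances toward the declared size."""
    healthy = [inst for inst in observed if inst["healthy"]]
    for inst in observed:
        if not inst["healthy"]:
            destroy(inst)                 # replace failed instances
    while len(healthy) < desired_size:
        healthy.append(provision())       # scale up to the declared size
    while len(healthy) > desired_size:
        destroy(healthy.pop())            # scale down if over
    return healthy

_ids = count(1)

def provision():
    """Hypothetical stand-in for an instance plugin creating a VM."""
    return {"id": f"vm-{next(_ids)}", "healthy": True}

destroyed = []
group = [{"id": "vm-a", "healthy": True}, {"id": "vm-b", "healthy": False}]
group = reconcile(3, group, provision, destroyed.append)
print([i["id"] for i in group], [d["id"] for d in destroyed])
# ['vm-a', 'vm-1', 'vm-2'] ['vm-b']
```

Because each pass only acts on the difference, running it again when nothing has changed is a no-op, which is what makes it safe to run continuously.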
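The transit-map team's sparse image library is not described in detail, so this is only an illustration of the idea: when a huge monochrome canvas is mostly black, store just the white pixels. The dict-of-sets layout is an assumption (run-length encoding or tiling would be equally plausible), and lines are drawn with Bresenham's algorithm at the post's scale of one pixel per square meter.

```python
from collections import defaultdict

class SparseBitmap:
    """Monochrome canvas that stores only white pixels, grouped by row."""

    def __init__(self):
        self.rows = defaultdict(set)

    def set(self, x, y):
        self.rows[y].add(x)

    def get(self, x, y):
        return x in self.rows.get(y, ())    # .get avoids creating empty rows

    def white_pixels(self):
        return sum(len(row) for row in self.rows.values())

    def draw_line(self, x0, y0, x1, y1):
        """Bresenham's line algorithm: mark each pixel on the segment as white."""
        dx, dy = abs(x1 - x0), -abs(y1 - y0)
        sx = 1 if x0 < x1 else -1
        sy = 1 if y0 < y1 else -1
        err = dx + dy
        while True:
            self.set(x0, y0)
            if x0 == x1 and y0 == y1:
                break
            e2 = 2 * err
            if e2 >= dy:
                err += dy
                x0 += sx
            if e2 <= dx:
                err += dx
                y0 += sy

canvas = SparseBitmap()
canvas.draw_line(0, 0, 10_000, 0)   # a 10 km straight segment at 1 px = 1 m
canvas.draw_line(0, 0, 3, 3)        # a short diagonal sharing the origin pixel
print(canvas.white_pixels())        # 10004
```

Memory grows with the number of white pixels rather than the canvas area, which is what makes a world-sized canvas at one-meter resolution tractable.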
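Ronald Bradford's confirm/analyze/optimize/verify steps can be shown in miniature. This sketch swaps MySQL for Python's built-in `sqlite3` module so it runs anywhere. Assumption: SQLite's `EXPLAIN QUERY PLAN` stands in for MySQL's `EXPLAIN`, and its exact wording varies by SQLite version. The table, data, and index name are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany("INSERT INTO orders (customer_id, total) VALUES (?, ?)",
                 [(i % 500, i * 1.5) for i in range(10_000)])

QUERY = "SELECT id, total FROM orders WHERE customer_id = ?"

def plan(sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN for the query."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)))

before = plan(QUERY)     # confirm/analyze: a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")   # optimize
after = plan(QUERY)      # verify: the planner now searches the index
rows = conn.execute(QUERY, (42,)).fetchall()
print(before)
print(after)
```

The same habit applies in MySQL: read the plan before and after adding an index instead of assuming the optimizer will use it.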
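For readers new to the event sourcing item, here is a minimal sketch of the core idea: an aggregate's state is never stored directly; it is rebuilt by replaying an append-only log of events, and commands validate against that state before emitting new events. The `Account` aggregate, its event names, and the in-memory list used as an event store are hypothetical. The separate projection shows the CQRS side: a read model built from the same events.

```python
from dataclasses import dataclass

@dataclass
class Account:
    """Aggregate whose current state is derived entirely from its event history."""
    balance: int = 0
    version: int = 0

    def apply(self, event):
        """Fold one past event into state; events are facts, so no validation here."""
        kind, amount = event
        if kind == "Deposited":
            self.balance += amount
        elif kind == "Withdrawn":
            self.balance -= amount
        self.version += 1

    def withdraw(self, amount):
        """Command handler: validate against current state, then return a new event."""
        if amount > self.balance:
            raise ValueError("insufficient funds")
        return ("Withdrawn", amount)

def replay(events):
    """Rebuild the aggregate from scratch by replaying the append-only log."""
    account = Account()
    for event in events:
        account.apply(event)
    return account

def total_withdrawn(events):
    """A separate read-side projection (the query half of CQRS) over the same events."""
    return sum(amount for kind, amount in events if kind == "Withdrawn")

event_store = [("Deposited", 100), ("Withdrawn", 30)]    # hypothetical in-memory store
event_store.append(replay(event_store).withdraw(50))    # command -> new event appended
print(replay(event_store).balance, total_withdrawn(event_store))   # 20 80
```

New read models can be added later by writing another projection over the existing log, without touching the write side.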