VGhlIGNhdC1hbmQtbW91c2Ugc3Rvcnkgb2YgaW1wbGVtZW50aW5nIGFudGktc3BhbSBmb3IgTWFp bC5SdSBHcm91cOKAmXMgZW1haWwgc2VydmljZSBhbmQgd2hhdCBUYXJhbnRvb2wgaGFzIHRvIGRv IHdpdGggdGhpcw==

Hey guys!

In this article, I’d like to tell you a story of implementing the anti-spam system for Mail.Ru Group’s email service and share our experience of using the Tarantool database within this project: what tasks Tarantool serves, what limitations and integration issues we faced, what pitfalls we fell into and how we finally arrived to a revelation.

Let me start with a short backtrace. We started introducing anti-spam for the email service roughly ten years ago. Our first filtering solution was Kaspersky Anti-Spam together with RBL (Real-time blackhole list — a realtime list of IP addresses that have something to do with spam mailouts). This allowed us to decrease the flow of spam messages, but due to the system’s inertia, we couldn’t suppress spam mailouts quickly enough (i.e. in the real time). The other requirement that wasn’t met was speed: users should have received verified email messages with a minimal delay, but the integrated solution was not fast enough to catch up with the spammers. Spam senders are very fast at changing their behavior model and the outlook of their spam content when they find out that spam messages are not delivered. So, we couldn’t put up with the system’s inertia and started developing our own spam filter.

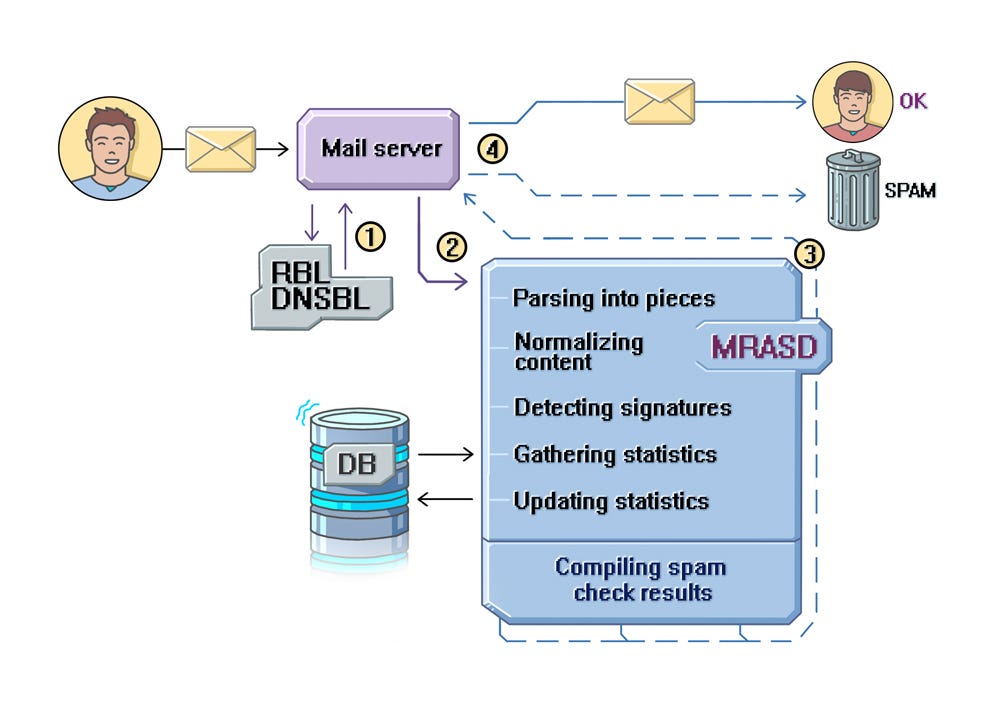

Our second system was MRASD — Mail.Ru Anti-Spam Daemon. In fact, this was a very simple solution. A client’s email message went to an Exim mail server, got through RBL that acted as the primary filter, and then went to MRASD where all the magic happened. The anti-spam daemon parsed the message into pieces: headers and body. Then it normalized each of the pieces using elementary algorithms like normalizing the character case (all in lowercase or uppercase), bringing similar-looking symbols to a specific form (using one symbol for the Russian and English “O”, for example), etc. After normalization, the daemon extracted so-called “entities”, or email signatures. Our spam filters analyzed different parts of the email message and blocked the message if they found any suspicious content. For example, we could define a signature for the word “viagra”, and all messages that contained this word were blocked. An entity could also be a URL, an image, an attachment and so on. Another thing done during the anti-spam check was to calculate a fingerprint for the verified email message. A fingerprint, calculated as a handful of tricky hash functions, was a unique characteristic of the message. Based on the calculated hash values and collected hash statistics, the anti-spam system could filter out the message as spam or let it through. When a hash value or an entity reached a certain frequency threshold, a server started blocking all matching email messages. For this purpose, we maintained statistics (counters) that tracked how often an entity was met, how often the recipients complained about it, and set an entity flag SPAM/HAM (in spam-related terminology, “ham” is the opposite of “spam” and means that the verified email message contains no spam content).

The core part of MRASD was implemented using C++, while a considerable piece of its business logic was implemented using an interpretive language, Lua. As I have already said, spammers are highly dynamic guys who change their behavior very fast. Our aim was to respond as fast to every change on the spammers’ side, that’s why we implemented our business logic using an interpretive language (with Lua, we didn’t have to recompile the system and update it on all servers every time). The other requirement was speed: code in Lua showed good results in performance testing. Finally, it was easy to integrate with code in C++.

The scheme above illustrates a simplified workflow of our anti-spam filter: an email message comes from the sender to our mail server; if the message has successfully passed the primary filter (1), it goes further to MRASD (2). MRASD returns its check results to the mail server (3), and based on these results the message is delivered either to the recipient’s “Spam” folder or to the inbox.

MRASD allowed us to decrease the number of non-filtered spam messages ten times. As time went on, we kept improving the system: added new subsystems and components, introduced new tools. So, the system kept growing and became still more complex, and anti-spam tasks also became still more diverse. These changes couldn’t help affecting our technology stack. That’s what the next part of this story is about.

Evolution of our technology stack

At the dawn of the era of email services, the message flow as well as the message content was notably scarcer than today. But the choice of tools and computing capacities was poorer too. As you can see from the above-described “parental” model of MRASD, it was necessary to store all sorts of statistical data. A considerable part of this data was “hot” (i.e. frequently used), and this posed certain requirements for the data storage system. As a result, we chose MySQL as a storage system for the “cold” data, but felt still undecided about that for the “hot” statistics. We analyzed all possible solutions (their performance and functionality as applied for “hot” but not mission-critical data) and finally arrived to Memcached — at that moment, this solution was stable enough. But we still had a problem with storing “hot” and critical data. Like any other cache solution, Memcached has its limitations, including no replication and the long-and-slow warm up period after the cache went down (and was flushed). Our further search brought us to Kyoto Cabinet, a non-relational key-value database system.

The time ticked by, and the email workload increased, and so did the anti-spam workload. There emerged new services that required storing ever more data (Hadoop, Hypertable). By the way, today’s peak processing workload reaches 550 thousand email messages per minute (if we calculate a daily average, this makes about 350 thousand email messages every minute), and the amount of log files to analyze is over 10 Tbytes a day. But let’s get back into the past: in spite of the increasing workloads, our requirements for data processing (loading, saving) remained the same. And one day we realized that Kyoto couldn’t manage the amount of data we needed. Moreover, we wanted a storage system with broader functionality for our “hot” and critical data. That said, it was high time to look around for better alternatives that would be more flexible and easier to use, with higher performance and failover capabilities. It was the time when a NoSQL database named Tarantool gained popularity within our company. Tarantool was developed inside the company and fully met our “wannas”. By the way, I’ve been lately revising our services, and I felt an archeologist when I bumped into one of the earliest Tarantool versions — Tarantool/Silverbox. We decided to give Tarantool a try as its benchmark tests covered the data amounts we needed (I don’t have exact workload figures for that period) and it also satisfied our requirements for memory usage. Another important factor was that the project team was located next door, and we could quickly make feature requests using JIRA. We were among the pioneers who decided to try Tarantool in their project, and I think that our first step towards Tarantool was much encouraged by the positive experience of the other pioneers.

That’s when our “Tarantool era” began. We actively introduced — and keep introducing — Tarantool into our anti-spam architecture. Today we have queues based on Tarantool, high-workload services for storing all sorts of statistics: user reputation, sender IP reputation, user trustworthiness (“karma” statistics), etc. Our current activity is integrating the upgraded data storage system into the our entity statistics processor. You may be wondering why we have focused on a single database solution for our anti-spam project and do not consider migrating to other storages. Well, that’s not quite the case. We consider and analyze competing systems as well, but for the time being Tarantool handles well all tasks and workloads required within our project. Introducing a new (unknown, previously not used) system is always risky and takes much time and resources. Meanwhile, Tarantool is a well-known tool for us (and for many other projects). Our developers and system administrators already know all the onions of using and configuring Tarantool and how to make the most of it. Another advantage is that Tarantool’s development team keeps improving its product and provides good support (and these guys are working next door, which is nice :)). When we were implementing still another Tarantool-based solution, we got all the necessary help and support straightaway (I will tell you about this a bit later).

Further on I’ll give you an overview of several systems in our anti-spam project that use Tarantool and will relate the issues we faced.

Overview of our systems that use Tarantool

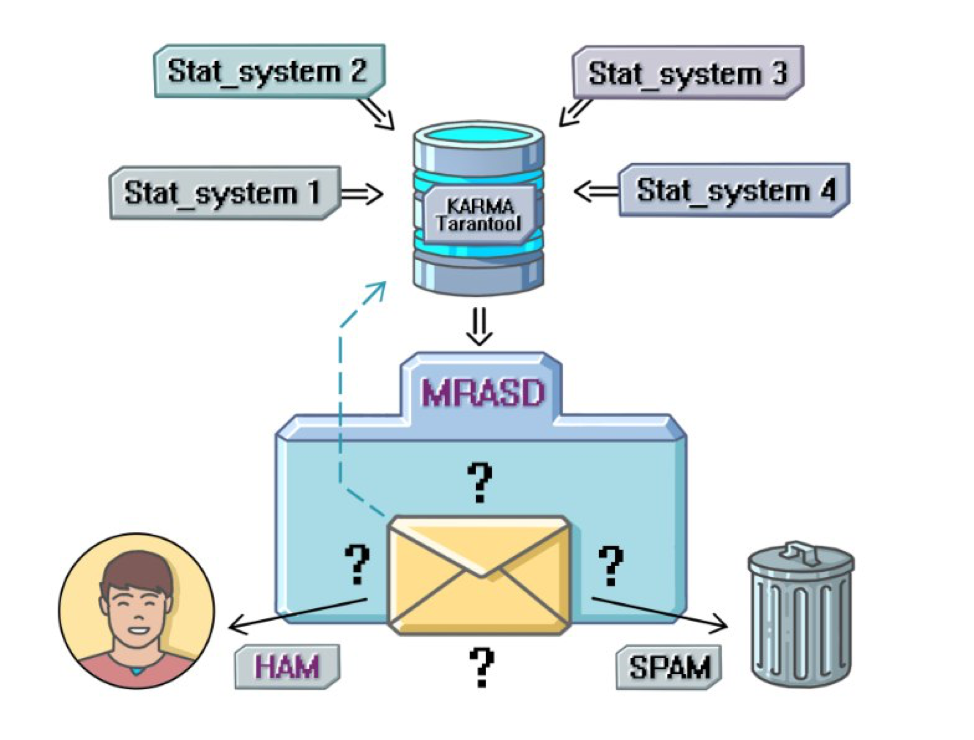

Karma

Karma is a numeric value that indicates a user’s trustworthiness. It was originally intended as the basis of a general “carrot and stick” system for users that wouldn’t require complex dependent systems. Karma is an aggregative value based on data received from other user reputation systems. The idea behind the Karma system is simple: every user has their karma — the higher, the more we trust this user; the lower, the more strict we are while assessing their email messages during our anti-spam check. For example, if a sender sends an email message with suspicious content and the sender’s karma rating is high, such message will hit the recipient’s inbox. And low karma rating would be a pessimistic factor for the anti-spam system. This system makes me think about an attendance book that a teacher consults during school examinations. Students that attended all classes get just a couple of extra questions and leave for vacations, while those who missed many classes will have to answer lots of questions to get a high grade.

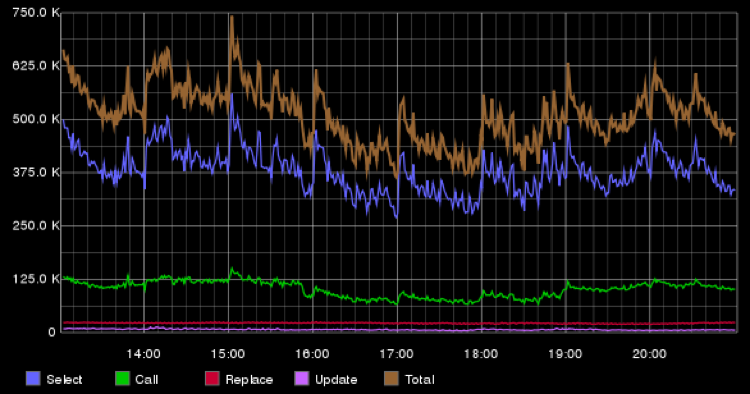

Tarantool that stores karma-related data works on a single server. The graph below illustrates the number of requests that one such instance performs per minute.

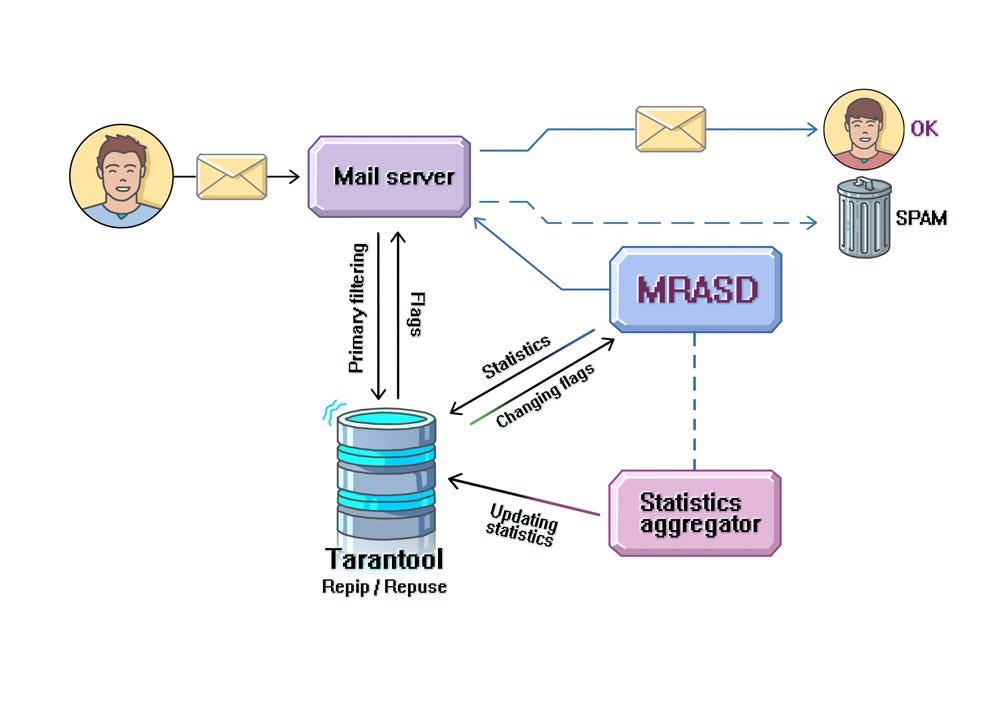

RepIP/RepUser

RepIP and RepUser (reputation IP and reputation user) is a high-workload service for processing statistics related to the activity and actions of a sender (user) with a specific IP as well as statistics related to how intensively a user worked with the email service over a certain period of time. This systems lets us know how many email messages a user has sent, how many of them were read, and how many were marked as spam. An advantage of this system is that it provides a timeline rather than a snapshot of a user’s activity. Why is that important for behavior analysis? Imagine that you have moved to a foreign country without any means of communication, and all your friends remained at home. Then, several years later, you get an Internet cable in your hut. Wow! You browse to the website of your favorite social network and see a photo your friend — hm, he has changed a lot… How much information can you get from that photo? I guess, not too much. And now imagine that you watch a video that shows your friend change, get married and so on — kind of a short biographical clip. I bet, in the second case you’ll get a much better idea of your friend’s life. The same thing is with data analysis: the more information we have, the better we can assess a user’s behavior. We can notice trends in a sender’s mailing activities, understand a sender’s habits. Based on this kind of statistics, each user and IP address is assigned “trust rating points” and a special flag. This flag is used in the primary filter that filters out up to 70% of spam messages before they even hit our mail server. This percentage illustrates the great importance of the reputation service. This is why this service requires the maximum possible performance and failure tolerance. And this is why we use Tarantool here.

Reputation statistics are stored on two servers with four Tarantool instances per each server. The graph below illustrates the average number of requests to RepIP per minute.

While we implemented the reputation service, we had a number of configuration issues with Tarantool. Unlike the systems we discussed earlier, a data packet for RepIP/RepUser is much larger: the average packet size here is 471,97 bytes (the maximal size is 16 Kbytes). Logically, a packet comprises two parts: a small “basic” part (flags, aggregated statistics) and a large statistical part (detailed per-action statistics). Addressing an entire packet results in intensive network usage, so it takes more time to load and save a record. Many systems need only the basic part of a packet, but how can we strip it out of a tuple (“tuple” is Tarantool’s term for a record)? Here’s where stored procedures come in handy. We added the required function to Tarantool’s init.lua file and called it from the client (starting from Tarantool version 1.6, you can write stored procedures in plain C).

Problems with Tarantool versions before 1.5.20

It would be wrong to say that we’ve never had problems with Tarantool. Yes, we had some. For example, after a scheduled restart, Tarantool clients (more than 500) failed to reconnect due to a timeout. We tried introducing progressive timeouts when after a failure the next reconnection attempt is delayed for some increasing amount of time, but this didn’t help. As we found out, the problem was that Tarantool accepted just one connection request within every cycle of its event loop, although there were hundreds of requests awaiting. We had two alternatives: install a new Tarantool version (1.5.20 or higher) or amend Tarantool’s configuration (disabling the io_collect_interval option solved the problem). Tarantool developers fixed this bug very quickly, so you won’t have it with Tarantool 1.6 or 1.7.

RepEntity — entity reputation

We are currently integrating a new component for storing entity statistics (URL, image, attachment, etc.) — RepEntity. The purpose of RepEntity is similar to that of the already discussed RepIP/RepUser: it offers detailed information about entity behavior, which is a decision criterion for our anti-spam filter. Thanks to RepEntity statistics, we can filter out a spam mailout based on the entities of an email message. As an example, a mailout may contain a suspicious URL (e.g. it may contain spam content or lead to a phishing website), and RepEntity helps us notice and block such mailouts much faster. How? We can see the mailing out dynamics of this URL, and we can detect changes in its behavior, which would be impossible with “flat” counters.

Besides a different data packet format, the basic difference between the RepEntity and RepIP systems is that RepEntity produces a tangibly higher workload on our service (the amount of processed and stored data is greater, and so is the number of requests). A single email message may contain up to a hundred entities (versus 10 IP addresses at maximum), and for most of these entities we must load and save a complete packet of statistics. It’s noteworthy that packets are saved by a special aggregator that first waits to accumulate enough statistics. So, this all implies much more workload for the database system and requires accurate design and implementation. Let me stress it that for RepEntity we used Tarantool 1.5 (due to some project limitations), so further on I’m writing about this version.

First thing, we estimated the amount of memory required to store all our statistics. To better illustrate the importance of this task, let me bring some figures: with the expected workload, increasing a data packet by one byte means increasing the total amount of data by one gigabyte. As you can see, our task was to store data in a tuple in the most compact way (as I have already said, we cannot store the entire packet in one tuple, because we have frequent requests for retrieving only part of the packet data). To calculate the amount of data to be stored in Tarantool, we also need to consider:

- extra space for storing the index

- extra space for storing the size of data in a tuple (1 byte)

- one-megabyte limitation for the maximum tuple size (for version 1.7, see http://tarantool.org/doc/book/box/limitations.html)

The increased number of various requests (read, insert, delete) made Tarantool produce timeout errors. Our investigation revealed that in case of frequent insertions and deletions Tarantool initiated a complex process of tree rebalancing (all our indexes were of TREE type). Tree indexes in Tarantool have their tricky self-balancing logic that’s initiated only when some “unbalanced” condition is met. So, when a tree got “unbalanced enough”, Tarantool initiated the rebalancing process and this made Tarantool stutter. In the logs, we found messages like Resource temporarily unavailable (errno: 11) that went away in a few seconds. But while those errors occurred, the client couldn’t get the requested data. Our colleagues from the Tarantool team came up with a solution: try using a different type of a tree index, AVLTREE, that gets rebalanced on every insertion/deletion/change. Indeed, although the number of rebalance calls has increased, their total cost was lower. After we updated the schema and restarted the database, the problem was gone forever.

Another problem we faced was cleaning up the outdated records. Unfortunately, Tarantool (as I know, that’s also true for version 1.7) doesn’t allow defining TTL (time to live) for a certain record and forget about it, having all cleanup activities delegated to the database. Well, you can still implement the desired cleanup logic yourself using Lua and box.fiber. On the bright side, this allows for greater flexibility: you can define complicated cleanup conditions, not only those based on TTL. However, to implement the cleanup logic correctly, you need to be aware of some nuances. The first cleaning fiber I implemented made Tarantool terribly slow. The fact is that the amount of data that we can delete is considerably smaller than the total number of records. To reduce the number of to-be-deleted record candidates, I introduced a secondary index built on the field I needed. After that, I implemented a fiber that traversed all candidates (whose last-modified timestamp was older than the timestamp indicated), checked additional cleanup conditions (e.g. whether the “write-in-progress” flag is currently set for the record) and, in case all conditions were met, the fiber deleted the record. When I tested my logic under zero workload, everything worked fine. Under a low workload, it was fine too. But when I increased the workload to half of the expected level, I got problems. My requests began failing with timeout errors. I understood that I must have slipped a cog. As I got deeper into the problem, I realized that I had a wrong idea of how a fiber worked. In my world, a fiber was a standalone thread that had no influence (except context switching) upon receiving and processing client requests. But shortly after I found out that my fiber used the same event loop as the request processing thread. This way, iterating in a cycle through a big number of records, without deleting anything, I simply blocked the event loop, so client requests were not processed. Why have I mentioned the delete operation? You see, every time I deleted some record, a yield operation happened that unblocked the event loop and allowed processing the next event. At this point, I concluded that if some N operations (where N was an empirically deduced value, I took N=100) were performed without yielding, it was necessary to force a yield (for example, using wrap.sleep(0)). Another thing to keep in mind was that record deletion could trigger an index update, so I could miss some data to delete when iterating through the records. Here came one more solution. In a cycle, I could select a small portion of elements (under 1000) and iterate through these elements, deleting the ones I needed and keeping track of the last non-deleted element. At the next iteration, I would select another small portion of elements starting from the last non-deleted one.

We have also made an attempt to implement a solution that would allow for smooth resharding in future, but this attempt failed: the implemented mechanism had too much overhead, so we abandoned resharding for now. Hopefully, we’ll find the resharding feature in the newer versions of Tarantool.

And here are some performance tips.

To increase Tarantool’s performance, you can disable *.xlog files. But remember that in this case Tarantool starts working only as a cache, with all the limitations that follow (I mean no replication and a long warmup period after restart). A workaround here is making a snapshot now and then and using it to restore data, if needed.

If you have several Tarantool instances on one machine, you can “pinpoint” each instance to a certain CPU core to improve performance. Nonetheless, if you have say 12 physical cores, it’s no good starting 12 instances, because along with the execution thread, each Tarantool instance also has a WAL thread.

Features wanted:

- Sharding.

- Cluster-based approach with the possibility of dynamic cluster configuration that comes in handy, for example, in case of adding nodes or if a node goes down, similar to MongoDB (mongos) and Redis (redis sentinel).

- Possibility to define TTL (time to live) for records cleanup.

Conclusions

Tarantool is a cornerstone of our anti-spam system, and many of our key high-workload services are based on it. Ready-made connectors make it possible to easily integrate Tarantool with components implemented using different programming languages. Tarantool has a long success story: over the years of using Tarantool in our anti-spam project, we have had no serious problems with its operation or stability. But to make the most of this database, you need to consider some configuration nuances (here we’ve been speaking about the nuances for Tarantool version 1.5).

A few words about our future plans:

- Increase the number of Tarantool-based services in our project.

- Migrate to Tarantool 1.7.

- Start using Vinyl engine, especially for RepEntity where the amount of really “hot” data is not that big.

Сохранить