The Data-Scope Project - 6PB storage, 500GBytes/sec sequential IO, 20M IOPS, 130TFlops

“Data is everywhere, never be at a single location. Not scalable, not maintainable.” –Alex Szalay

While Galileo played life-and-death doctrinal games over the mysteries revealed by the telescope, another revolution went unnoticed: the microscope gave up mystery after mystery, and nobody yet understood how subversive its revelations would be. For the first time, these new tools of perceptual augmentation allowed humans to peek behind the veil of appearance, a new eye driving human invention and discovery for hundreds of years.

Data is another material that hides, revealing itself only when we look at different scales and investigate its underlying patterns. If the universe is truly made of information, then we are looking into truly primal stuff. A new eye is needed for data, and an ambitious project called Data-Scope aims to be the lens.

A detailed paper on the Data-Scope tells more about what it is:

The system will have over 6PB of storage, about 500GBytes per sec aggregate sequential IO, about 20M IOPS, and about 130TFlops. The Data-Scope is not a traditional multi-user computing cluster, but a new kind of instrument, that enables people to do science with datasets ranging between 100TB and 1000TB. There is a vacuum today in data-intensive scientific computations, similar to the one that led to the development of the BeoWulf cluster: an inexpensive yet efficient template for data intensive computing in academic environments based on commodity components. The proposed Data-Scope aims to fill this gap.
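To get a feel for what those numbers mean, here is a rough back-of-envelope sketch of how long a single sequential pass over a dataset would take at the quoted aggregate rate. It assumes decimal units (1TB = 1000GB) and that the full 500GBytes/sec is actually achieved, which the paper states only as an approximate target; the function name is made up for illustration.

```python
# Back-of-envelope: one sequential pass over a dataset at the quoted aggregate rate.
AGGREGATE_GB_PER_SEC = 500  # "about 500GBytes per sec" quoted from the paper
GB_PER_TB = 1000            # assumption: decimal units


def scan_seconds(dataset_tb, rate_gb_per_sec=AGGREGATE_GB_PER_SEC):
    """Seconds needed to read dataset_tb terabytes once at rate_gb_per_sec."""
    return dataset_tb * GB_PER_TB / rate_gb_per_sec


# The two ends of the dataset range the instrument targets.
for size_tb in (100, 1000):
    secs = scan_seconds(size_tb)
    print(f"{size_tb} TB: {secs:.0f} s (about {secs / 60:.1f} min)")
```

Under these assumptions a 100TB dataset streams through in a few minutes and a 1000TB dataset in roughly half an hour, which is what makes whole-dataset passes practical on an instrument like this.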

A very accessible interview by Nicole Hemsoth with Dr. Alexander Szalay, Data-Scope team lead, is available at The New Era of Computing: An Interview with "Dr. Data". Roberto Zicari also has a good interview with Dr. Szalay in Objects in Space vs. Friends in Facebook.

The paper is filled with lots of very specific recommendations on their hardware choices and architecture, so please read the paper for the deeper details. Many BigData operations have the same IO/scale/storage/processing issues Data-Scope is solving, so it’s well worth a look. Here are some of the highlights:

- There is a growing distance between the computational and the I/O capabilities of high-performance systems. With the growing size of multicores and GPU-based hybrid systems, we are talking about many Petaflops over the next year.

- The system integrates high I/O performance of traditional disk drives with a smaller number of very high throughput SSD drives with high performance GPGPU cards and a 10G Ethernet interconnect.

- We need to have the ability to read and write at a very much larger I/O bandwidth than today, and we also need to be able to deal with incoming and outgoing data streams at very high aggregate rates.

- Eliminate a lot of system bottlenecks from the storage system by using direct-attached disks, a good balance of disk controllers, ports and drives. It is not hard to build inexpensive servers today where cheap commodity SATA disks can stream over 5GBps per server.
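The case for direct-attached disks can be sketched with simple arithmetic that compares the local streaming rate quoted above with what a 10G Ethernet link can carry. The assumptions here are mine, not the paper's: a single 10G link per server, no protocol overhead, decimal units, and a hypothetical 10TB working set.

```python
# Compare reading data from local disks with pulling it over one 10G Ethernet link.
LOCAL_GB_PER_SEC = 5.0      # "over 5GBps per server" from direct-attached SATA disks
LINK_GBIT_PER_SEC = 10      # 10G Ethernet interconnect
NETWORK_GB_PER_SEC = LINK_GBIT_PER_SEC / 8  # 1.25 GB/s, ignoring protocol overhead (assumption)
GB_PER_TB = 1000            # assumption: decimal units


def read_seconds(data_tb, rate_gb_per_sec):
    """Seconds needed to read data_tb terabytes at rate_gb_per_sec."""
    return data_tb * GB_PER_TB / rate_gb_per_sec


data_tb = 10  # hypothetical working set on one server
local = read_seconds(data_tb, LOCAL_GB_PER_SEC)
network = read_seconds(data_tb, NETWORK_GB_PER_SEC)
print(f"local disks: {local:.0f} s, one 10G link: {network:.0f} s, "
      f"ratio: {network / local:.1f}x")
```

Even in this idealized setting the network path is several times slower than the local disks, which is the imbalance the design avoids by doing the first pass over the data on the server that holds it.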

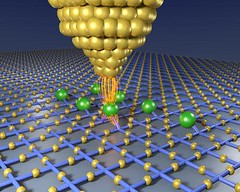

- GPGPUs are extremely well suited for data-parallel, SIMD processing. This is exactly what much of the data-intensive computations are about. Building systems where GPGPUs are co-located with fast local I/O will enable us to stream data onto the GPU cards at multiple GB per second, enabling their stream processing capabilities to be fully utilized.

- In a healthy ecosystem everything is a 1/f power law, and we will see a much bigger diversity [in database options].

- It requires a holistic approach: the data must be first brought to the instrument, then staged, and then moved to the computing nodes that have both enough compute power and enough storage bandwidth (450GBps) to perform the typical analyses, and then the (complex) analyses must be performed.

- There is general agreement that indices are useful, but for large scale data analytics we do not need full ACID; transactions are much more a burden than an advantage.

- Experimental and simulation data are growing at a rapid pace. Dataset sizes follow a power law distribution and challenges abound at both extremes of this distribution.

- The performance of different architecture components increases at different rates.

- CPU performance has been doubling every 18 months.

- The capacity of disk drives is doubling at a similar rate, somewhat slower than the original Kryder’s Law prediction, driven by higher density platters.

- Disks’ rotational speed has changed little over the last ten years. The result of this divergence is that while sequential IO speeds increase with density, random IO speeds have changed only moderately.

- Due to the increasing difference between the sequential and random IO speeds of our disks, only sequential disk access is possible – if a 100TB computational problem requires mostly random access patterns, it cannot be done.

- Network speeds, even in the data center, are unable to keep up with the doubling of the data sizes.

- With petabytes of data, we cannot move the data to where the computing is; instead we must bring the computing to the data.

- Existing supercomputers are not well suited for data intensive computations either; they maximize CPU cycles, but lack IO bandwidth to the mass storage layer. Moreover, most supercomputers lack disk space adequate to store PB-size datasets over multi-month periods. Finally, commercial cloud computing platforms are not the answer, at least today. The data movement and access fees are excessive compared to purchasing physical disks, the IO performance they offer is substantially lower (~20MBps), and the amount of provided disk space is woefully inadequate (e.g. ~10GB per Azure instance).

- Hardware Design

- The Data-Scope will consist of 90 performance and 12 storage servers.

- The driving goal behind the Data-Scope design is to maximize stream processing throughput over TB-size datasets while using commodity components to keep acquisition and maintenance costs low.

- Performing the first pass over the data directly on the servers’ PCIe backplane is significantly faster than serving the data from a shared network file server to multiple compute servers.

- The Data-Scope’s aim is to provide large amounts of cheap and fast storage, but there are no disks that satisfy all three criteria (capacity, cost, and speed). To balance these three requirements, we decided to divide the instrument into two layers: performance and storage. Each layer satisfies two of the criteria, while compromising on the third.

- Performance Servers will have high speed and inexpensive SATA drives, but compromise on capacity.

- The Storage Servers will have larger yet cheaper SATA disks but with lower throughput. The storage layer has 1.5x more disk space to allow for data staging and replication to and from the performance layer.

- In the performance layer we will ensure that the achievable aggregate data throughput remains close to the theoretical maximum, which is equal to the aggregate sequential IO speed of all the disks. Each disk is connected to a separate controller port and we use only 8-port controllers to avoid saturating the controller. We will use the new LSI 9200-series disk controllers, which provide 6Gbps SATA ports and very high throughput.

- Each performance server will also have four high-speed solid-state disks to be used as an intermediate storage tier for temporary storage and caching for random access patterns.

- The performance server will use a SuperMicro SC846A chassis, with 24 hot-swap disk bays, four internal SSDs, and two GTX480 Fermi-based NVIDIA graphics cards, with 500 GPU cores each, offering an excellent price-performance for floating point operations at an estimated 3 teraflops per card.

- In the storage layer we maximize capacity while keeping acquisition costs low. To do so we amortize the motherboard and disk controllers among as many disks as possible, using backplanes with SATA expanders while still retaining enough disk bandwidth per server for efficient data replication and recovery tasks. We will use locally attached disks, thus keeping both performance and costs reasonable. All disks are hot-swappable, making replacements simple. A storage node will consist of 3 SuperMicro SC847 chassis, one holding the motherboard and 36 disks, with the other two holding 45 disks each, for a total of 126 drives with a total storage capacity of 252TB.

- Given that moveable media (disks) are improving faster than networks, sneakernet will inevitably become the low cost solution for large ad hoc restores.

- We envisage three different lifecycle types for data in the instrument: persistent data, massive data processing pipelines, and community analysis of very large data sets.
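The hardware figures in the list above can be cross-checked with a little arithmetic. The sketch below uses only numbers quoted in this post, plus one labeled assumption: that each performance server streams about 5GBps, the per-server rate quoted earlier for commodity SATA servers. The variable names are made up for illustration.

```python
# Cross-check the hardware numbers quoted in the highlights.
PERFORMANCE_SERVERS = 90
STORAGE_SERVERS = 12
DISKS_PER_CHASSIS = (36, 45, 45)  # three SC847 chassis per storage node
NODE_CAPACITY_TB = 252            # quoted capacity of one storage node
BAYS_PER_PERF_SERVER = 24         # hot-swap bays in the SC846A chassis
ASSUMED_GB_PER_SEC_PER_PERF_SERVER = 5  # assumption: the "over 5GBps per server" figure

drives_per_node = sum(DISKS_PER_CHASSIS)               # drives in one storage node
tb_per_drive = NODE_CAPACITY_TB / drives_per_node      # implied size of each drive
storage_layer_tb = STORAGE_SERVERS * NODE_CAPACITY_TB  # capacity of the storage layer
perf_layer_bays = PERFORMANCE_SERVERS * BAYS_PER_PERF_SERVER
perf_layer_gb_per_sec = PERFORMANCE_SERVERS * ASSUMED_GB_PER_SEC_PER_PERF_SERVER

print(f"drives per storage node: {drives_per_node}")
print(f"implied drive size: {tb_per_drive:.1f} TB")
print(f"storage layer capacity: {storage_layer_tb} TB")
print(f"performance layer disk bays: {perf_layer_bays}")
print(f"performance layer streaming rate: {perf_layer_gb_per_sec} GB/s")
```

The 126 drives and 252TB per node imply 2TB drives, and 90 servers at the assumed 5GBps each give 450GBps, which lines up with the storage bandwidth figure quoted in the holistic-approach bullet.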

Related Articles

- The New Era of Computing: An Interview with "Dr. Data"

- MRI: The Development of Data-Scope – A Multi-Petabyte Generic Data Analysis Environment for Science

- GrayWulf: Scalable Clustered Architecture for Data Intensive Computing

- Objects in Space vs. Friends in Facebook by Roberto V. Zicari

- Extreme Data-Intensive Computing

- Alex Szalay Home Page