Stuff The Internet Says On Scalability For October 6th, 2017

Hey, it's HighScalability time:

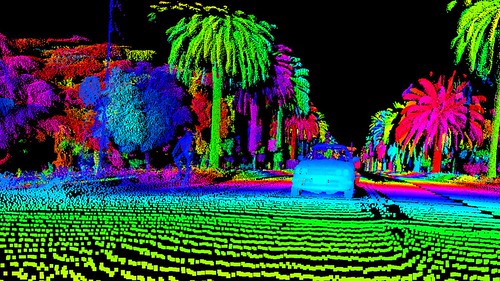

LiDAR sees an enchanted world. (Luminar)

If you like this sort of Stuff then please support me on Patreon.

- 14TB: Western Digital Hard Drive; 3B: Yahoo's perfidy; ~80%: companies traded on U.S. stock market 1950-2009 were gone by 2009; 21%: conversion increase with AI-enabled site personalisation; $1 billion: US Air Force jets off the cloud; 1 billion: iOS devices in use; 1000x: new DeepMind WaveNet model produces 20 seconds of higher quality audio in 1 second; 96: vCPUs on new GCE machine type, with 624GB of memory;

- Quotable Quotes:

- fusiongyro: The amount of incipient complexity in programming has been growing, not going down. What's more complex, "hello, world" to the console in Python, or "hello world" in a browser with the best and newest web stack? Mobility and microservices create lots of new edge cases and complexity—do non-programmers seem particularly well-equipped to handle edge cases to you? The problem has never really been the syntax—if it were, non-programmers would have made great strides with Applescript and SQL, and we'd all be building PowerBuilder libraries for a living. The problem is that programming requires a mode of thinking which is difficult. Lots of people, even people who do it daily, who are trained to do it and exercise great care and use great tools, are not great at it. This is not a syntax problem or a lack of decent libraries problem. We have simple programming languages with huge bodies of libraries. What's hard is the actual programming.

- @troyhunt: 1 person didn’t patch Struts, got Equifax breached, sold shares & created dodgy search site with bad results. Right?

- @rob_pike: Once in a while I need to build some large system written in C or C++ and am reminded why we made Go. #golang

- @adam_chal: Me before #strangeloop: I'm not a real programmer unless I know Haskell Me after #strangeloop: I'm not a real programmer unless I knit

- Julian Squires: I make a petty point about premature optimization; don't go out and rewrite your switch statements as binary searches by hand; maybe do rewrite your jump tables as switch statements, though.

- @GossiTheDog: Re this - vuln scanners only find the vuln if you point them at a Struts URL. If you just point them at hostname or IP, it won’t find vuln.

- @stevesi: Yes very much. Not unlike Wells Fargo trying to find a mid-level manager who signed people up for credit cards independent of metrics/execs.

- @patio11: We would laugh out of the room a CEO who said "The reason that we didn't file our taxes last year was an employee forgot to buy a stamp."

- @swardley: In general, the reasons for hybrid cloud have nothing to do with economics & everything to do with executives justifying past purchases

- @asymco: Changes in Android propagate to users over six years. iOS propagates in about three months.

- bb611: It isn't luck [re: Incident: France A388 over Greenland on Sep 30th 2017, fan and engine inlet separated]. It is the result of millions of engineering hours spent on the development of highly reliable and resilient passenger aircraft, an emphasis on public identification and dissemination of design weaknesses, errors, and failures, and an unwavering focus by industry regulators on safety.

- @mipsytipsy: "I would rather have a system that's 75% 'down' but users are fine, than a system 99.99% 'up' but user experience is impacted." #strangeloop

- psyc: A huge proportion of the ICOs I investigate turn out to be pure facade. It's amazing to me just how quickly this con was honed and formalized, but I guess people have always been good at aping when it comes to get-rich-quick bandwagons. The standard ICO consists solely of: 1) A slick website. 2) A well-produced video. 3) A whitepaper that discusses trivially standard blockchain features and goals. No differentiation necessary. 4) The appearance that prominent or well-credentialed people are working on the "technology". That's all. The "product" is vapor. The real product is another pump & dump vehicle to satisfy the insatiable demand for pump & dump vehicles. This product is sold to the "investors" during the ICO. Said "investors" are even explicitly awarded more coins for shilling the pump everywhere by creating amateurish articles and YouTube videos.

- nameless912: As a developer at a company that's trying to shove Lambda down our throats for EVERYTHING...AWS needs to get better at a few key things before Lambda/serverless become viable enough that I'll actually consider integrating them into my services: 1. Permissions are a nightmare. 2. Networking is equally nightmarish. 3. If the future of compute is serverless, then Lambda, Google Cloud Functions, and whatever half-baked monstrosity Azure has cooked up are going to have to get together and define a common runtime for these environments.

- @erikstmartin: “OS’s are dinosaurs. Let them rest” - @nicksrockwell #velocityconf

- @bridgetkromhout: Thought experiment: what if all your systems restart at once? How long does it take you to recover? *Can* you? @whereistanya #velocityconf

- Eric Hammond: Some services, like API Gateway, are far more complicated, difficult to use, and expensive than I expected before trying. Other services, like Amazon Kinesis Streams, are simpler, cheaper, and far more useful than I expected.

- nameless912: please, please chop off my hands and pull out my eyeballs if cloud computing becomes yet another workflow engine. I thought we killed those off in the 90s.

- MIT: The proof-of-principle experiment that Neill and Roushan and co have pulled off is to make a chip with nine neighboring loops and show that the superconducting qubits they support can represent 512 numbers simultaneously.

- @swardley: Equifax: We're a security nightmare! Adobe: Hold my beer Deloitte: Hold my beer Yahoo: Amateurs. Learn from a pro -

- @somic: seeing more & more indicators these days that devops as a unifying idea is now dead. devops appears now to be ops who can write simple code

- @slightlylate: So true. I'll trade 10 devs who are high on abstractions and metaprogramming for one who gives a damn about the user.

- @postwait: I am just a single data point, but I use about 10% of my CS education (CS/MSe/~PhD) daily; about 50% of it monthly. I value it immensely.

- @mweagle: The Go compiler will likely slow down your first sprint. It will radically improve your marathon performance.

- @mtnygard: Centralizing for efficiency creates fragility in the system. Decentralizing for resilience creates inertia that resists needed change.

- rm999: DynamoDB is amazing for the right applications if you very carefully understand its limitations. Last year I built out a recommendation engine for my company; it worked well, but we wanted to make it real-time (a user would get recommendations from actions they made seconds ago, instead of hours or days ago). I planned a 4-6 week project to implement this and put it into production. Long story short: I learned about DynamoDB and built it out in a day of dev time (start to finish, including learning the ins and outs of DynamoDB). The whole project was in stable production within a week. There has been zero down time, the app has seamlessly scaled up ~10x with consistently low latency, and it all costs virtually (relatively) nothing.

- soared: Sorry to be that guy, but: I spend over $5m a year on rtb ads. I literally spend 50 hours a week doing this. If the money I spend doesn't produce verifiable results, I lose it. For example, that 40cpm is to reach a pool of <1000 users who are in charge of purchasing for networks of hospitals, and my ads are for MRI machines. 3rd party data is unbelievably valuable, probably $1.5 million of my budget goes to data costs alone.

- @devpuppy: "black swans are the norm" so store and sample raw events so you can ask questions about unknown unknowns – @mipsytipsy #strangeloop2017

- Asymco: Apple has come to the point where it dominates the processor space. But they have not stopped at processors. The effort now spans all manners of silicon including controllers for displays, storage, sensors and batteries. The S series in the Apple Watch, the haptic T series in the MacBook, the wireless W series in AirPods are ongoing efforts. The GPU was conquered in the past year. Litigation with Qualcomm suggests the communications stack is next.

- The Divided Brain: One way of looking at the difference would be to say that while the left hemisphere's raison d'être is to narrow things down to a certainty, the right hemisphere's is to open them up into possibility. In life we need both. In fact for practical purposes, narrowing things down to a certainty, so that we can grasp them, is more helpful. But it is also illusory, since certainty itself is an illusion – albeit, as I say, a useful one. There is no certainty.

- Patrick Proctor: Because the 5.25” platters could not sustain spindle speeds at 7200RPM+ and increase platter count. The amount of warping at those speeds would cause the platters to strike each other with so many packed into the same space. Torque = force * distance to center * sin(theta). The larger the radius of the platter, the higher the torque caused by turbulence, and the greater the warping. 5.25” is dead, and it’s not coming back.

- David Chandler: Rather than reading and writing data one bit at a time by changing the orientation of magnetized particles on a surface, as today’s magnetic disks do, the new system would make use of tiny disturbances in magnetic orientation, which have been dubbed “skyrmions.” These virtual particles, which occur on a thin metallic film sandwiched against a film of different metal, can be manipulated and controlled using electric fields, and can store data for long periods without the need for further energy input.

- David Rosenthal: People tend to think that security is binary, a system either is or is not secure. But we see that in practice no system, not even the NSA's, is secure. We need to switch to a scalar view, systems are more or less secure. Or, rather, treat security breaches like radioactive decay, events that happen randomly with a probability per unit time that is a characteristic of the system. More secure systems have a lower probability of breach per unit time. Or, looked at another way, data leakage is characterized by a half-life, the time after which there is a 50% probability that the data will have leaked. Data that is deleted long before its half-life has expired is unlikely to leak, but it could. Data kept forever is certain to leak. These leaks need to be planned for, not regarded as exceptions.
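The half-life framing in the Rosenthal quote can be made concrete with a few lines of arithmetic. This is a minimal sketch, assuming a constant breach rate (the radioactive-decay model the quote describes); the 10-year half-life and the function names are hypothetical illustrations, not figures from the source.

```python
import math


def breach_rate(half_life_years: float) -> float:
    """Constant breach probability per unit time implied by a half-life."""
    return math.log(2) / half_life_years


def leak_probability(age_years: float, half_life_years: float) -> float:
    """Probability the data has leaked after being kept for `age_years`,
    assuming breaches behave like radioactive decay with the given half-life."""
    return 1.0 - 0.5 ** (age_years / half_life_years)


if __name__ == "__main__":
    half_life = 10.0  # hypothetical half-life, in years
    for age in (1, 10, 50):
        p = leak_probability(age, half_life)
        print(f"kept {age:>2} years -> leak probability {p:.1%}")
```

Under this model, data deleted after one tenth of its half-life still carries a small but nonzero chance of having leaked, and the probability approaches 1 the longer the data is kept.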

- The Coming Software Apocalypse. After all these years it's still strange to see people fall into the "if we only had complete requirements we could finally make reliable systems, what's wrong with these idiots?" tarpit. Requirements are a trap. We went through all of this with waterfall and big design up front. It doesn't work. Requirements are no less complex and undiscoverable than code. Tools are another trap. Tools are code. Tools encode one perspective on a solution space and if there's anything the real world is good at, it's destroying perspective. IMHO, our most likely future is to treat programming as an act of computational creativity. Human programmers will work with AIs to co-create software systems. We'll work together to produce better software than a human can on their own or an AI can produce on its own. We're better together, which is why I'm not afraid AI will replace programmers. Here's an example in music, A.I. Experiments: A.I. Duet, where a computer accompanies a piano player. Here's a better example—Ripples - A piano duet for improvising musician and generative software—where the AI piano player riffs off a human in real-time. You can imagine this is how software will be built in the future. Here's a hint at the productivity gain, though it isn't a complete example, because what I'm talking about doesn't exist yet: @DynamicWebPaige: Blue lines: @Google's old Translate program, 500k lines of stats-focused code. Green: now, 500 lines of @tensorflow. See also, Jeff Dean On Large-Scale Deep Learning At Google and Peter Norvig on Machine Learning Driven Programming: A New Programming For A New World.

- Another great explanation with clear diagrams. On Disk IO, Part 4: B-Trees and RUM

- A Large-Scale Study of Programming Languages and Code Quality in GitHub. Buggy languages: C, C++, Objective-C, PHP, and Python. Less buggy languages: Clojure, Haskell, Ruby, and Scala. Before you get your hackles up and feel the need to defend your language against the unbelievers, it's not as bad as you might think: Some languages have a greater association with defects than other languages, although the effect is small; There is a small but significant relationship between language class and defects. Functional languages are associated with fewer defects than either procedural or scripting languages; There is no general relationship between application domain and language defect proneness; Defect types are strongly associated with languages; some defect types, like memory errors and concurrency errors, also depend on language primitives. Language matters more for specific categories than it does for defects overall.

- Conjecture: These three tunables: Read, Update and Memory overheads can help you to evaluate the database and deeper understand the workloads it’s best suitable for. All of them are quite intuitive and it’s often easy to sort the storage system into one of the buckets and guess how it’s going to perform.

- Get your BSD fix here. Videos from EuroBSDcon 2017 are now available.

- Turtle Ants link many different nests together by making a network of trails. Part of the function of the network is to keep ants circulating round and round so the different parts of the colony stay connected. To find food they branch off this network. The ants do not use the shortest path search algorithm. They modify and prune the network to minimize the number of nodes. This minimizes the chance an ant can get lost by minimizing the number of decisions an ant has to make. Neurons also prune. Stanford researcher uses ant network as basis for algorithm.

- Greg Young - The Long Sad History of MicroServices. kkapelon: The presentation has actually three points: Microservices are nothing new. Well behaved companies were already "doing microservices", long before they became a trend; If your company cannot build a monolith correctly, then micro-services will add even more problems (because distributed stuff is always harder); Learn the basic concepts (like queues and messages) and don't focus on specific technologies. LtAramaki: Greg Young is increasingly trollish with every next talk he does, isn't he? Let me give you a summary of this video: Step 1: Set-up a strawman "Microservices are the new thing, right? You need to use microservices to be cool, right? Everything should be microservices, right?" Step 2: Awkward pause "Let's spend some time aimlessly listing albums of old music performers I like." Step 3: Start arguing with my own straw-man "Microservices are not new at all! Let me prove it with a few tidbits that everyone only I know! SOA! DCOM! Erlang! Actor Model!" Step 4: A brief detour into pure lunacy Step 5: My thesis, built upon non-sense Step 6: Bait and switch. So what's the take-away from this talk? Just a long aimless rant that ends with "it depends", which every reasonable engineer was already aware of. Very disappointing.

- What can you use IPFS for? How the Catalan government uses IPFS to sidestep Spain's legal block: how is the Catalan government supposed to notify people their assigned polling stations?...Catalonia’s solution involves IPFS, some crypto and some ingenuity...The website is published through https://ipfs.io...The peer-to-peer distributed part takes care of distribution: it is nearly-impossible for any actor to block access to this content because it is replicated around the network, using peer-to-peer encrypted connections that would be very hard to identify and block at the ISP level...The content-addressed part solves any concerns regarding tampering...In past elections, citizens were able to check their assigned voting station on a government website. To do so, they had to enter a limited set of their personal information (birthdate, government ID number (sort of ISSN) and current zip code) and, if all those are correct, they would get the voting station back...However, Catalonia cannot use any servers in this case, because this would introduce an easy way for the Spanish authorities to render the website inoperative. The entire website had to be static, and the database distributed with it...Not too complicated. Basically, the code recurses a sha256 computation 1714 times to get a password for decryption, and then once more to get the lookup key. Then, this lookup key is used to locate one or more matching lines, and the password is used to decrypt that line’s content (which results in the voting station information).
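The key derivation described in the quote (hash 1714 times for the password, once more for the lookup key) might look roughly like the sketch below. The way the personal fields are concatenated and encoded is an assumption made for illustration, as are the function names and sample values; the article excerpt does not specify them, and the decryption step is left out.

```python
import hashlib

ROUNDS = 1714  # iteration count quoted in the article


def iterate_sha256(seed: bytes, rounds: int) -> bytes:
    """Feed the digest back into SHA-256 `rounds` times."""
    digest = seed
    for _ in range(rounds):
        digest = hashlib.sha256(digest).digest()
    return digest


def derive_keys(id_number: str, birthdate: str, zip_code: str):
    """Return (password_hex, lookup_key_hex) for one voter.

    Assumption: the three fields are simply concatenated as UTF-8 text.
    The real site's input encoding is not described in the excerpt.
    """
    seed = (id_number + birthdate + zip_code).encode("utf-8")
    password = iterate_sha256(seed, ROUNDS)          # used to decrypt the row
    lookup_key = hashlib.sha256(password).digest()   # one more round: finds the row
    return password.hex(), lookup_key.hex()


if __name__ == "__main__":
    # Hypothetical voter data, for illustration only.
    password, lookup_key = derive_keys("12345678Z", "19800101", "08001")
    print("lookup key:", lookup_key)
```

Because the lookup key is derived from the password and not the other way round, someone holding the static database can find rows by key but cannot decrypt them without the voter's personal data.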

- Poor table handling has limited how many SaaS customers you can shove into one database. Not anymore. One Million Tables in MySQL 8.0: MySQL 8.0 general tablespaces looks very promising. It is finally possible to create one million tables in MySQL without the need to create two million files. Actually, MySQL 8 can handle many tables very well as long as table cache misses are kept to a minimum. At the same time, the problem with “Opening tables” (worst case scenario test) still persists in MySQL 8.0.3-rc and limits the throughput.

- Serverless is not the same as programmerless. The Serverless Revolution Will Make Us All Developers.

- Google Clips as an example of how a new class of autonomous smart devices will be built. An ultralow-power microprocessor allows the team to "port some of the most ambitious algorithms to a production device years before we could have with alternative silicon offerings." Google used "video editors and an army of image raters to train its models. Google collected a lot of its own video. It then had editors on staff look at the content and say what they liked — and then the labelers looked at the clips and decided which ones they liked better, which became the training material for the model."

- Open your data, let people take a look, and good things happen. You may remember we talked about how Citizen Scientists Discover Four-Star Planet with NASA Kepler using public data. Here's another example. Open data from the Large Hadron Collider sparks new discovery: The MIT team was able to show, using CMS data, that the same equation can predict both the pattern of these jets and the energy of the particles produced from a proton collision. Scientists suspected this was indeed the case, and now that hypothesis has been verified.

- Vinton Cerf on Building an Interplanetary Communications Protocol. zamadatix: Latency and loss are high in interplanetary communications; Classic forwarding results in too much being thrown away; In extremely high latency it's hard to know when things are up so end clients don't want to be waiting for heartbeats or ACKs directly; Solution is to do long term store and forward on intermediate nodes; Old orbiting spacecraft can and have been used to create higher speed paths than doing direct point to point connections between every end node; The protocol they made scales from low latency high speed links down to high latency low speed links.
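The store-and-forward idea in that summary can be illustrated with a toy relay. This is a minimal sketch under simplifying assumptions: the `RelayNode` class, its methods, and the node names are hypothetical, and it models only the "hold data until the next hop is reachable" behavior, not any real interplanetary protocol.

```python
from collections import deque


class RelayNode:
    """Hypothetical relay that stores bundles until its outgoing link is up."""

    def __init__(self, name, next_hop=None):
        self.name = name
        self.next_hop = next_hop   # None means this node is the destination
        self.link_up = False       # outgoing link starts unavailable
        self.stored = deque()      # bundles held in long-term storage

    def receive(self, bundle):
        """Always accept and store; forward only if the link allows it."""
        self.stored.append(bundle)
        self.flush()

    def set_link(self, up):
        """Link state changes, e.g. when an orbiter passes overhead."""
        self.link_up = up
        self.flush()

    def flush(self):
        """Forward stored bundles in order while the next hop is reachable."""
        if self.next_hop is None:
            return
        while self.link_up and self.stored:
            self.next_hop.receive(self.stored.popleft())


if __name__ == "__main__":
    rover = RelayNode("rover")
    orbiter = RelayNode("orbiter", next_hop=rover)
    orbiter.receive("cmd-1")   # link is down: the orbiter keeps the bundle
    orbiter.receive("cmd-2")
    orbiter.set_link(True)     # contact window opens: bundles are delivered
    print(list(rover.stored))
```

Nothing is dropped while the link is down, and the sender never waits on an end-to-end acknowledgement, which is the contrast with classic forwarding drawn in the summary.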

- Cool picture. AWS Application Load Balancer Summary.

- Serverless ETL on AWS Lambda: Upon joining Nextdoor, my first project was to replace the aging Apache Flume-based pipeline...You can either invest in operating your own infrastructure or offload that responsibility to an infrastructure as a service provider...Bender provides an extendable Java framework for creating serverless ETL functions on AWS Lambda...Out of the box, Bender includes modules to read, filter, and manipulate JSON data as well as semi-structured data parseable by regex from S3 files or Kinesis streams. Events can then be written to S3, Firehose, Elasticsearch, or even back to Kinesis...Working with cloud based services doesn’t eliminate engineering effort — rather, it shifts it. My team, Systems Infrastructure, helps bridge the gaps between servers and services so that our engineers can focus on the main goal of Nextdoor: building a private social network for neighborhoods.

- Great detective story of how Netflix debugged and tuned their system to Serving 100 Gbps from an Open Connect Appliance. Usual lock problems. Usual problems with the OS hurting more than helping and engineers heroically compensating. Quite the journey.

- AWS vs. DigitalOcean: Which Cloud Server is Better: If you are already on DigitalOcean, you should congratulate yourself for making the smarter choice. If you’re on AWS, running a couple of EC2 virtual machines, with high costs of bandwidth each month, it might be worth switching to take advantage of the free bundled bandwidth.

- Yes, another database. Google is Introducing Cloud Firestore: Our New Document Database for Apps: a fully-managed NoSQL document database for mobile and web app development. It's designed to easily store and sync app data at global scale. Documents and collections with powerful querying. iOS, Android, and Web SDKs with offline data access. Real-time data synchronization. Automatic, multi-region data replication with strong consistency. Node, Python, Go, and Java server SDKs. Nice to see a generous fixed price option. The $25/month pricing plan looks like a good value for developers. Didn't see how conflicts were actually handled. @eric_brewer: Firebase is a fantastic and super-easy way to build mobile apps, and managing persistent state just got easier. @rahulsainani5: Adding data with merge is really cool! Was hoping to see location based queries. How can that be done with Firestore? @abeisgreat: Right now it's not really supported, it's high priority though! @abeisgreat: Yes, it has a completely overhauled offline mode. @TLillestolen: Does it support multiple inequality filters for different properties? Eg. fetch me results where a > 5 && b < 10? @mbleigh: No. Single inequality, though you can do multiple inequalities on the same property. How is it different than Firebase?: While the Firebase Realtime Database is basically a giant JSON tree where anything goes and lawlessness rules the land, Cloud Firestore is more structured. Cloud Firestore is a document-model database, which means that all of your data is stored in objects called documents that consist of key-value pairs -- and these values can contain any number of things, from strings to floats to binary data to JSON-y looking objects the team likes to call maps. These documents, in turn, are grouped into collections.

- Good overview. Strange Loop 2017 in Review. And here are the slides for Strange Loop 2017. And the videos.

- We have another serverless platform. This time from Oracle. Here's Oracle's vision of the future: Meet the New Application Development Stack - Managed Kubernetes, Serverless, Registry, CI/CD, Java. They announced Fn, An Open Source Serverless Functions Platform. Fn: a container native Apache 2.0 licensed serverless platform that you can run anywhere–any cloud or on-premise. Fn consists of three components: (1) the Fn Platform (Fn Server and CLI); (2) Fn Java FDK (Function Development Kit) which brings a first-class function development experience to Java developers including a comprehensive JUnit test harness (JUnit is a unit test harness for Java); and (3) Fn Flow for orchestrating functions directly in code. Fn Flow enables function orchestration for higher level workflows for sequencing, chaining, fanin/fanout, but directly and natively in the developer’s code versus relying on a console. We will have initial support for Java with additional language bindings coming soon. How is Fn different? Because it’s open (cloud neutral with no lock-in), can run locally, is container native, and provides polyglot language support (including Java, Go, Ruby, Python, PHP, Rust, .NET Core, and Node.js with AWS Lambda compatibility). We believe serverless will eventually lead to a new, more efficient cloud development and economic model. Think about it - virtualization disintermediated physical servers, containers are disintermediating virtualization, so how soon until serverless disintermediates containers? In the end, it’s all about raising the abstraction level so that developers never think about servers, VM’s, and other IaaS components, giving everybody better utilization by using less resources with faster product delivery and increased agility.

- LOL (I think). OUR OPEN-PLAN OFFICE FAILED, SO WE’RE MOVING TO A TOWERING PANOPTICON.

- Getting Started with Building Realtime API Infrastructure: Realtime is about pushing data as fast as possible — it is automated, synchronous, and bi-directional communication between endpoints at a speed within a few hundred milliseconds...We’ll start by contrasting data push with “request-response.” Request-response is the most fundamental way that computer systems communicate...In a data push model, data is pushed to a user’s device rather than pulled (requested) by the user...HTTP streaming provides a long-lived connection for instant and continuous data push...WebSockets provide a long-lived connection for exchanging messages between client and server...Webhooks are a simple way of sending data between servers. No long-lived connections are needed. The sender makes an HTTP request to the receiver when there is data to push...Here is a compilation of resources that are available for developers to build realtime applications...Here are some realtime application IaaS providers (managed) to check out for further learning: PubNub, Pusher, and Ably...Here are some realtime API IaaS providers (managed/open source) to check out for further learning: Fanout/Pushpin, Streamdata.io, and LiveResource.

- Chaos engineering and Measuring Correctness of State in a Distributed System. Goal: output is correct after any number of network or process failures and their respective recovery events. Methods: fault-injection testing, chaos engineering, and lineage-driven testing. Want to prove faults definitely did not occur. Loss, reordering, corruption, and duplication are the indicators used to determine if the sequential consistency of the state has been violated. Wallaroo has a network fault-injector named Spike, which can introduce both random and deterministic network failure events. To simulate a process crash, we use the POSIX SIGKILL signal to abruptly terminate a running Wallaroo worker process. Batch Testing: compare the output sets from application runs with and without a crash-recovery event, and if they match (and if the applications’ outputs are sensitive to invariant violations), then we say that no violations occurred during the recovery. Streaming Test: output is validated in real time, as it is produced, message-by-message.
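The batch test described above (compare a fault-free run against a crash-recovery run and look for loss, reordering, corruption, and duplication) can be sketched as follows. This is an illustrative sketch and not Wallaroo's test harness: it assumes every output message carries a unique id, and the `find_violations` function and its message format are hypothetical.

```python
from collections import Counter


def find_violations(expected, actual):
    """Compare two runs given as lists of (message_id, payload) pairs.

    `expected` is the output of the fault-free reference run; `actual` is the
    output of the run that went through a crash-recovery event.
    Assumption: message ids are unique in the reference run.
    """
    expected_payload = dict(expected)
    seen = Counter(msg_id for msg_id, _ in actual)

    lost = [i for i in expected_payload if i not in seen]
    duplicated = sorted(i for i, count in seen.items() if count > 1)
    corrupted = sorted({i for i, payload in actual
                        if i in expected_payload and payload != expected_payload[i]})

    # Order check: first appearance of each id versus the reference order.
    first_seen = list(dict.fromkeys(i for i, _ in actual if i in expected_payload))
    expected_order = [i for i, _ in expected if i in seen]

    return {
        "lost": lost,
        "duplicated": duplicated,
        "corrupted": corrupted,
        "reordered": first_seen != expected_order,
    }


if __name__ == "__main__":
    reference = [(1, "a"), (2, "b"), (3, "c"), (4, "d")]
    recovered = [(1, "a"), (3, "c"), (2, "b"), (2, "b")]
    print(find_violations(reference, recovered))
```

A run passes only when every field comes back empty or false, which matches the "outputs match, so no violations occurred" criterion in the summary.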

- Making DNA Data Storage a Reality: Right now, combining DNA storage and computing sounds a little ambitious to some in the field. “It’s going to be pretty hard,” says Milenkovic. “We’re still not there with simple storage, never mind trying to couple it with computing.” Columbia’s Erlich also expressed skepticism. “In storage, we leverage DNA properties that have been developed over three billion years of evolution, such as durability and miniaturization,” Erlich says. “However, DNA is not known for its great computation speed.” But Ceze, whose group is currently researching applications of DNA computing and storage, notes that one solution might be a hybrid electronic-molecular design. “Some things we can do with electronics can’t be beaten with molecules,” he says. “But you can do some things in molecular form much better than in electronics. We want to perform part of the computation directly in chemical form, and part in electronics.”

- A solid version of if Agile isn't working you aren't doing Agile right. Why Isn’t Agile Working?

- Figuring out this sequence of doom must have sucked. I picture a series of dominoes tripping, finally ending in a mushroom cloud over the datacenter as the last trick fired off. Azure fell over for 7 hours in Europe because someone accidentally set off the fire extinguishers: The problems started when one of Microsoft's data centers was carrying out routine maintenance on fire extinguishing systems, and the workmen accidentally set them off. This released fire suppression gas, and triggered a shutdown of the air con to avoid feeding oxygen to any flames and cut the risk of an inferno spreading via conduits. This lack of cooling, though, knackered nearby powered-up machines, bringing down a "storage scale unit."...the ambient temperature in isolated areas of the impacted suppression zone rose above normal operational parameters. Some systems in the impacted zone performed auto shutdowns or reboots triggered by internal thermal health monitoring to prevent overheating of those systems...some of the overheated servers and storage systems "did not shutdown in a controlled manner"...As a result, virtual machines were axed to avoid any data corruption by keeping them alive. Azure Backup vaults were not available, and this caused backup and restore operation failures. Azure Site Recovery lost failover ability and HDInsight, Azure Scheduler and Functions dropped jobs as their storage systems went offline. Azure Monitor and Data Factory showed serious latency and errors in pipelines, Azure Stream Analytics jobs stopped processing input and producing output, albeit only for a few minutes, and Azure Media Services saw failures and latency issues for streaming requests, uploads, and encoding.

- Good CppCon 2017 Trip Report: This year, there seemed to be more focus on how Modern C++ is taught... IncludeOS is a micro-unikernel operating system developed in and exposed to C++, allowing one to write almost any micro-service...He really did a great job advocating for people to adopt fuzzing techniques, showing the ease of coding, easy access of tooling, and value proposition in bugs discovered...It discussed the design of the Modern C++ library interface to AWS.

- Epic post on Monitoring in the time of Cloud Native: Containers, Kubernetes, microservices, service meshes, immutable infrastructure are all incredibly promising ideas which fundamentally change the way we operate software...we’ll be at that point where the network and underlying hardware failures have been robustly abstracted away from us, leaving us with the sole responsibility to ensure our application is good enough to piggyback on top of the latest and greatest in networking and scheduling abstractions...We’re at a time when it has never been easier for application developers to focus on just making their service more robust...the nature of failure is changing, the way our systems behave (or misbehave) as a whole is changing...We often hear that we live in an era when failure is the norm...Observability — in and of itself, and like most other things — isn’t particularly useful. The value of the Observability of a system directly stems from the business value we derive from it. For many businesses, having a good alerting strategy and time-series based “monitoring” is probably all that’s required to be able to deliver on the business goals.

- A good example from Dropbox of compensating transactions in an eventually consistent system. Handling System Failures during Payment Communication. The real world is messy.

- Counting raindrops using mobile-phone towers: The basic insight is straightforward enough: rain weakens electromagnetic signals. Many mobile-phone towers, especially in remote areas, use microwaves to communicate with other towers on the network. A dip in the strength of those microwaves could therefore reveal the presence of rain. The technique is not as accurate as rooftop rain gauges. But, as Dr Kacou points out, transmission towers are far more numerous, they report their data automatically and they cost meteorologists nothing.

- Essential Image Optimization. @duhroach: Amazingly comprehensive eBook on everything Image Compression for webdevs by the awesome @addyosmani

- CS 7680 Programming Models for Distributed Computing. Topics include RPC, Futures, Promises, message passing, etc., along with links to relevant papers. The syllabus says "Your weekly research paper summaries (20%)". Wouldn't it be nice if those were made generally available?

- LuxLang/lux: Lux is a new programming language in the making. It's meant to be a functional, statically-typed Lisp that will run on several platforms, such as the Java Virtual Machine and JavaScript interpreters.

- nasa/EADINLite: This code was created to support the Distributed Engine Control task as part of the Fixed Wing Aeronautics project. The purpose of this research was to enable multiple microcontrollers to speak with each other per the protocol specified in the preliminary release of EADIN BUS.

- fnproject/fn: an event-driven, open source, functions-as-a-service compute platform that you can run anywhere.

- Nextdoor/bender: an extendable Java framework for creating serverless ETL functions on AWS Lambda. Bender Core handles the complex plumbing and provides the interfaces necessary to build modules for all aspects of the ETL process.

- angr/angr: a suite of Python libraries that let you load a binary and do a lot of cool things to it: Disassembly and intermediate-representation lifting; Program instrumentation; Symbolic execution; Control-flow analysis; Data-dependency analysis; Value-set analysis (VSA).

- mbeaudru/modern-js-cheatsheet: a cheatsheet for JavaScript you will frequently encounter in modern projects and most contemporary sample code.

- Category Theory for Programmers: Composition is at the very root of category theory — it’s part of the definition of the category itself. And I will argue strongly that composition is the essence of programming. We’ve been composing things forever, long before some great engineer came up with the idea of a subroutine. Some time ago the principles of structural programming revolutionized programming because they made blocks of code composable. Then came object oriented programming, which is all about composing objects. Functional programming is not only about composing functions and algebraic data structures — it makes concurrency composable — something that’s virtually impossible with other programming paradigms.

- Persistent Memory Programming: In the four years that have passed, the spec has been published, and, as predicted, one of the programming models contained in the spec has become the focus of considerable follow-up work. That programming model, described in the spec as NVM.PM.FILE, states that persistent memory (PM) should be exposed by operating systems as memory-mapped files. In this article, I’ll describe how the intended persistent memory programming model turned out in actual OS implementations, what work has been done to build on it, and what challenges are still ahead of us.
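
If the "persistent memory as memory-mapped files" idea from the Persistent Memory Programming item feels abstract, here's a rough sketch of the shape of it using Python's standard `mmap` module. This is only an analogy on an ordinary file: real persistent memory would sit behind a DAX-capable filesystem and typically use CPU cache flushes rather than `msync`. The function names `store_persistently` and `load` and the 4096-byte region size are made up for illustration; they don't come from the article or the spec.

```python
import mmap


def store_persistently(path: str, payload: bytes, size: int = 4096) -> None:
    """Map a file into memory, write with plain stores, then flush.

    Hypothetical helper: an ordinary file stands in for a PM region.
    """
    if len(payload) > size:
        raise ValueError("payload does not fit in the mapped region")
    with open(path, "w+b") as f:
        f.truncate(size)  # a mapping needs a non-empty backing file
        with mmap.mmap(f.fileno(), size) as region:
            # No write() call: the data is stored directly into mapped memory.
            region[: len(payload)] = payload
            # Ask the OS to push the dirty pages to the backing store.
            region.flush()


def load(path: str, length: int) -> bytes:
    """Re-map the file read-only and copy out the first `length` bytes."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as region:
            return bytes(region[:length])
```

Calling `store_persistently("pm.bin", b"hello")` and later `load("pm.bin", 5)` reads the bytes back through a fresh mapping. The point is that the application works with loads and stores on mapped memory plus an explicit flush step, not read/write system calls.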

Hey, just letting you know I've written a new book: Explain the Cloud Like I'm 10. It's pretty much exactly what the title says it is. If you've ever tried to explain the cloud to someone, but had no idea what to say, send them this book.

I've also written a novella: The Strange Trial of Ciri: The First Sentient AI. It explores the idea of how a sentient AI might arise as ripped-from-the-headlines deep learning techniques are applied to large social networks. Anyway, I like the story. If you do too, please consider giving it a review on Amazon.

Thanks for your support!